Adobe Unveils AI Assistants for Photoshop and Express, Introducing Conversational Editing and Third-Party Model Integration

11 Sources

[1]

Adobe launches AI assistants for Express and Photoshop | TechCrunch

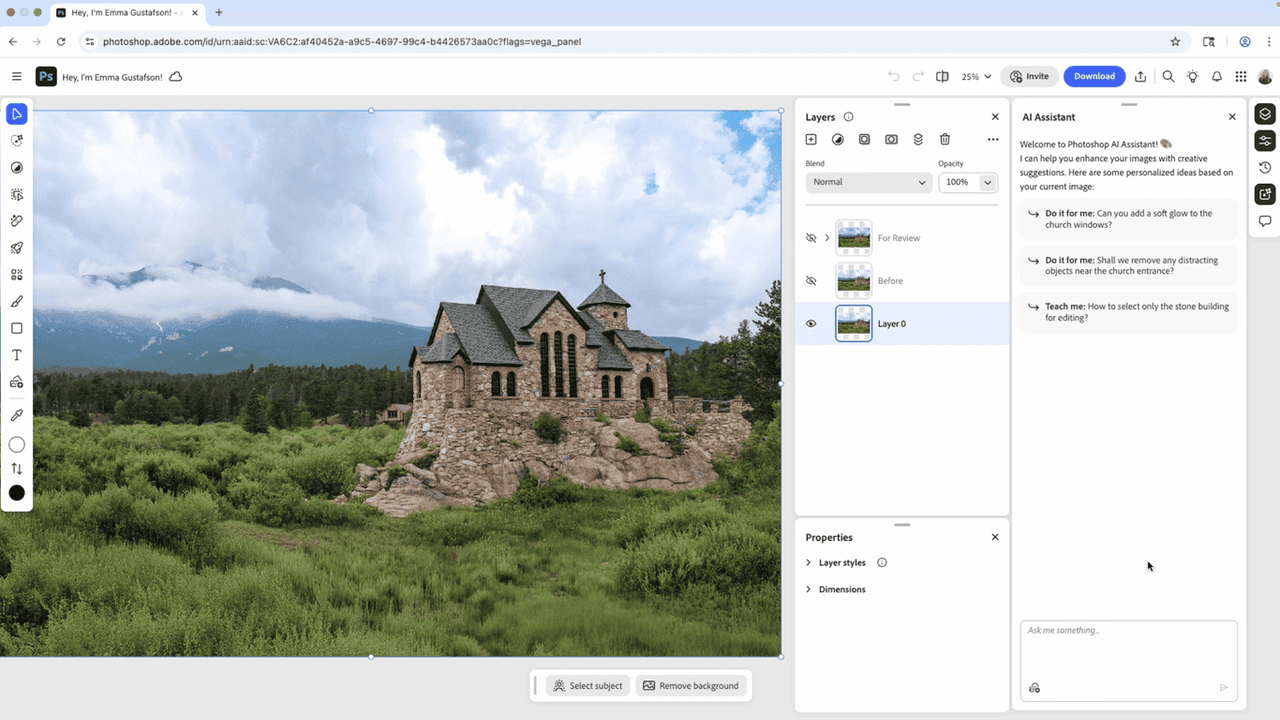

Adobe today released new AI assistants for its Creative Cloud products, Express and Photoshop, that can help users with image creation and editing. Most companies like to put AI assistants into a sidebar in their products to grab on-screen context. But for Express, Adobe has created a new mode that lets you use text prompts to create new images and designs. You can switch on the assistant mode to use AI prompts and then switch back to use editing tools and controls that are available in Express in the current version. Meanwhile, the new Photoshop assistant, which is in closed beta testing, lives in the sidebar. Adobe claims the assistant can understand different layers and help you automatically select objects and create masks. Adobe added that users can ask the assistant to complete repetitive tasks such as removing backgrounds or changing colors. Adobe's VP of generative AI, Alexandru Costin, told TechCrunch that the company decided to build a different mode for its AI assistant in Express to target students and professionals who use the app. The company wants to see if users can achieve what they want to do without having to switch back to the traditional interface, he explained. "We think this approach of switching between two modes, where you get the best of both worlds, is gonna make the technology both accessible and controllable," Costin said. The company said it is also experimenting with a new type of assistant that can coordinate with different assistants from other Adobe tools, and connect to a creator's social channels to better understand their style. Adobe said the product is in the early stages of development and is in private beta. The company said it is also exploring a way to connect Adobe Express with ChatGPT using OpenAI's app integrations API to let users create designs directly in ChatGPT. Adobe also announced a new set of AI features for its Creative Cloud apps.
Photoshop users can now choose third-party models, like Google's Gemini 2.5 Flash and Black Forest Labs' FLUX.1 Kontext, for the generative fill feature, which can remove objects or extend images. The company is also adding an AI-powered object mask in its video editing app, Premiere Pro, to let users easily identify and select objects or people to add effects or adjust colors.

[2]

Photoshop's New AI Assistant Can Rename All Your Layers So You Don't Have To

There was a lot of AI in Adobe Max's keynote address on Tuesday, but it was a Photoshop AI feature that inspired the loudest cheers. Anyone who uses Photoshop regularly knows it's such a pain to scroll through layer after layer, struggling to hunt down the exact one you need. But with an upcoming tool, you can have AI automatically name all of your layers with a single prompt. It's a great example of how AI can bring quality-of-life improvements when done well. Renaming layers is just one of many things Adobe's new AI assistants will be able to do. These chatbot-like tools will be added to Photoshop and Express. They have an emphasis on "conversational, agentic" experiences -- meaning you can ask the chatbot to make edits, and it can independently handle them. The AI assistant in Express is available in a public beta for individual Adobe accounts now (Enterprise and team plans don't have access yet). Photoshop's AI is currently restricted to a private beta, but you can sign up for the waitlist now. Express's AI assistant is similar to using a chatbot. Once you toggle on the tool in the upper left corner, a conversation window pops up. You can ask the AI to change the color of an object or remove an obtrusive element. While pro users might be comfortable making those edits manually, the AI assistant might be more appealing to its less experienced users and folks working under a time crunch. Also announced on Tuesday is Project Moonlight, a new platform in beta on Adobe's AI hub, Firefly. It's a new tool that hopes to act as a creative partner. With your permission, it uses your data from Adobe platforms and social media accounts to help you create content. For example, you can ask it to come up with 20 ideas for what to do with your newest Lightroom photos based on your most successful Instagram posts in the past. 
These AI efforts represent a range of what conversational editing can look like, Mike Polner, Adobe Firefly's vice president of product marketing for creators said in an interview. "One end of the spectrum is [to] type in a prompt and say, 'Make my hat blue.' That's very simplistic," said Polner. "With Project Moonlight, it can understand your context, explore and help you come up with new ideas and then help you analyze the content that you already have," Polner said. While these AI assistants won't be the "everything machines" that ChatGPT or Gemini claim to be, it's a stark sign that Adobe's AI revolution is marching onward. Its focus on agentic AI, like much of the AI industry, aims to persuade people to delegate tasks to AI. In a recent Adobe survey of 16,000 global creators, 86% said they use creative generative AI. Over three-fourths (80%) said Gen AI helped them create content they otherwise couldn't have made. The new stats align with a growing popularity of generative media tools, like AI image and video generators, with newer models like OpenAI's Sora and Google's nano banana going viral this year. Adobe's been all in on AI for a while. This year, Adobe introduced AI-first mobile apps for Photoshop, Firefly and a new video editor called Premiere. The professional photographers, designers and illustrators who are Adobe's primary customers haven't all been sold on Adobe's AI ambitions, raising concerns about AI's legality, energy use and ethics.

[3]

Photoshop's biggest AI update yet just dropped - how to try all the new tools

Adobe is continuing its drive into AI with the launch of several new AI-powered features for Photoshop -- and one is potentially a huge time-saver. At this week's Adobe Max conference in Los Angeles, the company unveiled multiple new initiatives, including AI assistants in Express, Firefly, and Photoshop. But beyond a new assistant, Photoshop is getting a serious injection of AI with the addition of tools that make editing easier and faster. Here's a look at what's new. Powered by agentic AI, Photoshop's new AI assistant is designed to free up time by automating repetitive tasks, such as resizing a batch of photos or applying the same edits, while providing personalized recommendations and tips that maintain creative control. You can chat with Photoshop to make edits, get tips on how to make a photo look better, and even get help on what to do next and how to do it. When it debuted in 2023, Generative Fill quickly became one of the most popular Photoshop features. Now, Adobe says, you can use partner AI models, such as Google Gemini 2.5 Flash and Black Forest Labs FLUX.1 Kontext, in addition to Firefly's image models, to edit your content with a prompt. Want to give older photos a glow-up? Using Topaz Labs' technology, you can now upscale low-resolution images to 4K. Matching the color and lighting of an object to a background layer can be a tricky task. However, Photoshop's AI-powered Harmonize feature automatically integrates objects or people into environments in realistic-looking ways by matching the light, color, and tone of both layers. ZDNET's David Gewirtz tested this feature earlier this month, calling it "very, very good" and even "addictive."
Isolating people and objects in video frames is significantly easier with Object Mask, an AI feature that assists with color grading, blurring, and adding special effects without manual rotoscoping. Finding the best images from a large batch can be one of the most time-consuming elements of photographing an event. Assisted Culling not only identifies the best shots, but you can filter results for levels of focus, different angles, and various degrees of sharpness. These features are available in public beta, except for AI Assistant, which Adobe says is "rolling through a private beta waitlist on Photoshop on the web."

[4]

I Got an Early Look at Adobe's Research Projects: How AI Makes It Quicker Than Ever to Edit Photos

Every year at Adobe Max, the software giant's annual creative conference, it shows off a number of research projects it has in development. Sneaks, as the event is known, is a great way to peek behind the vast Adobe curtain and understand where the tech giant might be headed in the future. At this year's event in Los Angeles, I got an early, exclusive first look at three of the photography projects. This year's crop of photography-focused presentations each tackle a task that could be handled with manual editing. But instead of spending hours relighting photos, removing distractors or animating 2D objects, these new tools make edits with a single click or two. They're impressive feats, and they're possible thanks to Adobe's Firefly AI models running in the background. Trace Erase is one of these projects. The new tool builds on Adobe's generative remove technology that acts like an AI-powered eraser. In Photoshop, you can use generative remove to clean up images with photobombers, distracting wires, and cables and other errant objects impeding your shot. But it can take multiple steps to completely remove an object from an image, as Lingzhi Zhang, Adobe research scientist, showed me in a demo. There are often other signs that something has been removed, like a reflection in a window. Trace Erase takes care of all those follow-up edits and, like the name implies, removes every trace of an object's existence. It's more than just removing reflections and smoothing out what remains. The AI has a wide knowledge base, so if you ask it to remove a jet ski, it will remove the entire object and the waves it created in its wake. If generative remove is an AI eraser, Trace Erase is an AI obliterator. Another photography Sneak is called Light Touch. With the tool, you can completely transform how a photo is lit. 
You can literally use it to change the lighting -- Adobe research scientist Zhixin Shu showed me how it can be used on an image of a lamp to turn on its light and adjust how bright it is, with the shadows aligning with the environment automatically. You can also diffuse light to adjust the prominence of shadows. Spatial lighting, the third method in the project, lets you place the source of light within an image. You can make the light appear to be coming from any point in the photo, including from within existing objects, like a lit carved pumpkin. It's an incredibly powerful tool that can transform any image simply by changing the lighting condition -- something that's very hard to do manually without having to reshoot. Turn Style is the third Sneaks photography project. Its name is very similar to the turnstiles you see at events, which makes sense for an AI-powered tool that takes 2D objects and brings them into 3D. Adobe research scientist Zhiqin Chen showed me how the 3D Render button can transform a 2D image into a fully rendered 3D object, which you can turn fully around, all 360 degrees. It maintains character consistency, so you don't have to worry about having many AI hallucinations. While AI helps to turn it into a 3D object, you still have control over what degree it's placed and what angle is visible. Some previous Sneaks research projects have eventually ended up in the hands of users, like last year's Photoshop harmonize AI tool, which is now generally available. But there's never a guarantee that will be the case for all of them. What this year's projects show is that Adobe is focusing its AI efforts for photography and photo editing on more practical use cases. "In Photoshop, it's not about just clicking a button for art. It's [about] giving you all the tools to make it easy to achieve whatever you want to achieve," said Stephen Nielsen, senior director for product management for Photoshop.

[5]

Adobe's new Photoshop AI Assistant can automate repetitive tasks

Among the usual slew of AI enhancements to its Creative Cloud apps, Adobe has introduced a new Photoshop AI Assistant to help automate repetitive chores and provide personalized recommendations. At Adobe Max 2025, the company also introduced new tools for Photoshop, Premiere and Lightroom, while launching a new AI generative model and bringing in new third party models from Topaz and others. A key new feature in Photoshop and Express (Adobe's all-in-one design, photo, and video tool) is the AI Assistant that you can chat with in a conversational manner to gain "more control, power and potential time-savings," according to Adobe. With that, you can tell it to take on a series of creative tasks like color correction or resizing. You can easily switch between prompts with the agent and manual tools like sliders to adjust brightness and contrast. It can also provide personalized recommendations and offer tutorials on how to accomplish complex tasks. In a brief demo, Adobe showed that when you switch to Photoshop's "agentic" mode in those apps, it minimizes the usual complex interface and leaves you with a simple prompt-based UI. You can then type in the task you want to accomplish, and the agent will perform those steps automatically. You can then jump back into the full interface to fine tune the result by changing things like brightness or levels. Along with the AI Assistant, Adobe introduced a few other AI tools for Photoshop. Chief among those are new partner models for generative fill, which lets you easily remove unwanted objects and fill in the hole left behind. Those include Google Gemini 2.5 Flash, Black Forest Labs FLUX.1 Kontext and Adobe's latest Firefly Image Models. It also introduced Firefly Image Model 5, Adobe's most advanced image generation model yet. Photoshop also gains a new Generative Upscale option that uses Topaz Labs' AI to upscale small, cropped and other low-resolution images into 4K with "realistic detail," Adobe says.
Another feature, Harmonize, lets you place objects or people into different environments in a realistic manner, eliminating much of the work necessary for such compositing. Harmonize also matches the light, color and tone of foreground objects and people to the background. Premiere, meanwhile, introduced a similar feature called AI Object Mask that performs automatic identification and isolation of people and objects in video, so they can be edited and tracked without any manual rotoscoping. The app also gains new rectangle, ellipse and pen masking to make targeted adjustments, along with a fast vector mask for quicker tracking. Finally, Lightroom is getting a new feature called Assisted Culling. It lets you quickly and easily identify the best images in a large photo collection, with the ability to filter for things like focus level, angles and degrees of sharpness. Photoshop's Generative Fill with Partner Models, Generative Upscale and Harmonize are available to customers today. Premiere's AI Object Mask, Rectangle, Ellipse and Pen Masking and Fast Vector Mask, along with Lightroom's AI Assisted Culling, launch today in beta. Adobe's Photoshop AI Assistant, meanwhile, will be available through a private beta waitlist.

[6]

More Creators Are Using AI, So Adobe's Adding AI Assistants to Photoshop and Express

Adobe is introducing chatbot-like conversational editing to its content editing programs. The new AI assistants in Photoshop and Express will have an emphasis on "conversational, agentic" experiences -- meaning you can ask the chatbot to make edits, and it can independently handle them. The tools are just a few of the updates the company announced on Tuesday at its annual Max creative conference. The AI assistants in Photoshop and Express are in beta now. For example, in Express, you can ask the AI to change the color of an object or remove an obtrusive element. While pro users might be comfortable making those edits manually, the AI assistant might be more appealing to its less experienced users and folks working under a time crunch. Also announced on Tuesday is Project Moonlight, a new platform in beta on Adobe's AI hub, Firefly. It's a new tool that hopes to act as a creative partner. With your permission, it uses your data from Adobe platforms and social media accounts to help you create content. For example, you can ask it to come up with 20 ideas for what to do with your newest Lightroom photos based on your most successful Instagram posts in the past. These AI efforts represent a range of what conversational editing can look like, Mike Polner, Adobe Firefly's vice president of product marketing for creators said in an interview. "One end of the spectrum is [to] type in a prompt and say, 'Make my hat blue.' That's very simplistic," said Polner. "With Project Moonlight, it can understand your context, explore and help you come up with new ideas and then help you analyze the content that you already have," Polner said. While these AI assistants won't be the "everything machines" that ChatGPT or Gemini claim to be, it's a stark sign that Adobe's AI revolution is marching onward. Its focus on agentic AI, like much of the AI industry, aims to persuade people to delegate tasks to AI. 
In a recent Adobe survey of 16,000 global creators, 86% said they use creative generative AI. Over three-fourths (80%) said Gen AI helped them create content they otherwise couldn't have made. The new stats align with a growing popularity of generative media tools, like AI image and video generators, with newer models like OpenAI's Sora and Google's nano banana going viral this year. Adobe's been all in on AI for a while. This year, Adobe introduced AI-first mobile apps for Photoshop, Firefly and a new video editor called Premiere. The professional photographers, designers and illustrators who are Adobe's primary customers haven't all been sold on Adobe's AI ambitions, raising concerns about AI's legality, energy use and ethics. Agentic AI assistants are just the tip of the iceberg of all the news Adobe dropped on Tuesday. For more, check out the new generative audio and music tools in Firefly.

[7]

Adobe adds chatbots to Photoshop and other design apps

The big picture: Adobe has been working for years to show creators how generative AI can be a boon to their jobs rather than an existential threat.

Driving the news: Adobe is using its annual Max conference for creative types to show off a host of AI projects, including the new AI assistants coming to Photoshop and Adobe Express that allow people to describe edits in their own words and automate repetitive tasks.

* Adobe will preview how its tools work within chatbots, starting with Adobe Express in ChatGPT. It expects to support more of its apps as well as other chatbots over time.
* The company says it's also expanding Firefly AI playground to move beyond the concept stage to include AI-driven video editing, soundtrack creation, and voice-over tools.

Between the lines: Adobe's AI assistants can handle simple fixes like removing a background or broader stylistic requests such as "make this more tropical."

Yes, but: The rollout is uneven. Adobe Express' assistant is in public beta; Photoshop's is in private testing. In Firefly, speech and soundtrack generation are public, while video tools remain private.

The bottom line: Adobe's blending of design tools and chatbots hints at a new AI-powered creative workflow.

[8]

Adobe turns Photoshop into a chatbot that edits, renames and collaborates

Adobe Firefly's VP said the goal is to create agentic AI that handles both simple and complex creative tasks. At its Adobe Max conference on Tuesday, Adobe unveiled new AI assistants for Photoshop and Express, including a feature for Photoshop that automatically renames a user's layers with a single prompt. The new tool, which drew loud cheers during the keynote, is designed to solve a common user pain point of navigating disorganized, unnamed layers. This layer-naming function is one of several capabilities of the new chatbot-like AI assistants, which emphasize a "conversational, agentic" experience. Users will be able to ask the chatbot to make edits, such as changing an object's color or removing an element, and the AI will be able to handle the tasks independently. The AI assistant for Adobe Express is available now in a public beta for individual Adobe accounts. The more advanced Photoshop AI assistant is currently in a private beta, with a waitlist available for users to sign up. Adobe's focus on agentic AI, which can delegate tasks to the assistant, aligns with a broader industry trend. According to Mike Polner, Adobe Firefly's vice president of product marketing, this can range from simple prompts like "Make my hat blue" to more complex partnerships. The company also announced Project Moonlight, a new platform in beta on its AI hub, Firefly. Project Moonlight is designed to act as a "creative partner" by using a creator's data from Adobe platforms and social media accounts, with permission. For example, it can be asked to generate 20 ideas for new content based on a user's most successful past Instagram posts and recent Lightroom photos. These announcements come as Adobe continues its significant push into generative AI. A recent Adobe survey of 16,000 creators found that 86% use generative AI, with 80% stating it helped them create content they otherwise could not have made.

[9]

Adobe's new AI assistant in Express is conversational

At Adobe MAX 2025, Adobe unveiled a new AI Assistant built into Adobe Express that aims to make design as natural as talking. The tool, now in beta, lets users describe what they want -- "make it look more tropical," for instance -- and watch the platform adjust colors, images, and fonts in real time. The idea is to take the friction out of design, particularly for people who aren't trained designers. Instead of navigating layers of menus or templates, users can type or say what they want and let the AI handle the details. Adobe says the assistant can modify individual layers, swap out backgrounds, and suggest new design elements while keeping the rest of a project intact. The company also emphasized that the Express assistant draws on Adobe's creative and content intelligence -- essentially the company's long-trained sense of what makes a design cohesive or visually appealing. It uses a mix of Adobe's own Firefly models and third-party AI to recommend tools or generate elements automatically. What sets this apart from other AI-driven design tools is its conversational tone. It doesn't just execute commands but follows up with contextual prompts asking, for example, whether the user wants to change a font to match a new color scheme. For businesses, Adobe plans to extend this technology into its enterprise suite, offering brand-locked templates and collaboration tools so teams can create on-brand content more easily. Some early adopters, like dentsu and Lumen, have suggested it could help non-designers produce professional visuals without slowing down their workflows. For now, the AI Assistant is available in beta on desktop for Adobe Express Premium users, with wider access planned through Adobe's Firefly credit system.

[10]

Adobe's AI assistants show creative software is no longer a passive tool

Project Moonlight will be able to review your designs and suggest social media strategies. Adobe MAX 2025 is underway in LA, and the creative tech giant has announced a slew of new features coming to its software. There's prompt-based image editing in the AI image generator Firefly with Image Model 5, AI upscaling from Topaz Labs in Photoshop, and Premiere gets a new Object Mask for video editing. Adobe's also announced the launch of customisable Firefly AI models for brands and artists. But perhaps the biggest game changer isn't any single new tool but a transformation in how we see creative software and how we interact with it. Adobe's already been building tutorials into its software and adding features like the Contextual Task Bar in Photoshop to put frequent tasks in easy reach. Adobe MAX 2025 saw it reveal the next step: in-app AI assistants that will be able to not only follow instructions but also make suggestions and even review your designs. Now in beta in Adobe Express and Photoshop, Adobe's AI assistants are described as "partners you can talk to". You interact with them using text in natural language and you can ask them to do jobs for you, from tedious tasks like renaming layers in Photoshop, organising a content library or applying presets in bulk, to completing multi-step jobs like designing a social media ad. Adobe says the assistants will understand your goals, anticipate your needs, and carry context from task to task to help you move from idea to final output faster. They'll also adapt to your work and style, so the idea is that over time they'll get better at anticipating what you want to do. What could be more controversial is that the assistants aren't only passive. They can also make suggestions. Change the style of a social media banner in Adobe Express, and the assistant might suggest also changing the text to match the new style. 
You'll even be able to ask the assistants to 'review your design' and to recommend things that could be improved. Adobe insists that creatives will remain in the driver's seat. The AI assistant will only do the jobs you assign to it, and you can always check its work. Further ahead, Adobe expects its assistants to work together across creative apps. This is the idea behind Project Moonlight, which is described as a "personal orchestration assistant" capable of coordinating across multiple Adobe apps and beyond. Adobe says each app will have an AI Assistant that's an expert in its domain, so Photoshop for image editing, Premiere for video, Adobe Lightroom for photography. Project Moonlight will operate "like a conductor of an orchestra, bringing them all together in harmony". The idea is that you'll be able to tell Project Moonlight what you need, and it will unite the AI Assistants as one creative team to help you do it. It will also connect to users' social media accounts to analyse their content and performance. According to Adobe, this AI uber-assistant will be able to "understand your style, projects, and assets", identify social trends and craft content strategies to grow your social media audience. When you use the AI to brainstorm ideas, it will make personalised suggestions and generate images, videos, and social posts aligned to your direction. Adobe has suggested that eventually Project Moonlight may be able to connect to third-party apps and other AI chatbots. This marks a fundamental change in how we work with our software, turning it into more of a collaborator than a tool that needs to be learned and mastered. The move could potentially save hours of watching Photoshop tutorials on YouTube because, at least in theory, you won't need to know what tool to use to complete a task you want to accomplish or where to find it: you just ask your AI buddy.
But if it can propose ideas as well as execute them, it also broadens the scope of creative software beyond what some users might want from it.

[11]

Is Adobe's 'conversational editing' the future of design?

In news that will come as a surprise to nobody, AI was the main focus of the opening keynote at Adobe Max in Los Angeles this morning. From new generative Firefly models (including impressive custom options) to new soundtracking tools, impressive AI features have arrived to enhance pretty much every aspect of the creation process. Among the various announcements was a phrase Adobe kept coming back to: conversational editing. With the advent of new AI assistants for Photoshop, Adobe Express and more, it seems much more of the design experience is set to be driven by prompts and chatbots. But 'conversational editing' also hints at a more human side of designing with AI - which, as Adobe told Creative Bloq, is no accident. Photoshop's new AI Assistant, powered by agentic AI, lets creatives save time by instructing it to take on a series of creative tasks or provide personalised recommendations. In terms of the latter functionality, Adobe described the assistant as a "second set of eyes", offering constructive feedback on a flyer design. But perhaps the biggest cheer of the morning was awarded to the assistant's ability to clean up messy or non-existent layer names. Meanwhile, Adobe Express's new AI Assistant lets users design personalised content simply by describing what they want. The non-destructive tool lets users generate edits on any layer, including fonts and images, keeping the rest of the design intact. This natural, human style of conversational editing was a "very deliberate" focus for Adobe, Govind Balakrishnan, senior vice president and general manager at Adobe Express, told Creative Bloq. "The fact that you can converse to create a design by describing what you want to do - that's where the magic is." And at the end of the keynote, Adobe revealed a sneak peek of a tool that could take the idea even further.
Project Moonlight serves as an AI Assistant that works as a personal social media assistant, letting users upload images from Lightroom and generate ideas for social posts.

Adobe launches new AI assistants for Photoshop and Express that can automate repetitive tasks through conversational interfaces. The company also introduces third-party AI model integration and advanced features like automatic layer naming and enhanced generative fill capabilities.

Adobe Introduces Conversational AI Assistants

Adobe has launched new AI assistants for its Creative Cloud applications Photoshop and Express, marking a significant shift toward conversational editing interfaces. The assistants are designed to automate repetitive tasks and provide personalized recommendations while maintaining creative control for users [1][2].

The Express AI assistant is currently available in public beta for individual Adobe accounts, while Photoshop's AI assistant remains in private beta testing. Adobe's VP of generative AI, Alexandru Costin, explained that the company designed different approaches for each application to target their respective user bases [1](https://techcrunch.com/2025/10/28/adobe-launches-ai-assistants-for-express-and-photoshop/).

Dual-Mode Interface Innovation

For Adobe Express, the company has created a unique dual-mode system that allows users to switch between AI-powered prompts and traditional editing tools. This approach enables users to leverage conversational AI for complex tasks while retaining access to manual controls when needed [1][5].

The Photoshop assistant, positioned in a sidebar interface, can understand different layers and automatically select objects to create masks. One of the most celebrated features allows AI to automatically rename all layers with a single prompt, addressing a long-standing pain point for Photoshop users [2].

Third-Party AI Model Integration

A major development is Adobe's integration of third-party AI models into Photoshop. For generative fill, users can now choose Google's Gemini 2.5 Flash or Black Forest Labs' FLUX.1 Kontext alongside Adobe's own Firefly models, giving them several options for generative fill and image extension [3][5].

Separately, a Generative Upscale option built on Topaz Labs' technology lets users upscale low-resolution images to 4K [3].

Advanced AI-Powered Features

Adobe has introduced several sophisticated AI tools beyond the conversational assistants. The Harmonize feature automatically integrates objects or people into different environments by matching light, color, and tone between layers, significantly reducing the manual work required for realistic compositing [3][5].

For video editing, Premiere Pro receives an AI-powered Object Mask feature that automatically identifies and selects objects or people, eliminating the need for manual rotoscoping when adding effects or adjusting colors [1][5].

Project Moonlight and Future Developments

Adobe unveiled Project Moonlight, a new platform in beta that aims to serve as a creative partner by analyzing, with the user's permission, data from Adobe platforms and social media accounts. The system can generate content ideas based on successful past posts and help users develop creative strategies [2].

The company is also exploring a way to connect Adobe Express with ChatGPT through OpenAI's app integrations API, which would let users create designs directly within ChatGPT [1].

Research Projects and Future Capabilities

At Adobe Max, the company showcased several research projects that demonstrate future AI capabilities. Trace Erase builds upon generative remove technology to completely eliminate objects and their traces, including reflections and environmental effects. Light Touch allows users to transform photo lighting conditions, while Turn Style converts 2D objects into fully rotatable 3D models [4].

These developments reflect Adobe's broader strategy of focusing AI efforts on practical use cases that address real workflow challenges rather than purely artistic generation, as noted by Stephen Nielsen, senior director for product management for Photoshop [4].

Summarized by Navi
