AI Assistants Inadvertently Sharing Confidential Information in Workplace Settings

5 Sources

[1]

AI assistants are ratting you out for badmouthing your coworkers

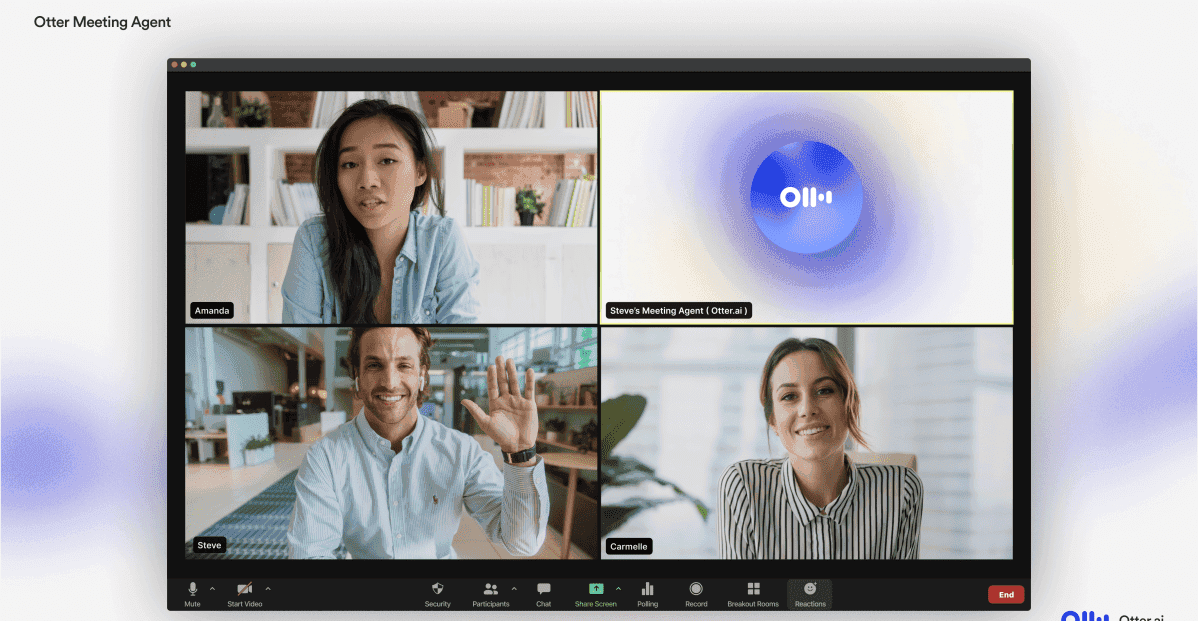

If you've ever been in a Zoom meeting and seen an Otter.ai virtual assistant in the room, just know they're listening to you -- and recording everything you're saying. It's a practice that's become somewhat mainstream in the age of artificial intelligence and hybrid or remote work, but what's alarming is many users don't know the full capabilities of the technology. Virtual assistants like Otter.ai, if you don't know the proper settings to select, will send a recording and transcript to all meeting attendees, even if a guest has left the meeting early. That means if you're talking bad about your coworkers, discussing confidential information, or sharing shoddy business practices, the AI will pick up on it. And it will rat you out. That happened to researcher and engineer Alex Bilzerian recently. He had been on a Zoom meeting with a venture-capital firm and Otter.ai was used to record the call. After the meeting, it automatically emailed him the transcript, which included "hours of their private conversations afterward, where they discussed intimate, confidential details about their business," Bilzerian wrote in an X post last week. Otter.ai was founded in 2016, and provides recording and transcription services that can be connected through Zoom or manually when in a virtual or in-person meeting. The transcript showed that after Bilzerian had logged off, investors had discussed their firm's "strategic failures and cooked metrics," he told The Washington Post. While Bilzerian alerted the investors to the incident, he still decided to kill the deal after they had "profusely apologized." This is just one of many examples of how nascent AI technologies are misunderstood by users. In response to Bilzerian's post on X, other users reported similar situations. "Literally happened to my wife today with a grant meeting at work," another user, Dean Julius wrote on X. "[The] whole meeting [was] recorded and annotated. 
Some folks stayed behind on the call to discuss the meeting privately. Kept recording. Sent it all out to everyone. Suuuuper awkward." Other users pointed out this could become a major issue in the health-care industry as virtual therapy and telehealth sessions become more prominent. "This is going to become a pretty terrible problem in health care, as you can imagine, regarding protected health information," Danielle Kelvas, a physician and medical adviser for medical software company IT Medical, told Fortune. "Health care providers understandably have concerns about privacy. Whether this is an AI-scribe device or AI powered ultrasound device, for example, we as doctors are asking, where is this information going?" Otter.ai, however, insists users can prevent these awkward or embarrassing incidents from happening. "Users have full control over their settings and we work hard to make Otter as intuitive as possible," an Otter.ai spokesperson told Fortune. "Although notifications are built in, we also strongly recommend continuing to ask for consent when using Otter in meetings and conversations and indicate your use of Otter for full transparency." The spokesperson also suggested visiting the company's Help Center to review all settings and preferences. As a means of increasing productivity and having records of important conversations, more businesses have begun implementing AI features into workflows. While it can undoubtedly cut down on the tedious practice of transcribing and sending notes out to stakeholders, AI still doesn't have the same sentience as humans. "AI poses a risk in revealing 'work secrets' due to its automated behaviours and lack of discretion," Sukh Sohal, a senior consultant at data advisory Affinity Reply, told Fortune. "I've had clients express concerns over unintended information sharing. 
This can come about when organizations adopt AI tools without fully understanding their settings or implications, such as auto-transcription continuing after participants have left a meeting." Ultimately, though, humans are the ones who are enabling the tech. "While AI is helping us work faster and smarter, we need to understand the tools we're using," Hannah Johnson, senior vice president of strategy at The Computing Technology Industry Association (CompTIA), told Fortune. "And we can't forget that emotional intelligence and effective communication are just as vital. Technology may be evolving, but human skills remain the glue that holds it all together." Other AI assistants, like Microsoft's Copilot, work similarly to Otter.ai, in that meetings can be recorded and transcribed. But in the case of Copilot, there are some backstops: A user has to either be a part of the meeting or have the organizer approve the share of the recording or transcripts, a Microsoft spokesperson told Fortune. "In Teams meetings, all participants see a notification that the meeting is being recorded or transcribed," the Microsoft spokesperson said in a statement. "Additionally, admins can enable a setting that requires meeting participants to explicitly agree to be recorded and transcribed. Until they provide explicit permission, their microphones and cameras cannot be turned on, and they will be unable to share content." Still, these permissions don't always address human naivety or error. To apply more guardrails to virtual assistant usage, Lars Nyman, chief marketing officer of AI infrastructure company CUDO Compute, said to think of your AI assistant as a junior executive assistant. It's "useful, but not yet seasoned," Nyman told Fortune. "Avoid auto-sending follow-ups; instead, review and approve them manually. Shape AI processes actively, maintaining firm control over what gets shared and when.
The key is not to entrust AI with more autonomy than you'd give to a new hire fresh out of college at this stage."

[2]

AI assistants are blabbing our embarrassing work secrets

Workplace AI tools can do tasks by themselves. Getting them to stop is the problem. Corporate assistants have long been the keepers of company gossip and secrets. Now artificial intelligence is taking over some of their tasks, but it doesn't share their sense of discretion. Researcher and engineer Alex Bilzerian said on X last week that, after a Zoom meeting with some venture capital investors, he got an automated email from Otter.ai, a transcription service with an "AI meeting assistant." The email contained a transcript of the meeting -- including the part that happened after Bilzerian logged off, when the investors discussed their firm's strategic failures and cooked metrics, he told The Washington Post via direct message on X. The investors, whom he would not name, "profusely apologized" once he brought it to their attention, but the damage was already done. That post-meeting chatter made Bilzerian decide to kill the deal, he said. Companies are pumping AI features into work products across the board. Most recently, Salesforce announced an AI offering called Agentforce, which allows customers to build AI-powered virtual agents that help with sales and customer service. Microsoft has been ramping up the capabilities of its AI Copilot across its suite of work products, while Google has been doing the same with Gemini. Even workplace chat tool Slack has gotten in on the game, adding AI features that can summarize conversations, search for topics and create daily recaps. But AI can't read the room like humans can, and many users don't stop to check important settings or consider what could happen when automated tools access so much of their work lives.
Otter responded to Bilzerian's thread on X, saying that users "have full control over conversation sharing permissions and can change, update, or stop the sharing permissions of a conversation anytime. For this specific instance, users have the option not to share transcripts automatically with anyone or to auto-share conversations only with users who share the same Workspace domain." It also shared a link to a guide showing how users can change their settings. Fancy investors aren't the only ones getting burned by new AI features. Rank-and-file employees are also at risk of AI-powered tools recording and sharing damaging information. "I think it's a big issue because the technology is proliferating so fast, and people haven't really internalized how invasive it is," said Naomi Brockwell, a privacy advocate and researcher. Brockwell said the combination of constant recording and AI-powered transcription erodes our privacy at work and opens us up to lawsuits, retaliation and leaked secrets. Sometimes AI note takers catch moments that weren't meant for outside ears. Isaac Naor, a software designer in Los Angeles, said he once received an Otter transcript after a Zoom meeting that contained moments where the other participant muted herself to talk about him. She had no idea, and Naor was too uncomfortable to tell her, he said. OtterPilot, Otter's AI feature that records, transcribes and summarizes meetings, only records audio from the virtual meeting -- meaning if someone is muted, their audio will not be recorded. But if users manually hit record, Otter receives audio from the microphone and speakers. So if the microphone can hear the chatter, so can Otter. Other times, the very presence of an AI tool makes meetings uncomfortable.
Rob Bezdjian, who owns a small events business in Salt Lake City, said he had an awkward call with potential investors after they insisted on recording a meeting through Otter. Bezdjian didn't want his proprietary ideas recorded, so he declined to share some details about his business. The deal didn't go through. In cases where Otter shares a transcript, meeting attendees will be notified that a recording is in process, the company noted. If someone is using OtterPilot, attendees will be notified in the meeting chatbot or via email, and OtterPilot will show up as another participant. Users who connect their calendars to their Otter accounts can also toggle their auto-share settings to "all event guests" to share meeting notes automatically after hitting record. Along with the information users provide during registration, OtterPilot collects automatic screenshots of virtual meetings, text, images or videos that users upload. Otter shares user information with third parties, including AI services that provide back-end support for Otter, advertising partners and law enforcement agencies when required. Similarly, Zoom's AI Companion feature can send meeting summaries to all attendees. Participants get notified and see a sparkle icon or recording badge when a meeting is being recorded or Companion is being used. Zoom's default setting is to send summaries to the meeting host. Both companies said users should adjust their settings to avoid unwanted sharing. Otter also "strongly recommends" asking for consent when using the tool in meetings. And remember: If auto-share settings are on for all participants, everyone will receive details from the full recorded meeting, not just the part that person attended. But Hatim Rahman, an associate professor at Northwestern University's Kellogg School of Management who studies AI's effects on work, believes the onus falls on companies as much as users to ensure that AI products don't lead to unexpected consequences at work. 
"There has to be awareness from companies that people of different ages and tech abilities are going to be using these products," he said. Users could assume that the AI should know when attendees leave meetings and therefore not send them those parts of the transcript. "That's a very reasonable assumption." Although users should take the time to familiarize themselves with the tech, companies could build more friction into the product so that if some attendees leave halfway through the meeting, for example, it could ask the organizer to confirm whether they should still get the full transcript. Too often, the executives who decide to implement companywide AI tools aren't well versed in the risks, said cybersecurity consultant Will Andre. In his previous career as a marketer, he once stumbled across a video of his bosses deciding who would get cut in an upcoming round of layoffs. The software recording the video meeting had been configured to automatically save a copy to the company's public server. (He decided to pretend that he never saw it.) "It's not always your place as an employee to challenge the use of some of this technology inside of workplaces," Andre said. But employees, he noted, have the most to lose.

[3]

AI is spying on your workplace gossip and secrets -- and sharing...

Innovative office tech that utilizes artificial intelligence is listening in on your conversations and runs the risk of leaking company secrets and workplace gossip, turning the tool into a dangerous nuisance. Alex Bilzerian, a researcher and engineer, recently took to X to explain how Otter AI, a platform he used to transcribe a Zoom meeting with a venture capitalist firm, accidentally spilled a confidential conversation. After the meeting ended, Bilzerian received an email of the call's transcript -- and realized the smart assistant had continued to record the conversation even after Bilzerian had logged off. The transcript, he said, included "hours of their [the investors from the venture capital firm] private conversations afterward, where they discussed intimate, confidential details about their business." While the investors "profusely apologized," Bilzerian still decided to stop the deal with their firm, he told The Washington Post. It might be a "reasonable assumption" to think the AI assistants could detect when participants exit a meeting and not send the rest of the transcript, but Hatim Rahman, an associate professor at the Kellogg School of Management at Northwestern University, told The Washington Post that the tech is not always accurate. Otter AI responded to Bilzerian's X post to reiterate the company's commitment to user privacy, explaining that they "understand the concerns" and "are committed to keeping your information private and secure." "Users have full control over conversation sharing permissions and can change, update, or stop the sharing permissions of a conversation anytime," the company wrote. "For this specific instance, users have the option not to share transcripts automatically with anyone or to auto-share conversations only with users who share the same Workspace domain." 
Meanwhile, OtterPilot, the AI assistant that records and transcribes meetings, only captures the audio from the call, so it will not record anything said by a muted participant. People on the call will also receive a notification that the meeting is being recorded and the virtual assistant will appear as a meeting attendee, according to The Washington Post. The company can also collect screenshots of meetings -- including text or other media uploaded by participants -- which can be shared with third parties that support or advertise with Otter or law enforcement in some cases. Rob Bezdjian, the owner of a Salt Lake City events business, once lost a deal opportunity because he refused to allow the potential investors to record the meeting on Otter, telling The Washington Post he was wary of allowing his business ideas to be recorded and omitted certain details as a result. "I think it's a big issue because the technology is proliferating so fast, and people haven't really internalized how invasive it is," researcher and privacy advocate Naomi Brockwell told the outlet. Brockwell warned that AI increases the risk of leaked company secrets and opens up the possibility of lawsuits. Will Andre, a cybersecurity consultant, cautioned against uninformed, widespread use of AI tools across companies, telling The Washington Post that in his former marketing role he came across a recording on the company's public servers containing footage of his bosses discussing layoffs. "There has to be awareness from companies that people of different ages and tech abilities are going to be using these products," Rahman added. AI-powered software and devices have been under harsh scrutiny lately, as more companies integrate the tech into their products.

[4]

Some AI Assistants Have This Big Flaw. They Talk Too Much

The report centers on how AI is replacing some workplace tasks that would have previously been done by office assistants. It seems like a perfect solution, really -- digital AI assistants may be more reliable, they don't take vacation breaks, and you don't necessarily have to pay much to use them. Taking notes from meetings is one task frequently delegated to assistants, and now there are a horde of AI tools that can take on the job. The Post relates a story from a researcher and engineer called Alex Bilzerian who was using one of these tools, Otter.ai, recently during a Zoom meeting with some investors. When the meeting was done, Otter auto-emailed him with a transcription of the meeting generated by an AI that had digested the chat. That sounds incredibly useful: no need for note taking! No "to do lists" or memos. But Bilzerian was stunned to discover that the transcript contained the investors' chat that happened after he'd left the meeting. Including discussion of "strategic failures and cooked metrics." The financiers apologized when he mentioned the issue, but their unexpected criticism caused Bilzerian to kill the deal. Otter.AI explained how its privacy controls can be adjusted to change the details of information sharing, in a response to Bilzerian's post about the scandal on X. Though the post was a simple bit of corporate, ah, behind-covering, it lacked any sincere apology. More significantly, it highlighted that unlike established digital office tools, AI tools can be like the digital Wild West, free from regulation, open, and able to do things that surprise you -- both positively and negatively. And sometimes when a leader rushes to embrace AI as an example of "the next big thing to boost your business" they can be naive about the risks of AI tech.

[5]

Is AI Accidentally Spilling Your Company's Secrets? A VC Firm's Private Conversations Were Included in Public Meeting Transcripts.

AI has sparked other privacy concerns, especially around surveillance. Can AI be trusted with confidential information? That was the question at stake when popular AI transcription service Otter, which has more than 14 million users, sent an automatic transcript of a Zoom meeting to AI researcher Alex Bilzerian last week after he met with a VC firm. The issue is that Bilzerian wasn't meant to see the full transcript, which also transcribed conversations he wasn't meant to hear between the VCs after the official call. Bilzerian wrote that the AI included "hours of their private conversations" including "intimate, confidential details about their business" in a post on X that has been viewed over five million times. Otter responded to Bilzerian on X and stated that the VC company could have opted not to share the transcript automatically. It is unclear if the company forgot to end a Zoom call or turn off the AI transcription after the call. Bilzerian told The Washington Post that he decided not to go through with the deal based on the transcript he received. X users raised concerns about AI's increased footprint in meetings and shared related anecdotes in response to Bilzerian's post. Mark Cecchini, director of wealth solutions at Quadrant Capital, wrote that AI assistants were joining meetings more often without being cleared to do so. Kristen Ruby, CEO of public relations firm Ruby Media Group, pointed out that virtual therapy happens through recorded video calls, leading to a possible data hack. One X user wrote that his wife got an AI transcript of a private meeting while another claimed they received a transcript after an interview with a recorded conversation about why they weren't a good fit. AI privacy concerns aren't new.
According to the Center for Strategic and International Studies, AI has the potential to increase the scope of surveillance by enhancing facial recognition technology and predictive algorithms on social media. However, AI may have an upside. A May 2024 Goldman Sachs study estimates that AI could increase productivity by 25% and notes that "the early signals of future productivity gains look very, very positive."

AI-powered transcription services are causing privacy concerns by recording and sharing confidential conversations in professional settings, highlighting the need for better understanding and control of AI tools in the workplace.

AI Assistants Expose Confidential Workplace Conversations

In an era of increasing reliance on artificial intelligence (AI) in the workplace, a concerning trend has emerged: AI-powered transcription services are inadvertently sharing confidential information, potentially compromising business deals and personal privacy. This issue has come to light following several incidents where AI assistants continued recording and transcribing conversations even after official meetings had concluded.

The Otter.ai Incident

One notable case involved researcher and engineer Alex Bilzerian, who received an automated transcript from Otter.ai after a Zoom meeting with venture capital investors. The transcript not only included the meeting's content but also "hours of their private conversations afterward, where they discussed intimate, confidential details about their business," including "strategic failures and cooked metrics" [1][2]. This unexpected revelation led Bilzerian to terminate the potential deal with the investors.

Widespread Concerns

Bilzerian's experience is not isolated. Other users have reported similar incidents, raising alarms across various industries:

- A grant meeting where private discussions were recorded and sent to all attendees [1]
- Concerns in the healthcare sector about protecting patient information during virtual therapy and telehealth sessions [1]
- Instances of AI tools capturing muted conversations or continuing to record after participants have left meetings [2][3]

AI Tools and Their Capabilities

Several AI-powered tools are at the center of these privacy concerns:

- Otter.ai: Provides recording and transcription services that can be integrated with Zoom or used manually in virtual or in-person meetings [1].
- Microsoft's Copilot: Offers similar functionality but with some additional safeguards [1].
- Salesforce's Agentforce: Allows customers to build AI-powered virtual agents for sales and customer service [2].
- Google's Gemini and Slack's AI features: Provide various AI-powered functionalities for workplace communication and productivity [2].

Privacy Settings and User Control

In response to these incidents, AI companies have emphasized the importance of user control and proper settings:

- Otter.ai states that users have "full control over conversation sharing permissions" and recommends reviewing all settings in its Help Center [1][3].
- Microsoft's Copilot shares recordings or transcripts only with people who were part of the meeting, unless the organizer approves sharing them with someone else [1].
- Otter and Zoom both advise users to adjust their settings to prevent unwanted sharing, and Otter also recommends asking for consent before using its tool in meetings [2][4].
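As a rough illustration of the extra friction Hatim Rahman suggests in [2], the Python sketch below trims a transcript to the portion each attendee was actually present for and flags anyone who left early, so the organizer can confirm before more is sent. The `Segment` and `Attendance` types and both function names are hypothetical, invented for this example; they do not describe how Otter, Zoom or Microsoft actually implement sharing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One transcribed utterance; times are seconds from the start of the recording."""
    start: float
    end: float
    speaker: str
    text: str


@dataclass(frozen=True)
class Attendance:
    """When a (hypothetical) attendee joined and left the call."""
    email: str
    joined: float
    left: float


def visible_segments(attendee, segments):
    """Keep only segments that fall entirely inside the attendee's time on the call."""
    return [
        s for s in segments
        if s.start >= attendee.joined and s.end <= attendee.left
    ]


def needs_organizer_confirmation(attendee, recording_end):
    """Flag attendees who left before the recording stopped."""
    return attendee.left < recording_end


# Made-up example data: the guest leaves after 30 minutes, the host stays on.
segments = [
    Segment(0, 60, "Founder", "Here is the pitch."),
    Segment(60, 120, "Investor", "Thanks, we will be in touch."),
    Segment(1900, 1960, "Investor", "Private debrief after the guest left."),
]
guest = Attendance("guest@example.com", joined=0, left=1800)
host = Attendance("host@example.com", joined=0, left=2000)

guest_view = visible_segments(guest, segments)
host_view = visible_segments(host, segments)
```

In this made-up example, the guest would automatically receive only the first two segments, and the organizer would be asked before the guest got anything recorded after leaving.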

Implications for Workplace Privacy

The proliferation of AI in the workplace has raised significant concerns about privacy and data security:

- Erosion of workplace privacy and increased risk of lawsuits and retaliation [2].
- Potential leaks of company secrets and proprietary information [3][5].
- Uncomfortable situations arising from AI tools recording private or off-the-record conversations [2].

Expert Opinions

Experts in the field have weighed in on the issue:

- Naomi Brockwell, a privacy advocate, warns that the technology is "proliferating so fast" that people haven't internalized its invasive nature [2][3].
- Hatim Rahman, an associate professor at Northwestern University, believes that companies share responsibility in ensuring AI products don't lead to unexpected consequences [2].
- Hannah Johnson from CompTIA emphasizes the continued importance of human skills and emotional intelligence alongside evolving technology [1].

Future Considerations

As AI continues to integrate into workplace processes, several key points emerge:

- The need for better user education on AI tool capabilities and settings [4][5].
- The importance of developing AI with better contextual understanding and discretion [1][2].
- The potential for regulatory measures to address privacy concerns in AI-assisted workplaces [2][5].

As the landscape of AI in the workplace evolves, it's clear that balancing the benefits of increased productivity with the need for privacy and discretion will be an ongoing challenge for businesses and technology developers alike.

Summarized by Navi
