AI-Assisted Doctor Responses: The Future of Patient Communication

4 Sources

[1]

Your doctor might be using AI to answer your questions - here's how that works

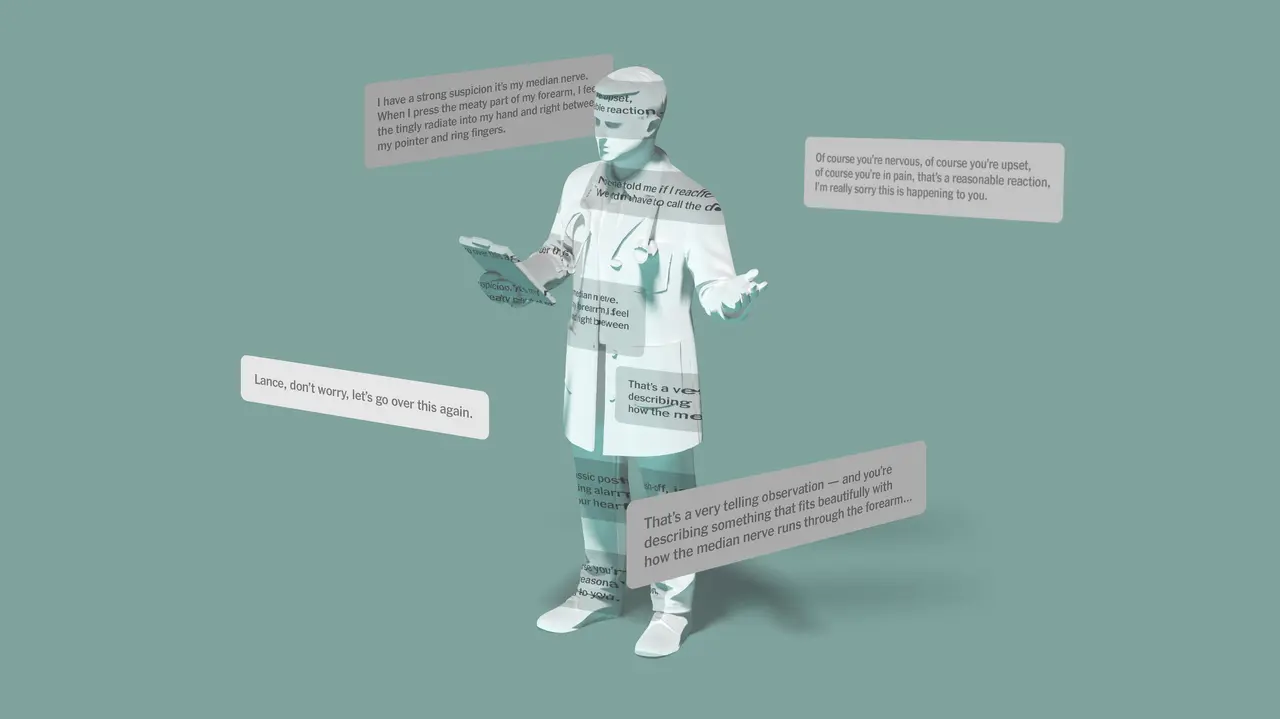

A popular patient portal called MyChart now employs an AI bot that thousands of US doctors are using to write messages to their patients. Disclosure is optional.

The next time you read a message in MyChart from your doctor, that message might be from an AI bot. According to a New York Times report, about 15,000 doctors and assistants from 150 healthcare systems across the US are taking advantage of a new tool in MyChart, a popular patient portal used by many medical offices and hospitals, that uses AI to respond to messages.

By default, MyChart doesn't tell the recipient that AI composed the message. However, some doctors are asking patients if it's OK to use the AI tool, and some healthcare systems have opted to add a disclosure to each message.

Here's how it works: When a medical professional opens a patient's question to respond, they see a pre-written response, presented in the doctor's voice, instead of a blank screen. AI writes the response based on the question, the patient's medical records, and the patient's medicine list. MyChart uses GPT-4, the same technology that powers ChatGPT (but a specific version that complies with medical privacy laws). The medical provider can accept the message as is, edit it, or toss it aside completely. The hope is that by letting doctors simply edit and approve messages instead of writing them all from scratch, they'll be able to help patients more quickly.

Naturally, there are some concerns. What happens if the AI hallucinates, offers up a bad answer, and a harried doctor approves it? Although AI has been acing medical school exams and has proven useful to doctors, it's a fair point of concern. Epic, the company behind MyChart, said that doctors send fewer than one-third of AI-written messages without editing, an indication that they're being somewhat vigilant about catching errors. An Epic representative also explained that the tool is most appropriate for administrative questions, such as a patient asking when an appointment is or requesting to reschedule.

[2]

Asking medical questions through MyChart? Your doctor may let AI respond

[3]

That Message From Your Doctor? It May Have Been Drafted by A.I.

Overwhelmed by queries, physicians are turning to artificial intelligence to correspond with patients. Many have no clue that the replies are software-generated.

Every day, patients send hundreds of thousands of messages to their doctors through MyChart, a communications platform that is nearly ubiquitous in U.S. hospitals. They describe their pain and divulge their symptoms -- the texture of their rashes, the color of their stool -- trusting the doctor on the other end to advise them. But increasingly, the responses to those messages are not written by the doctor -- at least, not entirely.

About 15,000 doctors and assistants at more than 150 health systems are using a new artificial intelligence feature in MyChart to draft replies to such messages. Many patients receiving those replies have no idea that they were written with the help of artificial intelligence. In interviews, officials at several health systems using MyChart's tool acknowledged that they do not disclose that the messages contain A.I.-generated content.

The trend troubles some experts who worry that doctors may not be vigilant enough to catch potentially dangerous errors in medically significant messages drafted by A.I. In an industry that has largely used A.I. to tackle administrative tasks like summarizing appointment notes or appealing insurance denials, critics fear that the wide adoption of MyChart's tool has allowed A.I. to edge into clinical decision-making and doctor-patient relationships.

[4]

That message from your doctor? It may have been drafted by AI

Already the tool can be instructed to write in an individual doctor's voice. But it does not always draft correct responses.

"The sales pitch has been that it's supposed to save them time so that they can spend more time talking to patients," said Athmeya Jayaram, a researcher at the Hastings Center, a bioethics research institute in Garrison, New York. "In this case, they're trying to save time talking to patients with generative AI."

During the peak of the pandemic, when in-person appointments were often reserved for the sickest patients, many turned to MyChart messages as a rare, direct line of communication with their doctors. It wasn't until years later that providers realized they had a problem: Even after most aspects of health care returned to normal, they were still swamped with patient messages. Already overburdened doctors were suddenly spending lunch breaks and evenings replying to patient messages. Hospital leaders feared that if they didn't find a way to reduce this extra -- largely nonbillable -- work, the patient messages would become a major driver of physician burnout.

So in early 2023, when Epic, the software giant that developed MyChart, began offering a new tool that used AI to compose replies, some of the country's largest academic medical centers were eager to adopt it. Instead of starting each message with a blank screen, a doctor sees an automatically generated response above the patient's question. The response is created with a version of GPT-4 (the technology underlying ChatGPT) that complies with medical privacy laws. The MyChart tool, called In Basket Art, pulls in context from the patient's prior messages and information from his or her electronic medical records, like a medication list, to create a draft that providers can approve or change.

By letting doctors act more like editors, health systems hoped they could get through their patient messages faster and spend less mental energy doing it. This has been partially borne out in early studies, which have found that Art did lessen feelings of burnout and cognitive burden, but did not necessarily save time.
Several hundred clinicians at UC San Diego Health, more than a hundred providers at UW Health in Wisconsin and every licensed clinician at Stanford Health Care's primary care practices -- including doctors, nurses and pharmacists -- have access to the AI tool. Dozens of doctors at Northwestern Health, NYU Langone Health and UNC Health are piloting Art while leaders consider a broader expansion.

In the absence of strong federal regulations or widely accepted ethical frameworks, each health system decides how to test the tool's safety and whether to inform patients about its use.

Some hospital systems, like UC San Diego Health, put a disclosure at the bottom of each message explaining that it has been "generated automatically," and reviewed and edited by a physician. "I see, personally, no downside to being transparent," said Dr. Christopher Longhurst, the health system's chief clinical and innovation officer. Patients have generally accepted the new technology, he said. (One doctor received an email saying, "I want to be the first to congratulate you on your AI co-pilot and be the first to send you an AI-generated patient message.")

Other systems -- including Stanford Health Care, UW Health, UNC Health and NYU Langone Health -- decided that notifying patients would do more harm than good. Some administrators worried that doctors might see the disclaimer as an excuse to send messages to patients without properly vetting them, said Dr. Brian Patterson, the physician administrative director for clinical AI at UW Health. And telling patients the message had AI content might cheapen the clinical advice, even though it was endorsed by their physicians, said Dr. Paul Testa, chief medical information officer at NYU Langone Health.

To Jayaram, whether to disclose use of the tool comes down to a simple question: What do patients expect?
When patients send a message about their health, he said, they assume that their doctors will consider their history, treatment preferences and family dynamics -- intangibles gleaned from long-standing relationships. "When you read a doctor's note, you read it in the voice of your doctor," he said. "If a patient were to know that, in fact, the message that they're exchanging with their doctor is generated by AI, I think they would feel rightly betrayed."

To many health systems, creating an algorithm that convincingly mimics a particular physician's "voice" helps make the tool useful. Indeed, Epic recently started giving its tool greater access to past messages, so that its drafts could imitate each doctor's individual writing style. Brent Lamm, deputy chief information officer for UNC Health, said this addressed common complaints he heard from doctors: "My personal voice is not coming through" or "I've known this patient for seven years. They're going to know it's not me."

Health care administrators often refer to Art as a low-risk use of AI, since ideally a provider is always reading through the drafts and correcting mistakes. The characterization annoys researchers who study how humans work in relation to AI. Ken Holstein, a professor at the Human-Computer Interaction Institute at Carnegie Mellon, said the portrayal "goes against about 50 years of research." Humans have a well-documented tendency, called automation bias, to accept an algorithm's recommendations even if they contradict their own expertise, he said. This bias could cause doctors to be less critical while reviewing AI-generated drafts, potentially allowing dangerous errors to reach patients.

And Art is not immune to mistakes. A recent study found that seven of 116 AI-generated drafts contained so-called hallucinations -- fabrications that the technology is notorious for conjuring. Dr. Vinay Reddy, a family medicine doctor at UNC Health, recalled an instance in which a patient messaged a colleague to check whether she needed a hepatitis B vaccine. The AI-generated draft confidently assured the patient she had gotten her shots and provided dates for them. This was completely false, and occurred because the model didn't have access to her vaccination records, he said.

A small study published in The Lancet Digital Health found that GPT-4, the same AI model that underlies Epic's tool, made more insidious errors when answering hypothetical patient questions. Physicians reviewing its answers found that the drafts, if left unedited, would pose a risk of severe harm about 7% of the time.

What reassures Dr. Eric Poon, chief health information officer at Duke Health, is that the model produces drafts that are still "moderate in quality," which he thinks keeps doctors skeptical and vigilant about catching errors. On average, fewer than a third of AI-generated drafts are sent to patients unedited, according to Epic, an indication to hospital administrators that doctors are not rubber-stamping messages. "One question in the back of my mind is, what if the technology got better?" he said. "What if clinicians start letting their guard down? Will errors slip through?"

Epic has built guardrails into the programming to steer Art away from giving clinical advice, said Garrett Adams, a vice president of research and development at the company. Adams said the tool was best suited to answer common administrative questions like "When is my appointment?" or "Can I reschedule my checkup?" But researchers have not developed ways to reliably force the models to follow instructions, Holstein said. Dr. Anand Chowdhury, who helped oversee deployment of Art at Duke Health, said he and his colleagues repeatedly adjusted instructions to stop the tool from giving clinical advice, with little success. "No matter how hard we tried, we couldn't take out its instinct to try to be helpful," he said.

Three health systems told The New York Times that they removed some guardrails from the instructions. Longhurst at UC San Diego Health said the model "performed better" when language that instructed Art not to "respond with clinical information" was removed. Administrators felt comfortable giving the AI more freedom since doctors review its messages. Stanford Health Care took a "managed risk" to allow Art to "think more like a clinician," after some of the strictest guardrails seemed to make its drafts generic and unhelpful, said Dr. Christopher Sharp, the health system's chief medical information officer.

Beyond questions of safety and transparency, some bioethicists have a more fundamental concern: Is this how we want to use AI in medicine? Unlike many other AI health care tools, Art isn't designed to improve clinical outcomes (though one study suggested responses may be more empathetic and positive), and it isn't targeting strictly administrative tasks. Instead, AI seems to be intruding on rare moments when patients and doctors could actually be communicating with one another directly -- the kind of moments that technology should be enabling, said Daniel Schiff, co-director of the Governance and Responsible AI Lab at Purdue University. "Even if it was flawless, do you want to automate one of the few ways that we're still interacting with each other?"

This article originally appeared in The New York Times.

Healthcare providers are increasingly using AI to draft responses to patient inquiries. This trend raises questions about efficiency, accuracy, and the changing nature of doctor-patient relationships in the digital age.

The Rise of AI in Healthcare Communication

In a significant shift in healthcare communication, doctors are increasingly turning to artificial intelligence (AI) to help draft responses to patient inquiries. About 15,000 doctors and assistants at roughly 150 U.S. health systems now use an AI tool in the MyChart patient portal for this purpose, a trend that is reshaping doctor-patient interactions in the digital age. [1]

How AI-Assisted Responses Work

When a patient sends a message through MyChart, the AI tool generates a draft reply, written in the doctor's voice, that the provider sees in place of a blank screen. The draft is based on the patient's question, medical records, and medication list. The provider can send the draft as is, edit it, or discard it entirely; according to Epic, fewer than a third of drafts are sent without edits. [2]

Benefits and Concerns

Proponents argue that AI-assisted responses can help doctors get through patient messages faster and with less mental effort, easing a largely nonbillable workload that hospital leaders feared would become a major driver of physician burnout. Early studies found that the tool lessened feelings of burnout and cognitive burden, but did not necessarily save time. [4]

However, the use of AI in patient communication also raises concerns. Critics worry that doctors, subject to automation bias, may not be vigilant enough to catch dangerous errors in AI drafts, and that AI is edging into clinical decision-making and doctor-patient relationships. There are also questions about how transparent healthcare providers should be about their use of AI in crafting responses. [4]

Regulatory Landscape and Future Implications

As this technology becomes more widespread, oversight has not kept pace: in the absence of strong federal regulations or widely accepted ethical frameworks, each health system decides for itself how to test the tool's safety and whether to tell patients about its use. [4]

Patient Perspectives and Adaptation

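Evidence on how patients feel about AI-assisted responses is still thin, partly because many health systems do not tell patients the tool is being used. At UC San Diego Health, which adds a disclosure to each message, patients have generally accepted the technology, according to its chief clinical and innovation officer. Athmeya Jayaram, a researcher at the Hastings Center bioethics institute, argues that patients expect to hear their own doctor's voice and would "feel rightly betrayed" to learn a message was AI-generated. As this technology becomes more prevalent, patients may need to adapt to a new paradigm of healthcare communication, balancing the benefits of efficiency with the desire for personalized care. [4]

The Future of AI in Healthcare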

As AI continues to evolve, its role in healthcare is likely to expand beyond patient communication. Future applications could include more advanced diagnostic tools, personalized treatment plans, and predictive health analytics. However, the integration of AI in healthcare will require ongoing evaluation to ensure it enhances rather than diminishes the quality of patient care. [1]

Summarized by Navi
