Nvidia's $20B Groq bet and Vera Rubin platform reveal how AI inference is splitting the GPU era

3 Sources


[1]

Jensen Huang discusses the economics of inference, power delivery, and more at CES 2026 press Q&A session -- 'You sell a chip one time, but when you build software, you maintain it forever'

Developing chips for AI data centers is a complicated business. When Nvidia CEO Jensen Huang spoke at Nvidia's CES 2026 keynote, the conversation stretched across Rubin, power delivery, inference economics, open models, and more. Afterward, Tom's Hardware attended a press Q&A session with Huang in Las Vegas, Nevada. While we can't share the transcript in its entirety, we've drilled down on some of Huang's most important statements from the session, with some parts edited for flow and clarity. We recommend first familiarizing yourself with the topics announced at Nvidia's CES 2026 keynote, because the highlights below reference parts of what Huang discussed onstage.

Huang's answers during the Q&A helped clarify how Nvidia is approaching the next phase of large-scale AI deployment, with a consistent emphasis on keeping systems productive once they are installed. Those of you who remember our previous Jensen Huang Computex Q&A will recall the CEO's grand vision of a 50-year plan for deploying AI infrastructure. Nvidia is designing for continuous inference workloads, constrained power environments, and platforms that must remain useful as models and deployment patterns change. Other comments, made at a separate Q&A for analysts, also touched on SRAM versus HBM deployment.

Those priorities explain recent architectural choices around serviceability, power smoothing, unified software stacks, and support for open models, and they help paint a picture of how Nvidia is thinking about scaling AI infrastructure beyond the initial buildout phase unfolding right now. The most consistent theme running through the Q&A was Nvidia's focus on keeping systems productive under real-world conditions, with Huang spending the lion's share of the time discussing downtime, serviceability, and maintenance.
That's quite evident in how Nvidia is positioning its upcoming Vera Rubin platform. "Imagine today we have a Grace Blackwell 200 or 300. It has 18 nodes, 72 GPUs, and an NVLink 72 with nine switch trays. If there's ever an issue with any of the cables or switches, or if the links aren't as robust as we want them to be, or even if semiconductor fatigue happens over time, you eventually want to replace something," Huang said. Rather than parroting performance metrics, Huang repeatedly described the economic impact of racks going offline and the importance of minimizing disruption when components fail. At the scale Nvidia's customers now operate, failures are inevitable. GPUs fail, interconnects degrade, and power delivery fluctuates. The question Nvidia is trying to answer is how to prevent those failures from cascading into prolonged outages. "When we replace something today, we literally take the entire rack down. It goes to zero. That one rack, which costs about $3 million, goes from full utilization to zero and stays down until you replace the NVLink or any of the nodes and bring it back up." Rubin's tray-based architecture is designed as the answer to that question. By breaking the rack into modular, serviceable units, Nvidia aims to turn maintenance into a routine operation. The goal is not zero failure -- that's impossible -- but fast recovery, with the rest of the system continuing to run while a faulty component is replaced. "And so today, with Vera Rubin, you literally pull out the NVLink, and you keep on going. If you want to update the software for any link switch, you can update the software while it's running. That allows us to keep the systems in operation for a longer period of time." This emphasis on serviceability also influences how Rubin is assembled and deployed. Faster assembly and standardized trays reduce the amount of time systems spend idle during installation and repair. 
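To put Huang's downtime argument in rough numbers, the Python sketch below counts the GPU-hours lost during a single repair. It is an illustration under stated assumptions, not Nvidia's accounting: the 18-node, 72-GPU layout comes from Huang's description of a Grace Blackwell rack, while the four-hour repair window and the idea that the other nodes keep serving during a swap (as Huang describes for Vera Rubin's NVLink trays) are hypothetical. The helper `gpu_hours_lost` is likewise illustrative.

```python
# Capacity lost during one repair, under stated assumptions.
# Rack layout from Huang's Grace Blackwell description: 18 nodes, 72 GPUs.

NODES_PER_RACK = 18
GPUS_PER_RACK = 72
GPUS_PER_NODE = GPUS_PER_RACK // NODES_PER_RACK  # 4 GPUs per node


def gpu_hours_lost(repair_hours: float, whole_rack_down: bool) -> float:
    """GPU-hours of capacity lost while one failed node is replaced.

    whole_rack_down=True models the behavior Huang describes today (the rack
    "goes to zero"); False assumes, hypothetically, that only the failed
    node's GPUs go idle while the rest of the rack keeps serving.
    """
    idle_gpus = GPUS_PER_RACK if whole_rack_down else GPUS_PER_NODE
    return idle_gpus * repair_hours


if __name__ == "__main__":
    repair = 4.0  # hypothetical hours to swap a node and bring it back up
    full = gpu_hours_lost(repair, whole_rack_down=True)
    tray = gpu_hours_lost(repair, whole_rack_down=False)
    print(f"whole rack down: {full:.0f} GPU-hours lost")  # 288
    print(f"single node out: {tray:.0f} GPU-hours lost")  # 16
    print(f"ratio: {full / tray:.0f}x")                   # 18x
```

Whatever the real repair time, the ratio is simply GPUs per rack over GPUs per node, which is why isolating a failure to one tray matters more than shaving minutes off the fix itself.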
In a world where racks represent millions of dollars in capital and generate revenue continuously, idle time is expensive, so Nvidia is understandably treating uptime as a core performance metric on par with throughput. "Everything comes down to the fact that it used to take two hours to assemble this node. But now, Vera Rubin takes five minutes. Going from two hours to five minutes is completely transformative in our supply chain." "The fact that there are no cables, instead of 43 cables, and that it's 100% liquid-cooled instead of 80% liquid-cooled, makes a huge difference."

Rather than focusing on average power consumption, the discussion of power kept circling back to instantaneous demand, which is where large-scale AI deployments increasingly run into trouble. Modern AI systems spike hard and unpredictably as workloads ramp up and down, particularly during inference bursts. Those short-lived peaks force operators to provision power delivery and cooling for worst-case scenarios, even if those scenarios occur only briefly. The result is stranded capacity across the data center: infrastructure built to handle peaks that rarely last long enough to justify the expense.

Nvidia's answer with Rubin is to attack the problem at the system level rather than pushing it upstream to the facility. By managing power behavior inside the rack, Nvidia is attempting to flatten demand spikes before they hit the broader power distribution system. With each Rubin GPU carrying a TDP of 1,800 W, this means coordinating how compute, networking, and memory subsystems draw power so the rack presents a more predictable load profile to the data center. That lets operators run closer to their sustained power limits instead of designing around transient excursions that otherwise dictate everything from transformer sizing to battery backup.
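Nvidia hasn't published how Rubin's power smoothing works internally, so the sketch below shows only the generic idea of peak shaving: a local energy buffer covers demand above a fixed cap and recharges in the troughs, so the facility sees a flatter draw. A second helper shows what that buys when each rack would otherwise be provisioned for a transient swing, which Huang puts at as much as 25%. The demand trace, buffer size, 200 kW rack, 100 MW site, and both function names are hypothetical, and conversion losses are ignored.

```python
import math
from typing import List


def smooth_grid_draw(demand_kw: List[float], cap_kw: float,
                     buffer_kj: float, dt_s: float = 1.0) -> List[float]:
    """Power drawn from the facility at each time step.

    Generic peak-shaving sketch (not Nvidia's design): a local energy buffer
    of `buffer_kj` covers demand above `cap_kw`, then recharges from the
    facility using headroom when demand falls below the cap. Losses ignored.
    """
    stored = buffer_kj  # start with a fully charged buffer
    grid: List[float] = []
    for d in demand_kw:
        if d > cap_kw:
            # Discharge to cover the excess, limited by the energy left.
            discharge_kw = min(d - cap_kw, stored / dt_s)
            stored -= discharge_kw * dt_s
            grid.append(d - discharge_kw)
        else:
            # Recharge with whatever headroom remains under the cap.
            charge_kw = min(cap_kw - d, (buffer_kj - stored) / dt_s)
            stored += charge_kw * dt_s
            grid.append(d + charge_kw)
    return grid


def racks_supported(facility_kw: float, rack_sustained_kw: float,
                    transient_swing: float) -> int:
    """Racks that fit a fixed power budget when each must be provisioned
    for its sustained draw plus a transient swing (0.25 means 25%)."""
    return math.floor(facility_kw / (rack_sustained_kw * (1.0 + transient_swing)))


if __name__ == "__main__":
    # Hypothetical rack trace in kW: ~100 kW baseline with 25% spikes.
    demand = [100, 125, 125, 100, 80, 125]
    smoothed = smooth_grid_draw(demand, cap_kw=110, buffer_kj=40)
    print(max(demand), max(smoothed))  # 125 110

    # Hypothetical 100 MW site with 200 kW racks.
    print(racks_supported(100_000, 200, 0.25))  # 400: sized for spikes
    print(racks_supported(100_000, 200, 0.0))   # 500: spikes absorbed locally
```

Sizing for spikes fits 400 racks where a fully smoothed load fits 500. Put differently, 25% headroom above sustained draw means 20% of the provisioned budget sits unused on average, the stranded power Huang describes. The zero-swing case assumes smoothing absorbs spikes entirely, which is the optimistic end of the range.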
"When we're training these models, all the GPUs are working harmoniously, which means at some point they all spike on, or they all spike off. Because of the inductance in the system, that instantaneous current swing is really quite significant. It can be as high as 25%." A rack that can deliver slightly lower peak throughput but maintain it consistently is more useful than one that hits higher numbers briefly before power constraints force throttling or downtime. As inference workloads become continuous and revenue-linked, that consistency will be all the more valuable. The same logic helps explain Nvidia's continued push toward higher-temperature liquid cooling. Operating at elevated coolant temperatures reduces dependence on chillers, which are among the most power-hungry components in a data center. It also widens the range of environments where these systems can be deployed, particularly in regions where water availability, cooling infrastructure, or grid capacity would otherwise be limiting factors. "For most data centers, you either overprovision by about 25%, which means you leave 25% of the power stranded, or you put whole banks of batteries in to absorb the shock. Vera Rubin does this with system design and electronics, and that power smoothing lets you run at close to 100% all the time." Taken together, the emphasis on power smoothing, load management, and higher-temperature cooling points to a shift in where efficiency gains are coming from; Nvidia is optimizing for predictability, utilization, and infrastructure efficiency. A system that can run near its limits continuously, without forcing the rest of the data center to be overbuilt around it, ultimately delivers more value than one that is faster on paper but constrained by the realities of power delivery. Huang repeatedly returned to inference as the defining workload for modern AI deployments. 
Training remains important, but it is episodic, whereas inference runs continuously, generates revenue directly, and exposes every inefficiency in a system. This shift is driving Nvidia away from traditional performance metrics like FLOPS and toward measures tied to output over time. What matters is how many useful tokens a system can generate per watt and per dollar, not how fast it can run a single benchmark. "Today, [with] tokenomics, you monetize the tokens you generate. If within one data center, with limited power, we can generate more tokens, then your revenues go up. That's why tokens per watt and tokens per dollar matter in the final analysis." While there is growing interest in cheaper memory tiers for inference, Huang also emphasized the long-term cost of fragmenting software ecosystems. Changing memory models may offer short-term savings, but it creates long-term obligations to maintain compatibility as workloads evolve. "Building a chip is expensive," says Huang, "but the most expensive part of the IT industry is the software layer on top. You sell a chip one time, but when you build software, you maintain it forever." Nvidia's strategy, as articulated by Huang, is to preserve flexibility even at the cost of some theoretical efficiency. AI workloads are changing too quickly for narrowly optimized systems to remain relevant for long. By keeping a unified software and memory model, Nvidia believes it can absorb changes in things like model architecture, context length, and deployment patterns without stranding capacity. "Instead of optimizing seventeen different stacks, you optimize one stack, and that one stack runs across your entire fleet. When we update the software, the entire tail of AI factories increases in performance," explained Huang. The obvious implication of this is that Nvidia is prioritizing durability over specialization. 
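Huang's "tokenomics" argument reduces to simple arithmetic: when a site's power budget is fixed, token output, and therefore revenue, scales directly with tokens per watt. The Python sketch below makes that explicit. Tokens per watt is computed as tokens per second divided by watts, i.e. tokens per joule. The 1 MW site, the $1-per-million-token price, the two efficiency figures, and the function names are hypothetical, not Nvidia data.

```python
def tokens_per_joule(tokens_per_second: float, power_watts: float) -> float:
    """Tokens per watt-second: throughput divided by power (1 W = 1 J/s)."""
    return tokens_per_second / power_watts


def tokens_per_dollar(total_tokens: float, total_cost_usd: float) -> float:
    """Tokens produced per dollar of all-in cost (hardware, power, operations)."""
    return total_tokens / total_cost_usd


def daily_revenue_usd(site_watts: float, tok_per_joule: float,
                      usd_per_million_tokens: float) -> float:
    """Revenue per day for a power-limited site running flat out on inference."""
    tokens_per_day = tok_per_joule * site_watts * 86_400  # seconds per day
    return tokens_per_day / 1e6 * usd_per_million_tokens


if __name__ == "__main__":
    site = 1_000_000  # hypothetical 1 MW power budget
    price = 1.0       # hypothetical $1 per million tokens
    for name, tpj in (("system A", 2.0), ("system B", 3.0)):
        print(name, f"${daily_revenue_usd(site, tpj, price):,.0f}/day")
    # system A $172,800/day
    # system B $259,200/day
```

A 50% gain in tokens per joule becomes a 50% gain in revenue from the same power envelope, which is the sense in which Huang says those metrics matter "in the final analysis."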
Systems are being designed to handle a wide range of inference workloads over their lifetime rather than excel at a single snapshot in time. "The size of the models is increasing by a factor of ten every year. The tokens being generated are increasing five times a year," Huang explained, later adding, "If you stay static for two years or three years, that's just too long considering how fast the technology is moving."

Huang also addressed the rise of open models, which he sees as a growing contributor to inference demand. "One of the big surprises last year was the success of open models. They really took off to the point where one out of every four tokens generated today are open models," Huang said. Open-weight models reduce friction across the entire deployment stack and make experimentation cheaper. They also shorten iteration cycles and allow organizations outside the hyperscalers to deploy inference at meaningful scale. That not only diversifies who is running AI models but also materially changes how often inference runs and where it runs.

From Nvidia's perspective, what matters is not whether a model is proprietary or open, but how much inference activity it creates. Open models tend to proliferate across a much wider range of environments, from enterprise clusters to regional cloud providers and on-premises deployments. Each deployment may be smaller, but the aggregate effect is an expansion in total token volume. More endpoints generating tokens more often drives sustained demand for compute, networking, and memory, even if average margins per deployment are lower. "That has driven the demand for Nvidia and the public cloud tremendously, which explains why right now Hopper pricing is actually going up in the cloud." Open models often run outside the largest hyperscale environments, but they still require efficient, reliable hardware.
By focusing on system-level efficiency and uptime, Nvidia is positioning itself to serve a broader range of inference scenarios without the need for separate product lines. Ultimately, Huang's comments suggest that Nvidia views open models as complementary to its business. They expand the market rather than cannibalize it, provided the underlying infrastructure can support them efficiently. "All told, the demand that's being generated all over the world is really quite significant." Across discussions of Rubin, power delivery, inference economics, open models, and geopolitics, Huang's comments painted a consistent picture: Nvidia is optimizing for systems that stay productive, tolerate failure, and operate efficiently under real-world constraints. Peak performance still matters -- it always will -- but it no longer appears to be the sole driving factor.

[2]

A deeper look at the tightened chipmaking supply chain, and where it may be headed in 2026 -- "nobody's scaling up," says analyst as industry remains conservative on capacity

We sat down with two high-profile analysts to get some answers about where the chipmaking industry might be headed next. From memory shortages to rising GPU prices, 2025 seemed like a year of significant scarcity across the semiconductor supply chain. But what does the future hold for this super-tight market?

One school of thought holds that in a couple of years, today's hyperscaler accelerators will spill out into the secondary market in a crypto-style deluge, with cheap ex-A100s and B200s (AI-factory cast-offs, in effect) suddenly available to everyone else looking to buy. Data center hardware is often assumed to have a finite and sometimes short lifecycle, with depreciation schedules and refresh cycles that push older hardware into uselessness after a few years. But another group argues that AI compute doesn't behave like a consumer GPU market, and that the 'three years and it's done' assumption is shakier than many people want to admit. As Stacy Rasgon, managing director and senior analyst at Bernstein, said in an interview with Tom's Hardware Premium, the idea that "they disintegrate after three years, and they're no good, is bullshit."

Some believe the present tightness isn't a temporary crunch but a structural condition of the post-AI market: a closed loop in which state-of-the-art hardware circulates among a handful of cloud and AI giants. So what's the reality? Ben Bajarin, an analyst at Creative Strategies, describes the current moment as a "gigacycle" rather than another chip boom. In his modelling, global semiconductor revenues climb from roughly $650 billion in 2024 to more than $1 trillion by the end of the decade. "There's some catch-up necessary, but there's also the fact that the semiconductor industry remains relatively conservative, because they are typically cyclical," Bajarin said in an interview with Tom's Hardware Premium.
"So everybody's very concerned about overcapacity." That conservatism matters because chipmaking capacity takes time, effort, and a lot of money to stand up and bring online. It's for that reason that we're likely to see tightness in the market remaining for a little while yet: demand is spiking, yes, but companies aren't that keen to stand up their supply until they can absolutely guarantee a return. "They don't want to be stuck with foundry capacity or supply capacity that they can't use seven or eight years from now," Bajarin said. According to Bajarin's analysis, AI chips represented less than 0.2% of wafer starts in 2024, yet already generated roughly 20% of semiconductor revenue - a huge concentration on a single space, which helps explain why the shortages feel different from the pandemic-era GPU crunch. In 2020 and 2021, consumer demand surged, and supply chains seized up, but the underlying products were still relatively mass market in manufacturing terms. But today's AI accelerators require leading-edge logic, exotic memory stacks, and advanced packaging. It's possible to make more of them, but not quickly, and not without knock-on effects. "If you look at the forecasts for wafer capacity or substrate capacity, nobody's scaling up," cautions Bajarin. Rasgon told us that while not everything is tight, the "really tight" parts of the system are concentrated in memory. Rasgon pointed to Micron, one of the three global DRAM giants, which has said memory tightness could persist beyond 2026, driven in large part by AI demand and High Bandwidth Memory (HBM). It's notable that Micron recently closed down its consumer-facing business, Crucial, to focus on the more lucrative products it can sell - and markets it can sell into. HBM is a different manufacturing and packaging challenge that can hoover up production capacity. 
HBM production consumes far more wafer resources than standard DRAM, according to Rasgon: producing a gigabyte of HBM can take "three or four times as many wafers" as producing a gigabyte of DDR5, which means shifting capacity into HBM effectively reduces the total number of DRAM bits the industry can supply. Memory makers prioritising HBM for accelerators doesn't just affect hyperscalers. It has a knock-on effect on PCs, servers, and other devices, because standard DRAM becomes tighter and pricier than it would otherwise be, which is why companies have been pushing up prices for consumer hardware in recent weeks and months. Hyperscalers can often swallow higher component costs because they monetise the compute directly, whether through internal workloads or rented-out inference. Everyone else tends to feel the squeeze more immediately: OEMs and system builders face higher bill-of-materials costs, and end customers face higher retail prices.

Bajarin believes HBM will be one of the defining constraints for the remainder of the decade, projecting the HBM market to grow fourfold to more than $100 billion by 2030, while noting that HBM3E can require about three times the wafer supply per gigabyte of DDR5. He's not alone in thinking that: Micron has talked about being unable to meet all demand from key customers, suggesting it can supply only around half to two-thirds of expected demand, even while raising capex and considering new projects.

There are a number of reasons for the current tightness, but even if the market had infinite wafers and infinite memory, it could still run into a chokepoint: advanced packaging. The industry has been ramping up its CoWoS (chip-on-wafer-on-substrate) capacity aggressively, but it has also been unusually open about how hard it is to get ahead of demand.
In early 2025, Nvidia CEO Jensen Huang said overall advanced packaging capacity had quadrupled in under two years but was still a bottleneck for the firm. Nvidia isn't the only company reckoning with the challenge. TrendForce, which tracks the space closely, has projected TSMC's CoWoS capacity rising to around 75,000 wafers per month in 2025 and reaching roughly 120,000 to 130,000 wafers per month by the end of 2026. That's a big leap, but it's still unlikely to loosen current capacity constraints. Bajarin's analysis highlights why: capex by the top four cloud providers (Amazon, Google, Microsoft, and Meta) doubled to roughly $600 billion annually in just two years.

Rasgon noted that some companies can wind up supply-constrained for reasons that have nothing to do with leading-edge demand being "off the charts." In Intel's case, he argued, it's partly about where demand is versus where capacity has been cut. "They were actually scrapping tools in that older generation and selling them off for pennies on the dollar," he said.

Although it may seem obvious that demand will keep growing, given how heavily the big tech companies are spending, future demand is hard to forecast accurately because of the way the chip market works. Rasgon said semiconductor companies sit "at the back of the supply chain," which limits their ability to see end demand clearly and encourages behaviour that makes the signal noisier. The problem feeds on itself when supply is particularly tight and lead times stretch: customers start hoarding the chips they have and double-ordering new ones, trying to secure parts from anywhere they can. That can make demand look artificially huge until lead times ease and cancellations begin, and suppliers want to avoid sizing their production for demand that doesn't materialize. "Forecasting in semiconductors in general is an unsolved problem," Rasgon said.
"My general belief is that most, or frankly all, semiconductor management's actual visibility of what is going on with demand is precisely zero." Bajarin points out that the industry works "very methodically" because it remembers boom-bust cycles, especially in memory. "We're just going to have to live in a foreseeable cycle of supply tightness because of these dynamics that are historically true of the semiconductor industry," he said. When the market unwinds itself, and supply normalizes is, as is befitting a market that struggles with forecasting, impossible to tell. "As long as we're in this cycle where we're really building out a fundamental new infrastructure around AI, it's going to remain supply-constrained for the foreseeable future, if not through this entire cycle, just because of prior boom-bust cycles within the semiconductor industry," said Bajarin. But even if the industry could magically print accelerators, it still needs somewhere to run them. Data centers take time to build, to connect to power, and to cool at scale. "Even if we make all these GPUs, we can't really house them because we don't have the gigawatts," said Bajarin. However, with the planning of small modular reactors and the expansion of electricity grids, there's hope that the need can be met - eventually. Another potential unblocker of the tight market is the opportunity to reuse older generation GPUs as they enter the traded market, thanks to newer generations of chips constantly being cycled through big tech companies who want the cutting edge for inference and training. Already, you can find small volumes of older data center GPUs on the market, including listings for Nvidia A100-class hardware through resellers and brokers. But the odd older generation of chips existing is a world away from a crypto-style glut of former AI accelerators appearing on the market. 
While AI firms want all the cutting-edge chips they can get their hands on, they're not necessarily disposing of their older stock, either. A top-end accelerator isn't a consumer graphics card that becomes obsolete in a couple of years. It's capital equipment, and AI companies are learning to sweat it. OpenAI CFO Sarah Friar underlined this in November, admitting that OpenAI still uses Nvidia's Ampere chips, released in 2020, for inference on its consumer-facing models. Training might use bleeding-edge tech, but inference can run profitably on older generations for a long time. If OpenAI is thinking that way, other companies in the space likely are too. "Absolutely, the older stuff is still being used," says Rasgon. "And in fact, not only is it being used, it's being used very, very profitably."

For now, the clearest takeaway is that the current tightness isn't only about making more chips. It's about whether the industry can build enough of everything around them, including the buildings, cooling, and grid connections to run them, fast enough to match demand that's still accelerating. "We're going to remain in a relative supply constraint across all of these vectors until either we've built the entire thing out and we have enough compute," Bajarin said, "or it's a bubble, and it crashes."
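Rasgon's warning about hoarding and double-ordering can be made concrete with a toy model. The sketch below is hypothetical throughout (the function names, the 40% double-ordering share, and two copies per order are illustrative, not figures from either analyst): it shows how duplicate orders, summed across all suppliers, inflate the demand signal and later turn into cancellations.

```python
def apparent_orders(true_demand: float, double_order_share: float,
                    copies_per_order: int) -> float:
    """Orders seen across all suppliers when some buyers duplicate orders.

    Toy model: buyers making up `double_order_share` of demand place the same
    order `copies_per_order` times; everyone else orders what they need.
    """
    single = true_demand * (1.0 - double_order_share)
    duplicated = true_demand * double_order_share * copies_per_order
    return single + duplicated


def phantom_orders(true_demand: float, double_order_share: float,
                   copies_per_order: int) -> float:
    """Orders cancelled once lead times ease and buyers drop duplicates."""
    seen = apparent_orders(true_demand, double_order_share, copies_per_order)
    return seen - true_demand


if __name__ == "__main__":
    # Hypothetical: 1,000 units of real demand, 40% of it double-ordered.
    print(round(apparent_orders(1000, 0.4, 2)))  # 1400
    print(round(phantom_orders(1000, 0.4, 2)))   # 400
```

Real demand never changed, yet the order book briefly showed 40% more of it. A supplier that built capacity to match the inflated figure would be left holding exactly the overcapacity Bajarin says the industry fears.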

[3]

The end of the general-purpose GPU: Why Nvidia's $20B Groq bet is a survival play for the disaggregated era

Nvidia's $20 billion strategic licensing deal with Groq represents one of the first clear moves in a four-front fight over the future AI stack. 2026 is when that fight becomes obvious to enterprise builders. For the technical decision-makers we talk to every day, the people building AI applications and the data pipelines that drive them, this deal is a signal that the era of the one-size-fits-all GPU as the default answer for AI inference is ending. We are entering the age of the disaggregated inference architecture, where the silicon itself is being split into two types to serve a world that demands both massive context and instantaneous reasoning.

Why inference is breaking the GPU architecture in two

To understand why Nvidia CEO Jensen Huang spent one-third of Nvidia's reported $60 billion cash pile on a licensing deal, you have to look at the existential threats converging on his company's reported 92% market share. The industry reached a tipping point in late 2025: for the first time, inference (the phase where trained models actually run) surpassed training in total data center revenue, according to Deloitte. In this new "Inference Flip," the metrics have changed. While accuracy remains the baseline, the battle is now being fought over latency and the ability to maintain "state" in autonomous agents. There are four fronts in that battle, and each points to the same conclusion: inference workloads are fragmenting faster than GPUs can generalize.

1. Breaking the GPU in two: Prefill vs. decode

Gavin Baker, an investor in Groq (and therefore biased, but also unusually fluent on the architecture), summarized the core driver of the Groq deal cleanly: "Inference is disaggregating into prefill and decode." Prefill and decode are two distinct phases:

* The prefill phase: Think of this as the user's "prompt" stage. The model must ingest massive amounts of data, whether it's a 100,000-line codebase or an hour of video, and compute a contextual understanding. This is "compute-bound," requiring massive matrix multiplication that Nvidia's GPUs have historically excelled at.
* The generation (decode) phase: This is the actual token-by-token "generation." Once the prompt is ingested, the model generates one word (or token) at a time, feeding each one back into the system to predict the next. This is "memory-bandwidth bound." If the data can't move from memory to the processor fast enough, the model stutters, no matter how powerful the GPU is. (This is where Nvidia was weak, and where Groq's language processing unit (LPU) and its on-chip SRAM shine. More on that in a bit.)

Nvidia has announced an upcoming Vera Rubin family of chips that it's architecting specifically to handle this split. The Rubin CPX component of this family is the designated "prefill" workhorse, optimized for massive context windows of 1 million tokens or more. To handle this scale affordably, it moves away from the eye-watering expense of high bandwidth memory (HBM), Nvidia's current gold-standard memory that sits right next to the GPU die, and instead uses 128GB of GDDR7, a newer and cheaper class of graphics memory. While HBM provides extreme speed (though not as quick as Groq's static random-access memory, or SRAM), its supply on GPUs is limited and its cost is a barrier to scale; GDDR7 provides a more cost-effective way to ingest massive datasets.

Meanwhile, the "Groq-flavored" silicon, which Nvidia is integrating into its inference roadmap, will serve as the high-speed "decode" engine. This is about neutralizing a threat from alternative architectures like Google's TPUs and maintaining the dominance of CUDA, Nvidia's software ecosystem that has served as its primary moat for over a decade.
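The compute-bound versus memory-bound split can be estimated with a roofline-style calculation. The Python sketch below uses two common approximations, about 2 FLOPs per parameter per token and one full read of the weights per forward pass, and ignores KV-cache and activation traffic. The accelerator figures (1 PFLOP/s, 4 TB/s), the 8B model, and the function names are hypothetical round numbers, not the specs of any Nvidia or Groq part.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Accelerator:
    peak_flops: float     # FLOP/s the chip can sustain (hypothetical)
    mem_bandwidth: float  # bytes/s from the memory holding the weights (hypothetical)


def forward_pass_time(acc: Accelerator, params: float, bytes_per_param: float,
                      tokens: int) -> Tuple[float, str]:
    """Roofline-style lower bound for one forward pass over `tokens` tokens.

    Assumes ~2 FLOPs per parameter per token and that every weight is read
    from memory once per pass; KV-cache and activation traffic are ignored.
    """
    compute_s = 2.0 * params * tokens / acc.peak_flops
    memory_s = params * bytes_per_param / acc.mem_bandwidth
    bound = "compute-bound" if compute_s >= memory_s else "memory-bandwidth-bound"
    return max(compute_s, memory_s), bound


if __name__ == "__main__":
    chip = Accelerator(peak_flops=1e15, mem_bandwidth=4e12)  # hypothetical chip
    params, bpp = 8e9, 2  # an 8B-parameter model with 16-bit weights
    print(forward_pass_time(chip, params, bpp, tokens=10_000))  # prefill a long prompt
    print(forward_pass_time(chip, params, bpp, tokens=1))       # decode one token
```

For the 10,000-token prefill, arithmetic dominates (about 0.16 s versus 0.004 s of weight reads). For a single decode step the arithmetic takes microseconds, but reading the weights still takes about 4 ms, so memory bandwidth sets the pace. GPU serving stacks soften this by batching many users' decode steps so each weight read is shared; keeping weights in on-chip SRAM, as Groq does, attacks the bandwidth term directly.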
All of this was enough for Baker, the Groq investor, to predict that Nvidia's move to license Groq will cause all other specialized AI chips to be canceled, with the exceptions of Google's TPU, Tesla's AI5, and AWS's Trainium.

2. The differentiated power of SRAM

At the heart of Groq's technology is SRAM. Unlike the DRAM found in your PC or the HBM on an Nvidia H100 GPU, SRAM is etched directly into the logic of the processor. Michael Stewart, managing partner of Microsoft's venture fund, M12, describes SRAM as the best option for moving data over short distances with minimal energy. "The energy to move a bit in SRAM is like 0.1 picojoules or less," Stewart said. "To move it between DRAM and the processor is more like 20 to 100 times worse."

In the world of 2026, where agents must reason in real time, SRAM acts as the ultimate "scratchpad": a high-speed workspace where the model can manipulate symbolic operations and complex reasoning processes without the "wasted cycles" of external memory shuttling.

However, SRAM has a major drawback: it is physically bulky and expensive to manufacture, so its capacity is limited compared to DRAM. This is where Val Bercovici, chief AI officer at Weka, another company offering memory for GPUs, sees the market segmenting. Groq-friendly AI workloads, where SRAM has the advantage, are those that use small models of 8 billion parameters and below, Bercovici said. This isn't a small market, though. "It's just a giant market segment that was not served by Nvidia, which was edge inference, low latency, robotics, voice, IoT devices, things we want running on our phones without the cloud for convenience, performance, or privacy," he said.

This 8B "sweet spot" is significant because 2025 saw an explosion in model distillation, with many enterprises shrinking massive models into highly efficient smaller versions.
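Stewart's energy figures translate into rough per-token costs. At batch size 1, each decode step touches every weight about once, so the sketch below multiplies the bits in an 8B-parameter, 16-bit model by his ~0.1 pJ/bit for SRAM and by 20 to 100 times that for DRAM. It also shows the capacity side of the trade-off using a hypothetical 250 MB of SRAM per chip, a round number chosen for illustration rather than a Groq specification. Function names are illustrative.

```python
import math

PJ = 1e-12  # joules per picojoule


def weight_read_energy_j(params: float, bits_per_weight: int,
                         pj_per_bit: float) -> float:
    """Energy to move every weight once, roughly one batch-1 decode step."""
    return params * bits_per_weight * pj_per_bit * PJ


def chips_to_hold(params: float, bytes_per_weight: float,
                  sram_bytes_per_chip: float) -> int:
    """Chips needed to keep all weights resident in on-chip SRAM."""
    return math.ceil(params * bytes_per_weight / sram_bytes_per_chip)


if __name__ == "__main__":
    n = 8e9  # an 8B-parameter model with 16-bit weights
    sram = weight_read_energy_j(n, 16, 0.1)            # Stewart's ~0.1 pJ/bit
    dram_lo = weight_read_energy_j(n, 16, 0.1 * 20)    # 20x worse
    dram_hi = weight_read_energy_j(n, 16, 0.1 * 100)   # 100x worse
    print(f"SRAM ~{sram * 1e3:.1f} mJ, DRAM ~{dram_lo:.2f}-{dram_hi:.2f} J per token")
    # SRAM ~12.8 mJ, DRAM ~0.26-1.28 J per token
    print(chips_to_hold(n, 2, 250e6), "chips")  # 64 chips at a hypothetical 250 MB each
```

Per-bit energy favors SRAM by one to two orders of magnitude, but even an 8B model needs dozens of chips' worth of SRAM to stay resident, which is why Bercovici draws the line around small models.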
While SRAM isn't practical for trillion-parameter "frontier" models, it is perfect for these smaller, high-velocity models.

3. The Anthropic threat: The rise of the 'portable stack'

Perhaps the most under-appreciated driver of this deal is Anthropic's success in making its stack portable across accelerators. The company has pioneered a portable engineering approach for training and inference, essentially a software layer that allows its Claude models to run across multiple AI accelerator families, including Nvidia's GPUs and Google's Ironwood TPUs. Until recently, Nvidia's dominance was protected because running high-performance models outside the Nvidia stack was a technical nightmare.

"It's Anthropic," Weka's Bercovici told me. "The fact that Anthropic was able to ... build up a software stack that could work on TPUs as well as on GPUs, I don't think that's being appreciated enough in the marketplace." (Disclosure: Weka has been a sponsor of VentureBeat events.)

Anthropic recently committed to accessing up to 1 million TPUs from Google, representing over a gigawatt of compute capacity. This multi-platform approach ensures the company isn't held hostage by Nvidia's pricing or supply constraints.

So for Nvidia, the Groq deal is equally a defensive move. By integrating Groq's ultra-fast inference IP, Nvidia is making sure that the most performance-sensitive workloads, like those running small models or as part of real-time agents, can be accommodated within Nvidia's CUDA ecosystem, even as some customers look to jump ship to Google's Ironwood TPUs. (CUDA is Nvidia's parallel computing platform and programming model, the software layer developers use to program its GPUs.)

4. The agentic 'statehood' war: Manus and the KV Cache

The timing of this Groq deal coincides with Meta's acquisition of the agent pioneer Manus just two days ago. The significance of Manus was partly its obsession with statefulness.
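Statefulness, in practice, comes down to whether an agent's KV cache (the attention state a model builds during prefill) is still resident when the agent takes its next step. The toy Python model below is hypothetical and is not the interface of Nvidia's Dynamo, KVBM, or any Manus system: it keeps per-session KV state in a token-budgeted LRU cache, so a hit means only newly appended tokens need prefill, while an eviction forces the entire context to be recomputed. It assumes each session's context only grows.

```python
from collections import OrderedDict


class ToyKVCache:
    """LRU cache of per-session KV state, sized in tokens (hypothetical model).

    On a hit, only tokens appended since the last step need prefill; on a
    miss (new or evicted session), the whole context is recomputed.
    """

    def __init__(self, capacity_tokens: int) -> None:
        self.capacity = capacity_tokens
        self.entries: "OrderedDict[str, int]" = OrderedDict()  # session -> cached tokens
        self.hits = 0
        self.lookups = 0
        self.prefill_tokens = 0  # total tokens that had to be (re)computed

    def step(self, session: str, context_tokens: int) -> None:
        """Process one agent step whose full context is `context_tokens` long."""
        self.lookups += 1
        cached = self.entries.pop(session, 0)
        if cached:
            self.hits += 1
        self.prefill_tokens += context_tokens - cached
        self.entries[session] = context_tokens  # re-insert as most recently used
        # Evict least-recently-used sessions until the cache fits again.
        while sum(self.entries.values()) > self.capacity and len(self.entries) > 1:
            self.entries.popitem(last=False)

    @property
    def hit_rate(self) -> float:
        return self.hits / self.lookups if self.lookups else 0.0


if __name__ == "__main__":
    cache = ToyKVCache(capacity_tokens=10_000)
    # Two agents whose contexts grow 1,000 tokens per step (hypothetical trace).
    for session, ctx in [("a", 4000), ("b", 4000), ("a", 5000),
                         ("b", 5000), ("a", 6000), ("b", 6000)]:
        cache.step(session, ctx)
    print(f"hit rate {cache.hit_rate:.0%}, tokens prefilled {cache.prefill_tokens}")
    # hit rate 50%, tokens prefilled 17000
```

In this trace, session b's final step prefills 6,000 tokens to add 1,000 new ones because its entry was evicted. With agent contexts dominated by input tokens, each miss multiplies the recompute work, which is why a tiered design would demote cold state to DRAM or flash rather than discard it.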
If an agent can't remember what it did 10 steps ago, it is useless for real-world tasks like market research or software development. The KV Cache (Key-Value Cache) is the "short-term memory" that an LLM builds during the prefill phase.

Manus reported that for production-grade agents, the ratio of input tokens to output tokens can reach 100:1. This means that for every word an agent says, it is "thinking" about and "remembering" 100 others. In this environment, the KV Cache hit rate is the single most important metric for a production agent, Manus said. If that cache is "evicted" from memory, the agent loses its train of thought, and the model must burn massive energy to recompute the prompt.

Groq's SRAM can be a "scratchpad" for these agents (although, again, mostly for smaller models) because it allows near-instant retrieval of that state. Combined with Nvidia's Dynamo inference framework and its KV Block Manager (KVBM), Nvidia is building an "inference operating system" that can tier this state across SRAM, DRAM, and flash-based offerings like those from Bercovici's Weka.

Thomas Jorgensen, senior director of Technology Enablement at Supermicro, which specializes in building GPU clusters for large enterprises, told me in September that compute is no longer the primary bottleneck for advanced clusters. Feeding data to GPUs is the bottleneck, and breaking it requires memory. "The whole cluster is now the computer," Jorgensen said. "Networking becomes an internal part of the beast ... feeding the beast with data is becoming harder because the bandwidth between GPUs is growing faster than anything else."

This is why Nvidia is pushing into disaggregated inference. By separating the workloads, enterprise applications can use specialized storage tiers to feed data at memory-class performance, while the specialized "Groq-inside" silicon handles high-speed token generation.

The verdict for 2026

We are entering an era of extreme specialization.
For decades, incumbents could win by shipping one dominant general-purpose architecture -- and their blind spot was often what they ignored on the edges. Intel's long neglect of low-power is the classic example, Michael Stewart, managing partner of Microsoft's venture fund M12, told me. Nvidia is signaling it won't repeat that mistake. "If even the leader, even the lion of the jungle will acquire talent, will acquire technology -- it's a sign that the whole market is just wanting more options," Stewart said. For technical leaders, the message is to stop architecting your stack like it's one rack, one accelerator, one answer. In 2026, advantage will go to the teams that label workloads explicitly -- and route them to the right tier: * prefill-heavy vs. decode-heavy * long-context vs. short-context * interactive vs. batch * small-model vs. large-model * edge constraints vs. data-center assumptions Your architecture will follow those labels. In 2026, "GPU strategy" stops being a purchasing decision and becomes a routing decision. The winners won't ask which chip they bought -- they'll ask where every token ran, and why.
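The labeling advice in the verdict can be sketched as a small router. This is a minimal illustration, not anything Nvidia or the sources describe: the tier names, the 10:1 prompt-to-output threshold for "prefill-heavy," the 32,000-token long-context cutoff, and the 20-billion-parameter small-model cutoff are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    # Hypothetical serving tiers; real fleets will define their own.
    EDGE = "on-device or edge runtime"
    BATCH = "throughput-oriented batch queue"
    PREFILL = "compute-bound prefill pool"
    FAST_DECODE = "SRAM-heavy low-latency decode pool"
    HBM_GPU = "general-purpose HBM GPU pool"


@dataclass(frozen=True)
class Workload:
    prompt_tokens: int
    output_tokens: int
    interactive: bool
    model_params_b: float  # model size in billions of parameters
    on_edge: bool = False


# Illustrative thresholds (assumptions, not published guidance).
PREFILL_RATIO = 10
LONG_CONTEXT_TOKENS = 32_000
SMALL_MODEL_B = 20.0


def route(w: Workload) -> Tier:
    """Map a labeled workload to a tier, checking the labels in priority order."""
    if w.on_edge:                      # edge constraints vs. data-center assumptions
        return Tier.EDGE
    if not w.interactive:              # interactive vs. batch
        return Tier.BATCH
    prefill_heavy = w.prompt_tokens >= PREFILL_RATIO * max(w.output_tokens, 1)
    long_context = w.prompt_tokens >= LONG_CONTEXT_TOKENS
    if prefill_heavy or long_context:  # prefill-heavy or long-context
        return Tier.PREFILL
    if w.model_params_b <= SMALL_MODEL_B:  # small-model, decode-heavy
        return Tier.FAST_DECODE
    return Tier.HBM_GPU                # large-model, decode-heavy


agent_step = Workload(prompt_tokens=50_000, output_tokens=500,
                      interactive=True, model_params_b=70)
chat_reply = Workload(prompt_tokens=300, output_tokens=400,
                      interactive=True, model_params_b=8)
print(route(agent_step).name, route(chat_reply).name)
```

With these made-up thresholds, a 100:1 agent step lands on the prefill pool and a short chat reply from an 8B model lands on the fast-decode pool. In a genuinely disaggregated system a single request would usually visit both a prefill tier and a decode tier; the sketch collapses that to one label per request for brevity.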


Nvidia CEO Jensen Huang outlined a major strategic shift at CES 2026, emphasizing serviceability and inference economics while announcing a $20 billion Groq licensing deal. The move signals the end of one-size-fits-all GPUs as AI inference workloads split into prefill and decode phases, with the Vera Rubin platform designed for modular maintenance and continuous operation in constrained power environments.

Nvidia Shifts Focus to AI Inference Economics and System Uptime

Nvidia CEO Jensen Huang used CES 2026 to signal a fundamental shift in how the company approaches AI deployment, moving beyond raw performance metrics to emphasize serviceability, power delivery, and the economics of keeping systems productive. During a press Q&A session in Las Vegas, Huang spent considerable time discussing downtime and maintenance rather than traditional benchmarks, reflecting the realities facing hyperscalers operating million-dollar racks at scale [1].

The conversation comes as AI inference has surpassed training in total data center revenue for the first time, according to Deloitte, marking what industry observers call the "Inference Flip" [3]. This transition is forcing Nvidia to rethink its approach to hardware architecture and market positioning.

Vera Rubin Platform Targets Modular Serviceability

The Vera Rubin platform represents Nvidia's answer to a costly operational problem: when components fail in current Grace Blackwell systems with 72 GPUs and nine switch trays, entire racks worth approximately $3 million go offline during repairs. "When we replace something today, we literally take the entire rack down. It goes to zero," Huang explained during the Q&A [1].


Vera Rubin's tray-based architecture breaks racks into modular, serviceable units that can be replaced without shutting down the entire system. Assembly time drops from two hours per node to five minutes, and the platform eliminates 43 cables while achieving 100% liquid cooling. "You literally pull out the NVLink, and you keep on going," Huang said, emphasizing that software updates can occur while systems remain operational

1

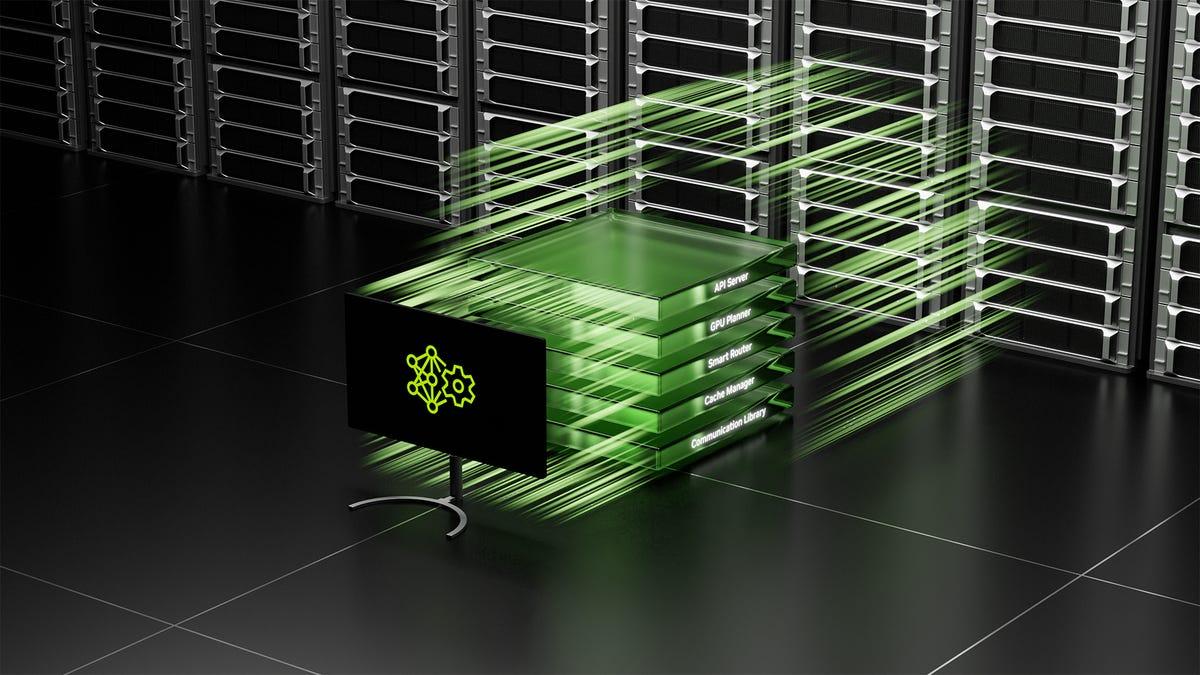
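A rough worked example shows why service granularity matters. The sketch below compares GPU-hours of capacity lost per repair when the whole rack goes to zero versus when a single tray is pulled. The four-GPUs-per-tray figure is an assumption, and so is the reuse of the article's two-hour and five-minute figures as stand-in repair durations: the sources quote those times for assembly, not repair.

```python
def gpu_hours_lost(gpus_offline: int, hours_offline: float) -> float:
    """GPU-hours of capacity lost while hardware is out of service."""
    return gpus_offline * hours_offline


RACK_GPUS = 72            # GPUs per rack, per the Grace Blackwell example
GPUS_PER_TRAY = 4         # assumption: GPUs idled by one tray swap
WHOLE_RACK_HOURS = 2.0    # assumption: borrow the two-hour figure
TRAY_SWAP_HOURS = 5 / 60  # assumption: borrow the five-minute figure

whole_rack = gpu_hours_lost(RACK_GPUS, WHOLE_RACK_HOURS)
tray_level = gpu_hours_lost(GPUS_PER_TRAY, TRAY_SWAP_HOURS)

print(f"whole-rack repair: {whole_rack:.1f} GPU-hours lost")
print(f"tray-level repair: {tray_level:.2f} GPU-hours lost")
print(f"ratio: {whole_rack / tray_level:.0f}x")
```

Under these assumptions a whole-rack repair costs 144 GPU-hours against about a third of a GPU-hour for a tray swap. The exact ratio depends entirely on the inputs, but the gap of two to three orders of magnitude is the point of tray-level serviceability.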

The $20 Billion Groq Deal and Disaggregated Architecture

Nvidia's $20 billion strategic licensing deal with Groq marks a recognition that the general-purpose GPU era for AI inference is ending. The AI inference landscape is fragmenting into two distinct phases, prefill and decode, each requiring different hardware optimizations [3].


The prefill phase ingests massive context windows (potentially 100,000 lines of code or hours of video) and is compute-bound, playing to Nvidia's traditional GPU strengths. The decode phase generates tokens one at a time and is memory-bandwidth-bound, which is where Groq's SRAM-based Language Processing Unit (LPU) excels. According to Michael Stewart of Microsoft's M12 fund, moving data in SRAM requires just 0.1 picojoules, while moving it between DRAM and the processor takes 20 to 100 times more energy [3].
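The energy gap compounds during decode, when the weights are read for every generated token. The back-of-the-envelope sketch below assumes the 0.1 pJ figure is per bit moved (the quote does not state a unit) and assumes a hypothetical 10 GB of data streamed per token; both are illustrative inputs, not measurements.

```python
SRAM_PJ_PER_BIT = 0.1         # assumption: the quoted 0.1 pJ is per bit
DRAM_MULTIPLIERS = (20, 100)  # "20 to 100 times more energy"
BYTES_PER_TOKEN = 10e9        # hypothetical: 10 GB moved per generated token


def joules_to_move(num_bytes: float, pj_per_bit: float) -> float:
    """Energy in joules to move num_bytes at a given picojoule-per-bit cost."""
    return num_bytes * 8 * pj_per_bit * 1e-12


sram_j = joules_to_move(BYTES_PER_TOKEN, SRAM_PJ_PER_BIT)
dram_low_j = joules_to_move(BYTES_PER_TOKEN, SRAM_PJ_PER_BIT * DRAM_MULTIPLIERS[0])
dram_high_j = joules_to_move(BYTES_PER_TOKEN, SRAM_PJ_PER_BIT * DRAM_MULTIPLIERS[1])

print(f"SRAM: {sram_j * 1e3:.0f} mJ per token")
print(f"DRAM: {dram_low_j * 1e3:.0f} to {dram_high_j * 1e3:.0f} mJ per token")
```

With those inputs SRAM comes to about 8 mJ per token and DRAM to about 160 to 800 mJ, the quoted 20x to 100x spread carried through. Real systems batch requests and reuse data, so absolute figures will differ.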

The Rubin CPX component will handle prefill workloads using 128GB of GDDR7 memory instead of expensive High Bandwidth Memory (HBM), while Groq-licensed silicon will serve as the high-speed decode engine. This disaggregated approach allows Nvidia to maintain its CUDA software ecosystem dominance while addressing specialized inference workloads [3].
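Disaggregation and the KV-cache concern raised by Manus fit together: the prefill tier builds the cache, the decode tier consumes it, and a cache hit skips the expensive recompute. The toy sketch below illustrates that flow. `PrefixCache`, `prefill`, `decode`, and `serve` are hypothetical names, the "KV cache" is a placeholder object, and keying on the exact prompt is a simplification; production systems typically match on shared prompt prefixes and move state between memory tiers.

```python
from __future__ import annotations

from collections import OrderedDict
from dataclasses import dataclass


@dataclass(frozen=True)
class KVCache:
    """Placeholder for the key/value state a model builds during prefill."""
    prompt: tuple[str, ...]


class PrefixCache:
    """LRU store of KV caches keyed by exact prompt, with hit-rate tracking."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict[tuple[str, ...], KVCache] = OrderedDict()
        self.hits = 0
        self.lookups = 0

    def get(self, prompt: tuple[str, ...]) -> KVCache | None:
        self.lookups += 1
        kv = self.entries.get(prompt)
        if kv is not None:
            self.entries.move_to_end(prompt)  # mark as most recently used
            self.hits += 1
        return kv

    def put(self, prompt: tuple[str, ...], kv: KVCache) -> None:
        self.entries[prompt] = kv
        self.entries.move_to_end(prompt)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used

    @property
    def hit_rate(self) -> float:
        return self.hits / self.lookups if self.lookups else 0.0


def prefill(prompt: tuple[str, ...]) -> KVCache:
    """Compute-bound phase: process the whole prompt in one pass (simulated)."""
    return KVCache(prompt)


def decode(kv: KVCache, max_tokens: int) -> list[str]:
    """Memory-bound phase: emit tokens one at a time from cached state (simulated)."""
    return [f"tok{i}" for i in range(max_tokens)]


def serve(prompt: tuple[str, ...], cache: PrefixCache, max_tokens: int = 3) -> list[str]:
    kv = cache.get(prompt)
    if kv is None:                 # miss: recompute on the prefill tier
        kv = prefill(prompt)
        cache.put(prompt, kv)
    return decode(kv, max_tokens)  # hand cached state to the decode tier


cache = PrefixCache(capacity=2)
for p in [("a",), ("b",), ("a",), ("c",), ("b",)]:
    serve(p, cache)
print(f"hit rate: {cache.hit_rate:.2f}")
```

With room for only two cached prompts, ("b",) has been evicted by the time it returns, so the run ends at a 20% hit rate. Every miss stands for a full prefill recompute, the cost Manus warns about when state is evicted.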

Chipmaking Supply Chain Faces Structural Tightness

The semiconductor market is experiencing what analyst Ben Bajarin calls a "gigacycle," with global revenues projected to climb from roughly $650 billion in 2024 to over $1 trillion by decade's end. Yet capacity constraints remain acute, particularly in memory. "If you look at the forecasts for wafer capacity or substrate capacity, nobody's scaling up," Bajarin cautioned [2].
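The gigacycle forecast implies a specific growth rate. As a quick check, compound annual growth is (end / start)^(1 / years) - 1; the sketch below assumes "decade's end" means 2030, which is an interpretation rather than a stated date.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end / start) ** (1 / years) - 1


# $650B in 2024 to $1T at "decade's end" (assumed here to be 2030).
rate = cagr(650e9, 1_000e9, 2030 - 2024)
print(f"implied growth: {rate:.1%} per year")
```

That works out to roughly 7.4% a year; reading "decade's end" as 2029 instead gives about 9%.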

AI accelerators represented less than 0.2% of wafer starts in 2024 yet generated roughly 20% of semiconductor revenue, creating unprecedented concentration. The chipmaking supply chain faces particular pressure from HBM production, which consumes three to four times as many wafers per gigabyte as standard DDR5, according to analyst Stacy Rasgon. Because every wafer diverted to HBM yields fewer gigabytes, this shift toward HBM for AI accelerators reduces total DRAM supply, pushing up prices for consumer hardware and standard data center equipment [2].
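The wafer arithmetic behind that supply squeeze is simple to sketch. The example below assumes a fixed pool of DRAM wafers, normalizes DDR5 output to one gigabyte per wafer, and diverts a hypothetical 30% of wafers to HBM at Rasgon's three-to-four-times wafer penalty; the 30% share is an illustrative assumption, not a reported figure.

```python
def dram_gb_supply(wafers: float, hbm_share: float, hbm_wafer_penalty: float) -> float:
    """Total gigabytes from a wafer pool when a share of it is diverted to HBM.

    DDR5 output is normalized to 1 GB per wafer; HBM needs
    `hbm_wafer_penalty` times as many wafers per gigabyte.
    """
    ddr5_gb = wafers * (1 - hbm_share)
    hbm_gb = wafers * hbm_share / hbm_wafer_penalty
    return ddr5_gb + hbm_gb


WAFERS = 100       # arbitrary pool size; only the ratios matter
HBM_SHARE = 0.30   # hypothetical share of wafers moved to HBM

baseline = dram_gb_supply(WAFERS, 0.0, 3)
for penalty in (3, 4):
    shifted = dram_gb_supply(WAFERS, HBM_SHARE, penalty)
    print(f"{penalty}x wafer penalty: {1 - shifted / baseline:.1%} fewer GB")
```

Moving 30% of wafers to HBM cuts total gigabytes by about 20% at a 3x penalty and 22.5% at 4x, even though no wafer capacity was lost.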

Memory giant Micron recently closed its consumer-facing Crucial business to focus on more lucrative AI-driven products, signaling how market demand is reshaping priorities. Memory tightness could persist beyond 2026, with knock-on effects for OEMs and system builders facing higher bill-of-materials costs [2].

Power Delivery and Real-World Operational Challenges

Huang's CES 2026 discussions repeatedly circled back to instantaneous power demand rather than average consumption. Modern AI systems spike unpredictably during inference workloads, forcing operators to provision power delivery and cooling for worst-case peaks that occur only briefly. This creates stranded capacity across data center infrastructure: power and cooling that is paid for but sits idle most of the time [1].
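The stranded-capacity argument can be made concrete with a short power trace. In the sketch below the trace values are invented, "stranded" is defined as the share of peak-provisioned capacity left unused on average, and a trailing moving average stands in for power smoothing. That is a crude proxy: it ignores the energy storage or control logic a real smoothing scheme would need.

```python
def rolling_mean(trace: list[float], window: int) -> list[float]:
    """Trailing moving average; a crude stand-in for power smoothing."""
    out = []
    for i in range(len(trace)):
        chunk = trace[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def stranded_fraction(trace: list[float]) -> float:
    """Share of peak-provisioned capacity that goes unused on average."""
    peak = max(trace)
    return (peak - sum(trace) / len(trace)) / peak


# Hypothetical rack power trace in kW with brief inference spikes.
trace = [100, 100, 180, 100, 100, 100, 170, 100]
smoothed = rolling_mean(trace, window=4)

print(f"raw: peak {max(trace)} kW, stranded {stranded_fraction(trace):.0%}")
print(f"smoothed: peak {max(smoothed):.0f} kW, "
      f"stranded {stranded_fraction(smoothed):.0%}")
```

On this trace, provisioning for the raw 180 kW peak strands about a third of capacity, while the smoothed trace peaks near 127 kW, so far less headroom is needed for a similar workload.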

The emphasis on continuous inference workloads and constrained power environments reflects Nvidia's understanding that AI deployment has moved beyond initial buildout phases. Systems must remain productive as models and deployment patterns change, with uptime treated as a core performance metric alongside throughput. Huang's 50-year vision for AI infrastructure, previously outlined at Computex, now manifests in architectural choices around serviceability, power smoothing, and unified software stacks [1].

Investor Gavin Baker predicted that Nvidia's Groq integration will lead to the cancellation of competing specialized AI chips, with the exceptions of Google's TPU, Tesla's AI5, and AWS's Trainium. The move represents both an offensive and a defensive strategy: optimizing for fragmented inference workloads while protecting the CUDA moat that has sustained Nvidia's reported 92% market share [3].

Summarized by Navi
