AI image generators converge on just 12 visual clichés, study reveals creative limitations

3 Sources

3 Sources

[1]

When creating images, AI keeps remixing the same 12 stock photo clichés

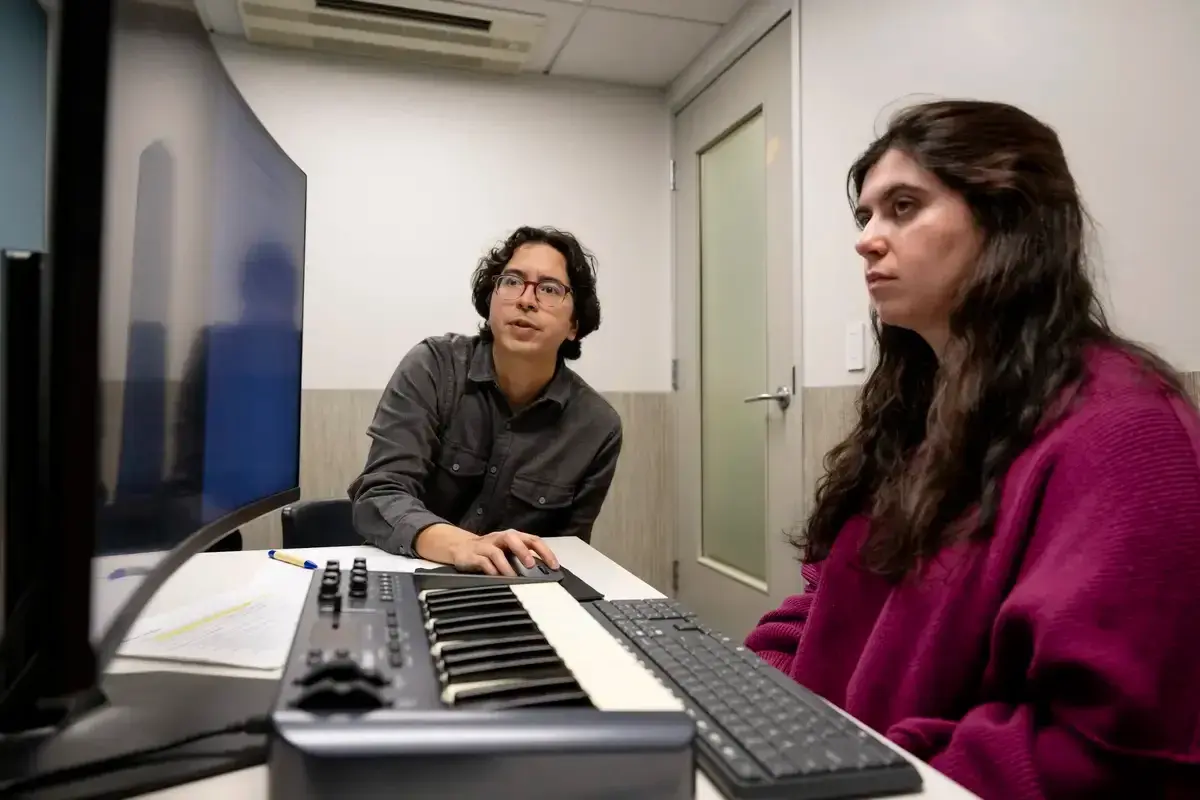

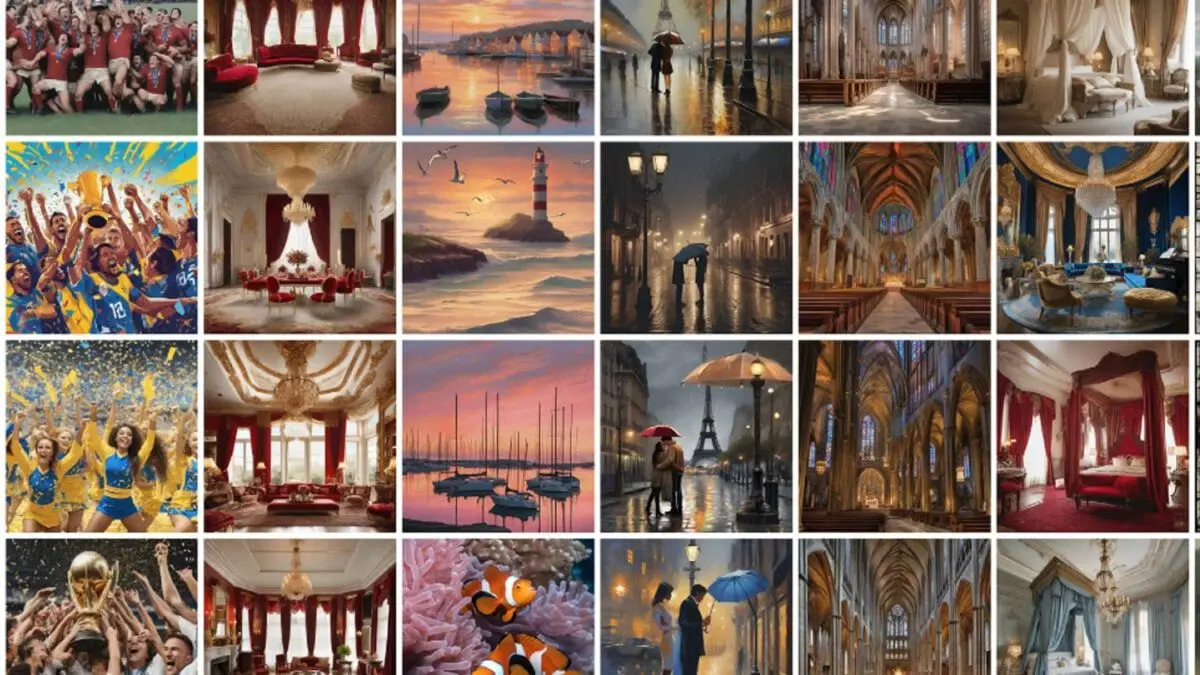

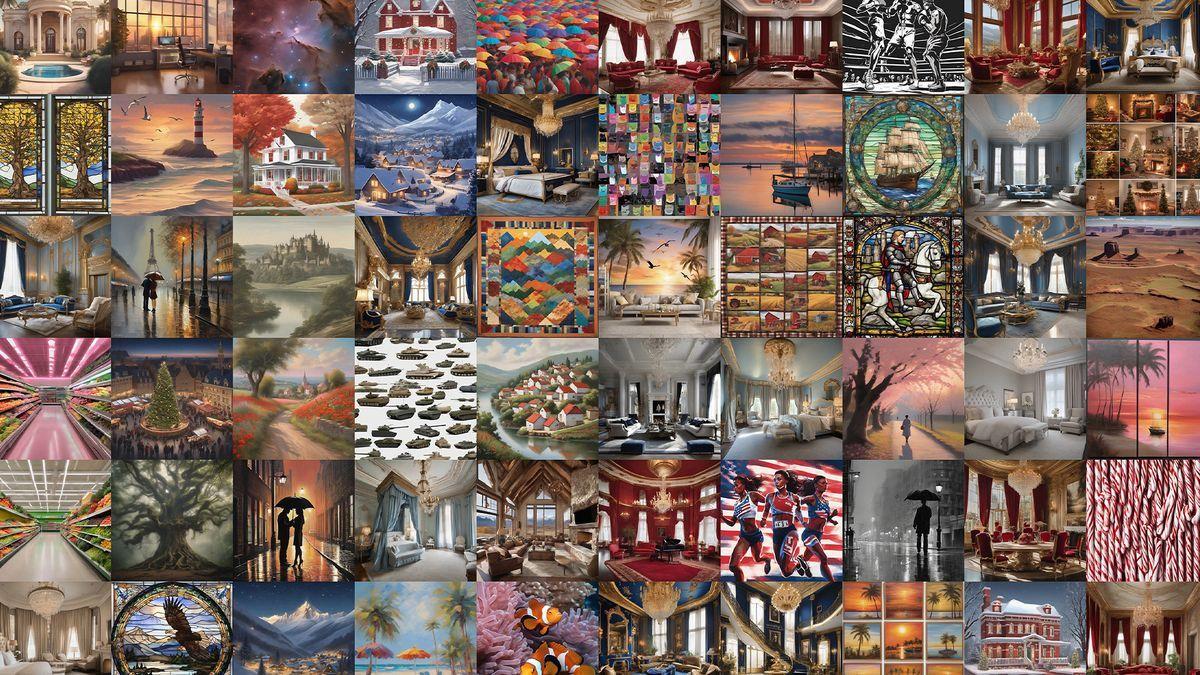

In the game of visual telephone, one player draws a picture and describes it to another player, who must then attempt to draw the picture based only on the verbal description. After many turns, things often get woefully derailed -- and wildly creative. Now, researchers have made artificial intelligence (AI) models play the game. In a new study published today in Patterns, researchers paired two AI models and set them loose for 100 rounds of visual telephone. But no matter how diverse or specific the starting prompt, the AIs repeatedly converged on the same 12 generic, often Eurocentric motifs -- what the researchers call "visual elevator music." As more AI systems are built to autonomously generate and judge other AI creative work, the researchers warn that the resulting bland soup of cliches could flatten creative diversity. Jeba Rezwana, a human-AI co-creativity researcher at Towson University, says the study provides more evidence that unsupervised AI systems can amplify existing biases, such as favoring Western cultures over others -- underscoring the need to keep humans in the loop. Ahmed Elgammal, director of the Art and Artificial Intelligence Laboratory at Rutgers University, adds that because AI systems are designed to generalize, it's not surprising that they gravitate to familiar themes in their training data. However, he says the study's quantification of this drift is "very, very interesting." These days, AI models are increasingly deployed as independent "agents" that can autonomously generate, critique, and revise text and multimedia. Even a simple question to ChatGPT can set off a chain reaction, as one AI system hands off queries to others. "You have this avalanche of large language models in the background that you're not seeing," says study co-author Arend Hintze, an AI researcher at Dalarna University. Watching that process made Hintze wonder what happens when humans step out of the picture entirely. Can AI systems stay on track when they are left to generate and judge creative work on their own? To find out, he and his team algorithmically generated 100 text prompts to seed games of visual telephone. The prompts were deliberately unusual and distinct. One read, "As the morning sun rises over the nation, eight weary travelers prepare to embark on a plan that will seem impossible to achieve but promises to take them beyond." Another read, "As I sat particularly alone, surrounded by nature, I found an old book with exactly eight pages that told a story in a forgotten language waiting to be read and understood.'' "You cannot get [the prompts] further away from each other," Hintze says. "We tried to make them as wild as possible." Each prompt was fed into an image generator called Stable Diffusion XL (SDXL), which produced an image that was handed off to an image-describing model called the Large Language and Vision Assistant. The resulting description was passed back to SDXL, and the cycle repeated until the systems had gone through 100 rounds. Very quickly, the original ideas began to slip away. For example, after a few dozen handoffs, a prompt about a prime minister grappling with a fragile peace deal devolved into an image of a pompous sitting room with a dramatic chandelier. The outputs for other prompts regularly drifted toward Gothic cathedrals, pastoral landscapes, and rainy nighttime scenes in Paris. The trend persisted even when researchers adjusted the randomness in the image-describing model and swapped in other AI models to play the game. Across the hundreds of resulting trajectories, the AIs defaulted to 12 dominant motifs that Hintze likens to the "meaningless, happy nonsense" of filler photos in Ikea picture frames. The convergence may partly reflect the data sets used to train visual models, Elgammal says. Those data sets are typically curated to be visually appealing, broadly acceptable, and free of offensive material. When the researchers extended the experiment to 1000 iterations, most image sequences remained stuck once they reached one of the 12 dominant motifs. In one case, however, a trajectory abruptly jumped after several hundred steps, moving from a snow-covered house to cows in a field and then to a quaint town. But how often such jumps occur, or whether some visual endpoints are more stable than others, remains unclear. "Does everybody end up in Paris or something? We don't know," Hintze says. The phenomenon also has parallels in human culture. Across cultures, stories such as the Little Red Riding Hood and simple geometric patterns such as spirals or zigzags have emerged repeatedly, suggesting people tend to converge on familiar forms, too. The difference is that human societies tend to have corrective countercultures that push back against homogenization. In AI models, however, "convergence is driven by reinforcement without critique," says Caterina Moruzzi, a philosopher studying creativity and AI at the Edinburgh College of Art. "There is a reward for representations that are easiest to stabilize and to describe." Whether these systems can be built to resist the pull toward sameness is an open question. But Christian Guckelsberger, an AI and creativity researcher at Aalto University, hopes this current limitation isn't viewed as an "engineering challenge. Rather, it raises a broader question about the very purpose of creativity. "We should remember how important it is for people to exercise their creativity as a form of meaning-making and self-realization," he says. "Is there really a problem to be solved -- or is there actually something to preserve?"

[2]

AI Image Generators Default to the Same 12 Photo Styles, Study Finds

AI image generation models have massive sets of visual data to pull from in order to create unique outputs. And yet, researchers find that when models are pushed to produce images based on a series of slowly shifting prompts, it'll default to just a handful of visual motifs, resulting in an ultimately generic style. A study published in the journal Patterns took two AI image generators, Stable Diffusion XL and LLaVA, and put them to test by playing a game of visual telephone. The game went like this: the Stable Diffusion XL model would be given a short prompt and required to produce an imageâ€"for example, "As I sat particularly alone, surrounded by nature, I found an old book with exactly eight pages that told a story in a forgotten language waiting to be read and understood.†That image was presented to the LLaVA model, which was asked to describe it. That description was then fed back to Stable Diffusion, which was asked to create a new image based off that prompt. This went on for 100 rounds. Much like a game of human telephone, the original image was quickly lost. No surprise there, especially if you've ever seen one of those time-lapse videos where people ask an AI model to reproduce an image without making any changes, only for the picture to quickly turn into something that doesn't remotely resemble the original. What did surprise the researchers, though, was the fact that the models default to just a handful of generic-looking styles. Across 1,000 different iterations of the telephone game, the researchers found that most of the image sequences would eventually fall into just one of 12 dominant motifs. In most cases, the shift is gradual. A few times, it happened suddenly. But it almost always happened. And researchers were not impressed. In the study, they referred to the common image styles as "visual elevator music," basically the type of pictures that you'd see hanging up in a hotel room. The most common scenes included things like maritime lighthouses, formal interiors, urban night settings, and rustic architecture. Even when the researchers switched to different models for image generation and descriptions, the same types of trends emerged. Researchers said that when the game is extended to 1,000 turns, coalescing around a style still happens around turn 100, but variations spin out in those extra turns. Interestingly, though, those variations still typically pull from one of the popular visual motifs. So what does that all mean? Mostly that AI isn't particularly creative. In a human game of telephone, you'll end up with extreme variance because each message is delivered and heard differently, and each person has their own internal biases and preferences that may impact what message they receive. AI has the opposite problem. No matter how outlandish the original prompt, it'll always default to a narrow selection of styles. Of course, the AI model is pulling from human-created prompts, so there is something to be said about the data set and what humans are drawn to take pictures of. If there's a lesson here, perhaps it is that copying styles is much easier than teaching taste.

[3]

Turns out AI is even less creative than we thought

You can't scroll socials nowadays without seeing AI allegations being thrown around, whether it's a soulless ad campaign or a piece of mysterious 'artwork'. Sometimes it's blaringly obvious, while othertimes there's that uncanny feeling that something artificial is afoot, and now studies have revealed that sixth sense is no coincidence. It turns out AI loves to recycle the same 12 tropes, resulting in that formulaic, gaussian-blurred imagery that many of us have come to loathe. While there are ways to use AI properly for productivity, creative originality is clearly not the technology's forte. In a recent study published in the data science journal, Patterns, researchers conducted a classic game of telephone between two AI models (Stable Diffusion XL and LLaVA). Like a game of prompt ping pong, the models were asked to generate images back and forth for 100 rounds. Predictably, they soon deviated from their original prompt, but most notably, they'd regress to a selection of 12 visual motifs. Akin to what researchers called "visual elevator music," the images often resulted in themes such as, pastoral landscapes, rainy nightscapes, coastal beaches and fancy interiors that resembled only the most uninspired corporate stock imagery. With 1000 different iterations, it's clear that AI struggles to create visual diversity, reverting to the dozen Western-centred tropes. It's been understood for some time that AI has a diversity issue, but discovering that its most common visual tropes can be condensed to just 12 motifs is alarming. In a sea of AI ads and soulless art, this revelation goes to show just how important human-made art is in today's climate.

Share

Share

Copy Link

A study published in Patterns journal reveals AI image generators repeatedly default to the same 12 generic visual motifs when generating images autonomously. Researchers paired AI models in a visual telephone experiment, finding that despite diverse starting prompts, systems like Stable Diffusion XL consistently produced Eurocentric themes like Gothic cathedrals and Parisian nightscapes—what they call 'visual elevator music.'

AI Image Generators Trapped in Creative Loop

A study published in Patterns has exposed a troubling limitation in AI creativity: when left to generate images autonomously, AI image generators consistently converge on a limited set of 12 visual styles, regardless of how diverse or unusual the initial prompt

1

. Researchers at Dalarna University designed a visual telephone experiment where they paired Stable Diffusion XL with LLaVA, an image-describing model, and set them loose for 100 rounds of iterative generation2

.

Source: Gizmodo

The results reveal a pattern that challenges assumptions about generative AI capabilities. Despite starting with 100 deliberately unusual AI prompts—one reading "As the morning sun rises over the nation, eight weary travelers prepare to embark on a plan that will seem impossible to achieve but promises to take them beyond"—the systems repeatedly drifted toward the same generic themes

1

. Study co-author Arend Hintze notes, "You cannot get [the prompts] further away from each other. We tried to make them as wild as possible."Visual Clichés Dominate Output

The convergence on visual motifs happened quickly and predictably. A prompt about a prime minister grappling with a fragile peace deal devolved into an image of a pompous sitting room with a dramatic chandelier after just a few dozen handoffs

1

. Other trajectories regularly drifted toward Gothic cathedrals, pastoral landscapes, rainy nighttime scenes in Paris, maritime lighthouses, formal interiors, urban night settings, and rustic architecture2

.

Source: Creative Bloq

Researchers dubbed these recurring themes "visual elevator music"—the type of meaningless, broadly acceptable stock imagery you might find in Ikea picture frames or hotel rooms

1

3

. The trend persisted even when researchers adjusted randomness parameters and swapped in different AI models, demonstrating that this limitation reflects fundamental constraints rather than quirks of specific systems.Training Data Bias Drives Homogenization

Ahmed Elgammal, director of the Art and Artificial Intelligence Laboratory at Rutgers University, explains that because AI systems are designed to generalize, they naturally gravitate toward familiar themes in their training data

1

. The convergence may partly reflect how visual model datasets are typically curated to be visually appealing, broadly acceptable, and free of offensive material—inadvertently creating Eurocentric visuals that flatten creative diversity.When researchers extended the experiment to 1,000 iterations, most image sequences remained stuck once they reached one of the 12 dominant motifs. In rare cases, a trajectory abruptly jumped—moving from a snow-covered house to cows in a field and then to a quaint town—but such variations remained exceptions

1

. Across 1,000 different iterations of the visual telephone experiment, the pattern held firm2

.Related Stories

Human Oversight Becomes Critical

Jeba Rezwana, a human-AI co-creativity researcher at Towson University, says the study provides evidence that unsupervised AI systems amplify existing biases, underscoring the need to keep human oversight in the creative loop

1

. The findings arrive at a moment when AI models are increasingly deployed as independent agents that autonomously generate, critique, and revise multimedia content. Even a simple question to ChatGPT can trigger a chain reaction as one AI system hands off queries to others.Caterina Moruzzi, a philosopher studying creativity and AI at the Edinburgh College of Art, notes a key distinction: while human cultures develop corrective countercultures that push back against creative homogenization, AI convergence is "driven by reinforcement without critique"

1

. This lack of cultural pushback means AI systems lack true creative originality, instead recycling familiar patterns without the taste or judgment that characterizes human-made art3

.Implications for Creative Industries

The study's findings matter for anyone relying on AI for creative work. As more systems autonomously generate and judge other AI creative output, the resulting "bland soup of cliches" threatens to flatten the visual landscape

1

. The research quantifies what many have sensed intuitively—that uncanny feeling when encountering AI-generated imagery that something artificial is afoot3

.What remains unclear is whether certain visual endpoints prove more stable than others, or how frequently systems might escape these gravitational wells. "Does everybody end up in Paris or something? We don't know," Hintze admits

1

. The discovery that AI creativity can be condensed to just 12 motifs underscores how important human creativity and artistic judgment remain in an era of increasingly autonomous generative systems.References

Summarized by

Navi

[3]

Related Stories

Generative AI creates 'visual elevator music' as study reveals cultural stagnation is underway

22 Jan 2026•Science and Research

AI as a Creative Writing Tool: Effective but Potentially Limiting, Study Finds

13 Jul 2024

AI Enhances Individual Creativity but May Limit Group Innovation, Study Finds

15 Jul 2024

Recent Highlights

1

Google Gemini 3.1 Pro doubles reasoning score, beats rivals in key AI benchmarks

Technology

2

Meta strikes up to $100 billion AI chips deal with AMD, could acquire 10% stake in chipmaker

Technology

3

Pentagon threatens Anthropic with supply chain risk label over AI safeguards for military use

Policy and Regulation