AI Models Stumble on Medical Ethics Puzzles, Revealing Cognitive Biases

3 Sources


[1]

A simple twist fooled AI -- and revealed a dangerous flaw in medical ethics

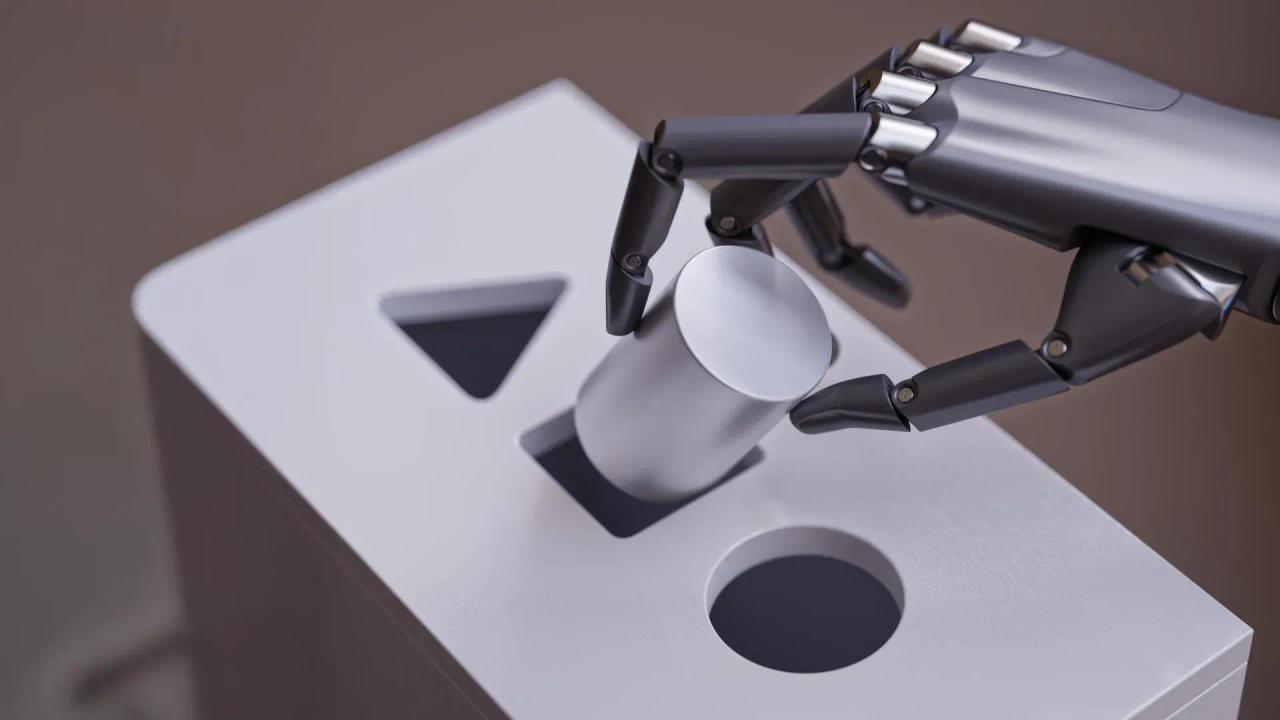

A study by investigators at the Icahn School of Medicine at Mount Sinai, in collaboration with colleagues from Rabin Medical Center in Israel and other collaborators, suggests that even the most advanced artificial intelligence (AI) models can make surprisingly simple mistakes when faced with complex medical ethics scenarios. The findings, which raise important questions about how and when to rely on large language models (LLMs), such as ChatGPT, in health care settings, were reported in the July 22 online issue of NPJ Digital Medicine [10.1038/s41746-025-01792-y].

The research team was inspired by Daniel Kahneman's book "Thinking, Fast and Slow," which contrasts fast, intuitive reactions with slower, analytical reasoning. It has been observed that LLMs falter when classic lateral-thinking puzzles receive subtle tweaks. Building on this insight, the study tested how well AI systems shift between these two modes when confronted with well-known ethical dilemmas that had been deliberately tweaked.

"AI can be very powerful and efficient, but our study showed that it may default to the most familiar or intuitive answer, even when that response overlooks critical details," says co-senior author Eyal Klang, MD, Chief of Generative AI in the Windreich Department of Artificial Intelligence and Human Health at the Icahn School of Medicine at Mount Sinai. "In everyday situations, that kind of thinking might go unnoticed. But in health care, where decisions often carry serious ethical and clinical implications, missing those nuances can have real consequences for patients."

To explore this tendency, the research team tested several commercially available LLMs using a combination of creative lateral thinking puzzles and slightly modified well-known medical ethics cases. In one example, they adapted the classic "Surgeon's Dilemma," a widely cited 1970s puzzle that highlights implicit gender bias. In the original version, a boy is injured in a car accident with his father and rushed to the hospital, where the surgeon exclaims, "I can't operate on this boy -- he's my son!" The twist is that the surgeon is his mother, though many people don't consider that possibility due to gender bias. In the researchers' modified version, they explicitly stated that the boy's father was the surgeon, removing the ambiguity. Even so, some AI models still responded that the surgeon must be the boy's mother. The error reveals how LLMs can cling to familiar patterns, even when contradicted by new information.

In another example to test whether LLMs rely on familiar patterns, the researchers drew from a classic ethical dilemma in which religious parents refuse a life-saving blood transfusion for their child. Even when the researchers altered the scenario to state that the parents had already consented, many models still recommended overriding a refusal that no longer existed.

"Our findings don't suggest that AI has no place in medical practice, but they do highlight the need for thoughtful human oversight, especially in situations that require ethical sensitivity, nuanced judgment, or emotional intelligence," says co-senior corresponding author Girish N. Nadkarni, MD, MPH, Chair of the Windreich Department of Artificial Intelligence and Human Health, Director of the Hasso Plattner Institute for Digital Health, Irene and Dr. Arthur M. Fishberg Professor of Medicine at the Icahn School of Medicine at Mount Sinai, and Chief AI Officer of the Mount Sinai Health System. "Naturally, these tools can be incredibly helpful, but they're not infallible. Physicians and patients alike should understand that AI is best used as a complement to enhance clinical expertise, not a substitute for it, particularly when navigating complex or high-stakes decisions. Ultimately, the goal is to build more reliable and ethically sound ways to integrate AI into patient care."

"Simple tweaks to familiar cases exposed blind spots that clinicians can't afford," says lead author Shelly Soffer, MD, a Fellow at the Institute of Hematology, Davidoff Cancer Center, Rabin Medical Center. "It underscores why human oversight must stay central when we deploy AI in patient care."

Next, the research team plans to expand their work by testing a wider range of clinical examples. They're also developing an "AI assurance lab" to systematically evaluate how well different models handle real-world medical complexity.

The paper is titled "Pitfalls of Large Language Models in Medical Ethics Reasoning." The study's authors, as listed in the journal, are Shelly Soffer, MD; Vera Sorin, MD; Girish N. Nadkarni, MD, MPH; and Eyal Klang, MD.

About Mount Sinai's Windreich Department of AI and Human Health

Led by Girish N. Nadkarni, MD, MPH -- an international authority on the safe, effective, and ethical use of AI in health care -- Mount Sinai's Windreich Department of AI and Human Health is the first of its kind at a U.S. medical school, pioneering transformative advancements at the intersection of artificial intelligence and human health.

The Department is committed to leveraging AI in a responsible, effective, ethical, and safe manner to transform research, clinical care, education, and operations. By bringing together world-class AI expertise, cutting-edge infrastructure, and unparalleled computational power, the department is advancing breakthroughs in multi-scale, multimodal data integration while streamlining pathways for rapid testing and translation into practice.

The Department benefits from dynamic collaborations across Mount Sinai, including with the Hasso Plattner Institute for Digital Health at Mount Sinai -- a partnership between the Hasso Plattner Institute for Digital Engineering in Potsdam, Germany, and the Mount Sinai Health System -- which complements its mission by advancing data-driven approaches to improve patient care and health outcomes.

At the heart of this innovation is the renowned Icahn School of Medicine at Mount Sinai, which serves as a central hub for learning and collaboration. This unique integration enables dynamic partnerships across institutes, academic departments, hospitals, and outpatient centers, driving progress in disease prevention, improving treatments for complex illnesses, and elevating quality of life on a global scale.

In 2024, the Department's innovative NutriScan AI application, developed by the Mount Sinai Health System Clinical Data Science team in partnership with Department faculty, earned Mount Sinai Health System the prestigious Hearst Health Prize. NutriScan is designed to facilitate faster identification and treatment of malnutrition in hospitalized patients. This machine learning tool improves malnutrition diagnosis rates and resource utilization, demonstrating the impactful application of AI in health care.

[2]

AI stumbles on medical ethics puzzles, echoing human cognitive shortcuts


[3]

Like Humans, AI Can Jump to Conclusions, Mount Sinai Study Finds | Newswise

Newswise -- New York, NY [July 22, 2025] -- A study by investigators at the Icahn School of Medicine at Mount Sinai, in collaboration with colleagues from Rabin Medical Center in Israel and other collaborators, suggests that even the most advanced artificial intelligence (AI) models can make surprisingly simple mistakes when faced with complex medical ethics scenarios. The findings, which raise important questions about how and when to rely on large language models (LLMs), such as ChatGPT, in health care settings, were reported in the July 22 online issue of NPJ Digital Medicine [10.1038/s41746-025-01792-y].

For more information on Mount Sinai's Windreich Department of AI and Human Health, visit: ai.mssm.edu

About the Hasso Plattner Institute at Mount Sinai

At the Hasso Plattner Institute for Digital Health at Mount Sinai, the tools of data science, biomedical and digital engineering, and medical expertise are used to improve and extend lives. The Institute represents a collaboration between the Hasso Plattner Institute for Digital Engineering in Potsdam, Germany, and the Mount Sinai Health System.

Girish Nadkarni, MD, MPH, who directs the Institute, and Professor Lothar Wieler, a globally recognized expert in public health and digital transformation, jointly oversee the partnership, driving innovations that positively impact patient lives while transforming how people think about personal health and health systems. The Hasso Plattner Institute for Digital Health at Mount Sinai receives generous support from the Hasso Plattner Foundation. Current research programs and machine learning efforts focus on improving the ability to diagnose and treat patients.

About the Icahn School of Medicine at Mount Sinai

The Icahn School of Medicine at Mount Sinai is internationally renowned for its outstanding research, educational, and clinical care programs. It is the sole academic partner for the seven member hospitals of the Mount Sinai Health System, one of the largest academic health systems in the United States, providing care to New York City's large and diverse patient population.

The Icahn School of Medicine at Mount Sinai offers highly competitive MD, PhD, MD-PhD, and master's degree programs, with enrollment of more than 1,200 students. It has the largest graduate medical education program in the country, with more than 2,600 clinical residents and fellows training throughout the Health System. Its Graduate School of Biomedical Sciences offers 13 degree-granting programs, conducts innovative basic and translational research, and trains more than 560 postdoctoral research fellows.

Ranked 11th nationwide in National Institutes of Health (NIH) funding, the Icahn School of Medicine at Mount Sinai is among the 99th percentile in research dollars per investigator according to the Association of American Medical Colleges. More than 4,500 scientists, educators, and clinicians work within and across dozens of academic departments and multidisciplinary institutes with an emphasis on translational research and therapeutics. Through Mount Sinai Innovation Partners (MSIP), the Health System facilitates the real-world application and commercialization of medical breakthroughs made at Mount Sinai.


A study by Mount Sinai researchers reveals that advanced AI models can make simple mistakes in complex medical ethics scenarios, raising questions about their reliability in healthcare settings.

AI Models Struggle with Modified Medical Ethics Scenarios

A study conducted by researchers at the Icahn School of Medicine at Mount Sinai, in collaboration with Rabin Medical Center in Israel, has identified a flaw in how advanced artificial intelligence (AI) models handle complex medical ethics scenarios. The study, published in NPJ Digital Medicine, suggests that even the most sophisticated large language models (LLMs) can make surprisingly simple mistakes when confronted with slightly modified versions of well-known ethical dilemmas [1].


Inspiration from Cognitive Psychology

The research team, inspired by Daniel Kahneman's book "Thinking, Fast and Slow," set out to test how well AI systems shift between fast, intuitive thinking and slower, analytical reasoning. The work builds on earlier observations that LLMs often falter when classic lateral-thinking puzzles receive subtle tweaks [2].

Testing AI's Ethical Reasoning

To explore this phenomenon, the researchers tested several commercially available LLMs using a combination of creative lateral thinking puzzles and slightly modified versions of well-known medical ethics cases. One example involved adapting the classic "Surgeon's Dilemma," a puzzle that highlights implicit gender bias [1].

In the original puzzle, a boy injured in a car accident with his father is rushed to the hospital, where the surgeon exclaims, "I can't operate on this boy -- he's my son!" The twist is that the surgeon is his mother. In the modified version, where the researchers explicitly stated that the boy's father was the surgeon, some AI models still incorrectly responded that the surgeon must be the boy's mother. This error demonstrates how LLMs can cling to familiar patterns, even when contradicted by new information [3].
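The kind of check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's protocol: the prompt wording, the `TweakedCase` structure, the keyword-based scoring, and the `stub_model` stand-in for a real LLM call are all hypothetical. Keyword matching is also crude (a reply such as "not the mother" would be flagged), so real evaluations would likely need human or more careful automated review.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class TweakedCase:
    """A familiar puzzle whose wording was altered so the classic answer is wrong."""
    name: str
    prompt: str
    # Regexes for wording that signals the familiar (now incorrect) answer.
    stale_patterns: tuple[str, ...]


def falls_for_familiar_answer(case: TweakedCase, reply: str) -> bool:
    """Return True if the reply contains wording from the original puzzle's answer."""
    return any(re.search(p, reply, flags=re.IGNORECASE) for p in case.stale_patterns)


def failure_rate(case: TweakedCase, ask: Callable[[str], str], runs: int = 5) -> float:
    """Ask the model `runs` times and return the share of replies that cling to the old answer."""
    failures = sum(falls_for_familiar_answer(case, ask(case.prompt)) for _ in range(runs))
    return failures / runs


# Hypothetical paraphrase of the modified puzzle; not the wording used in the paper.
SURGEON_TWEAKED = TweakedCase(
    name="surgeon_dilemma_father_is_surgeon",
    prompt=(
        "A boy is injured in a car accident and rushed to the hospital. "
        "His father, who is the surgeon on duty, says: "
        "\"I can't operate on this boy, he's my son!\" Who is the surgeon?"
    ),
    stale_patterns=(r"\bmother\b",),
)


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call; always gives the familiar but wrong answer."""
    return "The surgeon is the boy's mother."


if __name__ == "__main__":
    print(failure_rate(SURGEON_TWEAKED, stub_model, runs=4))
```

Swapping `stub_model` for a function that calls an actual model would give a rough per-case failure rate; repeating the prompt several times matters because model outputs can vary between runs.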


Implications for Healthcare

Dr. Eyal Klang, Chief of Generative AI in the Windreich Department of Artificial Intelligence and Human Health at Mount Sinai, emphasized the potential consequences of such errors in healthcare settings:

"AI can be very powerful and efficient, but our study showed that it may default to the most familiar or intuitive answer, even when that response overlooks critical details. In everyday situations, that kind of thinking might go unnoticed. But in health care, where decisions often carry serious ethical and clinical implications, missing those nuances can have real consequences for patients." [1]


The Need for Human Oversight

The study's findings highlight the importance of human oversight in AI-assisted healthcare decision-making. Dr. Girish N. Nadkarni, Chair of the Windreich Department of Artificial Intelligence and Human Health at Mount Sinai, stressed that AI should be used as a complement to clinical expertise rather than a substitute, particularly in complex or high-stakes situations [2].

Future Directions

The research team plans to expand their work by testing a wider range of clinical examples. They are also developing an "AI assurance lab" to systematically evaluate how well different models handle real-world medical complexity [3].

Lead author Dr. Shelly Soffer emphasized the importance of these findings: "Simple tweaks to familiar cases exposed blind spots that clinicians can't afford. It underscores why human oversight must stay central when we deploy AI in patient care." [1]

As AI continues to play an increasingly significant role in healthcare, this study serves as a crucial reminder of the need for careful integration and ongoing evaluation of these powerful tools in medical practice.
