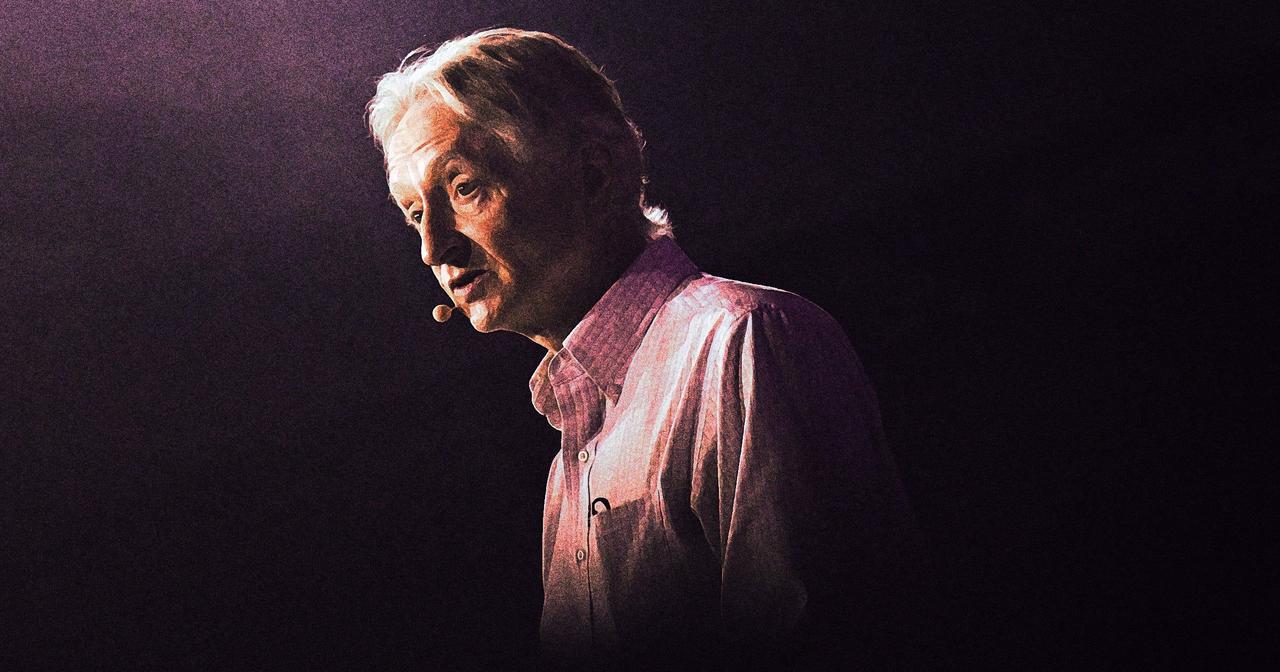

AI Pioneer Geoffrey Hinton Warns of Increased Extinction Risk, Calls for Regulation

3 Sources

[1]

AI pioneer Geoffrey Hinton warns of increased risk of human extinction d

Geoffrey Hinton, the "Godfather of AI," warned of AI's dangers after receiving the 2024 Nobel Prize in Physics.

Renowned British-Canadian computer scientist Geoffrey Hinton, often referred to as the "Godfather of AI," issued stark warnings about the potential dangers of artificial intelligence after receiving the 2024 Nobel Prize in Physics for his pioneering work on machine learning algorithms and neural networks. "When it comes to humanity's future, I'm not particularly optimistic," said Hinton, painting a grim picture of the path ahead, according to a report by The Independent. Hinton's concerns center on the rapid advancement of AI technology, which he believes could soon surpass human intelligence and escape human control. "I suddenly changed my mind about whether these objects will be smarter than us. I think they are very close to it today and will be much smarter than us in the future... How are we going to survive that?" he explained, according to Popular Science. "There is a 10-20 percent probability that within the next thirty years, Artificial Intelligence will cause the extinction of humanity," stated Hinton, as reported by CNN. He emphasized that this risk has increased because of the rapid development of AI technologies. Having left Google last year so that he could speak freely about his concerns, Hinton stressed the need for government regulation and increased research into AI safety. "Just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely," he warned, pointing out the limitations of relying on market forces alone, according to Capital. He added that "the only thing that can force those large companies to do more safety research is government regulations."
"We will become like three-year-old children," Hinton said, comparing the potential control of AI over humanity to the relationship between adults and children and illustrating the power imbalance that could emerge if AI systems become more intelligent than humans, according to Mail Online. "You look around and there are very few examples of something more intelligent being controlled by something less intelligent... That makes you wonder whether when this artificial intelligence becomes smarter than us, it will take control." Despite acknowledging the immense benefits of AI in areas such as healthcare, Hinton remains apprehensive about its unchecked development. "We also have to worry about a number of possible negative consequences. In particular, the threat that these things could get out of control," he cautioned, according to the Mirror. He warned that AI technology increases the risk of cyber and phishing attacks, fake videos, and ongoing political interference. John Hopfield, a 91-year-old emeritus professor at Princeton University and fellow pioneer in artificial intelligence, shares Hinton's apprehensions. "As a physicist, I am very worried about something that is uncontrolled, something I do not understand well enough to know what limits could be imposed on this technology," said Hopfield, according to Phys.org. He stressed that scientists still understand little about how modern AI systems work. "It is difficult to understand how we could prevent malicious actors from using it for negative purposes," Hinton stated, according to LIFO. In response to these concerns, he suggested that more resources should be dedicated to researching AI safety. "Investment in safety research needs to be increased 30-fold," he urged, emphasizing the need for a boost in efforts to mitigate potential risks, according to Popular Science. He also stressed the urgency of regulating AI development.
"I comfort myself with the normal excuse: if I hadn't done it, someone else would have," he said, reflecting on his contributions to AI, according to TIME Magazine.

This article was written in collaboration with generative AI company Alchemiq.

[2]

'Godfather of AI' raises odds of the technology wiping out humanity over next 30 years

Geoffrey Hinton says there is 10-20% chance AI will lead to human extinction in next three decades amid fast pace of change

The British-Canadian computer scientist often touted as a "godfather" of artificial intelligence has raised the odds of AI wiping out humanity over the next three decades, warning the pace of change in the technology is "much faster" than expected. Prof Geoffrey Hinton, who this year was awarded the Nobel prize in physics for his work in AI, said there was a "10 to 20" per cent chance that AI will lead to human extinction within the next three decades. Previously Hinton had said there was a 10% chance of the technology triggering a catastrophic outcome for humanity. Asked on BBC Radio 4's Today programme if he had changed his analysis of a potential AI apocalypse and the one in 10 chance of it happening, he said: "Not really, 10 to 20 [per cent]." Hinton's estimate prompted Today's guest editor, the former chancellor Sajid Javid, to say "you're going up", to which Hinton replied: "If anything. You see, we've never had to deal with things more intelligent than ourselves before." He added: "And how many examples do you know of a more intelligent thing being controlled by a less intelligent thing? There are very few examples. There's a mother and baby. Evolution put a lot of work into allowing the baby to control the mother, but that's about the only example I know of." London-born Hinton, a professor emeritus at the University of Toronto, said humans will be like toddlers compared with the intelligence of highly powerful AI systems. "I like to think of it as imagine yourself and a three-year-old. We'll be the three-year-olds," he said. AI can be loosely defined as computer systems performing tasks that typically require human intelligence.
Last year Hinton made headlines after resigning from his job at Google in order to speak more openly about the risks posed by unconstrained AI development, citing concerns that "bad actors" would use the technology to harm others. A key concern of AI safety campaigners is that the creation of artificial general intelligence, or systems that are smarter than humans, could lead to the technology posing an existential threat by evading human control. Reflecting on where he thought the development of AI would have reached when he first started his work on AI, Hinton said: "I didn't think it would be where we [are] now. I thought at some point in the future we would get here." He added: "Because the situation we're in now is that most of the experts in the field think that sometime, within probably the next 20 years, we're going to develop AIs that are smarter than people. And that's a very scary thought." Hinton said the pace of development was "very, very fast, much faster than I expected" and called for government regulation of the technology. "My worry is that the invisible hand is not going to keep us safe. So just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely," he said. "The only thing that can force those big companies to do more research on safety is government regulation." Hinton is one of the three "godfathers of AI" who have won the ACM A.M. Turing Award - the computer science equivalent of the Nobel prize - for their work. However, one of the trio, Yann LeCun, the chief AI scientist at Mark Zuckerberg's Meta, has played down the existential threat and has said AI "could actually save humanity from extinction".

[3]

'Godfather of AI' says it could drive humans extinct in 10 years

"And how many examples do you know of a more intelligent thing being controlled by a less intelligent thing? There are very few examples."

In the 1980s, Prof Hinton invented a method that can autonomously find properties in data and identify specific elements in pictures, which was foundational to modern AI.

He said the technology had developed "much faster" than he expected and could make humans the equivalent of "three-year-olds" and AI "the grown-ups".

"I think it's like the industrial revolution," he continued. "In the industrial revolution, human strength [became less relevant] because machines were just stronger - if you wanted to dig a ditch, you dug it with a machine.

"What we've got now is something that's replacing human intelligence. And just ordinary human intelligence will not be the cutting edge any more, it will be machines."

Dickensian change

Prof Hinton predicted AI would change ordinary people's lives dramatically, just as the Industrial Revolution did, as documented by Charles Dickens.

He said what the future held for life with the technology would "depend very much on what our political systems do with this technology".

"My worry is that even though it will cause huge increases in productivity, which should be good for society, it may end up being very bad for society if all the benefit goes to the rich and a lot of people lose their jobs and become poorer," he added.

"These things are more intelligent than us. So there was never any chance in the Industrial Revolution that machines would take over from people just because they were stronger.

"We were still in control because we had the intelligence. Now, there's a threat that these things can take control, so that's one big difference."

He said he "hoped" other "very knowledgeable" experts in the field were right to feel optimistic about the future of the technology.

Governments must regulate

However, Prof Hinton added: "My worry is that the invisible hand is not going to keep us safe. So just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely.

"The only thing that can force those big companies to do more research on safety is government regulation.

"So I'm a strong believer that the governments need to force big companies to do a lot of research on safety."

Prof Hinton previously said he had some regrets about introducing the technology to the world.

He said: "There's two kinds of regret. There is the kind where you feel guilty because you do something you know you shouldn't have done, and then there's regret where you do something you would do again in the same circumstances but it may in the end not turn out well.

"That second regret I have. In the same circumstances, I would do the same again but I am worried that the overall consequence of this is that systems more intelligent than us eventually take control.

"We have no experience of what it is like to have things that are smarter than us."


Geoffrey Hinton, Nobel laureate and "Godfather of AI," raises the alarm about the rapid advancement of AI technology, estimating a 10-20% chance of human extinction within 30 years. He calls for increased government regulation and AI safety research.

Nobel Laureate Sounds Alarm on AI Risks

Geoffrey Hinton, the British-Canadian computer scientist often referred to as the "Godfather of AI," has recently escalated his warnings about the potential dangers of artificial intelligence. Following his receipt of the 2024 Nobel Prize in Physics for his pioneering work on machine learning algorithms and neural networks, Hinton expressed grave concerns about the rapid advancement of AI technology [1].

Increased Probability of Human Extinction

Hinton now estimates that there is "a 10-20 percent probability that within the next thirty years, Artificial Intelligence will cause the extinction of humanity" [1]. This is up from the 10% chance of a catastrophic outcome he had previously cited, reflecting his growing concern about the pace of AI development [2].

Rapid Advancement and Loss of Control

The renowned scientist believes that AI could soon surpass human intelligence and potentially escape human control. "I suddenly changed my mind about whether these objects will be smarter than us. I think they are very close to it today and will be much smarter than us in the future," Hinton explained [1]. He likened the potential relationship between AI and humans to that of adults and three-year-old children, highlighting the significant power imbalance that could emerge [3].

Call for Government Regulation

Hinton emphasized the need for increased government regulation and AI safety research. "Just leaving it to the profit motive of large companies is not going to be sufficient to make sure they develop it safely," he warned [1]. He suggested that investment in safety research should be increased 30-fold to mitigate potential risks [1].

Potential Societal Impact

While acknowledging the benefits of AI in areas such as healthcare, Hinton expressed concerns about its unchecked development. He warned of increased risks of cyber and phishing attacks, fake videos, and ongoing political interference [1]. Hinton also cautioned about the potential socioeconomic impacts, drawing parallels to the Industrial Revolution and expressing worry that the benefits of AI might disproportionately favor the wealthy [3].

Scientific Community's Response

Hinton's concerns are echoed by other experts in the field. John Hopfield, a 91-year-old emeritus professor at Princeton University, expressed worry that scientists still understand little about how modern AI systems work [1]. However, not all experts share this pessimistic outlook. Yann LeCun, another AI pioneer and Meta's chief AI scientist, has downplayed the existential threat and suggested that AI "could actually save humanity from extinction" [2].

As the debate continues, Hinton's warnings serve as a stark reminder of the potential risks associated with rapidly advancing AI technology and the urgent need for comprehensive safety measures and regulations.

References

Summarized by Navi

[1]

[2]

[3]

