AI-Powered Smartphone Diagnostics: A Game-Changer for Nystagmus Detection

4 Sources


[1]

Novel deep learning model leverages real-time data to assist in diagnosing nystagmus

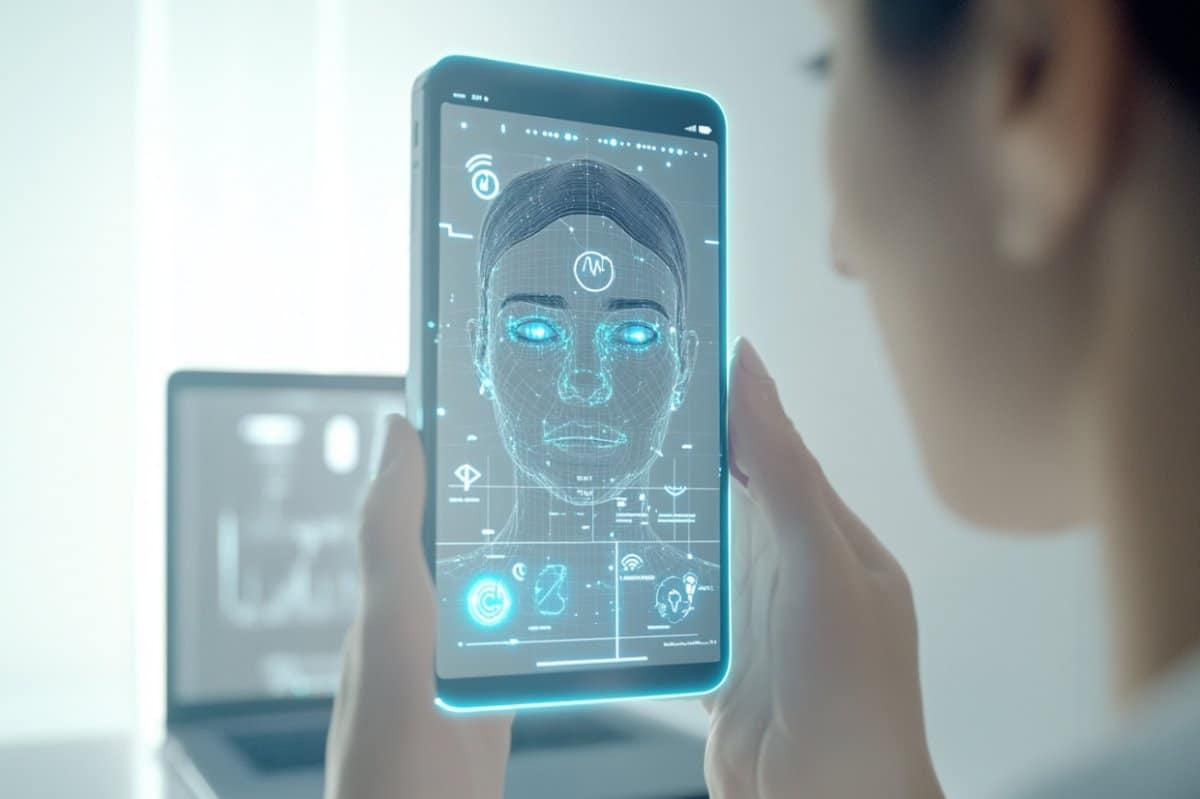

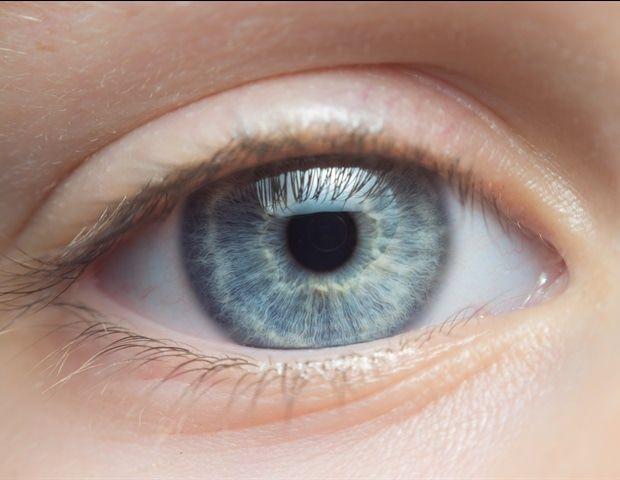

Florida Atlantic University, Jun 4 2025

Artificial intelligence is playing an increasingly vital role in modern medicine, particularly in interpreting medical images to help clinicians assess disease severity, guide treatment decisions and monitor disease progression. Despite these advancements, most current AI models are based on static datasets, limiting their adaptability and real-time diagnostic potential. To address this gap, researchers from Florida Atlantic University and collaborators have developed a novel proof-of-concept deep learning model that leverages real-time data to assist in diagnosing nystagmus - a condition characterized by involuntary, rhythmic eye movements often linked to vestibular or neurological disorders.

Gold-standard diagnostic tools such as videonystagmography (VNG) and electronystagmography have been used to detect nystagmus. However, these methods come with notable drawbacks: high costs (with VNG equipment often exceeding $100,000), bulky setups, and inconvenience for patients during testing. FAU's AI-driven system offers a cost-effective and patient-friendly alternative for quick and reliable screening of balance disorders and abnormal eye movements. The platform allows patients to record their eye movements using a smartphone, securely upload the video to a cloud-based system, and receive remote diagnostic analysis from vestibular and balance experts - all without leaving their home.

At the heart of this innovation is a deep learning framework that uses real-time facial landmark tracking to analyze eye movements. The AI system automatically maps 468 facial landmarks and evaluates slow-phase velocity - a key metric for identifying nystagmus intensity, duration and direction. It then generates intuitive graphs and reports that can easily be interpreted by audiologists and other clinicians during virtual consultations.
Results of the pilot study involving 20 participants, published in Cureus (part of Springer Nature), demonstrated that the AI system's assessments closely mirrored those obtained through traditional medical devices. This early success underscores the model's accuracy and potential for clinical reliability, even in its initial stages.

"Our AI model offers a promising tool that can partially supplement - or, in some cases, replace - conventional diagnostic methods, especially in telehealth environments where access to specialized care is limited," said Ali Danesh, Ph.D., principal investigator of the study, senior author, a professor in the Department of Communication Sciences and Disorders within FAU's College of Education and a professor of biomedical science within FAU's Charles E. Schmidt College of Medicine. "By integrating deep learning, cloud computing and telemedicine, we're making diagnosis more flexible, affordable and accessible - particularly for low-income rural and remote communities."

The team trained their algorithm on more than 15,000 video frames, using a structured 70:20:10 split for training, testing and validation. This rigorous approach ensured the model's robustness and adaptability across varied patient populations. The AI also employs intelligent filtering to eliminate artifacts such as eye blinks, ensuring accurate and consistent readings.

Beyond diagnostics, the system is designed to streamline clinical workflows. Physicians and audiologists can access AI-generated reports via telehealth platforms, compare them with patients' electronic health records, and develop personalized treatment plans. Patients, in turn, benefit from reduced travel, lower costs and the convenience of conducting follow-up assessments by simply uploading new videos from home - enabling clinicians to track disorder progression over time.
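The 70:20:10 split of roughly 15,000 frames described above works out to 10,500 training, 3,000 test and 1,500 validation frames. The sketch below is a minimal illustration, assuming frames are shuffled and assigned at random; the article does not say how frames were allocated, and the function name `split_70_20_10` and the fixed seed are hypothetical.

```python
import random


def split_70_20_10(items, seed=0):
    """Hypothetical helper: shuffle `items` and return (train, test, validation)
    in a 70:20:10 ratio. Not the study's code.

    Integer division assigns any rounding remainder to the validation set.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    n = len(shuffled)
    n_train = n * 70 // 100
    n_test = n * 20 // 100
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    validation = shuffled[n_train + n_test:]
    return train, test, validation


if __name__ == "__main__":
    # Frame indices stand in for video frames here.
    train, test, validation = split_70_20_10(range(15_000))
    print(len(train), len(test), len(validation))  # 10500 3000 1500
```

Because consecutive frames from one video are nearly identical, a random frame-level split can put almost the same image into both the training and test sets; assigning whole recordings or participants to a subset is a common alternative when that kind of leakage is a concern.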
In parallel, FAU researchers are also experimenting with a wearable headset equipped with deep learning capabilities to detect nystagmus in real time. Early tests in controlled environments have shown promise, though improvements are still needed to address challenges such as sensor noise and variability among individual users.

"While still in its early stages, our technology holds the potential to transform care for patients with vestibular and neurological disorders," said Harshal Sanghvi, Ph.D., first author, an FAU electrical engineering and computer science graduate, and a postdoctoral fellow at FAU's College of Medicine and College of Business. "With its ability to provide non-invasive, real-time analysis, our platform could be deployed widely - in clinics, emergency rooms, audiology centers and even at home."

Sanghvi worked closely with his mentors and co-authors on this project, including Abhijit S. Pandya, Ph.D., FAU Department of Electrical Engineering and Computer Science and FAU Department of Biomedical Engineering, and B. Sue Graves, Ed.D., Department of Exercise Science and Health Promotion, FAU Charles E. Schmidt College of Science.

This interdisciplinary initiative includes collaborators from FAU's College of Business, College of Medicine, College of Engineering and Computer Science, College of Science, and partners from Advanced Research, Marcus Neuroscience Institute - part of Baptist Health - at Boca Raton Regional Hospital, Loma Linda University Medical Center, and Broward Health North. Together, they are working to enhance the model's accuracy, expand testing across diverse patient populations, and move toward FDA approval for broader clinical adoption.

"As telemedicine becomes an increasingly integral part of health care delivery, AI-powered diagnostic tools like this one are poised to improve early detection, streamline specialist referrals, and reduce the burden on health care providers," said Danesh. "Ultimately, this innovation promises better outcomes for patients - regardless of where they live."

Along with Pandya and Graves, study co-authors are Jilene Moxam, Advanced Research LLC; Sandeep K. Reddy, Ph.D., FAU College of Engineering and Computer Science; Gurnoor S. Gill, FAU College of Medicine; Sajeel A. Chowdhary, M.D., Marcus Neuroscience Institute - part of Baptist Health - at Boca Raton Regional Hospital; Kakarla Chalam, M.D., Ph.D., Loma Linda University; and Shailesh Gupta, M.D., Broward Health North.

Source: Florida Atlantic University

Journal reference: Sanghvi, H., et al. (2025). Artificial Intelligence-Driven Telehealth Framework for Detecting Nystagmus. Cureus. doi.org/10.7759/cureus.84036.

[2]

AI Diagnoses Eye Movement Disorders from Home - Neuroscience News

Summary: Researchers have developed an AI-based diagnostic tool that uses smartphone video and cloud computing to detect nystagmus -- a key symptom of balance and neurological disorders. Unlike traditional methods like videonystagmography, which are expensive and cumbersome, this deep learning system provides a low-cost, patient-friendly alternative for remote diagnosis. It maps 468 facial landmarks in real time, analyzes slow-phase velocity, and generates clinician-ready reports. Early testing shows that the tool delivers results comparable to gold-standard equipment, with the potential to expand access to care through telemedicine.
Artificial Intelligence-Driven Telehealth Framework for Detecting Nystagmus

Purpose: This study reports the implementation of a proof-of-concept, artificial intelligence (AI)-driven clinical decision support system for detecting nystagmus. The system collects and analyzes real-time clinical data to assist in diagnosis, demonstrating its potential for integration into telemedicine platforms. Patients may benefit from the system's convenience, reduced need for travel and associated costs, and increased flexibility through both in-person and virtual applications.
Methods: A bedside clinical test revealed vertigo during rightward body movement, and the patient was referred for videonystagmography (VNG). The VNG showed normal central ocular findings. During the right Dix-Hallpike maneuver, the patient demonstrated rotatory nystagmus accompanied by vertigo. Caloric tests revealed symmetric responses, with no evidence of unilateral or bilateral weakness. A cloud-based deep learning framework was developed and trained to track eye movements and detect 468 distinct facial landmarks in real time. Ten subjects participated in this study.

Results: The slow-phase velocity (SPV) value was verified for statistical significance using both VNG machine-generated graphs and clinician assessment. The average SPV was compared to the value generated by the VNG machine. The calculated statistical values were as follows: p < 0.05, a mean squared error of 0.00459, and a correction error of ±4.8%.

Conclusion: This deep learning model demonstrates the potential to provide diagnostic consultation to individuals in remote locations. To some extent, it may supplement or partially replace traditional methods such as VNG. Ongoing advancements in machine learning within medicine will enhance the ability to diagnose patients, facilitate appropriate specialist referrals, and support physicians in post-treatment follow-up. As this was a proof-of-concept pilot study, further research with a larger sample size is warranted.
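The abstract's comparison of AI-derived and VNG-derived slow-phase velocity can be illustrated with a small agreement calculation. This is a sketch under stated assumptions: it takes paired SPV values, one per subject or trial, and `agreement_metrics` is a hypothetical helper. The abstract does not define its "correction error of ±4.8%", so the mean absolute percentage difference below is an assumed stand-in, not the paper's metric, and the example numbers are made up rather than taken from the study.

```python
def agreement_metrics(ai_spv, vng_spv):
    """Hypothetical helper: return (mean squared error, mean absolute
    percentage difference) between paired SPV values (e.g. deg/s).

    The percentage metric is an assumed stand-in and is not necessarily
    the paper's "correction error".
    """
    if len(ai_spv) != len(vng_spv) or not ai_spv:
        raise ValueError("need two non-empty sequences of equal length")
    if any(v == 0 for v in vng_spv):
        raise ValueError("a reference SPV of zero makes the percentage undefined")
    n = len(ai_spv)
    mse = sum((a - v) ** 2 for a, v in zip(ai_spv, vng_spv)) / n
    pct = 100.0 * sum(abs(a - v) / abs(v) for a, v in zip(ai_spv, vng_spv)) / n
    return mse, pct


if __name__ == "__main__":
    # Illustrative values only, not study data.
    print(agreement_metrics([10.0, 12.0], [10.0, 10.0]))  # (2.0, 10.0)
```

MSE depends on the units and scale of the SPV values, so a small MSE is only meaningful alongside the range of velocities being measured.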

[3]

'Eye' on health: AI detects dizziness and balance disorders remotely


[4]

'Eye' on Health: AI Detects Dizziness and Balance Disorders Remotely | Newswise

In 1964, Florida Atlantic University's College of Education became South Florida's first provider of education professionals. Dedicated to advancing research and educational excellence, the College is nationally recognized for its innovative programs, evidence-based training, and professional practice. The College spans five departments: Curriculum and Instruction, Educational Leadership and Research Methodology, Special Education, Counselor Education, and Communication Sciences and Disorders, to prepare highly skilled teachers, school leaders, counselors, and speech pathologists. Faculty engage in cutting-edge research supported by prestigious organizations, including the National Science Foundation, the U.S. Department of Education, and the State of Florida.


Researchers at Florida Atlantic University have developed an AI-based system that uses smartphone videos to help diagnose nystagmus, offering a cost-effective and accessible alternative to traditional diagnostic methods.

Revolutionizing Nystagmus Diagnosis with AI and Smartphones

Researchers at Florida Atlantic University (FAU) have developed a proof-of-concept artificial intelligence (AI) system that could transform the diagnosis of nystagmus, a condition characterized by involuntary eye movements often associated with vestibular or neurological disorders. The approach combines smartphone video with deep learning to offer a cost-effective and accessible alternative to traditional diagnostic methods [1][2][3][4].

The Challenge of Traditional Diagnostics

Conventional diagnostic tools for nystagmus, such as videonystagmography (VNG) and electronystagmography, while effective, come with significant drawbacks. These include:

- High costs (VNG equipment often exceeding $100,000)

- Bulky setups

- Inconvenience for patients during testing

These limitations have long restricted access to testing, particularly in remote or underserved areas [1][2][3][4].

AI-Powered Solution: How It Works

Source: Neuroscience News

The FAU team's novel deep learning model offers a patient-friendly alternative for screening balance disorders and abnormal eye movements. Key features of the system include:

- Smartphone video recording of eye movements

- Secure upload to a cloud-based system

- Remote diagnostic analysis by vestibular and balance experts

At the core of this innovation is a deep learning framework that utilizes real-time facial landmark tracking. The AI system:

- Automatically maps 468 facial landmarks

- Evaluates slow-phase velocity (a crucial metric for identifying nystagmus intensity, duration, and direction)

- Generates intuitive graphs and reports for clinician interpretation during virtual consultations [1][2][3][4]
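To make "slow-phase velocity" concrete: in jerk nystagmus the eye drifts slowly in one direction and is then reset by a quick corrective jump, and SPV is the speed of that slow drift. The sketch below estimates SPV from a per-frame horizontal eye-position trace by finite differences, discarding fast-phase samples above a velocity threshold and any sample that touches a blink frame, then taking the median. It is a hypothetical illustration, not FAU's method: the article does not say how eye position is derived from the 468 landmarks (MediaPipe Face Mesh, for example, also outputs 468 face landmarks, but the article does not name a library), and the threshold value, the blink mask input and the function name are all assumptions.

```python
from statistics import median


def slow_phase_velocity(positions, fps, fast_phase_threshold, blink_mask=None):
    """Hypothetical SPV estimate, in position units per second.

    positions: per-frame horizontal eye position, e.g. in degrees (assumed input).
    fps: video frame rate.
    fast_phase_threshold: |velocity| at or above which a sample is treated as a
        fast (corrective) phase and discarded; an assumed tuning parameter.
    blink_mask: optional per-frame booleans; True marks a blink frame.
    """
    slow = []
    for i in range(1, len(positions)):
        # Skip velocity samples that start or end on a blink frame.
        if blink_mask is not None and (blink_mask[i - 1] or blink_mask[i]):
            continue
        v = (positions[i] - positions[i - 1]) * fps  # finite-difference velocity
        if abs(v) < fast_phase_threshold:
            slow.append(v)
    if not slow:
        raise ValueError("no slow-phase samples found")
    # The sign of the result gives the drift direction.
    return median(slow)


if __name__ == "__main__":
    # Synthetic sawtooth at 30 fps: +0.2 deg/frame drift, reset by -1.8 deg every 10 frames.
    trace, pos = [], 0.0
    for i in range(60):
        trace.append(pos)
        pos = pos - 1.8 if i % 10 == 9 else pos + 0.2
    print(round(slow_phase_velocity(trace, fps=30, fast_phase_threshold=20.0), 3))  # 6.0
```

The median is used rather than the mean so that a few samples misclassified near the threshold have little influence; a production system might instead segment individual nystagmus beats and report SPV per beat.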

Promising Early Results

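A pilot study involving 20 participants, published in Cureus, demonstrated that the AI system's assessments closely mirrored those obtained through traditional medical devices [1][2][3][4]. The study's abstract reports a mean squared error of 0.00459 when the system's average slow-phase velocity was compared with the value generated by the VNG machine [2]. This early success underscores the model's potential for clinical reliability.

Technical Aspects and Development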

The research team trained their algorithm on more than 15,000 video frames, using a structured 70:20:10 split for training, testing, and validation. According to the researchers, this approach supports the model's robustness across varied patient populations. The AI also employs filtering to eliminate artifacts such as eye blinks, with the aim of producing accurate and consistent readings [1][2][3][4].
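The article does not describe how blinks are detected. One widely used heuristic, swapped in here purely for illustration, is the eye aspect ratio (EAR) of Soukupová and Čech: six landmarks around each eye give a ratio that drops sharply when the lid closes. The sketch computes EAR and flags runs of low-EAR frames as blinks; the 0.2 threshold, the two-frame minimum and the function names are assumptions, not values from the study.

```python
import math


def eye_aspect_ratio(p1, p2, p3, p4, p5, p6):
    """EAR for one eye from six (x, y) landmarks (swapped-in heuristic).

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on
    the lower lid, so p2/p6 and p3/p5 form vertical pairs.
    """
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def blink_mask(ear_series, threshold=0.2, min_frames=2):
    """Flag runs of at least `min_frames` consecutive frames with EAR below
    `threshold` (both values are assumptions)."""
    ears = list(ear_series)
    mask = [False] * len(ears)
    run_start = None
    # A trailing +inf sentinel closes a run that lasts until the final frame.
    for i, ear in enumerate(ears + [math.inf]):
        if ear < threshold:
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_frames:
                for j in range(run_start, i):
                    mask[j] = True
            run_start = None
    return mask


if __name__ == "__main__":
    print(eye_aspect_ratio((0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)))  # 0.5
    print(blink_mask([0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3]))
    # [False, False, True, True, False, False, False]
```

Requiring a minimum run length keeps a single noisy low-EAR frame from being treated as a blink; the resulting per-frame mask could be used to drop those frames before any eye-movement measurement.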

Broader Implications and Future Directions

Beyond diagnostics, the system is designed to streamline clinical workflows. Physicians and audiologists can access AI-generated reports via telehealth platforms, compare them with patients' electronic health records, and develop personalized treatment plans [1][2][3][4].

In parallel, FAU researchers are experimenting with a wearable headset equipped with deep learning capabilities to detect nystagmus in real time. While early tests in controlled environments have shown promise, further improvements are needed to address challenges such as sensor noise and individual user variability [1][2][3][4].

Source: Medical Xpress

Collaborative Effort and Next Steps

This interdisciplinary initiative involves collaborators from various FAU colleges and external partners. The team is working to enhance the model's accuracy, expand testing across diverse patient populations, and move toward FDA approval for broader clinical adoption [1][2][3][4].

Source: News-Medical

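As telemedicine becomes increasingly integral to healthcare delivery, AI-powered diagnostic tools like this one have the potential to improve early detection, streamline specialist referrals, and reduce the burden on healthcare providers, ultimately promising better outcomes for patients regardless of their location [1][2][3][4].

References

[1] Novel deep learning model leverages real-time data to assist in diagnosing nystagmus

[2] AI Diagnoses Eye Movement Disorders from Home - Neuroscience News

[3] 'Eye' on health: AI detects dizziness and balance disorders remotely

[4] 'Eye' on Health: AI Detects Dizziness and Balance Disorders Remotely | Newswise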
