Arizona Police Deploy AI-Generated Sketches to Identify Suspects, Raising Bias Concerns

3 Sources

[1]

Arizona Cops Are Now Using AI-Generated 'Mugshots' to Sketch Crime Suspects

An Arizona police department is among the first in the nation to use lifelike, AI-generated suspect sketches in an effort to generate tips from the public on open cases. The Washington Post reported that the Goodyear Police Department has used AI twice this year to generate suspect images: first in April for an attempted kidnapping case, and again in November for a shooting. Unsurprisingly, both cases remain open, and the images have not led to any arrests. But experts warn that this new tactic could further complicate the already tricky process of identifying suspects.

The images were produced by Mike Bonasera, a forensic artist who has been drawing police sketches for about five years, the newspaper reported. In a typical year, he is asked to sketch up to seven composite drawings when police lack clear surveillance images. Earlier this year, Bonasera began uploading some of his old sketches to OpenAI’s ChatGPT. He claims the resulting AI-generated portraits closely resembled the real suspects to which the drawings had been matched. In April, he was cleared by department leaders and the Maricopa County Attorney’s Office to begin using AI in new cases.

Bonasera says he works with witnesses directly as he generates the images, allowing them to suggest changes in real time. He told the Post that the department received a flood of tips after releasing the first AI-generated image in April, which convinced him to repeat the process in November. “We’re now in a day and age where if we post a pencil drawing, most people are not going to acknowledge it,” said Bonasera.

Experts interviewed by the Post warned that AI-generated images can bake in biases based on the datasets the models train on. They also noted that these images could face more scrutiny in court. “In court, we all know how drawing works and can evaluate how much reliability to give the human drawn sketch,” Andrew Ferguson, a law professor at George Washington University, wrote to The Washington Post in an email. “In court, no one knows how the AI works.” He added that although an AI-generated image might look more realistic, that doesn’t mean that it's more reliable than a traditional human-drawn sketch.

The Goodyear Police Department isn't the only local law enforcement agency to make headlines this year for its use of AI. In April, the Westbrook Police Department in Maine issued a public apology after it shared a photo of seized drugs that had been altered with AI.

[2]

Arizona Police Department Starts Releasing AI-Generated Images of Suspects

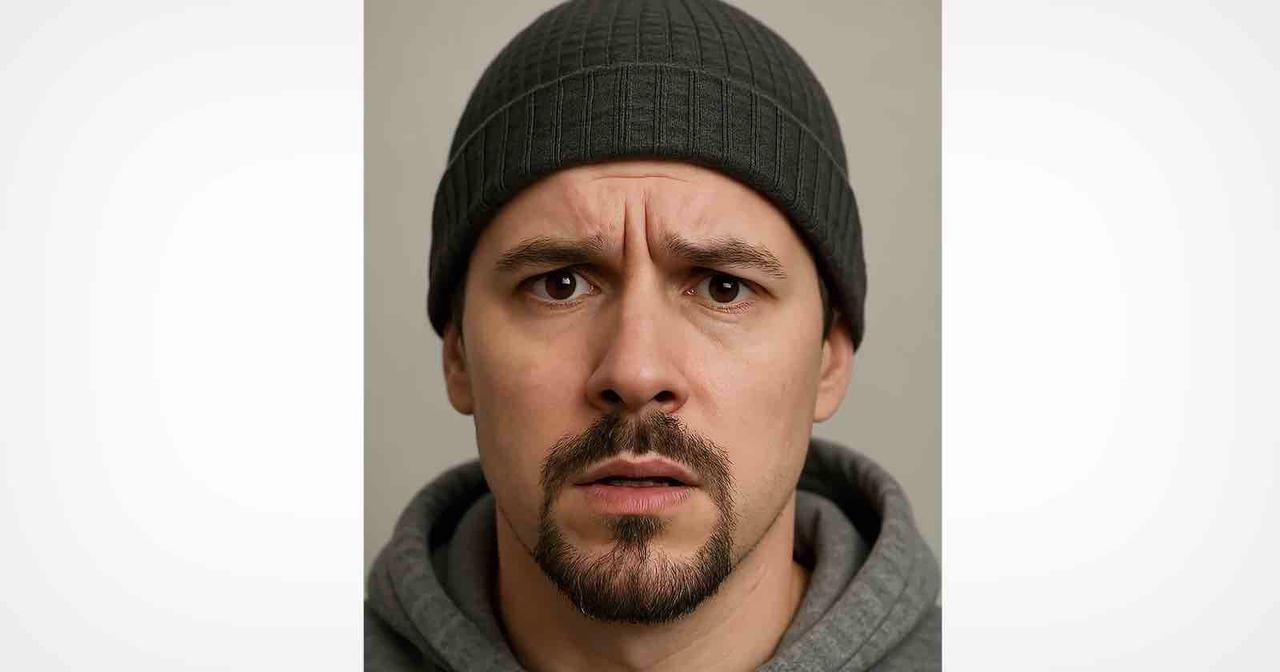

An Arizona police department has begun using AI-generated, photorealistic suspect images instead of hand-drawn sketches in what is believed to be a first in the U.S. In late November, the Goodyear Police Department, which was investigating a shooting in a Phoenix suburb, released what appeared to be a clear mugshot-style image of a middle-aged man wearing a hoodie and a beanie. The image was released alongside a request for information about a shooting near 143rd and Clarendon avenues. It also carried a disclaimer: "This AI-generated image is based on victim/witness statements and does not depict a real person." The department confirmed that the picture was not a photograph or a traditional composite sketch. According to a report by The Washington Post, officers interviewed a witness, produced a basic sketch from that account, and then used ChatGPT to generate a more lifelike image.

Police departments across the country now use AI tools for tasks ranging from facial recognition searches to administrative work. However, The Washington Post reports that the Goodyear Police Department appears to be among the first to replace a sketch artist's drawing with an AI-produced, photo-like composite. According to the news outlet, experts say relying on AI-generated images could introduce new problems to an already imperfect identification process and may be tested in court. Bryan Schwartz, an associate clinical professor of law at the University of Arizona who studies AI in policing, says he is not aware of any other U.S. departments using AI to create suspect images, indicating that Goodyear may be operating in legally and procedurally untested territory.

But Mike Bonasera, the police officer responsible for the images, says AI-generated images have proven more effective in attracting public attention than traditional sketches. "We're now in a day and age where if we post a pencil drawing, most people are not going to acknowledge it," Bonasera explains.

Earlier this year, Bonasera tested AI by running old sketches through ChatGPT and was struck by how closely the generated images resembled real suspects previously identified. He sought approval from department leadership and the Maricopa County Attorney's Office, later using AI in April to create an image for an attempted kidnapping case. Bonasera says the April image prompted a surge of public tips, which led him to use the same process for the November shooting case. He believes residents -- particularly younger individuals -- respond more to photorealistic AI-generated images than to pencil sketches. "People are so visual, and that's why this works," he says.

He also says generating images with AI helps witnesses describe suspects more precisely. Bonasera creates the images with witnesses present, allowing them to adjust details in real time. The November image shows a surprised expression, which he says the witness repeatedly mentioned.

The new images have not yet led to arrests, and both cases remain open. However, the Goodyear Police Department remains optimistic. "We are hopeful that these new techniques and AI technology will assist in solving more complex cases in the future, here in Arizona and around the country," the department wrote back in April.

[3]

Police Admit They're Using ChatGPT to Generate "Sketches" of Suspects

"We're now in a day and age where if we post a pencil drawing, most people are not going to acknowledge it." AI is no longer just identifying suspected criminals from behind a camera; now it's rendering photorealistic images of their mugs for cops to blast out on social media. Enter ChatGPT, the latest member of the Goodyear Police Department, located on the outskirts of Phoenix. New reporting by the Washington Post revealed that Goodyear cops are using the generative AI tool to pop out photos of suspects in place of pen-and-paper police sketches. "We are hopeful that these new techniques and AI technology will assist in solving more complex cases in the future, here in Arizona and around the country," the Goodyear PD wrote on its social media account when it debuted its first profile. That AI image was meant to help locate a suspect in a kidnapping case, which the department said resulted in a huge influx of tips. Notably, the AI photos haven't led to any arrests. But what's odd about the initiative is the decision behind it. Speaking to WaPo, Mike Bonasera, the sketch artist for the Goodyear PD, said it has a lot to do with social media engagement. "We're now in a day and age where if we post a pencil drawing, most people are not going to acknowledge it," the sketch artist admitted. Basically, the Goodyear PD believes that local residents, especially the younger crowd, are much more likely to interact with a hyper-realistic AI rendering. "People are so visual, and that's why this works," Bonasera said. The Goodyear PD insists their AI renderings aren't "AI fabrications." This, they say, is because the AI images begin as traditional composite drawings -- which are already unreliable -- before they feed them into ChatGPT. That's a dicey contention. When an image generator like ChatGPT spits out a picture of a person, it's drawing on a huge database of photos of real people. 
This means whatever biases were present in the collection of photos -- for example, the ratio of white to Black faces -- will show up in the final image. "That was something we saw early on with some of these generators," Bryan Schwartz, a law professor at the University of Arizona, told WaPo. "That they were really good at creating white faces and not as good at creating some of other races." When we're talking about creating images of real people, that kind of system-wide bias can have devastating results -- especially when cops refuse to acknowledge it. Police departments' reliance on AI facial recognition to track down suspects, for example, has already led to numerous false arrests in cities including Detroit, New York, and Atlanta. Luckily, Goodyear seems to be the only police department in the US using AI to blast biased composites of suspects, or at least admitting to it. Unfortunately, though, cops love their tech -- meaning it might just be a matter of time before we see more of this nationwide.

The Goodyear Police Department in Arizona has become one of the first U.S. law enforcement agencies to use AI-generated, photorealistic images instead of traditional hand-drawn sketches for suspect identification. Despite an increase in public tips, the new approach has yet to lead to any arrests and experts warn about potential AI bias and legal challenges ahead.

Goodyear Police Department Pioneers AI-Generated Suspect Sketches

The Goodyear Police Department in Arizona has begun using ChatGPT to create photorealistic images of suspects, marking what experts believe is a first for U.S. law enforcement. The department has deployed AI-generated sketches twice this year: first in April for an attempted kidnapping case, and again in November for a shooting investigation near 143rd and Clarendon avenues [1][2]. Both cases remain open, and despite generating public attention, the AI-generated suspect sketches have not resulted in any arrests [3].

Mike Bonasera, a forensic artist who has been creating police sketches for about five years, spearheaded this shift toward using AI in the identification process. In a typical year, he produces up to seven composite drawings when police lack clear surveillance images [1]. His process involves interviewing witnesses directly as he generates the images through OpenAI's ChatGPT, allowing them to suggest changes in real time to details like facial features and expressions [2].

From Traditional Hand-Drawn Sketches to Photorealistic Images of Suspects

Bonasera's work with AI began earlier this year when he tested the technology by uploading old hand-drawn sketches into ChatGPT. He was struck by how closely the AI-generated images resembled real suspects who had been previously identified [2]. After seeking approval from department leadership and the Maricopa County Attorney's Office, he received clearance to begin using ChatGPT to generate sketches for new cases [1].

The decision to replace traditional methods stems largely from public engagement concerns. "We're now in a day and age where if we post a pencil drawing, most people are not going to acknowledge it," Bonasera explained [1]. He believes residents, particularly younger individuals, respond more actively to photorealistic images than to pencil sketches. The April image prompted an increase in public tips, convincing him to repeat the process in November [2].

AI Bias and Legal Challenges Loom Over New Technology

Experts have raised significant concerns about the reliability of AI-generated images and their potential to introduce bias into suspect identification. When image generators like ChatGPT create images of people, they draw from massive databases of photos of real people, meaning any biases present in those collections will appear in the final output [3]. Bryan Schwartz, an associate clinical professor of law at the University of Arizona who studies AI in policing, noted that early AI generators "were really good at creating white faces and not as good at creating some of other races" [3].

Source: Futurism

Andrew Ferguson, a law professor at George Washington University, highlighted potential legal challenges ahead. "In court, we all know how drawing works and can evaluate how much reliability to give the human drawn sketch," he wrote. "In court, no one knows how the AI works" [1]. He emphasized that while an AI-generated image might appear more realistic, that doesn't necessarily make it more reliable than traditional methods.

The concerns about AI in law enforcement extend beyond suspect sketches. Police reliance on facial recognition technology has already led to numerous false arrests in cities including Detroit, New York, and Atlanta [3]. Schwartz indicated that he is not aware of any other U.S. law enforcement agency using AI to create suspect images, suggesting Goodyear may be operating in legally and procedurally untested territory [2].

What This Means for Law Enforcement and Witness Accounts

Despite the concerns, the Goodyear Police Department remains optimistic about the technology's potential. "We are hopeful that these new techniques and AI technology will assist in solving more complex cases in the future, here in Arizona and around the country," the department stated [2]. The November image released by the department showed a middle-aged man wearing a hoodie and beanie with a surprised expression, which Bonasera says the witness repeatedly mentioned during the creation process [2].

Source: PetaPixel

Each AI-generated image carries a disclaimer stating: "This AI-generated image is based on victim/witness statements and does not depict a real person" [2]. However, the department's insistence that these aren't "AI fabrications" because they begin as traditional composite drawings from witness accounts has drawn criticism [3]. As other departments watch this experiment unfold, the balance between leveraging technology for public engagement and maintaining accuracy in suspect identification will likely face increasing scrutiny in courtrooms and communities across the nation.

Summarized by Navi
