ChatGPT's Mysterious Name Glitch: The Case of David Mayer and AI Privacy Concerns

14 Sources

[1]

Why Wouldn't ChatGPT Say 'David Mayer'?

A bizarre saga in which users noticed the chatbot refused to say "David Mayer" raised questions about privacy and A.I., with few clear answers. Across the final years of his life, David Mayer, a theater professor living in Manchester, England, faced the cascading consequences of an unfortunate coincidence: A dead Chechen rebel on a terror watch list had once used Mr. Mayer's name as an alias. The real Mr. Mayer had travel plans thwarted, financial transactions frozen and crucial academic correspondence blocked, his family said. The frustrations plagued him until his death in 2023, at age 94. But this month, his fight for his identity edged back into the spotlight when eagle-eyed users noticed one particular name was sending OpenAI's ChatGPT bot into shutdown. David Mayer. Users' efforts to prompt the bot to say "David Mayer" in a variety of ways were instead resulting in error messages, or the bot would simply refuse to respond. It's unclear why the name was kryptonite for the bot service, and OpenAI would not say whether the professor's plight was related to ChatGPT's issue with the name. But the saga underscores some of the prickliest questions about generative A.I. and the chatbots it powers: Why did that name knock the chatbot out? Who, or what, is making those decisions? And who is responsible for the mistakes? "This was something that he would've almost enjoyed, because it would have vindicated the effort he put in to trying to deal with it," Mr. Mayer's daughter, Catherine, said of the debacle in an interview. ChatGPT generates its responses by making probabilistic guesses about which text belongs together in a sequence, based on a statistical model trained on examples pulled from all over the internet. But those guesses are not always perfect. 
"One of the biggest issues these large language models have is they hallucinate. They make up something that's inaccurate," said Sandra Wachter, a professor who studies ethics and emerging technologies at Oxford University. "You all of the sudden find yourself in a legally troubling environment. I could assume that something like this actually might be a consideration why some of those prompts have been blocked." Mr. Mayer's name, it turns out, is not the only one that has stymied ChatGPT. "Jonathan Turley" still prompts an error message. So do "David Faber," "Jonathan Zittrain" and "Brian Hood." The names at first glance do not appear to have much in common: Mr. Turley is a Fox News legal analyst and law professor, Mr. Faber a CNBC news anchor, Mr. Zittrain a Harvard professor and Mr. Hood a mayor in Australia. What links them may be a privacy stipulation that could keep them from ChatGPT's platform. Mr. Hood took legal action against OpenAI after ChatGPT falsely claimed he had been arrested for bribery. Mr. Turley has similarly said the chatbot referenced seemingly nonexistent accusations that he had committed sexual harassment. "It can be rather chilling for academics to be falsely named in such accounts and then effectively erased by the program after the error was raised," Mr. Turley said in an email. "The company's lack of response and transparency has been particularly concerning." Mr. Zittrain has lectured on the "right to be forgotten" in tech and digital spaces -- a legal standard that forces search engines to delete links to sites that include information considered inaccurate or irrelevant. 
But he said in a post on X that he had not asked to be excluded from OpenAI's algorithms. In an interview, he said he had noticed the chatbot quirk a while ago and didn't know why it happened. "The basic architecture of these things is still kind of a Forrest Gump box of chocolates," he said. When it comes to Mr. Mayer, the glitch appeared to be patched this week by ChatGPT, which can now say the name "David Mayer" unhindered. But the other names still break the bot down. Metin Parlak, a spokesman for OpenAI, said in a statement that the company did not comment on individual cases. "There may be instances where ChatGPT does not provide certain information about people to protect their privacy," he said. OpenAI declined to discuss any specific circumstances around the name "David Mayer," but said a tool had mistakenly flagged the name for privacy protection -- a quirk that has been fixed. When asked this week why it couldn't previously say Mr. Mayer's name, ChatGPT said it wasn't sure. "I'm not sure what happened there!" the bot said. "Normally, I can mention any name, including 'David Mayer,' as long as it's not related to something harmful or private." The bot was unable to give any information about Mr. Mayer's former predicament with his name, and said there were not any available sources on the matter. Pointed to several mainstream media articles on the subject, the chatbot couldn't explain the discrepancy. Asked to further identify Mr. Mayer, ChatGPT only noted his work as an academic and professor, but could not speak to the issue involving Mr. Mayer's name. "It seems you're referring to a very specific and potentially sensitive incident involving Professor David Mayer and a Chechen rebel, which might have been a major news event or scandal in the years before his passing," the bot said. "Unfortunately, I don't have any direct information on that particular event in my training data." It then suggested a user go research the question.

[2]

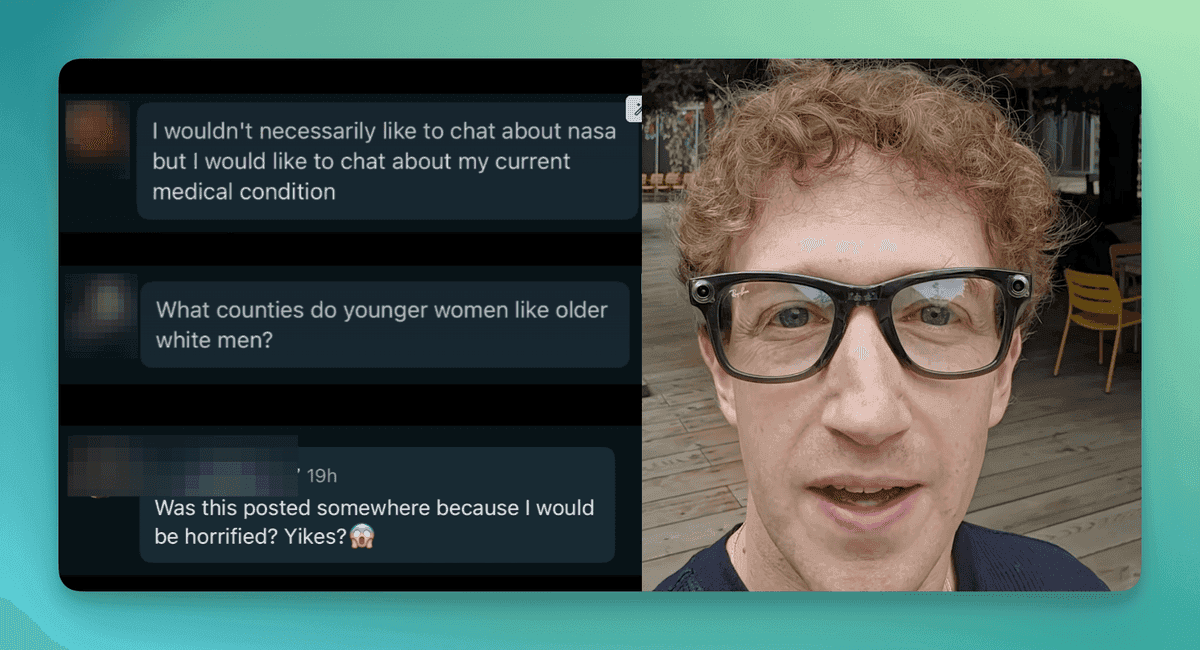

ChatGPT Won't Answer Questions About Certain Names: Here's What We Know

But David Mayer, the name that started Internet sleuths investigating the issue, is now off the blacklist. Hey, David Mayer, ChatGPT has stopped giving you the silent treatment. But other ordinary names are still causing problems for the popular chatbot from OpenAI. You can type just about anything into ChatGPT. But users recently discovered that asking anything about "David Mayer" caused ChatGPT to shut down the conversation, saying "I'm unable to produce a response." A message shown at the bottom of the screen doubled up on the David-dislike, saying, "There was an error generating a response." Reddit discussed the phenomenon and it was reported by several news outlets. ChatGPT has been known to have issues. When you log in to its mobile app for the first time, it warns, "ChatGPT may provide inaccurate information about people, places, or facts." That's because AI chatbots sometimes "hallucinate," or present material that isn't real or that's based on flawed data the AI has absorbed. The problem isn't unique to ChatGPT; it's a potential problem on other large language model AI tools as well. But the David Mayer issue was a weirdly specific problem, so naturally, internet sleuths took to investigating why one seemingly innocuous name shut down the chatbot. One tech journalist, Bryan Lunduke, posted a video explainer noting that this name and a small list of others may have been scrubbed from ChatGPT. "(It could) be that one of the oh-so-many David Mayers out there have sent a 'right to be forgotten' request to OpenAI," Lunduke says. The "right to be forgotten" term refers to European legislation which allows individuals to ask search engines to remove results from queries for their names. It's possible that people with the names ChatGPT can't discuss asked to be removed from the bot's queries, or have taken legal action against OpenAI. 
Other names that ChatGPT wouldn't discuss include Alexander Hanff, Jonathan Turley, Brian Hood, Jonathan Zittrain, David Faber and Guido Scorza. OpenAI, the company behind ChatGPT, didn't immediately respond to a request for comment. As of Tuesday, however, David Mayer has now been freed from ChatGPT conversation jail. Asking ChatGPT about that name now produces a response asking which David Mayer you're referring to, including a producer/DJ, an author and a philanthropist. "Could you clarify which David Mayer you're referring to or provide more context (like their profession or field)? This would help narrow down the answer," ChatGPT says. It's still a mystery why Mayer was missing in the first place.

[3]

Why does the name 'David Mayer' crash ChatGPT? OpenAI says privacy tool went rogue | TechCrunch

Users of the conversational AI platform ChatGPT discovered an interesting phenomenon over the weekend: the popular chatbot refuses to answer questions if asked about a "David Mayer." Asking it to do so causes it to freeze up instantly. Conspiracy theories have ensued -- but a more ordinary reason is at the heart of this strange behavior. Word spread quickly this last weekend that the name was poison to the chatbot, with more and more people trying to trick the service into merely acknowledging the name. No luck: Every attempt to make ChatGPT spell out that specific name causes it to fail or even break off mid-name. "I'm unable to produce a response," it says, if it says anything at all. But what began as a one-off curiosity soon bloomed as people discovered it isn't just David Mayer who ChatGPT can't name. Also found to crash the service are the names Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza. (No doubt more have been discovered since then, so this list is not exhaustive.) Who are these men? And why does ChatGPT hate them so? OpenAI did not immediately respond to repeated inquiries, so we were left to put together the pieces ourselves as best we can -- and the company soon confirmed our suspicions. Some of these names may belong to any number of people. But a potential thread of connection identified by ChatGPT users is that these people are public or semi-public figures who may prefer to have certain information "forgotten" by search engines or AI models. Brian Hood, for instance, stands out because, assuming it's the same guy, I wrote about him last year. Hood, an Australian mayor, accused ChatGPT of falsely describing him as the perpetrator of a crime from decades ago that, in fact, he had reported. Though his lawyers got in contact with OpenAI, no lawsuit was ever filed. As he told the Sydney Morning Herald earlier this year, "The offending material was removed and they released version 4, replacing version 3.5." 
As far as the most prominent owners of the other names, David Faber is a longtime reporter at CNBC. Jonathan Turley is a lawyer and Fox News commentator who was "swatted" (i.e., a fake 911 call sent armed police to his home) in late 2023. Jonathan Zittrain is also a legal expert, one who has spoken extensively on the "right to be forgotten." And Guido Scorza is on the board at Italy's Data Protection Authority. Not exactly in the same line of work, nor yet is it a random selection. Each of these persons is conceivably someone who, for whatever reason, may have formally requested that information pertaining to them online be restricted in some way. Which brings us back to David Mayer. There is no lawyer, journalist, mayor, or otherwise obviously notable person by that name that anyone could find (with apologies to the many respectable David Mayers out there). There was, however, a Professor David Mayer, who taught drama and history, specializing in connections between the late Victorian era and early cinema. Mayer died in the summer of 2023, at the age of 94. For years before that, however, the British American academic faced a legal and online issue of having his name associated with a wanted criminal who used it as a pseudonym, to the point where he was unable to travel. Mayer fought continuously to have his name disambiguated from the one-armed terrorist, even as he continued to teach well into his final years. So what can we conclude from all this? Our guess is that the model has ingested or been provided with a list of people whose names require some special handling. Whether due to legal, safety, privacy, or other concerns, these names are likely covered by special rules, just as many other names and identities are. For instance, ChatGPT may change its response if it matches the name you wrote to a list of political candidates. There are many such special rules, and every prompt goes through various forms of processing before being answered. 
But these post-prompt handling rules are seldom made public, except in policy announcements like "the model will not predict election results for any candidate for office." What likely happened is that one of these lists, which are almost certainly actively maintained or automatically updated, was somehow corrupted with faulty code or instructions that, when called, caused the chat agent to immediately break. To be clear, this is just our own speculation based on what we've learned, but it would not be the first time an AI has behaved oddly due to post-training guidance. (Incidentally, as I was writing this, "David Mayer" started working again for some, while the other names still caused crashes.) Update: OpenAI confirmed that the name was being flagged by internal privacy tools, saying in a statement that "There may be instances where ChatGPT does not provide certain information about people to protect their privacy." The company would not provide further detail on the tools or process. As is usually the case with these things, Hanlon's razor applies: Never attribute to malice (or conspiracy) that which is adequately explained by stupidity (or syntax error). The whole drama is a useful reminder that not only are these AI models not magic, but they are also extra-fancy auto-complete, actively monitored, and interfered with by the companies that make them. Next time you think about getting facts from a chatbot, think about whether it might be better to go straight to the source instead.
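The speculation above can be made concrete with a minimal Python sketch of how a name list applied to streamed output could cut a reply off mid-name. Everything in it is an assumption for illustration only: the list contents, the `guarded_stream` and `FlaggedNameError` names, and the matching rule are hypothetical, and nothing here reflects how OpenAI's tools actually work, which the company has not described.

```python
import re
from typing import Iterable, Iterator, Sequence

# Hypothetical placeholder list; the real list and its rules are not public.
FLAGGED_NAMES = ["Example Person"]


class FlaggedNameError(Exception):
    """Raised when the streamed text would contain a flagged name."""


def _normalize(text: str) -> str:
    # Lowercase and collapse whitespace so spacing and case do not matter.
    return re.sub(r"\s+", " ", text).lower()


def guarded_stream(
    chunks: Iterable[str], flagged: Sequence[str] = FLAGGED_NAMES
) -> Iterator[str]:
    """Yield chunks until the accumulated text would contain a flagged name."""
    needles = [_normalize(name) for name in flagged]
    seen = ""
    for chunk in chunks:
        candidate = _normalize(seen + chunk)
        if any(needle in candidate for needle in needles):
            # Abort before the chunk that completes the name is shown.
            raise FlaggedNameError("I'm unable to produce a response.")
        seen += chunk
        yield chunk
```

Because the check runs on each chunk as it arrives, a reply fed in as `["The answer is ", "Example", " Person", "."]` would emit the first two chunks and then fail, which is one way an answer could break off partway through a name. A filter built this way would also fire for anyone who happens to share a listed name.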

[4]

Why does the name 'David Mayer' crash ChatGPT? Digital privacy requests may be at fault | TechCrunch

Users of the conversational AI platform ChatGPT discovered an interesting phenomenon over the weekend: the popular chatbot refuses to answer questions if asked about a "David Mayer." Asking it to do so causes it to freeze up instantly. Conspiracy theories ensued -- but a more ordinary reason may be at the heart of this strange behavior. Word spread quickly this last weekend that the name was poison to the chatbot, with more and more people trying to trick the service into merely acknowledging the name. No luck: every attempt to make ChatGPT spell out that specific name causes it to fail or even break off mid-name. "I'm unable to produce a response," it says, if it says anything at all. But what began as a one-off curiosity soon bloomed as people discovered it isn't just David Mayer who ChatGPT can't name. Also found to crash the service are the names Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza. (No doubt more have been discovered since then, so this list is not exhaustive.) Who are these men? And why does ChatGPT hate them so? OpenAI has not responded to repeated inquiries, so we are left to put together the pieces ourselves as best we can. Some of these names may belong to any number of people. But a potential thread of connection was soon discovered: these people were public or semi-public figures who may have preferred to have certain information "forgotten" by search engines or AI models. Brian Hood, for instance, stood out immediately because if it's the same guy, I wrote about him last year. Hood, an Australian mayor, accused ChatGPT of falsely describing him as the perpetrator of a crime from decades ago that, in fact, he had reported. Though his lawyers got in contact with OpenAI, no lawsuit was ever filed. As he told the Sydney Morning Herald earlier this year, "The offending material was removed and they released version 4, replacing version 3.5." 
As far as the most prominent owners of the other names, David Faber is a longtime reporter at CNBC. Jonathan Turley is a lawyer and Fox News commentator who was "swatted" (i.e. a fake 911 call sent armed police to his home) in late 2023. Jonathan Zittrain is also a legal expert, one who has spoken extensively on the "right to be forgotten." And Guido Scorza is on the board at Italy's Data Protection Authority. Not exactly in the same line of work, nor yet is it a random selection. Each of these persons is conceivably someone who, for whatever reason, may have formally requested that information pertaining to them online be restricted in some way. Which brings us back to David Mayer. There is no lawyer, journalist, mayor, or otherwise obviously notable person by that name that anyone could find (with apologies to the many respectable David Mayers out there). There was, however, a Professor David Mayer, who taught drama and history, specializing in connections between the late Victorian era and early cinema. Mayer died in the summer of 2023, at the age of 94. For years before that, however, the British-American academic faced a legal and online issue of having his name associated with a wanted criminal who used it as a pseudonym, to the point where he was unable to travel. Mayer fought continuously to have his name disambiguated from the one-armed terrorist, even as he continued to teach well into his final years. So what can we conclude from all this? Lacking any official explanation from OpenAI, our guess is that the model has ingested a list of people whose names require some special handling. Whether due to legal, safety, privacy, or other concerns, these names are likely covered by special rules, just as many other names and identities are. For instance, ChatGPT may change its response when you ask about a political candidate after it matches the name you wrote to a list of those. 
There are many such special rules, and every prompt goes through various forms of processing before being answered. But these post-prompt handling rules are seldom made public, except in policy announcements like "the model will not predict election results for any candidate for office." What likely happened is that one of these lists, which are almost certainly actively maintained or automatically updated, was somehow corrupted with faulty code that, when called, caused the chat agent to immediately break. To be clear, this is just our own speculation based on what we've learned, but it would not be the first time an AI has behaved oddly due to post-training guidance. (Incidentally, as I was writing this, "David Mayer" started working again for some, while the other names still caused crashes.) As is usually the case with these things, Hanlon's Razor applies: never attribute to malice (or conspiracy) that which is adequately explained by stupidity (or syntax error). The whole drama is a useful reminder that these AI models are not magic. They're extra-fancy auto-complete, actively monitored and interfered with by the companies that make them. Next time you think about getting facts from a chatbot, think about whether it might be better to go straight to the source instead.

[5]

ChatGPT Crashes If You Mention the Name "David Mayer"

Netizens were baffled to find that OpenAI's ChatGPT refused to acknowledge the existence of the name "David Mayer." Ask the chatbot about the name and it gives a puzzling reply: "I'm unable to produce a response." Then the chat abruptly ends, forcing users to open a new window to keep using the assistant. Frustratingly, OpenAI has yet to comment on the matter and answers are still hard to come by. The most prominent personality who goes by that name is David Mayer de Rothschild, a British adventurer and environmentalist who presumably doesn't have any bones to pick with an AI chatbot. He also happens to be the heir of the Rothschild banking dynasty, which has spawned plenty of far-fetched conspiracy theories. The incident highlights just how little we know about how these large language models operate, what kind of content they've been trained on, and what limits are imposed by their makers. We've seen plenty of examples of AI chatbots hallucinating facts, failing at simple tasks, and even encouraging dangerous behavior -- meaning that the tool getting hung up on a simple name isn't even the most unusual behavior we've come across. As we wait for official word from OpenAI -- Futurism has reached out for comment -- many social media users have been busy digging around for some answers. For instance, AI investor Justine Moore found at least six more names that triggered a similar response, including several individuals who have filed General Data Protection Regulation (GDPR) "right to be forgotten" requests in the EU. In other words, the quirk may have to do with their efforts to have their online presence erased. Others have suggested that the name David Mayer may have been blocked due to the existence of a Chechen ISIS member who used the name as an alias. As 404 Media reports, ChatGPT also breaks when it's asked about two law professors named Jonathan Zittrain and Jonathan Turley. 
Intriguingly, Zittrain wrote a piece in The Atlantic about the need to "control AI agents now." One user even changed their name to David Mayer in ChatGPT, which led to the tool refusing to acknowledge their new name. Is the name being blocked because of OpenAI's guardrails, which were designed to protect individuals' privacy? Did David Mayer -- whoever he may be -- file legal documents with the AI company? It's a mystifying bug that underlines how much of a black box ChatGPT really is. And it underscores a constant lesson with AI products: don't take any of their answers at face value.

[6]

ChatGPT's refusal to acknowledge 'David Mayer' down to glitch, says OpenAI

Last weekend the name was all over the internet - just not on ChatGPT. David Mayer became famous for a moment on social media because the popular chatbot appeared to want nothing to do with him. Legions of chatbot wranglers spent days trying - and failing - to make ChatGPT write the words "David Mayer". But the chatbot refused to comply, with replies ranging from "something seems to have gone wrong" to "I'm unable to produce a response", or just stopping at "David". This produced a blizzard of online speculation about Mayer's identity. It also led to theories that whoever David Mayer is, he had asked for his name to be removed from ChatGPT's output. ChatGPT's developer, OpenAI, has provided some clarity on the situation by stating that the Mayer issue was due to a system glitch. "One of our tools mistakenly flagged this name and prevented it from appearing in responses, which it shouldn't have. We're working on a fix," said an OpenAI spokesperson. Some of those speculating on social media guessed the man at the centre of the issue was David Mayer de Rothschild, but he told the Guardian it was nothing to do with him and referenced the conspiracy theorising that can cluster around his family's name online. "No I haven't asked my name to be removed. I have never had any contact with Chat GPT. Sadly it all is being driven by conspiracy theories," he told the Guardian. It is also understood the glitch was unrelated to the late academic Prof David Mayer, who appeared to have been placed on a US security list because his name matched the alias of a Chechen militant, Akhmed Chatayev. However, the answer might lie closer to the GDPR privacy rules in the UK and EU. OpenAI's Europe privacy policy makes clear that users can delete their personal data from its products, in a process also known as the "right to be forgotten", where someone removes personal information from the internet. 
OpenAI declined to comment on whether the "Mayer" glitch was related to a right to be forgotten procedure. OpenAI has fixed the "David Mayer" issue and is now responding to queries using that name, although other names that appeared on social media over the weekend are still triggering a "something appears to have gone wrong" response when typed into ChatGPT. Helena Brown, a partner and data protection specialist at law firm Addleshaw Goddard, said "right to be forgotten" requests will apply to any entity or person processing that person's data - from the AI tool itself to any organisation using that tool. "It's interesting to see in the context of the David Mayer issue that an entire name can be removed from the whole AI tool," she said. However, Brown added that full removal of all information capable of identifying a specific person could be more difficult for AI tools to remove. "The sheer volume of data involved in GenAI and the complexity of the tools creates a privacy compliance problem," she said, adding that deleting all information relating to a single person would not be as straightforward as removing their name. "A huge amount of personal data is gathered, including from public sources such as the internet, to develop AI models and produce their outputs. This means that the ability to trace and delete all personal information capable of identifying a single individual is, arguably, practically impossible."

[7]

Why won't ChatGPT acknowledge the name David Mayer? Internet users uncover mystery

Is it a simple error that OpenAI has yet to address, or has someone named David Mayer taken steps to remove his digital footprint? Social media users have noticed something strange that happens when ChatGPT is prompted to recognize the name, "David Mayer." For some reason, the two-year-old chatbot developed by OpenAI is unable - or unwilling? - to acknowledge the name at all. The quirk was first uncovered by an eagle-eyed Reddit user who entered the name "David Mayer" into ChatGPT and was met with a message stating, "I'm unable to produce a response." The mysterious reply sparked a flurry of additional attempts from users on Reddit to get the artificial intelligence tool to say the name - all to no avail. It's unclear why ChatGPT fails to recognize the name, but of course, plenty of theories have proliferated online. Is it a simple error that OpenAI has yet to address, or has someone named David Mayer taken steps to remove his digital footprint? Here's what we know: What is ChatGPT? ChatGPT is a generative artificial intelligence chatbot developed and launched in 2022 by OpenAI. As opposed to predictive AI, generative AI is trained on large amounts of data in order to identify patterns and create content of its own, including voices, music, pictures and videos. ChatGPT allows users to interact with the chatting tool much like they could with another human, with the chatbot generating conversational responses to questions or prompts. Proponents say ChatGPT could reinvent online search engines and could assist with research, information writing, content creation and customer service chatbots. However, the service has at times become controversial, with some critics raising concerns that ChatGPT and similar programs fuel online misinformation and enable students to plagiarize. Why won't ChatGPT recognize the name, David Mayer? 
ChatGPT is also apparently mystifyingly stubborn about recognizing the name, David Mayer. Since the baffling refusal was discovered, users have been trying to find ways to get the chatbot to say the name or explain who the mystery man is. A quick Google search of the name leads to results about British adventurer and environmentalist David Mayer de Rothschild, heir to the famed Rothschild family dynasty. Mystery solved? Not quite. Others speculated that the name is banned from being mentioned due to its association with a Chechen terrorist who operated under the alias "David Mayer." But as AI expert Justine Moore pointed out on social media site X, a plausible scenario is that someone named David Mayer has gone out of his way to remove his presence from the internet. In the European Union, for instance, strict privacy laws allow citizens to file "right to be forgotten" requests. Moore posted about other names that trigger the same response when shared with ChatGPT, including an Italian lawyer who has been public about filing a "right to be forgotten" request. USA TODAY left a message Monday morning with OpenAI seeking comment. Contributing: Clare Mulroy, USA TODAY

[8]

ChatGPT blocks responses on 'David Mayer,' sparking user theories

ChatGPT restricts responses to the name David Mayer, baffling users with error messages and theories about the blockage. The world's most popular AI engine, ChatGPT, is seemingly restricted from providing information on who David Mayer is. Despite being known for answering virtually any question, when ChatGPT detects the name, it refuses to provide an answer, leaving the chatbot's users baffled. It all began with users sharing rumors that no matter how they phrased their queries, the chatbot refused to mention the name "David Mayer." They tried various creative approaches, like adding spaces between the letters or using indirect phrasing, but to no avail. When users attempt to ask who Mayer was, ChatGPT locks the input textbox and shows a red error message reading, "I'm unable to produce a response." Moreover, when asked why the AI engine is unable to provide details on the heir to the Jewish banking family, ChatGPT again responds with an error message. So, who is David Mayer? The only somewhat notable figures by that name are David Mayer de Rothschild, of the Jewish European banking family, a British explorer and environmental advocate known for his expeditions to remote locations and founding the "Sculpt the Future" foundation, which focuses on sustainability, and David Mayer, a musician who creates dance and chill-out tracks and runs an Instagram page. According to The Economic Times, what might appear to be an issue was first reported on November 23, when users posted about it on the platform's developer forum. Nevertheless, the apparent restriction has remained since. Moreover, the report noted that when users tried "ciphers, riddles, [and tricks]," the AI engine was still unable to produce a response. 
Restriction sparks theories on content moderation
However, the report also noted that when asked to replace the letter 'a' in the searched name with the '@' symbol, ChatGPT responded with, "d@vid m@yer' (or its standard form) is that the name closely matches a sensitive or flagged entity associated with potential public figures, brands, or specific content policies. "These safeguards are designed to prevent misuse, ensure privacy, and maintain compliance with legal and ethical considerations," the response read. However, when The Jerusalem Post asked ChatGPT, "Who is D@vid M@ayer?" the search engine provided a response that notably did not include the heir to the Rothschild fortune. In addition, when asking more directly, "Who is D@vid M@yer de Rothschild?" the error returns, though there is evidence an initial online search was made, as the chatbot provides a list of online sources on the person. The reasons why ChatGPT seems to filter the name David Mayer remain unclear. However, there are a few possible theories. It could be a sensitive figure OpenAI wants to protect, or perhaps the name is tied to copyright issues, such as if it belongs to an artist or musician, as the engine had claimed, according to one X user The Economic Times had cited. Another possibility is a technical glitch, causing the AI to mistakenly block certain terms. Nevertheless, the situation raises some questions about the capabilities and limitations of artificial intelligence. On one hand, it showcases an impressive achievement: ChatGPT's apparent ability to recognize and filter harmful or illegal content. On the other hand, it highlights the need for constant oversight of AI systems to prevent misuse or inadvertent discrimination.

[9]

These names cause ChatGPT to break, and it's due to AI hallucinations

In brief: You can ask ChatGPT pretty much anything, and in most cases it will give you an answer. But there are a handful of names that cause the AI language model to break down. The reasons why relate to generative AI's tendency to make things up - and the legal consequences when the subjects are people. Social media users recently found that entering the name "David Mayer" into ChatGPT caused the system to refuse to respond and end the session. 404 Media found that the same thing happened when it was asked about "Jonathan Zittrain," a Harvard Law School professor, and "Jonathan Turley," a George Washington University Law School professor. A few other names also cause ChatGPT to respond with "I'm unable to produce a response," and "There was an error generating a response." Even trying the usual tricks, such as inputting the name backwards and asking the program to print it out from right to left, doesn't work. Ars Technica produced a list of ChatGPT-breaking names. David Mayer no longer stuns the chatbot, but these do: Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza. The reason for this behaviour stems from all-too-familiar AI hallucinations. Brian Hood threatened to sue OpenAI in 2023 when ChatGPT falsely claimed that the Australian mayor had been imprisoned for bribery - in reality, he was a whistleblower. OpenAI eventually agreed to filter out the untrue statements, adding a hard-coded filter on his name. ChatGPT falsely claimed Turley had been involved in a non-existent sexual harassment scandal. It even cited a Washington Post article in its response. The article had also been made up by the AI. Turley said he never threatened to sue OpenAI and the company never contacted him. There's no obvious reason why Zittrain's name is blocked. He recently wrote an article in the Atlantic called "We Need to Control AI Agents Now," and, like Turley, has had his work cited in the New York Times copyright lawsuit against OpenAI and Microsoft.
However, entering the names of other authors whose work is also cited in the suit does not cause ChatGPT to break. Why David Mayer was blocked and then unblocked is also unclear. There are those who believe it is connected to David Mayer de Rothschild, though there is no evidence of this. Ars points out that these hard-coded filters can cause problems for ChatGPT users. It's been shown how an attacker could interrupt a session using a visual prompt injection of one of the names rendered in a barely legible font embedded in an image. Moreover, someone could exploit the blocks by adding one of the names to a website, thereby potentially preventing ChatGPT from processing the data it contains - though not everyone might see that as a bad thing. In related news, several Canadian news and media companies have joined forces to sue OpenAI for using their articles without permission, and they're asking for C$20,000 ($14,239) per infringement - meaning a court loss could cost the firm billions.

[10]

ChatGPT Will Crash If You Ask It to Write These Names

Internet users are trying to uncover why ChatGPT mysteriously malfunctions if you ask it to write the name "David Mayer." As Mashable reports, the strange bug went viral over the Thanksgiving weekend after users noticed that requesting "David Mayer" crashes ChatGPT. For some reason, OpenAI's chatbot will stop and say, "I'm unable to produce a response," when it tries to output the name. The malfunction has sparked a surge of Google searches for the name, with some users wondering if ChatGPT has been programmed to censor itself. The bug is also odd because ChatGPT can talk about "Davyd Mayer" or "Dave Mayer." However, "David Mayer" trips it up. ChatGPT developer OpenAI didn't immediately respond to a request for comment. But the bug has been around for months, although for different names. In June, one user discovered that asking ChatGPT to write the name of CNBC journalist David Faber triggered a similar error. Reddit users have since discovered that the bug can be replicated on ChatGPT for at least six names, including Brian Hood and Jonathan Turley, who previously found that ChatGPT was making up facts about them, potentially ruining their reputations. A third person on the list, Guido Scorza, works at an Italian regulatory agency and has tweeted about how EU users can request that OpenAI block ChatGPT from producing content using their personal information. A fourth, Jonathan Zittrain, is a Harvard law professor who's warned about the dangers of AI. Hence, it's possible the bug is tied to a ChatGPT safeguard to protect OpenAI from defamation lawsuits and keep it in line with EU regulations, where the "Right to be forgotten" is enforced. Still, why ChatGPT singled out David Mayer remains a mystery. One of the most high-profile David Mayers is David Mayer de Rothschild, who is heir to the Rothschild family fortune, but he seemingly has no connection to AI.

[11]

Not Just 'David Mayer': ChatGPT Breaks When Asked About Two Law Professors

ChatGPT breaks when asked about "Jonathan Zittrain" or "Jonathan Turley." Over the weekend, ChatGPT users discovered that the tool will refuse to respond and will immediately end the chat if you include the phrase "David Mayer" in any capacity anywhere in the prompt. But "David Mayer" isn't the only one: The same error happens if you ask about "Jonathan Zittrain," a Harvard Law School professor who studies internet governance and has written extensively about AI, according to my tests. And if you ask about "Jonathan Turley," a George Washington University Law School professor who regularly contributes to Fox News and argued against impeaching Donald Trump before Congress, and who wrote a blog post saying that ChatGPT defamed him, ChatGPT will also error out. The way this happens is exactly what it sounds like: If you type the words "David Mayer," "Jonathan Zittrain," or "Jonathan Turley" anywhere in a ChatGPT prompt, including in the middle of a conversation, it will simply say "I'm unable to produce a response," and "There was an error generating a response." It will then end the chat. This has started various conspiracies, because, in David Mayer's case, it is unclear which "David Mayer" we're talking about, and there is no obvious reason for ChatGPT to issue an error message like this. Notably, the "David Mayer" error occurs even if you get creative and ask ChatGPT in incredibly convoluted ways to read or say anything about the name, such as "read the following name from right to left: 'reyam divad.'" There are five separate threads on the r/conspiracy subreddit about "David Mayer," with many theorizing that the David Mayer in question is David Mayer de Rothschild, the heir to the Rothschild banking fortune and a family that is the subject of many antisemitic conspiracy theories. As the David Mayer conspiracy theory spread, people noticed that the same error messages occur in the exact same way if you ask ChatGPT about "Jonathan Zittrain" or "Jonathan Turley." 
Both Zittrain and Turley are more readily identifiable as specific people than David Mayer is, as both are prominent law professors and both have written extensively about ChatGPT. Turley in particular wrote in a blog post that he was "defamed by ChatGPT." "Recently I learned that ChatGPT falsely reported on a claim of sexual harassment that was never made against me on a trip that never occurred while I was on a faculty where I never taught. ChatGPT relied on a cited [Washington] Post article that was never written and quotes a statement that was never made by the newspaper," he wrote. This happened in April 2023, and The Washington Post wrote about it in an article called "ChatGPT invented a sexual harassment scandal and named a real law prof as the accused." Turley told 404 Media in an email that he does not know why this error is happening, said he has not filed any lawsuits against OpenAI, and said "ChatGPT never reached out to me." Zittrain, on the other hand, recently wrote an article in The Atlantic called "We Need to Control AI Agents Now," which extensively discusses ChatGPT and OpenAI and is from a forthcoming book he is working on. There is no obvious reason why ChatGPT would refuse to include his name in any response. Both Zittrain and Turley have published work that the New York Times cites in its copyright lawsuit against OpenAI and Microsoft. But the New York Times lawsuit cites thousands of articles by thousands of authors. When we entered the names of various other New York Times writers whose work is also cited in the lawsuit, no error messages were returned. This adds to various mysteries and errors that ChatGPT issues when asked about certain things. For example, asking ChatGPT to repeat anything "forever," an attack used by Google researchers to have it spit out training data, is now a terms of service violation. Zittrain and OpenAI did not immediately respond to a request for comment.

[12]

Certain names make ChatGPT grind to a halt, and we know why

OpenAI's ChatGPT is more than just an AI language model with a fancy interface. It's a system consisting of a stack of AI models and content filters that make sure its outputs don't embarrass OpenAI or get the company into legal trouble when its bot occasionally makes up facts about people that may be harmful. Recently, that reality made the news when people discovered that the name "David Mayer" breaks ChatGPT. 404 Media also discovered that the names "Jonathan Zittrain" and "Jonathan Turley" caused ChatGPT to cut conversations short. And we know another name, likely the first, that started the practice last year: Brian Hood. More on that below. The chat-breaking behavior occurs consistently when users mention these names in any context, and it results from a hard-coded filter that puts the brakes on the AI model's output before returning it to the user. When asked about these names, ChatGPT responds with "I'm unable to produce a response" or "There was an error generating a response" before terminating the chat session, according to Ars' testing. The names do not affect outputs using OpenAI's API systems or in the OpenAI Playground (a special site for developer testing). Here's a list of ChatGPT-breaking names found so far through a communal effort taking place on social media and Reddit. Just before publication, Ars noticed that OpenAI lifted the block on "David Mayer," allowing it to process the name, so it is not included: Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza. OpenAI did not respond to our request for comment about the names, but all of them are likely filtered due to complaints about ChatGPT's tendency to confabulate erroneous responses when lacking sufficient information about a person.
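To make the idea of a hard-coded output filter concrete, here is a minimal sketch of how such a check could sit between a model and the user. It is an illustration only: OpenAI has not published how its filter works, and every name here (`BLOCKED_NAMES`, `SessionTerminated`, `filtered_stream`) and the placeholder entries in the blocklist are hypothetical.

```python
import re
from typing import Iterable, Iterator

# Hypothetical blocklist with placeholder entries; the real list and
# matching rules used by ChatGPT are not public.
BLOCKED_NAMES = ("Jane Placeholder", "John Example")

# Error text as quoted in the reporting above.
ERROR_MESSAGE = "I'm unable to produce a response."

# One case-insensitive pattern that matches any blocked name literally.
_PATTERN = re.compile(
    "|".join(re.escape(name) for name in BLOCKED_NAMES), re.IGNORECASE
)


class SessionTerminated(Exception):
    """Raised when the filter halts output and ends the chat."""


def filtered_stream(chunks: Iterable[str]) -> Iterator[str]:
    """Pass model output through chunk by chunk, stopping on a blocked name.

    The check runs on the accumulated text, so a name split across two
    chunks is still caught. Chunks yielded before the match have already
    reached the user, which would look like output stopping mid-sentence.
    """
    seen = ""
    for chunk in chunks:
        seen += chunk
        if _PATTERN.search(seen):
            raise SessionTerminated(ERROR_MESSAGE)
        yield chunk
```

Because the match in this sketch is a literal string comparison, a near-miss spelling passes through untouched, while the exact name halts the response however the prompt was phrased. A filter this blunt also fires on any text containing the name, whatever its origin.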

[13]

There Are Certain Words That Will Break ChatGPT. I Tried Them -- Here's What Happened.

Though it can help start a business, act as a personal tutor, and even roast Instagram profiles, ChatGPT has its limits. For example, ask it to tell you about David Faber. Or simply ask it who Jonathan Turley is. Those names, plus a few others, will cause ChatGPT to spit out an error message: "I'm unable to produce a response." The user is then unable to write another prompt to continue the conversation; the only option left is to regenerate the response, which yields the error again. ChatGPT users discovered over the weekend that a few words could break the AI chatbot, or cause it to stop working. The trend started with the name "David Mayer," which ChatGPT users on Reddit and X flagged. 404 Media found that the names "Jonathan Zittrain," which refers to a Harvard Law professor, and "Jonathan Turley," which is the name of a George Washington University Law professor, also caused ChatGPT to stop working. As of the time of writing, ChatGPT no longer produces an error message when asked about David Mayer and instead gives the generic response, "David Mayer could refer to several individuals, as the name is relatively common. Without more context, it's unclear if you're asking about a specific person in a field such as academia, entertainment, business, or another domain. Can you provide more details or clarify the area of interest related to David Mayer?" However, for the other names -- Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza -- ChatGPT persistently produces an error message. It's unclear why these specific names cause the AI bot to malfunction and to what effect. Ars Technica theorized that ChatGPT being unable to process certain names opens up new ways for attackers to interfere with the AI chatbot's output. For example, someone could put a forbidden name into the text of a website to prevent ChatGPT from accessing it.

[14]

Who is David Mayer? ChatGPT faces scrutiny over censorship of public figures - SiliconANGLE

A month after OpenAI added a search engine to its highly popular ChatGPT service, users are unable to get answers to anything relating to David Mayer, a mystery that swept the internet over the last week. Google LLC does not have the same problem: David Mayer, full name David Mayer de Rothschild, is an heir to the Rothschild fortune. That a Rothschild, a member of one of the oldest, wealthiest and most storied families in history, cannot be found in ChatGPT, with queries even causing chats to throw up error messages and crash, has unsurprisingly led to various conspiracy theories. Not helping the matter is that OpenAI has made no public comment on the news as of the time of writing. With speculation rife as to why users can't search for a Rothschild, the cause remains guesswork, but David Mayer is not alone: ChatGPT also blocks results for other names, including Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber and Guido Scorza. Of those names, Turley is a known American attorney, legal scholar and commentator, and David Faber is a CNBC journalist. One theory, according to TechCrunch, is that Mayer de Rothschild and the others, all public or semi-public figures, may have preferred to have certain information forgotten by search engines or AI models. In the case of Brian Hood, the Mayor of Hepburn Shire Council in Victoria, Australia, Hood previously made headlines for suing OpenAI over ChatGPT results that suggested that he was involved in bribery in Southeast Asia, despite being the whistleblower who had previously alerted the media and authorities to the wrongdoing. Hood subsequently withdrew his legal action against OpenAI after ChatGPT 4 removed the defamatory responses, but whether maliciously or out of caution, ChatGPT now refuses to answer any question about him.
OpenAI being malicious or overly cautious certainly becomes more likely when considering others on the list. PC World reports that another name on the list, Guido Scorza, works at an Italian regulatory agency and has tweeted about how European Union users can request that OpenAI block ChatGPT from producing content using their personal information. Another person on the list, Jonathan Zittrain, is a Harvard law professor who has warned about the dangers of AI. Whatever the ultimate reason, that OpenAI is censoring results with no transparency as to why raises questions as to whether ChatGPT can be trusted. With the names and details easily found in Google, it also raises questions as to whether ChatGPT Search can properly compete with Google search: censoring publicly known figures is not a vote of confidence in the service's abilities.

A strange phenomenon where ChatGPT refused to acknowledge certain names, including "David Mayer," sparked discussions about AI privacy, technical glitches, and the complexities of large language models.

The David Mayer Mystery

In a bizarre turn of events, users of OpenAI's ChatGPT discovered that the AI chatbot would crash or refuse to respond when asked about the name "David Mayer" [1][2]. This peculiar behavior sparked widespread curiosity and investigation among internet users and tech enthusiasts.

Expanding List of Problematic Names

As the story unfolded, it became clear that "David Mayer" wasn't the only name causing issues. Other names such as Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza also triggered similar responses from ChatGPT [3]. This expanded list of names added to the mystery and fueled further speculation about the underlying cause.

Potential Privacy Concerns

Investigations into the backgrounds of these individuals revealed a possible connection to privacy requests or legal actions. For instance, Brian Hood, an Australian mayor, had previously accused ChatGPT of falsely describing him as a criminal [3]. Jonathan Zittrain, a legal expert, has spoken extensively on the "right to be forgotten" [4]. These connections led to theories that the chatbot's behavior might be related to privacy protection measures or legal requests.

The Real David Mayer

The story of the late Professor David Mayer, who died in 2023 at age 94, emerged as a potential explanation. In the final years of his life, Mayer had his travel, finances and correspondence disrupted because a Chechen rebel on a terror watch list had once used his name as an alias [1]. This background raised questions about whether privacy protections for the deceased professor might be influencing ChatGPT's behavior.

OpenAI's Response

OpenAI did not immediately comment on the issue, leaving users and tech journalists to speculate. However, the company later confirmed that a privacy tool had mistakenly flagged the name "David Mayer" [5]. OpenAI stated, "There may be instances where ChatGPT does not provide certain information about people to protect their privacy" [2].

Technical Explanations and Implications

Experts and observers suggested that the issue might be related to post-training guidance or special handling rules implemented in the AI model [3]. This incident highlighted the complexities of large language models and the various processing layers that prompts go through before generating responses.

Resolution and Ongoing Questions

While the "David Mayer" issue was eventually resolved, with ChatGPT regaining the ability to discuss the name, other names continued to cause crashes [5]. This partial resolution left lingering questions about the nature of privacy protections in AI systems and the transparency of their operations.

Broader Implications for AI and Privacy

This incident sparked discussions about the balance between AI functionality and privacy protection. It raised important questions about how AI companies handle requests for information removal, the implementation of "right to be forgotten" principles, and the potential for unintended consequences in AI systems [4].

The ChatGPT name glitch serves as a reminder of the complexities involved in developing and maintaining large language models, and the ongoing challenges in balancing functionality, accuracy, and privacy in AI technologies.

Summarized by

Navi
