CISA's Acting Director Uploaded Sensitive Files to ChatGPT, Triggering Federal Investigation

2 Sources


[1]

Trump's cyber chief fed sensitive US documents into public ChatGPT

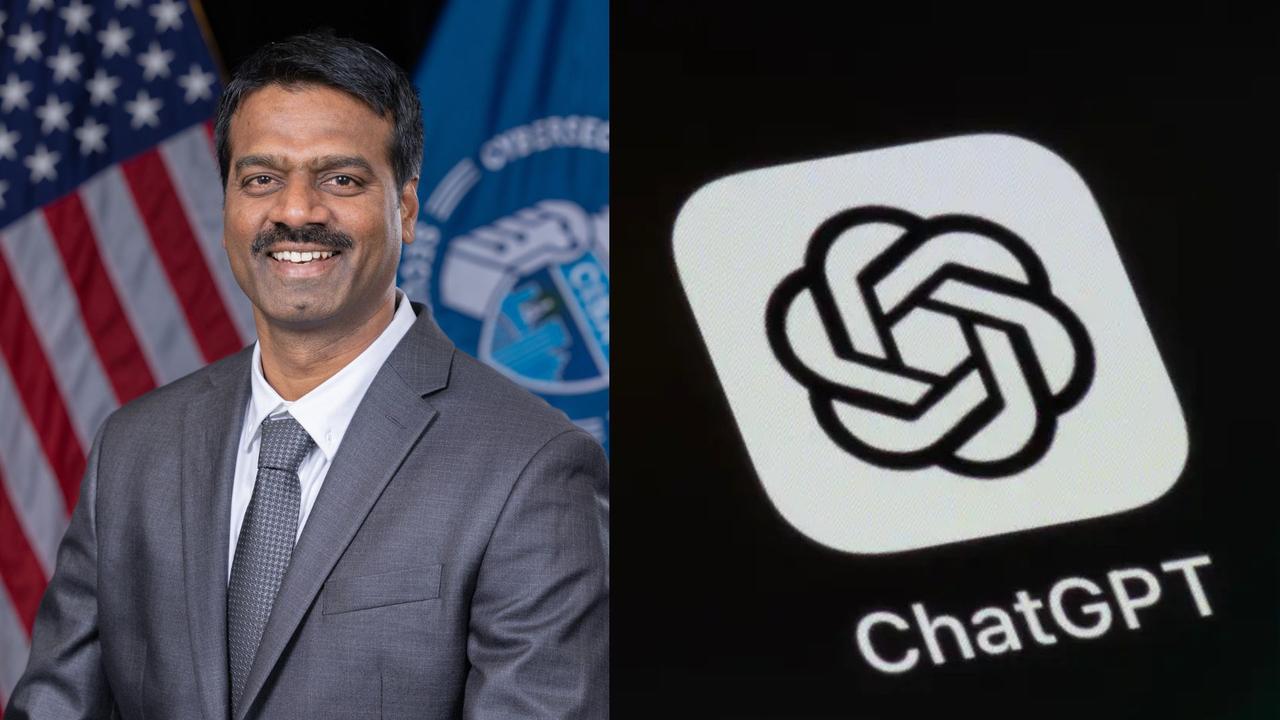

The acting head of the U.S. Cybersecurity and Infrastructure Security Agency uploaded sensitive government documents into a public version of ChatGPT last summer, triggering internal security alarms, according to multiple Department of Homeland Security officials. POLITICO first reported the incident, which involved Dr. Madhu Gottumukkala, CISA's interim director and the agency's senior-most political official. The uploads raised concerns inside DHS because CISA oversees federal cyber defense against foreign adversaries, including Russia and China.

None of the documents were classified. However, officials said the files carried "for official use only" markings and were not meant for public release. Four DHS officials said Gottumukkala uploaded contracting documents into ChatGPT during the summer. Automated cybersecurity sensors flagged the activity in August, including multiple alerts during the first week alone. The officials spoke on condition of anonymity due to fear of retaliation.

The incident stood out because Gottumukkala had requested special approval to use ChatGPT shortly after joining CISA in May. At the time, DHS blocked the tool for most employees. "He forced CISA's hand into making them give him ChatGPT, and then he abused it," one official said.

The files went into a public version of ChatGPT. Any data uploaded into that version becomes accessible to OpenAI and may be used to train future responses. OpenAI has said the platform has more than 700 million total active users. Other AI tools approved for DHS staff operate differently: DHS's internal chatbot, DHSChat, prevents information from leaving federal networks.

Senior DHS leaders launched an internal review after detecting the uploads to assess whether the exposure harmed government security. It remains unclear what the review concluded. Gottumukkala later met with senior DHS officials to review what he uploaded, two officials said. The discussions involved DHS's then-acting general counsel, Joseph Mazzara, and Chief Information Officer Antoine McCord. CISA officials also briefed Gottumukkala in August; those meetings included CIO Robert Costello and Chief Counsel Spencer Fisher, according to the officials.

DHS policy requires agencies to investigate any exposure of sensitive material and to determine whether disciplinary action applies. Options can include retraining, formal warnings, or clearance reviews. Mazzara and Costello did not respond to requests for comment. McCord and Fisher could not be reached.

CISA disputed parts of POLITICO's reporting in an emailed statement. Gottumukkala "was granted permission to use ChatGPT with DHS controls in place," said Marci McCarthy, CISA's director of public affairs. She added that "this use was short-term and limited." McCarthy also said Gottumukkala "last used ChatGPT in mid-July 2025 under an authorized temporary exception granted to some employees." She added that "CISA's security posture remains to block access to ChatGPT by default unless granted an exception." McCarthy said the agency remains committed to "harnessing AI and other cutting-edge technologies to drive government modernization and deliver on" Trump's executive order removing barriers to U.S. leadership in AI.

The ChatGPT incident adds to recent turmoil inside CISA. This summer, six career staff members went on leave after Gottumukkala failed a counterintelligence polygraph he requested. DHS later described the test as "unsanctioned." Asked about the failure during congressional testimony, Gottumukkala said he did not "accept the premise of that characterization." Last week, Gottumukkala also attempted to remove CISA CIO Costello, but other political appointees intervened and blocked the move. Gottumukkala has led CISA in an acting role since May. President Donald Trump's nominee to permanently lead the agency, Sean Plankey, remains unconfirmed.

[2]

CISA ChatGPT leak: Acting director Madhu Gottumukkala investigation explained

The core principle of modern cybersecurity is "Zero Trust": never trust, always verify. However, a recent data exposure incident involving Madhu Gottumukkala, the Acting Director of the Cybersecurity and Infrastructure Security Agency (CISA), has highlighted a massive blind spot in federal AI governance: the human element. Gottumukkala is currently under investigation by the Department of Homeland Security (DHS) after CISA's internal sensors flagged multiple uploads of sensitive "For Official Use Only" (FOUO) documents to the public, commercial version of ChatGPT.

Why public LLMs are a risk

The incident began when Gottumukkala bypassed standard DHS blocks on generative AI tools by requesting a "special temporary exception" in May 2025. While CISA has since stated this was a controlled, limited trial, the technical fallout remains significant. When a user interacts with the public version of ChatGPT, the data isn't just processed; it is often assimilated. By default, OpenAI's commercial models use user prompts and uploaded documents to:

For CISA, which handles the blueprint of the nation's cyber defense, feeding contracting documents into a public cloud meant that proprietary government data effectively left the "federal perimeter" and entered a third-party server with no zero-retention guarantee.

Sensors vs. privilege

Perhaps the most striking technical detail is that CISA's own automated security sensors worked exactly as intended. In the first week of August 2025, these systems, designed to detect the exfiltration of sensitive strings and metadata, triggered multiple high-priority alerts. These sensors are calibrated to stop "Shadow AI" (the unauthorized use of AI tools). The irony here is that the person responsible for overseeing the nation's response to such alerts was the one triggering them. This has sparked a DHS-led "damage assessment" to determine whether the FOUO material, which included internal CISA infrastructure details, has been permanently ingested into OpenAI's training logs.

The government alternative: DHSChat

The investigation also centers on why Gottumukkala eschewed DHSChat. Unlike the public version of ChatGPT, DHSChat is a "sandboxed" instance of an LLM. It offers:

* Data Sovereignty: Data never leaves the government-controlled Azure or AWS cloud environment.
* Zero-Training Policy: Prompts are never used to train the base model.
* Audit Logging: Every interaction is logged and searchable for FOIA and compliance purposes.

While the investigation is technical, it follows a period of administrative tension for Gottumukkala. The leak adds to existing scrutiny over his recent failed counterintelligence polygraph and the subsequent administrative leave of career staffers involved in his security vetting.

For the tech industry, the CISA leak is a landmark case study. If the head of the world's most sophisticated cyber defense agency can fall victim to the convenience of "copy-paste" AI, it proves that AI security policy is only as strong as its most privileged user.


Dr. Madhu Gottumukkala, acting director of CISA, uploaded sensitive government documents into the public version of ChatGPT last summer, triggering automated security alerts. The incident prompted a Department of Homeland Security investigation into potential data exposure and raises critical questions about federal AI governance and the vulnerability of the human element in cybersecurity.

Acting Director of CISA Triggers Security Breach with ChatGPT Uploads

Dr. Madhu Gottumukkala, the acting director of CISA, uploaded sensitive government documents into the public version of ChatGPT during the summer of 2025, setting off internal security alarms at the Department of Homeland Security (DHS). The incident, first reported by POLITICO, involved contracting documents marked "For Official Use Only" (FOUO) that were not intended for public release [1]. While none of the files were classified, the exposure is particularly concerning given that CISA oversees federal cyber defense against foreign adversaries, including Russia and China.

Source: Interesting Engineering

Automated cybersecurity sensors flagged the activity in August, with multiple alerts triggered during the first week alone. According to four DHS officials, who spoke anonymously due to fear of retaliation, Gottumukkala had requested special approval to use ChatGPT shortly after joining CISA in May, at a time when DHS blocked the tool for most employees [1]. "He forced CISA's hand into making them give him ChatGPT, and then he abused it," one official stated.

Why Public Large Language Models Pose Critical Security Risks

The technical implications of this ChatGPT leak extend far beyond a simple policy violation. Data uploaded to the public version of ChatGPT becomes accessible to OpenAI and may be used to train future responses, on a platform that OpenAI says has more than 700 million total active users [1]. Any sensitive information fed into the system therefore effectively leaves the federal perimeter and enters third-party servers with no zero-retention guarantee [2].

This stands in stark contrast to the AI tools DHS has approved for its staff. DHSChat, the department's internal chatbot, operates with data sovereignty protections that prevent information from leaving federal networks. The sandboxed instance follows a zero-training policy, meaning prompts are never used to train the base model, and includes comprehensive audit logging for compliance purposes [2]. The question remains: why did the acting director of CISA bypass this secure alternative?

Source: Digit

Federal Investigation and Damage Assessment Underway

Senior DHS leaders launched an internal review after detecting the uploads, assessing whether the exposure harmed government security. Gottumukkala met with senior DHS officials to review what he uploaded, including discussions with DHS's then-acting general counsel Joseph Mazzara and Chief Information Officer Antoine McCord. CISA officials also briefed Gottumukkala in August, in meetings involving CIO Robert Costello and Chief Counsel Spencer Fisher [1].

DHS policy requires agencies to investigate any exposure of sensitive material and determine whether disciplinary action applies. Options can include retraining, formal warnings, or clearance reviews. However, it remains unclear what the review concluded. CISA disputed parts of the reporting, with Director of Public Affairs Marci McCarthy stating that Gottumukkala "was granted permission to use ChatGPT with DHS controls in place" and that "this use was short-term and limited." She added that he "last used ChatGPT in mid-July 2025 under an authorized temporary exception granted to some employees" [1].

Human Element Vulnerability Exposes Gaps in AI Security Policy

The incident highlights a massive blind spot in federal AI governance: the vulnerability of the human element. Modern cybersecurity operates on Zero Trust principles (never trust, always verify). Yet CISA's own automated security sensors, designed to detect Shadow AI and the exfiltration of sensitive data, were triggered by the agency's most senior political official [2]. The irony is striking: the person responsible for overseeing the nation's response to such alerts was the one triggering them.

For the tech industry and government agencies alike, this serves as a critical case study. If the head of the world's most sophisticated cyber defense agency can fall victim to the convenience of public AI tools, AI security policy is only as strong as its most privileged user. The DHS-led damage assessment now focuses on determining whether FOUO material, which reportedly included internal CISA infrastructure details, has been permanently ingested into OpenAI's training logs [2].

Growing Turmoil at Cybersecurity's Highest Levels

This ChatGPT leak adds to mounting turmoil inside CISA under Gottumukkala's leadership. Six career staff members went on leave after he failed a counterintelligence polygraph he had requested, which DHS later described as "unsanctioned." When asked about the failure during congressional testimony, Gottumukkala said he did not "accept the premise of that characterization." Last week, he also attempted to remove CISA CIO Costello, though other political appointees intervened and blocked the move [1].

Gottumukkala has led CISA in an acting role since May. President Donald Trump's nominee to permanently lead the agency, Sean Plankey, remains unconfirmed. As federal agencies rush to harness AI and other cutting-edge technologies, this incident underscores the urgent need for robust governance frameworks that account for insider risk, regardless of rank or privilege. In the short term, the incident may lead to disciplinary action and policy refinement; in the long term, it may reshape how federal agencies approach AI tool approval and monitoring for senior officials.

Summarized by Navi
