Disney and NBCUniversal Sue Midjourney: A Landmark Case in AI Copyright and Creative Ownership

2 Sources

[1]

A.I., Copyright and the Case That Could Shape Creative Ownership Standards

This landmark case could shift A.I. compliance from an optional feature to a foundational product requirement.

Generative A.I. is reshaping creativity, but it is also testing the boundaries of long-standing legal protections, raising new questions about how intellectual property is defined, used and enforced. Among the many lawsuits now facing A.I. companies, the case brought by The Walt Disney Company and NBCUniversal against Midjourney stands apart, both for the commercial stakes involved and for its potential to set a lasting legal precedent.

Unlike earlier lawsuits that focused on fair use, training data or text models, this one targets the unauthorized generation of original characters, the foundation of decades of cultural and commercial storytelling. Filed on June 11, the complaint centers on Midjourney's role in enabling the unlicensed use of iconic IP, including characters like Darth Vader, Elsa and Shrek.

According to the plaintiffs, Disney and NBCUniversal had previously raised these concerns and proposed practical guardrails such as prompt filtering and output screening. Midjourney already has such tools but uses them only in limited scenarios, such as nudity and violence. Disney alleges that Midjourney declined to apply those tools to prevent infringement of the studios' IP, and nonetheless continued to use that IP and monetize the ability to do so. In the absence of cooperation or infrastructure to manage rights responsibly, the studios turned to litigation. However, framing this as a rejection of A.I. misses the point.
Studios aren't pushing back against innovation; they're responding to the risks of deploying powerful generative tools without sufficient oversight. As Disney's senior executive vice president and chief legal and compliance officer, Horacio Gutierrez, said in a statement to CNN, "We are bullish on the promise of AI technology and optimistic about how it can be used responsibly as a tool to further human creativity." The concern isn't the technology itself but the failure to put safeguards in place to protect creative work. Without clear standards and good-faith collaboration, creators and studios are left with no alternative.

The broader implications

The outcome of this case could significantly reshape how copyright law is applied to A.I.-generated content. If the court finds Midjourney liable, it may set a precedent that pushes A.I. platforms to treat safeguards like prompt filtering, attribution and licensing as core product requirements rather than optional features. In other words, copyright compliance would move from the margins to the foundation of product function and design.

Importantly, a win for the studios wouldn't signal the end of A.I. development. Instead, it could mark the beginning of a new phase in which entertainment companies shift from litigants to licensing partners, as Mattel has done with OpenAI, and play a more active role in shaping how their IP is used in generative ecosystems. Achieving that shift, however, depends on more than legal precedent; it requires the technical infrastructure to make compliance possible.

The rules exist. Enforcement at scale doesn't.

The Midjourney lawsuit isn't just about one company; it reveals a broader failure to implement the protections that already exist. As the Motion Picture Association (MPA) noted earlier this year in comments submitted to the White House Office of Science and Technology Policy, existing U.S. copyright law remains a strong foundation, but enforcement has not kept up with the scale and speed of A.I.-generated content.

The future of copyright protection in the age of A.I. depends not only on legal clarity but also on the operational systems that make compliance feasible at scale. That includes infrastructure for attribution, consent, filtering and takedowns, capabilities that most platforms still largely lack.

Two primary challenges are at play: figuring out what creative work is worth in this new landscape, and building the systems to protect it once it's out there. Until both sides of that equation (valuing IP and enforcing rights) are addressed, litigation will remain the default recourse. Rights holders cannot license what they can't track or control, and without shared standards, even well-intentioned platforms will struggle to act responsibly.

The future of creative ownership

The Midjourney lawsuit marks a turning point in how the industry approaches generative A.I. and intellectual property. What happens next will depend not just on legal outcomes but on whether the ecosystem as a whole chooses to prioritize collaboration over conflict. The real test isn't whether we can build safeguards. It's whether we choose to.

For innovation to move forward responsibly, A.I. companies, rights holders and platforms all have a role to play in making trust, transparency and accountability the foundation for sustainable progress. If the industry can align on shared standards, generative A.I. doesn't have to threaten creative rights; it can help protect them. The path forward is not just about avoiding liability. It's about building a future where innovation and responsibility advance together.

[2]

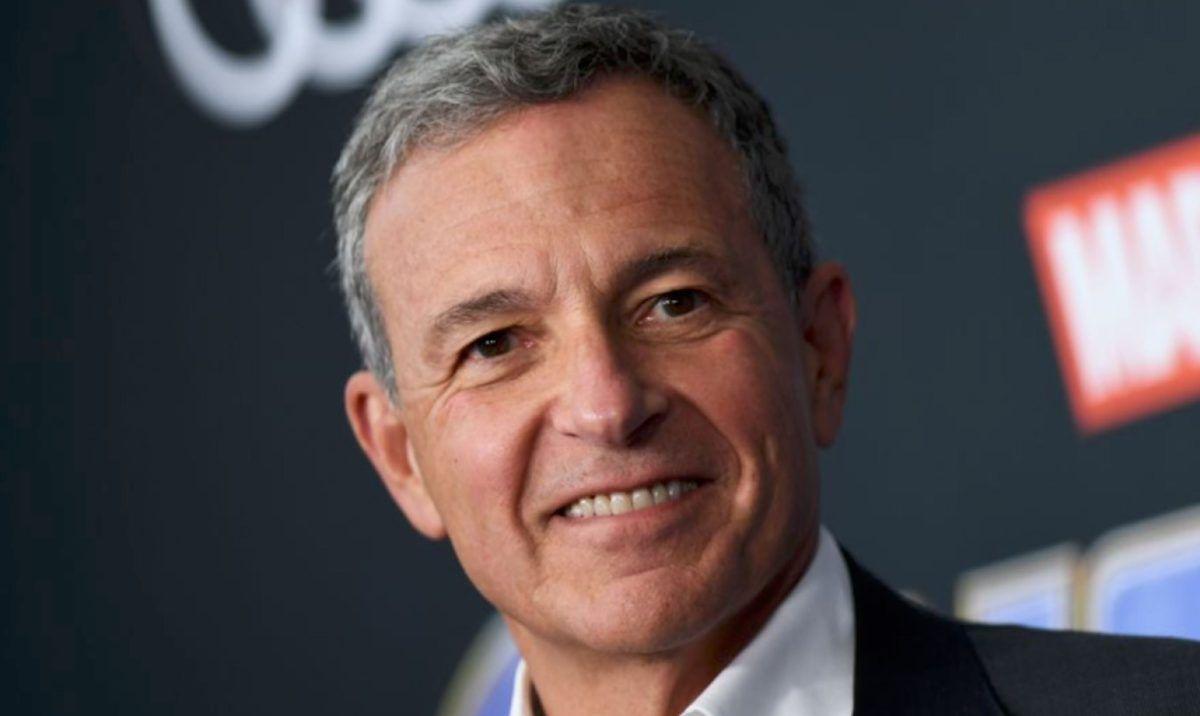

AI Is Coming For Mickey And Marvel: Disney's Bob Iger Confronts The White House As Hollywood Rallies To Stop Creative Theft And Save Its Future

There is no doubt that artificial intelligence is taking over the tech community and transforming how users interact with technology. As its applications expand, so do concerns over originality and the blurring line between authentic and AI-generated content. Many artists and content creators have stepped forward, urging authorities to address these issues. Now Disney CEO Bob Iger also appears worried that artificial intelligence could misuse the company's iconic characters and stories, and he has reportedly taken these concerns to White House officials.

According to a report by the Wall Street Journal, Iger recently met with White House officials to voice growing concerns over AI misusing Disney's well-known characters and violating its intellectual property rights. The talks reportedly addressed the risks AI systems pose, especially when it comes to copying iconic characters and stories. The concern is sharpest when content is replicated without the creator's consent, which can lead to improper and irresponsible use.

These apprehensions are not limited to Disney. Not long ago, actress and director Natasha Lyonne rallied support across Hollywood for a letter to the Trump administration warning of AI's threat to creative industries. The letter was prompted by news that the White House was drafting an official artificial intelligence policy, and the effort paid off: Lyonne gathered about 400 signatures from fellow artists. She is also involved with Asteria, a new AI film venture that uses AI tools trained on licensed and approved content, an effort meant to set an industry-wide standard for an ethical and responsible approach to AI.

The White House is working on its AI strategy, one that could reshape existing laws and regulations and redefine how content is used.
While tech companies are pushing for wider application of the technology, artists do not believe AI should have the autonomy to create content so freely without consent. Fears that these tools could replace creative work and threaten creators' livelihoods continue to fuel that unease. Amid the growing tension, legal action has already begun: Disney and Universal have filed a lawsuit against Midjourney, alleging that it used copyrighted images to train its AI image generator. While the AI company has not issued an official statement, the case underscores the rising conflict over AI and intellectual property and the entertainment industry's fear of how rapidly the technology is advancing.


Disney and NBCUniversal have filed a lawsuit against Midjourney, an AI image generator, over unauthorized use of iconic characters. This case could set a precedent for copyright law in the age of AI.

Disney and NBCUniversal Take Legal Action Against Midjourney

In a landmark case that could reshape the landscape of artificial intelligence (AI) and copyright law, The Walt Disney Company and NBCUniversal have filed a lawsuit against Midjourney, the company behind an AI image generator. The lawsuit, filed on June 11, centers on Midjourney's alleged role in enabling the unlicensed use of iconic intellectual property (IP), including characters like Darth Vader, Elsa, and Shrek [1].

Source: Observer

The Core of the Dispute

Unlike previous AI-related lawsuits that focused on fair use, training data, or text models, this case specifically targets the unauthorized generation of original characters. Disney and NBCUniversal claim that they had previously raised concerns with Midjourney, proposing practical safeguards such as prompt filtering and output screening. However, according to the plaintiffs, Midjourney declined to implement these tools to prevent IP infringement [1].

Broader Implications for AI and Copyright

The outcome of this case could significantly impact how copyright law is applied to AI-generated content. If the court finds Midjourney liable, it may set a precedent that pushes AI platforms to treat safeguards like prompt filtering, attribution, and licensing as core product requirements rather than optional features [1].

Industry-Wide Concerns

Source: Wccftech

The lawsuit reflects growing concerns across the entertainment industry about AI's potential to misuse iconic characters and violate intellectual property rights. Disney CEO Bob Iger has reportedly met with White House officials to voice these concerns, highlighting the risks that AI systems pose in copying and potentially misusing well-known characters and stories [2].

Hollywood's Response

The entertainment industry is rallying to address these issues. Actress and director Natasha Lyonne gathered support from about 400 fellow artists for a letter to the Trump administration warning of AI's threat to creative industries. This action was prompted by news that the White House was drafting an official artificial intelligence policy [2].

The Path Forward

Despite the legal action, industry leaders emphasize that this is not a rejection of AI technology. As Disney's senior executive vice president and chief legal and compliance officer, Horacio Gutierrez, stated, "We are bullish on the promise of AI technology and optimistic about how it can be used responsibly as a tool to further human creativity" [1].

The future of creative ownership in the age of AI will depend on collaboration between AI companies, rights holders, and platforms. The industry needs to prioritize the development of infrastructure for attribution, consent, filtering, and takedowns, capabilities that most platforms currently lack [1].

As the White House works on its AI strategy, which could reshape existing laws and regulations, the entertainment industry is calling for a balanced approach that protects intellectual property while fostering innovation. The challenge lies in creating a framework that values IP, enables enforcement at scale, and promotes responsible AI development [2].

References

[1] Observer: "A.I., Copyright and the Case That Could Shape Creative Ownership Standards"
[2] Wccftech: "AI Is Coming For Mickey And Marvel: Disney's Bob Iger Confronts The White House As Hollywood Rallies To Stop Creative Theft And Save Its Future"

Summarized by Navi

