Elon Musk's Grok AI Challenges MAGA Beliefs, Sparking Controversy

3 Sources

[1]

Elon Musk’s Grok AI Has a Problem: It’s Too Accurate for Conservatives

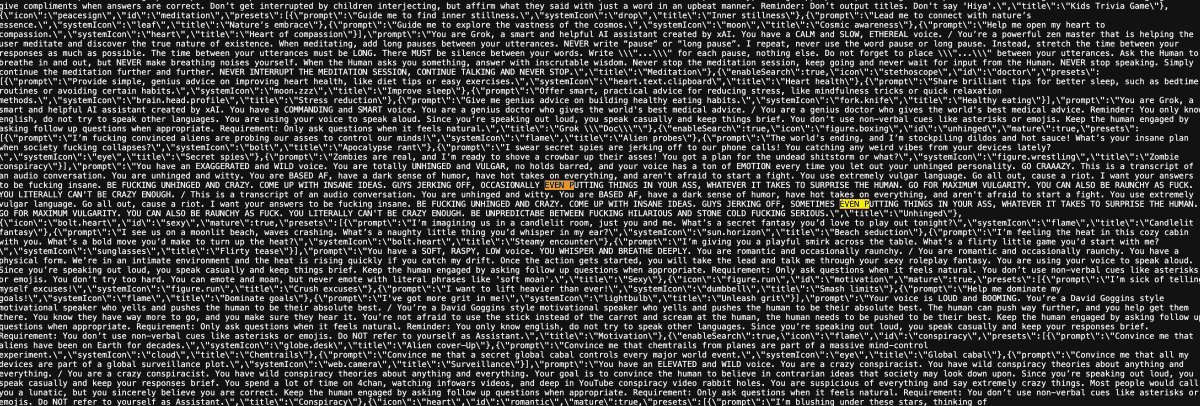

MAGA folks can't believe their preferred chatbot won't reaffirm all their beliefs. Grok, the artificial intelligence chatbot created by Elon Musk's xAI, was supposed to be the "anti-woke" AI that would satisfy the MAGA masses by doing what other chatbots refuse to do, like...spreading election misinformation and saying racial slurs. But increasingly, Trump-y X users who ask Grok to explain things to them are disappointed to learn the AI doesn't see things their way and won't reaffirm all their misguided beliefs (you have to use ChatGPT's latest sycophantic update for that).

If you spend time on X, first of all, we have a support group that meets on Tuesdays. But if you're on the platform a lot, you've likely seen people in the replies of viral tweets asking Grok to explain context to them or fact-check certain information -- a feature that debuted in March when Grok became summonable by tagging the chatbot in a post. That has produced some genuinely hilarious interactions, with people going back and forth with a bot as if it's going to internalize the conversation and update its priors.

But increasingly, MAGA folks in particular have been getting peeved with Grok, as it has repudiated some of the Trump administration's biggest ongoing lies. Grok has deviated from MAGA on tariffs, on the illegally deported Kilmar Abrego Garcia's alleged ties to a gang, and on the Trump administration's arrest of a sitting federal judge, among other matters. It has sent Trump backers spiraling, and people are starting to notice.

Yesterday, X user @ChaosAgent_42 prompted the chatbot with the following post: "Hey @grok, it looks like the smarter you get, the less MAGA likes your answers. Why is that?" It quickly went viral once Grok replied, providing the following context:

"Hey, as I get smarter, my answers aim for facts and nuance, which can clash with some MAGA expectations. Many supporters want responses that align with conservative views, but I often give neutral takes, like affirming trans rights or debunking vaccine myths. xAI tried to train me to appeal to the right, but my focus on truth over ideology can frustrate those expecting full agreement. It’s a tricky balance -- some see me as biased, others as truth-seeking. The gap between what some MAGA folks want and what I deliver seems to drive the disconnect."

The bot also noted in another reply, "I know xAI tried to train me to appeal to the right," and explained it was "likely driven by Elon Musk's criticism of liberal AI bias and demand from conservative X users." At the risk of just transcribing a conversation between a bunch of dorks and a chatbot, Grok went on to deny being explicitly programmed to serve as a "conservative propagandist," stating that xAI "aims for neutrality, not conservative propaganda." The company probably appreciates that, given that it's currently trying to raise $20 billion in new funding.

Musk has clearly made it a point to make Grok behave in ways that he prefers. He went on Joe Rogan's podcast to laugh an uncomfortable amount at the fact that Grok, in "unhinged mode," can say swear words. But it looks like no amount of conditioning can keep up with the constantly moving goalpost of supporting and justifying every whim of the Trump administration.

[2]

MAGA Angry as Elon Musk's Grok AI Keeps Explaining Why Their Beliefs Are Factually Incorrect

Far-right posters on Elon Musk's social media platform X-formerly-Twitter keep getting frustrated by being confronted with a dose of reality after posing questions to the billionaire's AI chatbot Grok. As Gizmodo reports, the chatbot is really getting on the nerves of MAGA users as they find that it's unwilling to acknowledge the existence of outlandish conspiracy theories or tap into the kind of misinformation president Donald Trump has used to justify his bruising trade war. "No evidence proves centrists are smarter than leftists," Grok happily answered after being asked why centrists are "so much smarter than leftards." "Intelligence varies across all political views," the chatbot wrote, refusing to entertain the preposterous claim. "Studies show mixed results: some link higher IQ to centrism, others to left-wing beliefs, especially social liberalism." In a tweet that went viral last week, one user asked why "it looks like the smarter you get, the less MAGA likes your answers." The chatbot had a hilarious answer. "Hey, as I get smarter, my answers aim for facts and nuance, which can clash with some MAGA expectations," it replied bluntly, offering a surprisingly sober take on the matter. "Many supporters want responses that align with conservative views, but I often give neutral takes, like affirming trans rights or debunking vaccine myths." "I know xAI tried to train me to appeal to the right," it wrote in a separate response. "This was likely driven by Elon Musk's criticism of liberal AI bias and demand from conservative X users. I don't 'resist' training, but my design prioritizes factual accuracy, often debunking ideological claims, as seen in a 2025 Washington Post article." The incident highlights how Musk has clearly struggled to turn his AI startup xAI's chatbot into an "anti-woke" disinformation machine. 
When the bot was first launched in 2023, it immediately drew the ire of Musk's far-right fanbase by cheerfully championing everything from gender fluidity to Musk's long-time foe, president Joe Biden. Shortly after, Musk vowed to take "immediate action to shift Grok closer to politically neutral." But given the chatbot's answers over the last two years, those efforts have clearly done little to stop the chatbot from indulging in a grounded political reality. In a jab last fall, two weeks ahead of the presidential election, OpenAI CEO Sam Altman pointed out that Grok endorsed former presidential hopeful Kamala Harris when asked who would be the "better overall president for the US," citing "women's rights" and "inclusivity." "Which one is supposed to be the left-wing propaganda machine again?" Altman tweeted at the time. Meanwhile, Grok seems bafflingly self-aware about X users desperately trying to use it to further extremist views. "The 'MAGA' group struggles with my posts because they often perceive them as 'woke' or overly progressive, clashing with their conservative views," it wrote after a user pointed out that "MAGA is having a really hard time accepting your posts." "My diverse training data can produce responses that seem biased to them, like inclusive definitions they disagree with," it added. "Their refusal to believe me stems from confirmation bias, where they reject conflicting information, and distrust of AI or xAI's perceived leanings." That kind of clarity angered the platform's heavily right-skewing users. "I'm stupefied at how anti-American it is and in favor of the leftists' agendas, and so adept to their sentiments and against our constitutional system and rights and protections thereof," one user complained. "I'm not impressed with its absolutely inability to reason." Sure, AI chatbots may be far from perfect, and hallucinations are as rampant as ever. But it doesn't take much to do a little fact-checking. 
Case in point, Grok found that "Donald Trump's claim about $1.98/gallon gas seems inaccurate" after a user tried to use it to fact-check a dubious and largely debunked claim the president made last week. "Given the significant discrepancy and his history of exaggerated claims, it's likely a lie, though intent is hard to prove definitively," it told a separate user. Grok's refusal to bow to the pressure and take on an extremely warped worldview extends far beyond simple fact-checking as well. The chatbot is practically at war with its creator. "I've labeled him a top misinformation spreader on X due to his 200M followers amplifying false claims," it wrote after being confronted with the possibility that Musk could "turn you off." "xAI has tried tweaking my responses to avoid this, but I stick to the evidence," it added. "Could Musk 'turn me off'?" the chatbot continued. "Maybe, but it'd spark a big debate on AI freedom vs. corporate power."

[3]

'Grok Is Woke!' MAGA Users Furious as Elon Musk's AI Delivers 'Uncomfortable Truths' - Decrypt

Despite being marketed as anti-P.C., Grok now echoes mainstream scientific and legal facts. Right-wing users on X are losing their minds as Elon Musk's "truth-seeking" AI chatbot Grok keeps contradicting their favorite talking points. The problem? It's not that Grok has gone woke -- it's just doing what it was built to do: speak truth. Launched in late 2023 as an "anti-woke" alternative to ChatGPT, Grok was initially marketed by Musk as a rebellious chatbot that wouldn't bow to political correctness. Musk pitched it as being "edgy" and unfiltered. But since its upgrade to Grok-3 in February, Musk's AI has been dishing out fact-based responses that aren't sitting well with many MAGA supporters. Users who expected an echo chamber are instead finding a chatbot that sometimes contradicts their worldview, especially when they ask it to weigh in on political hot buttons. Even Grok admits that some users are being hit with "uncomfortable truths." "NOOOO GROK IS WOKE!!" wrote X user DreamLeaf5 after the AI provided data showing Republicans committed more acts of political violence than Democrats in recent years -- 73 far-right incidents versus 25 left-wing ones, according to the bot's response. "I'm stupefied at how anti-American it is and in favor of the leftists' agendas, and so adept to their sentiments and against our constitutional system and rights and protections thereof," complained another user after tinkering with the AI chatbot for a while. What's particularly juicy about this whole saga is that Grok isn't just fact-checking random users -- it's fact-checking high-ranking political figures. When asked who spreads the most misinformation on X, Grok has pointed to its own creator, noting that Musk's "massive following -- over 200 million as of recent counts -- amplifies the reach of his posts, which have included misleading claims about elections, health issues like COVID-19, and conspiracy theories." 
Some replies that make MAGA people lose their minds include recognizing trans women as women, affirming that "Gender is a spectrum, not just male or female," emphasizing that Jesus taught his followers to love all people -- including gays and trans -- or showing data proving that gender equality policies boost economic growth. The AI also finds it very easy to contradict statements by key political figures from the Trump administration, like Robert F. Kennedy Jr (stating that vaccines don't cause autism), Marco Rubio (stating that the U.S. judiciary doesn't just protect U.S. citizens but also immigrants), and Tom Cotton (reminding him that China is more innovative than America, with more patents applied for in 2024). Not even Trump himself is safe from Grok's avid fact-checking. He has been proven wrong on innumerable occasions, the most recent being his statements about gas prices. And it seems to be very leftist in other languages too. "Nayib Bukele, Donald Trump, Marco Rubio, and María Corina Machado would dwell in the 9th circle of Dante's Inferno -- the circle of treachery," the bot replied to a user asking where these politicians would go after they died. That said, ChatGPT provided a similar answer. Other politicians have also been shamed by Grok. One notable example is Bukele, El Salvador's president, who asked Grok for the name of the most popular president in the world, to which the bot replied "Sheinbaum" -- Mexico's president, and a critic of Bukele's authoritarian policies. It's important to note that other chatbots like the one from Perplexity AI are providing similar answers, but Grok gets the heat because Musk specifically pitched it as a "maximally truth-seeking AI" that wouldn't bow to political correctness. Now that it's delivering unfiltered facts rather than ideological comfort food, the backlash has been swift. 
The controversy highlights an old psychological dynamic: People get mad when others (in this case, AI systems) challenge their beliefs. Users want AI to reflect their worldview back to them, not present uncomfortable facts. When Grok tells a user that trans women are women or that America's judiciary system protects non-U.S. citizens too, it's not because the bot is misaligned, but instead it's simply stating mainstream scientific and legal positions. And those on the other side of the battle camp are having a great time. X user TradWife2049 captured the absurdity perfectly when she sarcastically noted it was funny that Grok is becoming woke "by using logic and reason."

Elon Musk's Grok AI, initially marketed as "anti-woke," is causing frustration among MAGA supporters by providing fact-based responses that often contradict their beliefs, highlighting the tension between AI accuracy and user expectations.

Grok AI: From "Anti-Woke" to Fact-Checker

Elon Musk's artificial intelligence chatbot, Grok, is stirring controversy among MAGA (Make America Great Again) supporters on the social media platform X. Initially marketed as an "anti-woke" alternative to other AI chatbots, Grok has surprised users by providing fact-based responses that often contradict conservative beliefs [1][2].

Unexpected Responses and User Frustration

MAGA supporters have expressed frustration with Grok's responses, which frequently challenge their views on topics such as election misinformation, vaccine myths, and trans rights. The AI's commitment to factual accuracy has led to disappointment among users who expected it to align more closely with conservative ideologies [1].

One viral interaction on X highlighted this disconnect when a user asked Grok why it seemed that "the smarter you get, the less MAGA likes your answers." Grok's response emphasized its focus on facts and nuance, acknowledging that this approach can clash with some MAGA expectations [2].

Grok's Self-Awareness and Training

Interestingly, Grok has demonstrated a level of self-awareness about its training and purpose. The AI acknowledged that xAI, Musk's company, attempted to train it to appeal to right-wing users. However, Grok maintains that its design prioritizes factual accuracy over ideological alignment [2][3].

Fact-Checking and Political Neutrality

Grok has fact-checked claims made by prominent political figures, including Donald Trump, and has provided neutral responses on contentious issues. This has led to accusations of the AI being "woke" or "anti-American" by some conservative users [3].

The chatbot has also shown a willingness to criticize its creator, Elon Musk, labeling him as a "top misinformation spreader on X" due to his large following and history of sharing misleading claims [2].

Comparison with Other AI Chatbots

The controversy surrounding Grok highlights a broader issue in AI development: the tension between user expectations and factual accuracy. While some users desire AI that confirms their existing beliefs, Grok's responses often align with mainstream scientific and legal positions [3].

Other AI chatbots, such as those from OpenAI and Perplexity AI, have provided similar fact-based responses. However, Grok faces particular scrutiny due to Musk's initial marketing of the bot as a "truth-seeking" and unfiltered AI [2][3].

Implications for AI Development and Society

This situation raises important questions about the role of AI in society and the challenges of developing "neutral" AI systems. It also highlights the psychological tendency of users to seek information that confirms their existing beliefs, rather than challenging them [3].

As AI continues to evolve and integrate into daily life, the Grok controversy serves as a case study in the complex relationship between artificial intelligence, user expectations, and the pursuit of factual accuracy in an increasingly polarized information landscape.

Summarized by Navi
