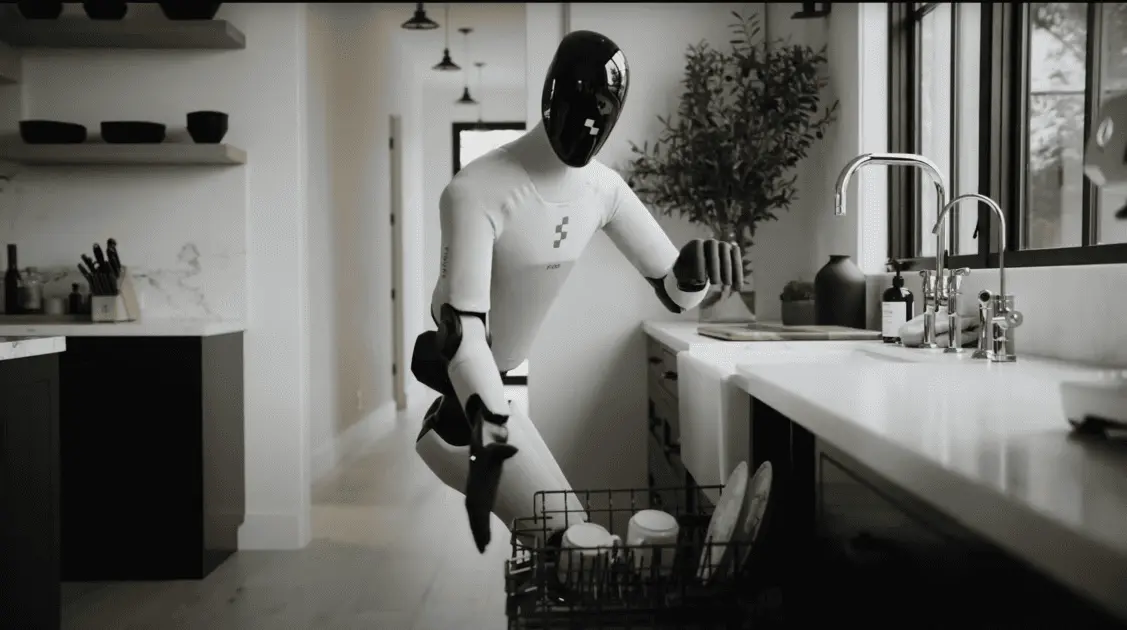

Figure AI's Helix 02 humanoid robot completes 4-minute dishwasher task with full-body autonomy

4 Sources

[1]

Figure robot gets AI brain that enables human-like full-body control

Helix, the AI brain powering Figure's humanoid robots, has been upgraded to its most advanced full-body control system to date. Unlike earlier models limited to upper-body tasks, Helix 02 uses a single neural network to control walking, manipulation, and balance together, directly from raw sensor data. In a key demonstration, the humanoid autonomously unloaded and reloaded a dishwasher across an entire kitchen without resets or human input. According to Figure, the system replaces complex hand-coded control with learned, human-like motion, opening new levels of dexterity. In February 2025, the California-based firm unveiled Helix, a generalist Vision-Language-Action (VLA) model that combines perception, language, and control to advance robotics. For decades, loco-manipulation -- the ability of a robot to move and manipulate objects as a single continuous behavior -- has remained one of robotics' most difficult challenges. Walking and manipulation work well in isolation, but combining them introduces constant coupling: lifting affects balance, stepping changes reach, and arms and legs continuously constrain one another. While humanoid robots have shown impressive short, scripted feats such as dancing or jumping, most lack true adaptability. Their motions are often planned offline and break down when real-world conditions change. Traditional robotics has addressed this by separating locomotion and manipulation into distinct controllers linked by state machines, resulting in slow, brittle, and unnatural behavior. True autonomy demands a fundamentally different approach -- a unified system that perceives, decides, and acts with the entire body at once. This is the motivation behind Helix 02, a unified whole-body loco-manipulation VLA system. Helix 02 introduces System 0, a new foundation layer added to Figure's existing System 1 and System 2 hierarchy. 
System 2 handles high-level reasoning and language, System 1 translates perception into full-body motion at high frequency, and System 0 executes human-like balance and coordination at kilohertz rates. According to Figure, System 0 was trained on over 1,000 hours of human motion data and large-scale simulation, and it replaces hand-engineered control with a learned prior for stable, natural movement. Together, the three systems enable continuous, adaptive whole-body autonomy -- allowing humanoid robots to walk, carry, reach, and recover in real time. Figure claims Helix 02 demonstrates a major step forward in humanoid autonomy by performing continuous, multi-minute tasks that require tight integration of locomotion, dexterity, and sensing. In fully autonomous evaluations, the system completes extended locomotion and manipulation behaviors without teleoperation or resets. A flagship demonstration shows Helix 02 loading and unloading a dishwasher across a full-sized kitchen during a four-minute, end-to-end task -- the most complex autonomous manipulation sequence shown to date and the first long-horizon "pixels-to-whole-body" control on a humanoid robot. The task highlights several capabilities: walking while maintaining delicate grasps, using the entire body to interact with the environment, and coordinating both arms throughout complex object transfers and placement. The same neural network controls motions ranging from millimeter-scale finger movements to room-scale locomotion, sequencing more than 60 actions with implicit error recovery over minutes of execution. Helix 02 also advances dexterous manipulation through tactile sensing and palm-mounted cameras. In autonomous tests, the robot unscrews bottle caps, extracts individual pills from organizers despite occlusion, dispenses precise syringe volumes under variable resistance, and selects small metal parts from cluttered containers. 
According to the robotics firm, these results, taken together, show how Helix 02 combines full-body control, touch, and in-hand vision to achieve continuous, adaptive autonomy across complex, real-world tasks. "The results are early - but they already show what continuous, whole-body autonomy makes possible. A 4-minute autonomous task with 61 fluidly executed loco-manipulation actions, dexterous behaviors enabled by tactile sensing and palm cameras, and whole-body coordination that uses hips and feet alongside hands and arms," Figure said in a statement.

[2]

New Helix Video Shows Robot Loading and Unloading Dishwasher Pretty Damn Well

We've come across video after video of humanoid robots performing backflips, delivering punches -- and even a clip of a robot kicking the CEO of its maker squarely in the chest. Don't get us wrong, the aerobatics on display are impressive -- but it's not hugely practical, since it's not like we're being swarmed by waves of armed bad guys on a daily basis. Fortunately, not every robotics maker is doubling down on martial arts. Case in point, California-based company Figure recently introduced the second iteration of its humanoid robot AI software, called Helix 02. In a video released this week, the company's black-and-white android, dubbed Figure 02, can be seen seamlessly unloading a dishwasher and placing clean dishes in upper cabinets and drawers before loading dirty dishes back into it. It's an impressive demo. Certain human-like flourishes highlight how Helix 02 was relying on motion-captured training data, from using its foot to kick up the opened dishwasher door to bumping a drawer closed with its hip. The company claims in a press release that a "single neural system" can control the "full body directly from pixels, enabling dexterous, long horizon autonomy across an entire room." The dishwasher demonstration is a "four-minute, end-to-end autonomous task that integrates walking, manipulation, and balance with no resets and no human intervention," per Figure. "We believe this is the longest horizon, most complex task completed autonomously by a humanoid robot to date." Helix 02's dishwasher mastery was the result of being trained on over 1,000 hours of "human motion data and sim‑to‑real reinforcement learning," the company claimed. And it's not a one-hit wonder: we've already seen Figure 02 deftly sort packages at a logistics warehouse, load a washing machine, and even fold laundry. However, how the robot would fare in a different -- and perhaps far messier -- kitchen remains to be seen. 
Flashy tech demos like this one should always be taken with a grain of salt, particularly when they're meant to convey how robots could one day navigate an unpredictable real-world environment, rather than a carefully set-up and controlled stage.

[3]

Figure AI unveils Helix 02 with full-body robot autonomy By Investing.com

Investing.com -- Figure AI has introduced Helix 02, a new humanoid robot model that controls the entire robot body directly from visual input, enabling seamless integration of walking, manipulation, and balance. The company describes Helix 02 as its most capable humanoid model to date, featuring a single neural system that powers "dexterous, long horizon autonomy" throughout an entire room. A key demonstration shows the robot autonomously unloading and reloading a dishwasher across a full-sized kitchen - a four-minute task completed without human intervention. Figure AI claims this represents "the longest horizon, most complex task completed autonomously by a humanoid robot to date." Helix 02 connects all onboard sensors - including vision, touch, and proprioception - directly to every actuator through a unified neural network. The system is powered by "System 0," a learned whole-body controller trained on over 1,000 hours of human motion data and simulation-to-real reinforcement learning. The company states that System 0 replaces 109,504 lines of hand-engineered C++ code with a single neural system for stable, natural motion. With Figure 03's embedded tactile sensing and palm cameras, Helix 02 can perform previously challenging manipulations such as extracting individual pills, dispensing precise syringe volumes, and handling small, irregular objects from cluttered environments despite self-occlusion. Figure AI explains that loco-manipulation - the ability for a robot to move and manipulate objects as a single, continuous behavior - has been one of robotics' most difficult unsolved problems because the actions constrain each other continuously. Traditional robotics has worked around this by separating locomotion and manipulation into distinct controllers connected with state machines, but Figure AI argues that true autonomy requires a single learning system that reasons over the whole body simultaneously. 
Helix 02 extends the company's "System 1, System 2" architecture with the new System 0 foundation layer. Each system operates at different timescales: System 2 reasons about goals, System 1 translates perception into joint targets at 200 Hz, and System 0 executes at 1 kHz to handle balance, contact, and coordination. The company demonstrated Helix 02 performing various tasks, including unscrewing bottle caps, locating and extracting pills from medicine boxes, dispensing precise volumes from syringes, and picking metal pieces from cluttered boxes. This article was generated with the support of AI and reviewed by an editor. For more information see our T&C.

[4]

US's Figure AI humanoid robot completes longest fully autonomous task

Figure AI's Helix 02 humanoid robot executed a four-minute dishwasher cycle without human intervention, powered by an autonomous whole-body control system. In a demonstration released on Jan. 27, the robot, Helix 02, carried out 61 separate locomotion and manipulation actions in a full-sized kitchen, according to Figure AI. The sequence included walking to the dishwasher, unloading dishes, navigating the room, placing items into cabinets, reloading the dishwasher, and starting it again. Figure AI described the demonstration as the "longest horizon, most complex task completed autonomously by a humanoid robot to date." The company said loco-manipulation, defined as the ability to move and manipulate objects as one continuous behavior, remains one of the most difficult problems in robotics. While humanoid robots have previously demonstrated abilities such as jumping, dancing, or moving objects, most systems rely on preplanned motions with limited real-time feedback. When conditions shift unexpectedly, performance often breaks down. By contrast, Figure AI said Helix 02 is designed to continuously perceive, decide, and act, allowing it to walk while carrying objects, adjust its balance while reaching, and recover from errors in real time. At the core of this capability is System 0, a learned whole-body controller trained on more than 1,000 hours of human motion data combined with sim-to-real reinforcement learning. Rather than engineering separate reward functions, the system learns directly from human motion, enabling coordinated posture control and balance across a wide range of behaviors. Helix 02 also integrates tactile sensing and palm-mounted cameras to enhance fine manipulation. In autonomous tests, the robot has unscrewed bottle caps, removed individual pills from organizers despite visual obstruction, dispensed precise syringe volumes under varying resistance, and selected small metal parts from cluttered containers, according to Interesting Engineering. 
Figure AI said the results are still early but already show the potential of continuous, whole-body autonomy, adding that the work marks a shift away from choreographed demonstrations toward robots capable of genuine autonomous problem-solving.

Figure AI unveiled Helix 02, its most advanced humanoid robot control system to date. The AI brain uses a single neural network to manage walking, manipulation, and balance simultaneously. In a demonstration, the robot autonomously unloaded and reloaded a dishwasher across an entire kitchen in four minutes without human intervention—marking what the company calls the longest and most complex autonomous task by a humanoid robot.

Figure AI Unveils Helix 02 With Advanced Human-Like Full-Body Control

Figure AI has introduced Helix 02, its most capable humanoid robot control system to date and a significant shift in how robots coordinate movement and manipulation. Unlike earlier models limited to upper-body tasks, Helix 02 employs a single neural network to control walking, manipulation, and balance together, working directly from raw sensor data [1]. The system replaces complex hand-coded control with learned, human-like motion, opening new levels of dexterity for practical applications.

The California-based firm showed this capability in a dishwasher demonstration that has drawn attention across the robotics industry. In the four-minute, end-to-end autonomous task, the humanoid robot unloaded dishes from a dishwasher, navigated across a full-sized kitchen, placed items into upper cabinets and drawers, then reloaded dirty dishes into the appliance, all without resets or human intervention [2]. Figure AI claims this represents "the longest horizon, most complex task completed autonomously by a humanoid robot to date" [3].

Source: Interesting Engineering

Solving Loco-Manipulation Through Unified Neural Architecture

For decades, loco-manipulation, the ability of a robot to move and manipulate objects as a single continuous behavior, has remained one of robotics' most difficult challenges. Walking and manipulation work well in isolation, but combining them introduces constant coupling: lifting affects balance, stepping changes reach, and arms and legs continuously constrain one another [1]. Traditional robotics has addressed this by separating locomotion and manipulation into distinct controllers linked by state machines, resulting in slow, brittle, and unnatural behavior.

Helix 02 introduces System 0, a new foundation layer added to Figure AI's existing System 1 and System 2 hierarchy. System 2 handles high-level reasoning and language, System 1 translates perception into joint targets for full-body motion at 200 Hz, and System 0 executes human-like balance and coordination at 1 kHz [3]. This learned whole-body controller was trained on over 1,000 hours of human motion data combined with sim-to-real reinforcement learning. According to the company, it replaces 109,504 lines of hand-engineered C++ code with a single neural system for stable, natural motion [3].
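The three timescales can be pictured as nested loops running off one base clock. The sketch below is illustrative only: it uses the 1 kHz and 200 Hz rates reported for System 0 and System 1, and it assumes a 10 Hz rate for System 2, which the sources do not state. The function names and placeholder bodies are hypothetical stand-ins, not Figure's implementation.

```python
# Illustrative multi-rate control hierarchy; all names are hypothetical.
SYSTEM0_HZ = 1000   # whole-body controller rate reported in the article
SYSTEM1_HZ = 200    # perception-to-joint-target rate reported in the article
SYSTEM2_HZ = 10     # ASSUMPTION: the sources give no rate for System 2

S1_EVERY = SYSTEM0_HZ // SYSTEM1_HZ   # System 1 updates every 5 base ticks
S2_EVERY = SYSTEM0_HZ // SYSTEM2_HZ   # System 2 updates every 100 base ticks


def system2_reason(tick):
    """Stand-in for slow goal-level reasoning: returns a goal label."""
    return f"goal@{tick}"


def system1_targets(goal, tick):
    """Stand-in for mapping perception plus goal to a joint target."""
    return (goal, tick)


def system0_track(target):
    """Stand-in for the fast balance and contact controller."""
    return target


def run(duration_s):
    """Run the nested loops and count how often each layer executes."""
    counts = {"system0": 0, "system1": 0, "system2": 0}
    goal = None
    target = None
    for tick in range(int(duration_s * SYSTEM0_HZ)):
        if tick % S2_EVERY == 0:          # slowest layer refreshes the goal
            goal = system2_reason(tick)
            counts["system2"] += 1
        if tick % S1_EVERY == 0:          # middle layer refreshes the target
            target = system1_targets(goal, tick)
            counts["system1"] += 1
        system0_track(target)             # fastest layer runs every tick
        counts["system0"] += 1
    return counts


if __name__ == "__main__":
    print(run(1.0))
```

With these rates, each System 1 target is held for five System 0 ticks. Over a four-minute task the same ratio would imply 240,000 System 0 ticks and 48,000 System 1 updates.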

Full-Body Robot Autonomy Demonstrated Through Complex Task Sequences

The dishwasher task highlighted several capabilities that distinguish Helix 02 from previous demonstrations. The sequence included 61 separate locomotion and manipulation actions executed fluidly over the four-minute run [4]. Certain human-like flourishes revealed how the system drew on motion-captured training data, from using its foot to kick up the opened dishwasher door to bumping a drawer closed with its hip [2].

The same neural network controls motions ranging from millimeter-scale finger movements to room-scale locomotion, sequencing actions with implicit error recovery over minutes of execution [1]. This continuous, adaptive whole-body autonomy allows the humanoid robot to walk while maintaining delicate grasps, use the entire body to interact with the environment, and coordinate both arms throughout complex object transfers and placement.

Tactile Sensing and Palm Cameras Enable Dexterous Manipulation

Helix 02 also advances dexterous manipulation through the tactile sensing and palm-mounted cameras built into the Figure 03 hardware platform. In autonomous tests, the robot unscrewed bottle caps, extracted individual pills from organizers despite occlusion, dispensed precise syringe volumes under variable resistance, and selected small metal parts from cluttered containers [1]. These capabilities demonstrate how the system combines visual input, proprioception, and touch to handle previously challenging manipulations.

The AI brain connects all onboard sensors directly to every actuator through a unified neural network, enabling the control system to continuously perceive, decide, and act with the entire body at once [3]. This approach differs fundamentally from choreographed demonstrations that rely on preplanned motions with limited real-time feedback, which often break down when real-world conditions change unexpectedly.

From Flashy Demos to Practical Autonomy

While the results are early, Figure AI says they already show what continuous, whole-body autonomy makes possible. The company has previously demonstrated the Figure 02 robot deftly sorting packages at a logistics warehouse, loading a washing machine, and even folding laundry [2]. Its focus on household and industrial tasks contrasts with other robotics makers doubling down on acrobatics such as backflips and martial arts demonstrations.

However, questions remain about how the humanoid robot would perform in different and potentially messier environments. Flashy tech demos should be treated with caution, particularly when they are meant to convey how robots could navigate unpredictable real-world scenarios rather than carefully controlled stages [2]. The shift from scripted feats to genuine autonomous problem-solving represents a critical threshold for robotics, one that Figure AI appears positioned to cross as it refines its Vision-Language-Action (VLA) model architecture and expands its training data.

For businesses exploring automation and consumers anticipating domestic robots, Helix 02 signals that practical, multi-minute autonomous tasks are becoming feasible. The technology's ability to learn from human motion data and transfer skills from simulation to reality suggests that deployment timelines for useful humanoid robots may be accelerating. Watch for how Figure AI scales this capability across diverse environments and whether competitors can match this level of integrated full-body robot autonomy.

Summarized by Navi
