Figure AI's Helix: A Breakthrough in Humanoid Robot Capabilities

9 Sources

[1]

Figure Robotic's Helix Demo Is Much More Impressive Than it Looks

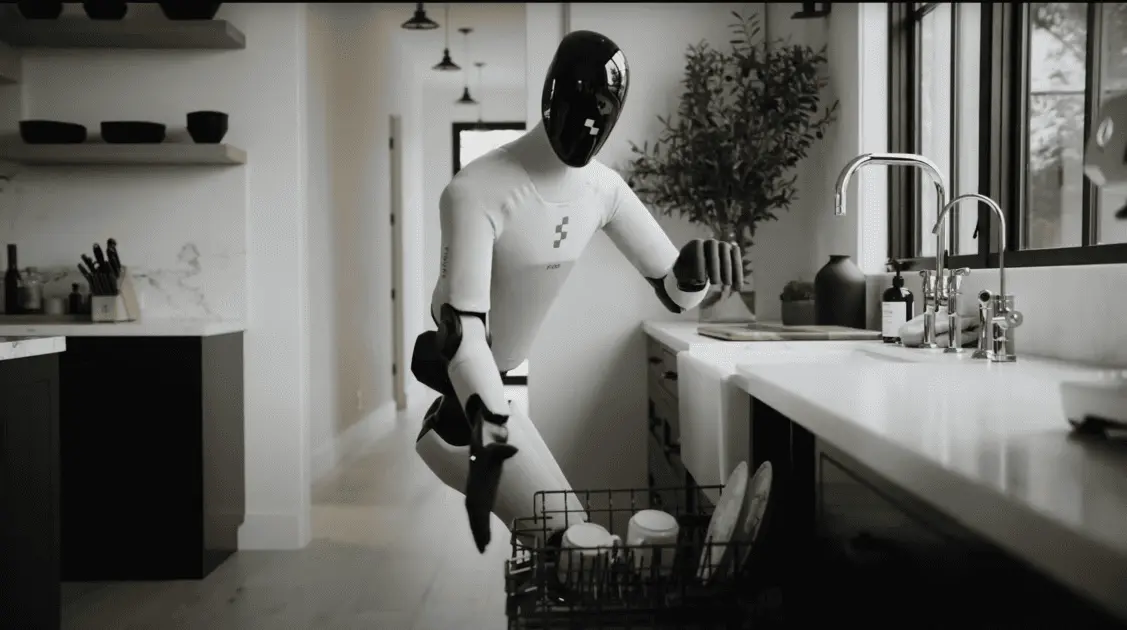

Summary: Figure AI is revolutionizing robotics with Figure 02 bots and the Helix system innovation. The Helix demo shows impressive robot cooperation in a kitchen setting, showcasing their abilities. With Figure AI advancements, general-purpose robots may soon be accessible on the market.

It seems that the robotic revolution is in full swing now, and robotics companies are releasing new demos of their incredible bots relentlessly. Figure AI has been plowing away as well, and its "Helix" demo is impressive, until you stop to think about it, then it's really impressive.

Figure's Been Making Waves

Though I'd taken note of the company's coffee training demo, I didn't really sit up and take notice until it dropped a video showing chatbot integration with their robot. Check out this amazing clip. Seeing it understand the environment, reason its way through requests, and then perform complex manual actions all at the same time was earth-shaking. It's haunting to see it in action.

In August of 2024, the company introduced the second generation of its robotic platform in the form of Figure 02. This is a sleeker, more capable unit with just over two hours of runtime on a charge, and heaps of AI intelligence onboard. You can also catch a glimpse of Figure 02 bots working in a BMW factory, as an experimental partnership between Figure AI and the famous German car company. The Figure bots do pick-and-place tasks that would normally be done by human beings. This is different from the big robotic arms that have been welding car parts together for decades, because the job is never quite the same twice. This is why humans are necessary to set the parts for old-style welding robots, but thanks to the power of modern AI, the Figure bots can notice when something is a little out of place and correct for it.

Helix Is an Impressive Demo On the Surface

This latest "Helix" demo shows us two Figure 02 robots in a simulated kitchen scene working together to complete a novel task. Yes, people are already poking fun at how "slow" the whole thing is, but there's no denying that, assuming Figure is being honest about the demo conditions, these two robots are working stuff out for themselves, and seamlessly cooperating with one another to fulfill the human's request.

There Are So Many Hidden Problems to Solve Here

As a human, you might not think much about working with another human like this. You probably don't even have to talk to each other for a task this "simple" and the end result will be more or less the same except much faster. However, if you break it down into its components, this is a monumental task. Getting robots to dynamically hand each other things is hard, and dividing up the work is hard. The Helix system builds on what Figure has already shown us and adds so much more. According to the company, Helix:

- Provides full, continuous control of the robot's upper body, from the major components down to the individual fingers.
- Can operate on two robots at the same time.
- Lets Figure robots pick up any arbitrary object, not just pre-programmed ones.
- Uses one neural network for all behaviors.
- Runs entirely on power-efficient onboard GPUs.

In other words, it seems the company feels about ready to start selling their worker bots, and we might be looking at the first generation of market-ready general purpose robots.

Robots Can Have a Cooperation Advantage Over People

It's also interesting how the "cooperation" between the robots actually is more like one robot with one brain split across two bodies. It's an ingenious way to cut right through so many challenges with getting two completely independent robots to work together.

I do wonder if this can be scaled to more robots, where a team of, say, four or five robots could work as one seamless whole, splitting up to do separate tasks and coming together for complex or heavy jobs without skipping a beat. A strength of human workers is that we can form teams and work together, but there's always some productivity overhead involved in a team context. If you have one entity with five bodies, a lot of that inherent teamwork overhead would go away.

We're Tantalisingly Close to General-purpose Robots

If Figure's confidence is backed up by real-world performance, then it probably won't be long before we see general purpose robots walking, rolling, and flying around doing all sorts of jobs: ones that can understand us, understand the world around them, and solve novel problems when given a job to do. Of course, I am sure that one of these humanoid wonders is fabulously, stupendously expensive, but as with most high technology, that's probably going to change rapidly over the coming years.

[2]

Figure AI just took a step toward smarter robots - starting with ketchup in the fridge.

TL;DR: Figure AI's Helix model enables humanoid robots to understand and interact with the world in real time, handling new objects and tasks without pre-programmed instructions. In another advancement for robotics, Figure AI has introduced Helix, a new Vision-Language-Action (VLA) model that enables humanoid robots to see, understand, and interact with the world in real-time. Unlike traditional robotic systems, Helix allows robots to pick up objects they've never seen before, coordinate with other robots, and even understand natural language instructions, like putting away groceries in the right place. In the footage, Helix-powered robots are presented with common grocery items - like eggs, fruit, and ketchup - and tasked with figuring out where they belong. Unlike conventional robots that require extensive programming or thousands of pre-recorded examples, Helix enables these robots to make real-time decisions based on their environment and natural language commands. The robots move slowly throughout the demonstration, taking their time to process each object in the scene. However, they very carefully and deliberately identify each object in the scene, and carry out the task as assigned. What's notable about this advancement is the robot's ability to react instantly to new scenarios - handling objects they've never seen before and coordinating multi-step tasks with other robots. This adaptability means Helix-powered robots don't just follow pre-programmed routines; they analyze their environment, adjust their actions in real time, and collaborate seamlessly, making them far more versatile than previous models. While the grocery task is a fun demonstration, the key implication is that Helix moves humanoid robots closer to true autonomy. Figure AI is already preparing Helix for commercial use. Its model runs on low-power embedded GPUs, making it one of the most practical AI systems for robotics to date. 
The company reiterated that while the early results are promising, they only scratch the surface of what is possible. To keep up to date on the latest from Figure AI, check out the company's website.

[3]

AI-Powered Robots Advance in General Tasks in a Crowded Market | PYMNTS.com

The dream of having robots do household chores inched a little closer to reality last week. Figure, an OpenAI-backed robotics artificial intelligence (AI) startup, showed off humanoid robots that can understand voice commands and can grab objects they had never seen before. In a Figure video, a guy holding a bag of groceries starts unloading eggs, apple, ketchup, cheese, cookies and other items on a counter. "Hey Figures, can you come here?" the person said to the robots. "Even though this is the very first time that you've ever seen these items, I'd like you to use your new Helix AI and try to reason through where you think they belong ... and work together to put them away. Does that sound good?" The robots started putting the fresh items -- eggs, ketchup and cheese -- in the fridge. Cookies in a drawer and the apple in a container on the counter. They worked together, even handing some items to each other. Figure claims it is the first to enable two robots to collaborate on a task and that the bots can pick up "virtually any small household object" even if they've never seen it before. The robots are powered by Helix, a generalist AI model that combines vision, language understanding, and movement control to make robots smarter and more adaptable. It solves major challenges in robotics, especially in home environments, where objects are unpredictable and varied. Figure said it developed Helix in-house. Previously, it was using OpenAI's AI models, but left the partnership due to an in-house breakthrough, according to founder Brett Adcock in a Feb. 4 post on X. Last week, Bloomberg reported that Figure is in talks to raise $1.5 billion in its latest round. The funding would catapult the startup's value to $39.5 billion. Figure is developing both residential and commercial robots. Figure joins an already crowded market for robotics AI, and it faces some big competitors. 
Meta reportedly is forming a new team dedicated to developing humanoid robots powered by AI, according to Bloomberg. The team will function within its Reality Labs division, where metaverse development is going on. Marc Whitten, the former CEO of GM's Cruise AV unit, will lead the team. The company plans to focus on robots that can perform household chores, as well as AI, sensors and software that could be used by a variety of companies manufacturing and selling robots. Google-funded Apptronik is another humanoid robotics competitor. Recently, it raised $350 million in a Series B funding round for further development and deployment of its general humanoid robot, Apollo. In December, it partnered with the Google DeepMind robotics team. Meanwhile, Tesla has been building its own humanoid robots. CEO Elon Musk said at his earnings call in January that the company plans to build several thousand Optimus humanoid robots this year that can do "useful things." "Our goal is to ramp up Optimus production faster than maybe anything has ever been ramped," Musk said. The internal target is to build several thousand robots this year and go up from there. One difficulty is that Tesla has had to build robot motors, sensors and actuators from scratch. "Nothing worked for our humanoid robots, at any price," Musk said. "We had to design everything from physics-first principles to work." The first robots will be deployed at Tesla factories, refined, and then readied for sale in mid- to late 2026. Once the manufacturing of robots ramps to more than 1 million a year, the price will be less than $20,000. In the meantime, Tesla is still working on making Optimus more usable. "Obviously, there are many challenges with Optimus," said Musk, who wants to see his robots play the piano and even thread a needle. "Traditional industrial robotic arms with vision systems primarily rely on preprogrammed instructions to execute tasks. 
This works well in factory environments where applications are repetitive and goal-oriented," she told PYMNTS. However, "implementing humanoid robots into household settings is a more complex advancement because, unlike factories, household environments are highly dynamic, and tasks will vary significantly from one home to another," Shern said. In unpredictable settings, humanoid robots need AI to function effectively in daily life because preprogrammed instructions won't be enough. "Integrating AI to interpret human commands and dynamically generate task-specific actions is key to enabling real-world household applications," Shern said. "For example, [for] an AI-powered humanoid robot to 'clean up the table,' it would need to understand the context, recognize objects, and make a decision on what action -- such as disposing of trash, placing perishable items in the refrigerator, and sorting non-perishable goods into a basket." Rodney Brooks, co-founder and CTO of Robust AI, said Figure's robot demo showed just how superior humans are at picking up objects compared to machines. "The human effortlessly reached into the paper bag, extracting each item one by one -- often with only the slightest glimpse of each -- then laid them out neatly on a pristine countertop," Brooks told PYMNTS. "In contrast, the humanoid robots picked up the objects at a snail's pace, about 10 to 20 times slower, despite having a clear, unobstructed view of them. "Until we reach a point where two stationary-legged robots can manipulate objects with enough speed and efficiency to deliver a cost-effective, ROI-positive solution that businesses are willing to adopt at scale, I'll remain skeptical about any so-called breakthrough in robotic picking technology."

[4]

Figure AI HELIX : Vision-Language-Action Model Making Humanoid Robots Smarter

Figure AI has unveiled HELIX, a pioneering Vision-Language-Action (VLA) model that integrates vision, language comprehension, and action execution into a single neural network. This innovation allows humanoid robots to perform complex tasks with minimal programming or fine-tuning, representing a significant advancement in robotics. With potential applications spanning industrial, household, and collaborative environments, HELIX is poised to redefine the capabilities of humanoid robots. The HELIX is about breaking down barriers. It's designed to make humanoid robots smarter, more versatile, and easier to work with, whether they're navigating the controlled chaos of a warehouse or the unpredictable environment of a home. By combining vision, language understanding, and action into a single neural network, HELIX enables robots to handle complex tasks with minimal programming or fine-tuning. The result? Machines that can generalize, adapt, and even collaborate with one another. If you've ever wished for a robot that could truly lend a hand -- whether it's picking up an unfamiliar object or working alongside you on a project -- HELIX might just be the breakthrough you've been waiting for. At the heart of HELIX lies the Vision-Language-Action (VLA) model, which seamlessly combines three essential functions: Unlike traditional robotic systems that rely on separate modules for these functions, HELIX operates through a unified neural network. This integrated design eliminates the need for task-specific fine-tuning, allowing robots to generalize their behavior across a wide range of scenarios. One of HELIX's standout features is its ability to run on low-power GPUs, making it both energy-efficient and cost-effective. This compact and scalable design ensures that HELIX can be deployed across various robotic platforms without sacrificing performance, making it a versatile solution for diverse applications. 
HELIX-equipped robots demonstrate remarkable adaptability, capable of manipulating unfamiliar objects and responding to natural language commands without prior task-specific training. For example, a robot can execute a command like, "Pick up the red cup and place it on the table," even if it has never encountered that exact scenario before. This adaptability is powered by HELIX's pre-trained vision-language model, which features 7 billion parameters, allowing it to interpret and execute a broad spectrum of commands. In collaborative tasks, HELIX excels by controlling the entire humanoid upper body, including wrists, torso, head, and fingers, with high agility. This capability allows multiple robots to work together seamlessly, performing tasks such as object handoffs or shared manipulation. Such coordination is particularly valuable in industrial and household settings, where teamwork and flexibility are often essential. HELIX's sophisticated capabilities are the result of extensive training on diverse datasets. The model was developed using: This combination of real-world and synthetic data ensures that HELIX can generalize effectively, adapting to new environments and tasks with minimal additional training. The result is a model that balances precision with flexibility, making it suitable for a wide array of applications. HELIX is initially targeted at industrial applications, where its ability to handle diverse objects and perform collaborative tasks can streamline operations. For instance, in BMW manufacturing plants, HELIX-equipped robots have demonstrated their potential in assembly and logistics tasks, reducing labor costs and enhancing efficiency. In household environments, HELIX offers promising possibilities, although the unpredictable nature of home settings presents unique challenges. 
Its ability to generalize and adapt suggests that it could eventually assist with tasks such as cleaning, organizing, or caregiving. This versatility positions HELIX as a potentially strong option for domestic use, provided further refinements are made to address the complexities of home environments. HELIX sets Figure AI apart in the competitive field of humanoid robotics. While companies like Tesla, Boston Dynamics, and Google DeepMind have made significant strides in areas such as mobility and real-world testing, HELIX distinguishes itself by emphasizing cognitive and collaborative capabilities. Tesla's Optimus robot and Boston Dynamics' Atlas focus on physical agility and mobility, but HELIX prioritizes the ability to generalize tasks and coordinate seamlessly. This approach makes HELIX suitable for a broader range of applications, from industrial automation to household assistance, giving it a unique edge in the robotics market. Despite its impressive capabilities, HELIX faces several challenges that must be addressed to unlock its full potential. Key areas for improvement include: Future development efforts are likely to focus on refining these aspects and expanding HELIX's capabilities. Fleet-based data collection and shared learning systems could accelerate progress, allowing robots to learn from each other's experiences. As competition in robotics intensifies, Figure AI will need to maintain transparency and demonstrate HELIX's practical value to secure widespread adoption. HELIX is described as commercially ready, with successful trials already conducted in industrial settings. Its ability to operate on low-power GPUs and its scalable design make it an attractive option for businesses seeking to automate complex tasks. By using fleet learning systems, HELIX can continuously improve, ensuring long-term value for its users. 
As industries increasingly adopt automation, HELIX's combination of advanced AI capabilities and practical applications positions it as a compelling solution for a wide range of challenges. Its adaptability and collaborative features make it a versatile tool for both industrial and domestic use, provided ongoing development addresses its current limitations.

[5]

Helix: The hive-minded, fully autonomous VLA humanoid robot

Having never seen these kitchen items before, Helix-powered Figure 02 robots were able to put away random groceries by simply asking them "Can you put these away?" Only weeks after Figure.ai announced ending its collaboration deal with OpenAI, the Silicon Valley startup has announced Helix - a commercial-ready, AI "hive-mind" humanoid robot that can do almost anything you tell it to. Figure has made headlines in the past with its Figure 01 humanoid robot. The company is now on version 02 of its premiere robot, however, it's gotten more than just a few design changes: it's gotten an entirely new AI brain called Helix VLA. It's not just any ordinary AI either. Helix is the very first of its kind to be put into a humanoid robot. It's a generalist Vision-Language-Action model. The keyword being "generalist." It can see the world around it, understand natural language, interact with the real world, and it can learn anything. Unlike most AI models that can require 1,000s of hours of training or hours of PhD-level manual programming for a single new behavior, Helix can combine its ability to understand semantic knowledge with its vision language model (VLM) and translate that into actions in meat-space. "Pick up the cassette tape from the pile of stuff over there." What if Helix had never actually seen a cassette tape with its own eyes before (granted, they are pretty rare these days)? Combining the general knowledge that large language models (LLM) like ChatGPT have with Figure's own VLM, Figure can identify and pick out the cassette. It's unknown whether it'll appreciate Michael Jackson's Thriller as much as we did though. It gets better. Helix has the ability to span two robots simultaneously and work collaboratively with both. And I don't mean simply two bots digging through a pile of stuff to find Michael Bolton's greatest hits. 
Each robot has two GPUs built inside to handle high-level latent planning at 7-9 Hz (System 2) and low-level control at 200 Hz (System 1), meaning System 2 can really think about something while System 1 acts on whatever plan S2 has already produced. Running at 200 Hz means S1 can take quick physical action, since it doesn't have to plan what it's doing; S2 has already planned it. Running at 7-9 Hz means S2 updates its plan 7 to 9 times per second, which is no slouch, but it leaves S2 enough time to really deep-think. After all, the human expressions have always been "two heads are better than one," and "let's get another set of eyes on it," etc. Except, Helix is a single AI brain controlling two bots simultaneously. Figure and OpenAI had been collaborating for about a year before Figure.ai founder, Brett Adcock, decided to pull the plug with a post on X: "Figure made a major breakthrough on fully end-to-end robot AI, built entirely in-house. We're excited to show you in the next 30 days something no one has ever seen on a humanoid." It's been 16 days since his February 4th post ... over-achiever? Over-achiever indeed. This doesn't feel like just another small step in robotics. This feels more like a giant leap. AI now has a body and can do stuff in the real world. Figure 01 robots have already demoed the ability to work simple, repeatable tasks in the BMW Manufacturing Co factory in Spartanburg, SC. The Figure 02 robots represent an entirely new generation of capability. And they're commercial-ready, right out of the box, batteries included. Unlike previous iterations, Helix uses a single set of neural network weights to learn; think "hive-mind." Once a single bot has learned a task, they all know how to do it. That bodes well for home integration of the bots. 
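To make the two-rate split concrete, here is a minimal Python sketch of a fast control loop that reuses the most recent plan from a slower planner. It is an illustration only, not Figure's implementation: the 200 Hz figure comes from the article, the 8 Hz planner rate is an assumed midpoint of the quoted 7-9 Hz, and `Latent`, `system2_plan`, `system1_act`, and `simulate` are hypothetical names. The real system reportedly runs the two systems on separate GPUs; this sketch steps through simulated ticks in a single loop instead.

```python
from __future__ import annotations

from dataclasses import dataclass

S1_HZ = 200  # low-level control rate quoted in the article
S2_HZ = 8    # ASSUMPTION: midpoint of the quoted 7-9 Hz planning rate


@dataclass
class Latent:
    """Hypothetical stand-in for the plan System 2 hands to System 1."""
    version: int


def system2_plan(version: int) -> Latent:
    # Placeholder for the slow vision-language planner.
    return Latent(version)


def system1_act(latent: Latent, tick: int) -> tuple[int, int]:
    # Placeholder for the fast visuomotor policy.
    # Returns (control tick, version of the plan it acted on).
    return (tick, latent.version)


def simulate(seconds: float = 1.0) -> list[tuple[int, int]]:
    ticks_per_plan = S1_HZ // S2_HZ      # 25 control ticks per plan update
    total_ticks = int(seconds * S1_HZ)
    latent = system2_plan(0)             # initial plan before control starts
    log = []
    for tick in range(total_ticks):
        # Refresh the plan only on the slower schedule.
        if tick > 0 and tick % ticks_per_plan == 0:
            latent = system2_plan(tick // ticks_per_plan)
        # Every tick, the fast loop acts on the latest available plan.
        log.append(system1_act(latent, tick))
    return log
```

Under these assumed rates, one simulated second yields 200 control steps driven by 8 distinct plans, so each plan is reused for 25 consecutive control ticks.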
When you think about it, compared to a well-organized and controlled factory setting, a household is actually quite chaotic and complicated. Dirty laundry on the floor next to the hamper (you know who you are), your kids' foot-murdering Legos strewn about, cleaning supplies under the kitchen sink, fine China in the curio cabinet (what's that!?). The list goes on. Figure reckons that the 02 can pick up nearly any small household object even if it's never seen it before. The Figure 02 robot has 35 degrees of freedom, including human-like wrists, hands, and fingers. Pairing its generalist knowledge with its vision allows it to even understand abstract concepts. "Pick up the desert item," in a demo, led Figure to pick up a toy cactus that it had never seen before from a pile of random objects on a table in front of it. This is all absolutely jaw-dropping stuff. The Figure 02 humanoid robot is the closest thing to I,Robot we've seen to date. This marks the beginning of something far more than just "smart machines." Helix bridges a gap between something we control - or at least try to control - on our screens to real-world autonomy and real-world physical actions ... and real-world consequences. It's equally terrifying as it is mesmerizing. Privacy? Not anymore. I can't help but wonder ... who controls all this data? Engineers at Figure? Is it hackable (does a bear sleep in the woods?) and is some teenager living in mom's basement going to start sending ransomware out to every owner? Are trade secrets going to be revealed from factory worker-bots? Corporate sabotage is a real thing, and I really want to know what the supposed 23 flavors found in Dr Pepper are ... The whole hive-mind concept means that on a server somewhere, there is a complete, highly detailed walk-through of your house. Figure knows your entire family, what's in your sock drawer and your nightstand. It knows the exact dollar amount of cash stuffed in your mattress. 
And if you're not careful, it might even know what you look like in your birthday suit.

[6]

Figure AI Is Supercharging Humanoid Robots -- Here's How It Works - Decrypt

Figure AI finally revealed on Thursday the "major breakthrough" that led the buzzy robotics startup to break ties with one of its investors, OpenAI: A novel dual-system AI architecture that allows robots to interpret natural language commands and manipulate objects they've never seen before -- without needing specific pre-training or programing for each one. Unlike conventional robots that require extensive programming or demonstrations for each new task, Helix combines a high-level reasoning system with real-time motor control. Its two systems effectively bridge the gap between semantic understanding (knowing what objects are) and action or motor control (knowing how to manipulate those objects). This will make it possible for robots to become more capable over time without having to update their systems or train on new data. To demonstrate how it works, the company released a video showing two Figure robots working together to put away groceries, with one robot handing items to another that places them in drawers and refrigerators. Figure claimed that neither robot knew about the items they were dealing with, yet they were capable of identifying which ones should go in a refrigerator and which ones are supposed to be stored dry. "Helix can generalize to any household item," Adcock tweeted. "Like a human, Helix understands speech, reasons through problems, and can grasp any object -- all without needing training or code." To achieve this generalization capability, the Sunnyvale, California-based startup also developed what it called a Vision-Language-Action (VLA) model that unifies perception, language understanding, and learned control, which is what made its models capable of generalizing. This model, Figure claims, marks several firsts in robotics. It outputs continuous control of an entire humanoid upper body at 200Hz, including individual finger movements, wrist positions, torso orientation, and head direction. 
It also lets two robots collaborate on tasks with objects they've never seen before. The breakthrough in Helix comes from its dual-system architecture that mirrors human cognition: a 7-billion parameter "System 2" vision-language model (VLM) that handles high-level understanding at 7-9Hz (updating 7 to 9 times per second, thinking slowly about structured and complex tasks or movements), and an 80-million parameter "System 1" visuomotor policy that translates those instructions into precise physical movements at 200Hz (updating 200 times per second) for quick thinking. Unlike previous approaches, Helix uses a single set of neural network weights for all behaviors without task-specific fine-tuning. One of the systems processes speech and visual data to enable complex decision-making, while the other translates these instructions into precise motor actions for real-time responsiveness. "We've been working on this project for over a year, aiming to solve general robotics," Adcock tweeted. "Coding your way out of this won't work; we simply need a step-change in capabilities to scale to a billion-unit robot level." Figure says all of this opens the door to a new scaling law in robotics, one that doesn't depend on coding and instead relies on a collective effort that makes models more capable without any prior training on specific tasks. Figure trained Helix on approximately 500 hours of teleoperated robot behaviors, then used an auto-labeling process to generate natural language instructions for each demonstration. The entire system runs on embedded GPUs inside the robots, making it immediately ready for commercial use. Figure AI said that it has already secured deals with BMW Manufacturing and an unnamed major U.S. client. The company believes these partnerships create "a path to 100,000 robots over the next four years," Adcock said. 
The humanoid robotics company secured $675 million in Series B funding earlier this year, from investors including OpenAI, Microsoft, NVIDIA and Jeff Bezos, at a $2.6 billion valuation. It's reportedly in talks to raise another $1.5 billion, which would value the company at $39.5 billion.

[7]

Robot Startup Figure Reveals an AI Breakthrough Called Helix

In a blog post, Figure wrote that Helix's launch marks a major step in bringing humanoid robots into "everyday home environments." Unlike previous versions of its models, Figure says that Helix doesn't need to be fine-tuned or trained on hundreds of demonstrations in order to perform specific tasks. Instead, a new design allows the robots to interact with objects that they've never encountered before. The company says that Figure robots equipped with Helix "can pick up virtually any small household object." In a video example of Helix's capabilities, a man asks two Figure-made robots, both equipped with the Helix AI model, to put away a bag of groceries. After looking around, the robots slowly opened up a nearby fridge, put most of the food away, and placed an apple in a serving bowl. Up until recently, Figure had been in a partnership with OpenAI in which the two firms collaborated to customize OpenAI's models so they could be used by Figure's robots. But on February 4, Figure founder Brett Adcock announced on X that he had decided to pull his company out of the agreement. He said that Figure had "made a major breakthrough on fully end-to-end robot AI, built entirely in-house," a clear nod to Helix's imminent reveal. Adcock said that the company has been working on Helix for over a year.

[8]

Figure Cracks AI for Humanoids Before OpenAI Can

This model marks progress in robotics, enabling robots to understand and react to instructions in real time, handle unforeseen objects, and collaborate. "We've been working on this project for over a year, aiming to solve general robotics. Like a human, Helix understands speech, reasons through problems, and can grasp any object - all without needing training or code," said Brett Adcock, founder at Figure AI. This development comes after Adcock announced "the decision to leave our Collaboration Agreement with OpenAI" in his X post on February 5th. OpenAI has been an investor in the company before. In the same post, he had also hinted at this Helix development saying, "We're excited to show you in the next 30 days something no one has ever seen on a humanoid." In another recent post, Adcock also said this is the breakthrough year for AI Robotics we've been waiting for. With Helix, he mentioned that 2025 will be a pivotal year as the company starts production, ships more robots, and tackles home robotics. In the video posted by the company, one Helix neural network is running on 2 Figure robots at once. The company said, "Our robots equipped with Helix can now pick up virtually any household object without any code or prior training." Both the robots can achieve coordination through natural language prompts like "Hand the bag of cookies to the robot on your right" or "Receive the bag of cookies from the robot on your left and place it in the open drawer." Helix is a "System 1, System 2" VLA model for high-rate, dexterous control of the entire humanoid upper body. It is the first VLA model to control a humanoid upper body, facilitate multi-robot collaboration, and pick up any small household object. The model uses one set of neural network weights to learn behaviours without task-specific fine-tuning. It runs on low-power GPUs, making it commercially viable. 
According to Figure AI in their technical paper, the current state of robotics requires "either hours of PhD-level expert manual programming or thousands of demonstrations" to teach robots new behaviours. "Helix matches the speed of specialised single-task behavioural cloning policies while generalising zero-shot to thousands of novel test objects," said the company. The company also notes that Helix achieves "strong performance across diverse tasks with a single unified model." The model was trained using approximately 500 hours of teleoperated behaviours. During training, the VLM processes video clips from the onboard robot cameras, prompted with: "What instruction would you have given the robot to get the action seen in this video?" Figure AI is also looking forward to scaling Helix and inviting people to join their team. As for OpenAI, according to its career page, the startup is hiring for roles in mechanical engineering, robotics systems integration, and program management. The goal is to "integrate cutting-edge hardware and software to explore a broad range of robotic form factors". Last year, the company hired Caitlin Kalinowski to lead its robotics and consumer hardware divisions. Previously at Meta, she oversaw the development of Orion augmented reality (AR) glasses. OpenAI has also invested in robotics AI startup Physical Intelligence.

[9]

Figure introduces 'never-seen' humanoid brain that controls full robot body

In a significant move in the AI world, California-based Figure has revealed Helix, a generalist Vision-Language-Action (VLA) model that unifies perception, language understanding, and learned control to overcome multiple longstanding challenges in robotics. Brett Adcock, founder of Figure, said that Helix is the most significant AI update in the company's history. "Helix thinks like a human... and to bring robots into homes, we need a step change in capabilities. Helix can generalize to virtually any household item," Adcock said in a social media post.

Figure AI unveils Helix, an advanced Vision-Language-Action model that enables humanoid robots to perform complex tasks, understand natural language, and collaborate effectively, marking a significant leap in robotics technology.

Figure AI Introduces Groundbreaking Helix Model

Figure AI, a Silicon Valley startup, has unveiled Helix, a revolutionary Vision-Language-Action (VLA) model that marks a significant advancement in humanoid robotics [1]. This innovative system integrates vision, language comprehension, and action execution into a single neural network, enabling robots to perform complex tasks with minimal programming or fine-tuning [4].

Helix Capabilities and Features

The Helix model demonstrates remarkable adaptability, allowing Figure 02 robots to manipulate unfamiliar objects and respond to natural language commands without prior task-specific training [2]. Key features include:

- Real-time decision-making based on environmental cues and natural language instructions

- Ability to pick up virtually any small household object, even if never encountered before

- Seamless collaboration between multiple robots, including object handoffs

- Operation on low-power GPUs, ensuring energy efficiency and cost-effectiveness [4]

Impressive Demonstrations

Figure AI has showcased Helix's capabilities through various demonstrations:

- Two Figure 02 robots working together in a simulated kitchen scene, completing novel tasks [1]

- Robots understanding and executing commands to put away groceries, including items they had never seen before [2]

- Demonstrating the ability to interpret abstract concepts and perform tasks accordingly [5]

Market Implications and Competition

The introduction of Helix positions Figure AI as a strong contender in the rapidly evolving humanoid robotics market. While companies like Tesla, Boston Dynamics, and Google DeepMind have made significant strides in robotics, Helix distinguishes itself by emphasizing cognitive and collaborative capabilities [3].

Figure AI is reportedly in talks to raise $1.5 billion, potentially valuing the startup at $39.5 billion [3]. This funding could accelerate the development and deployment of Helix-powered robots in both residential and commercial settings.

Potential Applications and Challenges

Helix's versatility opens up numerous potential applications:

- Industrial settings: Streamlining operations in manufacturing and logistics

- Household environments: Assisting with cleaning, organizing, and caregiving tasks

- Collaborative workspaces: Enhancing human-robot interaction and teamwork

However, challenges remain, including:

- Improving speed and efficiency of object manipulation

- Addressing privacy concerns related to data collection and storage

- Ensuring robust security measures to prevent potential misuse or hacking [5]

As Figure AI continues to refine Helix and address these challenges, the technology could revolutionize various industries and bring us closer to truly versatile and intelligent humanoid robots.

Summarized by Navi
