Google DeepMind Unveils Cloud-Free AI Model for Autonomous Robots

9 Sources

[1]

Google's new robotics AI can run without the cloud and still tie your shoes

We sometimes call chatbots like Gemini and ChatGPT "robots," but generative AI is also playing a growing role in real, physical robots. After announcing Gemini Robotics earlier this year, Google DeepMind has now revealed a new on-device VLA (vision language action) model to control robots. Unlike the previous release, there's no cloud component, allowing robots to operate with full autonomy. Carolina Parada, head of robotics at Google DeepMind, says this approach to AI robotics could make robots more reliable in challenging situations. This is also the first version of Google's robotics model that developers can tune for their specific uses.

Robotics is a unique problem for AI because, not only does the robot exist in the physical world, but it also changes its environment. Whether you're having it move blocks around or tie your shoes, it's hard to predict every eventuality a robot might encounter. The traditional approach of training a robot on action with reinforcement was very slow, but generative AI allows for much greater generalization. "It's drawing from Gemini's multimodal world understanding in order to do a completely new task," explains Carolina Parada. "What that enables is in that same way Gemini can produce text, write poetry, just summarize an article, you can also write code, and you can also generate images. It also can generate robot actions."

General robots, no cloud needed

In the previous Gemini Robotics release (which is still the "best" version of Google's robotics tech), the platforms ran a hybrid system with a small model on the robot and a larger one running in the cloud. You've probably watched chatbots "think" for measurable seconds as they generate an output, but robots need to react quickly. If you tell the robot to pick up and move an object, you don't want it to pause while each step is generated. The local model allows quick adaptation, while the server-based model can help with complex reasoning tasks.
Google DeepMind is now unleashing the local model as a standalone VLA, and it's surprisingly robust. The new Gemini Robotics On-Device model is only a little less accurate than the hybrid version. According to Parada, many tasks will work out of the box. "When we play with the robots, we see that they're surprisingly capable of understanding a new situation," Parada tells Ars. By putting this model out with a full SDK, the team hopes developers will give Gemini-powered robots new tasks and show them new environments, which could reveal actions that don't work with the model's stock tuning. With the SDK, robotics researchers will be able to adapt the VLA to new tasks with as little as 50 to 100 demonstrations. A "demonstration" in AI robotics is a bit different than in other areas of AI research. Parada explains that demonstrations typically involve tele-operating the robot -- controlling the machinery manually to complete a task actually tunes the model to handle that task autonomously. While synthetic data is an element of Google's training, it's not a substitute for the real thing. "We still find that in the most complex, dexterous behaviors, we need real data," says Parada. "But there is quite a lot that you can do with simulation." But those highly complex behaviors may be beyond the capabilities of the on-device VLA. It should have no problem with straightforward actions like tying a shoe (a traditionally difficult task for AI robots) or folding a shirt. If, however, you wanted a robot to make you a sandwich, it would probably need a more powerful model to go through the multi-step reasoning required to get the bread in the right place. The team sees Gemini Robotics On-Device as ideal for environments where connectivity to the cloud is spotty or non-existent. Processing the robot's visual data locally is also better for privacy, for example, in a health care environment. 
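The idea of a tele-operated demonstration can be made concrete with a minimal sketch. It assumes a demonstration is stored as an ordered list of observation and action pairs captured while an operator drives the robot. The `Step` and `Demonstration` types and the `enough_demonstrations` helper are hypothetical illustrations and are not taken from the Gemini Robotics SDK; the default threshold of 50 simply mirrors the lower bound quoted above.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class Step:
    """One recorded instant: what the robot sensed and what the operator commanded."""
    observation: Sequence[float]  # hypothetical flattened sensor reading
    action: Sequence[float]       # hypothetical joint targets set by the operator


@dataclass
class Demonstration:
    """One tele-operated attempt at a task, stored as an ordered list of steps."""
    task: str
    steps: List[Step] = field(default_factory=list)

    def record(self, observation: Sequence[float], action: Sequence[float]) -> None:
        """Append one observation/action pair captured during tele-operation."""
        self.steps.append(Step(observation, action))


def enough_demonstrations(demos: List[Demonstration], task: str, minimum: int = 50) -> bool:
    """Return True if at least `minimum` non-empty demonstrations exist for `task`."""
    usable = [d for d in demos if d.task == task and d.steps]
    return len(usable) >= minimum
```

A fine-tuning job built on such a structure might refuse to start until `enough_demonstrations` returns true for the target task, though how the real SDK consumes demonstrations is not described in the source.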
Building safe robots

Safety is always a concern with AI systems, be that a chatbot that provides dangerous information or a robot that goes Terminator. We've all seen generative AI chatbots and image generators hallucinate falsehoods in their outputs, and the generative systems powering Gemini Robotics are no different -- the model doesn't get it right every time, but giving the model a physical embodiment with cold, unfeeling metal graspers makes the issue a little more thorny.

To ensure robots behave safely, Gemini Robotics uses a multi-layered approach. "With the full Gemini Robotics, you are connecting to a model that is reasoning about what is safe to do, period," says Parada. "And then you have it talk to a VLA that actually produces options, and then that VLA calls a low-level controller, which typically has safety critical components, like how much force you can move or how fast you can move this arm."

Importantly, the new on-device model is just a VLA, so developers will be on their own to build in safety. Google suggests they replicate what the Gemini team has done, though. It's recommended that developers in the early tester program connect the system to the standard Gemini Live API, which includes a safety layer. They should also implement a low-level controller for critical safety checks. Anyone interested in testing Gemini Robotics On-Device should apply for access to Google's trusted tester program.

Google's Carolina Parada says there have been a lot of robotics breakthroughs in the past three years, and this is just the beginning -- the current release of Gemini Robotics is still based on Gemini 2.0. Parada notes that the Gemini Robotics team typically trails behind Gemini development by one version, and Gemini 2.5 has been cited as a massive improvement in chatbot functionality. Maybe the same will be true of robots.
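The lowest layer Parada describes, a controller that caps force and speed no matter what the VLA proposes, can be sketched in a few lines. This is an illustration under stated assumptions only: the `SafetyLimits` and `ArmCommand` types, the numeric limits, and the `clamp_command` function are all hypothetical and do not come from Google's SDK or from any real robot controller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical hard limits enforced below the model."""
    max_force_n: float = 20.0    # assumed value, in newtons
    max_speed_m_s: float = 0.5   # assumed value, in metres per second


@dataclass(frozen=True)
class ArmCommand:
    """Hypothetical action proposed by a VLA for one arm."""
    force_n: float
    speed_m_s: float


def clamp(value: float, limit: float) -> float:
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def clamp_command(cmd: ArmCommand, limits: SafetyLimits) -> ArmCommand:
    """Return a command that never exceeds the configured limits."""
    return ArmCommand(
        force_n=clamp(cmd.force_n, limits.max_force_n),
        speed_m_s=clamp(cmd.speed_m_s, limits.max_speed_m_s),
    )


if __name__ == "__main__":
    # The model asks for more force than allowed; the controller caps it.
    proposed = ArmCommand(force_n=35.0, speed_m_s=0.2)
    print(clamp_command(proposed, SafetyLimits()))
```

Keeping the check outside the model means it still applies when the model is wrong, which is the reason for placing safety-critical limits in the controller rather than in the VLA.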

[2]

Google rolls out new Gemini model that can run on robots locally

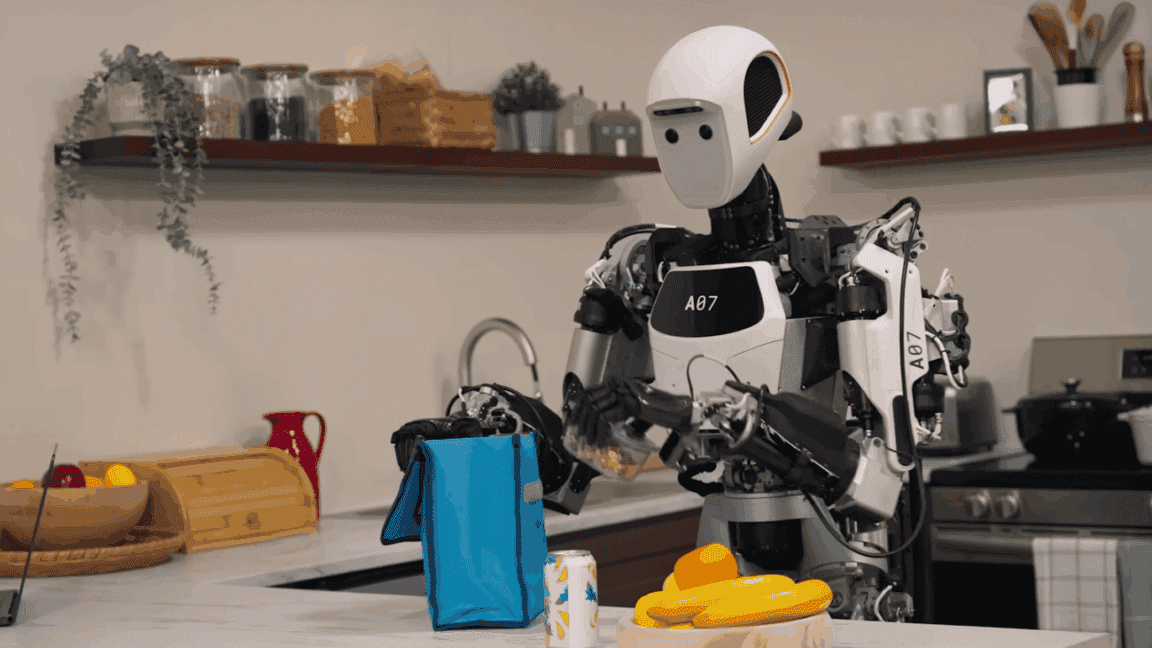

Google DeepMind on Tuesday released a new language model called Gemini Robotics On-Device that can run tasks locally on robots without requiring an internet connection. Building on the company's previous Gemini Robotics model that was released in March, Gemini Robotics On-Device can control a robot's movements. Developers can control and fine-tune the model to suit various needs using natural language prompts. In benchmarks, Google claims the model performs at a level close to the cloud-based Gemini Robotics model. The company says it outperforms other on-device models in general benchmarks, though it didn't name those models. In a demo, the company showed robots running this local model doing things like unzipping bags and folding clothes. Google says that while the model was trained for ALOHA robots, it later adapted it to work on a bi-arm Franka FR3 robot and the Apollo humanoid robot by Apptronik. Google claims the bi-arm Franka FR3 was successful in tackling scenarios and objects it hadn't "seen" before, like doing assembly on an industrial belt. Google DeepMind is also releasing a Gemini Robotics SDK. The company said developers can show robots 50 to 100 demonstrations of tasks to train them on new tasks using these models on the MuJoCo physics simulator. Other AI model developers are also dipping their toes in robotics. Nvidia is building a platform to create foundation models for humanoids; Hugging Face is not only developing open models and datasets for robotics, it is actually working on robots too; and Mirae Asset-backed Korean startup RLWRLD is working on creating foundational models for robots.

[3]

Google DeepMind's optimized AI model runs directly on robots

Emma Roth is a news writer who covers the streaming wars, consumer tech, crypto, social media, and much more. Previously, she was a writer and editor at MUO.

Google DeepMind is rolling out an on-device version of its Gemini Robotics AI model that allows it to operate without an internet connection. The vision-language-action model (VLA) comes with dexterous capabilities similar to the one released in March, but Google says "it's small and efficient enough to run directly on a robot." The flagship Gemini Robotics model is designed to help robots complete a wide range of physical tasks, even if it hasn't been specifically trained on them. It allows robots to generalize to new situations and understand and respond to commands, as well as perform tasks that require fine motor skills.

Carolina Parada, head of robotics at Google DeepMind, tells The Verge that the original Gemini Robotics model uses a hybrid approach, allowing it to operate on-device and on the cloud. But with this device-only model, users can access offline features that are almost as good as those of the flagship. The on-device model can perform several different tasks out of the box, and it can adapt to new situations "with as few as 50 to 100 demonstrations," according to Parada. Google only trained the model on its ALOHA robot, but the company was able to adapt it to different robot types, such as the humanoid Apollo robot from Apptronik and the bi-arm Franka FR3 robot.

"The Gemini Robotics hybrid model is still more powerful, but we're actually quite surprised at how strong this on-device model is," Parada says. "I would think about it as a starter model or as a model for applications that just have poor connectivity." It could also be useful for companies with strict security requirements. Alongside this launch, Google is releasing a software development kit (SDK) for the on-device model that developers can use to evaluate and fine-tune it -- a first for one of Google DeepMind's VLAs.
The on-device Gemini Robotics model and its SDK will be available to a group of trusted testers while Google continues to work toward minimizing safety risks.

[4]

New Gemini AI lets humanoid robots think and act without internet

Humanoid robot prepares to interact with objects using Google's offline Gemini AI.

Google DeepMind has launched a powerful on-device version of its Gemini Robotics AI model. The new system can control physical robots without relying on cloud connectivity. It marks a major step in deploying fast, adaptive, and general-purpose robotics in real-world environments. The model, known as 'Gemini Robotics On-Device,' brings Gemini 2.0's multimodal reasoning into robots with no internet required. It's designed for latency-sensitive use cases and environments with poor or no connectivity.

[5]

Google's new Gemini AI model means your future robot butler will still work even without Wi‑Fi

For years, we've been promised robot butlers capable of folding your laundry, chopping your onions, and essaying a witty bon mot like the ones in our favorite period dramas. One thing those promises never mention is that accidentally unplugging your router might shut down that mechanical Jeeves. Google claims its newest Gemini AI model solves that problem, though. Google DeepMind has unveiled its new Gemini Robotics On‑Device AI model as a way of keeping robots safe from downed power lines and working in rural areas. Although it's not as powerful as the standard cloud-based Gemini models, its independence means it could be a lot more reliable and useful.

The breakthrough is that the AI, a VLA (vision, language, action) model, can look around, understand what it's seeing, interpret natural language instructions, and then act on them without needing to look up any words or tasks online. In testing, robots with the model installed completed tasks on unfamiliar objects and in new environments without Googling it. That might not seem like a huge deal, but the world is full of places with limited internet or no access at all. Robots working in rural hospitals, disaster zones, and underground tunnels can't afford to lag. Now, not only is the model fast, but Google claims it has an amazing ability to learn and adapt. The developers claim they can teach the AI new tricks with as few as 50 demonstrations, which is practically instantaneous compared to some of the programs currently used for robotic training.

Offline robot AI

That ability to learn and adapt is also evident in the robot's flexible physical design. The model was first designed to run Google's own dual-arm ALOHA devices, but has since proven capable of working when installed in far more complex machines, like the Apollo humanoid robot from Apptronik. The idea of machines that learn quickly and act independently obviously raises some red flags. But Google insists it's being cautious.
The model comes with built-in safeguards, both in its physical design and in the tasks it will carry out. You can't run out and buy a robot with this model installed yet, but a future involving a robot with this model or one of its descendants is easy to picture. Let's say you buy a robot assistant in five years. You want it to do normal things: fold towels, prep meals, keep your toddler from launching Lego bricks down the stairs. But your other child wanted to see how that box with the blinking lights worked, and suddenly those lights stopped blinking. Luckily, the model installed in your robot can still see and understand what those Lego bricks are and that you are asking it to pick them up and put them back in their bucket.

That's the real promise of Gemini Robotics On‑Device. It's not just about bringing AI into the physical world. It's about making it stick around when the lights flicker. Your future robot butler won't be a cloud-tethered liability. The robots are coming, and they are truly wireless. Hopefully, that's still a good thing.

[6]

Google Releases New Gemini Robotics Model That Functions On-Device

Google says the model can learn new tasks with 50-100 demonstrations

Google DeepMind released a new Gemini Robotics artificial intelligence (AI) model on Tuesday that can run entirely on a local device. Dubbed Gemini Robotics On-Device, it is a vision-language-action (VLA) model that can make robots perform a wide range of tasks in real-world environments. The Mountain View-based tech giant said that since the AI model functions without the need to be connected with a data network, it is more useful for applications that are latency sensitive. Currently, the model is available to those who have signed up for its trusted tester programme.

In a blog post, Carolina Parada, a Senior Director and Head of Robotics at Google DeepMind, announced the release of Gemini Robotics On-Device. The new VLA model can be accessed via a Gemini Robotics software development kit (SDK) after signing up for its tester programme. The model can also be tested on the company's MuJoCo physics simulator. Since it is a proprietary model, details about its architecture and training methods are not known. However, Google has highlighted its capabilities.

The VLA model is designed for bi-arm robots and has minimal computational requirements. Despite that, the model allows for experimentation, and the company claims that it can adapt to new tasks with just 50 to 100 demonstrations. Gemini Robotics On-Device also adheres to natural language instructions and can perform complex tasks such as unzipping bags or folding clothes. Based on internal testing, the tech giant claims that the AI model "exhibits strong generalisation performance while running entirely locally." Additionally, it is also said to outperform other on-device models on "more challenging out-of-distribution tasks and complex multi-step instructions."

Notably, Google highlighted that while the AI model was trained for ALOHA robots, researchers were also able to adapt it to Franka FR3 and Apptronik's Apollo humanoid robots.
All of these are bi-arm robots, which is the only configuration that is compatible with Gemini Robotics On-Device. The AI model was able to adhere to instructions and perform general tasks on all the different robots. Additionally, it could also handle previously unseen objects and scenarios, and even execute industrial belt assembly tasks that require a high level of precision and dexterity, the company claimed.

[7]

Google Releases Gemini Robotics On-Device to Locally Handle Robots

Google is offering access to the On-Device model via the Gemini Robotics SDK.

Following the March release of Gemini Robotics, Google DeepMind's state-of-the-art vision-language-action (VLA) model, the company has now introduced Gemini Robotics On-Device. As the name suggests, it is also a VLA model, but it runs locally on robotic devices and does not need an internet connection to reach a model in the cloud. The Gemini Robotics On-Device model has been efficiently trained and shows "strong general-purpose dexterity and task generalization." This AI model can be used for bi-arm robots and can be fine-tuned for new tasks. Since it runs locally, the low-latency inference can help in rapid experimentation involving dexterous manipulation. It can achieve highly dexterous tasks like unzipping bags or folding clothes.

The On-Device model showcases strong visual, semantic, and behavioral generalization across a wide range of tasks. In fact, in Google's own Generalization Benchmark, the Gemini Robotics On-Device model performs nearly on par with its cloud model, Gemini Robotics. Moreover, whether in following instructions or adapting quickly to new tasks, the On-Device model achieves comparable performance. This shows that Google DeepMind has trained both a frontier AI model and a highly optimized local model for robotics. If you are interested in testing the model, you can sign up for Google's trusted tester program and access the Gemini Robotics SDK.

[8]

Google Unveils Gemini Robotics: The Future of On-Device AI for Robots

Inside Google's Gemini Robotics: A New Era of On-Device AI and Natural Language Robot Control

Google DeepMind has introduced an upgrade to Gemini Robotics with a new AI model called Gemini Robotics On-Device. This model runs directly on robots, without an internet connection. The new model is an extension of the earlier Gemini Robotics model, launched in March 2025. Using this model, robots can be instructed to perform tasks through natural language commands. The model's main purpose is to help robots carry out tasks faster and operate more smoothly.

[9]

Google's new Gemini AI model can run robots locally without internet, here's how

Prioritizes semantic and physical safety, guided by Google's internal standards.

Google has formally unveiled a new iteration of Gemini AI that runs entirely on robotic hardware and doesn't require an internet connection. The model, called Gemini Robotics On-Device, provides task generalisation and fine-tuning with minimal data, and it gives bi-arm robots local, low-latency control. In contrast to cloud-dependent models, Gemini Robotics On-Device handles vision, language, and action on the device itself. This might be helpful in places like manufacturing floors or remote settings where latency needs to be kept to a minimum or connectivity is restricted. Google claims that the model can follow natural language instructions and learn from just 50 to 100 demonstrations to accomplish tasks like zipping bags, folding clothes, and pouring liquids.

Specifically designed as a lightweight extension of the Gemini 2.0 architecture, the on-device version preserves multi-step reasoning and dexterous control while optimising for smaller compute footprints. Having been successfully tested on robots other than its initial training setup, such as the Apptronik Apollo humanoid and the bi-arm Franka FR3, it also facilitates rapid adaptation to new tasks or robotic forms.

Through its trusted tester program, Google is also providing a Gemini Robotics SDK to a select group of developers. Users can test the model in MuJoCo physics simulations and adjust it for particular tasks using this SDK. Access is still restricted, though, while the company assesses its effectiveness and safety in real-world environments. Google claims that with extra safety precautions in place, the development is consistent with its internal AI Principles. These consist of benchmarking for semantic safety and low-level controllers for physical safety.
Under the direction of the company's Responsibility & Safety Council, the system is being assessed using a new semantic safety benchmark. Google is indicating a move towards more autonomous and locally adaptable robotics systems with the on-device model, which could have repercussions for logistics, industrial automation, and other areas.

Google DeepMind releases Gemini Robotics On-Device, an AI model that allows robots to operate autonomously without cloud connectivity, marking a significant advancement in robotics and AI integration.

Google's Breakthrough in AI Robotics

Google DeepMind has unveiled a groundbreaking advancement in artificial intelligence for robotics with the release of Gemini Robotics On-Device, a new AI model that enables robots to operate autonomously without the need for cloud connectivity [1][2]. This development marks a significant step forward in the integration of AI and robotics, potentially revolutionizing the field and expanding the applications of robotic systems.

The Power of On-Device AI

The Gemini Robotics On-Device model is a vision-language-action (VLA) system that allows robots to perceive their environment, understand natural language commands, and execute actions without relying on internet connectivity [3]. This on-device capability addresses critical limitations of cloud-dependent systems, such as latency issues and the need for constant internet access.

Carolina Parada, head of robotics at Google DeepMind, explains that the new model performs almost as well as its cloud-based counterpart: "The Gemini Robotics hybrid model is still more powerful, but we're actually quite surprised at how strong this on-device model is" [3]. This level of performance makes the model suitable for a wide range of applications, particularly in environments with poor or no internet connectivity.

Versatility and Adaptability

One of the most impressive features of the Gemini Robotics On-Device model is its ability to generalize and adapt to new situations. Google claims that the model can be fine-tuned for specific tasks with as few as 50 to 100 demonstrations [2][3]. This rapid adaptability could significantly reduce the time and resources required to deploy robots in new environments or for novel tasks.

The model's versatility is further demonstrated by its successful adaptation to different robot types. Initially trained on Google's ALOHA robot, the system has been successfully implemented on other platforms, including the humanoid Apollo robot from Apptronik and the bi-arm Franka FR3 robot [1][3].

Developer Tools and Safety Considerations

Alongside the new model, Google is releasing a software development kit (SDK) that allows developers to evaluate and fine-tune the system for their specific needs [3]. This marks the first time Google DeepMind has made such tools available for one of its VLA models, potentially accelerating innovation in the robotics field.

Safety remains a paramount concern in AI robotics. Google DeepMind emphasizes a multi-layered approach to ensure safe robot behavior, including reasoning about safety, generating options through the VLA, and implementing low-level controllers for critical safety checks [1]. The company is initially making the on-device model and SDK available to a group of trusted testers while continuing to work on minimizing safety risks [3].

Implications for the Future of Robotics

The introduction of Gemini Robotics On-Device has far-reaching implications for the robotics industry. It could enable more reliable and responsive robots in challenging environments such as disaster zones, rural hospitals, or underground facilities [5]. The model's ability to operate offline also addresses privacy concerns, making it suitable for use in sensitive environments like healthcare settings [1].

As AI continues to advance, the line between virtual assistants and physical robots is blurring. Google's Carolina Parada notes, "What that enables is in that same way Gemini can produce text, write poetry, just summarize an article, you can also write code, and you can also generate images. It also can generate robot actions" [1]. This convergence of capabilities suggests a future where highly adaptable, intelligent robots could become commonplace in various aspects of our lives.

The release of Gemini Robotics On-Device represents a significant milestone in the evolution of AI-powered robotics. As developers begin to explore its capabilities and fine-tune it for specific applications, we may soon see a new generation of robots that are more autonomous, adaptable, and capable of operating in a wider range of environments than ever before.

Summarized by Navi
