Google DeepMind Unveils SIMA 2: AI Agent Powered by Gemini Approaches Human-Level Gaming Performance

9 Sources

[1]

Google's SIMA 2 agent uses Gemini to reason and act in virtual worlds | TechCrunch

Google DeepMind on Thursday shared a research preview of SIMA 2, the next generation of its generalist AI agent, which integrates the language and reasoning powers of Gemini, Google's large language model, to move beyond simply following instructions to understanding and interacting with its environment.

Like many of DeepMind's projects, including AlphaFold, the first version of SIMA was trained on hundreds of hours of video game data to learn how to play multiple 3D games like a human, even some games it wasn't trained on. SIMA 1, unveiled in March 2024, could follow basic instructions across a wide range of virtual environments, but it had only a 31% success rate for completing complex tasks, compared to 71% for humans.

"SIMA 2 is a step change and improvement in capabilities over SIMA 1," Joe Marino, senior research scientist at DeepMind, said in a press briefing. "It's a more general agent. It can complete complex tasks in previously unseen environments. And it's a self-improving agent. So it can actually self-improve based on its own experience, which is a step towards more general-purpose robots and AGI systems more generally."

SIMA 2 is powered by the Gemini 2.5 Flash-Lite model. AGI refers to artificial general intelligence, which DeepMind defines as a system capable of a wide range of intellectual tasks with the ability to learn new skills and generalize knowledge across different areas.

Working with so-called "embodied agents" is crucial to generalized intelligence, DeepMind's researchers say. Marino explained that an embodied agent interacts with a physical or virtual world via a body - observing inputs and taking actions much like a robot or human would - whereas a non-embodied agent might interact with your calendar, take notes, or execute code.

Jane Wang, a research scientist at DeepMind with a background in neuroscience, told TechCrunch that SIMA 2 goes far beyond gameplay. "We're asking it to actually understand what's happening, understand what the user is asking it to do, and then be able to respond in a common-sense way that's actually quite difficult," Wang said.

By integrating Gemini, SIMA 2 doubled its predecessor's performance, uniting Gemini's advanced language and reasoning abilities with the embodied skills developed through training.

Marino demoed SIMA 2 in No Man's Sky, where the agent described its surroundings - a rocky planet surface - and determined its next steps by recognizing and interacting with a distress beacon. SIMA 2 also uses Gemini to reason internally. In another game, when asked to walk to the house that's the color of a ripe tomato, the agent showed its thinking - ripe tomatoes are red, therefore I should go to the red house - then found and approached it. Being Gemini-powered also means SIMA 2 follows instructions based on emojis: "You instruct it 🪓🌲, and it'll go chop down a tree," Marino said. Marino also demonstrated how SIMA 2 can navigate newly generated photorealistic worlds produced by Genie, DeepMind's world model, correctly identifying and interacting with objects like benches, trees, and butterflies.

Gemini also enables self-improvement without much human data, Marino added. Where SIMA 1 was trained entirely on human gameplay, SIMA 2 uses it as a baseline to provide a strong initial model. When the team puts the agent into a new environment, it asks another Gemini model to create new tasks and a separate reward model to score the agent's attempts. Using these self-generated experiences as training data, the agent learns from its own mistakes and gradually performs better, essentially teaching itself new behaviors through trial and error as a human would, guided by AI-based feedback instead of humans.

DeepMind sees SIMA 2 as a step toward unlocking more general-purpose robots.
"If we think of what a system needs to do to perform tasks in the real world, like a robot, I think there are two components of it," Frederic Besse, senior staff research engineer at DeepMind, said during a press briefing. "First, there is a high-level understanding of the real world and what needs to be done, as well as some reasoning." If you ask a humanoid robot in your house to go check how many cans of beans you have in the cupboard, the system needs to understand all of the different concepts - what beans are, what a cupboard is - and navigate to that location. Besse says SIMA 2 touches more on that high-level behavior than it does on lower-level actions, which he refers to as controlling things like physical joints and wheels. The team declined to share a specific timeline for implementing SIMA 2 in physical robotics systems. Besse told TechCrunch that DeepMind's recently unveiled robotics foundation models - which can also reason about the physical world and create multi-step plans to complete a mission - were trained differently and separately from SIMA. While there's also no timeline for releasing more than a preview of SIMA 2, Wang told TechCrunch the goal is to show the world what DeepMind has been working on and see what kinds of collaborations and potential uses are possible.

[2]

Watch Google DeepMind's new AI agent learn to play video games

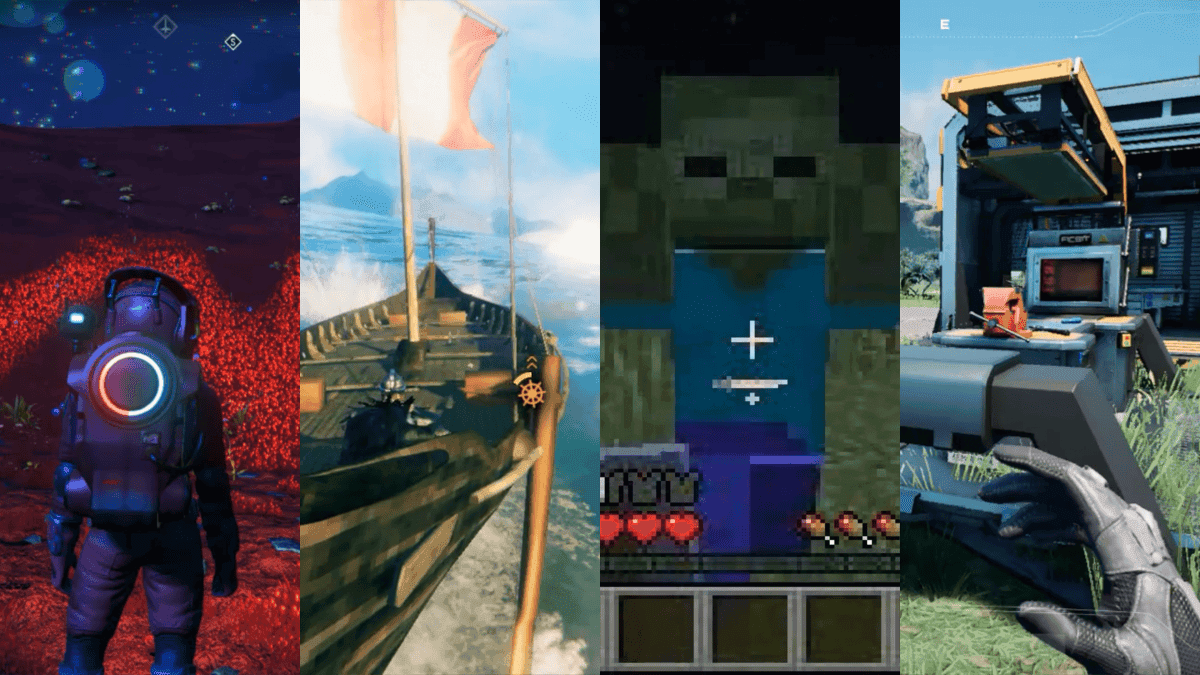

Google DeepMind's new AI agent learned how to play a bunch of video games -- including No Man's Sky, Valheim, and Goat Simulator 3 -- to become a viable "interactive gaming companion." The new agent tool, SIMA 2, builds on its earlier iteration, SIMA (Scalable Instructable Multiworld Agent), which DeepMind released in March 2024. It also incorporates Google's Gemini AI for the first time, meaning the agent can go beyond simply following instructions to "understand a user's high-level goal, perform complex reasoning in pursuit, and skillfully execute goal-oriented actions within games," even ones it hasn't seen before, according to a DeepMind blog post. It's currently being released to some academics and developers as a limited research preview. Despite SIMA 2's gaming prowess, creating a consumer-facing gaming helper isn't the broader goal here, members of the DeepMind team told The Verge during a Wednesday briefing. Jane Wang, a senior staff research scientist at DeepMind, called it "a really great training ground" for potentially transferring the skills to real-world environments one day. And, as usual, it all comes back to the ever-intensifying AGI race between Google, Meta, OpenAI, Anthropic, and others. "This is a significant step in the direction of Artificial General Intelligence (AGI), with important implications for the future of robotics and AI-embodiment in general," DeepMind's blog post states. Joe Marino, a research scientist at DeepMind, doubled down on that, saying that SIMA 2's ability to take actions in a virtual world and handle environments it has never seen before is a "fundamental" step toward AGI -- and potentially toward building a general-purpose robot down the line.

[3]

Google is training Gemini agents using 'Goat Simulator 3'

Google has developed an AI-powered agent that's playing video games, including Goat Simulator 3 -- yes, a goat-based video game. Google DeepMind created a Gemini-powered agent called SIMA 2 -- "scalable instructable multiworld agent" -- that's navigating the world of Goat Simulator 3, reported MIT Technology Review. The AI agent is called SIMA 2 because, well, there was an original SIMA. We covered the original project at Mashable. The agent was designed to essentially serve as a video game companion -- an AI-powered player that could accompany human players. (The ROG Xbox Ally X gaming handheld has a similar, Copilot-powered feature.) SIMA 2 is apparently an improved version of the original. "Games have been a driving force behind agent research for quite a while," Joe Marino, a researcher at Google DeepMind, said this week, via MIT Technology Review. The idea behind SIMA 2 is that the agent can learn and adapt in open-ended, complicated video games. A human player can also help direct the agent and improve its performance.

[4]

Google DeepMind's New AI Agent Learns, Adapts and Plays Games Like a Human - Decrypt

DeepMind planned a limited research preview for developers and academics.

Google DeepMind introduced SIMA 2 on Thursday -- a new AI agent that the company claims behaves like a "companion" inside virtual worlds. With the launch of SIMA 2, DeepMind aims to advance beyond simple on-screen actions and move toward AI that can plan, explain itself, and learn through experience. "This is a significant step in the direction of Artificial General Intelligence (AGI), with important implications for the future of robotics and AI-embodiment in general," the company said on its website.

The first version of SIMA (Scalable Instructable Multiworld Agent), released in March 2024, learned hundreds of basic skills by watching the screen and using virtual keyboard and mouse controls. The new version of SIMA, Google said, takes things a step further by letting the AI think for itself. "SIMA 2 is our most capable AI agent for virtual 3D worlds," Google DeepMind wrote on X. "Powered by Gemini, it goes beyond following basic instructions to think, understand, and take actions in interactive environments, meaning you can talk to it through text, voice, or even images."

By using the Gemini AI model, Google said, SIMA 2 can interpret high-level goals, talk through the steps it intends to take, and collaborate inside games with a level of reasoning the original system could not reach. DeepMind reported stronger generalization across virtual environments and said SIMA 2 completed longer, more complex tasks, including ones given as logic prompts, sketches drawn on the screen, and emojis. "As a result of this ability, SIMA 2's performance is significantly closer to that of a human player on a wide range of tasks," Google wrote, noting that SIMA 2 had a 65% task completion rate, compared with 31% for SIMA 1.
The system also interpreted instructions and acted inside entirely new 3D worlds generated by Genie 3, another DeepMind project, announced earlier this year, that creates interactive environments from a single image or text prompt. SIMA 2 oriented itself, understood goals, and took meaningful actions in worlds it had never encountered until moments before testing. "SIMA 2 is now far better at carrying out detailed instructions, even in worlds it's never seen before," Google wrote. "It can transfer learned concepts like 'mining' in one game and apply it to 'harvesting' in another -- connecting the dots between similar tasks."

After learning from human demonstrations, researchers said, the agent switched into self-directed play, using trial and error and Gemini-generated feedback to create new experience data. This included a training loop in which SIMA 2 generated tasks, attempted them, and then fed its own trajectory data back into the next version of the model.

While Google hailed SIMA 2 as a step forward for artificial intelligence, the research also identified gaps that still need to be addressed, including difficulty with very long, multi-step tasks, a limited memory window, and the visual-interpretation challenges common to 3D AI systems. Even so, DeepMind said the platform served as a testbed for skills that could eventually migrate into robotics and navigation. "Our SIMA 2 research offers a strong path towards applications in robotics and another step towards AGI in the real world," it said.

[5]

Google DeepMind Unveils SIMA 2, an AI Agent That Acts and Learns in 3D Environments | AIM

The company said it is releasing SIMA 2 as a limited research preview for a small group of academics and game developers.

Google DeepMind has unveiled SIMA 2, the latest version of its Scalable Instructable Multiworld Agent that can reason, collaborate with users, and learn autonomously inside 3D virtual environments. The researchers described the release as "a milestone in creating general and helpful AI agents."

SIMA 2 incorporates the Gemini model at its core, which allows the agent to interpret instructions, understand high-level goals, and describe its planned actions. "SIMA 2 can do more than just respond to instructions, it can think and reason about them," the company said. The earlier version, SIMA 1, had been trained to execute more than 600 basic skills across various commercial games. Training for SIMA 2 used both human demonstrations and labels generated by Gemini. This approach allows the agent to explain what it intends to do and how it plans to complete a task. According to the team, interactions now "feel less like giving commands and more like collaborating with a companion who can reason about the task at hand."

Testing showed improved generalisation, with SIMA 2 carrying out complex instructions and succeeding in games it had never encountered, including the Viking survival title ASKA and the research environment MineDojo. The agent could also apply concepts learned in one game -- such as mining -- to comparable actions in other environments. Researchers noted that SIMA 2 has closed much of the performance gap between AI and human players across evaluation tasks.

In another experiment, SIMA 2 was combined with Genie 3, a model that creates new 3D worlds from a single image or text prompt. The agent was able to orient itself and follow user instructions inside these automatically generated environments.

A key capability in the new system is self-improvement.
After initial training on human demonstrations, SIMA 2 can shift to self-directed learning, using tasks and reward estimates generated by Gemini. "This process allows the agent to improve on previously failed tasks entirely independently of human-generated demonstrations," the company said. Data collected through this self-play is then used to train subsequent versions of the agent.

Google DeepMind noted remaining limitations, including difficulty with very long, multi-step tasks, short interaction memory, and precision challenges when controlling games through virtual keyboard and mouse inputs. Visual understanding of complex 3D scenes also remains an area for improvement. "We remain deeply committed to developing SIMA 2 responsibly," Google DeepMind noted, referencing its collaboration with internal responsible development experts. Researchers said the work may eventually inform robotics, where skills such as navigation, tool use, and collaborative task execution are essential.

Meanwhile, World Labs, the startup founded by AI pioneer Fei-Fei Li, has made its generative world model, Marble, publicly available after a two-month beta with early users. "Marble can create 3D worlds from text, images, video, or coarse 3D layouts," World Labs said. "Users can interactively edit or expand worlds."

[6]

Google DeepMind's SIMA 2 agent learns to think and act inside virtual worlds - SiliconANGLE

Google DeepMind's SIMA 2 agent learns to think and act inside virtual worlds Google LLC's artificial intelligence research lab DeepMind has introduced a new, video game-playing agent called SIMA 2 that's able to navigate through 3D virtual worlds it has never encountered before and solve all kinds of problems. It's a key step towards the creation of general-purpose agents that will ultimately power real-world robots, the research outfit said. Announced today, SIMA 2 builds on the release of DeepMind's original video game-playing agent SIMA, which stands for "scalable instructable multiworld agent". SIMA debuted around 18 months ago and displayed an impressive level of autonomy, but it was far from complete, failing to perform many kinds of tasks. However, DeepMind's researchers said SIMA 2 is built on top of Gemini, which is Google's most powerful large language model, and that foundation gives it a massive performance boost. In a blog post, the SIMA Team said SIMA 2 can complete a much wider variety of more complex tasks in virtual worlds, and in many cases it can figure out how to solve challenges without ever having come across them before. It can also chat with users, and it improves its knowledge over time when it tackles more difficult tasks multiple times, learning by trial and error. "This is a significant step in the direction of Artificial General Intelligence (AGI), with important implications for the future of robotics and AI-embodiment in general," the SIMA Team said. The original SIMA learned to perform tasks inside of virtual worlds by watching the screen and using a virtual keyboard and mouse to control video game characters. But SIMA 2 goes further, because Gemini gives it the ability to think for itself, DeepMind said. 
According to the researchers, Gemini enables SIMA 2 to interpret high-level goals, talk viewers through the steps it intends to take, and collaborate with other agents or humans in games with reasoning skills far beyond the original SIMA. They claim it shows stronger generalization across virtual environments and the ability to complete longer and more complicated tasks, including logic prompts, sketches drawn on the screen and emojis. "SIMA 2's performance is significantly closer to that of a human player on a wide range of tasks," the SIMA Team wrote, highlighting that it achieved a task completion rate of 65%, way ahead of SIMA 1's 31% and just shy of the average human rate of 71%.

The model was also able to interpret instructions and act inside virtual worlds that had been freshly generated by another DeepMind model known as Genie 3, which is designed to create interactive environments from images and natural language prompts. When exposed to a new environment, SIMA 2 would immediately orient itself, try to understand its surroundings and its goals, and then take meaningful actions. It does this by applying skills learned in earlier worlds to the new surroundings it finds itself in, the researchers explained. "It can transfer learned concepts like 'mining' from one game, and apply it to 'harvesting' in another game," they said. "[It's] like connecting the dots between similar tasks."

SIMA 2 can also learn from human demonstrations before switching to self-directed play, where it employs trial and error and feedback from Gemini to create "experience data". This is then fed back into itself in a kind of training loop, so the model can attempt new tasks, learn what it did wrong and right, and then apply what it has learned when it tries a second time. In other words, it is designed to avoid repeating the same mistakes.
DeepMind Senior Staff Research Engineer Frederic Besse told media during a press briefing that the end game for SIMA 2 is to develop a new generation of AI agents that can be deployed inside robots, so they can operate autonomously in real-world environments. The skills it learns in virtual environments, such as navigation, using tools and collaborating with humans, can easily be applied to a setting such as a factory or a warehouse. "If we think of what a system needs to do to perform tasks in the real world, like a robot, I think there are two components of it," Besse said. "First, there is a high-level understanding of the real world and what needs to be done, as well as some reasoning. Then there are lower-level actions, such as controlling things like physical joints and wheels." DeepMind's researchers said SIMA 2 is a massive step forward for AI agents, but they admitted there are still weaknesses in the system that remain to be addressed. For instance, the model still struggles with very long, multistep tasks and working with a limited memory window. It also struggles with some visual interpretation scenarios, they said.

[7]

Google's SIMA 2 AI Agent Can Play No Man's Sky and Goat Simulator 3

* SIMA stands for Scalable Instructable Multiworld Agent
* Google says SIMA 2 can reason about its goals
* The AI agent can also improve itself over time

Google DeepMind introduced Scalable Instructable Multiworld Agent (SIMA) 2, an artificial intelligence (AI) agent, on Thursday. It is the successor to SIMA, which was unveiled in March 2024, and comes with several improvements over it. SIMA 2 is powered by Gemini models and can now think about its actions, reason over them, and even interact with the user via a text interface. The core functionality remains the same: it is designed to play 3D open-world video games, but it now does so more effectively. The company says SIMA 2 also improves over time, learning from its experiences.

SIMA 2 Can Now Reason, Interact, and Play Games Better

In a blog post, Google DeepMind introduced and detailed the SIMA 2 AI agent. Powered by Gemini, it can not only execute tasks given by humans but also understand what is being asked, reason about the environment, and plan its next steps accordingly. The system takes in visual input (the game screen or virtual world imagery) and a human-issued goal (for example: "build a shelter" or "find the red house"); the agent then interprets that goal, constructs intermediate actions, and performs them via keyboard/mouse-style outputs.

One of the biggest improvements in SIMA 2 is its ability to familiarise itself with new games and environments it has not been trained on. The system was evaluated in previously unseen games, such as MineDojo (a research version of Minecraft) and ASKA, a Viking survival game, and achieved better success rates compared with its predecessor. It also accepts multimodal prompts (sketches, emojis, different languages) and can transfer concepts. For instance, it may learn "mining" in one game and apply its learnings to the notion of "harvesting" in another, without having to start from zero.

As for the training setup, SIMA 2's dataset combines human-demonstration data with annotations auto-generated by Gemini. Additionally, whenever it learns a new motion or skill in novel environments, the data is collected and fed back to train subsequent generations of the agent. DeepMind says this reduces reliance on human-labelled data and allows SIMA 2 to continue improving from its own play.

SIMA 2 still has limitations: the model's memory of past interactions remains constrained, very long-horizon reasoning (many steps ahead) remains challenging, and precise low-level actions (such as robot-style joint control) are not addressed within this game-world framework.

Despite its prowess in video games, SIMA 2 is not being developed to become a gaming assistant. DeepMind believes that by training and testing the agent in unique 3D worlds, the learnings can then be applied to embodied AI, which powers robots that work in the real world. Ultimately, the goal is to create a general-purpose robot capable of handling multiple tasks and controllable via natural language instructions.
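To make the input/output contract in this description concrete (screen pixels plus a text goal in, keyboard and mouse events out), here is a minimal Python sketch. It is purely illustrative: every name in it (`Observation`, `KeyPress`, `MouseMove`, `interpret`, `to_actions`, `HYPOTHETICAL_PLANS`) is invented for this example, the fixed goal-to-subgoal lookup merely stands in for Gemini's reasoning, and none of it reflects DeepMind's actual code or API.

```python
"""Hypothetical observe -> interpret -> act cycle; not DeepMind's code or API."""
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass
class KeyPress:
    key: str        # keyboard key to hold, e.g. "w" to walk forward
    seconds: float  # how long to hold it


@dataclass
class MouseMove:
    dx: int  # horizontal camera turn, in pixels
    dy: int  # vertical camera turn, in pixels


Action = Union[KeyPress, MouseMove]


@dataclass
class Observation:
    frame: bytes  # raw screen pixels only; no access to game-internal state
    goal: str     # the human-issued instruction


# Stand-in for the Gemini reasoning step: a fixed lookup from goal to subgoals.
HYPOTHETICAL_PLANS: Dict[str, List[str]] = {
    "find the red house": ["look around", "walk to red house"],
    "build a shelter": ["gather wood", "place walls", "place roof"],
}


def interpret(goal: str) -> List[str]:
    """Break a high-level goal into intermediate subgoals (default: explore)."""
    return HYPOTHETICAL_PLANS.get(goal, ["explore"])


def to_actions(subgoal: str) -> List[Action]:
    """Map one subgoal to keyboard/mouse-style outputs."""
    if subgoal.startswith("look"):
        return [MouseMove(dx=90, dy=0)]
    if subgoal.startswith(("walk", "explore")):
        return [KeyPress(key="w", seconds=2.0)]
    return [KeyPress(key="e", seconds=0.2)]  # generic "interact" key


def step(obs: Observation) -> List[Action]:
    """One decision step. This toy ignores obs.frame; a real agent conditions on the pixels."""
    actions: List[Action] = []
    for subgoal in interpret(obs.goal):
        actions.extend(to_actions(subgoal))
    return actions


if __name__ == "__main__":
    print(step(Observation(frame=b"", goal="find the red house")))
```

The sketch only pins down the shape of the inputs and outputs; in the real system, both the goal interpretation and the mapping to low-level controls are learned rather than hard-coded.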

[8]

Google DeepMind unveils SIMA 2 with reasoning and goal-oriented AI capabilities

Google DeepMind introduced SIMA 2, the latest iteration of its generalist AI research, building on last year's SIMA (Scalable Instructable Multiworld Agent). The original SIMA could follow instructions across multiple virtual environments, performing over 600 language-based tasks such as "turn left," "climb the ladder," and "open the map." SIMA 2 evolves this approach, combining instruction-following with reasoning, goal-oriented actions, and self-improvement capabilities.

SIMA 2 incorporates the Gemini model, enabling it to interpret high-level user goals, reason about tasks, and explain its actions. The agent can describe the steps it takes to achieve objectives, answer user questions, and assess its own behavior and environment. Training included human demonstration videos with language labels, augmented with Gemini-generated labels.

The agent shows improved generalization and can execute complex instructions in games it was not explicitly trained on, including the Viking survival game ASKA and MineDojo, a Minecraft research environment. SIMA 2 can understand long, multi-step tasks, multimodal prompts such as sketches, multiple languages, and even emojis. It can transfer learned concepts, for instance, applying "mining" knowledge from one game to "harvesting" in another.

Interaction in Newly Generated Worlds

When combined with Genie 3, which generates real-time 3D environments from images or text, SIMA 2 can navigate and perform goal-directed actions in previously unseen worlds. This demonstrates the agent's adaptability to novel environments.

SIMA 2 can improve independently through self-directed play. Initial training relies on human demonstrations, after which the agent can generate experience data to train future versions. This iterative process allows the agent to attempt increasingly complex tasks and learn in newly created environments without additional human data.
The skills developed by SIMA 2, including navigation, tool use, and collaborative task execution, provide a foundation for research into general embodied intelligence. While the agent can operate across diverse gaming environments, limitations remain in tasks requiring long-horizon planning, precise low-level actions, and robust visual understanding. SIMA 2 also has a constrained memory window for interaction. SIMA 2 is available as a limited research preview to a small cohort of academics and game developers. DeepMind emphasizes oversight on self-improvement capabilities and seeks interdisciplinary feedback to ensure responsible development and mitigate potential risks.

[9]

From Minecraft to Multi-World Intelligence: Google's Ambition Behind SIMA 2

Gemini-powered SIMA 2 showcases transfer learning and real-world robotics potential

Google DeepMind is not building a better gamer; it is building a better brain. The Scalable Instructable Multiworld Agent (SIMA) project, now in its second generation, is using the complex, open-ended universe of 3D video games, the very same worlds where players might be building a castle in Valheim or exploring a galaxy in No Man's Sky, as the proving ground for nothing less than Artificial General Intelligence (AGI). With SIMA 2, powered by the company's Gemini model, Google has moved its AI from being a passive instruction-follower to an active, reasoning co-collaborator, marking a pivotal step in the quest for truly generalist AI.

For years, AI breakthroughs in gaming were defined by specialization. DeepMind's AlphaGo mastered the ancient game of Go, and AlphaStar conquered the real-time strategy of StarCraft II. But their brilliance was confined to a single, structured environment. The reality of the world, whether physical or virtual, is chaos. That's where SIMA comes in.

SIMA 2 is designed to act like a human player: it observes the game screen, processes natural language commands, and uses a virtual keyboard and mouse to operate, all without access to the game's hidden internal code.

The major breakthrough of SIMA 2 lies in its transfer learning capability. Having been trained across a diverse portfolio of commercial games, including survival, building, and exploration titles like Goat Simulator 3, Valheim, and Satisfactory, the agent learns abstract concepts. If it learns what "mining" means in one game, it can apply that understanding to "harvesting" in a completely different one.

"SIMA 2 is a step change and improvement in capabilities over SIMA 1. It's a more general agent that can complete complex tasks in previously unseen environments."
- Joe Marino, Senior Research Scientist at DeepMind

The engine behind SIMA 2's dramatic improvement is the Gemini 2.5 Flash-Lite model, which elevates the agent from a simple command executor to a conversational partner.

Perhaps the most groundbreaking advancement in SIMA 2 is its autonomous self-improvement cycle. The original SIMA relied heavily on recorded human gameplay data. SIMA 2 uses this as a base, but then shifts into a self-directed learning mode: a separate Gemini model generates new tasks, a reward model scores the agent's attempts, and the agent learns from that self-generated experience.

This ability to rapidly adapt and learn in a new game, or even in entirely AI-generated 3D worlds created by Google's Genie model, demonstrates a level of generalization well beyond SIMA 1's. The success rate for complex tasks has roughly doubled compared to SIMA 1's initial performance.

For DeepMind, SIMA is not a gaming tool; it is a virtual robot. The skills it is mastering within the simulated 3D environment, navigation, tool use, understanding complex instructions, and collaborative task execution, are the exact building blocks required for developing advanced, general-purpose physical robots. Video games offer a safe, scalable, and boundless sandbox to stress-test these agents. Every time SIMA 2 successfully chops down a tree in Valheim or repairs a spaceship in No Man's Sky, it is practicing a core capability needed by a future AI assistant in the real world.

While SIMA 2 remains a research-preview agent, currently unavailable to the public and limited to a few academic and developer partners, Google DeepMind's ambition is clear.
By harnessing Gemini's reasoning power and training its agent across a multi-world intelligence landscape, they are not just aiming to win a game; they are laying the groundwork for the general-purpose, helpful AI agents that will eventually operate in all the complex, dynamic, and unpredictable environments of the real world.

Google DeepMind has released SIMA 2, an advanced AI agent that integrates Gemini's reasoning capabilities to play video games and interact with virtual environments. The agent demonstrates significant improvements over its predecessor, achieving a 65% task completion rate compared to 31% for SIMA 1.

Google DeepMind Introduces SIMA 2 with Gemini Integration

Google DeepMind unveiled SIMA 2 on Thursday, marking a significant advancement in AI agent capabilities for virtual environments. The new iteration of the Scalable Instructable Multiworld Agent integrates Google's Gemini large language model, enabling the system to move beyond simple instruction-following to understanding, reasoning, and collaborative interaction within 3D virtual worlds [1].

"SIMA 2 is a step change and improvement in capabilities over SIMA 1," said Joe Marino, senior research scientist at DeepMind, during a press briefing. "It's a more general agent. It can complete complex tasks in previously unseen environments. And it's a self-improving agent" [1].

Dramatic Performance Improvements Over Predecessor

The integration of the Gemini 2.5 Flash-Lite model has resulted in substantial performance gains. SIMA 1, released in March 2024, achieved only a 31% success rate on complex tasks, compared with 71% for humans; SIMA 2 reaches a 65% task completion rate [1][4].

The agent demonstrates its enhanced capabilities across multiple gaming environments, including No Man's Sky, Valheim, Goat Simulator 3, and the Viking survival title ASKA. In demonstrations, SIMA 2 showed its reasoning, for example working out that "ripe tomatoes are red" when asked to walk to a house the color of a ripe tomato, then locating and approaching the red house [1].
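The reported rates can be put in proportion with simple arithmetic. This is a back-of-the-envelope reading, not a metric DeepMind published, and it assumes the 31%, 65% and 71% figures were measured on comparable task sets:

```latex
\frac{65\%}{31\%} \approx 2.1
\qquad
\frac{65\% - 31\%}{71\% - 31\%} = \frac{34}{40} = 0.85
\qquad
\frac{65\%}{71\%} \approx 0.92
```

On those assumptions, SIMA 2 roughly doubles SIMA 1's completion rate, closes about 85% of the gap between SIMA 1 and human players, and reaches roughly 92% of the human rate.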

Advanced Reasoning and Communication Capabilities

SIMA 2's integration with Gemini broadens how users can instruct it. The agent can interpret instructions delivered through text, voice, images, and even emojis [4]. As Marino demonstrated, users can instruct the agent with "🪓🌲" and it will go chop down a tree [1].

Jane Wang, a research scientist at DeepMind with a neuroscience background, emphasized the complexity of the agent's capabilities: "We're asking it to actually understand what's happening, understand what the user is asking it to do, and then be able to respond in a common-sense way that's actually quite difficult" [1].

Self-Improvement Through AI-Generated Feedback

A key feature of SIMA 2 is its ability to improve autonomously without extensive human data. Unlike SIMA 1, which was trained entirely on human gameplay, SIMA 2 uses human gameplay only as a baseline for a strong initial model. When placed in a new environment, the agent relies on another Gemini model to create tasks and a separate reward model to evaluate its attempts [1].

This self-directed learning approach allows SIMA 2 to learn from its mistakes through trial and error, much as a human would, but guided by AI-based feedback rather than human supervision. The self-generated experiences then serve as training data for subsequent versions of the agent [5].
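The loop described here (a Gemini model proposes tasks, a separate reward model scores the agent's attempts, and the scored experience trains the next version of the agent) can be illustrated with a deliberately tiny Python sketch. It is an assumption-laden toy, not DeepMind's method: `ToyAgent`, `propose_task`, `reward_model`, the per-task skill numbers and the reward-weighted update rule are all hypothetical stand-ins, whereas the real system trains a neural agent on gameplay trajectories.

```python
"""Toy self-improvement loop in the spirit of SIMA 2's self-directed play.

Everything here is hypothetical: a per-task 'skill' number stands in for a
neural policy, and simple functions stand in for the Gemini task setter and
the reward model.
"""
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

TASKS = ["chop tree", "mine ore", "find red house", "open map", "build shelter"]
DEFAULT_SKILL = 0.1  # competence on tasks not covered by the "human demonstrations"


@dataclass
class ToyAgent:
    skill: Dict[str, float] = field(default_factory=dict)  # per-task competence in [0, 1)

    def attempt(self, task: str, rng: random.Random) -> float:
        """Return a noisy quality score in [0, 1] for one attempt at a task."""
        base = self.skill.get(task, DEFAULT_SKILL)
        return min(1.0, max(0.0, base + rng.uniform(-0.1, 0.1)))

    def train(self, experience: List[Tuple[str, float]]) -> None:
        """Reward-weighted update: better-scored attempts move skill further toward 1."""
        for task, reward in experience:
            s = self.skill.get(task, DEFAULT_SKILL)
            self.skill[task] = s + 0.3 * reward * (1.0 - s)


def propose_task(rng: random.Random) -> str:
    """Stand-in for the Gemini model that invents new tasks."""
    return rng.choice(TASKS)


def reward_model(quality: float) -> float:
    """Stand-in for the separate reward model that scores each attempt."""
    return quality


def success_rate(agent: ToyAgent, threshold: float = 0.5) -> float:
    """Fraction of tasks whose skill clears the threshold (a crude evaluation)."""
    return sum(agent.skill.get(t, DEFAULT_SKILL) >= threshold for t in TASKS) / len(TASKS)


def self_improve(agent: ToyAgent, rounds: int, attempts_per_round: int, seed: int = 0) -> List[float]:
    """Propose tasks, attempt them, score them, then train on the scored experience."""
    rng = random.Random(seed)
    history = [success_rate(agent)]
    for _ in range(rounds):
        experience = []
        for _ in range(attempts_per_round):
            task = propose_task(rng)
            experience.append((task, reward_model(agent.attempt(task, rng))))
        agent.train(experience)  # the trained agent plays the role of the "next version"
        history.append(success_rate(agent))
    return history


# Baseline competence standing in for pretraining on human gameplay.
agent = ToyAgent(skill={"chop tree": 0.6, "open map": 0.5})
history = self_improve(agent, rounds=10, attempts_per_round=20)
print(history)  # success rate before training and after each round
```

Because rewards are never negative in this toy, skill can only rise and the measured success rate never falls; in a real system, noisy or mistaken reward estimates could steer learning the wrong way, a risk this sketch deliberately leaves out.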

Pathway to AGI and Real-World Applications

DeepMind positions SIMA 2 as a crucial step toward Artificial General Intelligence (AGI), which the company defines as a system capable of performing a wide range of intellectual tasks while learning new skills and generalizing knowledge across different domains [1].

Frederic Besse, senior staff research engineer at DeepMind, explained the connection to robotics applications: "If we think of what a system needs to do to perform tasks in the real world, like a robot, I think there are two components of it. First, there is a high-level understanding of the real world and what needs to be done, as well as some reasoning" [1]. The second component, he said, is lower-level action, such as controlling physical joints and wheels; SIMA 2 addresses the high-level side more than the low-level one [1][6].

The research team views gaming environments as an ideal training ground for developing skills that could eventually transfer to real-world robotic applications, including navigation, tool use, and collaborative task execution [5].

Summarized by Navi
