Google Enhances Search with AI-Powered Voice Queries for Images and Videos

17 Sources

[1]

Google AI Search Will Answer Voice Questions About Videos and Photos

Google is injecting its search engine with more artificial intelligence that will enable people to voice questions about images and occasionally organize an entire page of results, despite the technology's past misadventures with misleading information. The latest changes announced Thursday herald the next step in an AI-driven makeover that Google launched in mid-May when it began responding to some queries with summaries written by the technology at the top of its influential results page. Those summaries, dubbed "AI Overviews," raised fears among publishers that fewer people would click on search links to their websites and undercut the traffic needed to sell digital ads that help finance their operations. Google is addressing some of those ongoing worries by inserting even more links to other websites within the AI Overviews, which already have been reducing the visits to general news publishers such as The New York Times and technology review specialists such as TomsGuide.com, according to an analysis released last month by search traffic specialist BrightEdge. The same study found the citations within AI Overviews are driving more traffic to highly specialized sites such as Bloomberg.com and the National Institutes of Health.

[2]

Google's search engine's latest AI injection will answer voiced questions about video and photos

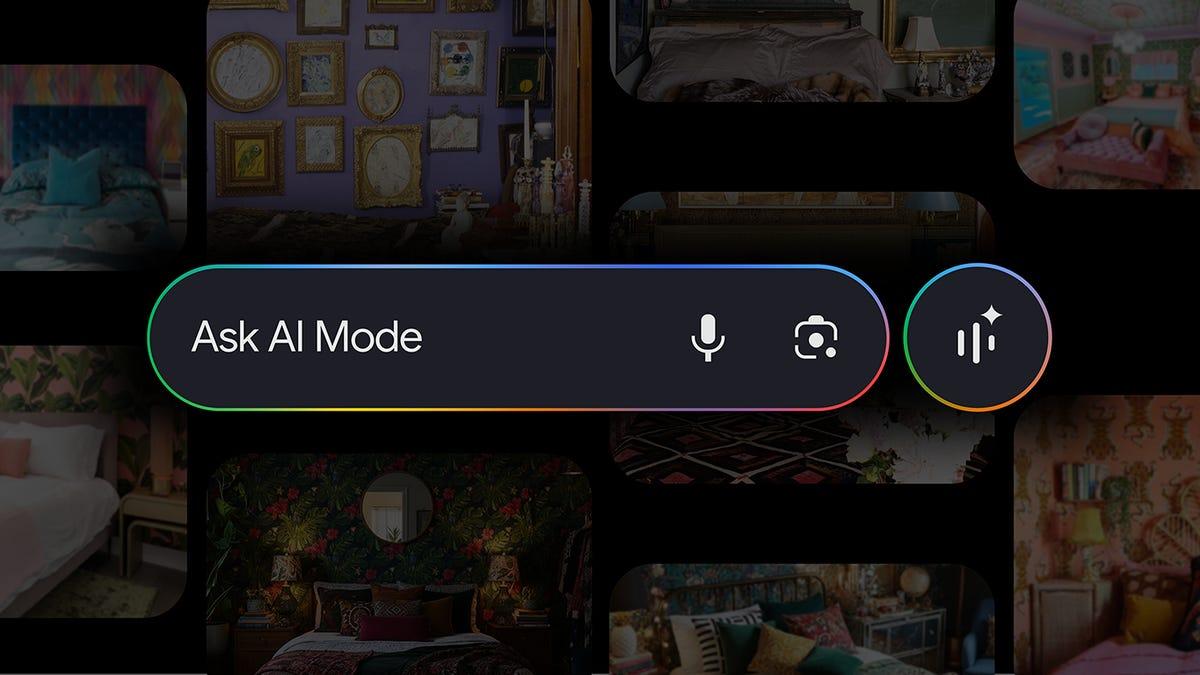

SAN FRANCISCO -- Google is injecting its search engine with more artificial intelligence that will enable people to voice questions about images and occasionally organize an entire page of results, despite the technology's past misadventures with misleading information. The latest changes announced Thursday herald the next step in an AI-driven makeover that Google launched in mid-May when it began responding to some queries with summaries written by the technology at the top of its influential results page. Those summaries, dubbed "AI Overviews," raised fears among publishers that fewer people would click on search links to their websites and undercut the traffic needed to sell digital ads that help finance their operations. Google is addressing some of those ongoing worries by inserting even more links to other websites within the AI Overviews, which already have been reducing the visits to general news publishers such as The New York Times and technology review specialists such as TomsGuide.com, according to an analysis released last month by search traffic specialist BrightEdge. The same study found the citations within AI Overviews are driving more traffic to highly specialized sites such as Bloomberg.com and the National Institutes of Health. Google's decision to pump even more AI into the search engine that remains the crown jewel of its $2 trillion empire leaves little doubt that the Mountain View, California, company is tethering its future to a technology propelling the biggest industry shift since Apple unveiled the first iPhone 17 years ago. The next phase of Google's AI evolution builds upon its 7-year-old Lens feature that processes queries about objects in a picture. The Lens option now generates more than 20 billion queries per month, and is particularly popular among users from 18 to 24 years old.
That's a younger demographic that Google is trying to cultivate as it faces competition from AI alternatives powered by ChatGPT and Perplexity that are positioning themselves as answer engines. Now, people will be able to use Lens to ask a question in English about something they are viewing through a camera lens -- as if they were talking about it with a friend -- and get search results. Users signed up for tests of the new voice-activated search features in Google Labs will also be able to take video of moving objects, such as fish swimming around an aquarium, while posing a conversational question and be presented an answer through an AI Overview. "The whole goal is can we make search simpler to use for people, more effortless to use and make it more available so people can search any way, anywhere they are," said Rajan Patel, Google's vice president of search engineering and a co-founder of the Lens feature. Although advances in AI offer the potential of making search more convenient, the technology also sometimes spits out bad information -- a risk that threatens to damage the credibility of Google's search engine if the inaccuracies become too frequent. Google has already had some embarrassing episodes with its AI Overviews, including advising people to put glue on pizza and to eat rocks. The company blamed those missteps on data voids and online troublemakers deliberately trying to steer its AI technology in a wrong direction. Google is now so confident that it has fixed some of its AI's blind spots that it will rely on the technology to decide what types of information to feature on the results page. Despite its previous bad culinary advice about pizza and rocks, AI will initially be used for the presentation of the results for queries in English about recipes and meal ideas entered on mobile devices. The AI-organized results are supposed to be broken down into different groups of clusters consisting of photos, videos and articles about the subject.

[3]

Google's new voice-activated AI search will allow people to use Lens to ask questions about video and photos


[4]

Google's search engine's latest AI injection will answer voiced questions about images

Google is pumping more artificial intelligence into its search engine.

SAN FRANCISCO -- Google is injecting its search engine with more artificial intelligence that will enable people to voice questions about images and occasionally organize an entire page of results, despite the technology's past offerings of misleading information. The latest changes announced Thursday herald the next step in an AI-driven makeover that Google launched in mid-May when it began responding to some queries with summaries written by the technology at the top of its influential results page. Those summaries, dubbed "AI Overviews," raised fears among publishers that fewer people would click on search links to their websites and undercut the traffic needed to sell digital ads that help finance their operations. Google is addressing some of those ongoing worries by inserting even more links to other websites within the AI Overviews, which already have been reducing the visits to general news publishers such as The New York Times and technology review specialists such as TomsGuide.com, according to an analysis released last month by search traffic specialist BrightEdge. But Google's decision to pump even more AI into the search engine that remains the crown jewel of its $2 trillion empire leaves little doubt that the Mountain View, California, company is tethering its future to a technology propelling the biggest industry shift since Apple unveiled the first iPhone 17 years ago. The next phase of Google's AI evolution builds upon its 7-year-old Lens feature that processes queries about objects in a picture. The Lens option now generates more than 20 billion queries per month, and is particularly popular among users from 18 to 24 years old. That's a younger demographic that Google is trying to cultivate as it faces competition from AI alternatives powered by ChatGPT and Perplexity that are positioning themselves as answer engines.
Now, people will be able to use Lens to ask a question in English about something they are viewing through a camera lens -- as if they were talking about it with a friend -- and get search results. Users signed up for tests of the new voice-activated search features in Google Labs will also be able to take video of moving objects, such as fish swimming around an aquarium, while posing a conversational question and be presented an answer through an AI Overview. "The whole goal is can we make search simpler to use for people, more effortless to use and make it more available so people can search any way, anywhere they are," said Rajan Patel, Google's vice president of search engineering and a co-founder of the Lens feature. Although advances in AI offer the potential of making search more convenient, the technology also sometimes spits out bad information -- a risk that threatens to damage the credibility of Google's search engine if the inaccuracies become too frequent. Google has already had some embarrassing episodes with its AI Overviews, including advising people to put glue on pizza and to eat rocks. The company blamed those missteps on data voids and online troublemakers deliberately trying to steer its AI technology in a wrong direction. Google is now so confident that it has fixed some of its AI's blind spots that it will rely on the technology to decide what types of information to feature on the results page. Despite its previous bad culinary advice about pizza and rocks, AI will initially be used for the presentation of the results for queries in English about recipes and meal ideas entered on mobile devices. The AI-organized results are supposed to be broken down into different groups of clusters consisting of photos, videos and articles about the subject.

[5]

Google's search engine's latest AI injection will answer voiced questions about images

Google is injecting its search engine with more artificial intelligence that will enable people to voice questions about images and occasionally organize an entire page of results, despite the technology's past offerings of misleading information. The latest changes announced Thursday herald the next step in an AI-driven makeover that Google launched in mid-May when it began responding to some queries with summaries written by the technology at the top of its influential results page. Those summaries, dubbed "AI Overviews," raised fears among publishers that fewer people would click on search links to their websites and undercut the traffic needed to sell digital ads that help finance their operations. Google is addressing some of those ongoing worries by inserting even more links to other websites within the AI Overviews, which already have been reducing the visits to general news publishers such as The New York Times and technology review specialists such as TomsGuide.com, according to an analysis released last month by search traffic specialist BrightEdge. But Google's decision to pump even more AI into the search engine that remains the crown jewel of its $2 trillion empire leaves little doubt that the Mountain View, California, company is tethering its future to a technology propelling the biggest industry shift since Apple unveiled the first iPhone 17 years ago. The next phase of Google's AI evolution builds upon its 7-year-old Lens feature that processes queries about objects in a picture. The Lens option now generates more than 20 billion queries per month, and is particularly popular among users from 18 to 24 years old. That's a younger demographic that Google is trying to cultivate as it faces competition from AI alternatives powered by ChatGPT and Perplexity that are positioning themselves as answer engines.
Now, people will be able to use Lens to ask a question in English about something they are viewing through a camera lens -- as if they were talking about it with a friend -- and get search results. Users signed up for tests of the new voice-activated search features in Google Labs will also be able to take video of moving objects, such as fish swimming around an aquarium, while posing a conversational question and be presented an answer through an AI Overview. "The whole goal is can we make search simpler to use for people, more effortless to use and make it more available so people can search any way, anywhere they are," said Rajan Patel, Google's vice president of search engineering and a co-founder of the Lens feature. Although advances in AI offer the potential of making search more convenient, the technology also sometimes spits out bad information -- a risk that threatens to damage the credibility of Google's search engine if the inaccuracies become too frequent. Google has already had some embarrassing episodes with its AI Overviews, including advising people to put glue on pizza and to eat rocks. The company blamed those missteps on data voids and online troublemakers deliberately trying to steer its AI technology in a wrong direction. Google is now so confident that it has fixed some of its AI's blind spots that it will rely on the technology to decide what types of information to feature on the results page. Despite its previous bad culinary advice about pizza and rocks, AI will initially be used for the presentation of the results for queries in English about recipes and meal ideas entered on mobile devices. The AI-organized results are supposed to be broken down into different groups of clusters consisting of photos, videos and articles about the subject. © 2024 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.

[6]

Google's search engine's latest AI injection will answer voiced questions about images

SAN FRANCISCO (AP) -- Google is injecting its search engine with more artificial intelligence that will enable people to voice questions about images and occasionally organize an entire page of results, despite the technology's past offerings of misleading information. The latest changes announced Thursday herald the next step in an AI-driven makeover that Google launched in mid-May when it began responding to some queries with summaries written by the technology at the top of its influential results page. Those summaries, dubbed "AI Overviews," raised fears among publishers that fewer people would click on search links to their websites and undercut the traffic needed to sell digital ads that help finance their operations. Google is addressing some of those ongoing worries by inserting even more links to other websites within the AI Overviews, which already have been reducing the visits to general news publishers such as The New York Times and technology review specialists such as TomsGuide.com, according to an analysis released last month by search traffic specialist BrightEdge. But Google's decision to pump even more AI into the search engine that remains the crown jewel of its $2 trillion empire leaves little doubt that the Mountain View, California, company is tethering its future to a technology propelling the biggest industry shift since Apple unveiled the first iPhone 17 years ago. The next phase of Google's AI evolution builds upon its 7-year-old Lens feature that processes queries about objects in a picture. The Lens option now generates more than 20 billion queries per month, and is particularly popular among users from 18 to 24 years old. That's a younger demographic that Google is trying to cultivate as it faces competition from AI alternatives powered by ChatGPT and Perplexity that are positioning themselves as answer engines.
Now, people will be able to use Lens to ask a question in English about something they are viewing through a camera lens -- as if they were talking about it with a friend -- and get search results. Users signed up for tests of the new voice-activated search features in Google Labs will also be able to take video of moving objects, such as fish swimming around an aquarium, while posing a conversational question and be presented an answer through an AI Overview. "The whole goal is can we make search simpler to use for people, more effortless to use and make it more available so people can search any way, anywhere they are," said Rajan Patel, Google's vice president of search engineering and a co-founder of the Lens feature. Although advances in AI offer the potential of making search more convenient, the technology also sometimes spits out bad information -- a risk that threatens to damage the credibility of Google's search engine if the inaccuracies become too frequent. Google has already had some embarrassing episodes with its AI Overviews, including advising people to put glue on pizza and to eat rocks. The company blamed those missteps on data voids and online troublemakers deliberately trying to steer its AI technology in a wrong direction. Google is now so confident that it has fixed some of its AI's blind spots that it will rely on the technology to decide what types of information to feature on the results page. Despite its previous bad culinary advice about pizza and rocks, AI will initially be used for the presentation of the results for queries in English about recipes and meal ideas entered on mobile devices. The AI-organized results are supposed to be broken down into different groups of clusters consisting of photos, videos and articles about the subject.

[7]

Google's search engine's latest AI injection will answer voiced questions about images


[8]

Google's new AI feature lets you search the web by uploading a video

Google has been the dominant search engine for more than a decade. However, to stay competitive with the new generation of AI search engines, Google's had to step up its game by implementing AI, and these new features are the latest salvo. On Thursday, Google launched new ways to search that leverage AI, starting with the ability to search using a video in Google Lens. Now, if you're curious about something happening in real-time in front of you, you can ask Google about it by shooting a quick video of what you are seeing. Here's what you do: Open Lens in the Google app on your phone, hold down the shutter button, and audibly state the question you want to learn more about. Google shares the example of a user recording a group of fish swimming in a circle at an aquarium and asking, "Why are they swimming together?" The feature is available for Search Labs users who are enrolled in the "AI Overviews and more" experiment and have the Google app, which is free to download for both Android and iOS users. Furthermore, users also can use voice prompts when taking a photo with Lens in the Google app for Android and iOS. The uploading process remains the same -- shoot a picture by clicking the shutter button -- but now you can add a voice question. This capability is available globally now. The Lens shopping experience has also been upgraded. Users now can take a photo of an item and get a more helpful result page that includes information such as reviews, prices, and retailers where the item can be purchased. Beyond visual element search, Google added a feature to help you find what you hear. With the latest Circle to Search update, users can search for songs without leaving the page on which they heard the sound. For example, if you hear a song on Instagram you want to know more about, you simply activate Circle to Search to find out.
With the rollout of AI-organized result pages (mobile only) in the US, your results page will also look slightly different. At launch, the new results design will apply only to recipe and meal inspiration searches, invoking a full-page experience organized by the most relevant results for the user. Lastly, Google said it has been testing new ways to display AI Overviews that enable users to get the sources they're seeking more easily. The new design displays prominent links to supporting webpages directly within the AI Overview text and, due to positive feedback, will be rolled out to all countries where AI Overviews are available.

[9]

Google stuffs more AI into search

Lens visual searches add video capabilities and better shopping results. Google is adding more AI to search. On Thursday, the company unveiled a long list of changes, including AI-organized web results, Google Lens updates (including video and voice) and placing links and ads inside AI Overviews. One can suspect that AI-organized search results are where Google will eventually move across the board, but the rollout starts with a narrow scope. Beginning with recipes and meal inspiration, Google's AI will create a "full-page experience" that includes relevant results based on your search. The company says the AI-collated pages will consist of "perspectives from across the web," like articles, videos and forums. Google's AI Overviews, the snippets of AI-generated info you see above web results, are getting some enhancements, too. The company is incorporating a new link-laden design with more "prominent links to supporting webpages" within the section. Google says its tests have shown the design increased traffic to the supporting websites it links to. Ads are also coming to AI Overviews -- an inevitable outcome if ever there was one. The company says they're rolling out in the US, so don't be shocked if you start seeing them soon. Circle to Search is getting Shazam-like capabilities. The feature will now instantly search for songs you hear without switching apps. Google also noted that Circle to Search is now available on over 150 million Android devices, as it's expanded in reach and capabilities since its January launch. Google Lens, the company's seven-year-old visual search feature for mobile, is getting some upgrades, too. It can now search via video and voice, letting you ask "complex questions about moving images." The company provides the example of seeing fish at an aquarium and using Lens to ask it aloud, "Why are they swimming together?" 
According to Google, the AI will use the video clip and your voice recording to identify the species and explain why they hang out together. Along similar lines, you can now ask Google Lens questions with your voice while taking a picture. "Just point your camera, hold the shutter button and ask whatever's on your mind -- the same way you'd point at something and ask your friend about it," the company wrote. Google Lens is also upgrading its shopping chops. The company describes the upgraded visual product search as "dramatically more helpful" than its previous version. The AI results will now include essential information about the searched product, including reviews, prices across different retailers and where to buy. The Google Lens capabilities are all rolling out now, although some require an opt-in. Video searches are available globally for Search Labs users; you'll find them in the "AI Overviews and more" experiment. Voice input for Lens is now available for English users in the Google app on Android and iOS. Finally, enhanced shopping with Lens starts rolling out this week.

[10]

Google Search and Lens get AI-Organized results, voice, and video search capabilities and more

Google on Thursday announced a major update to Search, introducing AI-powered features aimed at expanding how users find information and explore new interests. Google emphasized that the latest advancements will allow users to ask questions in various ways -- through text, voice, images, or even humming a tune. The improvements are driven by Google's Gemini model, specifically designed for Search, and have enhanced users' ability to discover more online and in the world around them. According to Google, AI Overviews have increased both usage and user satisfaction, while Google Lens handles nearly 20 billion visual searches every month, helping users search using their cameras or screens.

Video Understanding in Lens: Google has expanded Lens's capabilities to include video. Users can now record a video and ask questions about moving objects in it. For example, at an aquarium, users can record fish swimming and ask, "Why are they swimming together?" Google's systems will generate an AI Overview and provide relevant information from the web.

Voice Queries in Lens: Users can now ask questions using their voice while taking photos with Lens. Simply hold down the camera shutter, ask a question aloud, and Google will respond with relevant insights.

Improved Lens Shopping: Google has enhanced shopping features in Lens by providing more detailed results, including reviews, price comparisons, and where to buy items. This is powered by Google's Shopping Graph, which contains data on over 45 billion products. For instance, users can take a picture of a backpack, and Lens will provide detailed information about where to purchase it.

Song Identification with Circle to Search: Users can now instantly identify songs playing in videos or websites without switching apps. This feature, now part of Google's Circle to Search, is available on over 150 million Android devices.

AI-Organized Search Results: Google has started organizing search results with AI for open-ended questions, beginning with recipes and meal ideas on mobile. This update allows users to explore content from various sources, such as articles, videos, and forums, all in one organized page.

New AI Overview Design: To make it easier to access relevant information, Google has updated its AI Overviews to include more prominent links to supporting websites within the overview text. This new design, which is rolling out globally, has already increased traffic to websites during testing.

Ads in AI Overviews: Google is introducing ads into AI Overviews for relevant searches, starting in the U.S. This feature aims to help users quickly connect with businesses, products, and services relevant to their queries.

Speaking about the new updates, Liz Reid, VP, Head of Google Search, said -

[11]

Google Will Let You Search the Web With Video Recordings

Google has announced updates to its search engine, focused on visual and audio search but also on AI-organized results pages. Not everything is releasing now, but the updates will be seen as they roll out. Google Lens has been upgraded with video understanding and voice input. You'll be able to record videos and ask questions about moving objects or pictures using natural language. Google Lens will give an AI Overview and relevant resources from voice search, but right now, video is only available to Search Labs users enrolled in the "AI Overviews and more" experiment. These features are available globally in the Google app for Android and iOS. Also, the shopping page has been upgraded with Google Lens. It now includes reviews, price comparisons, and retailer information. Google is using its Shopping Graph to find products and give users more details to make purchasing decisions. In addition to visual search, Google has introduced a new way to find songs using its Circle to Search feature. Users can now search for songs they hear without switching apps. This can be directly from videos, movies, or websites. This feature is currently available on over 150 million Android devices but is continuing to roll out in stages. Google is also rolling out AI-organized search results pages in the U.S., starting with recipes and meal inspiration on mobile. These pages should give a full page experience with relevant results organized for the user, including articles, videos, forums, and more. Google is also adding prominent links to supporting web pages within the AI overview text. Google mentioned that this is because users still want to go to the page itself with the information, so it will give links more prominently. The change to links is supposed to drive traffic to websites and make it easier for users to find relevant information because "people want to go directly to the source for many of their questions."
However, it sounds like users still trust websites that must curate and verify their information more than they trust Google's AI (for good reason), so it's making it clearer where information may come from. Still, this may be the answer to Bing's most recent AI search update. Source: Google

[12]

Google expands visual, audio search, lets AI handle layout

Almost two decades ago, the head of Google's then nascent enterprise division referred to the firm's search service as "an uber-command line interface to the world." These days, Google is interested in other modes of input too. On Thursday, Google announced changes to its search service that emphasize visual input via Google Lens, either as a complement to text keywords or as an alternative, and audio input. And it's using machine learning to reorganize how some search results get presented. The transition to multi-modal search input has been ongoing for some time. Google Lens, introduced in 2017 as an app for the Pixel 2 and Pixel XL phones, was decoupled from the Google Camera app in late 2022. The image recognition software, which traces its roots back to Google Goggles in 2010, was integrated into the reverse image search function of Google Images in 2022. Last year, Lens found a home in Google Bard, an AI chatbot model that has since been renamed Gemini. Presently, the image recognition software can be accessed from the camera icon within the Google App's search box. Earlier this year, Google linked Lens with the generative AI used in its AI Overviews - AI-based, possibly errant search results hoisted to the top of search results pages - so that users can point their mobile phone cameras at things and have Google Search analyze the resulting image as a search query. According to the Chocolate Factory, Lens queries are one of the fastest growing query types on Search, particularly among young users (18-24) - a demographic sought after by advertisers. Google claims that people use Lens for nearly 20 billion visual searches a month and 20 percent of them are shopping-related. Encouraged by such enthusiasm, the search-ads-and-apps biz is expanding its visual query capabilities with support for video analysis. 
"We previewed our video understanding capabilities at I/O, and now you can use Lens to search by taking a video, and asking questions about the moving objects that you see," explained Liz Reid, VP and head of Google Search, in a blog post provided to The Register. This aptitude is being made available globally via the Google App, for Android and iOS users who participate in the Search Labs "AI Overviews and more" experiment - presently English only. The Google App will also accept voice input for Lens - the idea being that you can aim your camera, hold the shutter button, and ask a question related to the image. As might be expected, Lens has also been enhanced to return more shopping-related info, like item price at various retailers, reviews, and where the item can be purchased. Lens searches are not stored by default, though the user can elect to keep a Visual Search History. Videos recorded with Lens are not saved, even with Visual Search History active. Also, Lens does not use facial recognition, making it not very useful for identifying people. Google's infatuation with AI has led to the application of machine learning to search result page layout, on mobile devices in the US, initially for meal and recipe queries. Instead of segmenting results by file type - websites, videos, and so on - AI will handle how results are arranged on the page. "In our testing, people have found AI-organized search results pages more helpful," insists Reid. "And with AI-organized search results pages, we're bringing people more diverse content formats and sites, creating even more opportunities for content to be discovered." Meanwhile, Google is being more deliberate in the organization of AI Overviews, snapshot summaries of search results placed at the top of search result pages. Beyond not having much impact on website traffic referral, AI Overviews can't be turned off but "can and will make mistakes," as Google puts it. At least now, AI Overviews will have better attribution. 
"We've been testing a new design for AI Overviews that adds prominent links to supporting web pages directly within the text of an AI Overview," said Reid. "In our tests, we've seen that this improved experience has driven an increase in traffic to supporting websites compared to the previous design, and people are finding it easier to visit sites that interest them." Also, AI Overview is getting ads; after all, someone's got to pay for all that AI. Google is making this search experience available globally, or at least everywhere that AI Overviews are offered. ®

[13]

Google introduces new way to search by filming video

Google has released a new feature which will allow people to search the internet by taking a video. Video search will let people point their camera at something, ask a question about it, and get search results. Android and iPhone users globally will gain access to the feature from 1700 GMT by enabling "AI Overviews" in their Google app, but it will only support English at launch. It is the latest move from the tech giant to change how people search online by utilising artificial intelligence (AI). It comes three months after ChatGPT-maker OpenAI announced it was trialling the ability to search by asking its chatbot questions. Google introduced AI-generated results at the top of certain search queries this year, with mixed results. In May, the feature drew criticism for providing erratic, inaccurate answers, which included advising people to make cheese stick to pizza by using "non-toxic glue". At the time, a Google spokesperson said the issues were "isolated examples". The results have since become better, with fewer inaccuracies. Since then, there have been further moves to include AI in search, which included the ability to ask questions about still images using Google Lens. The firm said this feature has increased the popularity of Lens, within its mobile app, which has motivated it to expand the feature further.

[14]

Google announces major AI improvements coming to Search - you can't avoid artificial intelligence anymore

Google just announced huge AI updates for Search and Lens, catapulting everyone into an artificially intelligent future whether you like it or not. Google Search will now be organized by AI, helping you get the results you want faster. The company announced the rollout will begin in the US starting with recipes and meal inspiration on mobile devices like the best iPhone. Google also announced a new design for AI Overviews that brings links into the summary and makes it easier for users to access the websites they are looking for. Not only will you now have links in AI Overviews, but Google is incorporating ads into AI search results and Lens. This means you'll get recommendations of products related to your prompts, not just summaries and links to helpful webpages. Lens' major AI updates include a new Voice Search and Video Search, giving you even more ways to use Google's eyes to do your online searching. Google says you'll be able to upload videos directly to Lens and ask AI about moving objects. Google's example is a trip to the aquarium where you upload a video of the fish in a tank and ask, "Why are they swimming together?" Lens can then produce an AI overview with all the information you need. Voice Search will act similarly, allowing you to converse with Lens in a way that's similar to ChatGPT's Advanced Voice Mode and Gemini Live. New ways to interact with Lens are not the only AI updates coming to the platform, however. Google is adding a significant shopping update that will let you take pictures of products out in the wild and quickly get a new results page with key information on the product and which retailers you can buy it from. All of these updates to Google Lens are now available globally in the Google app for Android and iOS. Last but not least, Android fans have a new way to interact with Google Search with the arrival of Circle to Search on 'more than 150 million Android devices.'
Not only will Circle to Search be accessible to more users, but Google has announced that Circle to Search can now identify songs in movies and other audio heard while browsing the web. Hear a song you like in a YouTube video? Simply circle the video and search to get the song title. Google's announcements today usher in a new era for Google Search and Lens, which emphasizes that users will just have to come to terms with the AI revolution. With better AI optimization in search results and new ways to search by using video or voice, it's clear that Google sees AI as a pillar in the future of the company's search engine. AI has slowly been implemented into our regular search results and with constant optimizations, like the addition of links in today's updates, it's only a matter of time before you won't have a choice but to use an artificially intelligent search engine.

[15]

Google is shaking up search with another huge AI update

Hopefully this update goes smoother than Google's "glue pizza" mishap

With AI Overviews in the rearview, Google is rolling out even more ambitious new search updates. Its latest rollout, announced on Thursday, includes new AI features in Lens, Circle to Search, and regular Google searches, all of which are meant to simplify and expand the traditional web search experience. With the rollout (and others previously) it's clear Google is on a mission to bring AI to every corner of search, but so far the results have been mixed. Google got off to a rocky start earlier this year with AI overviews that promoted false and misleading answers to search queries. Whether its next set of features will fare better remains to be seen, but one thing is for sure: AI is on a collision course with web search and you can expect even more updates in the pipeline. Here's everything you need to know about Google's latest search update. On October 3, Google released a blog post detailing a slew of updates across its search platform. They fall into a few main categories: Google Lens, Circle to Search, and general Google searches. Google Lens now supports video understanding, meaning you can record a video while asking questions about what you're filming. Google's AI can understand the content of the video and connect it to your questions. There was a demo of this feature over the summer at Google I/O, but now it's publicly available as a Labs feature in the Google app. I wasn't able to try out this feature just yet, but it could definitely be useful if it works. Early on it will probably handle simple questions and videos best, so I'll be curious to see how well it processes videos with a lot of moving objects or more complex queries. Similarly, Google Lens also supports voice questions alongside photos now. When you take a photo in Lens, you can hold the shutter button to record your voice question at the same time. This feature is fairly straightforward.
Photo searches with Lens have been generally useful and accurate in my experience and this feature simply allows you to use voice searches at the same time. Finally, the shopping features in Google Lens got a makeover. This latest update aims to make Google's AI better at identifying specific products in Lens photos and offering better shopping info, such as prices across different sites. Circle to Search got a smaller update, but one that many people will probably appreciate (assuming it works as advertised). Circle to Search can now identify songs. That might sound a bit confusing since you can't exactly "circle" music. This feature is actually a button in the Circle to Search bar that you can tap and hold to search for a song playing in an app or something you're hearing in real life. It's a bit odd to file it under Circle to Search, but it's a useful feature nonetheless. Meanwhile, general Google searches are getting a couple of new AI features, one of which will be more welcome than the other to many people. Google search results will now be AI-organized, meaning Google's AI will automatically sort results based on your search. The feature is launching first with recipe-related searches, which make a good example. When you search for a recipe now, you may see AI-organized results with categories like top recipes, related ingredients, and guides. Most of the features Google announced today have the potential to be useful if they work properly (meaning, they ideally don't recommend glue as a pizza ingredient). However, users may not be as excited about the final change in today's announcements: the addition of ads in Google's AI Overview box. It's no surprise Google is trying to monetize this section since it's right at the top of the search results, but it could make the AI Overview box something people start scrolling past more often. 
The AI Overview box was the subject of an embarrassing fumble for Google when it launched earlier this year and immediately started giving users strange, inaccurate results unearthed from far-flung corners of the Internet like old Reddit posts. The feature seems to be working better since Google improved the detection system and restrictions on AI Overview results back in May. However, many users may still be skeptical about trusting AI Overview content. Throwing ads into the mix probably isn't going to help. Luckily the ads appear to be sectioned off from the main content of AI Overview boxes, so users can't easily get sponsored links mixed up with legitimate Overview sources. Even so, there's still the question of how well Google's AI can match search results with sponsored links. Given AI Overview's rocky start, will advertisers be hesitant to pay for ads in this section of search results? We'll have to wait and see. You can try out all of these new AI features starting today in the Google app on iOS and Android, although there are some restrictions. Voice and video searches in Lens only support English questions right now. Likewise, AI-organized search results are only for users in the U.S. for the time being and will only support recipe and meal-related searches at launch. To get started trying out today's Google search updates, open the Google app on your phone and tap the little beaker icon in the top right corner to access your Google Labs settings. This is where you can opt in to experimental features Google is testing, including AI Overview. Find it in the list and toggle it on to start using Google's latest AI features. Laptop Mag will be covering all the news on AI developments at Google, AI Overview, and more, so stay tuned for further updates.

[16]

3 newly announced ways Google Search is using AI

On Thursday, Google announced new search-related updates. In addition to the broad rollout of the redesigned AI Overviews feature, which makes links to publications more prominent, along with -- whomp, whomp -- ads on AI Overviews, Google touted some entirely new features. Here's what's happening with Lens, Circle to Search, and Google's entirely new experience for searching for recipes. The Lens tool that's handy for searching visual elements can now process videos and voice. It works in the Google app by pointing the camera at something that's best understood with a moving image (like a school of fish swimming in a circle, as Google demonstrated in the demo). Hold down the shutter to record the video and ask Lens a question, like "why are they swimming together?" Lens will process the video and your question to give you a response in the form of an AI Overview, plus relevant resources to click on. Lens for video is currently only available for those with access to Google's testing ground, Search Labs. Lens for photos with voice prompts is live for Android and iOS users through the Google app. Circle to Search is another visual search tool that debuted earlier this year. Android users can circle or scribble over an object in an image and look something up on the page without having to switch apps. But now they can also search for a song in a video on social media or a streaming app in the same manner. Obviously, you can't physically circle a song, so instead, you click on the musical note icon next to the search bar at the bottom of the page. Google's AI model will then process the audio and pull up information about the song. Circle to Search for music is available to Android users with compatible Google Pixel and Samsung Galaxy devices. Google's search results are going to look a little different. With recipes and meal inspiration for starters, open-ended queries will show search results that have been organized by Google's AI models.
Starting this week in the U.S. on mobile, users will start to see the full page of results broken down in subcategories of the initial query. The feature combines Google's Gemini AI models with its core Search algorithm to surface relevant topics based on a request for, say, vegetarian appetizer ideas. AI-organized search results are available on the mobile Google app.

[17]

Google Just Announced Five New AI Features Coming to Search

When you think of Google Search, it's probably as a simple concept: You open Google, type a search, and scroll through the results -- dodging ads and spam along the way. But Google's bread and butter is actually more than just its basic search engine. You can "google" questions and queries in a variety of ways, across a variety of products and services. Google continues to update and upgrade these options, and today's announcement is just the latest development. Here are five new features coming to Search starting today. Circle to Search has been available since the start of this year, and allows you to, quite literally, circle items on your phone's display to start a Google Search for them. It's an interesting feature, and one that's getting a useful upgrade: Now, you'll be able to look up a song that's playing using Circle to Search. You can use it by activating Circle to Search (long-press the Home button or navigation bar), then by tapping the new music button to search for music playing on or around your phone. Compatible songs will also appear as YouTube links if you want to pull up the full song as well. This actually isn't brand new: Google has been testing this feature out since August, and started rolling it out first with the Galaxy S24 series. But it's cool to see it coming out for more devices generally now. While you've been able to do this using both Google's main app and the YouTube app, it's a useful shortcut for anyone using Circle to Search anyway. As much as I may wish Google would stop injecting search with AI features, the company is pushing forward with little to no reservations. Case in point: Google is now rolling out AI-organized search results that group your results into relevant categories. Google says this initial roll-out focuses first on "recipe and meal inspiration queries," which makes sense, I suppose.
If you're searching for ideas for a dinner, for example, it could be useful to group together varying results into a cohesive structure for a final recipe. This is pure speculation, but maybe you first see a few different recipes, then under that, the ingredients necessary for each. Perhaps from there, you can find stores that sell what you're looking for. That could, potentially, save time, and make it easier to try a new dish you've been thinking about. In practice, we'll see how that actually goes. AI Overviews, for example, was a disaster when it launched, to the point where I actively avoid the company's AI-generated results when I search. In fact, there are ways to make Google show you the good search results again, and avoid the AI slop altogether. But yeah, sure: Keep adding AI to Search, Google. For years, Google Lens has been a useful way to search images you take with your smartphone. Using Lens, you can take a photo of a plant and learn more about it, or copy text out of an image using OCR (optical character recognition) and paste it somewhere else. Now, Google is rolling out a new feature that lets you dictate requests to Google Lens using your voice. Google says you can simply hold down the shutter button in Lens, then ask whatever you want. Rather than snap a picture, scan it, and hope you get the results you want, you can point your camera over a plant and ask Google what species it is, how much one typically costs, and how to care for it. This will work with Circle to Search, too, so you can long-press your Home button or navigation bar, circle a product you see on-screen, and learn more about it. Plus, Google says you can now add text to these searches to refine what you're looking for. If you like a bag for example, but want it in a different color, add the word "blue" or "red" to your search to see if Google can find the item in the color you prefer. 
On a similar note, you can now share video with Lens to search, so long as you're enrolled in Search Labs' "AI Overviews and more" experiments. The feature is currently in testing, so it might not work exactly as intended yet, but if you do opt-in, you can try searching a video you record directly in Lens. Like searching with your voice, you can use this feature by holding down the shutter button to record a video while asking a question aloud. Google's AI model will then process both the video and your question, and return the result as an AI Overview. (Fantastic.) In theory, however, it sounds like it could be useful: If you have a question about something, say, why your tablet's display is flickering, you could record it, ask Lens the question out loud, and hopefully find a more accurate answer than a standard Google search would have returned. Another new Lens feature is all about making shopping simpler: When you take a photo of a product with Lens, your phone will now display an organized results page of information relating to purchasing said product: You'll see reviews, the prices different retailers carry the item for, and links to go shop for that item.


Google announces significant AI upgrades to its search engine, enabling voice-activated queries about images and videos, and introducing AI-organized search results. This move aims to simplify search and attract younger users, despite past challenges with AI-generated misinformation.

Google's AI-Driven Search Evolution

Google has unveiled a major artificial intelligence upgrade to its search engine, marking a significant step in its AI-driven transformation. This latest development, announced on Thursday, builds upon the AI makeover initiated in mid-May with the introduction of "AI Overviews" [1].

Voice-Activated Image and Video Queries

The new feature allows users to voice questions about images and videos they are viewing through their camera lens. This enhancement is an extension of Google's 7-year-old Lens feature, which now generates over 20 billion queries per month [2]. Users can ask questions in English about objects they see, as if conversing with a friend, and receive search results instantly.

AI-Organized Search Results

Google is also introducing AI-organized search results, starting with queries about recipes and meal ideas on mobile devices. The results will be presented in clusters of photos, videos, and articles, offering a more comprehensive and visually appealing search experience [3].

Targeting Younger Demographics

This AI evolution is particularly popular among users aged 18 to 24, a demographic Google is keen to cultivate as it faces competition from AI-powered alternatives like ChatGPT and Perplexity [4]. The company aims to make search more intuitive and accessible across various platforms and contexts.

Addressing Publisher Concerns

The introduction of AI Overviews raised concerns among publishers about potential traffic loss. Google is addressing these worries by inserting more links to other websites within the AI Overviews [5]. A recent analysis by BrightEdge revealed that while visits to general news publishers have decreased, traffic to specialized sites has increased.

Challenges and Missteps

Despite the advancements, Google has faced challenges with AI-generated misinformation. Previous incidents included the AI suggesting putting glue on pizza and eating rocks. The company attributed these errors to data voids and deliberate attempts to mislead the AI [2].

Google's AI-Centric Future

This latest update underscores Google's commitment to AI as the driving force behind its future. With its search engine being the cornerstone of its $2 trillion empire, the company is clearly betting on AI to maintain its dominance in the rapidly evolving tech landscape [3].

Summarized by Navi
