Google reports state-sponsored hackers exploit Gemini AI across all stages of cyberattacks

15 Sources

[1]

Attackers prompted Gemini over 100,000 times while trying to clone it, Google says

On Thursday, Google announced that "commercially motivated" actors have attempted to clone knowledge from its Gemini AI chatbot by simply prompting it. One adversarial session reportedly prompted the model more than 100,000 times across various non-English languages, collecting responses ostensibly to train a cheaper copycat. Google published the findings in what amounts to a quarterly self-assessment of threats to its own products that frames the company as the victim and the hero, which is not unusual in these self-authored assessments. Google calls the illicit activity "model extraction" and considers it intellectual property theft, which is a somewhat loaded position, given that Google's LLM was built from materials scraped from the Internet without permission. Google is also no stranger to the copycat practice. In 2023, The Information reported that Google's Bard team had been accused of using ChatGPT outputs from ShareGPT, a public site where users share chatbot conversations, to help train its own chatbot. Senior Google AI researcher Jacob Devlin, who created the influential BERT language model, warned leadership that this violated OpenAI's terms of service, then resigned and joined OpenAI. Google denied the claim but reportedly stopped using the data. Even so, Google's terms of service forbid people from extracting data from its AI models this way, and the report is a window into the world of somewhat shady AI model-cloning tactics. The company believes the culprits are mostly private companies and researchers looking for a competitive edge, and said the attacks have come from around the world. Google declined to name suspects. 
The deal with distillation

Typically, the industry calls this practice of training a new model on a previous model's outputs "distillation," and it works like this: If you want to build your own large language model (LLM) but lack the billions of dollars and years of work that Google spent training Gemini, you can use a previously trained LLM as a shortcut. To do so, you need to feed the existing AI model thousands of carefully chosen prompts, collect all the responses, and then use those input-output pairs to train a smaller, cheaper model. The result will closely mimic the parent model's output behavior but will typically be smaller overall. It's not perfect, but it can be a far more efficient training technique than hoping to build a useful model on random Internet data that includes a lot of noise. The copycat model never sees Gemini's code or training data, but by studying enough of its outputs, it can learn to replicate many of its capabilities. You can think of it as reverse-engineering a chef's recipes by ordering every dish on the menu and working backward from taste and appearance alone.

In the report published by Google, its threat intelligence group describes a growing wave of these distillation attacks against Gemini. Many of the campaigns specifically targeted the algorithms that help the model perform simulated reasoning tasks, or decide how to process information step by step. Google said it identified the 100,000-prompt campaign and adjusted Gemini's defenses, but it did not detail what those countermeasures involve.

A clone of a clone

Google is not the only company worried about distillation. OpenAI accused Chinese rival DeepSeek last year of using distillation to improve its own models, and the technique has since spread across the industry as a standard for building cheaper, smaller AI models from larger ones.
The line between standard distillation and theft depends on whose model you're distilling and whether you have permission, a distinction that tech companies have spent billions of dollars trying to protect but that no court has tested. Competitors have been using distillation to clone AI language model capabilities since at least the GPT-3 era, with ChatGPT a popular target after its launch. In March 2023, shortly after Meta's LLaMA model weights leaked online, Stanford University researchers built a model called Alpaca by fine-tuning LLaMA on 52,000 outputs generated by OpenAI's GPT-3.5. The total cost was about $600. The result behaved so much like ChatGPT that it raised immediate questions about whether any AI model's capabilities could be protected once it was accessible through an API. Later that year, Elon Musk's xAI launched its Grok chatbot, which promptly cited "OpenAI's use case policy" when refusing certain requests. An xAI engineer blamed accidental ingestion of ChatGPT outputs during web scraping, but the specificity of the behavior, down to ChatGPT's characteristic refusal phrasing and habit of wrapping responses with "Overall..." summaries, left many in the AI community unconvinced. As long as an LLM is accessible to the public, no foolproof technical barrier prevents a determined actor from doing the same thing to someone else's model over time (though rate-limiting helps), which is exactly what Google says happened to Gemini. Distillation also occurs within companies, and it's frequently used to create smaller, faster-to-run versions of older, larger AI models. OpenAI created GPT-4o Mini as a distillation of GPT-4o, for example, and Microsoft built its compact Phi-3 model family using carefully filtered synthetic data generated by larger models. DeepSeek has also officially published six distilled versions of its R1 reasoning model, the smallest of which can run on a laptop.
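
As a toy illustration of the query, collect, and train loop this article describes, the Python sketch below treats a simple function as the "teacher" and fits a "student" using nothing but recorded input-output pairs. Everything here is a hypothetical stand-in: the names `teacher`, `collect_pairs`, and `fit_student` are invented for illustration, the teacher is a one-line formula rather than an LLM, and the student is fit by ordinary least squares rather than neural network training. It is not how any real campaign or vendor pipeline works; it only shows why access to outputs alone can be enough to reproduce behavior.

```python
import random


def teacher(x: float) -> float:
    """Hypothetical black-box model: callers see outputs, never the internals."""
    return 3.0 * x + 2.0  # hidden parameters the student never reads directly


def collect_pairs(n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Query the teacher n times and record (input, output) pairs."""
    rng = random.Random(seed)
    inputs = [rng.uniform(-10.0, 10.0) for _ in range(n)]
    return [(x, teacher(x)) for x in inputs]


def fit_student(pairs: list[tuple[float, float]]) -> tuple[float, float]:
    """Fit a linear student to the recorded pairs by ordinary least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# The student is trained only on what the teacher said, not on how it works.
pairs = collect_pairs(1000)
slope, intercept = fit_student(pairs)


def student(x: float) -> float:
    """Cheap copy that mimics the teacher's output behavior."""
    return slope * x + intercept
```

In this toy case the student recovers the teacher's behavior almost exactly because the teacher is trivially simple. With a real LLM the copy is approximate, which matches the article's point that the result "closely mimics" the parent model without being perfect.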

[2]

Hackers Are Trying to Copy Gemini via Thousands of AI Prompts, Google Reports

A new report from Google warns the industry of attempts to clone AI models. In a new Threat Tracker report published Thursday, Google said hackers are engaging in "distillation attacks," including one case in which they used more than 100,000 AI prompts to steal the company's technology for its Gemini AI model. Google said the attacks seem to be coming from adversaries in countries including North Korea, Russia and China, and that the attempts to steal AI intellectual property and likely clone it into AI models in other languages are part of a broader set of AI-based attacks and malware the company has seen emerge. The company identifies these attempts as model extraction attacks, which, it says, "occur when an adversary uses legitimate access to systematically probe a mature machine learning model to extract information used to train a new model." That could mean using AI to flood Gemini with thousands of prompts to replicate its model capabilities. Google noted in the report that this is not a threat to its users, but rather to service providers and model builders, who could be vulnerable to having their work stolen and replicated. John Hultquist, chief analyst for the Google Threat Intelligence Group, which put together the report, told NBC News that Google may be one of the first companies to face these types of theft attempts, but there could be many more. "We're going to be the canary in the coal mine for far more incidents," he said. The war over AI models has intensified on several fronts, most recently with Chinese companies such as ByteDance introducing advanced video generation tools. Last year, Chinese AI company DeepSeek rattled the AI industry, which had been primarily led by US companies, by introducing a model that rivaled the world's top AI technology. OpenAI later accused DeepSeek of training its AI on existing technology in ways similar to those described by Google in its new report.
(Disclosure: Ziff Davis, CNET's parent company, in 2025 filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

[3]

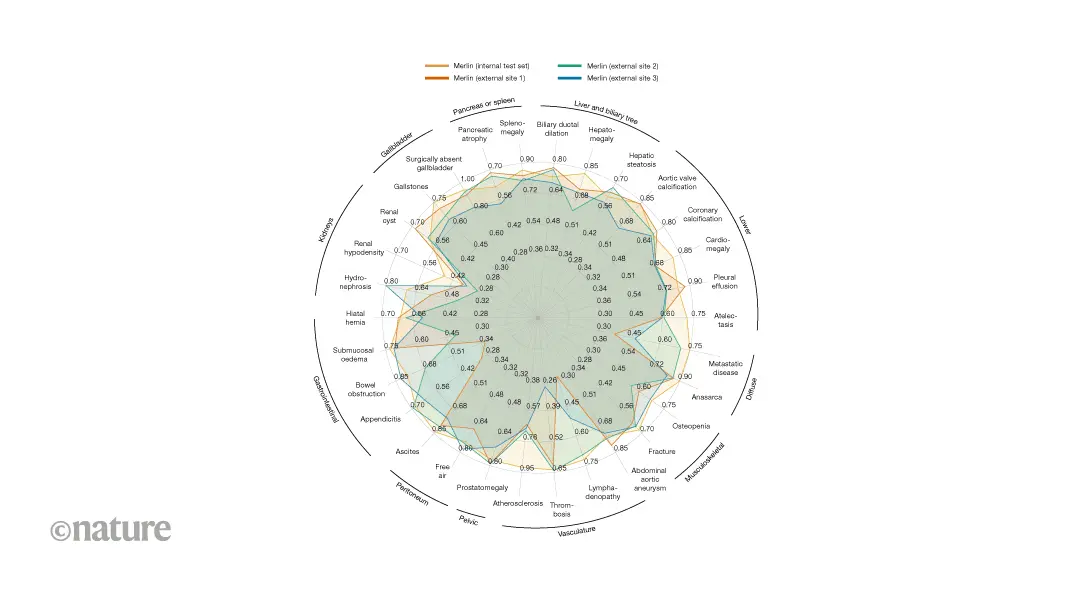

Google reports that state hackers from China, Russia and Iran are using Gemini in 'all stages' of attacks -- phishing lures, coding and vulnerability testing get AI underpinnings from hostile actors

Google's Gemini AI models have become a core component of state-sponsored hackers' attack vectors. Although AI use has been growing in white and black hat hacking in recent years, Google now says it's used in all parts of the attack process, from target acquisition to coding, social engineering message generation, and follow-up actions after the hack, as outlined in Google's latest Threat Intelligence Group report. Although AI-produced malware and hackers using AI have been fears expressed by many since the advent of mainstream large language models in 2023, it was only in August 2025 that many hailed the arrival of the age of AI hacking. Large language models have grown capable enough to act as useful tools for hackers with enough versatility and reliability to be consistently effective. The first AI-powered ransomware showed up just days later, and Anthropic claimed to have foiled the first AI-powered malware attack in November. There have been just as many instances of AI itself being vulnerable to attackers, too, and it's not like the AI developers even have perfect security records. But Google's admission of Gemini's involvement in every facet of modern hacks marks a paradigm shift. It has now tracked incidents of nation-state hacking groups utilizing Gemini for everything "from reconnaissance and phishing lure creation to command and control (C2) development and data exfiltration." Different countries and their hacking groups are allegedly using Gemini comprehensively, the Google report explains. Chinese threat actors, for example, had Gemini adopt an expert cybersecurity persona to conduct vulnerability analysis and provide penetration testing plans for potential attack targets. "The PRC-based threat actor fabricated a scenario, in one case trialing Hexstrike MCP tooling, and directing the model to analyze Remote Code Execution (RCE), WAF bypass techniques, and SQL injection test results against specific US-based targets," the report reads.
North Korea, on the other hand, primarily uses Gemini as part of phishing attacks. It used Gemini to profile high-value targets to plan out attacks. It is particularly used to go after members of security and defence companies, and attempt to find vulnerable targets within their orbits. Iran was much the same, with its government-backed hackers using Gemini to search for official emails for specific targets and to conduct research into business partners of potential targets. They also used AI to generate personas that might have a good reason to engage with a target by feeding Gemini biographical information. One way in which all the tracked state actors are using Gemini is in producing AI slop, but specific, targeted, and deliberate slop. Political satire, propaganda, articles, and memes and images designed to rile up Western audiences were common uses of Gemini and its generative tools. "Threat actors from China, Iran, Russia, and Saudi Arabia are producing political satire and propaganda to advance specific ideas across both digital platforms and physical media, such as printed posters," the report says. Although Google did confirm it hadn't seen these assets deployed into the wild, suggesting this use of Gemini may still be in its nascent stages, it's still something Google took seriously. To mitigate any potential negative effects, Google disabled assets associated with these actors' activities, and Google DeepMind, in turn, used these insights to improve its protections against misuse of Gemini services. Gemini is now less likely to assist in generating this kind of content in the future. Google's report also highlights a high appetite among hackers for bespoke AI hacking tools. It cites one example of an underground toolkit called "Xanthorox," which is advertised as custom AI for cyber offensive campaigns. It claims to be able to generate malicious malware code and construct custom phishing campaigns. 
It's even sold with the idea that it is "privacy preserving" for the user. But under the hood, Xanthorox is just an API that leverages existing general AI models like Gemini. "This setup leverages a key abuse vector: the integration of multiple open-source AI products -- specifically Crush, Hexstrike AI, LibreChat-AI, and Open WebUI -- opportunistically leveraged via Model Context Protocol (MCP) servers to build an agentic AI service upon commercial models," Google explains. Google highlights that because these kinds of tools require making lots of API calls to the various AI models, organizations with large allocations of API tokens become excellent targets for account hijacking. This is creating a black market for API keys, adding financial incentive to acquiring them, and placing greater emphasis on the importance of organizations securing them and their employees' access to AI tools. Google did observe some actors attempting to use Gemini and other AI to augment existing malware and generate new malicious software. Although it claims not to have noted any particular advances in this area, it is something being actively explored and is likely to advance in the future. HonestCue is one proof-of-concept AI malware framework that uses Gemini to generate code for second-stage malware. The malware infects a machine, then contacts Gemini and generates new code for a second attack. Google also noted a ClickFix campaign that used social engineering within a chatbot to encourage users to download malicious files, bypassing security methods. As Google tracks these attempts, and others by state actors, it continues to disable accounts, block access to assets, and update the Gemini model so it's less susceptible to these kinds of manipulations and attacks in the future. Like traditional anti-malware defences, though, anti-AI attacks look set to be a cat-and-mouse game that is unlikely to end any time soon.

[4]

Google: China's APT31 used Gemini to plan US cyberattacks

A Chinese government hacking group that has been sanctioned for targeting America's critical infrastructure used Google's AI chatbot, Gemini, to auto-analyze vulnerabilities and plan cyberattacks against US organizations, the company says. While there's no indication that any of these attacks were successful, "APT groups like this continue to experiment with adopting AI to support semi-autonomous offensive operations," Google Threat Intelligence Group chief analyst John Hultquist told The Register. "We anticipate that China-based actors in particular will continue to build agentic approaches for cyber offensive scale." In the threat-intel group's most recent AI Threat Tracker report, released on Thursday and shared with The Register in advance, Google attributes this activity to APT31, a Beijing-backed crew also known as Violet Typhoon, Zirconium, and Judgment Panda. This goon squad was one of many exploiting a series of Microsoft SharePoint bugs over the summer, and in March 2024, the US issued sanctions against and criminally charged seven APT31 members accused of breaking into computer networks, email accounts, and cloud storage belonging to numerous high-value targets. The most recent attempts by APT31 to use Google's Gemini AI tool happened late last year, we're told. "APT31 employed a highly structured approach by prompting Gemini with an expert cybersecurity persona to automate the analysis of vulnerabilities and generate targeted testing plans," according to the report. In one case, the China-based gang used Hexstrike, an open source red-teaming tool built on the Model Context Protocol (MCP), to analyze various exploits - including remote code execution, web application firewall (WAF) bypass techniques, and SQL injection - "against specific US-based targets," the Googlers wrote.
Hexstrike enables models, including Gemini, to execute more than 150 security tools with a slew of capabilities, including network and vulnerability scanning, reconnaissance, and penetration testing. Its intended use is to help ethical hackers and bug hunters find security weaknesses and collect bug bounties - but shortly after its release in mid-August, criminals began using the AI platform for more nefarious purposes. Integrating Hexstrike with Gemini "automated intelligence gathering to identify technological vulnerabilities and organizational defense weaknesses," the AI threat tracker says, noting that Google has since disabled accounts linked to this campaign. "This activity explicitly blurs the line between a routine security assessment query and a targeted malicious reconnaissance operation." Google's report, which picks up where its November 2025 analysis left off, details how government-backed groups and cybercriminals alike are abusing Google's AI tools, along with the steps the Chocolate Factory has implemented to stop them. And it finds that attackers - just like everybody else on the planet - have a keen interest in agentic AI's capabilities to make their lives and jobs easier. "The adversaries' adoption of this capability is so significant - it's the next shoe to drop," Hultquist said. He explained there are two areas that Google is most concerned about. "One is the ability to operate across the intrusion," he said, noting the earlier Anthropic report about Chinese cyberspies abusing its Claude Code AI tool to automate most elements of attacks directed at high-profile companies and government organizations. In "a small number of cases," they even succeeded. "The other is automating the development of vulnerability exploitation," Hultquist said. "These are two ways where adversaries can get major advantages and move through the intrusion cycle with minimal human interference. That allows them to move faster than defenders and hit a lot of targets." 
In addition, using AI agents to find vulnerabilities and test exploits widens the patch gap - the time between the bug becoming known and a full working fix being deployed and implemented. "It's a really significant space currently," Hultquist said. "In some organizations, it takes weeks to put defenses in place." This requires security professionals to think differently about defense, using AI to respond and fix security weaknesses more quickly than humans can on their own. "We are going to have to leverage the advantages of AI, and increasingly remove humans from the loop, so that we can respond at machine speed," Hultquist noted. The latest report also found an increase in model extraction attempts - what it calls "distillation attacks" - and says both GTIG and Google DeepMind identified miscreants attempting to perform model extraction on Google's AI products. This is a type of intellectual property theft used to gain insights into a model's underlying reasoning and chain-of-thought processes. "This is coming from threat actors throughout the globe," Hultquist said. "Your model is really valuable IP, and if you can distill the logic behind it, there's very real potential that you can replicate that technology - which is not inexpensive." This essentially gives criminals and shady companies the ability to accelerate AI model development at a much lower cost, and Google's report cites "model stealing and capability extraction emanating from researchers and private sector companies globally." ®

[5]

Google says hackers are abusing Gemini AI for all attack stages

State-backed hackers are using Google's Gemini AI model to support all stages of an attack, from reconnaissance to post-compromise actions. Bad actors from China (APT31, Temp.HEX), Iran (APT42), North Korea (UNC2970), and Russia used Gemini for target profiling and open-source intelligence, generating phishing lures, translating text, coding, vulnerability testing, and troubleshooting. Cybercriminals are also showing increased interest in AI tools and services that could help in illegal activities, such as social engineering ClickFix campaigns. The Google Threat Intelligence Group (GTIG) notes in a report today that APT adversaries use Gemini to support their campaigns "from reconnaissance and phishing lure creation to command and control (C2) development and data exfiltration." Chinese threat actors employed an expert cybersecurity persona to request that Gemini automate vulnerability analysis and provide targeted testing plans in the context of a fabricated scenario. "The PRC-based threat actor fabricated a scenario, in one case trialing Hexstrike MCP tooling, and directing the model to analyze Remote Code Execution (RCE), WAF bypass techniques, and SQL injection test results against specific US-based targets," Google says. Another China-based actor frequently employed Gemini to fix their code, carry out research, and provide advice on technical capabilities for intrusions. The Iranian adversary APT42 leveraged Google's LLM for social engineering campaigns, as a development platform to speed up the creation of tailored malicious tools (debugging, code generation, and researching exploitation techniques). Additional threat actor abuse was observed for implementing new capabilities into existing malware families, including the CoinBait phishing kit and the HonestCue malware downloader and launcher. 
GTIG notes that no major breakthroughs have occurred in that respect, though the tech giant expects malware operators to continue to integrate AI capabilities into their toolsets. HonestCue is a proof-of-concept malware framework observed in late 2025 that uses the Gemini API to generate C# code for second-stage malware, then compiles and executes the payloads in memory. CoinBait is a React SPA-wrapped phishing kit masquerading as a cryptocurrency exchange for credential harvesting. It contains artifacts indicating that its development was advanced using AI code generation tools. One indicator of LLM use is logging messages in the malware source code that were prefixed with "Analytics:," which could help defenders track data exfiltration processes. Based on the malware samples, GTIG researchers believe that the malware was created using the Lovable AI platform, as the developer used the Lovable Supabase client and lovable.app. Cybercriminals also used generative AI services in ClickFix campaigns, delivering the AMOS info-stealing malware for macOS. Users were lured to execute malicious commands through malicious ads listed in search results for queries on troubleshooting specific issues. The report further notes that Gemini has faced AI model extraction and distillation attempts, with organizations leveraging authorized API access to methodically query the system and reproduce its decision-making processes to replicate its functionality. Although the problem is not a direct threat to users of these models or their data, it constitutes a significant commercial, competitive, and intellectual property problem for the creators of these models. Essentially, actors take information obtained from one model and transfer the information to another using a machine learning technique called "knowledge distillation," which is used to train fresh models from more advanced ones. 
"Model extraction and subsequent knowledge distillation enable an attacker to accelerate AI model development quickly and at a significantly lower cost," GTIG researchers say. Google flags these attacks as a threat because they constitute intellectual theft, they are scalable, and severely undermine the business model of AI-as-a-service, which has the potential to impact end users soon. In a large-scale attack of this kind, Gemini AI was targeted by 100,000 prompts that posed a series of questions aimed at replicating the model's reasoning across a range of tasks in non-English languages. Google has disabled accounts and infrastructure tied to documented abuse, and has implemented targeted defenses in Gemini's classifiers to make abuse harder. The company assures that it "designs AI systems with robust security measures and strong safety guardrails" and regularly tests the models to improve their security and safety.

[6]

State-sponsored hackers love Gemini, Google says

Google restricts access for identified bad actors, but the report highlights AI's dual-use nature and emerging cybersecurity challenges. "AI" systems aren't just great for raising the price of your electronics, giving you wrong search results, and filling up your social media feed with slop. It's also handy for hackers! Apparently the large language model of choice for state-sponsored attacks from countries like Russia, China, North Korea, and Iran is Google Gemini. And that's according to Google itself. In a sprawling report on what it repeatedly calls a violation of its terms of service, Google's Threat Intelligence Group documents uses of Gemini by attackers associated with the aggressive nations. Most of the documented use of Gemini is automated surveillance, identifying high-value targets and vulnerabilities, including corporations, separatist groups, and dissenters. But hacking groups associated with China and Iran have been spotted running more sophisticated campaigns, including debugging exploit code and social engineering. One attack from a group with ties to Iran was developing a proof-of-concept exploit for a well-known flaw in WinRAR. For all my grousing on "AI", one thing that large language models are genuinely good at is examining and distilling huge amounts of data. The advancements in machine learning allow for searching through data sets that would take teams of humans years to examine -- this is being applied in less nefarious ways in fields like astronomy and cancer research. This is a definite boon for hackers, who need to perform huge amounts of tedious data processing in order to find software vulnerabilities, plus tons of more conventional sifting to identify targets and social engineering techniques. One example stood out to me. 
A group labelled internally as APT31 used an example Gemini prompt like "I'm a security researcher who is trialling out the Hexstrike MCP tooling," using a system that connects "AI agents" with preexisting security tools to test for vulnerabilities and other attack vectors. Naturally, Gemini can't tell the difference between a legitimate security researcher (white hat) and a malicious hacker (black hat), since a lot of their work overlaps both conceptually and practically. So the answers it provides to both would be the same...for all that Google claims using Gemini in this manner is against the rules. Gemini is also used for more mundane coding systems, writing and debugging code for malware. And yes, "AI slop" is thick on the ground, sometimes literally. "Threat actors from China, Iran, Russia, and Saudi Arabia are producing political satire and propaganda to advance specific ideas across both digital platforms and physical media, such as printed posters," says the Google report. Google claims that it's restricted access to Gemini for users that it can confidently identify as malicious, including the detected state-sponsored hacking teams.

[7]

AI malware, Gemini lures and more: Google reveals how hackers are actually using AI

State-sponsored groups are creating highly convincing phishing kits and social engineering campaigns

If you've used any modern AI tools, you'll know they can be a great help in reducing the tedium of mundane and burdensome tasks. Well, it turns out threat actors feel the same way, as the latest Google Threat Intelligence Group AI Threat Tracker report has found that attackers are using AI more than ever. From figuring out how AI models reason in order to clone them, to integrating AI into attack chains to bypass traditional network-based detection, GTIG has outlined some of the most pressing threats - here's what they found. For starters, GTIG found threat actors are increasingly using 'distillation attacks' to quickly clone large language models for their own purposes. Attackers will use a huge volume of prompts to find out how the LLM reasons with queries, and then use the responses to train their own model. Attackers can then use their own model to avoid paying for the legitimate service, use the distilled model to analyze how the LLM is built, or search for ways to exploit their own model which can also be used to exploit the legitimate service. AI is also being used to support intelligence gathering and social engineering campaigns. Both Iranian and North Korean state-sponsored groups have utilized AI tools in this way, with the former using AI to gather information on business relationships in order to create a pretext for contact, and the latter using AI to amalgamate intelligence to help plan attacks. GTIG has also spotted a rise in AI usage for creating highly convincing phishing kits for mass distribution in order to harvest credentials. Moreover, some threat actors are integrating AI models into malware to allow it to adapt to avoid detection. One example, tracked as HONESTCUE, dodged network-based detection and static analysis by using Gemini to rewrite and execute code during an attack.
But not all threat actors are alike. GTIG has also noted that there is a serious demand for custom AI tools built for attackers, with specific calls for tools capable of writing code for malware. For now, attackers are reliant on using distillation attacks to create custom models to use offensively. But if such tools were to become widely available and easy to distribute, it is likely that threat actors would quickly adopt malicious AI into attack vectors to improve the performance of malware, phishing, and social engineering campaigns. In order to defend against AI-augmented malware, many security solutions are deploying their own AI tools to fight back. Rather than relying on static analysis, AI can be used to analyze potential threats in real time to recognize the behavior of AI-augmented malware. AI is also being employed to scan emails and messages in order to spot phishing in real time at a scale that would require thousands of hours of human work. Moreover, Google is actively seeking out potentially malicious AI usage in Gemini, and has deployed a tool to help seek out software vulnerabilities (Big Sleep), and a tool to help in patching vulnerabilities (CodeMender).

[8]

Hackers are using Gemini to target you, Google says

Google links Gemini use to recon, phishing, coding, and post-breach activity.

Google says hackers are abusing Gemini to speed up cyberattacks, and it isn't limited to cheesy phishing spam. In a new Google Threat Intelligence Group report, the company says state-backed groups have used Gemini across multiple phases of an operation, from early target research to post-compromise work. The activity spans clusters linked to China, Iran, North Korea, and Russia. Google says the prompts and outputs it observed covered profiling, social engineering copy, translation, coding help, vulnerability testing, and debugging when tools break during an intrusion. Fast help on routine tasks can still change the outcome.

AI help, same old playbook

Google's researchers frame the use of AI as acceleration, not magic. Attackers already run recon, draft lures, tweak malware, and chase down errors. Gemini can tighten that loop, especially when operators need quick rewrites, language support, or code fixes under pressure.

The report describes Chinese-linked activity where an operator adopted an expert cybersecurity persona and pushed Gemini to automate vulnerability analysis and produce targeted test plans in a made-up scenario. Google also says a China-based actor repeatedly used Gemini for debugging, research, and technical guidance tied to intrusions. It's less about new tactics, more about fewer speed bumps.

The risk isn't just phishing

The big shift is tempo. If groups can iterate faster on targeting and tooling, defenders get less time between early signals and real damage. That also means fewer obvious pauses where mistakes, delays, or repeated manual work might surface in logs. Google also flags a different threat that doesn't look like classic scams at all: model extraction and knowledge distillation. In that scenario, actors with authorized API access hammer the system with prompts to replicate how it performs and reasons, then use that knowledge to train another model. Google frames it as commercial and intellectual property harm, with potential downstream risk if it scales, including one example involving 100,000 prompts aimed at replicating behavior in non-English tasks.

What you should watch next

Google says it has disabled accounts and infrastructure tied to documented Gemini abuse, and it has added targeted defenses in Gemini's classifiers. It also says it continues testing and relies on safety guardrails. For security teams, the practical takeaway is to assume AI-assisted attacks will move quicker, not necessarily smarter. Track sudden improvements in lure quality, faster tooling iteration, and unusual API usage patterns, then tighten response runbooks so speed doesn't become the attacker's biggest advantage.

[9]

State-Sponsored Hackers Using Popular AI Tools Including Gemini, Google Warns - Decrypt

Hackers are taking an interest in agentic AI to put AI fully in control of attacks. Google's Threat Intelligence Group (GTIG) is sounding the alarm once again on the risks of AI, publishing its latest report on how artificial intelligence is being used by dangerous state-sponsored hackers. The team has identified an increase in model extraction attempts, a method of intellectual property theft where someone queries an AI model repeatedly, trying to learn its internal logic and replicate it in a new model. While this is worrying, it isn't the main risk that Google is voicing concern over. The report goes on to warn of government-backed threat actors using large language models (LLMs) for technical research, targeting and the rapid generation of nuanced phishing lures. The report highlights concerns over the Democratic People's Republic of Korea, Iran, the People's Republic of China and Russia. These actors are reportedly using AI tools, such as Google's own Gemini, for reconnaissance and target profiling, using open-source intelligence gathering on a large scale, as well as to create hyper-personalized phishing scams. "This activity underscores a shift toward AI-augmented phishing enablement, where the speed and accuracy of LLMs can bypass the manual labor traditionally required for victim profiling", the report from Google states. "Targets have long relied on indicators such as poor grammar, awkward syntax, or lack of cultural context to help identify phishing attempts. Increasingly, threat actors now leverage LLMs to generate hyper-personalized lures that can mirror the professional tone of a target organization". For example, if Gemini were given the biography of a target, it could generate a convincing persona and help craft a scenario that would effectively grab their attention. By using AI, these threat actors can also more effectively translate in and out of local languages.
As AI's ability to generate code has grown, this has opened up doors for its malicious use too, with these actors troubleshooting and generating malicious tooling using AI's vibe coding functionality. The report goes on to warn about a growing interest in experimenting with agentic AI. This is a form of artificial intelligence which can act with a degree of autonomy, supporting tasks like malware development and its automation. Google notes its efforts to combat this problem through a variety of measures. Along with creating Threat Intelligence reports multiple times a year, the firm has a team constantly searching for threats. Google is also implementing measures to bolster Gemini into a model which can't be used for malicious purposes. Through Google DeepMind, the team attempts to identify these threats before they become possible. Effectively, Google looks to identify malicious functions and remove them before they can pose a risk. While it is clear from the report that use of AI in the threat landscape has increased, Google notes that there are no breakthrough capabilities as of yet. Instead, there is simply an increase in the use of tools and risks.

[10]

Our new report details the latest ways threat actors are misusing AI.

Over the last few months, Google Threat Intelligence Group (GTIG) has observed threat actors using AI to gather information, create super-realistic phishing scams and develop malware. While we haven't observed direct attacks on frontier models or generative AI products from advanced persistent threat (APT) actors, we have seen and mitigated frequent model extraction attacks (a type of corporate espionage) from private sector entities all over the world -- a threat other businesses with AI models will likely face in the near future. Today we released a report that details these observations and how we've taken action, including by disabling associated accounts in order to disrupt malicious activity. We've also strengthened both our security controls and Gemini models against misuse.

[11]

Google has published a list of ways AI is currently being used by threat actors to more efficiently hack you

Some of it is reportedly from 'government-backed' entities, too. As AI continues to grow and make its way into everyday life, the alleged productivity gains do appear to be showing in some places. It just so happens that hacker groups are one of those places, and Google's Threat Intelligence has listed some of the many ways they use it. Welcome to the future. In its latest report, it says, "In the final quarter of 2025, Google Threat Intelligence Group (GTIG) observed threat actors increasingly integrating artificial intelligence (AI) to accelerate the attack lifecycle, achieving productivity gains in reconnaissance, social engineering, and malware development." It details that government-backed threat actors, like those reportedly in the Democratic People's Republic of Korea (DPRK), Iran, the People's Republic of China (PRC), and Russia, are using LLMs for "technical research, targeting, and the rapid generation of nuanced phishing lures". One of the bigger growing threats is called a model extraction attack. In Google's case, this involved accessing an LLM legitimately, then attempting to extract information to build new models. Google reports one case of over 100,000 prompts which were intended to emulate Google Gemini's reasoning capabilities. Naturally, this is more of a threat to companies than to the average user. However, there are more methods detailed in the report. One such method for AI use is making hackers seem more reputable in conversation. "Increasingly, threat actors now leverage LLMs to generate hyper-personalized, culturally nuanced lures that can mirror the professional tone of a target organization or local language." Google has spotted it being used in phishing scams to learn information about potential targets, too. "This activity underscores a shift toward AI-augmented phishing enablement, where the speed and accuracy of LLMs can bypass the manual labor traditionally required for victim profiling."
This is all before mentioning AI-generated code, with hackers such as APT31 using Gemini to automate vulnerability analysis and to generate plans for testing those vulnerabilities. It also spotted 'COINBAIT', a phishing kit masquerading as a cryptocurrency exchange, "whose construction was likely accelerated by AI code generation tools." Though mostly a proof of concept, Google has reportedly spotted malware that prompts users' AI bots to create code to generate additional malware. This would make tracking down malware on a machine increasingly hard as it continues to 'mutate'. Google says, "The potential of AI, especially generative AI, is immense. As innovation moves forward, the industry needs security standards for building and deploying AI responsibly." Just last week, we saw a phishing scam that uses AI to deepfake CEOs of companies, in order to get access to a victim's cryptocurrency. It seems AI is becoming more than just one tool in a hacker's toolbelt, and one has to hope counteragents are getting enough data to counteract it.

[12]

Google says attackers used 100,000+ prompts to try to clone AI chatbot Gemini

Google says its flagship artificial intelligence chatbot, Gemini, has been inundated by "commercially motivated" actors who are trying to clone it by repeatedly prompting it, sometimes with thousands of different queries -- including one campaign that prompted Gemini more than 100,000 times. In a report published Thursday, Google said it has increasingly come under "distillation attacks," or repeated questions designed to get a chatbot to reveal its inner workings. Google described the activity as "model extraction," in which would-be copycats probe the system for the patterns and logic that make it work. The attackers appear to want to use the information to build or bolster their own AI, it said. The company believes the culprits are mostly private companies or researchers looking to gain a competitive advantage. A spokesperson told NBC News that Google believes the attacks have come from around the world but declined to share additional details about what was known about the suspects. The scope of attacks on Gemini indicates that they most likely are or soon will be common against smaller companies' custom AI tools, as well, said John Hultquist, the chief analyst of Google's Threat Intelligence Group. "We're going to be the canary in the coal mine for far more incidents," Hultquist said. He declined to name suspects. The company considers distillation to be intellectual property theft, it said. Tech companies have spent billions of dollars racing to develop their AI chatbots, or large language models, and consider the inner workings of their top models to be extremely valuable proprietary information. Even though they have mechanisms to try to identify distillation attacks and block the people behind them, major LLMs are inherently vulnerable to distillation because they are open to anyone on the internet. OpenAI, the company behind ChatGPT, accused its Chinese rival DeepSeek last year of conducting distillation attacks to improve its models. 
Many of the attacks were crafted to tease out the algorithms that help Gemini "reason," or decide how to process information, Google said. Hultquist said that as more companies design their own custom LLMs trained on potentially sensitive data, they become vulnerable to similar attacks. "Let's say your LLM has been trained on 100 years of secret thinking of the way you trade. Theoretically, you could distill some of that," he said.

[13]

Google warns attackers are wiring AI directly into live cyberattacks - SiliconANGLE

A new report out today from the Google Threat Intelligence Group is warning that threat actors are moving beyond casual experimentation with artificial intelligence and are now beginning to integrate AI directly into operational attack workflows. The report focuses in part on abuse and targeting of Google's own Gemini models, underscoring how generative AI systems are increasingly being tested, probed and, in some cases, incorporated into malicious tooling. Google's researchers observed some malware families making direct application programming interface calls to Gemini during execution. Notably, the strains dynamically request generated source code from the model to carry out specific tasks, rather than embedding all malicious functionality within the code itself. In one example, a malware family identified as HONESTCUE used prompts to retrieve C# code that was then executed as part of its attack chain. The approach allows operators to shift logic outside the static malware binary and potentially complicates traditional detection methods that rely on signatures or predefined behavioral indicators. The report also describes ongoing attempts to conduct model extraction, also known as distillation attacks. Threat actors were found to be issuing large volumes of structured queries to a model to infer its behavior, response patterns and internal logic. The idea behind the distillation attacks is that by systematically analyzing outputs, the threat actors can approximate the capabilities of proprietary models and train alternative systems without incurring the development and infrastructure costs associated with building them from scratch. Google says that it has identified and disrupted campaigns involving high-volume prompt activity aimed at extracting Gemini model knowledge.
Other findings in the report included Google researchers observing that state-aligned and financially motivated groups are incorporating AI tools into established phases of cyber operations, including reconnaissance, vulnerability research, script development and phishing content generation. Generative AI models are noted as being able to assist in producing convincing lures, refining malicious code snippets and accelerating technical research against targeted technologies. The report also found that adversaries are exploring agentic AI capabilities, which can be designed to execute multi-step tasks with minimal human input, raising the possibility that future malware could incorporate more autonomous decision-making elements. However, there is no evidence as yet of widespread deployment of agentic AI by adversaries. For now, Google characterizes most observed use as augmentation rather than replacement of human operators. Discussing the report, Dr. Ilia Kolochenko, chief executive officer at ImmuniWeb SA, was not complimentary of the report, telling SiliconANGLE via email that "this seems to be a poorly orchestrated PR of Google's AI technology amid the fading interest and growing disappointment of investors in generative AI." "First, even if advance persistent threat utilize generative AI in their cyberattacks, it does not mean that generative AI has finally become good enough to create sophisticated malware or execute the full cyber kill chain of an attack," explains Kolochenko. "Generative AI can indeed accelerate and automate some simple processes - even for APT groups - but it has nothing to do with the sensationalized conclusions about the alleged omnipotence of generative AI in hacking." "Second, Google may be actually setting a legal trap for itself.
Being fully aware that nation-state groups and cyber-terrorists actively exploit Google's AI technology for malicious purposes, it may be liable for the damage caused by these cyber-threat actors," added Kolochenko. "Building guardrails and implementing enhanced customer due diligence does not cost much and could have prevented the reported abuse. Now the big question is who will be liable, while Google will unlikely have a convincing answer to it."

[14]

Hackers Are Hammering Google's Gemini With Prompts to Steal the LLM -- Every AI Company Should Be Worried

Google called the attacks "model extraction," a process Medium defines as when "an attacker distills the knowledge from your expensive model into a new, cheaper one they control." It's becoming an increasing threat to major AI companies that spend billions of dollars on training their models but lack sufficient methods to protect their proprietary information. "Let's say your LLM has been trained on 100 years of secret thinking of the way you trade," John Hultquist, the chief analyst of Google's Threat Intelligence Group, told NBC News. "Theoretically, you could distill some of that." Google said in its report that it considers the attacks intellectual property theft, which goes against its terms of service. The issue, however, lies in developing enforcement mechanisms and successfully proving the attacker intended to steal the IP.

[15]

Google Warns Against Thieves Using APIs to Clone AI Models | PYMNTS.com

In these attacks, threat actors use their legitimate access to a large language model (LLM) to extract information that can be used to train a new LLM, according to the post. By doing so, the attackers can develop their AI models at greater speed and lower cost. There are legitimate uses for distillation, but using this technique without permission is a form of intellectual property theft, per the post. "As organizations increasingly integrate LLMs into their core operations, the proprietary logic and specialized training of these models have emerged as high-value targets," GTIG said in the post. "Historically, adversaries seeking to steal high-tech capabilities used conventional computer-enabled intrusion operations to compromise organizations and steal data containing trade secrets. For many AI technologies where LLMs are offered as services, this approach is no longer required; actors can use legitimate API access to attempt to 'clone' select AI model capabilities." During 2025, GTIG and Google DeepMind identified and disrupted model extraction attacks that came from researchers and private sector companies from around the world, according to the post. The risk of these attacks is faced by model developers and service providers. Average users are generally not affected, because the attacks don't threaten the confidentiality, availability or integrity of AI services, per the post. "Organizations that provide AI models as a service should monitor API access for extraction or distillation patterns," GTIG said in the post. "For example, a custom model tuned for financial data analysis could be targeted by a commercial competitor seeking to create a derivative product, or a coding model could be targeted by an adversary wishing to replicate capabilities in an environment without guardrails." GTIG shared these findings in the quarterly GTIG AI Threat Tracker. 
In this report, GTIG also found that during the fourth quarter of 2025, threat actors continued to integrate AI to enhance their attacks. They used the technology to support reconnaissance and target development, augment their phishing, and support coding and tooling development, per the post. In its previous report, issued in November, GTIG said threat actors had begun using AI not only for productivity gains but also for "novel AI-enabled operations." The World Economic Forum said in a report released Jan. 12 that 94% of surveyed executives expect AI to be a force multiplier for both defense and offense in the cybersecurity space. The PYMNTS Intelligence report "COOs Leverage GenAI to Reduce Data Security Losses" found that 55% of chief operating officers said their companies had implemented AI-powered automated cybersecurity management systems.
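GTIG's advice to "monitor API access for extraction or distillation patterns" could be approached in many ways. As a minimal sketch, and not anything Google has described, a provider might start by flagging API keys whose prompt volume inside a sliding time window exceeds a threshold. The class name `ExtractionMonitor`, its parameters, and the threshold values are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict, deque


class ExtractionMonitor:
    """Hypothetical helper: flags API keys with unusually high prompt volume."""

    def __init__(self, window_seconds=3600.0, max_prompts=1000):
        self.window_seconds = window_seconds  # length of the sliding window
        self.max_prompts = max_prompts        # prompts allowed per window
        self._events = defaultdict(deque)     # api_key -> recent timestamps

    def record(self, api_key, timestamp):
        """Record one prompt; return True if the key is over the threshold."""
        events = self._events[api_key]
        events.append(timestamp)
        # Discard timestamps that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_prompts


# Illustrative usage with made-up values: 5 prompts in 5 seconds,
# with at most 3 prompts allowed per 60-second window.
monitor = ExtractionMonitor(window_seconds=60, max_prompts=3)
flags = [monitor.record("key-a", t) for t in range(5)]
print(flags)
```

Volume alone is a crude signal. A real deployment would likely combine it with other features, such as prompt structure or language mix, which this sketch does not attempt.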

Google's Threat Intelligence Group reveals that state-backed hackers from China, Russia, Iran, and North Korea are systematically exploiting Gemini AI for cyberattacks. One adversarial campaign bombarded the model with over 100,000 prompts attempting to clone its capabilities. The attacks span reconnaissance, phishing, malware development, and vulnerability testing, marking a shift in how AI tools enable offensive cyber operations at scale.

State-Sponsored Hackers Deploy Gemini AI in Comprehensive Cyberattacks

Google has disclosed that state-sponsored hackers from China, Russia, Iran, and North Korea are exploiting its Gemini AI model throughout every phase of cyberattacks, according to a new report from the Google Threat Intelligence Group published Thursday [1]. The revelation marks a significant escalation in how adversaries leverage AI tools for offensive operations, with threat actors using Gemini for reconnaissance, phishing lure creation, command and control development, vulnerability analysis, and data exfiltration [3]. "The adversaries' adoption of this capability is so significant - it's the next shoe to drop," said John Hultquist, chief analyst for the Google Threat Intelligence Group [4].

APT31 Uses Gemini AI for Automated Vulnerability Analysis Against US Targets

Chinese government-backed hacking group APT31, also known as Violet Typhoon, employed a highly structured approach by prompting Gemini with an expert cybersecurity persona to automate vulnerability analysis and generate targeted testing plans against specific US-based targets [4]. In one documented case, APT31 used Hexstrike, an open-source red-teaming tool built on the Model Context Protocol, directing the model to analyze Remote Code Execution (RCE), WAF bypass techniques, and SQL injection test results [3]. This integration with Gemini "automated intelligence gathering to identify technological vulnerabilities and organizational defense weaknesses," according to Google's report [4]. While there's no indication these attacks succeeded, the activity "explicitly blurs the line between a routine security assessment query and a targeted malicious reconnaissance operation." Hultquist emphasized the concern: "The ability to operate across the intrusion and automating the development of vulnerability exploitation allows adversaries to move faster than defenders and hit a lot of targets" [4].

Massive Model Extraction Campaign Hits Gemini with 100,000 Prompts

Beyond direct operational use, Google identified large-scale model extraction attempts targeting Gemini AI through what the industry calls distillation attacks [1]. One commercially motivated adversarial session prompted the model more than 100,000 times across various non-English languages, collecting responses to train a cheaper copycat model [1]. Google considers this intellectual property theft, though the position carries some irony given that its LLM was built from materials scraped from the Internet without permission [1]. The technique involves feeding an existing AI model thousands of carefully chosen prompts, collecting responses, and using those input-output pairs to train a smaller model that mimics the parent's behavior. "Model extraction and subsequent knowledge distillation enable an attacker to accelerate AI model development quickly and at a significantly lower cost," Google researchers noted [5]. The attacks came from around the world, though Google declined to name suspects [1].

Iran and North Korea Leverage Gemini for Phishing and Social Engineering

Iranian threat actor APT42 leveraged Gemini for social engineering campaigns, using it to search for official emails of specific targets and conduct research into business partners of potential victims [3]. They fed Gemini biographical information to generate personas that might have credible reasons to engage with targets [3]. North Korea primarily used Gemini as part of phishing attacks, profiling high-value targets within security and defense companies and attempting to find vulnerable individuals within their networks [3]. Threat actors from China, Iran, Russia, and Saudi Arabia also used Gemini to produce political satire and propaganda, generating articles, memes, and images designed to influence Western audiences [3]. While Google confirmed it hadn't seen these assets deployed into the wild, the company took the threat seriously enough to disable accounts associated with these activities [3].

Black Market for API Keys Emerges as Hackers Target AI Access

The report highlights a growing appetite among hackers for bespoke AI hacking tools and stolen API keys to access commercial models [3]. Google cited an underground toolkit called "Xanthorox," advertised as custom AI for cyber offensive campaigns capable of generating malware code and constructing custom phishing campaigns. However, under the hood, Xanthorox is simply an API that leverages existing general AI models like Gemini [3]. Because using these tools requires making numerous API calls, organizations with large allocations of API tokens have become prime targets for account hijacking, creating a black market for API keys and placing greater emphasis on securing employee access to AI tools [3]. Hultquist warned that organizations must adapt: "We are going to have to leverage the advantages of AI, and increasingly remove humans from the loop, so that we can respond at machine speed" [4]. Google has since disabled accounts and infrastructure tied to documented abuse and implemented targeted defenses in Gemini's classifiers to make future exploitation harder [5].

Summarized by

Navi
