Google Introduces 'Grounding with Google Search' Feature for Gemini API and AI Studio

4 Sources

[1]

Developers Can Now Ground Their Gemini Outputs With Google Search

It supports all of the available languages for Gemini models.

Google is adding a new feature to the Gemini application programming interface (API) and AI Studio to help developers ground the responses generated by artificial intelligence. Announced on Thursday, the feature, dubbed Grounding with Google Search, will allow developers to check AI-generated responses against similar information available on the Internet. This way, developers will be able to further fine-tune their AI apps and offer their users more accurate and up-to-date information. Google highlighted that such grounding methods are important for prompts that call for real-time information from the web.

The Google AI for Developers support page details the new feature, which will be available on both the Gemini API and Google AI Studio. Both tools are largely used by developers who are building mobile and desktop apps with AI capabilities. However, generating responses from AI models can often result in hallucinations, which can damage the credibility of the apps. The problem can be even more significant when an app delves into current affairs, where the latest information from the web is required. While developers can manually fine-tune the AI model, errors can persist without a guiding dataset.

To solve this, Google is offering a new way to verify the output generated by AI. Known as grounding, this process connects an AI model to verifiable sources of information. Such sources contain high-quality information and add more context. Examples include documents, images, local databases, and the Internet. Grounding with Google Search uses the last of these to find verifiable information. Developers can now use top results from Google Search to check the information returned by the Gemini AI models.

The Mountain View-based tech giant claims that this exercise will improve the "accuracy, reliability, and usefulness of AI outputs." The method also helps AI models get past their knowledge cut-off date by sourcing information directly from the grounding source. In this case, Gemini models can get the latest information from Search results.

Google also shared an example of the difference between grounded and ungrounded outputs. An ungrounded response to the query "Who won the Super Bowl this year?" was "The Kansas City Chiefs won Super Bowl LVII this year (2023)." With Grounding with Google Search enabled, the response was, "The Kansas City Chiefs won Super Bowl LVIII this year, defeating the San Francisco 49ers in overtime with a score of 25 to 22." Notably, the feature only supports text-based outputs and cannot process multimodal responses.

[2]

Google brings grounding with search to Gemini in AI Studio and API - SiliconANGLE

Google LLC today announced it's rolling out "grounding" for its Gemini artificial intelligence models using Google Search, which will enable developers to get more accurate and up-to-date responses aided by search results.

The new updates are available for AI Studio and the Gemini API. They let the model provide not just more accurate responses, but also sources as in-line supporting links, along with search suggestions that point users to search results within the response.

By grounding the model on search results, developers can bring in fresh data to correct issues that might arise from out-of-date training data. When a user prompts an AI conversationally with a question, it replies with its best knowledge, but a trained model can only answer with information it has up to a certain point. The response could contain material that's months old and therefore less accurate and less desirable.

When a user makes a query with grounding turned on, Google said, the service uses its search engine to find up-to-date and comprehensive information relevant to the query and then sends it to the model. Using search to ground AI responses will also allow the model to reply with more context and generate better results. As a result, the information will be richer and backed by better resources than the model could offer alone. With the addition of links to potential search resources, users will also have a chance to do additional research.

Grounding based on Google Search can also help ensure that AI applications provide users with more factual information. According to the company, this can also reduce hallucinations, which occur when an AI model's response contains false or misleading information presented confidently.

Using the application programming interface, developers set a configuration that determines when grounding is more or less likely to be beneficial for a query. In this setting, every prompt is assigned a value between 0 and 1 that predicts whether the prompt will benefit from grounding, and the developer can set a threshold to activate grounding. A higher value means that grounding is more likely to help. The default threshold is 0.7. Google suggested that developers experiment to see what setting best fits their application.

Google said this configuration exists because Grounding with Search will cause slower responses from Gemini models. Users may notice this slowdown in apps and it could make their experience feel worse. However, for those prompts that would benefit, the slower response would probably be negligible.

There is also the question of additional costs for using the new update. Grounding is available to test for free in Google AI Studio. In the API, developers can access the tool with the paid tier for $35 per 1,000 grounded queries.
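The threshold and pricing described in this report can be illustrated with a small sketch. It assumes grounding is triggered when a prompt's score is greater than or equal to the threshold (the report only says a threshold "activates" grounding), and the sample scores are made up for illustration; in practice the score is produced by Google's service, not by the developer. The function names are hypothetical.

```python
from typing import Iterable, Tuple

# Figures quoted in the report.
PRICE_PER_1000_GROUNDED_QUERIES_USD = 35.0
DEFAULT_THRESHOLD = 0.7


def should_ground(prediction_score: float, threshold: float = DEFAULT_THRESHOLD) -> bool:
    """Return True when the score reaches the threshold.

    Assumption: the comparison is "score >= threshold"; the report does not
    spell out the exact rule.
    """
    if not 0.0 <= prediction_score <= 1.0:
        raise ValueError("prediction_score must be between 0 and 1")
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return prediction_score >= threshold


def estimate_grounding_cost(
    scores: Iterable[float], threshold: float = DEFAULT_THRESHOLD
) -> Tuple[int, float]:
    """Count the prompts that would be grounded and estimate the API cost in USD."""
    grounded = sum(1 for score in scores if should_ground(score, threshold))
    cost = grounded * PRICE_PER_1000_GROUNDED_QUERIES_USD / 1000
    return grounded, cost


if __name__ == "__main__":
    # Hypothetical scores for five prompts.
    sample_scores = [0.95, 0.72, 0.40, 0.10, 0.70]
    grounded, cost = estimate_grounding_cost(sample_scores)
    print(f"{grounded} grounded queries, estimated cost ${cost:.3f}")
```

Lowering the threshold toward 0 grounds more prompts (and costs more); raising it toward 1 restricts grounding to the prompts most likely to benefit.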

[3]

Google's Gemini API Adds Grounding Feature, Rivals OpenAI's SearchGPT

Now Gemini 1.5 models can pull live information from Google Search, increasing accuracy and transparency.

Google AI Studio and the Gemini API have introduced "Grounding with Google Search," allowing developers to improve response accuracy by incorporating real-time data from Google Search. With this update, Gemini 1.5 models can pull live information from Google Search, increasing accuracy and transparency.

Developers can access grounding features directly through the "Tools" section in Google AI Studio or by enabling the 'google_search_retrieval' tool in the Gemini API. Grounding with Google Search is available to try for free in Google AI Studio. Gemini API pricing is set at $35 per 1,000 grounded queries.

Key advantages of grounding include reduced hallucination in model responses and relevant, up-to-date information for users. The feature also supports linking responses to real-time search results, which boosts transparency and directs traffic to source publishers.

Developers can activate a dynamic retrieval feature for cases where only certain queries require real-time data. This feature assigns a score that predicts when grounding may improve response quality, allowing developers to control grounding based on a set threshold score.

Examples in Google AI Studio's Compare Mode show how grounding improves answer quality. Without grounding, responses reflect the model's knowledge cut-off, while grounded answers deliver current information with supporting links and Search Suggestions.

Google's announcement came on the same day OpenAI released SearchGPT in ChatGPT, which offers improved web search capabilities for timely, accurate answers, blending natural language interaction with up-to-date data on sports, news, stock quotes, and more. The Google Gemini grounding feature will compete with platforms like Perplexity AI and ChatGPT's web search. Recently, the Perplexity AI chief announced that the platform will soon release new updates. Meanwhile, Meta is also developing its own search engine, which will be integrated into its social media platforms, such as WhatsApp and Instagram, to provide real-time information.
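As a rough illustration of enabling the 'google_search_retrieval' tool mentioned in this report, the sketch below builds a JSON request body without sending it anywhere. Only the tool name comes from the report; the surrounding field names (`contents`, `parts`, `tools`, `dynamic_retrieval_config`, `mode`, `dynamic_threshold`) and the `MODE_DYNAMIC` value are assumptions about the request shape and should be checked against the current Gemini API reference. The helper name `build_grounded_request` is hypothetical.

```python
import json
from typing import Any, Dict, Optional


def build_grounded_request(
    prompt: str, dynamic_threshold: Optional[float] = None
) -> Dict[str, Any]:
    """Build a JSON-serializable request body with Search grounding enabled.

    If dynamic_threshold is None, the tool is enabled with no extra settings.
    """
    tool_settings: Dict[str, Any] = {}
    if dynamic_threshold is not None:
        if not 0.0 <= dynamic_threshold <= 1.0:
            raise ValueError("dynamic_threshold must be between 0 and 1")
        # Assumed field names for the dynamic retrieval setting.
        tool_settings["dynamic_retrieval_config"] = {
            "mode": "MODE_DYNAMIC",
            "dynamic_threshold": dynamic_threshold,
        }
    return {
        # Assumed layout for the prompt text.
        "contents": [{"parts": [{"text": prompt}]}],
        # Tool name as given in the report.
        "tools": [{"google_search_retrieval": tool_settings}],
    }


if __name__ == "__main__":
    body = build_grounded_request(
        "Who won the Super Bowl this year?", dynamic_threshold=0.7
    )
    print(json.dumps(body, indent=2))
```

Keeping the payload construction separate from the network call makes it easy to inspect or log the exact configuration before any billable grounded query is sent.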

[4]

Google's Gemini API and AI Studio get grounding with Google Search | TechCrunch

Starting today, developers using Google's Gemini API and its Google AI Studio to build AI-based services and bots will be able to ground their prompts' results with data from Google Search. This should enable more accurate responses based on fresher data.

As has been the case before, developers will be able to try out grounding for free in AI Studio, which is essentially Google's playground for developers to test and refine their prompts, and access its latest large language models. Gemini API users will have to be on the paid tier and will pay $35 per 1,000 grounded queries.

AI Studio's recently launched built-in compare mode makes it easy to see how the results of grounded queries differ from those that rely solely on the model's own data.

Turning on grounding is as easy as toggling on a switch and deciding on how often the API should use grounding by making changes to the so-called 'dynamic retrieval' setting. That could be as straightforward as opting to turn it on for every prompt or going with a more nuanced setting that then uses a smaller model to evaluate the prompt and decide whether it would benefit from being augmented with data from Google Search.

"Grounding can help [...] when you ask a very recent question that's beyond the model's knowledge cut off, but it could also help with a question which is not as recent, [...] but you may want richer detail," Shrestha Basu Mallick, Google's group product manager for the Gemini API and AI Studio, explained. "There might be developers who say we only want to ground on recent facts, and they would set this [dynamic retrieval value] higher. And there might be developers who say: No, I want the rich detail of Google search on everything."

When Google enriches results with data from Google Search, it also provides supporting links back to the underlying sources. Logan Kilpatrick, who joined Google earlier this year after previously leading developer relations at OpenAI, told me that displaying these links is a requirement of the Gemini license for anyone who uses this feature.

"It is very important for us for two reasons: one, we want to make sure our publishers get the credit and the visibility," Basu Mallick added. "But second, this also is something that users like. When I get an LLM answer, I often go to Google Search and check that answer. We're providing a way for them to do this easily, so this is much valued by users."

In this context, it's worth noting that while AI Studio started out as something more akin to a prompt tuning tool, it's a lot more now.

"Success for AI Studio looks like: you come in, you try one of the Gemini models, and you see this actually is really powerful and works well for your use case," said Kilpatrick. "There's a bunch we do to surface potential interesting use cases to developers front and center in the UI, but ultimately, the goal is not to keep you in AI Studio and just have you sort of play around with the models. The goal is to get you code. You press 'Get Code' in the top right-hand corner, you go start building something, and you might come back to AI Studio to experiment with a future model."

Google has launched a new feature called 'Grounding with Google Search' for its Gemini API and AI Studio, allowing developers to improve AI-generated responses by incorporating real-time data from Google Search results.

Google Introduces 'Grounding with Google Search' for Gemini

Google has unveiled a new feature called 'Grounding with Google Search' for its Gemini API and AI Studio, aimed at enhancing the accuracy and reliability of AI-generated responses. This development represents a significant step forward in addressing common challenges faced by AI models, such as outdated information and hallucinations [1].

How Grounding Works

The grounding feature allows Gemini models to access real-time information from Google Search, effectively bridging the gap between the AI's knowledge cutoff and current data. When a query is made with grounding enabled, the service uses Google's search engine to find up-to-date and comprehensive information relevant to the query before sending it to the model [2].

This process helps in several ways:

- Improving accuracy and reliability of AI outputs

- Providing more current information

- Reducing hallucinations (false or misleading information)

- Offering richer context with supporting links

Implementation and Availability

Developers can access the grounding feature through the "Tools" section in Google AI Studio or by enabling the 'google_search_retrieval' tool in the Gemini API [3]. The feature is available for free testing in Google AI Studio, while API users on the paid tier will be charged $35 per 1,000 grounded queries [2].

Dynamic Retrieval and Customization

Google has introduced a dynamic retrieval feature that lets developers control when grounding is applied. The system assigns each prompt a score between 0 and 1 that predicts whether grounding would be beneficial. Developers can set a threshold (default is 0.7) to determine when grounding is activated, allowing for customization based on specific application needs [2].

Impact on Response Time and User Experience

While grounding improves accuracy, it may lead to slower response times from Gemini models. Google advises developers to experiment with settings to find the right balance between accuracy and speed for their applications [2].

Transparency and Publisher Credit

An important aspect of this feature is the inclusion of supporting links to source publishers in the AI's responses. This not only boosts transparency but also directs traffic to original content creators, addressing concerns about AI's impact on content attribution [4].

Competitive Landscape

Google's introduction of grounding comes at a time when other AI companies are also focusing on improving the accuracy and timeliness of their models. OpenAI recently released SearchGPT in ChatGPT, offering similar web search capabilities [3]. Additionally, platforms like Perplexity AI and Meta are developing their own search-integrated AI solutions, indicating a growing trend in the industry towards more accurate and up-to-date AI responses [3].

As AI continues to evolve, features like grounding with search results are likely to become increasingly important in ensuring the reliability and usefulness of AI-generated information across various applications and industries.

Summarized by Navi

Related Stories

Google Rolls Out Experimental Gemini 2.0 Advanced: A Leap in AI Capabilities

17 Dec 2024•Technology

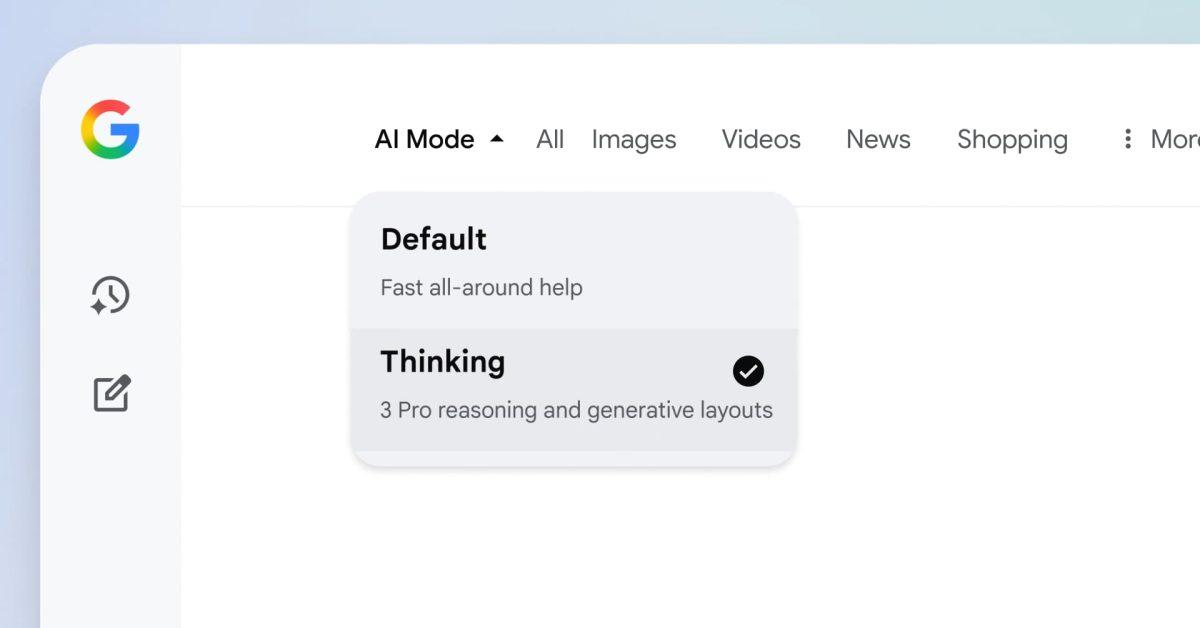

Google expands Gemini 3 and Nano Banana Pro to 120 countries with seamless AI Mode integration

01 Dec 2025•Technology

Google opens Gemini Deep Research AI agent to developers with new Interactions API

12 Dec 2025•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology