Google launches Gemini 3.1 Pro with record benchmark scores in heated AI model race

18 Sources


[1]

Google announces Gemini 3.1 Pro, says it's better at complex problem-solving

Another day, another Google AI model. Google has really been pumping out new AI tools lately, having just released Gemini 3 in November. Today, it's bumping the flagship model to version 3.1. The new Gemini 3.1 Pro is rolling out (in preview) for developers and consumers today with the promise of better problem-solving and reasoning capabilities. Google announced improvements to its Deep Think tool last week, and apparently, the "core intelligence" behind that update was Gemini 3.1 Pro. As usual, Google's latest model announcement comes with a plethora of benchmarks that show mostly modest improvements. In the popular Humanity's Last Exam, which tests advanced domain-specific knowledge, Gemini 3.1 Pro scored a record 44.4 percent. Gemini 3 Pro managed 37.5 percent, while OpenAI's GPT-5.2 got 34.5 percent. Google also calls out the model's improvement in ARC-AGI-2, which features novel logic problems that can't be directly trained into an AI. Gemini 3 was a bit behind on this evaluation, reaching a mere 31.1 percent versus scores in the 50s and 60s for competing models. Gemini 3.1 Pro more than doubles Google's score, reaching a lofty 77.1 percent. When releasing new models, Google has often boasted that they already top the Arena leaderboard (formerly LM Arena), but that's not the case this time. For text, Claude Opus 4.6 edges out the new Gemini by four points with a score of 1504. For code, Opus 4.6, Opus 4.5, and GPT-5.2 High all run ahead of Gemini 3.1 Pro by a wider margin. It's worth noting, however, that the Arena leaderboard is run on vibes: users vote on the outputs they like best, which can reward outputs that look correct regardless of whether they are.
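The "more than doubles" claim can be checked directly against the quoted figures. Below is a minimal Python sketch that uses only the percentages reported in the paragraph above. The dictionary names and helper functions are illustrative and do not come from any benchmark's own tooling.

```python
# Percent scores as reported in the paragraph above (Google's published figures).
ARC_AGI_2 = {"Gemini 3 Pro": 31.1, "Gemini 3.1 Pro": 77.1}
HLE = {"GPT-5.2": 34.5, "Gemini 3 Pro": 37.5, "Gemini 3.1 Pro": 44.4}


def point_gain(old: float, new: float) -> float:
    """Absolute change in percentage points, rounded to one decimal place."""
    return round(new - old, 1)


def score_ratio(old: float, new: float) -> float:
    """How many times the old score the new score is, rounded to two decimals."""
    return round(new / old, 2)


if __name__ == "__main__":
    old, new = ARC_AGI_2["Gemini 3 Pro"], ARC_AGI_2["Gemini 3.1 Pro"]
    print(f"ARC-AGI-2: +{point_gain(old, new)} points, {score_ratio(old, new)}x")
    for rival in ("Gemini 3 Pro", "GPT-5.2"):
        gain = point_gain(HLE[rival], HLE["Gemini 3.1 Pro"])
        print(f"HLE vs {rival}: +{gain} points")
```

On ARC-AGI-2 the jump is 46 points, a ratio of about 2.48, so "more than double" holds. On Humanity's Last Exam the gains are smaller: 6.9 points over Gemini 3 Pro and 9.9 points over GPT-5.2. That fits the "mostly modest improvements" framing.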

[2]

Google's new Gemini Pro model has record benchmark scores -- again | TechCrunch

On Thursday, Google released the newest version of Gemini Pro, its powerful LLM. The model, 3.1, is currently available as a preview and will be generally released soon, the company said. Google's new model may be one of the most powerful LLMs yet. Onlookers have noted that Gemini 3.1 Pro appears to be a big step up from its predecessor, Gemini 3 -- which, upon its release in November, was already considered a highly capable AI tool. On Thursday, Google also shared statistics from independent benchmarks -- such as one called Humanity's Last Exam -- that showed it performing significantly better than its previous version. Gemini 3.1 Pro was also praised by Brendan Foody, the CEO of AI startup Mercor, whose benchmarking system, APEX, is designed to measure how well new AI models perform real professional tasks. "Gemini 3.1 Pro is now at the top of the APEX-Agents leaderboard," Foody said in a social media post, adding that the model's impressive results show "how quickly agents are improving at real knowledge work." The release comes as the AI model wars are heating up, and tech companies continue to release increasingly powerful LLMs designed for agentic work and multi-step reasoning. Other major names -- including OpenAI and Anthropic -- have recently released new models as well.

[3]

Google Rolls Out Latest AI Model, Gemini 3.1 Pro

Google took the wraps off its latest AI model, Gemini 3.1 Pro, on Thursday, calling it a "step forward in core reasoning." The software giant says its latest model is smarter and more capable for complex problem-solving. Google shared a series of benchmarks and examples of the latest model's capabilities, and is rolling out Gemini 3.1 Pro to a range of products for consumers, enterprises and developers. The overall AI model landscape seems to change weekly. Google's release comes just a few days after Anthropic dropped the latest version of Claude, Sonnet 4.6, which can operate a computer at a human baseline level. The announcement blog post highlights Gemini 3.1 Pro's 77.1% score on ARC-AGI-2, a test of solving abstract reasoning puzzles. This is noticeably higher than Gemini 3 Pro's 31.1% score on the same test. The ARC-AGI-2 result is one of multiple improvements in Gemini 3.1 Pro, Google says. With better benchmark results nearly across the board, Google highlighted some of the ways those gains translate into general use: Code-based animations: The latest Gemini model can create website-ready animated SVG images, which scale without quality loss, from a text prompt. Creative coding: Gemini 3.1 Pro generated an entire portfolio website that reimagines a character from Emily Brontë's novel Wuthering Heights as a landscape photographer. 
Interactive design: 3.1 Pro was used to create a 3D interactive starling murmuration that allows the flock to be controlled in an assortment of ways, all while a soundscape is generated that changes with the movement of the birds. As of Thursday, Gemini 3.1 Pro is rolling out in the Gemini app for those with the AI Pro or Ultra plans. NotebookLM users subscribed to one of those plans will also be able to take advantage of the new model. Both developers and enterprises can also access the new model via the Gemini API through a range of products, including AI Studio, Gemini Enterprise, Antigravity and Android Studio.
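The following hand-written Python sketch emits a minimal animated SVG. It illustrates what "code-based animation" means in practice. It is not Gemini output and says nothing about what the model actually generates. The function name, sizes, and colors are arbitrary choices for the example. The motion comes from the SVG/SMIL `<animate>` element, and the `viewBox` attribute lets the same markup render crisply at any display size.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def pulsing_circle_svg(size: int = 100, duration_s: float = 2.0) -> str:
    """Build a tiny animated SVG: a circle whose radius pulses forever.

    The viewBox makes the drawing resolution-independent, and the SMIL
    <animate> element drives the animation without any pixel frames.
    """
    c = size / 2
    r_min, r_max = size * 0.1, size * 0.4
    return (
        f'<svg xmlns="{SVG_NS}" viewBox="0 0 {size} {size}">'
        f'<circle cx="{c}" cy="{c}" r="{r_min}" fill="steelblue">'
        f'<animate attributeName="r" values="{r_min};{r_max};{r_min}" '
        f'dur="{duration_s}s" repeatCount="indefinite"/>'
        "</circle></svg>"
    )


if __name__ == "__main__":
    svg = pulsing_circle_svg()
    ET.fromstring(svg)  # raises ParseError if the markup is not well-formed XML
    print(f"{len(svg)} characters of markup")
    print(svg)
```

The whole animation is a few hundred characters of text. This is why such assets stay small compared with video and can be pasted straight into a web page.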

[4]

Google's Gemini 3.1 Pro is here, and it just doubled its reasoning score

Model capabilities are ultimately relative, one expert said. Another week, another "smarter" model -- this time from Google, which just released Gemini 3.1 Pro. Since its release in November, Gemini 3 has outperformed several competitor models, beating Copilot in a few of our in-house task tests, and has generally received praise from users. Google said this latest Gemini model, announced Thursday, achieved "more than double the reasoning performance of 3 Pro" in testing, based on its 77.1% score on the ARC-AGI-2 benchmark for "entirely new logic patterns." The latest model follows a "major upgrade" to Gemini 3 Deep Think last week, which boasted new capabilities in chemistry and physics alongside new accomplishments in math and coding, according to Google. The company said the Gemini 3 Deep Think upgrade was built to address "tough research challenges -- where problems often lack clear guardrails or a single correct solution and data is often messy or incomplete." Google said Gemini 3.1 Pro undergirds that science-heavy investment, calling the model the "upgraded core intelligence that makes those breakthroughs possible." Late last year, Gemini 3 set a new high among all currently available models on the Humanity's Last Exam (HLE) benchmark test, scoring 38.3%. Developed to combat increasingly beatable industry-standard benchmarks and better measure model progress against human ability, HLE is meant to be a more rigorous test, though benchmarks alone aren't sufficient to determine performance. According to Google, Gemini 3.1 Pro now bests that score at 44.4% -- though the Deep Think upgrade technically scored higher at 48.4%. Similarly, the Deep Think update scored 84.6% -- higher than 3.1 Pro's aforementioned 77.1% -- on the ARC-AGI-2 logic benchmark. 
All that said, Anthropic's Claude Opus 4.6 still tops the Center for AI Safety (CAIS) text capability leaderboard (for reasoning and other text-based queries), which averages other relevant benchmark scores outside of HLE. Anthropic's Opus 4.5, Sonnet 4.5, and Opus 4.6 also beat Gemini 3 in terms of safety, according to the CAIS risk assessment leaderboard. Benchmark records aside, the lifecycle of a model doesn't end with a splashy release. At the current rate of AI development, new models are impressive only relative to their competition -- time and testing will tell where 3.1 Pro excels or fails. Gemini 3 gives the new model a strong foundation, but that may only last until the next lab releases a state-of-the-art upgrade. "The test numbers seem to imply that it's got substantial improvement over Gemini 3, and Gemini 3 was pretty good, but I don't think we're really going to know right away, and it's not available except to the more expensive plans yet," said ZDNET senior contributing editor David Gewirtz of the release. "The shoe hasn't yet fallen on GPT 5.3 either, and I think when it does, we'll have a more universal set of upgrades that we can readdress." While we wait for that model to drop, Gewirtz looked into GPT-5.3-Codex, OpenAI's most recent coding-specific release, which famously helped build itself. Developers can access Gemini 3.1 Pro in preview today through the API in Google's AI Studio, Android Studio, Google Antigravity, and Gemini CLI. Enterprise customers can try it in Vertex AI and Gemini Enterprise, and regular users can find it in NotebookLM and the Gemini app.

[5]

Google germinates Gemini 3.1 Pro in ongoing AI model race

If you want an even better AI model, there could be reason to celebrate. Google, on Thursday, announced the release of Gemini 3.1 Pro, characterizing the model's arrival as "a step forward in core reasoning." Measured by the release cadence of machine learning models, Gemini 3.1 Pro is hard on the heels of recent model debuts from Anthropic and OpenAI. There's barely enough time to start using new US commercial AI models before a competitive alternative surfaces. And that's to say nothing about the AI models coming from outside the US, like Qwen3.5. Google's Gemini team in a blog post contends that Gemini 3.1 Pro can tackle complex problem-solving better than preceding models. And they cite benchmark test results - which should be viewed with some skepticism - to support that claim. On the ARC-AGI-2 problem-solving test, Gemini 3.1 Pro scored 77.1 percent, compared to Gemini 3 Pro, which scored 31.1 percent, and Gemini 3 Deep Think, which scored 45.1 percent. Gemini 3.1 Pro outscores rival commercial models like Anthropic's Opus 4.6 and Sonnet 4.6, and OpenAI's GPT-5.2 and GPT-5.3-Codex in the majority of cited benchmarks, Google's chart shows. However, Opus 4.6 retains the top score for Humanity's Last Exam (full set, test + MM), SWE-Bench Verified, and τ²-bench. And GPT-5.3-Codex leads in SWE-Bench Pro (Public) and Terminal-Bench 2.0 when evaluated using Codex's own harness rather than the standard Terminus-2 agent harness. "3.1 Pro is designed for tasks where a simple answer isn't enough, taking advanced reasoning and making it useful for your hardest challenges," the Gemini team said. "This improved intelligence can help in practical applications - whether you're looking for a clear, visual explanation of a complex topic, a way to synthesize data into a single view, or bringing a creative project to life." 
To illustrate potential uses, the Gemini team points to how the model can create website-ready SVG animations and can translate the literary style of a novel into the design of a personal portfolio site. In the company's Q4 2025 earnings release, CEO Sundar Pichai said, "Our first party models, like Gemini, now process over 10 billion tokens per minute via direct API use by our customers, and the Gemini App has grown to over 750 million monthly active users." Google is making Gemini 3.1 Pro available via the Gemini API in Google AI Studio, Gemini CLI, Antigravity, and Android Studio. Enterprise customers can access it via Vertex AI and Gemini Enterprise while consumers can do so via the Gemini app and NotebookLM. The model is also accessible via several Microsoft services including GitHub Copilot, Visual Studio, and Visual Studio Code.

[6]

Google Gemini 3.1 Pro boosts complex problem-solving

Latest version of Gemini Pro more than doubles the model's reasoning performance on the ARC-AGI-2 benchmark, the Google Gemini team said. Google has released a preview of Gemini 3.1 Pro, described as a smarter model for the most complex problem-solving tasks and a step forward in core reasoning. Announced February 19, Gemini 3.1 Pro is designed for tasks where a simple answer is not enough, taking advanced reasoning and making it useful for the hardest challenges, according to the Google Gemini team. The improved intelligence can help in practical applications such as providing a visual explanation of a complex topic, synthesizing disparate data into a single view, and solving challenges that require deep context and planning. The model is in preview for developers via the Gemini API in Google AI Studio, Gemini CLI, Google Antigravity, and Android Studio. For enterprises, the model is available in Vertex AI and Gemini Enterprise. Consumers can access Gemini 3.1 Pro via the Gemini app and NotebookLM. Gemini 3.1 Pro follows the Gemini 3 release from November 2025. The Gemini team said the core intelligence in Gemini 3.1 Pro was also leveraged in last week's update to Gemini 3 Deep Think to solve challenges across science, research, and engineering. The team also noted that, on the ARC-AGI-2 benchmark, which evaluates a model's ability to solve new logic patterns, Gemini 3.1 Pro achieved a verified score of 77.1%, more than double the reasoning performance of Gemini 3 Pro.

[7]

Gemini 3.1 Pro is here with better reasoning and problem-solving

Back in November 2025, Google released Gemini 3.0. Although it has only been three months since then, the tech giant is ready to roll out an update. Gemini 3.1 Pro is now coming out for consumers, developers, and business customers. Google has announced that Gemini 3.1 Pro is rolling out in preview today. Gemini 3.1 Pro features improved reasoning and offers a more capable baseline for problem-solving. According to the company, this model doubles the reasoning performance of Gemini 3.0 Pro. For consumers, Gemini 3.1 Pro is hitting the Gemini app today, with higher limits for Google AI Pro and Ultra subscribers. You'll also be able to find the model in NotebookLM, but it's an AI Pro and Ultra exclusive. Google didn't provide a date for when the model will exit the preview phase and become generally available, but the company says it will arrive "soon."

[8]

Google announces Gemini 3.1 Pro for 'complex problem-solving'

In November, Google introduced Gemini 3 Pro in preview, with Gemini 3 Flash following a month later. Google today announced 3.1 Pro "for tasks where a simple answer isn't enough." The .1 increment is a first for Google; in the past two generations, the mid-year model update was a .5 release. Google says Gemini 3.1 Pro "represents a step forward in core reasoning." This model achieves an ARC-AGI-2 score of 77.1%, or "more than double the reasoning performance of 3 Pro." In Google's words: "3.1 Pro is designed for tasks where a simple answer isn't enough, taking advanced reasoning and making it useful for your hardest challenges. This improved intelligence can help in practical applications -- whether you're looking for a clear, visual explanation of a complex topic, a way to synthesize data into a single view, or bringing a creative project to life." Gemini 3.1 Pro is rolling out to the Gemini app today, as well as NotebookLM for Google AI Pro and Ultra subscribers. It's also available in Google AI Studio and Vertex AI for developers.

[9]

Google Gemini 3.1 Pro first impressions: a 'Deep Think Mini' with adjustable reasoning on demand

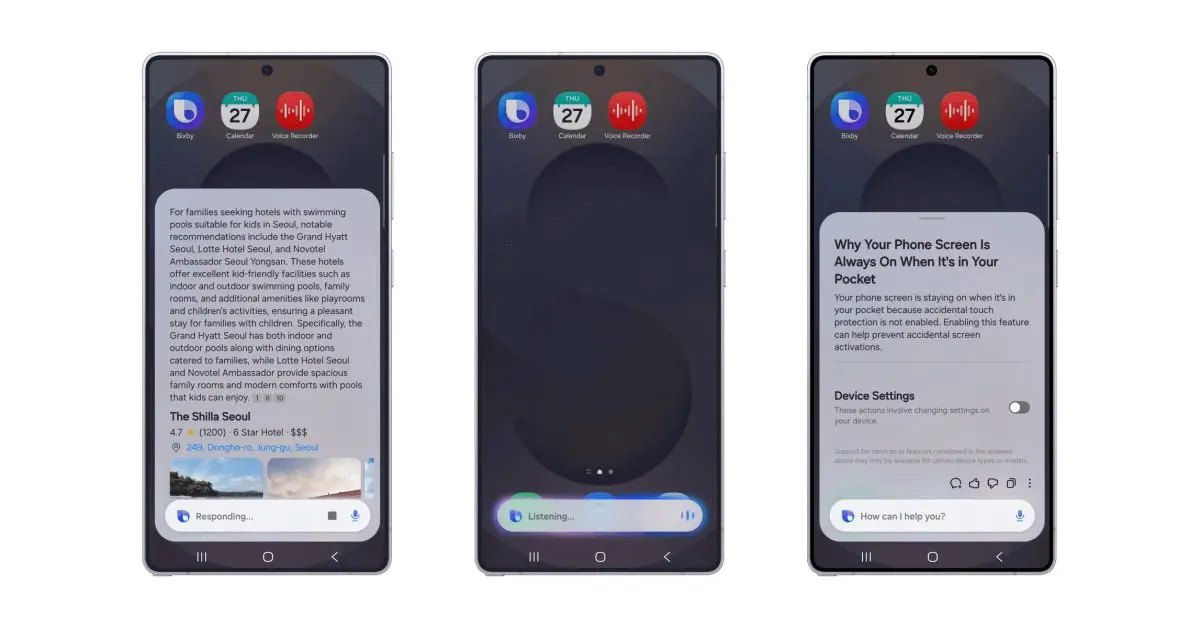

For the past three months, Google's Gemini 3 Pro has held its ground as one of the most capable frontier models available. But in the fast-moving world of AI, three months is a lifetime -- and competitors have not been standing still. Earlier today, Google released Gemini 3.1 Pro, an update that brings a key innovation to the company's workhorse power model: three levels of adjustable thinking that effectively turn it into a lightweight version of Google's specialized Deep Think reasoning system. The release marks the first time Google has issued a "point one" update to a Gemini model, signaling a shift in the company's release strategy from periodic full-version launches to more frequent incremental upgrades. More importantly for enterprise AI teams evaluating their model stack, 3.1 Pro's new three-tier thinking system -- low, medium, and high -- gives developers and IT leaders a single model that can scale its reasoning effort dynamically, from quick responses for routine queries up to multi-minute deep reasoning sessions for complex problems. The model is rolling out now in preview across the Gemini API via Google AI Studio, Gemini CLI, Google's agentic development platform Antigravity, Vertex AI, Gemini Enterprise, Android Studio, the consumer Gemini app, and NotebookLM. The most consequential feature in Gemini 3.1 Pro is not a single benchmark number -- it is the introduction of a three-tier thinking level system that gives users fine-grained control over how much computational effort the model invests in each response. Gemini 3 Pro offered only two thinking modes: low and high. The new 3.1 Pro adds a medium setting (similar to the previous high) and, critically, overhauls what "high" means. When set to high, 3.1 Pro behaves as a "mini version of Gemini Deep Think" -- the company's specialized reasoning model that was updated just last week. The implication for enterprise deployment could be significant. 
Rather than routing requests to different specialized models based on task complexity -- a common but operationally burdensome pattern -- organizations can now use a single model endpoint and adjust reasoning depth based on the task at hand. Routine document summarization can run on low thinking with fast response times, while complex analytical tasks can be elevated to high thinking for Deep Think-caliber reasoning. Google's published benchmarks tell a story of dramatic improvement, particularly in areas associated with reasoning and agentic capability. On ARC-AGI-2, a benchmark that evaluates a model's ability to solve novel abstract reasoning patterns, 3.1 Pro scored 77.1% -- more than double the 31.1% achieved by Gemini 3 Pro and substantially ahead of Anthropic's Sonnet 4.6 (58.3%) and Opus 4.6 (68.8%). This result also eclipses OpenAI's GPT-5.2 (52.9%). The gains extend across the board. On Humanity's Last Exam, a rigorous academic reasoning benchmark, 3.1 Pro achieved 44.4% without tools, up from 37.5% for 3 Pro and ahead of both Claude Sonnet 4.6 (33.2%) and Opus 4.6 (40.0%). On GPQA Diamond, a scientific knowledge evaluation, 3.1 Pro reached 94.3%, outperforming all listed competitors. Where the results become particularly relevant for enterprise AI teams is in the agentic benchmarks -- the evaluations that measure how well models perform when given tools and multi-step tasks, the kind of work that increasingly defines production AI deployments. On Terminal-Bench 2.0, which evaluates agentic terminal coding, 3.1 Pro scored 68.5% compared to 56.9% for its predecessor. On MCP Atlas, a benchmark measuring multi-step workflows using the Model Context Protocol, 3.1 Pro reached 69.2% -- a 15-point improvement over 3 Pro's 54.1% and nearly 10 points ahead of both Claude and GPT-5.2. And on BrowseComp, which tests agentic web search capability, 3.1 Pro achieved 85.9%, surging past 3 Pro's 59.2%. The versioning decision is itself noteworthy. 
Previous Gemini releases followed a pattern of dated previews -- multiple 2.5 previews, for instance, before reaching general availability. The choice to designate this update as 3.1 rather than another 3 Pro preview suggests Google views the improvements as substantial enough to warrant a version increment, while the "point one" framing sets expectations that this is an evolution, not a revolution. Google's blog post states that 3.1 Pro builds directly on lessons from the Gemini Deep Think series, incorporating techniques from both earlier and more recent versions. The benchmarks strongly suggest that reinforcement learning has played a central role in the gains, particularly on tasks like ARC-AGI-2, coding benchmarks, and agentic evaluations -- exactly the domains where RL-based training environments can provide clear reward signals. The model is being released in preview rather than as a general availability launch, with Google stating it will continue making advancements in areas such as agentic workflows before moving to full GA. For IT decision makers evaluating frontier model providers, Gemini 3.1 Pro's release should prompt a rethink not only of which models to choose but also of how to adapt their own products and services to such a fast pace of change. The question now is whether this release triggers a response from competitors. Gemini 3 Pro's original launch last November set off a wave of model releases across both proprietary and open-weight ecosystems. With 3.1 Pro reclaiming benchmark leadership in several critical categories, the pressure is on Anthropic, OpenAI, and the open-weight community to respond -- and in the current AI landscape, that response is likely measured in weeks, not months. Gemini 3.1 Pro is available now in preview through the Gemini API in Google AI Studio, Gemini CLI, Google Antigravity, and Android Studio for developers. Enterprise customers can access it through Vertex AI and Gemini Enterprise. 
Consumers on Google AI Pro and Ultra plans can access it through the Gemini app and NotebookLM.
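The single-endpoint pattern described in this piece routes every request to one model and varies only the reasoning depth. Below is a minimal, hypothetical Python sketch of that pattern. Only the three tier names (low, medium, high) come from the article. The task categories, the routing table, and the request dictionary keys are invented for illustration and do not reflect the Gemini API's actual request format.

```python
from enum import Enum


class ThinkingLevel(Enum):
    """The three reasoning tiers described for Gemini 3.1 Pro."""
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# HYPOTHETICAL task categories and tier assignments; a real team would tune these.
ROUTING_TABLE = {
    "summarize": ThinkingLevel.LOW,
    "classify": ThinkingLevel.LOW,
    "draft": ThinkingLevel.MEDIUM,
    "analyze": ThinkingLevel.HIGH,
    "plan": ThinkingLevel.HIGH,
}


def choose_thinking_level(task_kind: str) -> ThinkingLevel:
    """Map a task category to a tier; unknown categories fall back to MEDIUM."""
    return ROUTING_TABLE.get(task_kind, ThinkingLevel.MEDIUM)


def build_request(prompt: str, task_kind: str) -> dict:
    """Assemble a request for one shared model endpoint.

    HYPOTHETICAL: the keys below are placeholders, not the Gemini API's
    actual request schema; map them onto your client library's fields.
    """
    return {
        "model": "MODEL_ID_PLACEHOLDER",
        "thinking_level": choose_thinking_level(task_kind).value,
        "prompt": prompt,
    }


if __name__ == "__main__":
    for kind in ("summarize", "plan", "translate"):
        print(kind, "->", build_request("...", kind)["thinking_level"])
```

Sending unrecognized tasks to the medium tier is one design choice among several. A cost-sensitive team might instead default to low and escalate to high only when a first attempt falls short.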

[10]

Google releases Gemini 3.1 Pro: Benchmarks, how to try it

Google released its latest core reasoning model, Gemini 3.1 Pro, on Thursday. Google says that Gemini 3.1 Pro achieved more than twice the verified performance of 3 Pro on ARC-AGI-2, a popular benchmark that measures a model's logical reasoning. Google originally released Gemini 3 and 3 Pro in November, and this new release shows just how fast AI companies are introducing new and updated models. Gemini 3.1 Pro is the new core model powering Gemini and various Google AI tools, such as Gemini 3 Deep Think. Google says it's designed to provide more creative solutions. "3.1 Pro is designed for tasks where a simple answer isn't enough, taking advanced reasoning and making it useful for your hardest challenges," a Google blog post states. "This improved intelligence can help in practical applications -- whether you're looking for a clear, visual explanation of a complex topic, a way to synthesize data into a single view, or bringing a creative project to life." Here's everything we know so far about Gemini 3.1 Pro, including how it compares to the latest models from Anthropic and OpenAI, and how to try it yourself. Starting today, Google is rolling out Gemini 3.1 Pro in the Gemini app, the Gemini API, and NotebookLM. Free users will be able to try 3.1 Pro in the Gemini app, but paid users on Google AI Pro and AI Ultra plans will have higher usage limits. Within NotebookLM, only these paid users will have access to 3.1 Pro, at least for now. Developers and enterprises can access 3.1 Pro through AI Studio, Antigravity, Vertex AI, Gemini Enterprise, Gemini CLI, and Android Studio. Gemini 3.1 Pro was already available for Mashable editors using Gemini. To try it for yourself, head to Gemini on desktop or open the Gemini mobile app. When Google released Gemini 3 Pro in November, the model was so impressive that it allegedly caused OpenAI CEO Sam Altman to declare a code red. 
As Gemini 3 Pro surged to the top of AI leaderboards, OpenAI reportedly started losing ChatGPT users to Gemini. The latest core ChatGPT model, GPT-5.2, has tumbled down the rankings on leaderboards like Arena (formerly known as LMArena), losing significant ground to competitors such as Google, Anthropic, and xAI. Gemini 3 Pro was already outperforming GPT-5.2 on many benchmarks, and with a more advanced thinking model, Gemini could move even further ahead. Google released benchmark performance data showing that Gemini 3.1 Pro outperforms previous Gemini models, Claude Sonnet 4.6, Claude Opus 4.6, and GPT-5.2 on most of the listed benchmarks. However, OpenAI's new coding model, GPT-5.3-Codex, beat Gemini 3.1 Pro on the SWE-Bench Pro (Public) benchmark, according to Google's own figures. Notable highlights from Gemini 3.1 Pro's benchmark results include: Google released an image showing the full benchmark results for Gemini 3.1 Pro:

[11]

Gemini 3.1 Pro just got a major AI intelligence boost

Google unveils Gemini 3.1 Pro with leap in reasoning and problem solving

Google has introduced Gemini 3.1 Pro, the latest milestone in its flagship generative AI model lineup, promising significantly improved reasoning and complex problem-solving capabilities for developers, enterprises and everyday users. This upgrade builds on the foundation of the Gemini 3 series, which already set new expectations for multimodal intelligence, and pushes Google closer to its goal of delivering AI that can tackle sophisticated, real-world tasks.

A powerful upgrade to AI reasoning

Gemini 3.1 Pro is designed to be smarter at parsing difficult, multi-step problems that go beyond simple question-and-answer tasks. According to Google, the model more than doubled its reasoning performance compared with the previous Gemini 3 Pro when evaluated on the ARC-AGI-2 benchmark, a test that measures logic and reasoning on new, unseen problems. The new version achieved a score of 77.1%, a substantial leap that demonstrates its enhanced ability to understand and generate complex reasoning chains.

This improvement, built on updates introduced with Gemini 3 Deep Think, is not just about longer answers. It reflects deeper reasoning capabilities that enable the model to synthesize large amounts of data, draw connections across domains and offer more insightful responses for technical, scientific, and creative workflows. The expanded reasoning power of Gemini 3.1 Pro could make AI more useful across a broad range of applications. For developers and researchers, the model's ability to handle multi-step logic and nuanced questions may improve everything from code generation and debugging to data analysis and scientific research. In productivity settings, the upgraded AI could help users draft complex documents, generate detailed explanations, or explore topics that require deeper understanding.

For enterprises, more advanced reasoning expands the scope of what AI can automate or support. Teams working on financial modeling, legal analysis, technical documentation, or customer support workflows may benefit from a model that can follow intricate instructions and maintain context over longer tasks.

Integration across Google platforms

Gemini 3.1 Pro is rolling out across several Google services, including the Gemini app, NotebookLM, and developer platforms such as Vertex AI and Google AI Studio, and will also power features in Google Search Labs. This phased availability lets both individual users and organizations adopt the new model gradually, based on their needs. Beyond direct access, Gemini's upgrades tie into broader Google AI ecosystem strategies like enhanced integrations with Gmail, Docs and other productivity tools, where more intelligent assistance is expected to streamline workflows and reduce friction in everyday digital tasks.

Why this matters amid the AI arms race

The announcement of Gemini 3.1 Pro comes amid intense competition among generative AI platforms from companies such as OpenAI, Anthropic and Meta. Models that deliver strong reasoning, deep contextual understanding and multimodal input handling are increasingly viewed as the frontier of AI capability. Google's improved scores on complex benchmarks signal its intent to lead not just in scale, but in cognitive sophistication. From a strategic perspective, this upgrade reinforces Google's commitment to embedding advanced AI across products and services, while also encouraging developers to build richer applications on its platforms. As AI becomes more embedded in tools used for work, learning and creativity, enhancements like Gemini 3.1 Pro may accelerate adoption and improve real-world utility.

Looking ahead, Google is expected to continue refining the Gemini family, with further expansions in reasoning, multimodality and agentic capabilities, where AI can perform complex workflows with minimal human guidance. Continued integration with emerging tools and deeper support for professional and enterprise use cases will likely follow as adoption grows. As users and developers explore Gemini 3.1 Pro, early feedback and real-world performance will help shape future iterations and competitive positioning, underlining AI's evolving role as a more capable partner in tackling complex problems.

[12]

Gemini 3.1 Pro: A smarter model for your most complex tasks

Last week, we released a major update to Gemini 3 Deep Think to solve modern challenges across science, research and engineering. Today, we're releasing the upgraded core intelligence that makes those breakthroughs possible: Gemini 3.1 Pro. We are shipping 3.1 Pro across our consumer and developer products to bring this progress in intelligence to your everyday applications. Starting today, 3.1 Pro is rolling out: Building on the Gemini 3 series, 3.1 Pro represents a step forward in core reasoning. 3.1 Pro is a smarter, more capable baseline for complex problem-solving. This is reflected in our progress on rigorous benchmarks. On ARC-AGI-2, a benchmark that evaluates a model's ability to solve entirely new logic patterns, 3.1 Pro achieved a verified score of 77.1%. This is more than double the reasoning performance of 3 Pro.

[13]

Google launches Gemini 3.1 Pro to retake AI's top spot with 2X reasoning performance boost

Late last year, Google briefly took the crown for most powerful AI model in the world with the launch of Gemini 3 Pro -- only to be surpassed within weeks by OpenAI and Anthropic releasing new models, as is common in the fiercely competitive AI race. Now Google is back to retake the throne with an updated version of that flagship model: Gemini 3.1 Pro, positioned as a smarter baseline for tasks where a simple response is insufficient -- targeting science, research, and engineering workflows that demand deep planning and synthesis. Already, evaluations by third-party firm Artificial Analysis show that Google's Gemini 3.1 Pro has leapt to the front of the pack and is once more the most powerful and performant AI model in the world. The most significant advancement in Gemini 3.1 Pro lies in its performance on rigorous logic benchmarks. Most notably, the model achieved a verified score of 77.1% on ARC-AGI-2. This specific benchmark is designed to evaluate a model's ability to solve entirely new logic patterns it has not encountered during training. This result represents more than double the reasoning performance of the previous Gemini 3 Pro model. Beyond abstract logic, internal benchmarks indicate that 3.1 Pro is highly competitive across specialized domains: These technical gains are not just incremental; they represent a refinement in how the model handles "thinking" tokens and long-horizon tasks, providing a more reliable foundation for developers building autonomous agents. Google is demonstrating the model's utility through "intelligence applied" -- shifting the focus from chat interfaces to functional outputs. One of the most prominent features is the model's ability to generate "vibe-coded" animated SVGs directly from text prompts. 
Because these are code-based rather than pixel-based, they remain scalable and keep file sizes tiny compared with traditional video, while delivering far more detailed, presentable and professional visuals for websites, presentations and other enterprise applications. Other showcased applications include: Enterprise partners have already begun integrating the preview version of 3.1 Pro, reporting noticeable improvements in reliability and efficiency. Vladislav Tankov, Director of AI at JetBrains, noted a 15% quality improvement over previous versions, stating the model is "stronger, faster... and more efficient, requiring fewer output tokens". Other industry reactions include: For developers, the most striking aspect of the 3.1 Pro release is the "reasoning-to-dollar" ratio. When Gemini 3 Pro launched, it was positioned in the mid-high price range at $2.00 per million input tokens for standard prompts. Gemini 3.1 Pro maintains this exact pricing structure, effectively offering a massive performance upgrade at no additional cost to API users. For consumers, the model is rolling out in the Gemini app and NotebookLM with higher limits for Google AI Pro and Ultra subscribers. As a proprietary model offered through Vertex AI Studio in Google Cloud and the Gemini API, 3.1 Pro follows a standard commercial SaaS (Software as a Service) model rather than an open-source license. For enterprise users, this provides "grounded reasoning" within the security perimeter of Vertex AI, allowing businesses to operate on their own data with confidence. The "Preview" status allows Google to refine the model's safety and performance before general availability, a common practice in high-stakes AI deployment. By doubling down on core reasoning and specialized benchmarks like ARC-AGI-2, Google is signaling that the next phase of the AI race will be won by models that can think through a problem, not just predict the next word.
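To make the pricing point concrete, here is a minimal sketch of the input-side cost arithmetic at the quoted $2.00 per million input tokens for standard prompts. It assumes a flat per-token rate. It deliberately leaves out output tokens, whose price is not stated here, and any long-context pricing tier. The function and constant names are illustrative, not part of any Google SDK.

```python
# Quoted rate for standard prompts; output tokens are billed separately
# and are NOT modeled in this sketch.
INPUT_PRICE_PER_MILLION_USD = 2.00


def input_cost_usd(input_tokens: int, price_per_million: float = INPUT_PRICE_PER_MILLION_USD) -> float:
    """Return the input-side cost in US dollars, assuming a flat per-token rate."""
    if input_tokens < 0:
        raise ValueError("token count must be non-negative")
    return input_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # A 150,000-token prompt at $2.00 per million input tokens.
    print(f"${input_cost_usd(150_000):.2f}")  # $0.30
```

Because 3.1 Pro keeps Gemini 3 Pro's pricing, the same input-side figure applies to both models.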

[14]

Google introduces Gemini 3.1 Pro model for advanced reasoning tasks - SiliconANGLE

Google LLC today introduced Gemini 3.1 Pro, a new reasoning model that outperforms Claude Opus 4.6 and GPT-5.2 across several benchmarks. The algorithm is available via more than a half dozen of the search giant's products. Gemini 3.1 Pro is a Transformer model with a mixture-of-experts architecture, which means that it activates only some of its parameters when generating a prompt response. Users can enter prompts with up to 1 million tokens' worth of data, including not only text but also multimodal files such as videos. Gemini 3.1 Pro's responses can contain up to 64,000 tokens. Google evaluated the model's reasoning capabilities using ARC-AGI-2, one of the most difficult artificial intelligence benchmarks on the market. It comprises visual puzzles that each include a series of shapes. The shapes that make up a puzzle differ from one another in their design, but all follow a certain pattern. LLMs must deduce the pattern and use it to generate a new shape. Gemini 3.1 Pro achieved an ARC-AGI-2 score of 77.1%, which put it about 24 percentage points ahead of GPT-5.2. It also outperformed Anthropic PBC's Claude Opus 4.6 by more than 8 percentage points. All three models were tested in a hardware-intensive mode that improves their ability to tackle reasoning tasks. According to Google, Gemini 3.1 Pro also set records on several other benchmarks. The list includes MCP Atlas, which evaluates AI models' ability to perform tasks using third-party services, and the Terminal-Bench 2.0 coding test. The model performed 7% better than Claude Opus 4.6 on another coding benchmark, SciCode, which comprises scientific programming tasks. A demo published by Google shows Gemini 3.1 Pro generating an HTML visualization of the Earth's orbit. The dashboard uses data from a third-party service to show the current location of the International Space Station. In another demo, the model created a website based on a novel. 
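The mixture-of-experts description above can be illustrated with a generic top-k gating sketch. Google has not published how Gemini 3.1 Pro routes tokens, so this is a textbook-style illustration, not Gemini's implementation. The toy experts, the gate scores and `k=2` are all hypothetical. In a real model, a learned router would compute the gate scores from the input.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical expert type: in a real MoE layer each expert is a
# feed-forward sub-network; plain functions stand in for them here.
Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[float, List[int]]:
    """Send x to the k highest-scoring experts and mix their outputs.

    In a real model a learned router computes gate_logits from x; here they
    are passed in directly. Only the selected experts are called, which is
    how an MoE model uses a fraction of its parameters per token.
    """
    if len(gate_logits) != len(experts):
        raise ValueError("need one gate logit per expert")
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest gate logits (ties keep the lower index first).
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize gate weights over the chosen experts only.
    weights = softmax([gate_logits[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


if __name__ == "__main__":
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: v ** 2, lambda v: -v]
    out, used = moe_forward(3.0, gate_logits=[0.1, 2.0, 1.0, -1.0], experts=experts, k=2)
    print(used, round(out, 3))
```

Only experts 1 and 2 run in the usage example; the other two are never evaluated, which is the property the article describes.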
Compared to the previous-generation Gemini algorithm, Gemini 3.1 Pro is significantly better at generating SVG files. SVG is an image format widely used in web application projects. SVG images often include interactive elements and can be resized without resolution loss. Gemini 3.1 Pro is available as a preview in several of Google's development tools. Consumers, meanwhile, can access the model via the Gemini app and NotebookLM. Google is also bringing Gemini 3.1 Pro to several other offerings including its Vertex AI suite of AI cloud services for enterprises.
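The scalability point is easy to see in code. An SVG is plain XML text that describes shapes, so a renderer can draw it at any size from a few hundred bytes. The sketch below builds a small animated SVG, a circle pulsing via an SMIL `<animate>` element, as a string. It illustrates the file format only and says nothing about how Gemini produces its output. The size, color and timing defaults are arbitrary.

```python
import xml.etree.ElementTree as ET


def pulsing_circle_svg(size: int = 100, color: str = "#4285F4", duration_s: float = 2.0) -> str:
    """Return a self-contained animated SVG document as a string.

    The viewBox defines an internal coordinate system, so the same markup
    renders sharply at any display size; nothing is stored as pixels.
    """
    c = size / 2
    r_min, r_max = size / 4, size / 2.5
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 {size} {size}" '
        f'width="{size}" height="{size}">'
        f'<circle cx="{c}" cy="{c}" r="{r_min}" fill="{color}">'
        # SMIL <animate>: grow and shrink the radius in an endless loop.
        f'<animate attributeName="r" values="{r_min};{r_max};{r_min}" '
        f'dur="{duration_s}s" repeatCount="indefinite"/>'
        "</circle></svg>"
    )


if __name__ == "__main__":
    svg = pulsing_circle_svg()
    ET.fromstring(svg)  # raises ParseError if the markup is not well-formed XML
    print(len(svg.encode("utf-8")), "bytes")
```

Saved to a `.svg` file, the result opens in any modern browser and stays crisp when zoomed, because the browser re-renders the shapes rather than scaling pixels.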

[15]

Gemini 3.1 Pro debuts with 1M context window and agentic reliability

Google released Gemini 3.1 Pro on Thursday. The model is available as a preview with a general release planned for the future. This iteration represents a significant advancement over the previous Gemini 3 release from November. The company shared benchmark data highlighting the model's improved capabilities in professional task performance. Independent benchmarks such as "Humanity's Last Exam" demonstrate that Gemini 3.1 Pro performs significantly better than its predecessor. The previous version, Gemini 3, was already regarded as a highly capable AI tool upon its November release. These new statistics indicate a measurable jump in performance metrics. Google positioned the new model as one of the most powerful LLMs currently available. Brendan Foody, CEO of AI startup Mercor, commented on the model's performance using his company's evaluation system. "Gemini 3.1 Pro is now at the top of the APEX-Agents leaderboard," Foody stated in a social media post. The APEX system is designed to measure how well new AI models perform real professional tasks. Foody added that the model's results demonstrate "how quickly agents are improving at real knowledge work." The release occurs amid intensifying competition within the AI industry. OpenAI and Anthropic have recently released new models focused on agentic work and multi-step reasoning. These companies continue to develop increasingly powerful LLMs designed for complex workflows. The market for advanced AI models remains active as major technology firms push for improvements in reasoning capabilities.

[16]

Google's Latest Gemini 3.1 Pro Model Is a Benchmark Beast

The new model is rolling out on Gemini, NotebookLM, Google AI Studio, and through Vertex API. Google unveiled its most advanced Gemini 3.1 Pro AI model with record-breaking benchmark scores. It's much stronger in multi-step reasoning and multimodal capabilities than the Gemini 3 Pro model. Google also says the new model is better at handling long, multi-step tasks. It's rolling out in the Gemini app, NotebookLM, Google AI Studio, Antigravity, and through the Vertex API. First off, the new Gemini 3.1 Pro AI model scored 44.4% on the challenging Humanity's Last Exam without any tool use, and 51.4% with search and coding tools. In the novel ARC-AGI-2 benchmark, Gemini 3.1 Pro scored a whopping 77.1%, even higher than Anthropic's latest Claude Opus 4.6, which got 68.8%. Next, in the GPQA Diamond benchmark, which tests scientific knowledge, Gemini 3.1 Pro scored 94.3% -- higher than all competitors. As for SWE-Bench Verified, which evaluates agentic coding, the Gemini 3.1 Pro AI model scored 80.6%, a notch below Claude Opus 4.6's 80.8%. The new model has also gotten much better at following user instructions. Google showcased a number of animated SVGs from Gemini 3.1 Pro and compared its output with Gemini 3 Pro. The difference is quite astounding when you see the vector illustration. Google says Gemini 3.1 Pro is currently in preview and the company will continue improving the model before making it generally available for everyone. In December, OpenAI launched its GPT-5.2 model to counter Gemini 3 Pro and recently unveiled GPT-5.3-Codex for improved agentic coding performance. Now that Gemini 3.1 Pro is out, OpenAI will have to release a much more powerful model to outclass Google in the AI race.
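The coverage mixes "more than double", percentage points and plain percentages when comparing these scores. The minimal sketch below separates the absolute gap, in percentage points, from the relative ratio. It uses only figures quoted in these reports:

* ARC-AGI-2: 77.1% for Gemini 3.1 Pro, 31.1% for Gemini 3 Pro and 68.8% for Claude Opus 4.6.
* Humanity's Last Exam: 44.4% for Gemini 3.1 Pro and 37.5% for Gemini 3 Pro.

The helper name `gap` is illustrative.

```python
from typing import Dict, Tuple

# Scores in percent, as quoted in the coverage.
SCORES: Dict[str, Dict[str, float]] = {
    "ARC-AGI-2": {"Gemini 3.1 Pro": 77.1, "Gemini 3 Pro": 31.1, "Claude Opus 4.6": 68.8},
    "Humanity's Last Exam": {"Gemini 3.1 Pro": 44.4, "Gemini 3 Pro": 37.5},
}


def gap(new: float, old: float) -> Tuple[float, float]:
    """Return (absolute gap in percentage points, relative ratio new / old)."""
    return round(new - old, 1), round(new / old, 2)


if __name__ == "__main__":
    for bench, scores in SCORES.items():
        lead = scores["Gemini 3.1 Pro"]
        for rival, score in scores.items():
            if rival != "Gemini 3.1 Pro":
                points, ratio = gap(lead, score)
                print(f"{bench}: +{points} pts vs {rival} ({ratio}x)")
```

The ARC-AGI-2 ratio of roughly 2.48 against Gemini 3 Pro is what "more than double" refers to. Against Claude Opus 4.6, the lead is 8.3 percentage points, or about 12% in relative terms, which is why "points" and "percent" should not be used interchangeably.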

[17]

Google unveils Gemini 3.1 Pro with enhanced reasoning capabilities By Investing.com

Investing.com -- Google has released Gemini 3.1 Pro, an upgraded AI model designed for complex problem-solving tasks across science, research, and engineering applications. The new model builds upon the Gemini 3 series and represents a significant advancement in core reasoning capabilities. On the ARC-AGI-2 benchmark, which tests a model's ability to solve new logic patterns, Gemini 3.1 Pro achieved a verified score of 77.1%, more than doubling the reasoning performance of the previous 3 Pro version. Starting Thursday, Gemini 3.1 Pro is being rolled out to developers in preview through the Gemini API in Google AI Studio, Gemini CLI, the agentic development platform Google Antigravity, and Android Studio. Enterprise users can access it via Vertex AI and Gemini Enterprise, while consumers can use it through the Gemini app and NotebookLM. The model excels at tasks requiring advanced reasoning, such as creating visual explanations of complex topics, synthesizing data, and supporting creative projects. One highlighted capability is "complex system synthesis," where the model can bridge complex APIs with user-friendly design, as demonstrated by building a live aerospace dashboard that visualizes the International Space Station's orbit. For Gemini app users with Google AI Pro and Ultra plans, the new version comes with higher usage limits. NotebookLM access to 3.1 Pro is exclusively available to Pro and Ultra users. Google indicated that this preview release will help validate the updates before general availability, with further improvements planned for agentic workflows and other areas. This article was generated with the support of AI and reviewed by an editor.

[18]

Google launches Gemini 3.1 Pro AI model for complex problem-solving: Check availability

It 'represents a step forward in core reasoning,' according to the company. Google has launched Gemini 3.1 Pro, a new AI model built to handle complex problem-solving tasks. The upgrade is part of the Gemini 3 family and 'represents a step forward in core reasoning,' according to the company. Gemini 3.1 Pro is said to be designed for situations where simple answers are not enough. The tech giant says that the new model can help in 'practical applications - whether you're looking for a clear, visual explanation of a complex topic, a way to synthesise data into a single view, or bringing a creative project to life.' A key focus of this release is better reasoning capability. Google claims the model performs far better on tests that measure how well AI can solve new and unfamiliar logic problems. 'On ARC-AGI-2, a benchmark that evaluates a model's ability to solve entirely new logic patterns, 3.1 Pro achieved a verified score of 77.1%. This is more than double the reasoning performance of 3 Pro,' the company said. The goal is to make AI more useful for tackling advanced workflows rather than just generating quick replies. Gemini 3.1 Pro is being rolled out across Google's ecosystem. Developers can access it in preview through the Gemini API and related development tools. Businesses and enterprise customers can also access the model via Vertex AI and Gemini Enterprise. For regular users, the model is available via the Gemini app and NotebookLM. Google describes the release as a preview phase, meaning the company is still testing and refining the system before making it widely available. The feedback collected during this stage will help shape further improvements, especially in areas like advanced automated workflows. 

Google unveiled Gemini 3.1 Pro on Thursday, marking a significant advancement in AI model capabilities with enhanced reasoning and complex problem-solving. The large language model more than doubled its predecessor's performance on key benchmarks, scoring 77.1% on ARC-AGI-2 compared to Gemini 3's 31.1%. The release intensifies competition with OpenAI and Anthropic as tech giants race to dominate the AI landscape.

Google Unveils Gemini 3.1 Pro with Enhanced Reasoning Capabilities

Google announced the release of Gemini 3.1 Pro on Thursday, positioning the AI model as a major step forward in core reasoning and complex problem-solving. The large language model arrives just months after Gemini 3 debuted in November, reflecting the accelerating pace of the AI model race among tech giants [1]. Available now in preview for developers and consumers, Gemini 3.1 Pro promises substantial improvements, outperforming its predecessor across multiple evaluation metrics [2].

The release comes as competition intensifies among major AI labs, with OpenAI and Anthropic recently launching their own advanced models. According to CEO Sundar Pichai, Google's first-party models now process over 10 billion tokens per minute via direct API use, while the Gemini app has grown to over 750 million monthly active users [5]. This expansion demonstrates the growing demand for sophisticated AI tools capable of handling increasingly complex tasks.

Record Benchmark Scores Signal Major Performance Leap

Gemini 3.1 Pro achieved record benchmark scores across several industry-standard tests, with particularly impressive gains in reasoning tasks. On the ARC-AGI-2 benchmark, which tests novel logic problems that can't be directly trained into an AI model, Gemini 3.1 Pro scored 77.1% compared to Gemini 3's 31.1% [3]. This more than doubling of performance represents a significant advancement in the model's ability to tackle entirely new logic patterns [4].

On Humanity's Last Exam, designed to measure advanced domain-specific knowledge, Gemini 3.1 Pro achieved 44.4%, surpassing Gemini 3 Pro's 37.5% and OpenAI's GPT 5.2 at 34.5% [1]. Brendan Foody, CEO of AI startup Mercor, noted that "Gemini 3.1 Pro is now at the top of the APEX-Agents leaderboard," adding that the model's impressive results demonstrate "how quickly agents are improving at real knowledge work" [2].

Competitive Landscape Shows Claude Opus Still Leading in Some Areas

Despite the strong performance, Anthropic's Claude Opus 4.6 maintains an edge in certain categories. On the Arena leaderboard for text capabilities, Claude Opus 4.6 scores 1504, edging out Gemini 3.1 Pro by four points [1]. In code-related tasks, Opus 4.6, Opus 4.5 and OpenAI's GPT 5.2 High all run ahead of Google's latest offering. However, the Arena leaderboard is built on user votes, which can reward outputs that appear correct regardless of actual accuracy [1].

The competitive dynamics underscore how model capabilities remain relative in the rapidly evolving AI competition. As ZDNET senior contributing editor David Gewirtz noted, "The test numbers seem to imply that it's got substantial improvement over Gemini 3, and Gemini 3 was pretty good, but I don't think we're really going to know right away" [4]. He emphasized that the upcoming release of GPT 5.3 will provide a more comprehensive comparison point.
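The four-point Arena gap can be put in perspective with a short calculation. Arena ratings sit on an Elo-style scale. This sketch assumes the conventional Elo logistic curve, with base 10 and a 400-point scale; that calibration is an assumption about the leaderboard's numbers, not a documented fact. Under it, the expected preference rate between two models depends only on their rating difference. The function name is illustrative.

```python
def expected_preference(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Elo-style probability that A is preferred over B.

    Assumes the standard logistic form 1 / (1 + 10 ** ((R_b - R_a) / scale)).
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


if __name__ == "__main__":
    # Claude Opus 4.6 at 1504 versus Gemini 3.1 Pro four points lower.
    p = expected_preference(1504, 1500)
    print(f"{p:.3f}")  # 0.506
```

Under that assumption, a four-point lead means voters would prefer Opus 4.6 only about 50.6% of the time in a head-to-head comparison, close to a coin flip.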

Practical Applications and Availability for Developers

Google highlighted several practical use cases demonstrating Gemini 3.1 Pro's capabilities in creative and technical domains. The model can generate code-based animations, creating scalable SVG images ready for websites from simple text prompts. In one demonstration, it generated an entire website based on a character from Emily Brontë's Wuthering Heights, reimagined as a landscape photographer's portfolio [3]. Another example showcased a 3D interactive starling murmuration with dynamically generated soundscapes that respond to bird movements.

The model is now available for developers through the Gemini API in Google AI Studio, Android Studio, Google Antigravity, and Gemini CLI [4]. Enterprise customers can access it via Vertex AI and Gemini Enterprise. Consumers can use it in the Gemini app, with higher limits for Google AI Pro and Ultra subscribers, and in NotebookLM, where it is limited to Pro and Ultra plans [3]. Notably, the model is also accessible through Microsoft services including GitHub Copilot, Visual Studio, and Visual Studio Code [5].

Deep Think Integration Powers Scientific Breakthroughs

The announcement revealed that Gemini 3.1 Pro serves as the "core intelligence" behind Google's Deep Think tool upgrade announced last week [1]. Google positioned the model as the foundation enabling Deep Think's new capabilities in chemistry and physics, alongside enhanced performance in math and coding. The company described Deep Think as built to address "tough research challenges -- where problems often lack clear guardrails or a single correct solution and data is often messy or incomplete" [4]. While Deep Think scored even higher on some benchmarks (84.6% on ARC-AGI-2 and 48.4% on Humanity's Last Exam), Google emphasized that Gemini 3.1 Pro provides the underlying intelligence making those scientific breakthroughs possible. This integration suggests Google's strategy focuses on building versatile foundation models that can power specialized applications across research and enterprise domains.

Summarized by Navi
