Google's Gemini AI can now reason across your Gmail, Photos, and YouTube to deliver personal answers

34 Sources

[1]

Gemini can now scan your photos, email, and more to provide better answers

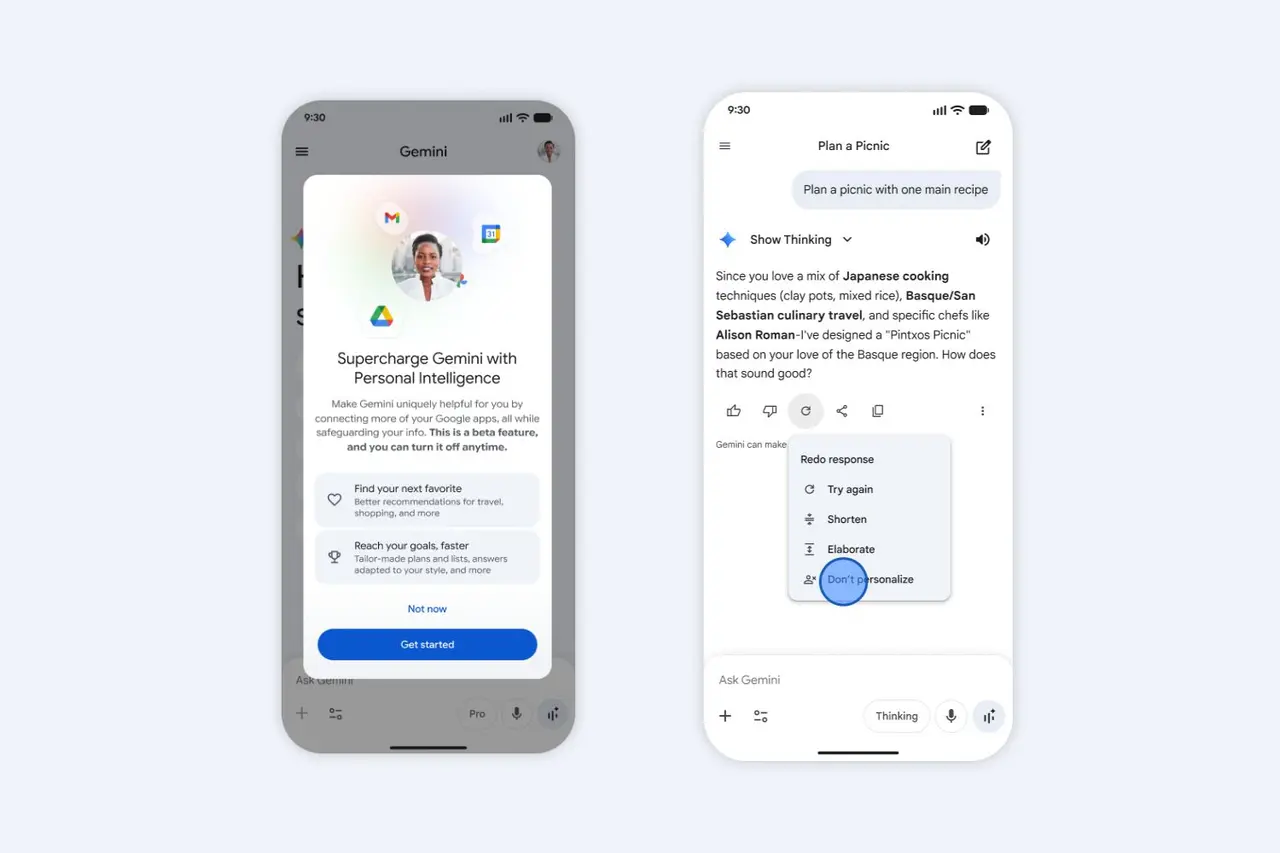

Google has toyed with personalized answers in Gemini, but that was just a hint of what was to come. Today, the company is announcing an extensive "Personal Intelligence" feature in Gemini that allows the chatbot to connect to Gmail, Photos, Search, and YouTube to craft more useful answers to your questions. If you don't want Gemini to get to know you, there's some good news: Personal Intelligence is launching as a feature for paid users, and it's entirely optional. By every measure, Google's models are at or near the top of the AI heap. In general, the more information you feed into a generative AI, the better the outputs are. And when that data is personal to you, the resulting inference is theoretically more useful. Google just so happens to have a lot of personal data on all its users, so it's relatively simple to feed that data into Gemini. As Personal Intelligence rolls out over the coming weeks, AI Pro and AI Ultra subscribers will see the option to connect those data sources. Each can be connected individually, so you might choose to allow Gmail access but block Photos, for example. When Gemini is allowed access to other Google products, it incorporates that data into its responses. Google VP Josh Woodward claims that he's already seeing advantages while testing the feature. When he was shopping for tires, Gemini referenced road trip photos to justify different suggestions and pulled the license plate number from a separate image. Gemini will cite its sources when it uses your personal data. If the personalized answer isn't what you want, you can re-run any output without personalization. You may also use temporary chats to get the standard Gemini output without using your account data. Disabling access to one or all data sources in the settings is also always an option.
Google's take on AI privacy

Perhaps sensing that feeding more data into Gemini would give many people the creeps, Google's announcement explains at great length how the company has approached privacy in Personal Intelligence. Google isn't getting any new information about you -- your photos, email, and search behaviors are already stored on Google's servers, so "you don't have to send sensitive data elsewhere to start personalizing your experience." Having the chatbot regurgitate your photos and emails might still be a little unsettling, but Google claims it has built guardrails that keep Gemini from musing on sensitive topics. For example, the chatbot won't use any health information it finds. However, you can still ask for it to look at that information explicitly. Google also stresses that your personal data is "not directly used to train the model." So the images or search habits it references in outputs are not used for training, but the prompts and resulting outputs may be used. Woodward notes that all personal data is filtered from training data. Put another way, the system isn't trained to learn your license plate number, but it is trained to be able to locate an image containing your license plate. This feature will be in beta for a while as it rolls out, and it may take several weeks to reach all paid Gemini accounts. It will work across all Gemini endpoints, including the web, Android, and iOS. Google also says it plans to expand access to Personal Intelligence in Gemini down the road. Unless Google flip-flops on the default settings, you can leave this feature disabled. That ensures Gemini won't get additional access to your data, but of course, all that data is still sitting on Google's servers. This probably won't be the last time Google tries to entice you to plug your photos into an AI tool.

[2]

Gemini's new beta feature provides proactive responses based on your photos, emails, and more | TechCrunch

Google announced on Wednesday that it's launching a new beta feature in the Gemini app that allows the AI assistant to tailor its responses by connecting across your Google ecosystem, starting with Gmail, Photos, Search, and YouTube history. Although Gemini could already retrieve information from these apps, it can now reason across your data to provide proactive results, such as connecting a thread in your emails to a video you watched. Google says this means Gemini understands context without being told where to look. The tech giant notes that this beta experience, called Personal Intelligence, is off by default, as users have the option to choose if and when they want to connect their Google apps to Gemini. Of course, not everyone wants AI looking at their photos and YouTube history. If you do decide to connect your apps, Gemini will only use Personal Intelligence when it determines that doing so will be helpful, Google says. "Personal Intelligence has two core strengths: reasoning across complex sources and retrieving specific details from, say, an email or photo to answer your question," wrote Josh Woodward, VP, Gemini app, Google Labs, and AI Studio, in a blog post. "It often combines these, working across text, photos and video to provide uniquely tailored answers." Woodward shared an example of when he was standing in line at a tire shop and didn't remember his car's tire size. While most AI chatbots can determine a car's tire size, Woodward says Gemini can go further by offering personalized responses. In his case, Gemini suggested all-weather tires after identifying family road trip photos in Google Photos. Woodward also said he forgot his license plate number, but Gemini was able to pull the number from a picture in Photos. "I've also been getting excellent tips for books, shows, clothes and travel," Woodward wrote. "Just this week, it's been exceptional for planning our upcoming spring break. 
By analyzing our family's interests and past trips in Gmail and Photos, it skipped the tourist traps. Instead, it suggested an overnight train journey and specific board games we could play along the way." Google says it has guardrails for sensitive topics, as Gemini will avoid making proactive assumptions about sensitive data like health. However, the tech giant also notes that Gemini will discuss this data if you ask it to. Additionally, Gemini doesn't train directly on your Gmail inbox or Google Photos library. Instead, it trains on specific prompts in Gemini and the model's responses. In the examples above, the photos of the road trip, the license plate picture in Photos, and the emails in Gmail are not directly used to train the model. They are only referenced to generate a response, Google says. Personal Intelligence is rolling out to Google AI Pro and AI Ultra subscribers in the U.S. Google plans to expand the feature to more countries and Gemini's free tier. Google provided a list of example prompts to try, including "Help me plan my weekend in [city i.e. New York] based on things I like to do," "Recommend some documentaries based on what I've been curious about," or "Based on my delivery and grocery receipts in Gmail, Search history, and YouTube watch history, recommend 5 YouTube channels that match my cooking style or meal prep vibe."

[3]

Gemini's New 'Personalized Intelligence' Uses Your Photos and Gmail to Customize Responses

Google's AI is getting even more up close and personal with you. Gemini is getting a new personalization feature that lets the AI use information from your connected Google apps -- like Calendar, Photos and Gmail -- to give better-informed, more individually tailored answers, the company announced on Wednesday. The personalization feature is in beta for paying Google AI Pro and Ultra subscribers in the US. Don't worry if you don't see it yet -- it's rolling out beginning on Wednesday and should be available for subscribers by the end of the week. It's compatible across the web, Android and iOS versions of Gemini for personal Google accounts (but not Workspace or Enterprise accounts). The feature will be available soon to free-tier and non-US users, and there's a planned integration with AI Mode in Search in the future. Gemini has long been able to retrieve information from across your Google ecosystem, going back to 2023 when it was still called Bard. But this week's update gives Gemini the ability to reason across that information. That means Gemini can answer your search queries using the full sum of your data in Google apps, and it uses that to make more informed, personalized recommendations. For example, if you were to ask Gemini what tires would be best for your car's specific make and model, the regular version of Gemini would be able to pull many different specs that could work. Gemini with personalization, by comparison, would use the information in your calendar and photos, understand that you have an interest in off-roading or camping trips and add a recommendation for all-terrain tires to the list. This is a real example from Josh Woodward, vice president of Gemini app and Google Labs, who has been using the personalization feature, and he said in a blog post that it's made his daily life easier.
The benefit of connecting your Google apps, the company says, is that it can reason across diverse sources and retrieve specific details by "working across text, photos and video to provide uniquely tailored answers." This multimodal ability is important, since much of the important information in our lives is typically spread out among different formats -- PDFs, emails, photos, videos and more. The feature's advanced reasoning abilities are thanks to Gemini 3, the company's latest model that was built to handle more nuanced tasks. This feature is off by default, so you don't have to worry about Google automatically pulling in data from your other apps. You can decide which Google apps you want to connect to the personalization feature when you initially set it up; for example, you can decide to connect your Google Calendar and Photos, but not your Gmail. Google will not use the entirety of your Gmail inbox or data from other connected apps to train its AI models, but the company can "train on limited info, like specific prompts in Gemini and the model's responses, to improve functionality over time," according to the blog post. This is in line with Gemini's overall privacy policy.

[4]

Gemini's new Personal Intelligence will look through your emails and photos - if you let it

The feature is off by default and won't train on sensitive data.

The Google ecosystem of apps spans everything from the cornerstone of your workflow, like Gmail, to personal apps like YouTube and Photos. Now, the company is letting Gemini connect across your entire ecosystem for more tailored assistance. Google is calling this new capability, launched on Wednesday, Personal Intelligence. Using Gemini 3, Personal Intelligence can reason across your apps to develop context that can be used to provide proactive insights. Gemini already references information from your apps, but Personal Intelligence is meant to pull more tailored responses from that data. For starters, the experience will connect Gmail, Photos, YouTube, and Search. In simple terms, the feature gives Gemini more of your data, which should improve its reasoning and its practical understanding of your needs when you query it. Rather than a new interface, it's more like an upgrade running in the background. That's similar to what Apple Intelligence pitched itself as: an always-observing assistant that gathers insights from your phone activity to better answer queries. Some examples of Personal Intelligence in action include pulling specific details from your email or photo gallery that could be helpful in answering your question, or connecting an email thread to a video you watched on YouTube, according to Google. Gemini will reference where it pulled information from and will not use your data to personalize every answer; instead, it will only do so when it is helpful and relevant. It is also built to avoid proactive assumptions about sensitive data, such as your health, Google added. You can also decide not to have Gemini personalize certain answers by using the "try again" button, and if you're unhappy with a result, you can share feedback, which will help refine the experience.
In particular, Josh Woodward, VP of the Gemini app, Google Labs, and the company's AI Studio, said in the blog post that while the company tried to minimize mistakes within the beta version of Personal Intelligence, there are still risks of inaccurate responses or "over-personalization," in which the model connects two topics that are unrelated. In these instances, collecting user feedback is especially important. Also, if it makes an incorrect assumption -- for example, that you are still in a relationship because of the photos in your library, or that you like golf when you're really just there to support a friend -- you can correct it via chat with a simple clarifying statement. Of course, with an experience like this, privacy is a major concern for users. Google noted that Personal Intelligence is off by default, giving users complete control over whether they connect any apps at all, or only some of them. Google also said that Gemini will not train directly on your Gmail inbox or Photos library. Rather, Google clarified that it trains on limited information, such as specific prompts in Gemini and the model's responses, not details it finds in your apps, like a photo of your license plate in your camera roll. "We don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it," the company explained. For now, Personal Intelligence is only available in beta to paid subscribers, including Google AI Pro and AI Ultra users; however, Google plans to expand the feature to the free tier in the future. It is intended to reach all eligible users by the end of this week.
Once an eligible user enables it, Personal Intelligence works across the web, Android, and iOS with any model available in the Gemini model picker. To turn it on, open Gemini, click Settings, then Personal Intelligence, and select Connected Apps. It is only available for personal Google accounts, not for Workspace business, enterprise, or education plans. Soon, it will also be coming to AI Mode in Search.

[5]

Google's Gemini AI will use what it knows about you from Gmail, Search, and YouTube

Google's Gemini AI is getting what could prove to be a very big upgrade: To help answers from Gemini be more personalized, the company is going to let you connect the chatbot to Gmail, Google Photos, Search, and your YouTube history to provide what Google is calling "Personal Intelligence." This isn't the first time Google has introduced some form of personalization with its AI chatbot; in September 2023, when Gemini was still called Bard, Google announced a way for it to connect to Google's apps and services to be able to retrieve information based on what's stored in your account. Gemini can also already recall past conversations. But the big change with Personal Intelligence is that it can reason across the information from your Google account, meaning it's able to pull details from things like an email or a photo without requiring you to specify which apps to pull the information from. It's also powered by Google's Gemini 3 AI models. Here's an example that Google's Josh Woodward, VP of the Gemini app, Google Labs, and AI Studio, shared in a blog post about how Personal Intelligence can work (Google also put together a similar example in a video):

"For example, we needed new tires for our 2019 Honda minivan two weeks ago. Standing in line at the shop, I realized I didn't know the tire size. I asked Gemini. These days any chatbot can find these tire specs, but Gemini went further. It suggested different options: one for daily driving and another for all-weather conditions, referencing our family road trips to Oklahoma found in Google Photos. It then neatly pulled ratings and prices for each. As I got to the counter, I needed our license plate. Instead of searching for it or losing my spot in line to walk back to the parking lot, I asked Gemini. It pulled the seven-digit number from a picture in Photos and also helped me identify the van's specific trim by searching Gmail. Just like that, we were set."
Woodward warns that while Google has tested Personal Intelligence "extensively" to "minimize mistakes," users might run into "inaccurate responses or 'over-personalization,' where the model makes connections between unrelated topics." It might also have problems with "timing or nuance, particularly regarding relationship changes, like divorces, or your various interests." Google is working on fixing these issues. Personal Intelligence is an opt-in feature, and if you turn it on, you get to decide which apps to connect to Gemini. Woodward says Google has "guardrails" for "sensitive topics," and adds that Gemini "aims to avoid making proactive assumptions about sensitive data like your health, though it will discuss this data with you if you ask." Woodward also notes that Gemini "doesn't train directly on your Gmail inbox or Google Photos library," though Gemini does train on "limited info" such as "specific prompts in Gemini and the model's responses." Google is launching Personal Intelligence first as a beta, and only in the US, to "eligible" Google AI Pro and AI Ultra subscribers and only for personal Google accounts, Woodward says. In the future, the company plans to bring Personal Intelligence to more countries and Gemini's free tier. It will come to AI Mode in Search "soon."

[6]

Gemini Can Now Personalize Responses Using Data From Your Google Apps

Google is testing a new Gemini experience that allows the chatbot to personalize its responses using data from connected apps such as Gmail, YouTube, Search, and Photos. The feature, called Personal Intelligence, requires users to opt in and choose the apps Gemini can access. In a blog post, Google's VP for Gemini, Josh Woodward, shared a couple of examples to show how he uses Personal Intelligence. When Woodward needed to buy new tires for his car, he asked Gemini for advice, but didn't mention his car model or his typical use cases. The chatbot, however, tapped into Woodward's emails and photos to find the required details, such as car model, tire size, and trips taken, to suggest the most suitable tires and their pricing. In another example, Gemini analyzed the family's interests and past trips from Gmail and Photos to prepare an ideal plan for their upcoming spring break. Regarding privacy, Google says that since all of the data "already lives at Google securely," it doesn't have to send the data elsewhere to begin personalizing. Additionally, the company claims it does not train Gemini "directly" on your photos or emails. Instead, it may use specific information from prompts and responses to improve Gemini over time. For example, if the car's license plate was referenced to generate a response, Google says it will use the prompt and response for AI training only after it "obfuscate[s] personal data from the conversation." As with most of its AI features, Google warns that Personal Intelligence may not always get it right, adding that it still struggles with timing and nuances. Personal Intelligence is currently available in beta for Google AI Pro and AI Ultra subscribers in the US. You can enable it from Gemini's Settings > Personal Intelligence > Connected Apps. The graphics in Google's blog post also indicate that the feature may soon support Google Calendar, Drive, and other Workspace apps. 
Apple's Siri was supposed to receive a similar ability to draw from context across apps in 2024, but that never happened. The company replaced its AI boss, now faces multiple lawsuits, and is aiming for a much-delayed release date: spring 2026. Google will be the one to help push that along. It just announced a partnership with Apple to power Siri using Gemini. When Siri's context awareness finally arrives, will it be Gemini's Personal Intelligence in disguise?

[7]

Google: Sell soul to Gemini for smarter answers

But private data will stay private and won't be used for training, Google says

Google on Wednesday began inviting Gemini users to let its chatbot read their Gmail, Photos, Search history, and YouTube data in exchange for possibly more personalized responses. Josh Woodward, VP of Google Labs, Gemini and AI Studio, announced the beta availability of Personal Intelligence in the US. Access will roll out over the next week to US-based Google AI Pro and AI Ultra subscribers. The use of the term "Intelligence" is more aspirational than accurate. Machine learning models are not intelligent; they predict tokens based on training data and runtime resources. Perhaps "Personalized Predictions" would be insufficiently appealing, and "Personalized Artificial Intelligence" would draw too much attention to the mechanized nature of chatbots that now attracts active opposition. Whatever the case, access to personal data comes with the potential for more personally relevant AI assistance. Woodward explains that Personal Intelligence can pass information from Google apps like Gmail, Photos, Search, and YouTube to the company's Gemini model. This may help the model respond to queries using personal or app-specific data within those applications. "Personal Intelligence has two core strengths: reasoning across complex sources and retrieving specific details from, say, an email or photo to answer your question," said Woodward. "It often combines these, working across text, photos and video to provide uniquely tailored answers." As an example, Woodward recounted how he was shopping for tires recently. While he was standing in line for service, he didn't know the tire size and needed his license plate number. So he asked Gemini, and the model fetched that information by scanning his photo library, finding an image of his car, and converting the imaged license plate to text.
Whether that scenario is better than recalling one's plate number from memory, searching for it in messages on one's phone, or glancing at the actual plate in the parking lot depends on whether one sees the mind as a use-it-or-lose-it resource. Every automation is an abdication of autonomy. To Google's credit, Personal Intelligence is off by default and must be enabled per app. If Personal Intelligence is anything like AI Overviews or Gemini in Google Workspace apps, expect notifications, popups, hints, nudges, and recommendations during app interactions as a way to encourage adoption. Woodward argues that what differentiates Google's approach from rival AI agents is that user data "already lives at Google securely." There's no privacy intrusion when the call is coming from inside the house. Gemini, he said, will attempt to cite the sources behind personalized output, so recommendations can be verified or corrected. And there are "guardrails" in place that try to avoid bringing sensitive information (e.g. health data) into Gemini conversations, like "I've cancelled your appointments next year based on your prognosis in Gmail." It's ancient history now, but in 2012, when Google changed its privacy policy to share data across its different services, the move was controversial. The current trend is to encourage customer complicity in data sharing. Woodward insists Google's aim is to provide a better Gemini experience while keeping personal data secure and under the user's control. "Built with privacy in mind, Gemini doesn't train directly on your Gmail inbox or Google Photos library," he said. "We train on limited info, like specific prompts in Gemini and the model's responses, to improve functionality over time." Pointing to his anecdote about his vehicle, he said that Google would not use the photos of the relevant road trip, the license plate in those photos, or his Gmail messages for model training.
But the prompts and responses, filtered to remove personal information, would get fed back to the model as training data. "In short, we don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it," he said. Google's Gemini Apps Privacy Hub page offers a more comprehensive view of how Google uses the information made available to its AI model. The company says that human reviewers (including trained reviewers from partner service providers) review some of the data that it collects for purposes like improving and maintaining services, customization, measurement, and safety. "Please don't enter confidential information that you wouldn't want a reviewer to see or Google to use to improve our services, including machine-learning technologies," it warns. The personalization with Connected Apps page offers a similar caution. Google's support boilerplate also states that Gemini models may provide inaccurate or offensive responses that do not reflect Google's views. "Don't rely on responses from Gemini Apps as medical, legal, financial, or other professional advice," Google's documentation says. But for anything less consequential, maybe Personal Intelligence will help. ®

[8]

Google launches Personal Intelligence feature in Gemini app, challenging Apple Intelligence

Josh Woodward, head of the Gemini app, said users may find mistakes and asked them to provide feedback.

Google is letting users test a new artificial intelligence tool that connects information from various apps like Gmail and Google Photos to provide personalized answers in its chatbot. The feature, called Personal Intelligence, is available for personal accounts, Google said in a blog post on Wednesday. "Whether it's connecting a thread in your emails to a video you watched or finding nuance in your photo library, Gemini now understands context without being told where to look," Josh Woodward, vice president of Google Labs and Gemini app, wrote in the post. It's Google's latest attempt to build more AI reasoning capabilities into its Gemini app and lure users as the company goes head-to-head with OpenAI and others in the increasingly competitive generative AI market. While Gemini could already retrieve information from Google apps, the company said on Wednesday Gemini 3 can now "reason across your data to surface proactive insights." The new feature will compete with Apple Intelligence, Apple's personal AI system that integrates apps to help with writing, image creation, and understanding context. Apple said Monday it chose Google to power its AI features, including a major Siri upgrade expected later this year. Personal Intelligence will first be available to Google AI Pro and AI Ultra subscribers in the U.S., the company said, and will be added to Google's search tool "AI Mode" in the future. The feature will be off by default, Google said. Woodward, who has launched successful products in Gemini in the past year, warned that the beta version of the new feature hasn't eliminated mistakes, and is asking users to give feedback. "Gemini may also struggle with timing or nuance, particularly regarding relationship changes, like divorces, or your various interests," he wrote.
For sensitive topics like health, "Gemini aims to avoid making proactive assumptions" but "will discuss this data with you if you ask," Woodward added. Woodward also said the company doesn't train its AI models "directly on your Gmail inbox or Google Photos library" but does utilize "limited info, like specific prompts in Gemini and the model's responses, to improve functionality over time."

[9]

Personal Intelligence just supercharged Gemini. Here's how to use it right now

As with most new Gemini features, Google is being fairly restrictive about who can actually use Personal Intelligence -- at least right now. At the time of publication, Personal Intelligence is available in beta to people in the US with either a Google AI Pro or Google AI Ultra subscription. Whether you've had one of these plans for a while or you just signed up today, you're eligible to try Personal Intelligence. In addition to having one of these AI subscriptions and residing in the US, you'll need to ensure your Google account is a personal account; Google Workspace accounts aren't currently supported.

[10]

Google Gemini's 'Personal Intelligence' Pulls from Your Search and YouTube History

It feels clear at this point that the next big frontier for consumer-facing artificial intelligence is personalization. OpenAI has made this central to ChatGPT by introducing memories that allow the chatbot to pull from past conversations, and now Google is taking it a step further. On Wednesday, it announced a new opt-in beta feature for its popular Gemini assistant called "Personal Intelligence," which goes beyond past conversations and digs into your internet history. Much like ChatGPT, Gemini can pull from your previous conversations. But with Personal Intelligence, it can also pull from just about anything you've done across the Google ecosystem, though you can selectively disconnect certain services and delete history as needed. Per Google, Gemini is capable of accessing your Gmail, Google Calendar, and content in your Google Drive, as well as anything you've saved to Google Photos. More than just stuff you've saved, it can also parse through stuff you've looked at. That includes YouTube watch history, Google Search history, and anything you've looked for via its Shopping, News, Maps, Google Flights, and Hotels services. With all that information, Google claims Gemini can perform "reasoning across complex sources" to "provide uniquely tailored answers." What does that look like in practice? Just check out this anecdote from Josh Woodward, Vice President of the Gemini app, who shared his experience with Personal Intelligence in a blog post, seemingly without realizing how creepy it sounds:

Since connecting my apps through Personal Intelligence, my daily life has gotten easier. For example, we needed new tires for our 2019 Honda minivan two weeks ago. Standing in line at the shop, I realized I didn't know the tire size. I asked Gemini. These days any chatbot can find these tire specs, but Gemini went further.
It suggested different options: one for daily driving and another for all-weather conditions, referencing our family road trips to Oklahoma found in Google Photos. It then neatly pulled ratings and prices for each. As I got to the counter, I needed our license plate. Instead of searching for it or losing my spot in line to walk back to the parking lot, I asked Gemini. It pulled the seven-digit number from a picture in Photos and also helped me identify the van's specific trim by searching Gmail. Just like that, we were set. Not to dig into the specifics of this example, but it's not immediately clear to me that making an uninformed decision (we don't have to pretend you've actually learned anything by getting some AI-generated answers) based on the recommendation of a chatbot is a better outcome here than talking to the person at the front desk who knows about cars and tires. We all know we can just ask people stuff, right? We don't have to come across as knowledgeable about everything to everyone all the time. Regardless, Google is at least aware that this whole process might weird people out a bit, even as it's promising better recommendations, search results, and conversations. Personal Intelligence can be toggled on and off, and users can select what sources it pulls in. Gemini will also "try" to reference or explain the information it used from connected sources so the user can verify it. The company is also prepping its beta testers for some bumps in the road along the way. In a blog post, it warned that users may encounter inaccurate responses or "over-personalization," where Gemini connects unrelated information. It also said Gemini may "struggle with timing or nuance," like looking at a photo of a person and their ex-partner and not realizing they've broken up. If you'd like to be Google's guinea pig, Personal Intelligence is rolling out to "eligible" Google AI Pro and AI Ultra subscribers in the US. 
The company also said that Personal Intelligence will become available in more countries and make its way to Gemini's free tier in the future, and will be available in AI Mode in Search "soon."

[11]

Google says Gemini's Personal Intelligence is the context-aware AI you've been looking for

By Karandeep Singh Oberoi. Google is attempting to bridge the gap between an intelligent assistant and a personal one, and it is doing so with the launch of a new beta feature for Gemini. That feature is called Personal Intelligence, and it lets Gemini tailor its responses by connecting itself to your Google ecosystem. This includes Gmail, Photos, Search, and even your YouTube history. While Gemini can already access other Google ecosystem apps, the new AI tool will be able to get the full scope of your data to surface proactive insights, all powered by Gemini 3. Personal Intelligence has two core strengths: reasoning across complex sources and retrieving specific details from, say, an email or photo to answer your question. It often combines these, working across text, photos and video to provide uniquely tailored answers. The experience will be off by default, and if you choose to enable it, you get to decide which apps Gemini can access. If you do give the AI tool access to other Google apps and services, it will not tap into them for every query. The goal here is to use Personal Intelligence only when Gemini deems that a personalized response would be helpful.
Because the data Gemini is accessing already lives on Google's servers, Google says that you don't have to share sensitive data outside its ecosystem to personalize your experience. To demonstrate the feature, Google shared the results of a query about the tire size for a 2019 Honda minivan and related information. While any AI model could provide specs, Gemini with Personal Intelligence can search a Gmail inbox for details about the vehicle's trim level and pull the license plate number from a photo. By analyzing other photos, it can suggest all-weather tires over standard ones. "[Gemini has] been exceptional for planning our upcoming spring break," wrote Google Gemini Vice President Josh Woodward. "By analyzing our family's interests and past trips in Gmail and Photos, it skipped the tourist traps. Instead, it suggested an overnight train journey and specific board games we could play along the way." Prompt examples that Google said Personal Intelligence can handle well include: "Based on my delivery and grocery receipts in Gmail, Search history, and YouTube watch history, recommend five YouTube channels that match my cooking style or meal prep vibe," "Recommend some documentaries based on what I've been curious about," and "What's a totally different career where you could see me thriving?" It's worth noting that Gemini will not use Personal Intelligence for sensitive topics like health data, though Gemini can discuss this data if you explicitly ask it to. Google says Gemini is not trained directly on your Gmail or Google Photos data. Instead, it retrieves the data connected to your prompt. In the minivan example, this means that the model didn't "learn" the user's license plate number; it simply referenced it to answer the query.
"We don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it," wrote Woodward. Personal Intelligence is rolling out today to eligible Google AI Pro and AI Ultra subscribers in the US and should be widely available there over the next week. As is the case with most new Gemini features, Personal Intelligence will expand to more countries and Gemini's free tier in the not-so-distant future. If you're an eligible user, you should see an invitation banner to try out Personal Intelligence the next time you open the Gemini app, as seen in the image above. If you don't, or close the invitation without getting started, you can find the feature in Gemini app > Settings > Personal Intelligence.

[12]

Gemini Personal Intelligence shows what we can expect from Siri

The long-awaited launch of the new AI-powered Siri now looks much closer thanks to Apple's partnership with Google. The company this week confirmed reports that many Siri features will be powered by Google's Gemini models. We already knew some of the features we could expect from AI Siri thanks to the announcements at WWDC 2024 and a now-deleted iPhone 16 ad - and the launch of Gemini Personal Intelligence has now effectively provided a working preview ... Google yesterday launched a beta version of what it calls Personal Intelligence. The headline feature here is Gemini's ability to use a complex mix of sources to generate responses, including personalized information pulled from a number of the Google apps and services people use. Personal Intelligence can retrieve specific details from text, photos, or videos in your Google apps to customize Gemini responses. This includes Google Workspace (Gmail, Calendar, Drive, etc.), Google Photos, your YouTube watch history, and all of the various Google search services you've used (Search, Shopping, News, Maps, Google Flights, and Hotels). The Apple version will of course pull information from Apple apps like Mail, Calendar, Photos, and Notes. Google's Gemini head Josh Woodward gave an example of how Personal Intelligence had helped him. We needed new tires for our 2019 Honda minivan two weeks ago. Standing in line at the shop, I realized I didn't know the tire size. I asked Gemini. These days any chatbot can find these tire specs, but Gemini went further. It suggested different options: one for daily driving and another for all-weather conditions, referencing our family road trips to Oklahoma found in Google Photos. It then neatly pulled ratings and prices for each. As I got to the counter, I needed our license plate. Instead of searching for it or losing my spot in line to walk back to the parking lot, I asked Gemini.
It pulled the seven-digit number from a picture in Photos and also helped me identify the van's specific trim by searching Gmail. Just like that, we were set. Hallucinations are one of the greatest dangers with AI systems, and the risks obviously increase if they are trying to extrapolate from your own personal data. Google says the new feature allows you to see exactly what assumptions it is making and offers you the chance to verify or correct those. You also won't have to guess where an answer comes from: Gemini will try to reference or explain the information it used from your connected sources so you can verify it. If it doesn't, you can ask it for more information. And if a response feels off, just correct it on the spot ("Remember, I prefer window seats"). You'll also have the option of telling it to give you a new answer without personalization. Google says that you have to opt in to Personal Intelligence and that you decide which apps to include. Connecting your apps is off by default: you choose to turn it on, decide exactly which apps to connect, and can turn it off anytime. Additionally, the company says that your data never leaves the Google ecosystem. That may or may not reassure you, but obviously the Apple version will only access data sitting within your apps and inside your iCloud account. Our sister site 9to5Google provides an 8-minute video which runs through all of the new features. For our purposes, assume everything run by Google is instead run by Apple, as that will be the reality for the Gemini-powered Siri. It will access your Apple apps, use your iCloud data, and run either on-device or on Apple's Private Cloud Compute servers.

[13]

Gemini rolling out 'Personal Intelligence' beta that uses Gmail & your Google apps data

Google's goal with Gemini is to make a "personal, proactive, and powerful" assistant. The Gemini app today is adding "Personal Intelligence" in beta. This lets Gemini use the data Google already has about you to "supercharge" and personalize responses. Personal Intelligence can retrieve specific details from text, photos, or videos in your Google apps to customize Gemini responses. This includes Google Workspace (Gmail, Calendar, Drive, etc.), Google Photos, your YouTube watch history, and Google's various search services (Search, Shopping, News, Maps, Google Flights, and Hotels). Along with "reasoning across complex sources," Gemini will "provide uniquely tailored answers." This is a step beyond referencing past chats and something the company has been working towards since last year with the experimental "Personalization" model. Personalization is only leveraged when Gemini thinks it will be helpful to the response. Gemini will show its thinking process with an "Answer now" button that replaces the current "Skip." The response will "reference or explain the information it used from your connected sources so you can verify it." You can correct Gemini about your preferences at any time, as well as regenerate responses without personalization enabled. There's also the ability to use temporary chats. On the privacy front, Personal Intelligence is off by default and you can choose specific apps to connect. Google explains how "Gemini doesn't train directly on your Gmail inbox or Google Photos library." Rather, it trains on "limited info, like specific prompts in Gemini and the model's responses" to improve the capability. In the minivan example, "photos of [the] road trip, the license plate picture in Photos and the emails in Gmail are not used to train the model." They are only referenced to deliver the reply. We train the model with things like my specific prompts and responses, only after taking steps to filter or obfuscate personal data from the conversation I have with Gemini.
In short, we don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it. The company says a "key differentiator" is how this sensitive data "already lives at Google securely" so it doesn't need to be sent "elsewhere to start personalizing your experience." Google says Personal Intelligence also excels at recommending books, shows, clothes, and travel. You can also tap the new "For you" chip on the homepage to see personalized prompt suggestions. As a guardrail, Google will "avoid making proactive assumptions about sensitive data like your health." However, Gemini "will discuss this data with you if you ask." Personal Intelligence is available in beta and Google touts extensive testing to minimize mistakes. However, it still cautions about "inaccurate responses" or "over-personalization" wherein the "model makes connections between unrelated topics." You can give these responses a thumbs down. Specific "struggles" include timing and nuance "particularly regarding relationship changes, like divorces, or your various interests." For instance, seeing hundreds of photos of you at a golf course might lead it to assume you love golf. But it misses the nuance: you don't love golf, but you love your son, and that's why you're there. If Gemini gets this wrong, just correct it ("I don't like golf"). Starting today, Google AI Pro and Ultra subscribers with personal accounts (not Workspace) in the US will get the option to enable Personal Intelligence. Access is rolling out over the coming week. If you're not prompted from the homepage, you can turn it on in the Gemini app under Settings > Personal Intelligence. It's available on Android, iOS, and web with all Gemini 3 models today, including "Fast." In the future, it will come to the free tier and more countries, as well as AI Mode in Search "soon."

[14]

Google Gemini just pulled further ahead of ChatGPT -- here's what it can now do with your Gmail and Photos

Google keeps finding new ways to make Gemini more useful, but this update might be the most meaningful yet. Instead of a flashier model or a redesigned interface, Google is tackling the problem users have complained about since the earliest chatbots: AI that doesn't actually understand you. Starting today, Google is rolling out Personal Intelligence in the Gemini app for U.S. users. The new beta feature lets Gemini reason across your Google ecosystem -- including Gmail, Google Photos, Search history and YouTube history -- to deliver responses that are more personalized, proactive and context-aware. Powered by Gemini 3, the assistant can now connect information across multiple apps at once, surfacing insights you didn't explicitly ask for -- but that make sense in the moment. You don't have to tell Gemini where to look; it understands context automatically, turning everyday questions into smarter, more relevant answers.

What Personal Intelligence actually does

Once enabled, Personal Intelligence allows Gemini to understand relationships across your data -- emails, photos, searches and videos -- without you having to specify where to look. For example, a question about a purchase can pull details from Gmail, product reviews from Search, and past preferences inferred from YouTube history. Similarly, a travel question can factor in past trips found in Photos, interests reflected in searches and bookings from email. Now, Gemini can retrieve exact details -- like numbers, dates or locations -- from your personal data and combine them with broader reasoning. Google describes this as Gemini being able to "understand context without being told where to look." In other words, the assistant starts acting less like a search box and more like a personal aide.

A real-world example: the minivan test

During the pre-briefing, Google shared a practical example that highlights how far this goes. While standing in line at a tire shop, a user asked Gemini for the tire size for their 2019 Honda minivan. Gemini didn't just return generic specs -- it suggested different tire options based on family road trips found in Google Photos, pulled ratings and prices, then retrieved the license plate number from a photo and identified the van's trim using emails in Gmail. Instead of digging through apps or sifting through tabs, Gemini got the info in one conversation -- and in seconds. It's that kind of cross-app reasoning that no other AI assistant has ever fully delivered -- until now.

How Gemini compares to other AI assistants

Plenty of chatbots have the ability to answer questions and even find solutions, but fewer can answer questions about your life without manual setup. Gemini has technically been able to access Google apps for a while, but Personal Intelligence changes how that data is used. Instead of one-off retrievals, Gemini now reasons across sources to offer proactive suggestions -- like book recommendations, travel ideas or shopping advice -- grounded in your habits and interests. Google says users are already using it to:

* Get tailored recommendations for books, shows and clothes
* Plan trips that avoid tourist traps
* Discover hobbies and experiences that align with their lifestyle

This is also why Google believes its approach is hard to replicate. Your data already lives inside Google's ecosystem -- Gemini doesn't need you to upload it or connect third-party tools to get started.

Privacy and control: what Google says

Unsurprisingly, privacy is the biggest question on the minds of many users, and one Google says it takes very seriously, emphasizing control. Personal Intelligence is off by default and enabled only if you choose to connect apps. It's customizable app by app (you can connect Gmail without Photos, for example). And, even when turned on, Gemini won't personalize every response. It only uses Personal Intelligence when it determines it will be helpful. You can also:

* Regenerate a response without personalization
* Use temporary chats that don't reference personal data
* Turn off or delete past Gemini chats at any time
* Give feedback if Gemini over-personalizes or makes incorrect assumptions

Google also says Gemini does not train directly on your Gmail inbox or Photos library. Instead, personal data is referenced to answer your question, while training focuses on prompts and responses after personal information is filtered or obfuscated. In short: Gemini learns how to find things -- not what your things are.

How to try it

Personal Intelligence is rolling out starting today to:

* Google AI Pro and AI Ultra subscribers
* U.S. users
* Personal Google accounts only (not Workspace, business or education accounts)

Once enabled, Personal Intelligence works across web, Android, iOS and all Gemini models in the model picker. Google says it plans to expand to more countries and the free tier over time, and Personal Intelligence is also coming to Search in AI Mode soon.

How to turn it on:

* Open Gemini
* Tap Settings
* Select Personal Intelligence
* Choose which apps to connect (Gmail, Photos, YouTube, Search)

The takeaway

Personal Intelligence is Google's clearest attempt yet to support you across every application. By letting Gemini reason across the digital footprint you already have, Google is pushing AI from "smart" to situationally aware. If it works as promised, this could be the feature that finally makes an AI assistant feel less like a tool and more like a companion -- without forcing users to hand over control. Users should keep in mind that this update is still a beta, and Google admits mistakes and over-personalization will happen. But this launch makes one thing clear: Gemini is continuing to flex its dominance in the AI race.

[15]

Gemini can now remember your life, not just answer questions

The feature is now live for US Google AI Pro and AI Ultra subscribers, with plans to expand further. Google is finally tackling a common frustration with AI assistants: their tendency to forget personal details. Starting today, Gemini is getting a memory upgrade called Personal Intelligence. This new feature lets the AI access your Google apps, like your emails and photos, so it can give answers that feel much more relevant to you. This new feature is now rolling out in beta. Instead of making you jump between Gmail, Photos, Search, and YouTube, Gemini can now bring all that information together. If you choose to opt in, Gemini can access your Google apps and use that information in real-time, making your personal data more useful. Up till now, Gemini could fetch things -- an email here, a photo there -- but it didn't really think across them. Personal Intelligence changes this by addressing what Google calls the "context packing problem." In short, your life produces far more data than even large AI models can process at once. Google's answer is a new system that selects the most relevant emails, images, or searches and delivers them to Gemini only when needed. This is powered by Gemini 3, Google's most advanced model family yet. It brings improved reasoning, stronger tool use, and a massive one-million-token context window. That still isn't enough to swallow an entire inbox or photo library, so Google uses context packing to surface just the right details at the right moment. Google gives a simple example to show how this works. If you ask Gemini about tire options for your car, it won't just list specifications. It can find your exact car model from Gmail, get tire sizes from your Photos, and consider your road-trip habits before making suggestions. If you're planning a trip, Gemini can check your past travel emails, saved places, and Photos to recommend places that match your real interests instead of just tourist spots. 
It also works across text, images, and videos. Gemini might pull a license plate number from a photo, confirm a trim level from an email receipt, then combine that with Search results, all in one response. Personal Intelligence is turned off by default. You decide which apps to connect and can disconnect them whenever you want. You can also get answers without personalization. Gemini tries to show where it got its information, so you know the source. More importantly, Google says Gemini doesn't train on your Gmail or Photos data. Those sources are referenced to answer questions, not absorbed into the model. Training focuses on prompts and responses, with personal data filtered or obfuscated first. Google is open about the current issues. Sometimes Gemini might get too personal, mix up timelines, or misunderstand relationships. For example, if Gemini thinks you love golf but you're just a supportive parent, you should correct it, and Google encourages this feedback. Personal Intelligence will start rolling out on January 14 to Google AI Pro and AI Ultra subscribers in the US, with plans to expand later. It works on the web, Android, and iOS, and will soon be available in Search's AI Mode. For now, it's only for personal accounts, not Workspace users.
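Google hasn't published how its context-packing system works, but the general idea described above (choosing only the most relevant emails, images, or searches so they fit inside a model's context window) can be illustrated with a toy sketch. The Python below is a hypothetical illustration, not Google's implementation: it assumes each candidate item already carries a relevance score and a token count from some upstream step, and it greedily packs the highest-scoring items into a fixed token budget. The names `Item` and `pack_context` are made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """A hypothetical piece of personal data (an email, photo, or search)."""
    source: str       # e.g. "gmail", "photos", "youtube"
    text: str
    relevance: float  # assumed to come from an upstream ranking step
    tokens: int       # assumed precomputed token count


def pack_context(items: list[Item], budget: int) -> list[Item]:
    """Greedily select the most relevant items that fit within `budget` tokens.

    Items are visited in descending relevance order and kept whenever they
    still fit, so a smaller, lower-ranked item can fill leftover space.
    """
    packed: list[Item] = []
    used = 0
    for item in sorted(items, key=lambda i: i.relevance, reverse=True):
        if used + item.tokens <= budget:
            packed.append(item)
            used += item.tokens
    return packed


if __name__ == "__main__":
    candidates = [
        Item("gmail", "Service receipt: 2019 Honda minivan, trim level", 0.9, 120),
        Item("photos", "Road trip to Oklahoma", 0.7, 300),
        Item("photos", "Photo of rear license plate", 0.8, 80),
        Item("youtube", "Cooking video watch history", 0.1, 500),
    ]
    # Keeps the Gmail receipt, the license-plate photo, and the road-trip
    # photo (500 tokens total); the large, low-relevance YouTube item is dropped.
    for item in pack_context(candidates, budget=500):
        print(item.source, "-", item.text)
```

Greedy selection by relevance is only a simple heuristic; a real system could instead trade relevance off against size (a knapsack-style problem) or re-rank items per query, but how Gemini actually does this isn't public.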

[16]

Gemini gets its biggest upgrade yet 'Personal Intelligence' that uses your Gmail, Photos, Search and YouTube history - and it could be our first glimpse of the new Siri in iOS 27

Personal Intelligence, Gemini's biggest upgrade yet, is launching today, and it's one of the most significant AI innovations we've seen to date. The new Google AI feature allows Gemini to tap into Google apps and pull from your personal data to get extra context for your queries. Think of Personal Intelligence as a major next step for AI chatbots, allowing Gemini to securely access information from apps like Google Search, Gmail, Google Photos, and YouTube. Yeah, Gemini can already tap into apps, but this is different, and it sounds genuinely incredible. 'How is it different?' I hear you ask. Well, imagine asking Gemini for help with a car problem and the AI being able to directly access your purchase history from Gmail to know which car you own, access Photos to see when you last took it in for servicing, and access YouTube to see what solutions you've looked for already. Personal Intelligence in Gemini sounds like the AI personal assistant lots of us have been waiting for, and if it lives up to Google's billing, then it could be a monumental moment for consumer AI. Gemini Personal Intelligence starts rolling out today in beta to Google AI Pro and AI Ultra subscribers in the US. Google says the feature will be expanding to more countries and free users soon, as well as Search in AI Mode. It feels like a new AI feature is heralded as the 'next big thing' almost daily, but the examples Google has shared with the launch of Gemini's Personal Intelligence are genuinely impressive and exciting. One example shared was the ability for Gemini to find out what the best tyres are for the user's car without even knowing the model. Then Gemini could grab the licence plate number from Photos without even being instructed where to look. Another example given was Gemini's new ability to create an itinerary for an upcoming trip like no AI chatbot has been able to do before.
Instead of just linking the most popular places, Personal Intelligence uses all of the information it knows about you to suggest specific restaurants, things to do, and even what board games to play on the road. Personal Intelligence looks like it could be Google's answer to the Siri Apple promised users back at WWDC 2024. Now, following the announcement of Apple and Google's major AI partnership, I wouldn't be surprised if this is our first glimpse at Siri in iOS 27, just without the Apple branding. Gemini is set to power the next generation of Siri, albeit without any Google branding. Just as Gemini features often launch on Pixel before a wider Android rollout, this could be an initial trial of Siri's upcoming capabilities before they get repackaged for iPhone. When you hear about new AI features that tap into everything you do online, it's absolutely normal to question your privacy. But Google promises Personal Intelligence has been built with privacy at its core, allowing you to decide exactly how Gemini connects to the apps in question. Google says Personal Intelligence is off by default, and Gemini only accesses the data to answer your specific requests. Because Personal Intelligence taps into other Google services, everything remains with Google, with no sensitive information sent to third parties. Everything Personal Intelligence does will also come with a source, allowing you to see exactly how Gemini came up with the specific answer that it provides. This means if you realize that Gemini has access to niche personal information you didn't even know you had shared online, it can show exactly where it came from. Personal Intelligence is also restricted by guardrails for sensitive topics, meaning Gemini will only seek information from sensitive data like health if you allow it to do so. It's a very neat feature, and Google seems to be focused on making sure consumers feel safe sharing more personal data with Gemini than ever before.
Google says Gemini won't train directly on your Gmail inbox or Google Photos library, so you can have peace of mind that your privacy remains secure. So, you've got a glimpse of what Gemini is now able to offer thanks to Personal Intelligence, and you want to start using it straight away. Well, the good news is Personal Intelligence begins rolling out to eligible users in the US today. Personal Intelligence will work across Web, Android, and iOS, and is currently only available to personal Google accounts. To enable it, open Gemini, go to Settings, select Personal Intelligence, and choose which apps to connect.

[17]

Google connects Gemini to users' emails and photos in push to build a personal assistant | Fortune

For example, the feature allows users to ask Gemini to find specific photos from past trips, gather information from recent emails, or pull together information scattered across different Google services. For instance, the assistant could retrieve details like license plate numbers from photos or plan a family vacation by analyzing past travel patterns and family interests stored across Gmail and Photos. The new feature, which is being released in beta, will be rolled out on Wednesday to Google AI Pro and AI Ultra subscribers in the U.S., with wider availability to eligible subscribers expected within the week. Once enabled, Personal Intelligence will work across Web, Android, and iOS and through all of the models in the Gemini model picker. The company also plans to eventually roll the feature out in Search's AI Mode. Google said in a blog post announcing the feature that the new capability "has two core strengths: reasoning across complex sources and retrieving specific details from, say, an email or photo to answer your question. It often combines these, working across text, photos, and video to provide uniquely tailored answers." The move is part of Google's attempt to create an AI system that functions like a personal executive assistant, something industry leaders, including OpenAI CEO Sam Altman, Microsoft founder Bill Gates and Mustafa Suleyman, the DeepMind cofounder who now serves as CEO of Microsoft's AI efforts, have long argued will be AI's most valuable consumer use case. By giving Gemini access to a user's Google ecosystem, the bot can automatically understand a user's context and preferences without the need to specifically include all those details in the prompt. Google already benefits from having extensive data on users -- such as search history, emails, and calendars -- which positions the company well to deliver this personalized experience with Gemini. If the feature gains significant adoption, it could strengthen Google's competitive position.
All of the leading AI labs are currently working on AI systems that have some understanding of an individual user's preferences and can handle tasks autonomously. But the data advantages that Google has through its ecosystem of popular products and apps may be difficult for others to match. Microsoft has been expanding its Copilot platform with features including long-term memory, integration with services like Google Drive and Gmail, as well as introducing 'Actions' that allow the assistant to book tickets and make reservations on behalf of users. Anthropic also recently launched its Claude Cowork, a file-managing AI agent designed for non-technical users that works proactively within a user's files. Allowing AI agents this kind of deep access, however, presents data privacy and security issues. For example, in the case of Personal Intelligence, connected accounts could expose sensitive information like personal details from email correspondence, financial data from banking notifications, or location histories from photos and Maps activity. There's also the risk of unauthorized access if a user's Google account is compromised, potentially giving bad actors access to information aggregated from multiple services. Google said the capability will be off by default to give users more control over privacy and security. Even when a user connects their apps to Gemini, the company said Personal Intelligence won't be used for every response but only when Gemini determines that doing so will be helpful. Google is also asking users to give feedback about personalization via the thumbs-down button. The company is also introducing guardrails for sensitive topics, with Gemini trained to avoid making proactive assumptions about sensitive data, such as a user's health details.

[18]

Google Gemini Is About to Get to Know You Way Better

Google says Personal Intelligence will come to free users and other countries "soon." Since the days when Google Gemini was still called Bard, it's been able to connect with the company's other productivity apps to help pull context from them to answer your questions -- but you still had to connect those apps to the AI manually using extensions. And even after bringing your apps together, you usually had to tell Gemini where to look for your data to get much use out of its abilities. For instance, if you wanted it to pull information from your emails, you might have started a prompt with "Search my email." Now, Google is making it easier to connect Gemini to its various services, and adding "reasoning" when pulling context from across your Google Workspace. It's calling the feature "Personal Intelligence." Rolling out in beta for paid subscribers in the U.S. today (and coming to other countries and free users "soon"), Personal Intelligence is an opt-in feature that currently works with Gmail, Photos, YouTube, and Search, all of which you can connect in one tap while setting up the feature. That alone makes it more convenient than a collection of extensions, but there are supposedly a few upgrades to general usability as well. The biggest is that Gemini will apparently be able to "learn" about you from a grab bag of sources all at once, without you having to specify where to look, and use that information to answer your questions. In an example, Google has a user say "I need to replace the tires for my car. Which ones would you suggest for me?" The bot then runs through multiple reasoning steps, pulling from all the data available to it, to find out what car the prompter drives and which tires would be best for it in the conditions the prompter tends to drive in. Specifically, in the example, it references actual vacations the prompter had taken in the past, using Google Photos data, while also using Gmail data to help the prompter find their car's specific trim.
This can take a while, which is why there's an "Answer now" button next to the reasoning progress bar to stop the bot from getting stuck. In the example, it took about 10 seconds for the AI to generate a response. Google is promising its typical Workspace privacy guarantees with Personal Intelligence, saying "because this data already lives at Google securely, you don't have to send sensitive data elsewhere to start personalizing your experience." In other words, it's not going to move the needle on how much data about you Google can access, but at least it'll prevent you from having to connect your Workspace to third parties. The company also promises that data Personal Intelligence pulls from sources like Gmail won't be used to train Gemini, but that "specific prompts and responses" might be, at least after personal data has been filtered out. Google also says, "Gemini will try to reference or explain the information it used from your connected sources so you can verify it," although we don't have any examples of that in action yet. It's worth keeping an eye out, though, if you're worried about hallucinations. To that end, the company does suggest asking Gemini for more information about what it used to come to its answers if you're unsatisfied, and to correct it "if a response feels off," perhaps by saying something like "Remember, I prefer window seats." Theoretically, Gemini will then remember this for next time, using its existing chat history feature. If you're continually unsatisfied, you can hit the thumbs down button on responses to provide feedback. Google says that eligible users should see an invitation to try Personal Intelligence on the Gemini home screen as soon as it's rolled out to them, but if you don't, you can turn it on manually by following these steps: And that's it! Remember, Personal Intelligence is off by default and is only available for paid subscribers for now, so it may be some time until you can actually use it. 
Google also stresses that Gemini might not personalize every response, which saves time on simpler requests. The company also said Personal Intelligence for AI Mode in Google Search is planned but does not yet have a set release date.

[19]

Gemini Just Made Things 'Personal'

Gemini's Personal Intelligence is officially live after having been announced last week. Available first to Google AI Pro and AI Ultra subscribers, Personal Intelligence brings a more personal AI experience to Gemini on the web, Android, and iOS devices. For a complete rundown of what Personal Intelligence is, read our previous post. To sum it up, if you grant access to Gemini, the AI will gather a broader picture of you and be better equipped with data to suggest things. By allowing it to comb Photos, Search, and many other Google services, you'll get a more "proactive and personalized" experience from Gemini. Personal Intelligence is limited to beta for the time being, available first to paid subscribers.

[20]

Google introduces Personal Intelligence personalization tool for Gemini - SiliconANGLE

Google LLC today debuted a tool called Personal Intelligence that will enable consumers to tailor its Gemini chatbot to their preferences. The tool will initially be available to a limited number of paying users in the U.S. Google plans to expand access over time. Consumers can enable Personal Intelligence through a new option in the Gemini settings menu. The tool gives the chatbot access to information that the user keeps in other Google services such as Gmail, Google Photos and YouTube. Gemini draws on that data to refine its prompt responses. If the chatbot receives a request for recipe suggestions, it might analyze restaurant reservations in the user's inbox and customize its output accordingly. Gemini can also make use of multimodal data. For example, it might pull photos of the user's car from Google Photos to answer maintenance-related questions. "We needed new tires for our 2019 Honda minivan two weeks ago," Google executive Josh Woodward wrote in a blog post. "I asked Gemini. These days any chatbot can find these tire specs, but Gemini went further. It suggested different options: one for daily driving and another for all-weather conditions, referencing our family road trips to Oklahoma found in Google Photos." Gemini lists the personal data sources that it uses in prompt responses to help consumers verify the information. According to the company, the chatbot limits its use of personal data that pertains to sensitive topics such as the user's health. However, Gemini can incorporate that information into answers if it's specifically asked to do so. Google equipped Personal Intelligence with several privacy controls. The feature is disabled by default and users who enable it can customize which Google services it accesses. Additionally, a feature called temporary chats makes it possible to turn off personalization for specific chatbot sessions. 
Google will use Gemini's prompt responses to train its artificial intelligence models, but only after filtering any sensitive data they contain. For example, Personal Intelligence can remove the user's license plate number from an answer to a car-related question. Users can also go a step further by deleting Gemini's chat history. Gemini 3, the large language model series that powers the chatbot, can process prompts with up to 1 million tokens. The challenge Google encountered while developing Personal Intelligence is that the user file repositories the tool is asked to analyze often contain more than 1 million tokens. According to the company, its engineers overcame that obstacle using a method called context packing. The technique enables Gemini to find and use only the specific subset of a user dataset that is relevant to a given prompt.
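Google has not published how its context packing works, so the following is only a generic sketch of the idea described above: rank pieces of a user's data by relevance to the prompt and keep the most relevant ones that fit within a fixed token budget. The `Snippet` type, the `pack_context` function, the keyword-overlap relevance score, and the word-count token estimate are all hypothetical stand-ins (a real system would use a proper retriever and tokenizer), not Google's implementation.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str  # hypothetical label, e.g. "gmail" or "photos"
    text: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def relevance(prompt: str, text: str) -> float:
    # Toy relevance score: fraction of prompt words that also appear in the snippet.
    prompt_words = set(prompt.lower().split())
    if not prompt_words:
        return 0.0
    return len(prompt_words & set(text.lower().split())) / len(prompt_words)


def pack_context(prompt: str, snippets: list[Snippet], budget: int) -> list[Snippet]:
    # Score each snippet once, then sort from most to least relevant.
    scored = sorted(
        ((relevance(prompt, s.text), s) for s in snippets),
        key=lambda pair: pair[0],
        reverse=True,
    )
    packed, used = [], 0
    for score, snippet in scored:
        if score == 0.0:
            break  # list is sorted, so every remaining snippet is irrelevant
        cost = approx_tokens(snippet.text)
        if used + cost <= budget:  # greedily keep snippets that still fit
            packed.append(snippet)
            used += cost
    return packed


snippets = [
    Snippet("gmail", "service receipt for honda minivan tires trim EX"),
    Snippet("photos", "road trip to oklahoma with the minivan"),
    Snippet("youtube", "cooking video pasta recipe"),
]
print([s.source for s in pack_context("which tires for my minivan", snippets, budget=10)])
# → ['gmail']
```

The greedy pass is a simple heuristic rather than an optimal packing: with a budget of 10, the Photos snippet (7 tokens) no longer fits after the Gmail snippet (8 tokens), and the unrelated YouTube snippet is never considered.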

[21]

Gemini Introduces Personal Intelligence to Connect Gmail, Photos and Other Google Apps | AIM

The feature can be used for recommendations on travel, entertainment and shopping, based on users' past activity. Google has introduced Personal Intelligence, a new feature for its AI assistant Gemini, which allows users to connect their Google apps -- such as Gmail, Google Photos, YouTube, and Search -- to receive more context-aware responses. Access to Personal Intelligence will roll out over the next week to eligible Google AI Pro and AI Ultra subscribers in the US. It will be available on the web, Android, and iOS, and is limited to personal Google accounts. Google said it plans to expand access to more countries and eventually to the free tier, and to bring the feature to AI Mode in Search. Personal Intelligence is designed to help Gemini retrieve and reason across personal information stored in connected apps, while giving users control over what data is accessed. It allows Gemini to answer questions by pulling specific details from emails, photos or searches, and combining information with general web knowledge. The system can also explain where information was sourced from, the company said. The feature is disabled by default and can be enabled or disabled at any time. "The best assistants don't just know the world; they know you and help you navigate it," Josh Woodward, vice president of Google Labs, Gemini and AI Studio, said in a statement. "You control exactly which apps to link, and each one enhances the experience." Woodward shared an example in which Gemini identified the correct tyre size for his family's minivan, suggested tyre options based on travel history found in Photos, retrieved the vehicle's license plate number from an image, and confirmed the trim level using Gmail. Google said the feature can also be used for recommendations related to travel, entertainment and shopping, based on a user's past activity across connected apps. 
Regarding privacy, the company stated that Personal Intelligence does not train directly on users' Gmail inboxes or Photo libraries. "We don't train our systems to learn your license plate number; we train them to understand that when you ask for one, we can locate it," Woodward said. Users can opt out of personalisation for specific chats, use temporary chats or disconnect apps at any time. Google acknowledged that the beta may produce inaccurate or overly personalised responses and encouraged users to provide feedback. The company said it has guardrails to avoid making proactive assumptions about sensitive data, such as health information.

[22]

Gemini gains Personal Intelligence to synthesize data from Gmail and Photos

Google launched a beta feature called Personal Intelligence in its Gemini app on Wednesday, enabling the AI assistant to tailor responses by reasoning across users' Gmail, Photos, Search, and YouTube history data. Prior to this update, Gemini could retrieve information from these connected Google apps. The new capability allows it to analyze data across multiple sources simultaneously, delivering proactive results. For instance, it links an email thread to a relevant video watched by the user. Google explains that this process enables Gemini to grasp contextual details without explicit instructions on data locations. Personal Intelligence activates only after users opt in by connecting their Google apps to Gemini, as it remains off by default. This setup respects user preferences, given concerns about AI access to personal photos and YouTube history. Once enabled, Gemini engages Personal Intelligence selectively, applying it solely when the system deems it beneficial for the query. Josh Woodward, Vice President of the Gemini app, Google Labs, and AI Studio, detailed the feature in a blog post. He stated, "Personal Intelligence has two core strengths: reasoning across complex sources and retrieving specific details from, say, an email or photo to answer your question." Woodward added, "It often combines these, working across text, photos and video to provide uniquely tailored answers." These strengths facilitate responses that integrate diverse data types for precision. Woodward provided a practical example from his experience at a tire shop. While waiting in line, he could not recall his car's tire size. Standard AI chatbots might offer general tire size information, but Gemini exceeded this by delivering a customized suggestion. It identified family road trip photos stored in Google Photos and recommended all-weather tires accordingly. 
In the same interaction, when Woodward forgot his license plate number, Gemini extracted it directly from a photo in his Photos library, demonstrating retrieval from visual content. Beyond automotive scenarios, Woodward reported Gemini delivering strong recommendations in various domains. These include books, shows, clothes, and travel options. Recently, it assisted with spring break planning. By examining family interests and records of past trips from Gmail emails and Google Photos, Gemini avoided popular tourist areas. Instead, it proposed an overnight train trip paired with particular board games suitable for the journey, tailoring the itinerary to documented preferences. Google implemented safeguards for sensitive information. Gemini refrains from proactive inferences involving topics like health data. It processes such details only upon direct user request. Separately, Google confirms that Gemini undergoes no direct training on individual Gmail data, preserving user privacy in model development.

[23]

Google Wants to Give Gemini Users a More Personalized Experience - Phandroid

Google's focus on making Gemini (and AI in general) a more personal experience for users has pretty much stayed on track over the past couple of years, and the company most recently announced the rollout of its new "Personal Intelligence" feature which arrives for Android, iOS and web platforms. Once activated, Personal Intelligence integrates with every model available within Gemini, and taps into users' different apps like Gmail, YouTube and Google Photos to give more tailored answers to queries. Part of Google's official announcement reads: "Personal Intelligence securely connects information from apps like Gmail and Google Photos to make Gemini uniquely helpful. If you turn it on, you control exactly which apps to link, and each one supercharges the experience. It connects Gmail, Photos, YouTube and Search in a single tap, and we've designed the setup to be simple and secure." Users can expect to see these capabilities arrive in Google Search's AI Mode shortly. It should be noted that it's still in its beta phase, so the feature is restricted to personal Google accounts and is not yet available for Workspace business, enterprise, or education users. It's also turned off by default, so users can choose which specific apps they want to connect to the feature. Additionally, Gemini will continue to refer to past conversations in order to guide its responses, although users can opt out of this at any time by disabling the "Past Gemini chats" setting or by manually managing and deleting their chat history. Personal Intelligence launches on January 14, 2026 for Google AI Pro and AI Ultra subscribers in the United States. While the initial release is targeted at premium users, Google expects the rollout to reach all eligible accounts within the week, with plans to expand to the free tier and additional countries throughout the year.

[24]

Google Just Gave Gemini "Personal Intelligence"