Google Search to Introduce AI-Generated Image Labels: A Step Towards Combating Deepfakes

14 Sources


[1]

Google Search Will Soon Show You AI-Generated Labels For Images: Could It End Deepfakes? - News18

Google is bringing new AI tools to detect and label images on the internet that were generated or edited using AI. Here's what people can expect. Google's AI push in 2024 is getting another big boost as the company looks to thwart the dangers posed by deepfakes and AI-generated content online. AI Overviews already give you summarised, AI-based results in Search, but Google realises the perils of a technology that can easily be used to dupe people and manipulate their thinking. To keep this from becoming a long-term problem, Google will start showing labels on AI-generated images and on images edited using AI tools. Google plans to bring the option to YouTube as well, but that will happen at a later date. For now, the feature will work in Search, Google Lens and the new Circle to Search option. Google has a clear plan for how the images will be labelled and how it will use its back-end technology to pick them out from the billions of images it indexes. Google will identify these images using Coalition for Content Provenance and Authenticity (C2PA) metadata, which gives granular details about the visual content, its history and the software used to create or edit it. Knowing these details should ideally make labelling the images a cakewalk for Google, but there is a big challenge. Search covers billions of images, and not all of them carry C2PA metadata. More importantly, this metadata can be removed or made unreadable, which complicates Google's mission to tackle the deepfake image problem. The company says that if an image contains C2PA metadata, users can open the About this image section to see the details. The good news is that companies like OpenAI, Amazon, Microsoft and Adobe have joined the C2PA bandwagon.
However, most content online still lacks C2PA metadata, and that imbalance works against the company's strategy for labelling AI-generated photos. As with any technology, Google will have to adapt to the evolving nature of AI-generated content and make sure people know which images are original and which have been edited.
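The C2PA metadata described above records an image's history and the software used to create or edit it. As a rough illustration only, the Python sketch below renders such a history as readable lines, oldest step first. The `Action` record and the example tool names are hypothetical simplifications: a real C2PA manifest is a signed binary structure whose actions assertion carries fields such as `action`, `when` and `softwareAgent`, and this is not a description of how Google's About this image panel works.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """Hypothetical, simplified stand-in for one entry in a C2PA actions list."""
    action: str          # e.g. "c2pa.created" or "c2pa.edited"
    software_agent: str  # the camera firmware or program that performed the step
    when: str            # ISO 8601 timestamp


def describe_history(actions: list[Action]) -> list[str]:
    """Turn recorded actions into one readable line per step, oldest first."""
    # Timestamps share one ISO 8601 format, so sorting the strings sorts by time.
    ordered = sorted(actions, key=lambda a: a.when)
    return [f"{a.when}: {a.action} by {a.software_agent}" for a in ordered]


history = [
    Action("c2pa.edited", "Example Photo Editor 2.3", "2024-09-17T11:30:00Z"),
    Action("c2pa.created", "Example Camera Firmware 1.0", "2024-09-17T10:00:00Z"),
]
for line in describe_history(history):
    print(line)
```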

[2]

Google searches will now detect origin of AI-manipulated images

Google moves forward with C2PA AI labeling, the initiative's first big test. Google is expanding efforts to properly label AI-generated content, updating its in-house "About This Image" tool with a global standard for detecting the origins of an AI-edited image. The new label was formulated as part of Google's work with the global Coalition for Content Provenance and Authenticity (C2PA). Members of the C2PA have committed to developing and adopting a standardized AI certification and detection process, enabled by a verification technology known as "Content Credentials." Not all C2PA members, which include Amazon, Meta, and OpenAI, have implemented the authentication standards, however. Google is taking the first step among key players, integrating the C2PA's new 2.1 standard into products like Google Search and eventually Google Ads. (The "About This Image" prompt is found by clicking the three vertical dots above a photo in search results.) This standard includes an official "Trust List" of devices and technology that can help vet the origin of a photo or video through its metadata. "For example, if the data shows an image was taken by a specific camera model, the trust list helps validate that this piece of information is accurate," Laurie Richardson, Google vice president of trust and safety, told The Verge. "Our goal is to ramp this up over time and use C2PA signals to inform how we enforce key policies." After joining the C2PA in May, TikTok became the first video platform to implement the C2PA's Content Credentials, including an automatic labeling system that reads a video's metadata and flags it as AI-generated. With the launch of Content Credentials on Google platforms, YouTube is set to follow in its footsteps. Google has been vocal about widespread AI labeling and regulation, especially in its efforts to curb the spread of misinformation.
In 2023, Google launched SynthID, its own digital watermarking tool designed to help detect and track AI-generated content made using Google DeepMind's text-to-image generator, Imagen. Earlier this year it introduced (limited) AI labeling mandates for YouTube videos, and it has committed to addressing AI-generated deepfake content in Google Search. In February, the company joined the C2PA steering committee, a group that includes other major industry players and even news organizations like the BBC.
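The "Trust List" idea quoted above can be pictured as a lookup: metadata claiming a particular camera model only counts if it was signed by someone the list vouches for. The Python sketch below is a simplification under stated assumptions: the trust list is a hypothetical dictionary of certificate fingerprints, the certificate bytes are placeholders, and real C2PA validation is expected to check certificate chains against trusted anchors rather than matching a flat fingerprint.

```python
import hashlib

# Hypothetical trust list: SHA-256 fingerprints of signing certificates that
# belong to vetted camera makers and software vendors. The bytes below are
# placeholders, not real certificates.
TRUST_LIST = {
    hashlib.sha256(b"example-camera-maker-certificate").hexdigest(): "Example Camera Co.",
}


def check_claim(claimed_device: str, signer_certificate: bytes) -> str:
    """Accept a metadata claim only if its signer appears on the trust list."""
    fingerprint = hashlib.sha256(signer_certificate).hexdigest()
    signer = TRUST_LIST.get(fingerprint)
    if signer is None:
        return f"unverified: claim '{claimed_device}' has an unknown signer"
    return f"verified: claim '{claimed_device}' signed by {signer}"


# Anyone can write "Example Model X" into metadata; only a trusted signer makes it credible.
print(check_claim("Example Model X", b"example-camera-maker-certificate"))
print(check_claim("Example Model X", b"self-made-certificate"))
```

The design point is that the claimed device name is never trusted on its own; only the signer's identity decides the outcome.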

[3]

AI-generated and edited images will soon be labeled in Google Search results

Google is finally doing something about AI images, but it's not quite enough. Google has announced that it will begin rolling out a new feature to help users "better understand how a particular piece of content was created and modified". This comes after the company joined the Coalition for Content Provenance and Authenticity (C2PA), a group of major brands trying to combat the spread of misleading information online, and helped develop the latest Content Credentials standard. Amazon, Adobe and Microsoft are also committee members. Rolling out over the coming months, the feature will use the current Content Credentials guidelines (essentially an image's metadata) within Search to add a label to images that are AI-generated or edited, providing more transparency for users. This metadata includes information like the origin of the image, as well as when, where and how it was created. However, many AI developers have declined to adopt the C2PA standard, which gives users the ability to trace the origin of different media types. One example is Black Forest Labs, the company behind the Flux model that X's (formerly Twitter) Grok uses for image generation. This AI flagging will be implemented through Google's current About This Image window, which means it will also be available to users through Google Lens and Android's 'Circle to Search' feature. When live, users will be able to click the three dots above an image and select "About this image" to check whether it was AI-generated, so it's not going to be as evident as we hoped. While Google needed to do something about AI images in its Search results, the question remains whether a hidden label is enough. If the feature works as stated, users will need to take extra steps before Google tells them whether an image was created using AI. Those who don't already know about the About This Image feature may not even realize a new tool is available to them.
Video deepfakes have made headlines, as when a finance worker was scammed earlier this year into paying $25 million to a group posing as his CFO, but AI-generated images are nearly as problematic. Donald Trump recently posted digitally rendered images of Taylor Swift and her fans falsely endorsing his campaign for President, and Swift was also the victim of image-based sexual abuse when AI-generated nudes of her went viral. While it's easy to complain that Google isn't doing enough, even Meta isn't keen to put its labels front and center: the social media giant recently updated its policy to make labels less visible, moving the relevant information to a post's menu. While this upgrade to the 'About this image' tool is a positive first step, more aggressive measures will be required to keep users informed and protected. More companies, like camera makers and developers of AI tools, will also need to accept and use the C2PA's watermarks for this system to be as effective as possible, since Google will depend on that data. Only a few camera models, like the Leica M11-P and the Nikon Z9, have built-in Content Credentials features, while Adobe has implemented a beta version in both Photoshop and Lightroom. But again, it's up to the user to use these features and provide accurate information. In a study by the University of Waterloo, only 61% of people could tell the difference between AI-generated and real images. If those numbers are accurate, more than a third of people can't reliably spot AI images on their own, and a label tucked behind extra clicks may offer them little added transparency. Still, it's a positive step from Google in the fight to reduce misinformation online, but it would be good if the tech giants made these labels a lot more accessible.

[4]

Google Search has a new trick up its sleeve -- and it might save you from fake AI images

Google is teaming up with the Coalition for Content Provenance and Authenticity (C2PA) on technology that aims to identify whether an image was taken with a camera, edited by programs like Photoshop or produced by generative AI models like Google's own Gemini. As part of the effort, Google Search results will get an updated "About this image" feature that tells you how an image was created or edited. Google outlined the coming tools, and the system that comes with C2PA, in a blog post. The coalition is a group dedicated to addressing misinformation and AI-generated images. It has created a technical authentication standard that records information about where content originated, creating a digital trail. The standard has been backed by major companies investing in the AI space, including Google, Amazon, Microsoft, OpenAI and Adobe. So far, adoption of the standard hasn't taken off, which makes Google's decision to incorporate it into search results the standard's first big test. Google says it collaborated on the newest version of the standard (2.1) and will combine it with an upcoming C2PA trust list, which allows Google Search to confirm the origin of images. "For example, if the data shows an image was taken by a specific camera model, the trust list helps validate that this piece of information is accurate," explains Laurie Richardson, Google's VP of Trust & Safety. The credentials will initially be integrated into two Google products: Search and Ads. "Our goal is to ramp this up over time and use C2PA signals to inform how we enforce key policies," says Richardson. "We're also exploring ways to relay C2PA information to viewers on YouTube when content is captured with a camera, and we'll have more updates on that later in the year." Other AI companies have joined the C2PA to add credentials from the start.
These include OpenAI, which joined the coalition in May of this year. Runway, which just signed a major deal with Lionsgate, added C2PA credentials to the third generation of its AI video generator. "Establishing and signaling content provenance remains a complex challenge, with a range of considerations based on the product or service," says Richardson. "And while we know there's no silver bullet solution for all content online, working with others in the industry is critical to create sustainable and interoperable solutions."

[5]

Google Search results will soon highlight AI-generated images

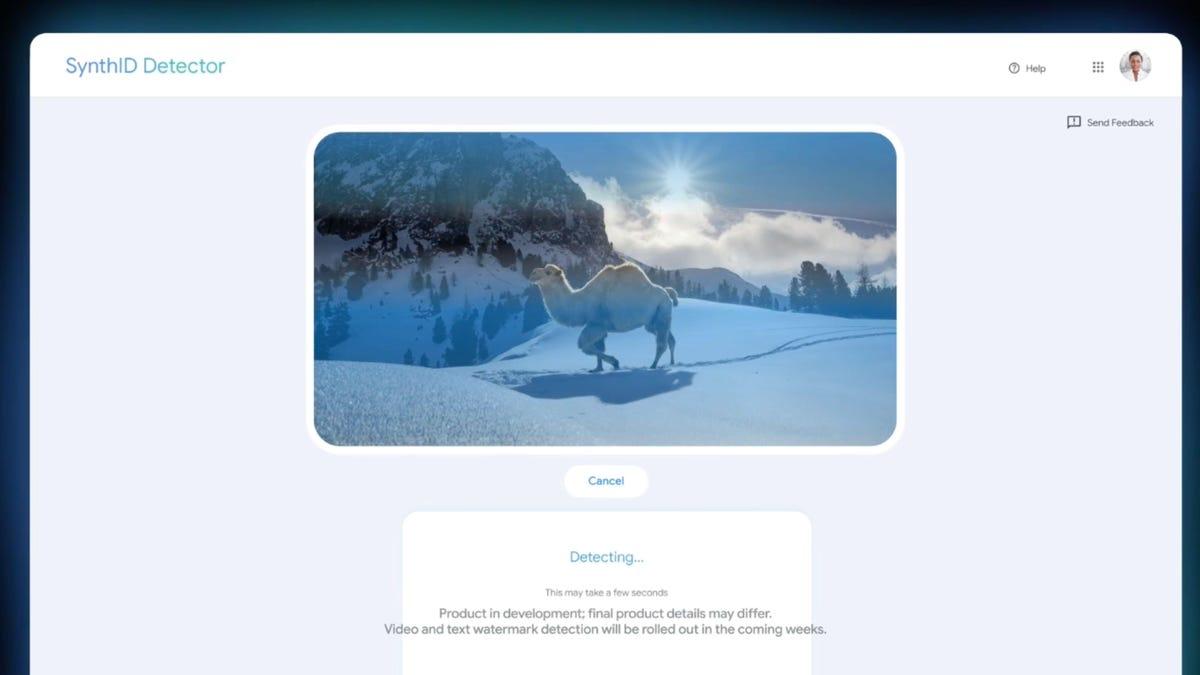

Google continues to advance its efforts in content transparency, focusing on tools that help users understand how media -- such as images, videos, and audio -- has been created and modified. A key development in this area is its collaboration with the Coalition for Content Provenance and Authenticity (C2PA), where Google plays an active role as a steering committee member. The goal of this partnership is to enhance online transparency as content moves across platforms, providing users with better information on the origins and alterations of the media they engage with. The C2PA focuses on content provenance technology, which helps users determine whether a piece of content was captured by a camera, edited through software, or generated by AI. This initiative aims to equip people with information that builds media literacy and allows them to make more informed decisions about the authenticity of the content they encounter. According to the announcement, Google has been contributing to the latest version (2.1) of the C2PA's Content Credentials standard, which now has stricter security measures to prevent tampering, helping ensure that provenance information is not misleading. Over the next few months, Google plans to integrate this new version of Content Credentials into some of its key products. For instance, users will soon be able to access C2PA metadata through the "About this image" feature in Google Search, Google Images, and Google Lens. This feature will help users identify whether an image has been created or altered using AI tools, providing more context about the media they come across. Google is also incorporating these metadata standards into its advertising systems. Over time, the company aims to use C2PA signals to inform how it enforces ad policies, potentially shaping the way ad content is monitored for authenticity and accuracy. 
Additionally, Google is exploring ways to extend this technology to YouTube, with the possibility of allowing viewers to verify the origins of video content in the future. This expansion would further Google's push to bring transparency to media creation across its platforms. Google's role in the C2PA extends beyond its own product integrations. The company is also encouraging broader adoption of content provenance technology across the tech industry. The goal is to create interoperable solutions that work across platforms, services, and hardware providers. This collaborative approach is seen as crucial to creating sustainable, industry-wide standards for verifying the authenticity of digital content. To complement its work with the C2PA, Google is also pushing forward with SynthID, a tool developed by Google DeepMind that embeds watermarks into AI-generated content. These watermarks allow AI-created media to be more easily identified and traced, addressing concerns about the potential for misinformation spread by AI tools. As more digital content is created using AI tools, ensuring that provenance data remains accurate and secure will be crucial. Google's involvement in C2PA is part of a broader effort to address these challenges, but the effectiveness of these measures will depend on widespread industry cooperation and adoption. While Google's efforts to address AI-generated content in its search results are a step toward more transparency, questions remain about the effectiveness of the approach. The "About This Image" feature, which provides additional context about whether an image was created or edited with AI, requires users to take extra steps to access this information. This could be a limitation, as users who are unaware of the tool may never know it's available to them. The feature relies heavily on users actively seeking out the provenance of an image, which may not be the default behavior for many.
The broader challenge lies in making such transparency tools more intuitive and visible to users, so they can quickly and easily verify content without having to dig for details. As AI-generated content continues to grow, the need for seamless verification will only become more pressing, raising questions about whether hidden labels and extra steps are enough to maintain trust in digital media.

[6]

Google will begin flagging AI-generated images in Search later this year | TechCrunch

Google says that it plans to roll out changes to Google Search to make clearer which images in results were AI-generated -- or edited by AI tools. In the next few months, Google will begin to flag AI-generated and -edited images in the "About this image" window on Search, Google Lens and Circle to Search. Similar disclosures may make their way to other Google properties, like YouTube, in the future; Google says it'll have more to share later this year. Crucially, only images containing "C2PA metadata" will be flagged as AI-manipulated in Search. C2PA, short for Coalition for Content Provenance and Authenticity, is a group developing technical standards to trace an image's history, including the equipment and software used to capture and/or create it. Companies including Google, Amazon, Microsoft, OpenAI and Adobe back C2PA. But the coalition's standards haven't seen widespread adoption. As The Verge noted in a recent piece, the C2PA faces plenty of adoption and interoperability challenges; only a handful of generative AI tools and cameras from Leica and Sony support the group's specs. Moreover, C2PA metadata -- like any metadata -- can be removed or scrubbed, or become corrupted to the point where it's unreadable. And images from some of the more popular generative AI tools, like Flux, which xAI's Grok chatbot uses for image generation, don't have C2PA metadata attached to them in part because their creators haven't agreed to back the standard. Some measures are better than none, granted, as deepfakes continue to rapidly spread. According to one estimate, there was a 245% increase in scams involving AI-generated content from 2023 to 2024. Deloitte projects that deepfake-related losses will soar from $12.3 billion in 2023 to $40 billion by 2027. Surveys show that the majority of people are concerned about being fooled by a deepfake and about AI's potential to promote the spread of propaganda.
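The Deloitte projection above implies steep compound growth. As a quick check, the Python snippet below works out the annual growth rate that would take losses from $12.3 billion in 2023 to $40 billion in 2027, assuming four years of compounding; this is arithmetic on the two reported figures, not a rate attributed to Deloitte here. It also converts the reported 245% increase in scams into a multiplier, since a 245% increase means the 2024 level is 3.45 times the 2023 level.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Reported figures, in billions of US dollars.
rate = implied_annual_growth(12.3, 40.0, 2027 - 2023)
print(f"Implied growth in deepfake-related losses: {rate:.1%} per year")  # 34.3% per year

# A 245% increase means the new level is the old level plus 2.45 times the old level.
scam_multiplier = 1 + 245 / 100
print(f"2024 scam level relative to 2023: {scam_multiplier:.2f}x")  # 3.45x
```

Compounding at roughly 34% a year for four years multiplies the starting figure by about 3.25, which is exactly the ratio of $40 billion to $12.3 billion.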

[7]

Google to roll out AI-label for search results; Aims to bring transparency among users

You will soon be able to identify AI-generated content, as Google plans to introduce AI labels in search results. In an official blog post, the tech giant said it will label AI-generated content, which it says can bring more transparency for users. Google explained that it will use technology from the Coalition for Content Provenance and Authenticity (C2PA) to help identify AI-generated content when people use Google Search.

Google to bring AI labels

Google announced that it will use the C2PA's technology, which tags content with specific metadata. This tag will help indicate whether or not the content was created by AI. In the coming months, these labels will be added to products including Google Search, Lens and Images. So, how does this feature work, and how will it help you? When you come across images or media that contain C2PA metadata, you will be able to use Google's "About this image" feature to see whether they were AI-generated or edited. This feature can also provide valuable context about images, helping you understand more about the origins of the content you interact with. Google will also add the upcoming AI labels to its advertising systems. C2PA metadata could help identify which ads use AI and whether they comply with Google's advertising policies, creating more transparency between users and advertisers.

What's in it for you

There has been rising concern about AI-generated content. Many illegal and fraudulent activities are being carried out with such content, and it can also contribute to spreading misinformation. Google's new initiative could help address these problems.
In addition to Google Search and Google Images, the tech giant also plans to bring the labels to YouTube. To secure this system, Google and its partners have developed new technical standards, known as 'Content Credentials', which help platforms track the history of how content was created. These credentials will help verify 'whether a photo or video was taken by a specific camera model, edited, or generated through AI', Google explained in a blog post. The standards also help protect data against tampering and ensure that the source remains authentic.

[8]

Google Search is about to make it easier to spot AI images

The better AI gets at creating or editing images and videos, the harder it becomes to tell what's real and what's fake. To address this issue, Google says it will adopt a technical standard made specifically to help identify AI-altered content. Today, Google announced it is bringing provenance (place of origin) technology to a few of its products in the coming months. Specifically, it will use the Coalition for Content Provenance and Authenticity's (C2PA) technical standard known as Content Credentials. For context, Google partnered with the C2PA earlier this year and worked on the latest version of the standard. Content Credentials shows where an image or video originated: whether it was taken with a camera, edited by software, or produced by generative AI.

[9]

Here's how Google will start helping you figure out which images are AI generated

The company has detailed how it will implement C2PA watermarking in Search and other services. Google is trying to be more transparent about whether a piece of content was created or modified using generative AI (GAI) tools. After joining the Coalition for Content Provenance and Authenticity (C2PA) as a steering committee member earlier this year, Google has revealed how it will start implementing the group's digital watermarking standard. Alongside fellow members including Amazon, Meta, and OpenAI, Google has spent the past several months figuring out how to improve the tech used for watermarking GAI-created or modified content. The company says it helped to develop the latest version of Content Credentials, a technical standard used to protect metadata detailing how an asset was created, as well as information about what has been modified and how. Google says the current version of Content Credentials is more secure and tamperproof due to stricter validation methods. In the coming months, Google will start to incorporate the current version of Content Credentials into some of its main products. In other words, it should soon be easier to tell whether an image in Google Search results was created or modified using GAI. If an image that pops up has C2PA metadata, you should be able to find out what impact GAI had on it via the About this image tool. This is also available in Google Images, Lens and Circle to Search. The company is looking into how to use C2PA to tell YouTube viewers when footage was captured with a camera; expect to learn more about that later this year. Google also plans to use C2PA metadata in its ads systems. It didn't reveal many details about its plans there, other than to say it will use "C2PA signals to inform how we enforce key policies" and do so gradually. Of course, the effectiveness of all this depends on whether companies such as camera makers and the developers of GAI tools actually use the C2PA watermarking system.
The approach isn't going to stop someone from stripping out an image's metadata either, which could make it harder for systems such as Google's to detect any GAI usage. Meanwhile, throughout this year, we've seen Meta go back and forth over how to disclose whether images were created with GAI across Facebook, Instagram and Threads. The company just updated its policy to make labels less visible on images that were edited with AI tools. Starting this week, if C2PA metadata indicates that someone (for instance) used Photoshop's GAI tools to tweak a genuine photo, the "AI info" label no longer appears front and center. Instead, it's buried in the post's menu.

[10]

Google introduces AI content labels for enhanced transparency online: How it works

California-based tech giant Google is rolling out new measures to clearly identify content that has been created or modified using artificial intelligence (AI). As AI-generated media continues to proliferate, Google's move aims to boost transparency and give users better insight into the authenticity of the information they encounter online. This initiative is part of Google's collaboration with the Coalition for Content Provenance and Authenticity (C2PA), of which the company is a steering committee member. By reading specific metadata attached to content, Google will let users identify whether an image, video, or other media has been created or edited by AI tools. These labels will soon appear in Google Search, Images, and Lens, giving users the ability to view the origin of the content via the "About this image" feature. The labels are designed to provide crucial context around AI-generated media, helping users better understand the source and nature of the content they are consuming. This step comes at a time when AI tools are increasingly used to create media, raising questions about content authenticity and trustworthiness. In addition to search results, Google is extending this AI content labelling to its ad platforms, where C2PA metadata will help it check whether advertisements containing AI-generated content comply with Google's ad policies. This is expected to enhance Google's ability to enforce its rules on AI-generated ads, creating a safer and more transparent environment for both users and advertisers. Google is also looking to bring similar labelling to YouTube, with plans to mark videos that have been generated or edited using AI technology. More details on this feature are expected in the coming months.
To secure these changes, Google and its partners are implementing new technical standards called "Content Credentials," which will track the creation history of content, including whether it was captured by a camera or generated by AI. This new system, combined with Google's SynthID watermarking tool, aims to provide a robust framework for identifying AI-generated content and preserving media authenticity in the digital age.

[11]

Google to roll out features for detecting AI-generated images across several services - SiliconANGLE

Google LLC plans to implement C2PA, a technology for verifying the authenticity of media files, in its search engine and advertising systems. The company detailed the initiative today. The C2PA, or Coalition for Content Provenance and Authenticity, is an industry group that was formed in 2021 by Adobe Inc., Microsoft Corp., Intel Corp. and a number of other companies. Google joined the consortium earlier this year. C2PA develops a technical standard of the same name that makes it possible to determine whether an image was generated using artificial intelligence or modified after its creation. The standard works by attaching a metadata file called a manifest to an image. This manifest specifies whether the image was generated with AI and when it was created. It's also possible to include other information, such as what edits were made to a file after its initial release. Hackers could theoretically modify a tampered image's C2PA manifest to hide the fact that the image was edited. To prevent that, the technology protects manifests from malicious edits using a cryptographic technique called hashing. Hashing creates a unique digital fingerprint for a file, and C2PA uses it to generate a fingerprint for each image's manifest. When hackers attempt to modify a photo's manifest, the manifest's fingerprint changes as well, and because the manifest is also cryptographically signed, a forger cannot simply recompute the fingerprint to cover their tracks. That makes tampering attempts straightforward to spot. C2PA includes other cybersecurity features as well. Most notably, its hashing feature generates a unique fingerprint not only of the manifest attached to an image but also of the image itself. C2PA then places the image's fingerprint into the manifest. This arrangement binds photos to their respective manifests and makes it effectively impossible for hackers to swap in a counterfeit or out-of-date version of either file without detection.
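A minimal sketch of the hash binding described above, with the signing step made explicit. Assumptions, stated loudly: the manifest is modelled as a JSON-style dictionary, the whole image is hashed (the real standard's hard binding excludes the bytes that hold the manifest itself), and an HMAC with a placeholder key stands in for the certificate-backed signature C2PA actually uses. The names `make_manifest` and `verify` are hypothetical.

```python
import hashlib
import hmac
import json

# Placeholder secret. Real C2PA signs the claim with a certificate-backed
# public-key signature; HMAC stands in here so the sketch needs only the stdlib.
SIGNING_KEY = b"hypothetical-signing-key"


def fingerprint(data: bytes) -> str:
    """SHA-256 hash: a fixed-size digital fingerprint of the input bytes."""
    return hashlib.sha256(data).hexdigest()


def sign(claim: dict) -> str:
    """Sign a canonical (key-sorted) JSON encoding of the claim."""
    canonical = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, canonical, hashlib.sha256).hexdigest()


def make_manifest(image: bytes, assertions: dict) -> dict:
    """Bind an image to its manifest by storing the image's fingerprint inside it."""
    claim = {"image_hash": fingerprint(image), "assertions": assertions}
    return {"claim": claim, "signature": sign(claim)}


def verify(image: bytes, manifest: dict) -> str:
    # Any edit to the claim changes what the signature should be.
    if not hmac.compare_digest(sign(manifest["claim"]), manifest["signature"]):
        return "manifest tampered"
    # A swapped or altered image no longer matches the fingerprint in the claim.
    if manifest["claim"]["image_hash"] != fingerprint(image):
        return "image does not match manifest"
    return "ok"


image = b"example pixel data"
manifest = make_manifest(image, {"source": "trainedAlgorithmicMedia"})
print(verify(image, manifest))                  # ok

forged = json.loads(json.dumps(manifest))       # independent copy
forged["claim"]["assertions"]["source"] = "digitalCapture"
print(verify(image, forged))                    # manifest tampered

print(verify(b"swapped pixel data", manifest))  # image does not match manifest
```

The two checks cover the two attacks the article describes: rewriting the manifest, and pairing a genuine manifest with a different image.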
As part of the initiative detailed today, Google plans to implement C2PA in its "About this image" feature. The feature, which is available in its search engine's Google Images, Lens and Circle to Search tools, provides contextual information about images. When a user selects an image that carries C2PA metadata, the feature will display information on whether the file was created or modified using AI. Google is also integrating the technical standard into its advertising systems. Laurie Richardson, the company's vice president of trust and safety, detailed in a blog post that the goal is to support the enforcement of advertising policies. C2PA support will start rolling out to Google's ad and search systems in the coming months. Further down the line, the company may also bring C2PA to YouTube. "We're also exploring ways to relay C2PA information to viewers on YouTube when content is captured with a camera, and we'll have more updates on that later in the year," Richardson detailed.

[12]

Is that photo real or AI? Google's 'About this image' aims to help you tell the difference

Google wants to help you determine whether the photo you're seeing online is real. As AI photo-generating and AI-powered photo-editing programs become more commonplace, it's sometimes difficult to tell what's authentic and what's not. In the coming months, Google says, a new technology will help with that. The feature, called "About this image," draws on Content Credentials, a technical standard for tracking the origin and history of an online asset. By reading the metadata in a photo, "About this image" can tell whether it's original, edited by software, or produced by AI. Google worked on the standard with the Coalition for Content Provenance and Authenticity (C2PA), an organization dedicated to addressing issues around AI-generated content. The feature will be accessible in Google Images, Google Lens, and Circle to Search. When an image with C2PA metadata appears in Search, you can click "About this image" to learn about its origins. Google says its advertising system is starting to integrate C2PA, with plans to ramp it up over time and use it to enforce ad policies. The tech giant is also exploring ways to use C2PA on YouTube to relay information to viewers about how the creator produced the content, for instance, the specific camera model they used. Amazon joined the C2PA committee last week, agreeing to link Content Credentials to content generated by its Titan Image Generator and adding the tool to AWS Elemental MediaConvert. Adobe was a founding member of C2PA, and its editing programs Photoshop and Lightroom add the relevant metadata, but many programs don't, so the number of photos that "About this image" can label might be small at first. Also, it seems as though you could foil Google's system simply by removing the metadata from a photo, but this is a good starting point in the fight against misinformation.
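The three-way distinction described above (original, edited by software, or produced by AI) can be sketched as a small decision function. This Python sketch assumes a simplified manifest: a plain list of action dictionaries whose `digitalSourceType` values come from the IPTC digital source type vocabulary, which C2PA actions can reference. The label wording and the order of the checks are hypothetical choices for illustration, not Google's actual logic. Note the first branch: an image whose metadata has been stripped yields "no provenance information", not a verdict that it is authentic.

```python
from typing import Optional

# Prefix of the IPTC "digital source type" vocabulary that C2PA actions can reference.
IPTC = "http://cv.iptc.org/newscodes/digitalsourcetype/"


def provenance_label(actions: Optional[list[dict]]) -> str:
    """Map a simplified list of C2PA actions to a coarse, hypothetical label.

    `actions` is None when no readable manifest is present, for example
    because the metadata was stripped.
    """
    if actions is None:
        # Missing metadata means "unknown", not "authentic".
        return "no provenance information"
    sources = {a.get("digitalSourceType", "") for a in actions}
    if IPTC + "trainedAlgorithmicMedia" in sources:
        return "generated with AI"
    if IPTC + "compositeWithTrainedAlgorithmicMedia" in sources:
        return "edited with AI"
    if any(a.get("action") == "c2pa.edited" for a in actions):
        return "edited with software"
    if IPTC + "digitalCapture" in sources:
        return "captured with a camera"
    return "provenance present, origin unclear"


photo = [{"action": "c2pa.created", "digitalSourceType": IPTC + "digitalCapture"}]
ai_edited = photo + [{"action": "c2pa.edited",
                      "digitalSourceType": IPTC + "compositeWithTrainedAlgorithmicMedia"}]
print(provenance_label(photo))      # captured with a camera
print(provenance_label(ai_edited))  # edited with AI
print(provenance_label(None))       # no provenance information
```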

[13]

Google seeks authenticity in the age of AI with new content labeling system

C2PA system aims to give context to search results, but trust problems run deeper than AI tech. On Tuesday, Google announced plans to implement content authentication technology across its products to help users distinguish between human-created and AI-generated images. Over the coming months, the tech giant will integrate the Coalition for Content Provenance and Authenticity (C2PA) standard, a system designed to track the origin and editing history of digital content, into its search, ads, and potentially YouTube services. However, it's an open question whether a technological solution can fully address what is fundamentally an ancient social issue of trust in recorded media produced by strangers. A group of tech companies created the C2PA system beginning in 2019 in an attempt to combat misleading, realistic synthetic media online. As AI-generated content becomes more prevalent and realistic, experts have worried that it may be difficult for users to determine the authenticity of images they encounter. The C2PA standard creates a digital trail for content, backed by an online signing authority, that includes metadata about where images originate and how they've been modified. Google will incorporate this C2PA standard into its search results, allowing users to see if an image was created or edited using AI tools. The tech giant's "About this image" feature in Google Search, Lens, and Circle to Search will display this information when available. In a blog post, Laurie Richardson, Google's vice president of trust and safety, acknowledged the complexities of establishing content provenance across platforms. She stated, "Establishing and signaling content provenance remains a complex challenge, with a range of considerations based on the product or service. And while we know there's no silver bullet solution for all content online, working with others in the industry is critical to create sustainable and interoperable solutions."
The company plans to use the C2PA's latest technical standard, version 2.1, which reportedly offers improved security against tampering attacks. Its use will extend beyond search: Google intends to incorporate C2PA metadata into its ad systems as a way to "enforce key policies." YouTube may also see integration of C2PA information for camera-captured content in the future. Google says the new initiative aligns with its other efforts toward AI transparency, including SynthID, an embedded watermarking technology created by Google DeepMind.

Widespread C2PA efficacy remains a dream

Despite a history that reaches back at least five years, the road to useful content provenance technology like C2PA is steep. The technology is completely voluntary, and key authenticating metadata can easily be stripped from images once added.

AI image generators would need to support the standard for C2PA information to be included in each generated file, which will likely preclude open source image synthesis models like Flux. So in practice, perhaps more "authentic," camera-authored media will be labeled with C2PA than AI-generated images.

Beyond that, maintaining the metadata requires a complete toolchain that supports C2PA at every step, including at the source and in any software used to edit or retouch the images. Currently, only a handful of camera manufacturers, such as Leica, support the C2PA standard. Nikon and Canon have pledged to adopt it, but The Verge reports that there's still uncertainty about whether Apple and Google will implement C2PA support in their smartphones. Adobe's Photoshop and Lightroom can add and maintain C2PA data, but many other popular editing tools do not yet offer the capability. It takes only one non-compliant image editor in the chain to undermine C2PA's usefulness.
And the general lack of standardized methods for viewing C2PA data across online platforms presents another obstacle to the standard becoming useful for everyday users.

At the moment, C2PA could arguably be seen as a technological solution to current trust issues around fake images. In that sense, C2PA may become one of many tools used to authenticate content by determining whether the information came from a credible source (if the C2PA metadata is preserved), but it is unlikely to be a complete solution to AI-generated misinformation on its own.
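Why stripping is so easy becomes clear at the byte level. The C2PA specification embeds the manifest store in JPEG files as JUMBF data inside APP11 marker segments, and an editor that does not understand those segments can simply drop them when re-saving. The Python sketch below walks a JPEG's header segments, flags APP11 payloads that contain the `c2pa` label, and rebuilds the file without them. The function names are hypothetical, the substring check is a heuristic rather than a real JUMBF parser, and the segment walker ignores edge cases such as fill bytes.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker
APP11 = 0xEB       # APP11: where C2PA stores its JUMBF manifest store in JPEG
SOS = 0xDA         # start-of-scan: compressed image data follows


def iter_segments(data: bytes):
    """Yield (marker, start, end) for each header segment, stopping after SOS.

    Simplified: assumes well-formed segments and no fill bytes between them.
    """
    if not data.startswith(SOI):
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected marker at offset {pos}")
        marker = data[pos + 1]
        # Segment length is big-endian and includes its own 2 bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        end = pos + 2 + length
        yield marker, pos, end
        if marker == SOS:
            return
        pos = end


def has_c2pa(data: bytes) -> bool:
    """Heuristic: an APP11 segment whose payload contains the 'c2pa' label."""
    return any(m == APP11 and b"c2pa" in data[s:e] for m, s, e in iter_segments(data))


def strip_app11(data: bytes) -> bytes:
    """Re-save without APP11 segments, as a C2PA-unaware editor might."""
    out = bytearray(SOI)
    for marker, start, end in iter_segments(data):
        if marker == SOS:
            out += data[start:]  # scan header, image data, and end marker unchanged
            break
        if marker != APP11:
            out += data[start:end]
    return bytes(out)
```

The pixels are untouched by `strip_app11`; only the provenance segment disappears, which is exactly why a single non-compliant tool in the chain is enough to lose the record.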

[14]

Google is Adding C2PA Image Content Credentials to Search and Ads

Google is planning to incorporate the Content Authenticity Initiative's (CAI) C2PA provenance technology into its Search and Ads systems to better identify whether an image is straight out of camera, edited, or created by AI. C2PA provenance technology is metadata attached to an image that lets viewers tell whether it was taken by a camera, edited by software, or produced with generative AI. The goal of the CAI and its C2PA Content Credentials is to help people stay better informed about the origins of an image.

Google joined the CAI earlier this year and is a steering committee member along with Adobe, BBC, Intel, Microsoft, Publicis Groupe, Sony, and Truepic. It now plans to add Content Credentials to two of its products: Search and Ads. In Search, if an image contains C2PA metadata, users will be able to click the "About this image" option (which is also available in Lens and Circle to Search) to see whether it was created or edited with AI tools. The Ads system will also add C2PA integration, and Google says its "goal is to ramp this up over time and use C2PA signals to inform how we enforce key policies."

One key point is that for this system to work, C2PA metadata must be present in the images in question. If there is no metadata, it's not possible to see an image's provenance. This has always been an issue with the rollout of C2PA, but the eventual hope is that users will learn to trust only images that bear C2PA metadata. At this time, however, images without the metadata vastly outnumber those with it. That's why Google says it is encouraging more hardware providers to adopt C2PA Content Credentials, since the system only works when everyone agrees to use the standard. Most camera manufacturers have signed on to the C2PA standard and are members of the CAI, but to date, very few cameras actively use the metadata; Leica's M11-P is one example.
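The "no metadata, no provenance" point can be expressed as a small decision rule. The sketch below assumes a simplified, hypothetical manifest shape (a dict with an `actions` list) and keys off the IPTC digital source type URIs that C2PA action records can carry, such as `trainedAlgorithmicMedia`; it is not Google's labeling logic. Note that a missing manifest yields a neutral label rather than "authentic."

```python
from typing import Optional

IPTC = "http://cv.iptc.org/newscodes/digitalsourcetype/"
# Digital source types that indicate generative AI was involved.
AI_SOURCE_TYPES = {
    IPTC + "trainedAlgorithmicMedia",               # fully AI-generated
    IPTC + "compositeWithTrainedAlgorithmicMedia",  # AI elements composited in
}


def provenance_label(manifest: Optional[dict]) -> str:
    """Map a simplified, hypothetical manifest to a user-facing label."""
    if manifest is None:
        # No metadata means no provenance either way, not "authentic".
        return "No provenance information"
    for act in manifest.get("actions", []):
        if act.get("digitalSourceType") in AI_SOURCE_TYPES:
            # The first AI-related action decides between creation and editing.
            return "Created with AI" if act.get("action") == "c2pa.created" else "Edited with AI"
    return "No AI use recorded"
```

Because unlabeled images fall into the first branch, this rule can only ever say something about the minority of images that carry metadata, which is the coverage problem described above.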
Google says it is "exploring" ways to give YouTube viewers visibility into C2PA information, too. "We're also exploring ways to relay C2PA information to viewers on YouTube when content is captured with a camera, and we'll have more updates on that later in the year. We will ensure that our implementations validate content against the forthcoming C2PA Trust list, which allows platforms to confirm the content's origin. For example, if the data shows an image was taken by a specific camera model, the trust list helps validate that this piece of information is accurate," Google says. "These are just a few of the ways we're thinking about implementing content provenance technology today, and we'll continue to bring it to more products over time." Google isn't just betting on C2PA. It is also using SynthID and has joined other coalitions and groups focused on AI safety and research, such as the Secure AI Framework. That said, the AI features in its new Pixel 9 series phones do not do a good job of highlighting the use of AI, which is especially concerning given how realistic its "Reimagine" edits are.
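The trust list addresses a different question from tamper detection: not whether the metadata was altered, but whether whoever signed it should be believed. Here is a minimal sketch, assuming a hypothetical claim shape and a trust list modeled as a set of SHA-256 certificate fingerprints. Real validation builds and checks a full certificate chain against trusted roots, which this deliberately omits.

```python
import hashlib


def fingerprint(cert_der: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(cert_der).hexdigest()


def validate_claim(claim: dict, trust_list: set) -> str:
    """Accept a claimed capture device only if its signer is on the trust list.

    `claim` is a hypothetical shape: {"camera_model": str, "signer_cert": bytes}.
    """
    if fingerprint(claim["signer_cert"]) in trust_list:
        return f"verified: {claim['camera_model']}"
    # The metadata may be intact, but nobody on the list vouches for its signer.
    return "unverified: signer not on trust list"
```

In this model, a file can carry perfectly well-formed metadata claiming a specific camera model and still come back "unverified" if it was signed by a key the platform does not recognize.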


Google is set to implement a new feature in its search engine that will label AI-generated images. This move aims to enhance transparency and combat the spread of misinformation through deepfakes.

Google's New Initiative to Label AI-Generated Images

In a significant move towards transparency and authenticity in the digital realm, Google has announced plans to introduce labels for AI-generated and edited images in its search results. This feature, set to roll out in the coming months, aims to help users distinguish between authentic and artificially created or manipulated visual content [1].

The Technology Behind the Labels

Google's approach leverages the Content Credentials system, developed by the Coalition for Content Provenance and Authenticity (C2PA). This technology embeds metadata into images, providing information about their origin and any modifications made [2]. When an image carries this metadata, users will be able to view details about its history and creation process through the "About this image" feature in Search, Lens, and Circle to Search.

Implications for Combating Misinformation

As AI-generated content continues to proliferate, this initiative represents a crucial step in the fight against deepfakes and visual misinformation. By giving users clear indications of an image's provenance, Google aims to empower individuals to make more informed judgments about the content they encounter online [3].

Limitations and Challenges

While this feature marks a positive development, it's important to note its limitations. The labeling system relies on content creators and AI companies voluntarily adding the necessary metadata to their images. This means that not all AI-generated content will be automatically labeled, potentially leaving room for some manipulated images to slip through undetected [4].

Industry-Wide Efforts and Future Prospects

Google's initiative aligns with broader industry efforts to address the challenges posed by AI-generated content. Other tech giants, including Adobe and Microsoft, have also committed to implementing similar labeling systems. This collaborative approach suggests a growing recognition of the need for transparency in the age of AI [5].

As this technology evolves, it has the potential to extend beyond images to other forms of media, including audio and video content. This could provide a more comprehensive solution to the complex issue of digital misinformation and help maintain trust in online information ecosystems.

References

Summarized by

Navi

[1]

[4]
