Google Unveils Geospatial Reasoning: A Revolutionary AI Approach to Analyzing Earth Data

2 Sources

[1]

We're introducing a new way to analyze geospatial data.

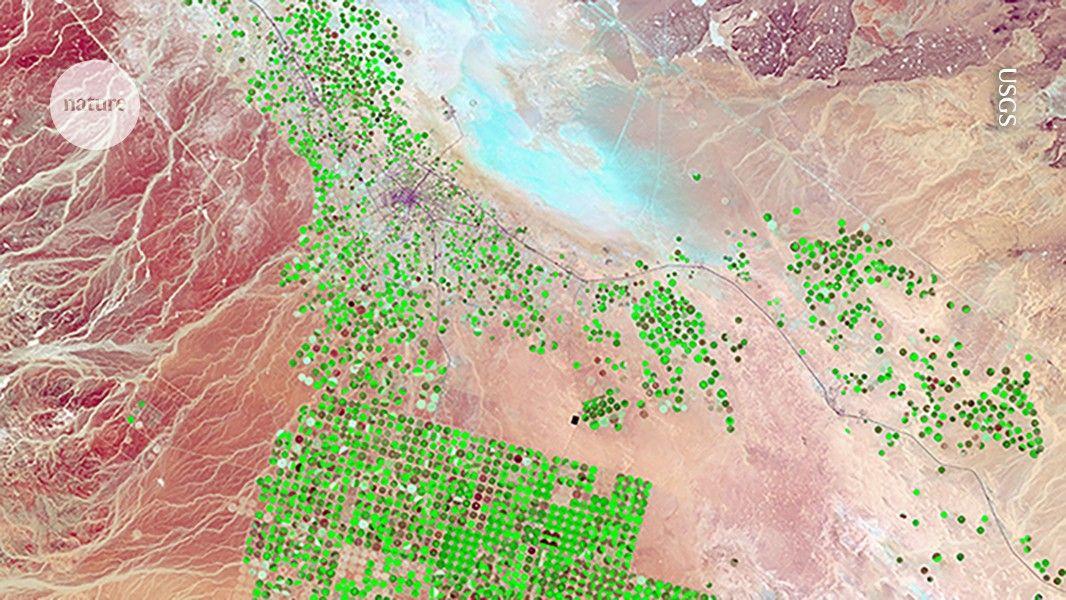

Imagine being able to get complex insights about our planet -- like the impacts of hurricanes or best locations for city infrastructure -- simply by asking Gemini. Geospatial Reasoning, a new research effort introduced today by Google Research, aims to provide comprehensive answers about our world, complete with detailed plans and data visualizations. Google Research's novel geospatial AI foundation models, including our latest remote sensing foundation models, are trained on vast amounts of satellite and aerial imagery. These models can analyze images that are undecipherable to the human eye and support natural language queries about their features, such as changes to buildings or impassable roads. Geospatial Reasoning will combine these models with Gemini 2.5 to understand questions in natural language, and intelligently plan and orchestrate analysis across diverse geospatial data sources -- including maps, socioeconomic data, satellite imagery and your own proprietary datasets.

[2]

Google's new AI could predict disasters before they hit

Google is leveraging generative AI and multiple foundation models to introduce Geospatial Reasoning, a research initiative designed to accelerate geospatial problem-solving. This effort integrates large language models like Gemini and remote sensing foundation models to enhance data analysis across various sectors. For years, Google has compiled geospatial data, which is information tied to specific geographic locations, to enhance their products. This data is crucial for addressing enterprise challenges such as those in public health, urban development, and climate resilience. The new remote sensing foundation models are built upon architectures like masked autoencoders, SigLIP, MaMMUT, and OWL-ViT, and are trained using high-resolution satellite and aerial images with text descriptions and bounding box annotations. These models generate detailed embeddings for images and objects, and can be customized for tasks such as mapping infrastructure, assessing disaster damage, and locating specific features. These models support natural language interfaces, enabling users to perform tasks like finding images of specific structures or identifying impassable roads. Evaluations have demonstrated state-of-the-art performance across various remote sensing benchmarks. Geospatial Reasoning aims to integrate Google's advanced foundation models with user-specific models and datasets, building on the existing pilot of Gemini capabilities in Google Earth. This framework allows developers to construct custom workflows on Google Cloud Platform to manage complex geospatial queries using Gemini, which orchestrates analysis across various data sources. 
The demonstration application shows how a crisis manager can use Geospatial Reasoning after a hurricane. It uses high-resolution aerial images from the Civil Air Patrol, pre-processed with AI from Bellwether, X's moonshot for Climate Adaptation, plus Google Research's Open Buildings and SKAI models. Social vulnerability indices, housing price data, and Google WeatherNext insights are also incorporated. WPP's Choreograph will integrate PDFM with its media performance data to enhance AI-driven audience intelligence. Airbus, Maxar, and Planet Labs will be the initial testers of the remote sensing foundation models.

Google Research introduces Geospatial Reasoning, a groundbreaking AI initiative that combines Gemini 2.5 with advanced remote sensing models to provide comprehensive insights about our planet through natural language queries.

Google Introduces Geospatial Reasoning: A New Frontier in AI-Powered Earth Analysis

Google Research has unveiled Geospatial Reasoning, a research initiative aimed at changing how we analyze and understand our planet. It combines Gemini 2.5, Google's advanced language model, with specialized remote sensing foundation models to answer natural language queries about the Earth [1].

The Power of AI in Geospatial Analysis

At the heart of Geospatial Reasoning are Google's geospatial AI foundation models, trained on vast amounts of satellite and aerial imagery. These models can analyze images that are often indecipherable to the human eye and support natural language queries about features such as changes to buildings or impassable roads [1].

The remote sensing foundation models are built on architectures such as masked autoencoders, SigLIP, MaMMUT, and OWL-ViT. Trained on high-resolution satellite and aerial images with text descriptions and bounding box annotations, they generate detailed embeddings for images and objects and can be customized for tasks such as mapping infrastructure, assessing disaster damage, and locating specific features [2].

Integrating Multiple Data Sources for Comprehensive Insights

Geospatial Reasoning aims to answer questions about our world by orchestrating analysis across diverse geospatial data sources, including maps, socioeconomic data, satellite imagery, and users' own proprietary datasets. Combining these sources supports complex insights, such as the impacts of hurricanes or the best locations for city infrastructure [1].
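To make the idea of combining data sources concrete, here is a minimal Python sketch that joins two per-region layers and ranks regions for attention after a storm. It is an illustration only, not Google's implementation or API: the region names, the numbers, the `RegionRecord` type, the `priority_score` weighting, and the `rank_regions` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RegionRecord:
    name: str
    damaged_building_fraction: float  # 0..1, hypothetical output of an imagery model
    social_vulnerability: float       # 0..1, hypothetical socioeconomic index


def priority_score(record, damage_weight=0.6):
    """Weighted blend of the two layers; the 0.6 weight is an arbitrary assumption."""
    return (damage_weight * record.damaged_building_fraction
            + (1 - damage_weight) * record.social_vulnerability)


def rank_regions(imagery_layer, socioeconomic_layer, damage_weight=0.6):
    """Join two per-region layers by name and sort by descending priority."""
    # Only regions present in both layers can be scored.
    shared = imagery_layer.keys() & socioeconomic_layer.keys()
    records = [
        RegionRecord(name, imagery_layer[name], socioeconomic_layer[name])
        for name in shared
    ]
    return sorted(records, key=lambda r: priority_score(r, damage_weight), reverse=True)


# Made-up example data: one layer derived from imagery, one from socioeconomic data.
imagery_layer = {"north": 0.10, "harbor": 0.55, "east": 0.40}
socioeconomic_layer = {"north": 0.20, "harbor": 0.30, "east": 0.80, "west": 0.50}

ranked = rank_regions(imagery_layer, socioeconomic_layer)
for record in ranked:
    print(f"{record.name}: {priority_score(record):.2f}")
```

In this toy setup a region with moderate damage but high vulnerability can outrank a region with more damage, which is the kind of cross-source insight the article describes. A real system would work with georeferenced geometries rather than region names, and the weighting would be a policy decision rather than a fixed constant.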

Real-World Applications and Partnerships

A demonstration application shows how a crisis manager can use Geospatial Reasoning in the aftermath of a hurricane. The application incorporates high-resolution aerial images from the Civil Air Patrol, pre-processed with AI from Bellwether, X's moonshot for Climate Adaptation, plus Google Research's Open Buildings and SKAI models. It also integrates social vulnerability indices, housing price data, and Google WeatherNext insights [2].

Google is also collaborating with industry partners to expand the reach and capabilities of Geospatial Reasoning. WPP's Choreograph will integrate PDFM, Google's Population Dynamics Foundation Model, with its media performance data to enhance AI-driven audience intelligence. Additionally, Airbus, Maxar, and Planet Labs will be the initial testers of the remote sensing foundation models [2].

The Future of Geospatial Problem-Solving

Geospatial Reasoning marks a significant step in applying AI to Earth observation and analysis. By combining advanced language models with specialized remote sensing capabilities, Google aims to make geospatial problem-solving faster and more insightful across sectors including public health, urban development, and climate resilience [2].

As the technology evolves, it could change how we understand and interact with our planet and support better-informed decisions in areas of global concern.

Summarized by Navi
