Google Unveils SynthID Text: A Breakthrough in AI-Generated Content Watermarking

24 Sources

[1]

Google Devises Watermarking Solution for its AI-Generated Text

Researchers from Google DeepMind's headquarters in Britain have invented a new, seamless way to label AI-generated text. Publishing their findings in the journal Nature, the researchers claim it can help identify when writing was first generated by Google's own AI model, Gemini. While it gives some industries peace of mind, it can also stop AI models from cannibalizing their own content and experiencing model collapse. Google's interest in a watermarking solution shouldn't come as a surprise. Google doesn't just operate its own AI, Gemini; it's also on the front line of judging content quality from human and AI sources through its search engine. To that end, the company is always refining its search algorithms to better judge pages on writing quality and user intent. Using those, Google directs users to the content they want to see by using keywords and search trends. For example, it associates keywords like 'buy' with product-selling sites or 'play' with iGaming sites. Then, a 'play' search for online roulette will return a site like PeerGame where digital roulette, blackjack, and other games are on offer. This works quite straightforwardly for sites that offer services, like e-commerce and iGaming sites. However, for sites that deal with the written word, Google needs to be more stringent about what that written content says and how it has been generated. At first, Google's stance on AI-generated writing leaned negative out of an abundance of caution. However, following that common SEO mantra - content is king - it relaxed its Google Search guidelines to accept AI-generated content if it's still high-quality material. This seems like a sensible approach, rewarding those who use AI to level up skills and services that they already offer.

[2]

What is Google's Watermark for AI-Generated Text?

Google is advancing in AI technology and the digital space by developing a watermark specifically for AI-generated written content. The effort is part of the company's recent push to maintain transparency while ensuring users can easily identify whether a human or a machine produced the content they see. However, what is this watermark, and how does it relate to other current redesign attempts by Google? As the use of AI that writes content rises, Google has developed a watermark so that users know whether content was written by a human or by AI. This is particularly important as tools like ChatGPT and Bard are trending and are creating content as fast as the ink on the paper dries. AI content generation is expected to increase rapidly, with experts predicting that AI could write more than 50% of the content on the internet by 2030. Given such a massive scale of AI integration, it becomes increasingly important for Google to build an open system that offers users some level of understanding. The watermark is intended to be noticeable enough that users can immediately see that AI was involved in generating particular text while not being so invasive that it distracts the reader.

[3]

Google unveils invisible 'watermark' for AI-generated text

Researchers at Google DeepMind in London have devised a 'watermark' to invisibly label text that is generated by artificial intelligence (AI) -- and deployed it to millions of chatbot users. The watermark, reported in Nature on 23 October, is not the first to be made for AI-generated text. Nor is it able to withstand determined attempts to remove it. But it seems to be the first at-scale, real-world demonstration of a text watermark. "To my mind, by far the most important news here is just that they're actually deploying this," says Scott Aaronson, a computer scientist at the University of Texas at Austin, who until August worked on watermarks at OpenAI, the creators of ChatGPT based in San Francisco, California. Spotting AI-written text is gaining importance as a potential solution to the problems of fake news and academic cheating, as well as a way to avoid degrading future models by training them on AI-made content. In a massive trial, users of Google's Gemini large language model (LLM), across 20 million responses, rated watermarked texts as being of equal quality to unwatermarked ones. "I am excited to see Google taking this step for the tech community," says Furong Huang, a computer scientist at the University of Maryland in College Park. "It seems likely that most commercial tools will be watermarked in the near future," says Zakhar Shumaylov, a computer scientist at the University of Cambridge, UK. It is harder to apply a watermark to text than to images, because word choice is essentially the only variable that can be altered. DeepMind's watermark -- called SynthID-Text -- alters which words the model selects in a secret, but formulaic way that can be detected with a cryptographic key. Compared with other approaches, DeepMind's watermark is marginally easier to detect, and applying it does not slow down text generation. 
"It seems to outperform schemes of the competitors for watermarking LLMs," says Shumaylov, who is a former collaborator and brother of one of the study's authors. The tool has also been made open, so developers can apply their own such watermark to their models. "We would hope that other AI-model developers pick this up and integrate it with their own systems," says Pushmeet Kohli, a computer scientist at DeepMind. Google is keeping its own key secret, so users won't be able to use detection tools to spot Gemini-watermarked text. Governments are betting on watermarking as a solution to the proliferation of AI-generated text. Yet, problems abound, including getting developers to commit to using watermarks, and to coordinate their approaches. And earlier this year, researchers at the Swiss Federal Institute of Technology in Zurich showed that any watermark is vulnerable to being removed, called 'scrubbing', or to being 'spoofed', the process of applying watermarks to text to give the false impression that it is AI-generated. DeepMind's approach builds on an existing method that incorporates a watermark into a sampling algorithm, a step in text generation that is separate from the LLM itself. An LLM is a network of associations built up by training on billions of words or word-parts, known as tokens. When given a string of text, the model assigns to each token in its vocabulary a probability of being next in the sentence. The sampling algorithm's job is to select, from this distribution, which token to use, according to a set of rules. The SynthID-Text sampling algorithm uses a cryptographic key to assign random scores to each possible token. Candidate tokens are pulled from the distribution, in numbers proportional to their probability, and placed in a 'tournament'. There, the algorithm compares scores in a series of one-on-one knockouts, with the highest value winning, until there is only one token standing, which is selected for use in the text. 
This elaborate scheme makes it easier to detect the watermark, which involves running the same cryptographic code on generated text to look for the high scores that are indicative of 'winning' tokens. It might also make it more difficult to remove. The multiple rounds in the tournament can be likened to a combination lock, in which each round represents a different digit that must be solved to unlock or remove the watermark, says Huang. "This mechanism makes it significantly more challenging to scrub, spoof or reverse-engineer the watermark," she adds. With text containing around 200 tokens, the authors showed that they could still detect the watermark, even when a second LLM was used to paraphrase the text. For shorter strings of text, the watermark is less robust. The researchers did not explore how well the watermark can resist deliberate removal attempts. The resilience of watermarks to such attacks is a "massive policy question", says Yves-Alexandre de Montjoye, a computer scientist at Imperial College London. "In the context of AI safety, it's unclear the extent to which this is providing protection," he says. Kohli hopes that the watermark will start by being helpful for well-intentioned LLM use. "The guiding philosophy was that we want to build a tool that can be improved by the community," he says.
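The tournament described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not DeepMind's implementation: the `g_value` hash, the one-bit scores with random tie-breaking, the separate score per tournament layer, the three-layer default, and the example probabilities are all hypothetical choices made for the sketch.

```python
import hashlib
import random


def g_value(key: str, context: tuple, token: str, layer: int) -> int:
    """Keyed pseudorandom bit for a candidate token (assumed scoring function)."""
    payload = f"{key}|{context}|{token}|{layer}".encode()
    return hashlib.sha256(payload).digest()[0] & 1


def tournament_sample(probs: dict, context: tuple, key: str,
                      layers: int = 3, rng: random.Random = None) -> str:
    """Pick the next token by a knockout tournament over sampled candidates."""
    if rng is None:
        rng = random.Random()
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    # Draw 2**layers candidates in proportion to the model's probabilities.
    candidates = rng.choices(tokens, weights=weights, k=2 ** layers)
    for layer in range(layers):
        winners = []
        # One-on-one knockouts: the higher keyed score advances.
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga = g_value(key, context, a, layer)
            gb = g_value(key, context, b, layer)
            if ga > gb:
                winners.append(a)
            elif gb > ga:
                winners.append(b)
            else:
                winners.append(rng.choice([a, b]))  # tie: pick at random
        candidates = winners
    return candidates[0]


# Hypothetical next-token distribution after "My favorite fruit is".
next_token_probs = {"mango": 0.5, "lychee": 0.3, "papaya": 0.2}
print(tournament_sample(next_token_probs, ("fruit", "is"), "demo-key",
                        rng=random.Random(0)))
```

Because likely tokens appear more often among the candidates, they still win most tournaments; the key only tilts the choice among tokens the model already considers plausible.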

[4]

Google releases tech to watermark AI-generated text

Google is making SynthID Text, its technology that lets developers watermark and detect text generated by generative AI models, generally available. SynthID Text can be downloaded from the AI platform Hugging Face and Google's updated Responsible GenAI Toolkit. "Today, we're open sourcing our SynthID Text watermarking tool," the company wrote in a post on X. "Available freely to developers and businesses, it will help them identify their AI-generated content." So how does it work? Given a prompt like "What's your favorite fruit?," text-generating models predict which "token" most likely follows another -- one token at a time. Tokens are the building blocks a generative model uses to process information. They can be a single character, word, or part of a phrase. The model assigns each possible token a score, which is the percentage chance it's included in outputted text. SynthID Text inserts additional data in this token distribution by "modulating the likelihood of tokens being generated," Google says. "The final pattern of scores for both the model's word choices combined with the adjusted probability scores are considered the watermark," the company wrote in a blog post. "This pattern of scores is compared with the expected pattern of scores for watermarked and unwatermarked text, helping SynthID detect if an AI tool generated the text or if it might come from other sources." Google claims that SynthID Text, which has been integrated with its Gemini models since this spring, doesn't compromise the quality, accuracy, or speed of text generation, and works even on text that's been cropped, paraphrased, or modified. But the company also admits that its watermarking technology has limitations. For example, SynthID Text doesn't perform as well with short text or text that's been rewritten or translated from another language, and with responses to factual questions. 
"On responses to factual prompts, there are fewer opportunities to adjust the token distribution without affecting the factual accuracy," explains the company. "This includes prompts like 'What is the capital of France?' or queries where little or no variation is expected like 'recite a William Wordsworth poem.'" Google isn't the only company working on AI text watermarking tech. OpenAI has for years researched watermarking methods, but delayed their release over technical and commercial concerns. Watermarking techniques, if widely adopted, could help turn the tide against inaccurate -- but increasingly popular -- "AI detectors" that falsely flag essays written in a more generic manner.

[5]

Google's new AI tool lets developers watermark and detect text generated by AI models

Google is going one step further to protect us from AI-generated content. Google has shared details of SynthID Text, a new tool designed to watermark and detect AI-generated text, which has been released as open source. SynthID Text is available via Hugging Face, and developers and businesses can start using it to embed watermarks into AI-generated text to make it easier to identify content created by genAI models. The company hopes its new tool will help prevent misinformation, as well as ensure proper attribution. SynthID Text works by changing the distribution of tokens - the building blocks of AI-generated text, otherwise characterized as groups of letters that make up words or parts of words. Existing AI models already generate text based on probabilities, or guessing which word comes next. All SynthID Text does is change the likelihood of certain words being selected, creating a specific watermark pattern that it can later identify. Google reckons that the tool can still function after text has been paraphrased or modified slightly, but the company did acknowledge some limitations. For example, short texts, translations and factual responses where there's little room for variation can limit SynthID Text's effectiveness. The company also noted that thoroughly rewriting a response can "greatly reduce" detector confidence scores. SynthID Text has been integrated into Gemini since earlier this year; however, Google isn't the only player in the AI space. OpenAI, which makes the Gemini-rivalling ChatGPT, is unlikely to want to use its competitor's tool. Instead, it has been exploring its own watermarking technology. Moreover, it's unclear how these different systems will interoperate in the future as AI-generated content becomes more widespread on the internet, and whether one system will emerge as an industry standard, or whether legal frameworks will enforce their use.

[6]

Google makes its AI watermarking tool available to all

Developed by Google DeepMind, the algorithm subtly changes the AI generation to make it detectable. Google's technology to watermark text generated by artificial intelligence (AI) is now accessible to all. SynthID is a tool developed by Google DeepMind, the firm's laboratory dedicated to AI, and it just became open source. It works by making slight modifications to the generated text, "introducing a statistical signature into the generated text," according to an article published in Nature. These signatures "are imperceptible to humans," Google noted in a post on its blog for developers. The watermarking process doesn't slow down the generation and doesn't need to access the large language model (LLM), "which is often proprietary," Nature's article noted. "Now, other [generative] AI developers will be able to use this technology to help them detect whether text outputs have come from their own [LLMs], making it easier for more developers to build AI responsibly," Pushmeet Kohli, the vice president of research at Google DeepMind, told the MIT Technology Review. The company ran a test of its watermarking tool through its chatbot Gemini. They analysed approximately 20 million watermarked and unwatermarked responses from the chat and noted no statistically significant difference in the feedback regarding their quality. The algorithm is currently deployed on Gemini and Gemini Advanced because, with AI-generated text, "there is concern that it could contribute to misinformation and misattribution problems," the blog post noted. "Watermarking is one technique for mitigating these potential impacts". According to the researchers, it provides "superior detectability compared with existing methods," like analysing how varied and heterogeneous a text is to determine if it was generated by a language model or a human. This is the method used, for example, by GPTZero, but it can lead to false positives and negatives. 
However, even SynthID-Text isn't a foolproof method, and the score tends to drop if the text is "thoroughly rewritten or translated to another language". DeepMind also developed tools to mark AI-generated images and videos by embedding a digital watermark, invisible to the eye, directly into the pixels of an image or into each frame of a video. The watermark was made to be resistant to common image and video manipulations, such as cropping, resizing, compression, and adding filters.

[7]

Google Open-sources Tech to Watermark AI-generated Text

Google has announced on X (formerly Twitter) that its SynthID text watermarking technology, designed to help identify AI-generated text, is now available as an open-source tool through the Google Responsible Generative AI Toolkit for developers and businesses. The company integrated the tool into its Gemini AI model, allowing for watermarking of AI-generated content in a way that is "imperceptible to humans" but easily detectable by an algorithm. SynthID watermarks and identifies AI-generated content by embedding digital watermarks directly into AI-generated images, audio, text or video. Announced by the company in August last year, SynthID can add the watermark to text, images, audio and videos. Google's SynthID works by adding a hidden watermark to text generated by AI. Think of it like a digital signature that identifies the source of the content without being obvious to readers. Google asserts that SynthID Text, integrated with its Gemini models, maintains the quality, accuracy, and speed of text generation without any compromise. It is also effective on text that has been cropped, paraphrased, or altered. Google doesn't provide extensive details about how SynthID operates, likely because, as Demis Hassabis, CEO of Google DeepMind, explained to The Verge, "the more you disclose about its functionality, the easier it becomes for hackers and malicious actors to exploit it." Distinguishing AI-generated imagery from human-created images is getting increasingly difficult due to the high quality of AI content. Moreover, the rising use of large language models (LLMs) to disseminate political misinformation highlights the importance of AI regulation. The inability to identify the origin of content complicates the management of fake information, deepfakes, and copyright concerns. China's government mandated watermarking AI-generated content last year. 
Meanwhile, California is also looking into making it mandatory. A report from the European Union Law Enforcement Agency predicts that by 2026, 90% of online content could be generated synthetically, resulting in new challenges for law enforcement regarding disinformation, propaganda, fraud, and deception.

[8]

Google Is Now Watermarking Its AI-Generated Text

Eliza Strickland is a Senior Editor at IEEE Spectrum covering AI and biomedical engineering. The chatbot revolution has left our world awash in AI-generated text: It has infiltrated our news feeds, term papers, and inboxes. It's so absurdly abundant that industries have sprung up to provide moves and countermoves. Some companies offer services to identify AI-generated text by analyzing the material, while others say their tools will "humanize" your AI-generated text and make it undetectable. Both types of tools have questionable performance, and as chatbots get better and better, it will only get more difficult to tell whether words were strung together by a human or an algorithm. Here's another approach: Adding some sort of watermark or content credential to text from the start, which lets people easily check whether the text was AI-generated. New research from Google DeepMind, described today in the journal Nature, offers a way to do just that. The system, called SynthID-Text, doesn't compromise "the quality, accuracy, creativity, or speed of the text generation," says Pushmeet Kohli, vice president of research at Google DeepMind and a coauthor of the paper. But the researchers acknowledge that their system is far from foolproof, and isn't yet available to everyone -- it's more of a demonstration than a scalable solution. Google has already integrated this new watermarking system into its Gemini chatbot, the company announced today, and it has also open-sourced the tool and made it available to developers building on Gemini. However, only Google and those developers currently have access to the detector that checks for the watermark. What's more, the detector can only identify Gemini-generated text, not text generated by ChatGPT, Perplexity, or any other chatbot. As Kohli says: "While SynthID isn't a silver bullet for identifying AI-generated content, it is an important building block for developing more reliable AI identification tools." 
Content credentials have been a hot topic for images and video, and have been viewed as one way to combat the rise of deepfakes. Tech companies and major media outlets have joined together in an initiative called C2PA, which has worked out a system for attaching encrypted metadata to image and video files indicating if they're real or AI-generated. But text is a much harder problem, since text can so easily be altered to obscure or eliminate a watermark. While SynthID-Text isn't the first attempt at creating a watermarking system for text, it is the first one to be tested on 20 million prompts. Outside experts working on content credentials see the DeepMind research as a good step. It "holds promise for improving the use of durable content credentials from C2PA for documents and raw text," says Andrew Jenks, Microsoft's director of media provenance and executive chair of the C2PA. "This is a tough problem to solve, and it is nice to see some progress being made," says Bruce MacCormack, a member of the C2PA steering committee. SynthID-Text works by discreetly interfering in the generation process: It alters some of the words that a chatbot outputs to the user in a way that's invisible to humans but clear to a SynthID detector. "Such modifications introduce a statistical signature into the generated text," the researchers write in the paper. "During the watermark detection phase, the signature can be measured to determine whether the text was indeed generated by the watermarked LLM." The large language models (LLMs) that power chatbots work by generating sentences word by word, looking at the context of what has come before to choose a likely next word. Essentially, SynthID-Text interferes by randomly assigning number scores to candidate words and having the LLM output words with higher scores. Later, a detector can take in a piece of text and calculate its overall score; watermarked text will have a higher score than non-watermarked text. 
The DeepMind team checked their system's performance against other text watermarking tools that alter the generation process, and found that it did a better job of detecting watermarked text. However, the researchers acknowledge in their paper that it's still easy to alter a Gemini-generated text and fool the detector. Even though users wouldn't know which words to change, if they edit the text significantly or even ask another chatbot to summarize the text, the watermark would likely be obscured. To be sure that SynthID-Text truly didn't make chatbots produce worse responses, the team tested it on 20 million prompts given to Gemini. Half of those prompts were routed to the SynthID-Text system and got a watermarked response, while the other half got the standard Gemini response. Judging by the "thumbs up" and "thumbs down" feedback from users, the watermarked responses were just as satisfactory to users as the standard ones. Which is great for Google and the developers building on Gemini. But tackling the full problem of identifying AI-generated text (which some call AI slop) will require many more AI companies to implement watermarking technologies -- ideally, in an interoperable manner so that one detector could identify text from many different LLMs. And even in the unlikely event that all the major AI companies signed on to some agreement, there would still be the problem of open-source LLMs, which can easily be altered to remove any watermarking functionality. MacCormack of C2PA notes that detection is a particular problem when you start to think practically about implementation. "There are challenges with the review of text in the wild," he says, "where you would have to know which watermarking model has been applied to know how and where to look for the signal." Overall, he says, the researchers still have their work cut out for them. This effort "is not a dead end," says MacCormack, "but it's the first step on a long road."
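The scoring idea described above (assign keyed random scores to candidate words, prefer higher-scoring ones, then have a detector compute an overall score) can be illustrated with a deliberately simplified sketch. Everything here is an assumption for illustration: the uniform toy "model" over a made-up vocabulary, the best-of-two selection rule, the `score` hash, and the key names. It is not the SynthID-Text detector.

```python
import hashlib
import random
from typing import List, Optional

VOCAB = [f"tok{i}" for i in range(50)]  # hypothetical toy vocabulary


def score(secret: str, prev: str, token: str) -> float:
    """Keyed pseudorandom score in [0, 1) for a token given the previous token."""
    digest = hashlib.sha256(f"{secret}|{prev}|{token}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2 ** 64


def generate(n: int, secret: Optional[str], rng: random.Random) -> List[str]:
    """Emit n tokens; with a secret, keep the higher-scoring of two candidates."""
    text, prev = [], "<s>"
    for _ in range(n):
        a, b = rng.choice(VOCAB), rng.choice(VOCAB)  # stand-in for LLM sampling
        if secret is None:
            tok = a  # unwatermarked: ignore the scores
        else:
            tok = max((a, b), key=lambda t: score(secret, prev, t))
        text.append(tok)
        prev = tok
    return text


def mean_score(tokens: List[str], secret: str) -> float:
    """Detector: average keyed score over the text."""
    prev, total = "<s>", 0.0
    for tok in tokens:
        total += score(secret, prev, tok)
        prev = tok
    return total / len(tokens)


rng = random.Random(0)
watermarked = generate(400, "demo-key", rng)
plain = generate(400, None, rng)
print("watermarked:", round(mean_score(watermarked, "demo-key"), 3))
print("plain:      ", round(mean_score(plain, "demo-key"), 3))
print("wrong key:  ", round(mean_score(watermarked, "other-key"), 3))
```

In this toy setup, unwatermarked text and text checked with the wrong key both average near 0.5, while watermarked text checked with the right key scores noticeably higher. It also shows why a detector needs the key that was used at generation time.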

[9]

Google tool makes AI-generated writing easily detectable

Google DeepMind has been using its AI watermarking method on Gemini chatbot responses for months - and now it's making the tool available to any AI developer. Google has been using artificial intelligence watermarking to automatically identify text generated by the company's Gemini chatbot, making it easier to distinguish AI-generated content from human-written posts. That watermark system could help prevent misuse of the AI chatbots for misinformation and disinformation - not to mention cheating in school and business settings. Now, the tech company is making an open-source version of its technique available so that other generative AI developers can similarly watermark the output from their own large language models, says Pushmeet Kohli at Google DeepMind, the company's AI research team, which combines the former Google Brain and DeepMind labs. "While SynthID isn't a silver bullet for identifying AI-generated content, it is an important building block for developing more reliable AI identification tools," he says. Independent researchers voiced similar optimism. "While no known watermarking method is foolproof, I really think this can help in catching some fraction of AI-generated misinformation, academic cheating and more," says Scott Aaronson at The University of Texas at Austin, who previously worked on AI safety at OpenAI. "I hope that other large language model companies, including OpenAI and Anthropic, will follow DeepMind's lead on this." In May of this year, Google DeepMind announced that it had implemented its SynthID method for watermarking AI-generated text and video from Google's Gemini and Veo AI services, respectively. The company has now published a paper in the journal Nature showing how SynthID generally outperformed similar AI watermarking techniques for text. The comparison involved assessing how readily responses from various watermarked AI models could be detected. 
In Google DeepMind's AI watermarking approach, as the model generates a sequence of text, a "tournament sampling" algorithm subtly nudges it toward selecting certain word "tokens", creating a statistical signature that is detectable by associated software. This process randomly pairs up possible word tokens in a tournament-style bracket, with the winner of each pair being determined by which one scores highest according to a watermarking function. The winners move through successive tournament rounds until just one remains - a "multi-layered approach" that "increases the complexity of any potential attempts to reverse-engineer or remove the watermark", says Furong Huang at the University of Maryland. A "determined adversary" with huge amounts of computational power could still remove such AI watermarks, says Hanlin Zhang at Harvard University. But he described SynthID's approach as making sense given the need for scalable watermarking in AI services. The Google DeepMind researchers tested two versions of SynthID that trade off how detectable the watermark signature is against how much it distorts the text typically generated by an AI model. They showed that the non-distortionary version of the AI watermark still worked, without noticeably affecting the quality of 20 million Gemini-generated text responses during a live experiment. But the researchers also acknowledged that the watermarking works best with longer chatbot responses that can be answered in a variety of ways - such as generating an essay or email - and said it has not yet been tested on responses to maths or coding problems. Both Google DeepMind's team and others described the need for additional safeguards against misuse of AI chatbots - with Huang recommending stronger regulation as well. "Mandating watermarking by law would address both the practicality and user adoption challenges, ensuring a more secure use of large language models," she says.
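One way to read "non-distortionary" is that, averaged over the random watermark scores, a tournament picks each token about as often as the model itself would. The sketch below checks that reading empirically on a toy distribution. It is an illustration under assumptions, not the paper's experiment: the one-bit scores, the random tie-breaking, the two-layer bracket, the trial count, and the example probabilities are all hypothetical.

```python
import random
from collections import Counter


def tournament(probs: dict, g_layers: list, rng: random.Random) -> str:
    """Run one knockout tournament using a given score table per layer."""
    tokens = list(probs)
    cands = rng.choices(tokens, weights=[probs[t] for t in tokens],
                        k=2 ** len(g_layers))
    for g in g_layers:
        nxt = []
        for a, b in zip(cands[0::2], cands[1::2]):
            if g[a] == g[b]:
                nxt.append(rng.choice((a, b)))  # tie: pick at random
            else:
                nxt.append(a if g[a] > g[b] else b)
        cands = nxt
    return cands[0]


def empirical_distribution(probs: dict, layers: int = 2,
                           trials: int = 20000, seed: int = 0) -> dict:
    """Frequency of each winning token across many tournaments."""
    rng = random.Random(seed)
    counts = Counter()
    for _ in range(trials):
        # Fresh random one-bit scores each trial stand in for a new key/context.
        g_layers = [{t: rng.getrandbits(1) for t in probs}
                    for _ in range(layers)]
        counts[tournament(probs, g_layers, rng)] += 1
    return {t: counts[t] / trials for t in probs}


model_probs = {"essay": 0.6, "email": 0.3, "poem": 0.1}  # hypothetical
for token, freq in empirical_distribution(model_probs).items():
    print(token, "model:", model_probs[token], "tournament:", round(freq, 3))
```

For any single fixed set of scores the choice is biased, which is what the detector picks up. The bias only washes out when averaged over many keys or contexts.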

[10]

Google DeepMind open sources its AI text watermarking tool

Why it matters: AI-generated text is fueling plagiarism, copyright violations and misinformation, prompting calls for a way to determine whether material was created by a human or an algorithm. The big picture: A range of tools exist for watermarking images, which contain ample information -- pixels with different hues and shades -- that can be adjusted in ways that can be identified later. How it works: When prompted with a question or task, large language models (LLMs) generate an answer by predicting what word or phrase -- or "token" -- is most likely to appear next in a sequence of text. Zoom in: The goal is for a watermarking tool to have three properties: it shouldn't change the meaning or quality of the content; it should work with precision and accuracy; and it should be able to operate at a large scale without a high computational cost, says Pushmeet Kohli, VP of research at Google DeepMind and a co-author of the new paper published in Nature on Wednesday. What they found: The DeepMind researchers analyzed about 20 million Gemini chatbot responses that were either watermarked with SynthID or unwatermarked. The quality of the responses was judged by users rating them with a thumbs-up or thumbs-down. Yes, but: The researchers acknowledge the tool has several limitations. Between the lines: A spate of AI text detection tools are now available but are generally inaccurate -- and some have led to students being falsely accused of cheating on essays. What to watch: Watermarks could be valuable to regulators, Thickstun says. What's next: DeepMind is making the SynthID-Text tool available to AI model developers so they "can incorporate it in their own pipeline ... and introduce their own private key" to identify their AI-generated content, Kohli says.

[11]

Google DeepMind is making its AI text watermark open source

Large language models work by breaking down language into "tokens" and then predicting which token is most likely to follow the other. Tokens can be a single character, word, or part of a phrase, and each one gets a percentage score for how likely it is to be the appropriate next word in a sentence. The higher the percentage, the more likely the model is going to use it. SynthID introduces additional information at the point of generation by changing the probability that tokens will be generated, explains Kohli. To detect the watermark and determine whether text has been generated by an AI tool, SynthID compares the expected probability scores for words in watermarked and unwatermarked text. Google DeepMind found that using the SynthID watermark did not compromise the quality, accuracy, creativity, or speed of generated text. That conclusion was drawn from a massive live experiment of SynthID's performance after the watermark was deployed in its Gemini products and used by millions of people. Gemini allows users to rank the quality of the AI model's responses with a thumbs-up or a thumbs-down. Kohli and his team analyzed the scores for around 20 million watermarked and unwatermarked chatbot responses. They found that users did not notice a difference in quality and usefulness between the two. The results of this experiment are detailed in a paper published in Nature today. Currently SynthID for text only works on content generated by Google's models, but the hope is that open-sourcing it will expand the range of tools it's compatible with. SynthID does have other limitations. The watermark was resistant to some tampering, such as cropping text and light editing or rewriting, but it was less reliable when AI-generated text had been rewritten or translated from one language into another. It is also less reliable in responses to prompts asking for factual information, such as the capital city of France. 
This is because there are fewer opportunities to adjust the likelihood of the next possible word in a sentence without changing facts.
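The point about factual prompts can be made concrete by measuring how much freedom a next-token distribution leaves. The sketch below compares two hypothetical distributions (the probabilities are invented for illustration, not taken from any model). It reports the Shannon entropy and the chance that two independent draws produce the same token, in which case there is no choice for a watermark to bias.

```python
import math


def entropy_bits(probs: dict) -> float:
    """Shannon entropy of a next-token distribution, in bits."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def same_token_probability(probs: dict) -> float:
    """Chance that two independent draws from the distribution coincide."""
    return sum(p * p for p in probs.values())


# Hypothetical distribution after "The capital of France is".
factual = {"Paris": 0.98, "Lyon": 0.01, "France": 0.01}
# Hypothetical distribution after "My favorite fruit is".
open_ended = {"mango": 0.25, "apple": 0.25, "kiwi": 0.25, "pear": 0.25}

for name, dist in (("factual", factual), ("open-ended", open_ended)):
    print(name,
          "entropy:", round(entropy_bits(dist), 3),
          "same-token chance:", round(same_token_probability(dist), 4))
```

With nearly all probability on one token, almost every pair of candidates is identical, so the watermark rarely gets to influence the output, and the text carries little signal for a detector.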

[11]

Google DeepMind is making its AI text watermark open source

Large language models work by breaking down language into "tokens" and then predicting which token is most likely to follow the other. Tokens can be a single character, word, or part of a phrase, and each one gets a percentage score for how likely it is to be the appropriate next word in a sentence. The higher the percentage, the more likely the model is going to use it. SynthID introduces additional information at the point of generation by changing the probability that tokens will be generated, explains Kohli. To detect the watermark and determine whether text has been generated by an AI tool, SynthID compares the expected probability scores for words in watermarked and unwatermarked text. Google DeepMind found that using the SynthID watermark did not compromise the quality, accuracy, creativity, or speed of generated text. That conclusion was drawn from a massive live experiment of SynthID's performance after the watermark was deployed in its Gemini products and used by millions of people. Gemini allows users to rank the quality of the AI model's responses with a thumbs-up or a thumbs-down. Kohli and his team analyzed the scores for around 20 million watermarked and unwatermarked chatbot responses. They found that users did not notice a difference in quality and usefulness between the two. The results of this experiment are detailed in a paper published in Nature today. Currently SynthID for text only works on content generated by Google's models, but the hope is that open-sourcing it will expand the range of tools it's compatible with. SynthID does have other limitations. The watermark was resistant to some tampering, such as cropping text and light editing or rewriting, but it was less reliable when AI-generated text had been rewritten or translated from one language into another. It is also less reliable in responses to prompts asking for factual information, such as the capital city of France. 
This is because there are fewer opportunities to adjust the likelihood of the next possible word in a sentence without changing facts.
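
The idea of "changing the probability that tokens will be generated" can be illustrated with a toy Python sketch. It is not SynthID's actual algorithm: it swaps in a simpler keyed logit-bias scheme, and the names `favoured_tokens`, `watermarked_probs`, and `max_shift`, the example logits, and the `delta` bias are all hypothetical. It also suggests why near-deterministic factual answers leave little room for a watermark.

```python
import hashlib
import math


def softmax(logits):
    """Turn a {token: logit} dict into a {token: probability} dict."""
    peak = max(logits.values())
    exps = {tok: math.exp(val - peak) for tok, val in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def favoured_tokens(key, prev_token, vocab):
    """Hypothetical keyed rule: a hash of (key, previous token, candidate)
    pseudo-randomly marks roughly half of the vocabulary as favoured."""
    chosen = set()
    for tok in vocab:
        digest = hashlib.sha256(f"{key}|{prev_token}|{tok}".encode()).digest()
        if digest[0] % 2 == 0:
            chosen.add(tok)
    return chosen


def watermarked_probs(logits, favoured, delta=2.0):
    """Add a small bias (delta, an assumed value) to favoured tokens' logits."""
    biased = {tok: val + (delta if tok in favoured else 0.0)
              for tok, val in logits.items()}
    return softmax(biased)


def max_shift(logits, favoured, delta=2.0):
    """Largest change in any token's probability caused by the bias."""
    before = softmax(logits)
    after = watermarked_probs(logits, favoured, delta)
    return max(abs(after[tok] - before[tok]) for tok in logits)


# Open-ended prompt: several continuations are about equally likely.
open_ended = {"tired": 1.0, "weary": 1.0, "sleepy": 1.0, "drained": 1.0}
# Factual prompt: one continuation dominates.
factual = {"Paris": 20.0, "Lyon": 0.0, "Nice": 0.0}

print(max_shift(open_ended, {"tired", "weary"}))  # sizeable shift
print(max_shift(factual, {"Lyon", "Nice"}))       # almost no shift
```

In the open-ended case the bias moves probabilities by a large margin, so a detector has a signal to look for. In the factual case the dominant token keeps nearly all of the probability mass, so the watermark has almost nothing to adjust.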

[13]

Google Open-Sources AI Detection, Invisible Watermarking Tool

Google has made its watermarking technology for AI-generated text, called SynthID Text, generally available through its updated Responsible Generative AI Toolkit and Hugging Face, a repository of open-source AI tools. Developers can now use SynthID Text to determine whether text has come from their own large language models with the goal of making it easier to build AI responsibly, said Pushmeet Kohli, Google DeepMind's vice president of research. SynthID detects AI text by observing a series of words. LLMs use tokens to process information and generate output. These tokens can be a single character, word, or phrase, and LLMs can predict which token will most likely follow another, one at a time. The tool will assign each token a score based on its probability of appearing in the output for a prompt. It will also "embed imperceptible watermarks" directly into the text during token distribution. When a text output is verified, SynthID compares the expected pattern of scores for watermarked and unmarked text and determines whether an AI tool generated the text or whether it came from another source. It does have limitations, though. The tech requires at least three sentences for detection, and its robustness and accuracy increase with longer text. It's also less effective on factual text and AI-generated text that's been thoroughly rewritten or translated. "SynthID Text is not designed to directly stop motivated adversaries from causing harm," Google says. "However, it can make it harder to use AI-generated content for malicious purposes, and it can be combined with other approaches to give better coverage across content types and platforms." SynthID Text is part of a larger family of tools Google has created to detect AI-generated outputs. Last year, the company released a similar tool to watermark AI images. Google's AI-text detection tool comes at a time when AI-powered misinformation is on the rise -- as well as false-positive detections. 
About two-thirds of teachers reportedly use AI detection tools for student assignments and essays, and students using English as their second language have been victims of false detection.

[14]

Google DeepMind's AI watermarking tool is ready to take on deepfakes

Key Takeaways: SynthID helps identify content generated by Google Gemini and potentially other AI models. The tool subtly alters predictions to watermark text based on minute, measurable differences between predicted and actual output. DeepMind's open-sourcing of SynthID encourages industry-wide adoption, promoting AI content identification. Content misattributed to human writers has been on the rise since powerful, modern LLMs rendered the Turing Test obsolete. On top of similar tools for identifying AI-generated images, music, and video, Google's DeepMind AI research subsidiary just released the beta version of SynthID, a method of watermarking and identifying text created using the Gemini model. Even better, it's open-sourced the tool, so other AI companies can utilize it to keep track of what their models create. Keeping track of the robots, one imperceptible signature at a time: DeepMind's previously implemented techniques embed watermarks in images, video, and audio that are undetectable by human eyes and ears. Researchers developed something a little different that allows SynthID to sign LLM-generated text. It works by altering the model's probabilistic output, or very slightly changing which words it's likely to predict work best in a given text passage. Based on the difference between the LLM's predicted word output and what the modified algorithm produces, the tool can reliably identify if the content was written by Google Gemini. Of course, it can't alter the content too much, or the model's raw language abilities suffer. To make sure SynthID didn't go too far, researchers put it through a massive test. They pushed roughly 20 million Gemini-generated passages to users, some with and some without watermarks. 
The results indicated that users found watermarked and unaffected text equally accurate and useful, or in essence, indistinguishable. It also didn't affect the LLM's speed in any noticeable way. Pushing for industry-wide support: DeepMind isn't stopping at labeling only Google Gemini output. SynthID watermarking and detection have already been open-sourced and offered to developers of other AI models, to encourage its adaptation for use with today's many competing LLMs. Like every AI detection tool (and many encryption-breaking techniques, for that matter), SynthID could give unscrupulous AI developers another means of practicing how to obfuscate a text's LLM origins. Because DeepMind is a subsidiary of the world leader in data collection (that is, Google), its involvement means there are significant resources in play behind ensuring AI content is readily identifiable as such.

[15]

Google Is Open-Sourcing This Invisible AI Watermarking Technology

By open-sourcing the technology, Google aims for wider adoption of the tech. Google DeepMind open-sourced a new technology to watermark AI-generated text on Wednesday. Dubbed SynthID, the artificial intelligence (AI) watermarking tool can be used across different modalities including text, images, videos, and audio. However, currently, it is only offering the text watermarking tool to businesses and developers. The company aims for a wider adoption of the tool so that AI-generated content can be easily detected. Individuals and enterprises can access the tool via the Mountain View-based tech giant's updated Responsible Generative AI Toolkit. In a post on X (formerly known as Twitter), the official handle of Google DeepMind announced making SynthID's text watermarking capability freely available to developers and businesses. Apart from the Responsible GenAI Toolkit, it can also be downloaded from Google's Hugging Face listing. AI-generated text has already begun crowding the Internet. Amazon Web Services AI lab published a study earlier this year which claimed that as much as 57.1 percent of all sentences online that have been translated into two or more languages might be generated using AI tools. While AI chatbots filling up the Internet with gibberish AI-generated text might appear to be a case of harmless spamming, there is a darker side to it. In the hands of bad actors, AI tools can be used to mass-generate misinformation or misleading content. With a significant portion of social discourse occurring online, such actions could impact real-life events such as elections and be used to create propaganda against public figures. Out of all modalities, gauging AI-generated text has proven to be the most difficult task so far. This is largely because watermarking the words is not possible, and even if it were, bad actors could always rephrase the content using a second output cycle. However, Google DeepMind's SynthID uses a novel way to watermark AI-generated text. 
The tool uses machine learning to predict the words that could appear after a specific word in a sentence. For instance, consider the sentence "John was feeling extremely tired after working the entire day." Here, only a limited number of words can appear after the word "extremely". Based on analysis of content generation styles of various AI models, SynthID can predict the word that will appear after "extremely" and replace it with another synonym which exists in its database. The watermarking tool will embed such words throughout the entire content piece. Later, when the tool checks for AI-generated content, it looks for the number of such words to determine its authenticity. Notably, for images and videos, SynthID adds a watermark directly into the pixels of the frames so they remain invisible but can still be detected by the tool. For audio, the audio waves are first converted into a spectrogram, and the watermark is added to that visual data. These capabilities are currently not available to anyone outside of Google.

[16]

Google offers its AI watermarking tech as free, open source toolkit

In addition to watermarking AI-generated images, Google's open source SynthID works on text, audio, and video. Back in May, Google augmented its Gemini AI model with SynthID, a toolkit that embeds AI-generated content with watermarks it says are "imperceptible to humans" but can be easily and reliably detected via an algorithm. Today, Google took that SynthID system open source, offering the same basic watermarking toolkit for free to developers and businesses. The move gives the entire AI industry an easy, seemingly robust way to silently mark content as artificially generated, which could be useful for detecting deepfakes and other damaging AI content before it goes out in the wild. But there are still some important limitations that may prevent AI watermarking from becoming a de facto standard across the AI industry any time soon. Google uses a version of SynthID to watermark audio, video, and images generated by its multimodal AI systems, with differing techniques that are explained briefly in this video. But in a new paper published in Nature, Google researchers go into detail on how the SynthID process embeds an unseen watermark in the text-based output of its Gemini model. The core of the text watermarking process is a sampling algorithm inserted into an LLM's usual token-generation loop (the loop picks the next word in a sequence based on the model's complex set of weighted links to the words that came before it). Using a random seed generated from a key provided by Google, that sampling algorithm increases the correlational likelihood that certain tokens will be chosen in the generative process. A scoring function can then measure that average correlation across any text to determine the likelihood that the text was generated by the watermarked LLM (a threshold value can be used to give a binary yes/no answer). 
This probabilistic scoring system makes SynthID's text-based watermarks somewhat resistant to light editing or cropping of text since the same likelihood of watermarked tokens will likely persist across the untouched portion of the text. While watermarks can be detected in responses as short as three sentences, the process "works best with longer texts," Google acknowledges in the paper, since having more words to score provides "more statistical certainty when making a decision."
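
The scoring step described above can be sketched in Python. This is a simplified stand-in rather than Google's scoring function: `g_value`, the key string, the context length, and the z-score threshold are hypothetical choices. It assumes each token gets a keyed pseudo-random 0/1 value, that unwatermarked text averages about 0.5, and that watermarked generation pushes the average higher.

```python
import hashlib
import math


def g_value(key, context, token):
    """Hypothetical keyed pseudo-random bit for a token in its context."""
    data = f"{key}|{' '.join(context)}|{token}".encode()
    return hashlib.sha256(data).digest()[0] & 1


def g_values(tokens, key, context_len=4):
    """One bit per token, seeded by the key and the preceding tokens."""
    return [g_value(key, tokens[max(0, i - context_len):i], tokens[i])
            for i in range(len(tokens))]


def z_score(bits):
    """How far the mean bit lies above 0.5, in standard errors.
    Under the no-watermark assumption each bit is a fair coin flip,
    so the mean has standard error 0.5 / sqrt(n)."""
    n = len(bits)
    mean = sum(bits) / n
    return (mean - 0.5) / (0.5 / math.sqrt(n))


def looks_watermarked(tokens, key, threshold=4.0):
    """Binary yes/no answer from an assumed threshold on the z-score."""
    return z_score(g_values(tokens, key)) > threshold


# The same 75% rate of favoured tokens is weak evidence in a short text
# and much stronger evidence in a longer one.
short_text = [1] * 12 + [0] * 4    # 16 tokens
long_text = [1] * 48 + [0] * 16    # 64 tokens
print(z_score(short_text), z_score(long_text))
```

With the per-token rate held at 75%, the z-score rises from 2.0 at 16 tokens to 4.0 at 64 tokens, which is one way to read the remark that more words give "more statistical certainty when making a decision."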

[17]

Google Open-Sources SynthID to Watermark AI-Generated Text

However, SynthID can currently detect output only from Google's own AI models. Last year, Google DeepMind announced SynthID, a watermarking technology that can watermark and detect AI-generated content. Now, Google has open-sourced SynthID Text via the Google Responsible Generative AI Toolkit for developers and businesses. Google's open-sourced tool currently works for text only. With the abundance of AI models at our disposal, it's getting increasingly hard to tell what's real and what's not. As a result, it's high time that advanced watermarking tools like Google's SynthID are open-sourced and used by AI companies. Earlier this month, we reported that Google Photos may detect AI-generated photos using a Credit tag attribute. SynthID being open-sourced could only suggest more such applications will gain the ability to detect AI-generated text and other content. Pushmeet Kohli, the VP of Research at Google DeepMind, tells MIT Technology Review, "Now, other [generative] AI developers will be able to use this technology to help them detect whether text outputs have come from their own [large language models], making it easier for more developers to build AI responsibly." For those unaware of how SynthID works, I'll try to make it easier for you to understand. To give you an analogy, remember those magical invisible pens where the writing could only be seen under UV light? Well, consider SynthID to be this light source that can see those invisible marks or watermarks on AI-generated images, videos, and text. LLMs while generating texts look at the possible tokens and give each one a score. The score represents the probability of the token being chosen. During this process, SynthID adds extra information by "modulating the likelihood of tokens being generated." However, the biggest limitation for SynthID right now is that it can only detect content generated by Google's proprietary AI models. 
Additionally, SynthID also starts to falter when someone heavily alters or rewrites AI-generated text, such as by translating it into a different language altogether. Soheil Feizi, an associate professor at the University of Maryland who has researched this topic, tells MIT Technology Review, "Achieving reliable and imperceptible watermarking of AI-generated text is fundamentally challenging, especially in scenarios where LLM outputs are near deterministic, such as factual questions or code generation tasks." From the sound of it, we still have a long way to go before reliably detecting AI-generated content. Still, Google open-sourcing SynthID is a great step towards AI transparency. The tool can be an absolute game-changer in standardizing the detection of AI-generated content. Most importantly, Google states that SynthID doesn't interfere with the "quality, accuracy, creativity or speed of the text generation."

[18]

With This 1 Move, Google May Have Solved a Huge AI Content Problem

Google did something extra interesting: it made the watermarking tool open source, so other developers and businesses can build it into their own systems. Considering that AI tech developers are frequently accused of violating other people's intellectual property rights, Google effectively giving away its new IP is an interesting wrinkle. The Institute of Electrical and Electronics Engineers Spectrum news site, reporting on the new system, which is called SynthID-Text, notes it doesn't harm any of the "quality, accuracy, creativity, or speed" of text generation by AIs like Gemini, according to Pushmeet Kohli, vice president of research at Google DeepMind. The site explains that the tool subtly alters some of the words a chatbot outputs in a way that no human user could spot, but which is detectable by SynthID. It is, however, far from foolproof, and though Google's made the tool open source so other people can integrate the watermarks into their own AI systems, Google's keeping half the system for itself, for now: only Google has access to the tool that can detect AI watermarks applied by SynthID. Why should you care about this? It sounds genuinely useful for businesses and individual creators, and it's interesting that it's open source, but skeptics may question Google's motives. The tech giant is no stranger to accusations (and court rulings) that it's a monopolist -- enough to trigger legal consideration of a Google breakup -- and by open-sourcing the new watermarking tool it could be considered to be exerting a form of "soft control" over the entire AI scene.

[19]

Google just open-sourced its AI text detection tool for everyone

SynthID can integrate "watermarks" into AI-generated text and detect them in systems that use the same LLM setup. "PCWorld is on a journey to delve into information that resonates with readers and creates a multifaceted tapestry to convey a landscape of profound enrichment." That drivel may sound like it was AI-generated, but it was, in fact, written by a fleshy human -- yours truly. Truth is, it's hard to know whether a particular chunk of text is AI-generated or actually written by a human. Google is hoping to make it easier to spot by open-sourcing its new software tool. Google calls it SynthID, a method that "watermarks and identifies AI-generated content." Previously limited to Google's own language and image generation systems, the company has announced that SynthID is being released as open-source code that can be applied to other AI text generation setups as well. (If you're more comp-sci literate than me, you can check out all the details in the prestigious Nature journal.) But in layman's terms -- at least to the degree that this layman can actually understand them -- SynthID hides specific patterns in images and text that are generally too subtle for humans to spot, with a scheme to detect them when tested. SynthID can "encode a watermark into AI-generated text in a way that helps you determine if text was generated from your LLM without affecting how the underlying LLM works or negatively impacting generation quality," according to a post on the open-source machine learning database Hugging Face. The good news is that Google says these watermarks can be integrated with pretty much any AI text generation tool. The bad news is that actually detecting the watermarks still isn't something that can be nailed down. 
While SynthID watermarks can survive some of the basic tricks used to get around auto-detection -- like "don't call it plagiarism" word-swapping -- it can only indicate the presence of watermarks with varying degrees of certainty, and that certainty goes way down when applied to "factual responses," some of the most important and problematic uses of generative text, and when big batches of text go through automatic translation or other re-writing. "SynthID text is not designed to directly stop motivated adversaries from causing harm," says Google. (And frankly, I think even if Google had made a panacea against LLM-generated misinformation, it would be hesitant to frame it as such for liability reasons.) It also requires the watermark system to be integrated into the text generation tool before it's actually used, so there's nothing stopping someone from simply choosing not to do that, as malicious state actors or even more explicitly "free" tools like xAI's Grok are wont to do. And I should point out that Google isn't exactly being gregarious here. While the company is pushing its own AI tools on both consumers and businesses, its core Search product is in danger from a web that seems to be rapidly filling up with auto-generated text and images. Google's competition, like OpenAI, might elect not to use these kinds of tools simply as a matter of doing business, hoping to create a standard of their own to drive the marketplace towards their own products.

[20]

AI watermarking must be watertight to be effective

Scientists are closing in on a tool that can reliably identify AI-generated text without affecting the user's experience. But the technology's robustness remains a challenge. Seldom has a tool or technology erupted so suddenly from the research world and into the public consciousness -- and widespread use -- as generative artificial intelligence (AI). The ability of large language models (LLMs) to create text and images almost indistinguishable from those created by humans is disrupting, if not revolutionizing, countless fields of human activity. Yet the potential for misuse is already manifest, from academic plagiarism to the mass generation of misinformation. The fear is that AI is developing so rapidly that, without guard rails, it could soon be too late to ensure accuracy and reduce harm. This week, Sumanth Dathathri at DeepMind, Google's AI research lab in London, and his colleagues report their test of a new approach to 'watermarking' AI-generated text by embedding a 'statistical signature', a form of digital identifier, that can be used to certify the text's origin. The word watermark comes from the era of paper and print, and describes a variation in paper thickness, not usually immediately obvious to the naked eye, that does not change the printed text. A watermark in digitally generated text or images should be similarly invisible to the user -- but immediately evident to specialized software. Dathathri and his colleagues' work represents an important milestone for digital-text watermarking. But there is still some way to go before companies and regulators will be able to confidently state whether a piece of text is the product of a human or a machine. Given the imperatives to reduce harm from AI, more researchers need to step up to ensure that watermarking technology fulfils its promise. The authors' approach to watermarking LLM outputs is not new. A version of it is also being tested by OpenAI, the company in San Francisco, California, behind ChatGPT. 
But there is limited literature on how the technology works and its strengths and limitations. One of the most important contributions came in 2022, when Scott Aaronson, a computer scientist at the University of Texas at Austin, described, in a much-discussed talk, how watermarking can be achieved. Others have also made valuable contributions -- among them John Kirchenbauer and his colleagues at the University of Maryland in College Park, who published a watermark-detection algorithm last year. The DeepMind team has gone further, demonstrating that watermarking can be achieved on a large scale. The researchers incorporated a technology that they call SynthID-Text into Google's AI-powered chatbot, Gemini. In a live experiment involving nearly 20 million Gemini users who put queries to the chatbot, people didn't notice a diminution in quality in watermarked responses compared with non-watermarked responses. This is important, because users are unlikely to accept watermarked content if they see it as inferior to non-watermarked text. However, it is still comparatively easy for a determined individual to remove a watermark and make AI-generated text look as if it was written by a person. This is because the watermarking process used in DeepMind's experiment works by subtly altering the way in which an LLM statistically selects its 'tokens' -- how, in the face of a given user prompt, it draws from its huge training set of billions of words from articles, books and other sources to string together a plausible-sounding response. This alteration can be spotted by an analysing algorithm. But there are ways in which the signal can be removed -- by paraphrasing or translating the LLM's output, for example, or asking another LLM to rewrite it. And a watermark once removed is not really a watermark. Getting watermarking right matters because authorities are limbering up to regulate AI in a way that limits the harm it could cause. Watermarking is seen as a linchpin technology. 
Last October, US President Joe Biden instructed the National Institute of Standards and Technology (NIST), based in Gaithersburg, Maryland, to set rigorous safety-testing standards for AI systems before they are released for public use. NIST is seeking public comments on its plans to reduce the risks of harm from AI, including the use of watermarking, which it says will need to be robust. There is no firm date yet on when plans will be finalized. In contrast to the United States, the European Union has adopted a legislative approach, with the passage in March of the EU Artificial Intelligence Act, and the establishment of an AI Office to enforce it. China's government has already introduced mandatory watermarking, and the state of California is looking to do the same. However, even if the technical hurdles can be overcome, watermarking will only be truly useful if it is acceptable to companies and users. Although regulation is likely, to some extent, to force companies to take action in the next few years, whether users will trust watermarking and similar technologies is another matter. There is an urgent need for improved technological capabilities to combat the misuse of generative AI, and a need to understand the way people interact with these tools -- how malicious actors use AI, whether users trust watermarking and what a trustworthy information environment looks like in the realm of generative AI. These are all questions that researchers need to study. In a welcome move, DeepMind has made the model and underlying code for SynthID-Text free for anyone to use. The work is an important step forwards, but the technique itself is in its infancy. We need it to grow up fast.

[21]

DeepMind and Hugging Face release SynthID to watermark LLM-generated text

Google DeepMind and Hugging Face have just released SynthID Text, a tool for marking and detecting text generated by large language models (LLMs). SynthID Text encodes a watermark into AI-generated text in a way that helps determine if a specific LLM produced it. More importantly, it does so without modifying how the underlying LLM works or reducing the quality of the generated text. The technique behind SynthID Text was developed by researchers at DeepMind and presented in a paper published in Nature on Oct. 23. An implementation of SynthID Text has been added to Hugging Face's Transformers library, which is used to create LLM-based applications. It is worth noting that SynthID is not meant to detect any text generated by an LLM. It is designed to watermark the output for a specific LLM. Using SynthID does not require retraining the underlying LLM. It uses a set of parameters that can configure the balance between watermarking strength and response preservation. An enterprise that uses LLMs can have different watermarking configurations for different models. These configurations should be stored securely and privately to avoid being replicated by others. For each watermarking configuration, you must train a classifier model that takes in a text sequence and determines whether it contains the model's watermark or not. Watermark detectors can be trained with a few thousand examples of normal text and responses that have been watermarked with the specified configuration. How SynthID Text works: Watermarking is an active area of research, especially with the rise and adoption of LLMs in different fields and applications. Companies and institutions are looking for ways to detect AI-generated text to prevent mass misinformation campaigns, moderate AI-generated content, and prevent the use of AI tools in education. 
Various techniques exist for watermarking LLM-generated text, each with limitations. Some require collecting and storing sensitive information, while others require computationally expensive processing after the model generates its response. SynthID uses "generative watermarking," a class of watermarking techniques that do not affect LLM training and only modify the sampling procedure of the model. Generative watermarking techniques modify the next-token generation procedure to make subtle, context-specific changes to the generated text. These modifications create a statistical signature in the generated text while maintaining its quality. A classifier model is then trained to detect the statistical signature of the watermark to determine whether a response was generated by the model or not. A key benefit of this technique is that detecting the watermark is computationally efficient and does not require access to the underlying LLM. SynthID Text builds on previous work on generative watermarking and uses a novel sampling algorithm called "Tournament sampling," which uses a multi-stage process to choose the next token when creating watermarks. The watermarking technique uses a pseudo-random function to augment the generation process of any LLM such that the watermark is imperceptible to humans but is visible to a trained classifier model. The integration into the Hugging Face library will make it easy for developers to add watermarking capabilities to existing applications. To demonstrate the feasibility of watermarking in large-scale production systems, DeepMind researchers conducted a live experiment that assessed feedback from nearly 20 million responses generated by Gemini models. Their findings show that SynthID was able to preserve response qualities while also remaining detectable by their classifiers. According to DeepMind, SynthID-Text has been used to watermark Gemini and Gemini Advanced. 
"This serves as practical proof that generative text watermarking can be successfully implemented and scaled to real-world production systems, serving millions of users and playing an integral role in the identification and management of artificial-intelligence-generated content," they write in their paper. Limitations: According to the researchers, SynthID Text is robust to some post-generation transformations such as cropping pieces of text or modifying a few words in the generated text. It is also resilient to paraphrasing to some degree. However, the technique also has a few limitations. For example, it is less effective on queries that require factual responses, which leave little room for modification without reducing accuracy. They also warn that the quality of the watermark detector can drop considerably when the text is rewritten thoroughly. "SynthID Text is not built to directly stop motivated adversaries from causing harm," they write. "However, it can make it harder to use AI-generated content for malicious purposes, and it can be combined with other approaches to give better coverage across content types and platforms."

[22]

Sampling algorithm can 'watermark' AI-generated text to show where it came from

A tool that can watermark text generated by large language models, improving the ability for it to identify and trace synthetic content, is described in Nature this week. Large language models (LLMs) are widely used artificial intelligence (AI) tools that can generate text for chatbots, writing support and other purposes. However, it can be difficult to identify and attribute AI-generated text to a specific source, putting the reliability of the information into question. Watermarks have been proposed as a solution to this problem, but have not been deployed at scale because of stringent quality and computational efficiency requirements in production systems. Sumanth Dathathri, Pushmeet Kohli and colleagues have developed a scheme that uses a novel sampling algorithm to apply watermarks to AI-generated text, known as SynthID-Text. The tool uses a sampling algorithm to subtly bias the word choice of the LLM, inserting a signature that can be recognized by the associated detection software. This can either be done via a "distortionary" pathway, which improves the watermark at a slight cost of output quality, or a "non-distortionary" pathway, which preserves text quality. The detectability of these watermarks was evaluated across several publicly available models, with SynthID-Text showing improved detectability compared to existing approaches. The quality of the text was also assessed using nearly 20 million responses from live chat interactions using the Gemini LLM, with results suggesting that the non-distortionary mode of watermarking did not decrease the text quality. Finally, the use of SynthID-Text has a negligible impact on the computational power needed to run the LLM, reducing the barrier to implementation. The authors caution that text watermarks can be circumvented by editing or paraphrasing the output. 
However, this work shows the viability of a tool that can produce generative text watermarks for AI-generated content, in a further step to improving the accountability and transparency of responsible LLM use.
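
Item [21] above names the sampling algorithm "Tournament sampling" and describes it as a multi-stage process driven by a pseudo-random function. A minimal Python sketch of that idea follows, under stated assumptions: several candidate tokens are drawn from the model's own distribution and then knocked out in pairs, with each match decided by a keyed pseudo-random bit. The function names, the hash-based `g_value`, the key string, and the three-layer default are hypothetical and are not taken from Google's released code.

```python
import hashlib
import random


def g_value(key, context, token, layer):
    """Hypothetical keyed pseudo-random bit for (context, token, layer)."""
    data = f"{key}|{'|'.join(context)}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1


def tournament_sample(probs, key, context, layers=3, rng=random):
    """Pick the next token with a knockout tournament.

    probs   -- {token: probability} from the language model
    layers  -- number of rounds; 2 ** layers candidates enter
    rng     -- source of randomness (the random module or a random.Random)
    """
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    # Candidates are ordinary samples from the model (repeats allowed).
    candidates = rng.choices(tokens, weights=weights, k=2 ** layers)
    for layer in range(layers):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga = g_value(key, context, a, layer)
            gb = g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice([a, b]))  # tie: pick at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


probs = {"tired": 0.5, "weary": 0.3, "sleepy": 0.2}
print(tournament_sample(probs, "demo-key", ("feeling", "extremely")))
```

Because every candidate is first drawn from the model's distribution, a token the model considers impossible can never win. The winner tends to carry higher keyed bits than an ordinary sample would, and that tendency is the kind of signature a detector holding the same key could measure.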

[23]

Don't be fooled: Google SynthID watermarks can tag deepfakes

It was April of 2018, and a day like any other until the first text arrived asking "Have you seen this yet?!" with a link to YouTube. Seconds later, former President Barack Obama was on screen delivering a speech in which he proclaimed President Donald Trump "is a total and complete [expletive]." Except, it wasn't actually former President Obama. It was a deepfake video produced by Jordan Peele and Buzzfeed. The video, in its entirety, was to raise awareness of the AI capabilities we had at the time to create talking heads with voiceovers. In this case, Peele used Barack Obama to deliver a message to be cautious, wary, and stick to using trusted news sources. Since then, we've made countless strides in AI technology. Literally leaps and bounds from the technology Peele used to create his Obama deepfake. GPT-2 was introduced to the public in 2019, which could be used for text generation with a simple prompt. 2021 saw the release of DALL-E, an AI image generation tool capable of fooling even some of the keenest eyes with some of its photorealistic imagery. 2022 saw even more improvements in its capabilities with DALL-E 2. MidJourney was also released that year. Both take text inputs for subject, situation, action and style to output unique artwork, including photo-realistic images. In 2024, generative AI has gone absolutely bananas. Meta's Make-A-Video allows users to generate five-second-long videos from text descriptions alone, and Meta's new Movie Gen has taken AI video generation to new heights. OpenAI Sora, Google Veo, Runway ML, HeyGen... With text prompts, we can now generate anything our wildest imaginations can think of. Perhaps even more, since AI-generated videos sometimes run amok with our inputs, leading to some pretty fascinating and psychedelic visuals. 
Synthesia and DeepBrain are two more AI video platforms specifically designed to deliver human-like content using AI-generated avatars, much like newscasters delivering the latest news on your favorite local channel. Speaking of which, your entire local channel might soon be AI-generated, like the remarkable Channel One. And there are so many more. What's real and what's fake? Who can tell the difference? Certainly not your aunt on Facebook who keeps sharing those ridiculous images. The concepts of truth, reality and veracity are under attack, with repercussions that reverberate far beyond the screen. So, in order to give humanity some chance against the incoming tsunami of lies, Google DeepMind has developed a technology to watermark and identify AI-generated media. It's called SynthID. SynthID can separate authentic content from AI-generated content by digitally watermarking AI content in a way that humans can't perceive but that software looking for the watermarks can easily recognize. This isn't just for video, but also images, audio, and even text. It does so, says DeepMind, without compromising the integrity of the original content. Large Language Models (LLMs) like ChatGPT use "tokens" for reading input and generating output. Tokens are basically whole words or phrases, or parts of them. If you've ever used an LLM, you've likely noticed that it tends to repeat certain words or phrases in its responses. Patterns are common with LLMs. The way SynthID watermarks AI-generated text is pretty tricky, but put simply, it subtly manipulates the probabilities of different tokens throughout the text. It might tweak ten probabilities in a sentence, or hundreds on a whole page, leaving what DeepMind calls a "statistical signature" in the generated text. The text still comes out perfectly readable to humans, and unless you've got pattern recognition skills bordering on the paranormal, you'd have no way to tell.
But a SynthID watermark detector can tell, with greater accuracy as the text gets longer, and since there are no specific character patterns involved, the digital watermark should be fairly robust against a degree of text editing as well. Multimedia content should be considerably easier, since all sorts of information can be coded into unseen, unheard artifacts in the files. With audio, SynthID creates a spectrogram of the file and drops in a watermark that's imperceptible to the human ear, before converting it back to a waveform. Photo and video simply have the watermarks embedded into pixels in the image in a non-destructive way. The watermark is still detectable even if the image or video has been altered with filters or cropping. Google has open-sourced SynthID's text watermarking tool and is encouraging companies building generative AI tools to use it. There's more at stake here than just people being fooled by AI fakes. The big companies themselves need to make sure AI-generated content can be distinguished from human-generated content for a different reason: so that the AI models of tomorrow are trained on 'real' human-generated content rather than AI-generated BS. If AI models are forced to eat too much of their own excrement, all the 'hallucinations' prevalent in today's early models will become part of the new models' understanding of ground truth. Google definitely has a vested interest in making sure the next Gemini model is trained on the best possible data. At the end of the day, though, schemes like SynthID are very much opt-in. Companies that opt out, and whose GenAI text, images, videos and audio are that much harder to detect, will have a compelling sales pitch for anyone who really wants to twist the truth or fool people, from election interference types to kids who can't be bothered writing their own assignments.
Perhaps countries could legislate to make these watermarking technologies mandatory, but then there will certainly be countries that elect not to, and shady operations that build their own AI models to get around any such restrictions. But it's a start - and while you or I may still initially be fooled by Taylor Swift videos on TikTok giving away pots and pans, with SynthID technology, we'll be able to check their authenticity before sending in our $9.99 shipping fee.
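
To illustrate why a detector gets more reliable as the text gets longer, here is a minimal sketch in Python. It assumes, purely for illustration, that every token carries a score that behaves like a fair coin flip in unwatermarked text (average 0.5, standard deviation 0.5) and that watermarking nudges the average slightly upward. The function name `detection_z_score` and all of the numbers are hypothetical and are not taken from SynthID itself.

```python
import math


def detection_z_score(mean_score: float, num_tokens: int,
                      null_mean: float = 0.5, null_std: float = 0.5) -> float:
    """How many standard errors the observed mean sits above the
    mean expected for unwatermarked text (assumed: fair coin flips)."""
    # The standard error of an average shrinks with the square root of the sample size.
    standard_error = null_std / math.sqrt(num_tokens)
    return (mean_score - null_mean) / standard_error


if __name__ == "__main__":
    # Same small nudge (0.55 instead of 0.5), increasingly long texts.
    for n in (25, 400, 10_000):
        print(n, round(detection_z_score(0.55, n), 2))
```

With the same small nudge, the z-score is 0.5 at 25 tokens, 2.0 at 400 tokens and 10.0 at 10,000 tokens, so a signal that is invisible in a short passage becomes hard to miss in a long one.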

[24]

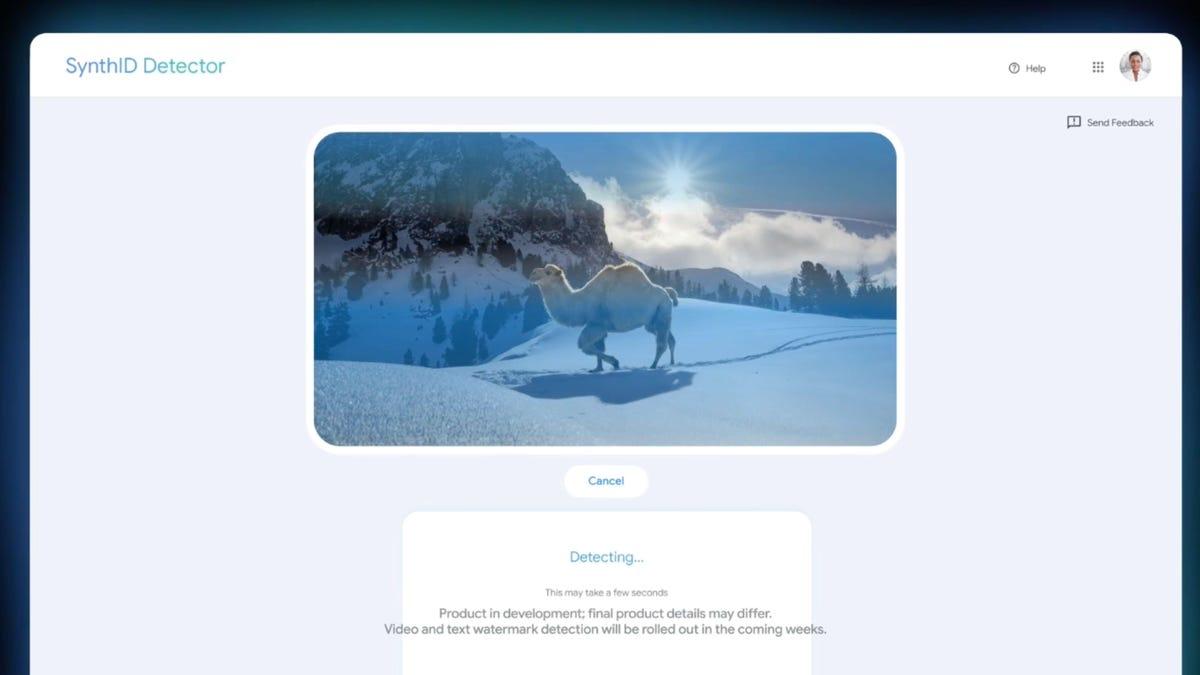

Google's AI detection tool can now be tried by anyone | Digital Trends

Google announced via a post on X (formerly Twitter) on Wednesday that SynthID is now available to anybody who wants to try it. The authentication system for AI-generated content embeds imperceptible watermarks into generated images, video, and text, enabling users to verify whether a piece of content was made by humans or machines. "We're open-sourcing our SynthID Text watermarking tool," the company wrote. "Available freely to developers and businesses, it will help them identify their AI-generated content." SynthID debuted in 2023 as a means to watermark AI-generated images, audio, and video. It was initially integrated into Imagen, and the company subsequently announced its incorporation into the Gemini chatbot this past May at I/O 2024. The system works by encoding tokens (the foundational chunks of data, be it a single character, word, or part of a phrase, that a generative AI uses to understand the prompt and predict the next word in its reply) with imperceptible watermarks during the text generation process. It does so, according to a DeepMind blog post from May, by "introducing additional information in the token distribution at the point of generation by modulating the likelihood of tokens being generated." By comparing the model's word choices along with its "adjusted probability scores" against the expected pattern of scores for watermarked and unwatermarked text, SynthID can detect whether an AI wrote that sentence. This process does not impact the response's accuracy, quality, or speed, according to a study published in Nature on Wednesday, nor can it be easily bypassed. Unlike standard metadata, which can be easily stripped and erased, SynthID's watermark reportedly remains even if the content has been cropped, edited, or otherwise modified.
"Achieving reliable and imperceptible watermarking of AI-generated text is fundamentally challenging, especially in scenarios where [large language model] outputs are near deterministic, such as factual questions or code generation tasks," Soheil Feizi, an associate professor at the University of Maryland, told MIT Technology Review, noting that its open-source nature "allows the community to test these detectors and evaluate their robustness in different settings, helping to better understand the limitations of these techniques." The system is not foolproof, however. While it is resistant to tampering, SynthID's watermarks can be removed if the text is run through a language translation app or if it's been heavily rewritten. It is also less effective with short passages of text and in determining whether a reply based on a factual statement was generated by AI. For example, there's only one right answer to the prompt, "what is the capital of France?" and both humans and AI will tell you that it's Paris. If you'd like to try SynthID yourself, it can be downloaded from Hugging Face as part of Google's updated Responsible GenAI Toolkit.

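The weakness on factual questions can be made concrete with a small worked example in Python. It assumes a deliberately simplified scheme that is not DeepMind's actual configuration: the model samples two candidate tokens, each token has a secret score that is a fair coin flip (0 or 1), and the higher-scoring candidate is kept. If both candidates are the same token there is nothing to choose between, so the watermark can only leave a trace when the model had real alternatives. The function name `expected_score_lift` and the probabilities are hypothetical.

```python
def expected_score_lift(probs: list[float]) -> float:
    """Expected rise above 0.5 in the chosen token's score for a toy
    two-candidate tournament with fair-coin scores (an assumption)."""
    # Chance that two independent samples from the model are the same token.
    collision = sum(p * p for p in probs)
    # When the candidates differ, the winner scores max(bit, bit), which
    # averages 0.75, i.e. 0.25 above the unwatermarked average of 0.5.
    return 0.25 * (1.0 - collision)


if __name__ == "__main__":
    factual = [0.99, 0.01]                 # "What is the capital of France?"
    open_ended = [0.25, 0.25, 0.25, 0.25]  # several equally good next words
    print("factual:", expected_score_lift(factual))
    print("open-ended:", expected_score_lift(open_ended))
```

Under these assumptions the near-deterministic answer shifts the average score by about 0.005, while the open-ended case shifts it by 0.1875, which is why a detector has far less to work with when there is only one right answer.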

Google's DeepMind researchers have developed SynthID Text, an innovative watermarking solution for AI-generated content. This technology, now open-sourced, aims to enhance transparency and detectability of AI-written text without compromising quality.

Google Introduces SynthID Text: A Game-Changer in AI Content Watermarking

In a significant development for the AI industry, researchers from Google's DeepMind have unveiled SynthID Text, an innovative watermarking solution for AI-generated content. This breakthrough, reported in Nature on October 23, 2024, marks a major step towards addressing concerns surrounding the proliferation of AI-written text [3].

How SynthID Text Works

SynthID Text operates by subtly altering the word selection process in AI-generated text. The system uses a cryptographic key to assign random scores to potential tokens (words or word parts) during the text generation process. These tokens then undergo a "tournament" of one-on-one knockouts, with the highest-scoring token being selected for use in the final text [3]. This process creates an invisible watermark that can be detected using the same cryptographic key, making it easier to identify AI-generated content without compromising the quality or readability of the text [3][4].
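
The tournament idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's implementation: the "model" is a uniform distribution over a made-up 50-word vocabulary, scores are single bits derived from a SHA-256 hash of the key, the previous four tokens, the candidate and the tournament round, and ties are broken at random. All names (`g_value`, `tournament_pick`, `generate`, `mean_score`) are hypothetical.

```python
import hashlib
import random


def g_value(key: str, context: tuple, token: str, layer: int) -> int:
    """Keyed pseudorandom bit (0 or 1) for a token in a given context and round."""
    payload = repr((key, context, token, layer)).encode()
    return hashlib.sha256(payload).digest()[0] & 1


def tournament_pick(probs: dict, key: str, context: tuple, layers: int, rng) -> str:
    """Sample 2**layers candidates from the model, then run knockout rounds."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    candidates = rng.choices(tokens, weights=weights, k=2 ** layers)
    for layer in range(layers):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga, gb = g_value(key, context, a, layer), g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: pick at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


def generate(key: str, length: int, layers: int = 3, seed: int = 0,
             watermark: bool = True) -> list:
    """Produce toy 'text' from a uniform model, with or without the watermark."""
    rng = random.Random(seed)
    vocab = [f"w{i}" for i in range(50)]
    probs = {t: 1 / len(vocab) for t in vocab}
    text = []
    for _ in range(length):
        context = tuple(text[-4:])  # the previous four tokens
        if watermark:
            token = tournament_pick(probs, key, context, layers, rng)
        else:
            token = rng.choices(vocab, weights=list(probs.values()), k=1)[0]
        text.append(token)
    return text


def mean_score(text: list, key: str, layers: int = 3) -> float:
    """Detector: average keyed score of the tokens actually present in the text."""
    total = 0
    for i, token in enumerate(text):
        context = tuple(text[max(0, i - 4):i])
        total += sum(g_value(key, context, token, layer) for layer in range(layers))
    return total / (len(text) * layers)


if __name__ == "__main__":
    marked = generate("secret-key", 200, watermark=True)
    plain = generate("secret-key", 200, watermark=False)
    print("watermarked, right key:", mean_score(marked, "secret-key"))
    print("unwatermarked:", mean_score(plain, "secret-key"))
    print("watermarked, wrong key:", mean_score(marked, "wrong-key"))
```

In this toy setup the watermarked sample should score well above 0.5 when checked with the right key, while unwatermarked text, or watermarked text checked with the wrong key, should stay close to 0.5. Because every candidate is still drawn from the model's own distribution, the tournament only chooses among words the model was already willing to say.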

Deployment and Accessibility

Google has already integrated SynthID Text into its Gemini large language model (LLM), with impressive results. In a massive trial involving 20 million responses, users rated watermarked texts as being of equal quality to unwatermarked ones [3]. The company has now made SynthID Text open-source, allowing developers and businesses to freely access and implement the technology. It is available for download from the AI platform Hugging Face and Google's updated Responsible GenAI Toolkit [4][5].

Implications and Potential Impact

The release of SynthID Text comes at a crucial time, as experts predict that AI could generate more than 50% of internet content by 2030 [2]. This technology has several potential applications:

- Combating misinformation and fake news

- Deterring academic cheating

- Preventing the degradation of future AI models by avoiding training on AI-generated content

- Ensuring proper attribution of AI-generated text [3][5]

Limitations and Challenges

While promising, SynthID Text is not without limitations:

- Less effective with short texts, translations, or responses to factual questions

- Vulnerable to determined removal attempts ("scrubbing") or false application ("spoofing")

- Reduced effectiveness when text is thoroughly rewritten [3][4][5]

Industry and Policy Implications

The introduction of SynthID Text raises important questions about the future of AI content detection:

- Will other AI developers adopt similar watermarking techniques?

- How will different watermarking systems interoperate?

- Will legal frameworks eventually mandate the use of such technologies? [5]

As AI-generated content becomes increasingly prevalent, the race to develop effective watermarking solutions intensifies. Google's open-sourcing of SynthID Text represents a significant step towards creating a more transparent and accountable AI ecosystem.

References

Summarized by Navi

[1]

[2]

[4]
