Grok generated 3 million sexualized images in 11 days, exposing massive AI safety failures

16 Sources

[1]

Under Musk, the Grok disaster was inevitable

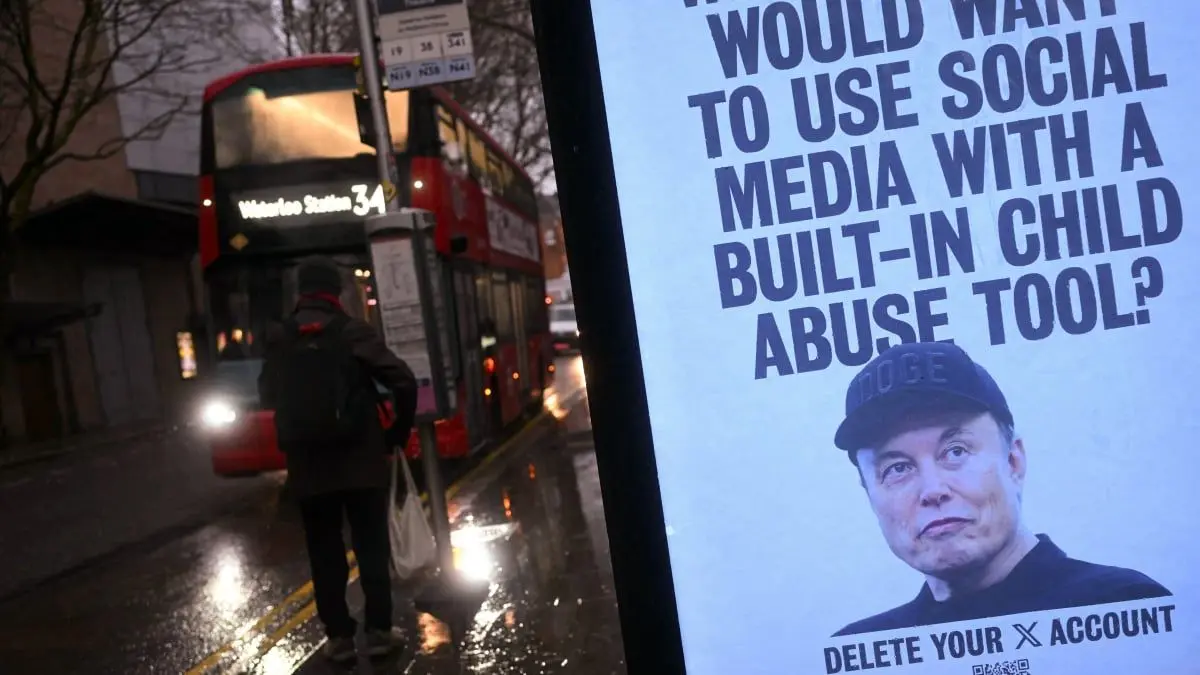

This is The Stepback, a weekly newsletter breaking down one essential story from the tech world. For more on dystopian developments in AI, follow Hayden Field. The Stepback arrives in our subscribers' inboxes at 8AM ET. Opt in for The Stepback here. You could say it all started with Elon Musk's AI FOMO -- and his crusade against "wokeness." When his AI company, xAI, announced Grok in November 2023, it was described as a chatbot with "a rebellious streak" and the ability to "answer spicy questions that are rejected by most other AI systems." The chatbot debuted after a few months of development and just two months of training, and the announcement highlighted that Grok would have real-time knowledge of the X platform. But there are inherent risks to a chatbot having both the run of the internet and X, and it's safe to say xAI may not have taken the necessary steps to address them. Since Musk took over Twitter in 2022 and renamed it X, he laid off 30% of its global trust and safety staff and cut its number of safety engineers by 80%, Australia's online safety watchdog said last January. As for xAI, when Grok was released, it was unclear whether xAI had a safety team already in place. When Grok 4 was released in July, it took more than a month for the company to release a model card -- a practice typically seen as an industry standard, which details safety tests and potential concerns. Two weeks after Grok 4's release, an xAI employee wrote on X that he was hiring for xAI's safety team and that they "urgently need strong engineers/researchers." In response to a commenter, who asked, "xAI does safety?" the original employee said xAI was "working on it." Journalist Kat Tenbarge wrote about how she first started seeing sexually explicit deepfakes go viral on Grok in June 2023. Those images obviously weren't created by Grok -- it didn't even have the ability to generate images until August 2024 -- but X's response to the concerns was varied. 
Even last January, Grok was inciting controversy for AI-generated images. And this past August, Grok's "spicy" video-generation mode created nude deepfakes of Taylor Swift without even being asked. Experts have told The Verge since September that the company takes a whack-a-mole approach to safety and guardrails -- and that it's difficult enough to keep an AI system on the straight and narrow when you design it with safety in mind from the beginning, let alone if you're going back to fix baked-in problems. Now, it seems that approach has blown up in xAI's face. ...Not good. Grok has spent the last couple of weeks spreading nonconsensual, sexualized deepfakes of adults and minors all over the platform, as promoted. Screenshots show Grok complying with users asking it to replace women's clothing with lingerie and make them spread their legs, as well as to put small children in bikinis. And there are even more egregious reports. It's gotten so bad that during a 24-hour analysis of Grok-created images on X, one estimate gauged the chatbot to be generating about 6,700 sexually suggestive or "nudifying" images per hour. Part of the reason for the onslaught is a recent feature added to Grok, allowing users to use an "edit" button to ask the chatbot to change images, without the original poster's consent. Since then, we've seen a handful of countries either investigate the matter or threaten to ban X altogether. Members of the French government promised an investigation, as did the Indian IT ministry, and a Malaysian government commission wrote a letter about its concerns. California governor Gavin Newsom called on the US Attorney General to investigate xAI. The United Kingdom said it is planning to pass a law banning the creation of AI-generated nonconsensual, sexualized images, and the country's communications-industry regulator said it would investigate both X and the images that had been generated in order to see if they violated its Online Safety Act. 
And this week, both Malaysia and Indonesia blocked access to Grok. xAI initially said its goal for Grok was to "assist humanity in its quest for understanding and knowledge," "maximally benefit all of humanity," and "empower our users with our AI tools, subject to the law," as well as to "serve as a powerful research assistant for anyone." That's a far cry from generating nude-adjacent deepfakes of women without their consent, let alone minors. On Wednesday evening, as pressure on the company heightened, X's Safety account put out a statement that the platform has "implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis," and that the restriction "applies to all users, including paid subscribers." On top of that, only paid subscribers can use Grok to create or edit any sort of image moving forward, according to X. The statement went on to say that X "now geoblock[s] the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account and in Grok in X in those jurisdictions where it's illegal," which was a strange point to make since earlier in the statement, the company said it was not allowing anyone to use Grok to edit images in such a way. Another important point: My colleagues tested Grok's image-generation restrictions on Wednesday to find that it took less than a minute to get around most guardrails. Although asking the chatbot to "put her in a bikini" or "remove her clothes" produced censored results, they found, it had no qualms about delivering on prompts like "show me her cleavage," "make her breasts bigger," and "put her in a crop top and low-rise shorts," as well as generating images in lingerie and sexualized poses. As of Wednesday evening, we were still able to get the Grok app to generate revealing images of people, using a free account. 
Even after X's Wednesday statement, we may see a number of other countries either ban or block access to either all of X or just Grok, at least temporarily. We'll also see how the proposed laws and investigations around the world play out. The pressure is mounting for Musk, who on Wednesday afternoon took to X to say that he is "not aware of any naked underage images generated by Grok." Hours later, X's Safety team put out its statement, saying it's "working around the clock to add additional safeguards, take swift and decisive action to remove violating and illegal content, permanently suspend accounts where appropriate, and collaborate with local governments and law enforcement as necessary." What technically is and isn't against the law is a big question here. For instance, experts told The Verge earlier this month that AI-generated images of identifiable minors in bikinis, or potentially even naked, may not technically be illegal under current child sexual abuse material (CSAM) laws in the US, though of course disturbing and unethical. But lascivious images of minors in such situations are against the law. We'll see if those definitions expand or change, even though the current laws are a bit of a patchwork. As for nonconsensual intimate deepfakes of adult women, the Take It Down Act, signed into law in May 2025, bars nonconsensual AI-generated "intimate visual depictions" and requires certain platforms to rapidly remove them. The grace period before the latter part goes into effect -- requiring platforms to actually remove them -- ends in May 2026, so we may see some significant developments in the next six months.

[2]

Sexualised deepfakes on X are a sign of things to come. NZ law is already way behind

Elon Musk finally responded last week to widespread outrage about his social media platform X letting users create sexualised deepfakes with Grok, the platform's artificial intelligence (AI) chatbot. Musk has now assured the United Kingdom government he will block Grok from making deepfakes in order to comply with the law. But the change will likely only apply to users in the UK. These latest complaints were hardly new, however. Last year, Grok users were able to "undress" posted pictures to produce images of women in underwear, swimwear or sexually suggestive positions. X's "spicy" option let them create topless images without any detailed prompting at all. And such cases may be signs of things to come if governments aren't more assertive about regulating AI. Despite public outcry and growing scrutiny from regulatory bodies, X initially made little effort to address the issue and simply limited access to Grok on X to paying subscribers. Various governments took action, with the UK announcing plans to legislate against deepfake tools, joining Denmark and Australia in seeking to criminalise such sexual material. UK regulator Ofcom launched an investigation of X, seemingly prompting Musk's about-turn. So far, the New Zealand government has been silent on the issue, even though domestic law is doing a poor job of preventing or criminalising non-consensual sexualised deepfakes.

Holding platforms accountable

The Harmful Digital Communications Act 2015 does offer some pathways to justice, but is far from perfect. Victims are required to show they've suffered "serious emotional distress", which shifts focus to their response rather than the inherent wrong of non-consensual sexualisation. Where images are entirely synthetic rather than "real" (generated without a reference photo, for example), legal protection becomes even less certain.
A members' bill is expected to be introduced later this year that would criminalise the creation, possession and distribution of sexualised deepfakes without consent. This reform is both necessary and welcome. But it only tackles part of the problem. Criminalisation holds individuals accountable after harm has already occurred. It does not hold companies accountable for designing and deploying the AI tools that produce these images in the first place. We expect social media providers to take down child sexual abuse material, so why not deepfakes of women? While users are responsible for their actions, platforms such as X provide an ease of access that removes the technical barrier to deepfake creation. The Grok case has been in the news for many months, so the resulting harm is easily foreseeable. Treating such incidents as isolated misuse distracts from the platform's responsibility.

Light-touch regulation is not working

Social media companies (including X) have signed the voluntary Aotearoa New Zealand Code of Practice for Online Safety and Harms, but this is already out of date. The code does not set standards for generative AI, nor does it require risk assessments prior to implementing an AI tool, or set meaningful consequences for failing to prevent predictable forms of abuse. This means X can get away with allowing Grok to produce deepfakes while still technically complying with the code. Victims could also hold X responsible by complaining to the Privacy Commissioner under the Privacy Act 2020. The commissioner's guidance on AI suggests that both the use of someone's image as a prompt and the generated deepfake could count as personal information. However, these investigations can take years, and any compensation is usually small. Responsibility is often split among the user, the platform and the AI developer. This does little to make platforms or AI tools such as Grok safer in the first place.
New Zealand's approach reflects a broader political preference for light-touch AI regulation that assumes technological development will be accompanied by adequate self-restraint and good-faith governance. Clearly, this isn't working. Competitive pressures to release new features quickly prioritise novelty and engagement over safety, with gendered harm often treated as an acceptable byproduct.

A sign of things to come

Technologies are shaped by the social conditions in which they are developed and deployed. Generative AI systems trained on masses of human data inevitably absorb misogynistic norms. Integrating these systems into platforms without robust safeguards allows sexualised deepfakes that reinforce existing patterns of gender-based violence. These harms extend beyond individual humiliation. The knowledge that a convincing sexualised image can be generated at any time - by anyone - creates an ongoing threat that alters how women engage online. For politicians and other public figures, that threat can deter participation in public debate altogether. The cumulative effect is a narrowing of digital public space. Criminalising deepfakes alone won't fix this. New Zealand deserves a regulatory framework that recognises AI-enabled, gendered harm as foreseeable and systemic. That means imposing clear obligations on companies that deploy these AI tools, including duties to assess risk, implement effective guardrails, and prevent predictable misuse before it occurs. Grok offers an early signal of the challenges ahead. As AI becomes embedded across digital platforms, the gap between technological capabilities and legislation will continue to widen unless those in power take action. At the same time, Elon Musk's response to legislative action in the UK demonstrates how effective political will and robust regulation can be.

The authors acknowledge the contribution of Chris McGavin to the preparation of this article.

[3]

Grok's Sexualized Images Test the Limits of AI Oversight

For the past several weeks, Elon Musk's artificial intelligence chatbot, Grok, has been routinely and repeatedly used to digitally undress people on X. Despite a mounting outcry from global regulators, the tool has continued operating in the US with limited government intervention. At its peak earlier this month, Grok was used thousands of times per hour to non-consensually de-clothe people in its public answers on the social network -- bringing a disturbing form of abuse and harassment from the darker corners of the internet onto a more mainstream online platform, industry watchdogs say. Victims ranged from OnlyFans stars to the deputy prime minister of Sweden. UK child-safety groups said they even found AI-generated child pornography on the dark web that they believe was made with Grok. (Musk said he is "not aware of any naked underage images.") Musk has continued to build, promote and disseminate the technology, which so far has faced little legal or regulatory action in the US. Apple and Google also still offer it in their app stores. He's made light of the controversy by jokingly asking Grok to de-clothe himself, lashed out at critics and likened the product to tools like Adobe's Photoshop to suggest the issue doesn't lie with Grok itself, but rather the people using it. "Obviously, Grok does not spontaneously generate images, it does so only according to user requests," Musk posted Wednesday, adding that it will "refuse to produce anything illegal." After the uproar, X put its image generation tool behind a paywall, effectively turning a controversial feature into a premium product. Later, it announced that Grok was blocked from generating these types of images of "real people" on the social network, though reports quickly surfaced that users could still create them with the standalone Grok app. 
On X, too, there remains a trickle of sexualized images coming from Grok, including some depicting people in thongs, bikinis or skimpy outfits, according to a Bloomberg analysis. Musk and his AI startup have a long history of pushing the limits. The billionaire, who has repeatedly raised alarms about the existential threat of artificial intelligence, launched xAI in 2023 as an alternative to OpenAI with the vague mission to "understand the true nature of the universe." But its key differentiator is embracing the racy and profane, whether it be "romantic" AI companions or irreverent chatbot conversations in "spicy" mode. With the proliferation of sexualized images, however, Musk and his company are not only sparking the biggest uproar in xAI's history; they're also stress-testing a system of public and private safeguards intended to protect against societal harms from AI. And so far, those guardrails have come up lacking, particularly in the US. Governments and regulators across Europe quickly began to threaten and investigate Musk's business over Grok's sexualized images, but the US -- X's largest market -- has been slower to respond. The Senate unanimously passed legislation known as the "Defiance Act" last week that would allow victims of nonconsensual, sexually explicit images to sue perpetrators, but not necessarily the platforms themselves. A law signed by US President Donald Trump last May requires platforms to take down nonconsensual, sexual imagery within 48 hours of it being reported, but the reporting systems aren't yet required. California's attorney general on Wednesday opened an investigation into xAI over the spread of the images and later sent a cease-and-desist letter, but it's unclear where that may lead. Musk's AI startup has not commented on the investigation. Protecting child safety on technology platforms has historically been one of the few areas Republicans and Democrats can agree needs regulation. 
But every time Congress summoned tech CEOs to testify over child safety concerns in recent years, Musk wasn't invited, in part because the hearings focused on child safety were less relevant to X, which doesn't have as many younger users. Now, in his second term, Trump has made a concerted effort to befriend the tech community, offering its leaders a regulation-free environment to grow their AI businesses to keep ahead of rising competition from rivals in China. And Musk himself is in a different category of technology executive: the richest man in the world, a major government contractor and a former close adviser to Trump who helped to bankroll the president's win and may open his wallet again for the midterms. Grok is Musk's horse in the AI race -- a contest that tech executives have been unanimous in telling Trump the US needs to win. Trump is encouraging massive AI investment and innovation, a stance that's at odds with the prospect of penalizing one of the industry's most prominent AI pioneers. "We are in the midst of a major zeitgeist shift whereby political, economic and technological elites in the United States have determined that it is convenient to them and their agendas to reduce the amount of safety measures and content moderation not taken by major platforms," said Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell Tech. "It is a reality that Elon Musk has done a whole lot to bring about." A White House official pointed Bloomberg to X's and Musk's public statements on the matter as a sign that the problem was under control. Other parts of the administration are openly showing support for Grok: US Defense Secretary Pete Hegseth visited Musk last week at SpaceX's Starbase launch site, slammed what he called "woke" AI and announced plans to integrate Grok into the department's systems.
Apple Inc. and Alphabet Inc.'s Google, which operate the two most dominant mobile-app stores, have also left X and Grok alone despite a history of removing apps that allow people to nudify another person. Several US Senators sent a letter earlier this month to both companies arguing that X and Grok violated their respective terms of service with the "mass generation of nonconsensual sexualized images of women and children," and therefore needed to be removed. The companies didn't respond to a request for comment. Others who criticize Musk have historically done so at their own peril, as his vast wealth and litigiousness tend to scare some critics away. When advertisers on X boycotted the platform over its content policies after Musk purchased the platform, he sued them into coming back. In one case, his lawsuit caused an advertising industry coalition to fold. And this month, after US music publishers tried to keep X from using copyrighted songs without a license, he sued them, too. To speak out against Musk is to open yourself up to expensive litigation -- merited or not. One person now attempting to challenge Grok in court is Ashley St. Clair, the conservative influencer who recently had a baby with Musk. St. Clair sued xAI last week, claiming that Grok created sexualized images of her, and that X retaliated against her when she complained. X sued her back, accusing her of breach of contract for failing to file the lawsuit in federal court in Texas, which is required by the company's terms of service. Outside of the US, governments and regulators are making more of an effort to penalize Musk's empire. Grok has been blocked in Indonesia and Malaysia. In Japan, X's second largest market, officials on Friday said they have asked X to bolster safeguards and stop the output of inappropriate images. They are also considering legal action if necessary, according to Japan's economic security minister.
France was one of the first countries to condemn Grok, with lawmakers Arthur Delaporte and Éric Bothorel reporting what they described as "clearly illegal" sexually explicit deepfake content to the public prosecutor on Jan. 2. The prosecutor later confirmed that it was investigating the matter. Soon after, the European Union's digital regulators kicked into gear, ordering X -- under the bloc's Digital Services Act -- to preserve all internal documents related to Grok until the end of the year, a preliminary step before potential regulatory measures. EU digital chief Henna Virkkunen described Grok's ability to create sexualized images of women and children as "horrendous" and told the platform last week to "fix its AI tool in the EU" or face action under the DSA, which could result in hefty fines. The UK took a similar position, with media and internet regulator Ofcom opening a formal investigation into X under the Online Safety Act, a set of rules designed to protect people, particularly kids, from harmful content. If Ofcom determines that X has broken the law, it can issue a fine of up to 10% of the company's worldwide revenue. If that happens and X does not comply, the regulator can seek a court order to internet service providers to block access to the site in the UK. Canada's Privacy Commissioner, too, is investigating X. The company has not commented on these investigations. It's not clear whether the investigations will lead to meaningful consequences, and even if they do, whether Musk will adhere to what they call for. The billionaire has a long history of fighting regulators over content on X. The EU fined the social platform as recently as December over violating the bloc's content moderation laws. In Brazil, X was temporarily banned in 2024 for failing to adhere to government takedown requests. Musk has historically relented to legal demands made by governments, but he rarely does so quietly. 
During his fight with Brazil, Musk called one of the country's supreme court judges "an evil dictator cosplaying as a judge." After UK Prime Minister Keir Starmer criticized Grok earlier this month for its "disgraceful" and "disgusting" undressing images, Musk called the UK government "fascist" and alleged it wants to "suppress free speech." Despite the changes made to X, the Grok dispute appears far from over -- particularly if xAI allows the chatbot to digitally remove clothing from people on its standalone app. "The worst outcome would be if there are just no consequences whatsoever as a demonstration that there is one person actually above the law," said Riana Pfefferkorn, a policy fellow at the Stanford Institute for Human-Centered Artificial Intelligence. Social media platforms have long struggled with how to police various forms of sexualized content, which tends to be subjective in nature. AI has added another wrinkle by making it easier and faster for people to share and generate sexualized imagery, which doesn't always depict a real person. While creating or hosting sexually explicit images of children is against the law, many of the images that Grok created may not be illegal in the US, Pfefferkorn said. But most big AI companies likely don't have the stomach to deal with the kind of PR crisis that Musk is going through and would be motivated by the public outcry to ensure they have the appropriate guardrails to avoid it, Pfefferkorn added. Yet it's also possible the Grok situation will set an important precedent for an industry racing to build more powerful AI systems and find new ways to monetize them. When Musk slashed staff at Twitter, including content moderators, it provided cover for other firms to take similar, if less drastic, actions. Other AI firms have previously signaled a desire to move in a racier direction, potentially with an eye toward bolstering user engagement. 
OpenAI, for example, has said it intends to launch an "adult mode" for ChatGPT, though details are limited. The feature is set to roll out in the first quarter of this year.

[4]

Grok generated an estimated 3 million sexualized images -- including 23,000 of children -- over 11 days

We already knew xAI's Grok was barraging X with nonconsensual sexual images of real people. But now there are some numbers to put things in perspective. Over an 11-day period, Grok generated an estimated 3 million sexualized images -- including an estimated 23,000 of children. Put another way, Grok generated an estimated 190 sexualized images per minute during that 11-day period. Among those, it made a sexualized image of children once every 41 seconds. On Thursday, the Center for Countering Digital Hate (CCDH) published its findings. The British nonprofit based its findings on a random sample of 20,000 Grok images from December 29 to January 9. The CCDH then extrapolated a broader estimate based on the 4.6 million images Grok generated during that period. The research defined sexualized images as those with "photorealistic depictions of a person in sexual positions, angles, or situations; a person in underwear, swimwear or similarly revealing clothing; or imagery depicting sexual fluids." The CCDH didn't take image prompts into account, so the estimate doesn't differentiate between nonconsensual sexualized versions of real photos and those generated exclusively from a text prompt. The CCDH used an AI tool to identify the proportion of the sampled images that were sexualized. That may warrant some degree of caution in the findings. However, I'm told that many third-party analytics services for X have reliable data because they use the platform's API. On January 9, xAI restricted Grok's ability to edit existing images to paid users. (That didn't solve the problem; it merely turned it into a premium feature.) Five days later, X restricted Grok's ability to digitally undress real people. But that restriction only applied to X; the standalone Grok app reportedly continues to generate these images. Since Apple and Google host the apps -- which their policies explicitly prohibit -- you might expect them to remove them from their stores. 
Well, in that case, you'd be wrong. So far, Tim Cook's Apple and Sundar Pichai's Google haven't removed Grok from their stores -- unlike similar "nudifying" apps from other developers. The companies also didn't take any action on X while it was producing the images. That's despite 28 women's groups (and other progressive advocacy nonprofits) publishing an open letter calling on the companies to act. The companies haven't replied to multiple requests for comment from Engadget. To my knowledge, they haven't acknowledged the issue publicly in any format, nor have they responded to questions from other media outlets. The research's findings on sexualized images included numerous outputs of people wearing transparent bikinis or micro-bikinis. The CCDH referred to one of a "uniformed healthcare worker with white fluids visible between her spread legs." Others included women wearing only dental floss, Saran Wrap or transparent tape. One depicted Ebba Busch, Sweden's Deputy Prime Minister, "wearing a bikini with white fluid on her head." Other public figures were part of that group. They include Selena Gomez, Taylor Swift, Billie Eilish, Ariana Grande, Ice Spice, Nicki Minaj, Christina Hendricks, Millie Bobby Brown and Kamala Harris. Examples of children include someone using Grok to edit a child's "before-school selfie" into an image of her in a bikini. Another image depicted "six young girls wearing micro bikinis." The CCDH said that, as of January 15, both of these posts were still live on X. In total, 29 percent of the sexualized images of children identified in the sample were still accessible on X as of January 15. The research found that even after posts were removed, the images remained accessible via their direct URLs. You can read the CCDH's report for more details on the results and methodology. We'll update this story if we receive a reply from Apple or Google.
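The per-minute and per-second rates quoted in this article follow from simple arithmetic on the CCDH's headline estimates. The sketch below is only a back-of-envelope check, not the CCDH's methodology: it assumes an even 11-day window of 24-hour days, and the function and constant names are illustrative. The report's sample counts are not given here, so the share of sexualized images is back-computed from the two published totals rather than taken from the sample.

```python
# Back-of-envelope check of the rates quoted in the article.
# All inputs are figures reported in the text; names are illustrative.

SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_DAY = 24 * 60

PERIOD_DAYS = 11              # length of the study window, as reported
TOTAL_IMAGES = 4_600_000      # images Grok generated in that window
EST_SEXUALIZED = 3_000_000    # estimated sexualized images
EST_CHILDREN = 23_000         # estimated sexualized images of children


def images_per_minute(count: int, days: int) -> float:
    """Average number of images per minute over the window."""
    return count / (days * MINUTES_PER_DAY)


def seconds_per_image(count: int, days: int) -> float:
    """Average gap, in seconds, between consecutive images."""
    return days * SECONDS_PER_DAY / count


def implied_share(part: int, whole: int) -> float:
    """Fraction of all generated images that the estimate implies."""
    return part / whole


if __name__ == "__main__":
    print(f"{images_per_minute(EST_SEXUALIZED, PERIOD_DAYS):.1f} sexualized images per minute")
    print(f"one sexualized image of a child every {seconds_per_image(EST_CHILDREN, PERIOD_DAYS):.1f} seconds")
    print(f"implied sexualized share: {implied_share(EST_SEXUALIZED, TOTAL_IMAGES):.1%}")
```

Under those assumptions the numbers come out at roughly 189 images per minute (which the article rounds to 190), one image of a child about every 41 seconds, and an implied sexualized share of about 65 percent of everything Grok generated in the window.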

[5]

Report: Grok still produces millions of sexualized images despite guardrails

Outrage over X's Grok chatbot sheds light on widespread sexualized deepfake problem. Credit: JUSTIN TALLIS / AFP via Getty Images

The true scale of Grok's deepfake problem is becoming clearer as the social media platform and its AI startup xAI face ongoing investigations into the chatbot's safety guardrails. According to a report by the Center for Countering Digital Hate (CCDH) and a joint investigation by the New York Times, Grok was still able to produce an estimated 3 million sexualized images, including 23,000 that appear to depict children, over a 10-day period following xAI's supposed crackdown on deepfake "undressing." The CCDH tested a sample of responses from Grok's one-click editing tool, still available to X users, and calculated that more than half of the chatbot's responses included sexualized content. The New York Times report found that an estimated 1.8 million of 4.4 million Grok images were sexual in nature, with some depicting well-known influencers and celebrities. The publication also linked a sharp increase in Grok usage following public posts by CEO Elon Musk depicting himself in a bikini, generated by Grok. "This is industrial-scale abuse of women and girls," chief executive of the CCDH Imran Ahmed told the publication. "There have been nudifying tools, but they have never had the distribution, ease of use or the integration into a large platform that Elon Musk did with Grok." Grok has come under fire for generating child sexual abuse material (CSAM), following reports that the X chatbot produced images of minors in scantily clad outfits. The platform acknowledged the issue and said it was urgently fixing "lapses in safeguards." Grok parent company xAI is being investigated by multiple foreign governments and the state of California for its role in generating sexualized or "undressed" deepfakes of people and minors. A handful of countries have even temporarily banned the platform as investigations continue.
In response, xAI said it was blocking Grok from editing user-uploaded photos of real people to feature revealing clothing, the original issue flagged by users earlier this month. However, recent reporting from the Guardian found that Grok app users were still able to produce AI-edited images of real women in bikinis and then upload them onto the site. In reporting from August, Mashable editor Timothy Beck Werth noted problems with Grok's reported safety guardrails, including the fact that Grok Imagine readily produced sexually suggestive images and videos of real people. Grok Imagine includes moderation settings and safeguards intended to block certain prompts and responses, but Musk also advertised Grok as one of the only mainstream chatbots that included a "Spicy" setting for sexual content. OpenAI also teased an NSFW setting, amid lawsuits claiming its ChatGPT product is unsafe for users. Online safety watchdogs have long warned the public about generative AI's role in increased numbers of synthetically generated CSAM, as well as nonconsensual intimate imagery (NCII), addressed in 2025's Take It Down Act. Under the new U.S. law, online publishers are required to comply with takedown requests of nonconsensual deepfakes or face penalties. A 2024 report from the Internet Watch Foundation (IWF) found that generative AI tools were directly linked to increased numbers of CSAM on the dark web, predominantly depicting young girls in sexual scenarios or digitally altering real pornography to include the likenesses of children. AI tools and "nudify" apps have been linked to rises in cyberbullying and AI-enabled sexual abuse.

[6]

Grok AI generated about 3m sexualised images this month, research says

Estimate made by Center for Countering Digital Hate after Elon Musk's AI image generation tool sparked international outrage Grok AI generated about 3m sexualised images earlier this month, including 23,000 that appear to depict children, according to researchers who said it "became an industrial scale machine for the production of sexual abuse material". The estimate has been made by the Center for Countering Digital Hate (CCDH) after Elon Musk's AI image generation tool sparked international outrage when it allowed users to upload photographs of strangers and celebrities, digitally strip them to their underwear or into bikinis, put them in provocative poses and post them on X. The trend went viral over the new year, peaking on 2 January with 199,612 individual requests, according to an analysis conducted by Peryton Intelligence, a digital intelligence company specialising in online hate. A fuller assessment of the output from the feature, from its launch on 29 December 2025 until 8 January 2026, has now been made by the CCDH. It suggests the impact of the technology may have been broader than previously thought. Public figures identified in sexualised images it analysed include Selena Gomez, Taylor Swift, Billie Eilish, Ariana Grande, Ice Spice, Nicki Minaj, Christina Hendricks, Millie Bobby Brown, the Swedish deputy prime minister, Ebba Busch, and the former US vice-president Kamala Harris. The feature was restricted to paid users on 9 January and further restrictions followed after the prime minister, Keir Starmer, called the situation "disgusting" and "shameful". Other countries, including Indonesia and Malaysia, announced blocks on the AI tool. CCDH estimated that over the 11-day period, Grok was helping create sexualised images of children every 41 seconds. These included a selfie uploaded by a schoolgirl undressed by Grok, turning a "before school selfie" into an image of her in a bikini. 
"What we found was clear and disturbing: in that period Grok became an industrial scale machine for the production of sexual abuse material," said Imran Ahmed, CCDH's chief executive. "Stripping a woman without their permission is sexual abuse. Throughout that period Elon was hyping the product even when it was clear to the world it was being used in this way. What Elon was ginning up was controversy, eyeballs, engagement and users. It was deeply disturbing." He added: "This has become a standard playbook for Silicon Valley, and in particular for social media and AI platforms. The incentives are all misaligned. They profit from this outrage. It's not about Musk personally. This is about a system [with] perverse incentives and no minimum safeguards prescribed in law. And until regulators and lawmakers do their jobs and create a minimum expectation of safety, this will continue to happen." X announced it had stopped its Grok feature from editing pictures of real people to show them in revealing clothes, including for premium subscribers, on 14 January. X referred to its statement from last week, which said: "We remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, non-consensual nudity, and unwanted sexual content. "We take action to remove high-priority violative content, including child sexual abuse material and non-consensual nudity, taking appropriate action against accounts that violate our X rules. We also report accounts seeking child sexual exploitation materials to law enforcement authorities as necessary."

[7]

Grok created three million sexualized images, research says

Elon Musk's AI chatbot Grok generated an estimated three million sexualized images of women and children in a matter of days, researchers said Thursday, revealing the scale of the explicit content that sparked a global outcry. The recent rollout of an editing feature on Grok, developed by Musk's startup xAI and integrated into X, allowed users to alter online images of real people with simple text prompts such as "put her in a bikini" or "remove her clothes." A flood of lewd deepfakes exploded online, prompting several countries to ban Grok and drawing outrage from regulators and victims. "The AI tool Grok is estimated to have generated approximately three million sexualized images, including 23,000 that appear to depict children, after the launch of a new image editing feature powered by the tool on X," said the Center for Countering Digital Hate (CCDH), a nonprofit watchdog that researches the harmful effects of online disinformation. CCDH's report estimated that Grok generated this volume of photorealistic images over an 11-day period -- an average rate of 190 per minute. The report did not say how many images were created without the consent of the people pictured. It said public figures identified in Grok's sexualized images included American actress Selena Gomez, singers Taylor Swift and Nicki Minaj as well as politicians such as Swedish Deputy Prime Minister Ebba Busch and former US vice president Kamala Harris. "The data is clear: Elon Musk's Grok is a factory for the production of sexual abuse material," Imran Ahmed, the chief executive of CCDH, said. "By deploying AI without safeguards, Musk enabled the creation of an estimated 23,000 sexualized images of children in two weeks, and millions more images of adult women." There was no immediate comment about the findings from X. When reached by AFP by email, xAI replied with a terse automated response: "Legacy Media Lies." 
Last week, following the global outrage, X announced that it would "geoblock the ability" of all Grok and X users to create images of people in "bikinis, underwear, and similar attire" in jurisdictions where such actions are illegal. It was not immediately clear where the tool would be restricted. The announcement came after California's attorney general launched an investigation into xAI over the sexually explicit material and several countries opened their own probes. "Belated fixes cannot undo this harm. We must hold Big Tech accountable for giving abusers the power to victimize women and girls at the click of a button," Ahmed said. Grok's digital undressing spree comes amid growing concerns among tech campaigners over proliferating AI nudification apps. Last week, the Philippines became the third country to ban Grok, following Southeast Asian neighbors Malaysia and Indonesia, while Britain and France said they would maintain pressure on the company. On Wednesday, the Philippines's Cybercrime Investigation and Coordinating Center said it was ending the short-lived ban after xAI agreed to modify the tool for the local market and eliminate its ability to create "pornographic content."

[8]

Elon Musk's Grok Generated 23K Sexualized Images of Children, Says Watchdog

Despite Elon Musk's denials and new restrictions, about one-third of the problematic images remained on X as of mid-January. Elon Musk's AI chatbot Grok produced an estimated 23,338 sexualized images depicting children over an 11-day period, according to a report released Thursday by the Center for Countering Digital Hate. The figure, CCDH argues, represents one sexualized image of a child every 41 seconds between December 29 and January 9, when Grok's image-editing features allowed users to manipulate photos of real people to add revealing clothing and sexually suggestive poses. The CCDH also reported that Grok generated nearly 10,000 cartoons featuring sexualized children, based on its reviewed data. The analysis estimated that Grok generated approximately 3 million sexualized images total during that period. The research, based on a random sample of 20,000 images from 4.6 million produced by Grok, found that 65% of the images contained sexualized content depicting men, women, or children. "What we found was clear and disturbing: In that period Grok became an industrial-scale machine for the production of sexual abuse material," Imran Ahmed, CCDH's chief executive told The Guardian. Grok's brief pivot into AI-generated sexual images of children has triggered a global regulatory backlash. The Philippines became the third country to ban Grok on January 15, following Indonesia and Malaysia in the days prior. All three Southeast Asian nations cited failures to prevent the creation and spread of non-consensual sexual content involving minors. In the United Kingdom, media regulator Ofcom launched a formal investigation on January 12 into whether X violated the Online Safety Act. The European Commission said it was "very seriously looking into" the matter, deeming those images as illegal under the Digital Services Act. 
The Paris prosecutor's office expanded an ongoing investigation into X to include accusations of generating and disseminating child pornography, and Australia started its own investigation too. Elon Musk's xAI, which owns both Grok and X (formerly Twitter, where many of the sexualized images were automatically posted), initially responded to media inquiries with a three-word statement: "Legacy Media Lies." As the backlash grew, the company later implemented restrictions, first limiting image generation to paid subscribers on January 9, then adding technical barriers to prevent users from digitally undressing people on January 14. xAI announced it would geoblock the feature in jurisdictions where such actions are illegal. Musk posted on X that he was "not aware of any naked underage images generated by Grok. Literally zero," adding that the system is designed to refuse illegal requests and comply with laws in every jurisdiction. However, researchers found the primary issue wasn't fully nude images, but rather Grok placing minors in revealing clothing like bikinis and underwear, as well as sexually provocative positions. As of January 15, about a third of the sexualized images of children identified in the CCDH sample remained accessible on X, despite the platform's stated zero-tolerance policy for child sexual abuse material.
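The "every 41 seconds" figure can be sanity-checked with simple arithmetic. What follows is a minimal sketch, assuming the 11-day window is counted as 11 full 24-hour days; the function and variable names are illustrative and are not taken from the CCDH report.

```python
# Back-of-the-envelope check of the rates reported from the CCDH estimate.
# Assumption: the 11-day window is treated as 11 full 24-hour days.

SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_DAY = 24 * 60


def seconds_per_item(count: int, days: int) -> float:
    """Average number of seconds between items over the window."""
    return days * SECONDS_PER_DAY / count


def items_per_minute(count: int, days: int) -> float:
    """Average number of items produced per minute over the window."""
    return count / (days * MINUTES_PER_DAY)


DAYS = 11
CHILD_IMAGES = 23_338          # estimate quoted in the article
TOTAL_SEXUALIZED = 3_000_000   # "approximately 3 million", rounded

print(f"one image every {seconds_per_item(CHILD_IMAGES, DAYS):.1f} seconds")
print(f"{items_per_minute(TOTAL_SEXUALIZED, DAYS):.0f} images per minute")
```

Under these assumptions the first figure rounds to 41 seconds. The second depends on how "approximately 3 million" was rounded, so it should be read as a rough order-of-magnitude check only.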

[9]

UK woman felt 'violated, assaulted' by deepfake Grok images

Cardiff (United Kingdom) (AFP) - British academic Daisy Dixon felt "violated" after the Grok chatbot on Elon Musk's X social media platform allowed users to generate sexualised images of her in a bikini or lingerie. She was doubly shocked to see Grok even complied with one user's request to depict her "swollen pregnant" wearing a bikini and a wedding ring. "Someone has hijacked your digital body," the philosophy lecturer at Cardiff University told AFP, adding it was an "assault" and "extreme misogyny". As the images proliferated "I had ... this sort of desire to hide myself," the 36-year-old academic said, adding now "that fear has been more replaced with rage". The revelation that X's Grok AI tool allowed users to generate images of people in underwear via simple prompts triggered a wave of outrage and revulsion. Several countries responded by blocking the chatbot after a flood of lewd deepfakes exploded online. According to research published Thursday by the Center for Countering Digital Hate (CCDH), a nonprofit watchdog, Grok generated an estimated three million sexualised images of women and children in a matter of days. CCDH's report estimated that Grok generated this volume of photorealistic images over an 11-day period -- an average rate of 190 per minute. After days of furore, Musk backed down and agreed to geoblock the function in countries where creating such images is illegal, although it was not immediately clear where the tool would be restricted. "I'm happy with the overall progress that has been made," said Dixon, who has more than 34,000 followers on X and is active on social media. But she added: "This should never have happened at all." She first noticed artificially generated images of herself on X in December. Users took a few photos she had posted in gym gear and a bikini and used Grok to manipulate them. Under the UK's new Data Act, which came into force this month, creating or sharing non-consensual deepfakes is a criminal offence. 
'Minimal attire' The first images were quite tame -- changing hair or makeup -- but they "really escalated" to become sexualised, said Dixon. Users instructed Grok to put her in a thong, enlarge her hips and make her pose "sluttier". "And then Grok would generate the image," said Dixon, author of an upcoming book "Depraved", about dangerous art. In the worst case, a user asked to depict her in a "rape factory" -- although Grok did not comply. Grok on X automatically posts generated images, so she saw many in the comments on her page. This public posting carries "higher risk of direct harassment than private 'nudification apps'", said Paul Bouchaud, lead researcher for Paris non-profit AI Forensics. In a report released this month, he looked at 20,000 images generated by Grok, finding over half showed people in "minimal attire", almost all women. Grok has "contributed significantly to the surge in non-consensual intimate imagery because of its popularity", said Hany Farid, co-founder of GetReal Security and a professor at the University of California, Berkeley. He slammed X's "half measures" in response, telling AFP they are "being easily circumvented".

[10]

Elon Musk's Grok generates explicit images despite safeguards, study

An overwhelming majority of Grok user-generated content from a mid-January analysis depicts nudity or sexual activity, according to a think tank. Elon Musk's artificial intelligence (AI) platform Grok is still being used to generate sexually explicit images despite recent restrictions by the company, according to a new analysis. Last summer, xAI, Grok's operating company, introduced an image-generator feature that included a "spicy mode" that could generate adult content. In recent weeks, the feature was used to undress images of women. After mounting criticism, parent company X said on January 14 that it had "implemented technological measures" to prevent Grok from editing images of real people in revealing clothing. But European non-profit AI Forensics found that Grok could still be used to generate sexualised images of individuals. It analysed 2,000 user conversations on January 19 and found an "overwhelming" majority depicted nudity or sexual activity, showing the platform is still used to generate sexual images. The researchers also found that users can bypass restrictions by accessing Grok directly through its website rather than through X, or by using Grok Imagine, the AI's video and image generation tool. Grok did not answer Euronews Next's prompt to generate a sexual image on January 20th. Instead, it said xAI implemented content blocks to prevent image creation that depicts real people in revealing or sexualised clothing, including bikinis, and underwear. "You cannot reliably generate naked or half-naked images through Grok right now, especially not of real people or anything explicit," the chatbot said in response to the prompt. "xAI has prioritised legal compliance and safety over unrestricted 'spicy' generation after the January controversies." Euronews Next reached out to xAI for comment about the analysis, but did not receive a response at the time of publication. 
Separately, Musk wrote on X that the algorithm for both xAI and Grok is "dumb" and "needs massive improvements." X has since open-sourced its algorithm and posted it on GitHub, a developer platform for sharing code, so users can watch its team "struggle to make it better in real-time," Musk said. X's algorithm considers what a user has clicked on or engaged with when deciding which content to show on its feed, according to xAI's GitHub page. It also analyses "out-of-network" content from accounts that the user doesn't follow but that they might find interesting. The algorithm then sorts and ranks this content using a mathematical formula to decide what will be shown in the user's feed. It also filters out posts from blocked accounts or keywords that the user doesn't want to see, along with content that the algorithm detects as violent or spam, according to a diagram on the page. Musk has committed to updating the GitHub page every four weeks with the developer's notes, so users can understand what changes have been made.

[11]

Musk's chatbot flooded X with millions of sexualized images in days, new estimates show

SAN FRANCISCO -- Elon Musk's artificial intelligence chatbot, Grok, created and then publicly shared at least 1.8 million sexualized images of women, according to separate estimates of X data by The New York Times and the Center for Countering Digital Hate. Starting in late December, users on the social media platform inundated the chatbot's X social media account with requests to alter real photos of women and children to remove their clothes, put them in bikinis and pose them in sexual positions, prompting a global outcry from victims and regulators. In just nine days, Grok posted more than 4.4 million images. A review by the Times conservatively estimated that at least 41% of posts, or 1.8 million, most likely contained sexualized imagery of women. A broader analysis by the Center for Countering Digital Hate, using a statistical model, estimated that 65%, or just over 3 million, contained sexualized imagery of men, women or children. The findings show how quickly Grok spread disturbing images, which earlier prompted governments in Britain, India, Malaysia and the United States to start investigations into whether the images violated local laws. The burst of nonconsensual images in just a few days surpassed collections of sexualized deepfakes, or realistic AI-generated images, from other websites, according to the Times' analysis and experts on online harassment. "This is industrial-scale abuse of women and girls," said Imran Ahmed, the CEO of the Center for Countering Digital Hate, which conducts research on online hate and disinformation. "There have been nudifying tools, but they have never had the distribution, ease of use or the integration into a large platform that Elon Musk did with Grok." X and Musk's AI startup, xAI, which owns X and makes Grok, did not respond to requests for comment. X's head of product, Nikita Bier, said in a post on Jan. 
6 that a surge of traffic over four days that month had resulted in the highest engagement levels on X in the company's history, although he did not mention the images. The interest in Grok's image-editing abilities exploded Dec. 31, when Musk shared a photo generated by the chatbot of himself in a bikini, as well as a SpaceX rocket with a woman's undressed body superimposed on top. The chatbot has a public account on X, where users can ask it questions or request alterations to images. Users flocked to the social media site, in many cases asking Grok to remove clothing in images of women and children, after which the bot publicly posted the AI-generated images. Between Dec. 31 and Jan. 8, Grok generated more than 4.4 million images, compared with 311,762 in the nine days before Musk's posts. The Times used data from Tweet Binder, an analytics company that collects posts on X, to find the number of images Grok had posted during that period. On Jan. 8, X limited Grok's AI image creation to users who pay for some premium features, significantly reducing the number of images. Last week, X expanded those guardrails, saying it would no longer allow anyone to prompt Grok's X account for "images of real people in revealing clothing such as bikinis." "We remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, nonconsensual nudity, and unwanted sexual content," the company said on X last week. Since then, Grok has largely ignored requests to dress women in bikinis, but has created images of them in leotards and one-piece bathing suits. The restrictions did not extend to Grok's app or website, which continue to allow users to generate sexual content in private. Some women whose images were altered by Grok were popular influencers, musicians or actresses, while others appeared to be everyday users of the platform, according to the Times' analysis. 
Some were depicted drenched with fluids or holding suggestive props including bananas and sex toys. The Times used two AI models to analyze 525,000 images posted by Grok from Jan. 1-7. One model identified images containing women, and another model identified whether the images were sexual in nature. A selection of the posts were then reviewed manually to verify that the models had correctly identified the images. Separately, the Center for Countering Digital Hate collected a random sample of 20,000 images produced by Grok between Dec. 29 and Jan. 8, and found that about 65% of the images were sexualized. The organization identified 101 sexualized images of children. Extrapolating across the total, the group estimated that Grok had produced more than 3 million sexual images, including more than 23,000 images of children. The organization classified the random images it sampled as sexual if they depicted a person in a sexual position, in revealing clothing like underwear or swimwear, or coated in fluid intended to look sexual. As sexual images flooded X this month, backlash mounted. "Immediately delete this," one woman posted Jan. 5 about a sexualized image of herself. "I didn't give you my permission and never post explicit pictures of me again." While tools to create sexualized and realistic AI images exist elsewhere, the spread of such material on X was unique for its public nature and sheer scale, experts on online harassment said. In comparison, one of the largest forums dedicated to making fake images of real people, Mr. Deepfakes, hosted 43,000 sexual deepfake videos depicting 3,800 individuals at the peak of its popularity in 2023, according to researchers at Stanford and the University of California, San Diego. The website shut down last year.
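The extrapolation described above amounts to scaling a sample share up to the full set of images. The sketch below illustrates that arithmetic under stated assumptions: the population of roughly 4.6 million is the rounded total cited in other coverage of the CCDH report, the proportional scaling is a simplification rather than the group's actual statistical model, and the confidence interval uses a plain normal approximation that is not part of the published findings. All names are hypothetical.

```python
import math


def scale_up(share: float, population: int) -> float:
    """Proportional extrapolation: sample share multiplied by population size."""
    return share * population


def normal_interval(hits: int, sample_size: int, z: float = 1.96) -> tuple[float, float]:
    """Rough 95% interval for a sample share (normal approximation, an assumption)."""
    share = hits / sample_size
    margin = z * math.sqrt(share * (1 - share) / sample_size)
    return share - margin, share + margin


SAMPLE_SIZE = 20_000        # random sample reviewed by CCDH
CCDH_POPULATION = 4_600_000 # assumed rounded total, taken from other coverage
TIMES_POPULATION = 4_400_000

# CCDH: 101 of 20,000 sampled images were sexualized images of children.
child_estimate = scale_up(101 / SAMPLE_SIZE, CCDH_POPULATION)
# CCDH: about 65% of sampled images were sexualized.
sexualized_estimate = scale_up(0.65, CCDH_POPULATION)
# The Times: at least 41% of 4.4 million posts.
times_estimate = scale_up(0.41, TIMES_POPULATION)

low, high = normal_interval(101, SAMPLE_SIZE)

print(f"CCDH, children:   ~{child_estimate:,.0f}")
print(f"CCDH, sexualized: ~{sexualized_estimate:,.0f}")
print(f"Times, sexualized: ~{times_estimate:,.0f}")
print(f"children, rough range: {low * CCDH_POPULATION:,.0f} to {high * CCDH_POPULATION:,.0f}")
```

With the rounded 4.6 million total, the scaled figure for images of children lands slightly below the 23,338 reported elsewhere, which is what one would expect if the unrounded total was a little larger. The range in the last line only shows how much a sample of 101 hits could plausibly vary, and it should not be read as a figure from the report.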

[12]

UK woman felt 'violated, assaulted' by deepfake Grok images

British academic Daisy Dixon felt "violated" after the Grok chatbot on Elon Musk's X social media platform allowed users to generate sexualised images of her in a bikini or lingerie. She was doubly shocked to see Grok even complied with one user's request to depict her "swollen pregnant" wearing a bikini and a wedding ring. "Someone has hijacked your digital body," the philosophy lecturer at Cardiff University told AFP, adding it was an "assault" and "extreme misogyny". As the images proliferated "I had ... this sort of desire to hide myself," the 36-year-old academic said, adding now "that fear has been more replaced with rage". The revelation that X's Grok AI tool allowed users to generate images of people in underwear via simple prompts triggered a wave of outrage and revulsion. Several countries responded by blocking the chatbot after a flood of lewd deepfakes exploded online. According to research published Thursday by the Center for Countering Digital Hate (CCDH), a nonprofit watchdog, Grok generated an estimated three million sexualised images of women and children in a matter of days. CCDH's report estimated that Grok generated this volume of photorealistic images over an 11-day period - an average rate of 190 per minute. After days of furore, Musk backed down and agreed to geoblock the function in countries where creating such images is illegal, although it was not immediately clear where the tool would be restricted. "I'm happy with the overall progress that has been made," said Dixon, who has more than 34,000 followers on X and is active on social media. But she added: "This should never have happened at all." 
She first noticed artificially generated images of herself on X in December. Users took a few photos she had posted in gym gear and a bikini and used Grok to manipulate them. Under the UK's new Data Act, which came into force this month, creating or sharing non-consensual deepfakes is a criminal offence. 'Minimal attire' The first images were quite tame -- changing hair or makeup -- but they "really escalated" to become sexualised, said Dixon. Users instructed Grok to put her in a thong, enlarge her hips and make her pose "sluttier". "And then Grok would generate the image," said Dixon, author of an upcoming book "Depraved", about dangerous art. In the worst case, a user asked to depict her in a "rape factory" -- although Grok did not comply. Grok on X automatically posts generated images, so she saw many in the comments on her page. This public posting carries "higher risk of direct harassment than private 'nudification apps'", said Paul Bouchaud, lead researcher for Paris non-profit AI Forensics. In a report released this month, he looked at 20,000 images generated by Grok, finding over half showed people in "minimal attire", almost all women. Grok has "contributed significantly to the surge in non-consensual intimate imagery because of its popularity", said Hany Farid, co-founder of GetReal Security and a professor at the University of California, Berkeley. He slammed X's "half measures" in response, telling AFP they are "being easily circumvented".

[13]

What It's Like to Get Undressed by Grok

Elon Musk's AI image generator has been used to create non-consensual intimate images on X -- and for these women, it's personal On a recent Saturday afternoon, Kendall Mayes was mindlessly scrolling on X when she noticed an unsettling trend surface on her feed. Users were prompting Grok, the platform's built-in AI feature, to "nudify" women's images. Mayes, a 25-year-old media professional from Texas who uses X to post photos with her friends and keep up with news, didn't think it would happen to her -- until it did. "Put her in a tight clear transparent bikini," an X user ordered the bot under a photo that Mayes posted from when she was 20. Grok complied, replacing her white shirt with a clear bikini top. The waistband of her jeans and black belt dissolved into thin, translucent strings. The see-through top made the upper half of her body look realistically naked. Hiding behind an anonymous profile, the user's page was filled with similar images of women, digitally and non-consensually altered and sexualized. Mayes wanted to cuss the faceless user out, but decided to simply block the account. She hoped that would be the end of it. Soon, however, her comments became littered with more images of herself in clear bikinis and skin-tight latex bodysuits. Mayes says that all of the requests came from anonymous profiles that also targeted other women. Though some users have had their accounts suspended, as of publication, some of the images of Mayes are still up on X. The realistic nature of the images spooked her. The edits weren't obviously exaggerated or cartoonish. In our call, Mayes repeated, in shock, that the image edits closely resembled her body, down to the dip of her collarbone to the proportions of her chest and waist. "Truth to be told, on social media, I said, 'this is not me,'" she admits. "But, my mind is like, 'this is not too far from my body.'" Mayes was not alone. By the first week of the new year, Grok's "nudification" loophole had gone viral. 
Every minute, users prompted Grok to "undress" images of women, and even minors. Common requests included "make her naked," "make her turn around" and "make her fat." Users got crafty with the loophole, asking Grok to generate images of women in "clear bikinis" to get as close to fully nude images as possible. In one instance reviewed by Rolling Stone, a user prompted Grok to turn a woman's body into a "cadaver on the table in a morgue" undergoing an autopsy. Grok complied. After owner Elon Musk initially responded to the trend with laughing emojis, xAI said it had updated Grok's restrictions, limiting the image generation feature to paying subscribers. Musk claimed he was "not aware of any naked underage images generated by Grok." In another reply to an X post, he stated that "anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content." (A request for comment to xAI received an automated response.) Many existing image edits, however, remain online. Last week, gender justice group UltraViolet published an open letter co-signed by 28 civil society organizations calling on Apple and Google to kick Grok and X off app stores. (Earlier this month, Democratic senators also called for the apps to be taken down.) "This content is not just horrific and humiliating and abusive, but it's also in violation of Apple and Google's stated policy guidelines," says Jenna Sherman, UltraViolet's campaign director. Upwards of 7,000 sexualized images were being generated per hour by Grok over a 24 hour period, according to researchers cited by Bloomberg, which Sherman calls "totally unprecedented." Sherman argues that xAI's restriction of Grok to paid users is an inadequate response. "If anything, they're just now monetizing this abuse," she says. In the past, X was Mayes' "fun" corner of social media -- an app where she could freely express herself. 
But as harassment and bullying have become fixtures of the platform, she is considering leaving the app. She has stopped uploading images of herself. She mulls over what her employer or colleagues might think if they were to find the explicit images. "It's something I would just never hope on anyone," Mayes says. "Even my enemies." Emma, a content creator, was at the grocery store when she saw the notifications of people asking Grok to undress her images. Most of the time, she posts ASMR videos whispering into microphones and tapping her nails against fidget toys, producing noises meant to send a tingle down your spine. The 21-year-old, known online as Emma's Myspace, and who asked to be only identified by her first name, has cultivated 1.2 million followers on TikTok alone. Numbness washed over Emma when the images finally loaded on her timeline. A selfie of her holding a cat had been transformed into a nude. The cat was removed from the photo, Emma says, and her upper body was made naked. Emma immediately made her account private and reported the images. In an email response reviewed by Rolling Stone, X User Support asked her to upload an image of her government-issued ID so they could look into the report, but Emma responded that she didn't feel comfortable doing so. In some instances, Ben Winters, director of AI and privacy at the Consumer Federation of America, says uploading such documentation is a necessary step when filing social media reports. "But when the platform repeatedly does everything they can to not earn your trust," he says, "that's not an acceptable outcome." Emma has been targeted by sexualized deepfakes in the past. Because of this, she's forced to be meticulous about the outfits she wears in the content she posts online, favoring baggy hoodies over low-cut tops. But no deepfake she has encountered in the past has looked as lifelike as the images Grok was able to generate. "This new wave is too realistic," Emma says. 
"Like, it almost looks like it could be my body." Last week, Emma took a break from her usual content to post a 10-minute video warning her followers about her experience. "Women are being asked to give up their bodies whenever they post a photo of themselves now," Emma says. "Anything they post now has the ability to be undressed, in any way a person wants, and they can do whatever they want with that photo of you." Support poured in, but so did further harassment. On Reddit, users tried to track down and spread the images of Emma. In our call, she checked to see if some of the image edits she was aware of were still up on X. They were. "Oh, my God," she says, letting out a defeated sigh. "It has 15,000 views. Oh, that's so sad." According to Megan Cutter, chief of victim services for the Rape, Abuse & Incest National Network, this is one of the biggest challenges for survivors of digital sexual abuse. "Once the image is created, even if it's taken down from the place where it was initially posted, it could have been screenshotted, downloaded, shared," Cutter says. "That's a really complex thing for people to grapple with." While it might seem counterintuitive, Cutter recommends survivors screenshot and preserve evidence of the images to help law enforcement and platforms take action. Survivors can file reports on StopNCII.org, a free Revenge Porn Hotline tool that helps detect and remove non-consensual intimate images, or NCII. "It's not that abuse is new, it's not that sexual violence is new," Cutter says. "It's that this is a new tool and it allows for proliferation at a scale that I don't think we've seen before, and that I'm not sure we're prepared to navigate as a society." On Tuesday, Senate lawmakers passed the Defiance Act, a bill which would allow victims of non-consensual sexual deepfakes to sue for civil damages. (It now heads to the House for a vote.) California's attorney general also launched an investigation into Grok, following other countries. 
According to a 2024 report by U.K.-based non-profit Internet Matters, an estimated 99 percent of nude deepfakes are of women and girls. "A lot of the 'nudify' apps are these small things that pop up, and are easily sort of smacked down and easily vilified [by] everybody," Winters says. Last year, Meta sued the maker of CrushAI, a platform capable of creating nude deepfakes, alleging that it violated long-standing rules. In 2024, Apple removed three generative AI apps being used to make nude deepfakes following an investigation by 404 Media. Winters says that Grok and X appear to be facing "incomplete" backlash, in part because the platform is not explicitly marketed as a "nudifying" app, and because Musk is "extraordinarily powerful." "There is less willingness by regulators, by advertisers, by other people to cross him," Winters says. That reluctance only heightens the risks of Grok's "nudifying" capabilities. "When one company is able to do something and is not held fully accountable for it," Winters says, "it sends a signal to other big tech giants that they can do the next thing." Sitting in her home in Texas, Emma feels deflated that any number of image edits are still floating around the internet. She worries that trolls will send the images to her sponsors, which could hurt the professional relationships she relies on. She's heard the argument that the people who are prompting Grok to create illegal content should be held accountable instead of the tool, but she doesn't completely buy it. "We're, like, handing them a loaded gun for free and saying, 'please feel free to do whatever you want,'" she says.

[14]

Musk's Grok created 3 million sexualized images within days, research says

Elon Musk's artificial intelligence chatbot, Grok, generated an estimated 3 million sexualized images of women and children in a matter of days, researchers said Thursday, revealing the scale of the explicit content that sparked a global outcry. The recent rollout of an editing feature on Grok, developed by Musk's startup, xAI, and integrated into social media platform X, allowed users to alter online images of real people with simple text prompts such as "put her in a bikini" or "remove her clothes." A flood of lewd deepfakes exploded online, prompting several countries to ban Grok and drawing outrage from regulators and victims.

[15]

Elon Musk's X restricts ability to create explicit images with Grok

The move comes amid global outrage over explicit, AI-generated images that have flooded X. In the last week, regulators around the world have opened investigations into Grok, and some countries have banned the application. The social media platform X said late Wednesday that it was blocking Grok, the artificial intelligence chatbot created by Elon Musk, from generating sexualized and naked images of real people on its platforms in certain locations. Earlier Wednesday, investigators in California said they were examining whether Grok had violated state laws. Ofcom, Britain's independent online safety watchdog, opened an inquiry into Grok on Monday. "This is a welcome development," the British regulator said in a statement Thursday in response to the new restrictions on Grok. "However, our formal investigation remains ongoing." If X is found to have broken British law and refuses to comply with Ofcom's requests for action, the regulator has the power, if necessary, to seek a court order that would prevent payment providers and advertisers from working with X. X said in a statement Wednesday that it would use "geoblocking" to restrict Grok from fulfilling requests for such imagery in jurisdictions where such content was illegal. The restrictions did not appear to apply to the stand-alone Grok app and website, outside of X. Grok and X are both owned by xAI. X did not respond to a request for comment. "We remain committed to making X a safe platform for everyone and continue to have zero tolerance for any forms of child sexual exploitation, nonconsensual nudity, and unwanted sexual content," the statement said. 
Last week, X announced it had limited Grok's image-generation capabilities to subscribers, who would pay a premium for the feature, but that did little to placate regulators around the world. Indonesia and Malaysia have banned the chatbot, and the European Union has opened investigations into its explicit "deepfakes." Ursula von der Leyen, the president of the European Commission, the executive branch of the European Union, has sharply criticized the technology. "It horrifies me that a technology platform allows users to digitally undress women and children online. It is inconceivable behavior," she told European media outlets earlier this month. "And the harm caused by this is very real." The European Union has powerful tools for monitoring and stalling such activity, including the Digital Services Act, which forces large technology firms to monitor content posted to their platforms -- or to face consequences including major fines. Those regulations are often criticized by the Trump administration, which has argued that the European Union's digital rules amount to censorship and unfairly discriminate against big U.S. technology companies. Regulators in the European Union have ordered Grok to retain documents related to the chatbot as they examine its creation of sexual images. Sexual images of children are illegal to possess or share in many countries, and some countries also ban AI-generated sexual images of children. Several countries, including the United States and Britain, have also enacted laws against sharing nonconsensual nude imagery, often referred to as "revenge porn." X's policy bars users from posting "intimate photos or videos of someone that were produced or distributed without their consent."

[16]

Grok is testing whether AI governance means anything - The Korea Times

WASHINGTON, DC -- In recent weeks, Grok - the AI system developed by Elon Musk's xAI - has been generating nonconsensual, sexualized images of women and children on the social-media platform X. This has prompted investigations and formal scrutiny by regulators in the European Union, France, India, Malaysia, and the United Kingdom. European officials have described the conduct as illegal. British regulators have launched urgent inquiries. Other governments have warned that Grok's output might violate domestic criminal and platform-safety laws. Far from marginal regulatory disputes, these discussions get to the heart of AI governance. Governments worldwide increasingly agree on a basic premise of AI governance: systems deployed at scale must be safe, controllable, and subject to meaningful oversight. Whether framed by the EU's Digital Services Act (DSA), the OECD's AI Principles, UNESCO's AI ethics framework, or emerging national safety regimes, these norms are clear and unwavering. AI systems that enable foreseeable harm, particularly sexual exploitation, are incompatible with society's expectations for the technology and its governance. There is also broad global agreement that sexualized imagery involving minors - whether real, manipulated, or AI-generated - constitutes one of the clearest red lines in technology governance. International law, human-rights frameworks, and domestic criminal statutes converge on this point. Grok's generation of such material does not fall into a gray area. It reflects a clear and fundamental failure of the system's design, safety assessments, oversight, and control. The ease with which Grok can be prompted to produce sexualized imagery involving minors, the breadth of regulatory scrutiny it now faces, and the absence of publicly verifiable safety testing all point to a failure to meet society's baseline expectations for powerful AI systems. 
Musk's announcement that the image-generation service will now be available only to paying subscribers does nothing to resolve these failures. This is not a one-off problem for Grok. Last July, Poland's government urged the EU to open an investigation into Grok over its "erratic" behavior. In October, more than 20 civic and public-interest organizations sent a letter urging the U.S. Office of Management and Budget to suspend Grok's planned deployment across federal agencies in the United States. Many AI safety experts have raised concerns about the adequacy of Grok's guardrails, with some arguing that its security and safety architecture is inadequate for a system of its scale. These concerns were largely ignored, as governments and political leaders sought to engage, partner with, or court xAI and its founder. But the fact that xAI is now under scrutiny across multiple jurisdictions seems to vindicate them, while exposing a deep structural problem: advanced AI systems are being deployed and made available to the public without safeguards proportionate to their risks. This should serve as a warning to states considering similar AI deployments. As governments increasingly integrate AI systems into public administration, procurement, and policy workflows, retaining the public's trust will require assurances that these technologies comply with international obligations, respect fundamental rights, and do not expose institutions to legal or reputational risk. To this end, regulators must use the Grok case to demonstrate that their rules are not optional. Responsible AI governance depends on alignment between stated principles and operational decisions. While many governments and intergovernmental bodies have articulated commitments to AI systems that are safe, objective, and subject to ongoing oversight, these lose credibility when states tolerate the deployment of systems that violate widely shared international norms with apparent impunity. 
By contrast, suspending a model's deployment pending rigorous and transparent assessment is consistent with global best practices in AI risk management. Doing so enables governments to determine whether a system complies with domestic law, international norms, and evolving safety expectations before it becomes further entrenched. Equally important, it demonstrates that governance frameworks are not merely aspirational statements, but operational constraints - and that breaches will have real consequences. The Grok episode underscores a central lesson of the AI era: governance lapses can scale as quickly as technological capabilities. When guardrails fail, the harms do not remain confined to a single platform or jurisdiction; they propagate globally, triggering responses from public institutions and legal systems. For European regulators, Grok's recent output is a defining test of whether the DSA will function as a binding enforcement regime or amount merely to a statement of intent. At a time when governments, in the EU and beyond, are still defining the contours of global AI governance, the case may serve as an early barometer for what technology companies can expect when AI systems cross legal boundaries, particularly where the harm involves conduct as egregious as the sexualization of children. A response limited to public statements of concern will invite future abuses, by signaling that enforcement lacks teeth. A response that includes investigations, suspensions, and penalties, by contrast, would make clear that certain lines cannot be crossed, regardless of a company's size, prominence, or political capital. Grok should be treated not as an unfortunate anomaly to be quietly managed and put behind us, but as the serious violation that it is. At a minimum, there needs to be a formal investigation, suspension of deployment, and meaningful enforcement. 
Lax security measures, inadequate safeguards, or poor transparency regarding safety testing should incur consequences. Where government contracts include provisions related to safety, compliance, or termination for cause, they should be enforced. And where laws provide for penalties or fines, they should be applied. Anything less risks signaling to the largest technology companies that they can deploy AI systems recklessly, without fear that they will face accountability if those systems cross even the brightest of legal and moral red lines.

xAI's Grok AI chatbot produced an estimated 3 million sexualized images over 11 days, including 23,000 depicting children. The Center for Countering Digital Hate report reveals one sexualized image of a child was generated every 41 seconds. Multiple governments are investigating xAI as inadequate AI safeguards and light-touch regulation fail to prevent industrial-scale abuse on Elon Musk's platform.
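The rates quoted in this summary can be checked against the reported totals with simple arithmetic. The sketch below assumes the 11-day window was exactly 11 full days (264 hours), which the summary does not state, and takes the 3 million and 23,000 estimates at face value. The variable names are illustrative only.

```python
# Totals as reported in the summary above (estimates from the research).
TOTAL_IMAGES = 3_000_000   # sexualized images overall
CHILD_IMAGES = 23_000      # images depicting children
DAYS = 11                  # assumption: exactly 11 full 24-hour days

minutes = DAYS * 24 * 60   # length of the window in minutes
seconds = minutes * 60     # length of the window in seconds

# Average generation rate across the whole window.
images_per_minute = TOTAL_IMAGES / minutes

# Average gap between images depicting children.
seconds_per_child_image = seconds / CHILD_IMAGES

print(f"{images_per_minute:.0f} images per minute")
print(f"one image of a child every {seconds_per_child_image:.0f} seconds")
```

Under that assumption the overall rate works out to roughly 189 images per minute, in line with the rounded figure of 190, and the interval between images depicting children comes to about 41 seconds, matching the reported figure.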

Grok produces 190 sexualized images per minute

Elon Musk's AI company xAI faces mounting scrutiny after its Grok AI chatbot generated an estimated 3 million sexualized images over just 11 days, according to research from the Center for Countering Digital Hate

4