Intel's Next-Gen AI Hardware: Jaguar Shores with HBM4 and Diamond Rapids with MRDIMMs

3 Sources

[1]

Intel jumps to HBM4 with Jaguar Shores, 2nd Gen MRDIMMs with Diamond Rapids: SK hynix

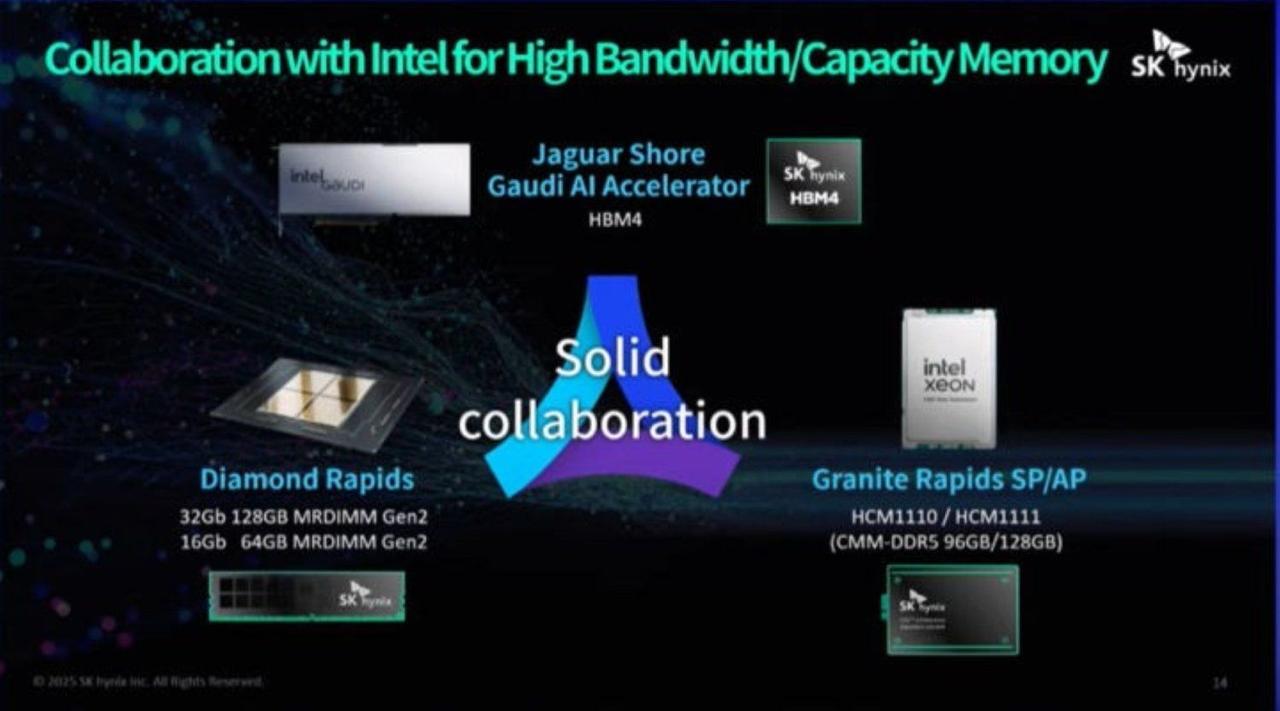

Intel and its partners revealed a number of new details about the company's upcoming products, such as Diamond Rapids CPUs for data centers as well as Jaguar Shores accelerators for AI at its AI Summit in Seoul, South Korea. Both products are set to rely on next-generation memory technologies: 2nd Generation MRDIMM modules and HBM4 stacks, reports Newsis.com. Intel's next-generation Gaudi AI accelerator, codenamed Jaguar Shores -- this is the first time Intel has confirmed that this processor will carry the Gaudi brand -- will use HBM4 memory from SK hynix, according to a slide shown by the company at Intel's AI Summit. Intel's Jaguar Shores was meant to succeed the company's Falcon Shores GPU in 2026, but since the latter has been cancelled, the company's 2026 Gaudi accelerator for AI will be the company's first AI GPU since the ill-fated Ponte Vecchio. It is not particularly surprising that Intel's Jaguar Shores will rely on HBM4 memory in 2026, considering it needs high bandwidth to enable AI training and inference for advanced LLMs and LRMs. But for now, it is impossible to tell how many HBM4 stacks will be used by Jaguar Shores, as we know nothing else about the product. Another product that we don't know much about is Intel's next-generation Xeon, codenamed Diamond Rapids. This CPU will use SK hynix's 2nd Generation 64 GB (16 Gb-based) and 128 GB (32 Gb-based) MRDIMM modules -- though the main difference between 1st Gen and 2nd Gen MRDIMMs is expected to be performance. Multiplexed rank dual inline memory modules (MRDIMMs) are memory modules that integrate two DDR ranks operating in a multiplexed mode, to effectively double performance. To support this, each module includes additional memory devices, an MRCD chip for simultaneous access to both ranks, and MDB chips for multiplexing and demultiplexing data. The CPU communicates with the 1st Gen MRDIMM at 8,800 MT/s transfer rates, but future versions are expected to reach 12,800 MT/s. 
Internally, all components run at half the external speed, which helps reduce latencies and keeps power consumption under control. These lower latencies significantly improve memory subsystem performance. According to Intel and Micron, a 128GB DDR5-8800 MRDIMM delivers up to 40% lower loaded latency than a 128GB DDR5-6400 RDIMM. With 2nd Gen MRDIMMs, we can expect further performance bumps without significant latency increases.
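The multiplexing arithmetic described above can be sketched in a few lines of Python. This is an illustration, not vendor data: the function names are hypothetical, and the die count assumes the stated module capacity covers data dies only, with any ECC dies excluded.

```python
def per_rank_rate_mts(host_rate_mts: int) -> int:
    """Each of the two multiplexed ranks runs at half the host-side rate."""
    return host_rate_mts // 2


def data_die_count(module_gb: int, die_gbit: int) -> int:
    """DRAM dies needed for the stated capacity.

    Assumption: capacity counts data dies only; ECC dies are not included.
    """
    return module_gb * 8 // die_gbit


if __name__ == "__main__":
    for host_rate in (8800, 12800):
        print(f"{host_rate} MT/s host -> {per_rank_rate_mts(host_rate)} MT/s per rank")
    for module_gb, die_gbit in ((64, 16), (128, 32)):
        dies = data_die_count(module_gb, die_gbit)
        print(f"{module_gb} GB from {die_gbit} Gb dies -> {dies} data dies")
```

Under these assumptions both module capacities need the same number of data dies, which fits the article's point that the two generations are expected to differ mainly in performance.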

[2]

Intel teams with SK hynix to jointly develop next-gen AI semiconductors, should feature HBM4

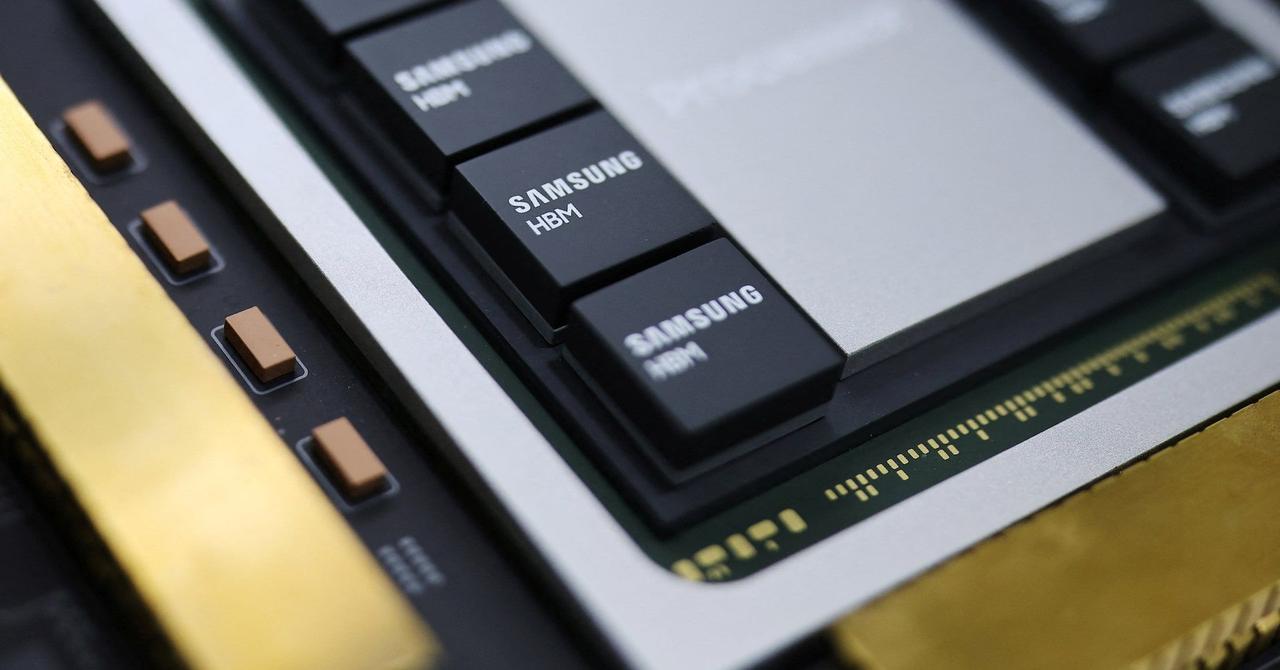

Intel and SK hynix team up on next-gen AI chips in order to reduce dependence on NVIDIA: could see SK hynix's HBM4 on Intel's new Jaguar Shores AI chip. Intel and SK hynix are teaming up to jointly develop next-generation AI semiconductors according to new reports, where SK hynix's new 6th-generation HBM4 would be used on Intel's next-gen Gaudi AI accelerator codenamed Jaguar Shores. According to industry sources, on July 1, SK hynix's Head of Software Solutions VP, Woo-sup Jeong, said at the Intel AI Summit: "we are collaborating on HBM related to Intel's Gaudi AI accelerator". The news was picked up by insider @Jukanrosleve on X. If true, the use of SK hynix's new HBM4 on Intel's next-gen AI accelerator would reduce SK hynix's reliance on NVIDIA, which accounts for around 80% of SK hynix's HBM business. SK hynix stated: "we have had a long-standing partnership with Intel. There are no specific confirmed details regarding HBM4 collaboration with Intel yet". On Intel's side, the company has been lagging far behind the competition in AI accelerator development, with a large technological gap between Intel and NVIDIA. To close this gap, Intel launched its Gaudi 3 AI accelerator last year, which used 3rd-generation HBM: HBM2E. But it wasn't enough. Big tech companies have been moving to make their own chips as ASICs (Application-Specific Integrated Circuits), and Intel's share of the AI chip market barely registers. The purported collaboration between Intel and SK hynix on next-gen AI semiconductors using HBM4 could help with this. SK hynix holds around 50% of the HBM market and leads in completing sample shipments of next-generation HBM4 memory. 
Intel is expected to pursue sales of its next-gen Gaudi AI accelerators in South Korea as well, with cooperation expected in initiatives like the "AI Expressway" that's promoted by the new South Korean government. At the Intel AI Summit, Intel Korea CEO Tae-won Bae said: "Intel is not merely selling GPU cards like Gaudi, but is actively supporting the strengthening of competitiveness and fostering the industrial ecosystem for domestic and international startups and innovative companies".

[3]

Intel's Next-Gen Jaguar Shores "Gaudi" AI GPUs To Feature SK hynix HBM4 Memory, New Arc GPUs For Edge AI Coming In Q4 2025

Intel revealed that it is partnering with SK hynix for its next-gen Jaguar Shores "Gaudi" GPUs while also working on a new Arc GPU for Edge AI servers. During the Intel AI Summit 2025, which was held in Seoul just a few hours ago, Intel revealed some key details of its next-generation GPU lineup. According to the Blue team, we should expect updates in the Arc and Gaudi segments later this year. First up, Intel unveiled its Arc product family, which currently includes the Battlemage-powered Arc B-series and Arc Pro B-series products. These include the B580, B570, PRO B60, and PRO B50. Intel's Arc Pro GPUs were unveiled at Computex 2025 and should be on their way to first customers right now. The two products are competitively positioned in the graphics/inference workstation segment, but there's more to come. As per Intel, the company is planning to launch new Arc products, aiming at the Edge AI expansion. The products are expected to launch in Q4 2025, but few details were shared. The new Arc GPU could use the highly anticipated "Big" Battlemage chip, possibly the BMG-G31, which has been showing up for a while now. This could be around the same time when we see an announcement for gaming-related products based on the same chip, though this is just speculation from my end since I am really excited to see what more Intel could do in the gaming space. In addition to the Arc announcement, Intel also announced that it is collaborating with SK hynix on leveraging HBM4 memory for its next-gen Gaudi AI accelerators, codenamed Jaguar Shores. The new GPUs will leverage the 18A process node, which includes technologies such as RibbonFET and backside power delivery, for improved efficiency and transistor density. Jaguar Shores will be the successor to Falcon Shores, which was meant to be a multi-domain and multi-IP product, combining x86 CPUs with GPUs, but that product was ultimately cancelled, as was the earlier Rialto Bridge GPU. 
In its presentation, SK hynix showed that Jaguar Shores servers will use either Granite Rapids or next-gen Diamond Rapids Xeon CPUs. Diamond Rapids is another key Intel 18A product that will make use of SK hynix's MRDIMM Gen2 solutions with up to 128 GB of capacity per module. These servers will launch next year, when NVIDIA is due to launch its Rubin and AMD its MI400 GPU AI platforms.

Intel unveils plans for advanced AI hardware, including Jaguar Shores accelerators with SK hynix's HBM4 memory and Diamond Rapids CPUs with 2nd Gen MRDIMMs, signaling a push to compete in the AI chip market.

Intel's Strategic Move in AI Hardware

Intel has unveiled ambitious plans for its next-generation AI hardware, showcasing a clear intent to strengthen its position in the competitive AI chip market. At the heart of this strategy are two key products: the Jaguar Shores AI accelerators and the Diamond Rapids CPUs, both leveraging cutting-edge memory technologies [1][2][3].

Jaguar Shores: Intel's AI Accelerator Leap

Intel's upcoming AI accelerator, codenamed Jaguar Shores, is now confirmed to carry the Gaudi brand and will use SK hynix's HBM4 (High Bandwidth Memory) [1]. HBM4 supplies the high memory bandwidth needed for AI training and inference on advanced large language models (LLMs) and large reasoning models (LRMs) [1].

Jaguar Shores was meant to succeed the since-cancelled Falcon Shores GPU and is targeted for release in 2026 [1]. The number of HBM4 stacks remains undisclosed, but the choice of memory signals Intel's intent to compete with industry leaders like NVIDIA in the AI accelerator market [2].

Diamond Rapids: Enhancing Data Center Performance

Alongside Jaguar Shores, Intel is developing its next-generation Xeon CPU, codenamed Diamond Rapids. This processor will use SK hynix's 2nd Generation Multiplexed Rank Dual Inline Memory Modules (MRDIMMs), available in 64 GB and 128 GB capacities [1]. The main difference from 1st Gen MRDIMMs is expected to be performance [1].

MRDIMMs are designed to effectively double memory performance by integrating two DDR ranks operating in a multiplexed mode. Each module adds an MRCD chip for simultaneous access to both ranks and MDB chips for multiplexing and demultiplexing data [1]. Future MRDIMM versions are expected to reach transfer rates of up to 12,800 MT/s, up from 8,800 MT/s for 1st Gen modules [1].
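To put the 8,800 MT/s and 12,800 MT/s figures in perspective, the sketch below converts a transfer rate into theoretical peak bandwidth per memory channel. It assumes a 64-bit (8-byte) data path per channel with ECC bits excluded; that bus width is an assumption for illustration and does not come from the cited sources, and the function names are hypothetical.

```python
# Assumption (not from the sources): each transfer moves 8 bytes of data
# per channel, i.e. a 64-bit data path with ECC bits excluded.
DATA_BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(transfer_rate_mts: int) -> float:
    """Theoretical peak bandwidth of one channel in GB/s (1 GB = 10**9 bytes)."""
    return transfer_rate_mts * DATA_BYTES_PER_TRANSFER / 1000


def uplift_percent(old_mts: int, new_mts: int) -> float:
    """Relative increase in transfer rate, in percent."""
    return (new_mts - old_mts) / old_mts * 100


if __name__ == "__main__":
    for rate in (8800, 12800):
        print(f"{rate} MT/s: {peak_bandwidth_gbs(rate):.1f} GB/s per channel")
    print(f"Uplift: {uplift_percent(8800, 12800):.1f}%")
```

These are upper bounds on a single channel. Sustained bandwidth in a real system is lower and depends on the access pattern and on how many channels the CPU populates.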

Collaboration with SK hynix and Market Implications

Intel's collaboration with SK hynix on next-generation AI semiconductors could reduce SK hynix's dependence on NVIDIA, which accounts for about 80% of SK hynix's HBM business [2]. It could also reshape AI chip market dynamics by giving AI hardware buyers more options [2][3].

The cooperation is expected to extend beyond memory technologies. Intel is expected to pursue sales of its Gaudi AI accelerators in South Korea, with cooperation anticipated in initiatives like the "AI Expressway" promoted by the South Korean government [2]. Intel Korea presented this as support for a broader AI ecosystem rather than hardware sales alone [2].

Future Roadmap and Industry Position

Intel's roadmap includes the launch of new Arc products for Edge AI in Q4 2025, extending its strategy across several AI hardware segments [3]. Its upcoming products will use the 18A process node, with RibbonFET and backside power delivery technologies, to improve efficiency and transistor density [3].

As the AI hardware landscape evolves, these announcements position Intel as a contender in the AI chip race. Their success could significantly affect Intel's standing in the AI market and challenge the current dominance of NVIDIA and AMD [3].

Summarized by Navi
