Meta Enhances Safety Measures for Teen and Child-Focused Instagram Accounts

6 Sources

[1]

Meta is adding new safety features to kid-focused IG accounts run by adults

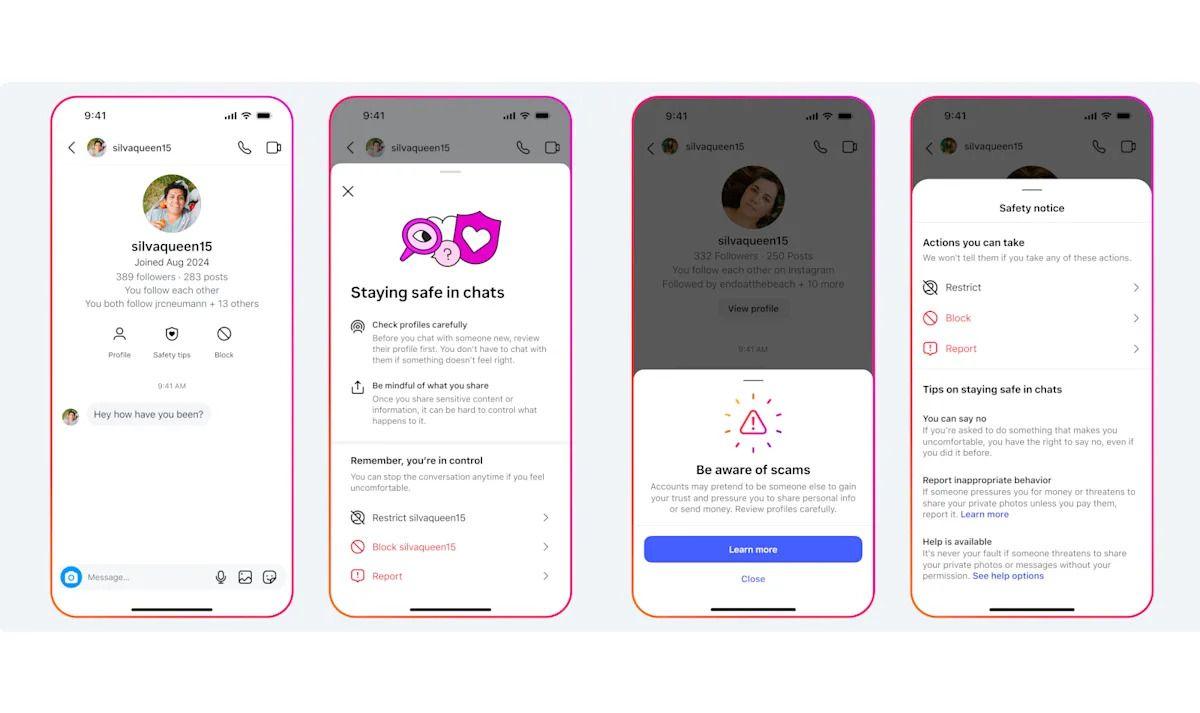

They'll protect those accounts from users who leave sexual comments and ask for sexual images in DMs.

Meta is adding some of its teen safety features to Instagram accounts featuring children, even if they're run by adults. While children under 13 years of age aren't allowed to sign up on the social media app, Meta allows adults like parents and managers to run accounts for children and post videos and photos of them. The company says that these accounts are "overwhelmingly used in benign ways," but they're also targeted by predators who leave sexual comments and ask for sexual images in DMs. In the coming months, the company is giving these adult-run kid accounts its strictest message settings to prevent unsavory DMs. It will also automatically turn on Hidden Words for them so that account owners can filter out unwanted comments on their posts. In addition, Meta will avoid recommending them to accounts blocked by teen users to lessen the chances of predators finding them. The company will also make it harder for suspicious users to find them through search and will hide comments from potentially suspicious adults on their posts.

Meta says it will continue "to take aggressive action" on accounts breaking its rules: It has already removed 135,000 Instagram accounts for leaving sexual comments on and requesting sexual images from adult-managed accounts featuring children earlier this year. It also deleted an additional 500,000 Facebook and Instagram accounts linked to those original ones.

Meta introduced teen accounts on Instagram last year to automatically opt users 13 to 18 years of age into stricter privacy features. The company then launched teen accounts on Facebook and Messenger in April and is even testing AI age-detection tech to determine whether a supposed adult user has lied about their birthday so they could be moved to a teen account if needed. Since then, Meta has rolled out more and more safety features meant for younger teens. It released Location Notice in June to let younger teens know that they're chatting with someone from another country, since sextortion scammers typically lie about their location. (To note, authorities have observed a huge increase in "sextortion" cases involving kids being threatened online to send explicit images.) Meta also introduced a nudity protection feature, which blurs images in DMs detected as containing nudity, since sextortion scammers may send nude pictures first in an effort to convince a victim to reciprocate.

Today, Meta is also launching new ways for teens to view safety tips. When they chat with someone in DMs, they can now tap on the "Safety Tips" icon at the top of the conversation to bring up a screen where they can restrict, block or report the other user. Meta has also launched a combined block and report option in DMs, so that users can take both actions together in one tap.

[2]

Meta launches new teen safety features, removes 635,000 accounts that sexualize children

Instagram parent company Meta has introduced new safety features aimed at protecting teens who use its platforms, including information about accounts that message them and an option to block and report accounts with one tap. The company also announced Wednesday that it has removed thousands of accounts that were leaving sexualized comments or requesting sexual images from adult-run accounts of kids under 13. Of these, 135,000 were commenting and another 500,000 were linked to accounts that "interacted inappropriately," Meta said in a blog post. The heightened measures arrive as social media companies face increased scrutiny over how their platform affects the mental health and well-being of younger users. This includes protecting children from predatory adults and scammers who ask -- then extort -- them for nude images. Meta said teen users blocked more than a million accounts and reported another million after seeing a "safety notice" that reminds people to "be cautious in private messages and to block and report anything that makes them uncomfortable." Earlier this year, Meta began to test the use of artificial intelligence to determine if kids are lying about their ages on Instagram, which is technically only allowed for those over 13. If it is determined that a user is misrepresenting their age, the account will automatically become a teen account, which has more restrictions than an adult account. Teen accounts are private by default. Private messages are restricted so teens can only receive them from people they follow or are already connected to. In 2024, the company made teen accounts private by default. Meta faces lawsuits from dozens of U.S. states that accuse it of harming young people and contributing to the youth mental health crisis by knowingly and deliberately designing features on Instagram and Facebook that addict children to its platforms.

[3]

Meta launches new teen safety features, removes 635,000 accounts that sexualize children

Instagram parent company Meta has introduced new safety features aimed at protecting teens who use its platforms, including information about accounts that message them and an option to block and report accounts with one tap. The company also announced Wednesday that it has removed thousands of accounts that were leaving sexualized comments or requesting sexual images from adult-run accounts of kids under 13. Of these, 135,000 were commenting and another 500,000 were linked to accounts that "interacted inappropriately," Meta said in a blog post. The heightened measures arrive as social media companies face increased scrutiny over how their platform affects the mental health and well-being of younger users. This includes protecting children from predatory adults and scammers who ask -- then extort -- them for nude images. Meta said teen users blocked more than a million accounts and reported another million after seeing a "safety notice" that reminds people to "be cautious in private messages and to block and report anything that makes them uncomfortable." Earlier this year, Meta began to test the use of artificial intelligence to determine if kids are lying about their ages on Instagram, which is technically only allowed for those over 13. If it is determined that a user is misrepresenting their age, the account will automatically become a teen account, which has more restrictions than an adult account. Teen accounts are private by default. Private messages are restricted so teens can only receive them from people they follow or are already connected to. In 2024, the company made teen accounts private by default. Meta faces lawsuits from dozens of U.S. states that accuse it of harming young people and contributing to the youth mental health crisis by knowingly and deliberately designing features on Instagram and Facebook that addict children to its platforms.

[4]

Meta launches new teen safety features, removes 635,000 accounts that sexualize children

Instagram parent company Meta has introduced new safety features aimed at protecting teens who use its platforms, including information about accounts that message them and an option to block and report accounts with one tap. The company also announced Wednesday that it has removed thousands of accounts that were leaving sexualized comments or requesting sexual images from adult-run accounts of kids under 13. Of these, 135,000 were commenting and another 500,000 were linked to accounts that "interacted inappropriately," Meta said in a blog post. The heightened measures arrive as social media companies face increased scrutiny over how their platform affects the mental health and well-being of younger users. This includes protecting children from predatory adults and scammers who ask -- then extort -- them for nude images. Meta said teen users blocked more than a million accounts and reported another million after seeing a "safety notice" that reminds people to "be cautious in private messages and to block and report anything that makes them uncomfortable." Earlier this year, Meta began to test the use of artificial intelligence to determine if kids are lying about their ages on Instagram, which is technically only allowed for those over 13. If it is determined that a user is misrepresenting their age, the account will automatically become a teen account, which has more restrictions than an adult account. Teen accounts are private by default. Private messages are restricted so teens can only receive them from people they follow or are already connected to. In 2024, the company made teen accounts private by default. Meta faces lawsuits from dozens of U.S. states that accuse it of harming young people and contributing to the youth mental health crisis by knowingly and deliberately designing features on Instagram and Facebook that addict children to its platforms.

[5]

Meta Launches New Teen Safety Features, Removes 635,000 Accounts That Sexualize Children

Instagram parent company Meta has introduced new safety features aimed at protecting teens who use its platforms, including information about accounts that message them and an option to block and report accounts with one tap. The company also announced Wednesday that it has removed thousands of accounts that were leaving sexualized comments or requesting sexual images from adult-run accounts of kids under 13. Of these, 135,000 were commenting and another 500,000 were linked to accounts that "interacted inappropriately," Meta said in a blog post. The heightened measures arrive as social media companies face increased scrutiny over how their platform affects the mental health and well-being of younger users. This includes protecting children from predatory adults and scammers who ask -- then extort -- them for nude images. Meta said teen users blocked more than a million accounts and reported another million after seeing a "safety notice" that reminds people to "be cautious in private messages and to block and report anything that makes them uncomfortable." Earlier this year, Meta began to test the use of artificial intelligence to determine if kids are lying about their ages on Instagram, which is technically only allowed for those over 13. If it is determined that a user is misrepresenting their age, the account will automatically become a teen account, which has more restrictions than an adult account. Teen accounts are private by default. Private messages are restricted so teens can only receive them from people they follow or are already connected to. In 2024, the company made teen accounts private by default. Meta faces lawsuits from dozens of U.S. states that accuse it of harming young people and contributing to the youth mental health crisis by knowingly and deliberately designing features on Instagram and Facebook that addict children to its platforms.

[6]

Meta launches new teen safety features, removes 635,000 accounts that sexualise children - The Economic Times

Instagram parent company Meta has introduced new safety features aimed at protecting teens who use its platforms, including information about accounts that message them and an option to block and report accounts with one tap. The company also announced Wednesday that it has removed thousands of accounts that were leaving sexualised comments or requesting sexual images from adult-run accounts of kids under 13. Of these, 135,000 were commenting and another 500,000 were linked to accounts that "interacted inappropriately," Meta said in a blog post. The heightened measures arrive as social media companies face increased scrutiny over how their platform affects the mental health and well-being of younger users. This includes protecting children from predatory adults and scammers who ask - then extort - them for nude images. Meta said teen users blocked more than a million accounts and reported another million after seeing a "safety notice" that reminds people to "be cautious in private messages and to block and report anything that makes them uncomfortable." Earlier this year, Meta began to test the use of artificial intelligence to determine if kids are lying about their ages on Instagram, which is technically only allowed for those over 13. If it is determined that a user is misrepresenting their age, the account will automatically become a teen account, which has more restrictions than an adult account. Teen accounts are private by default. Private messages are restricted so teens can only receive them from people they follow or are already connected to. In 2024, the company made teen accounts private by default. Meta faces lawsuits from dozens of US states that accuse it of harming young people and contributing to the youth mental health crisis by knowingly and deliberately designing features on Instagram and Facebook that addict children to its platforms.

Meta introduces new safety features for Instagram accounts featuring children and teens, including stricter messaging settings and AI-powered age detection, while removing hundreds of thousands of accounts involved in inappropriate interactions.

Meta's New Safety Measures for Young Users

Meta, the parent company of Instagram, has announced a series of new safety features aimed at protecting teen and child-focused accounts on its platforms. These measures come in response to growing concerns about online predators and the impact of social media on youth mental health [1].

Source: AP

Enhanced Protection for Child-Focused Accounts

While Instagram prohibits users under 13 from creating accounts, it allows adults to manage accounts featuring children. Meta acknowledges that these accounts are primarily used benignly but have become targets for predators. In response, the company is implementing its strictest message settings for these accounts and automatically enabling Hidden Words to filter unwanted comments [1].

Account Removals and Restrictions

Meta has taken aggressive action against accounts violating its rules:

- Removed 135,000 Instagram accounts for leaving sexual comments or requesting sexual images from adult-managed child accounts

- Deleted an additional 500,000 Facebook and Instagram accounts linked to inappropriate interactions

- Implemented measures to make it harder for suspicious users to find child-focused accounts through search [2]

New Safety Features for Teens

Source: Engadget

Meta has introduced several features to enhance teen safety on its platforms:

- A "Safety Tips" icon in direct messages, allowing teens to easily restrict, block, or report other users

- A combined block and report option in DMs for quicker action

- Location Notice feature to alert younger teens when chatting with someone from another country

- Nudity protection feature that blurs images detected as containing nudity in DMs [3]

AI-Powered Age Detection

Meta has begun testing artificial intelligence to determine if users are misrepresenting their age on Instagram. If a user is found to be misrepresenting their age, the account is automatically converted to a teen account with more restrictions, including:

- Private accounts by default

- Private messages limited to people the teen follows or is already connected to [4]

Impact and User Response

The company reports that teen users have blocked over a million accounts and reported another million after seeing safety notices. These notices remind users to be cautious in private messages and to report anything that makes them uncomfortable [5].

Legal Challenges

Despite these efforts, Meta faces lawsuits from numerous U.S. states accusing the company of knowingly designing addictive features on Instagram and Facebook that harm young people and contribute to the youth mental health crisis [5].

References

Summarized by

Navi

[3]

[4]
