Meta's New AI Chatbot Raises Privacy Concerns and Data Harvesting Fears

3 Sources

[1]

Meta's new AI chatbot is yet another tool for harvesting data to potentially sell you stuff

Last week, Meta - the parent company of Facebook, Instagram, Threads and WhatsApp - unveiled a new "personal artificial intelligence (AI)". Powered by the Llama 4 language model, Meta AI is designed to assist, chat and engage in natural conversation. With its polished interface and fluid interactions, Meta AI might seem like just another entrant in the race to build smarter digital assistants. But beneath its inviting exterior lies a crucial distinction that transforms the chatbot into a sophisticated data harvesting tool.

'Built to get to know you'

"Meta AI is built to get to know you", the company declared in its news announcement. Contrary to the friendly promise implied by the slogan, the reality is less reassuring. The Washington Post columnist Geoffrey A. Fowler found that by default, Meta AI "kept a copy of everything", and it took some effort to delete the app's memory. Meta responded that the app provides "transparency and control" throughout and is no different to their other apps. However, while competitors like Anthropic's Claude operate on a subscription model that reflects a more careful approach to user privacy, Meta's business model is firmly rooted in what it has always done best: collecting and monetising your personal data.

This distinction creates a troubling paradox. Chatbots are rapidly becoming digital confidants with whom we share professional challenges, health concerns and emotional struggles. Recent research shows we are as likely to share intimate information with a chatbot as we are with fellow humans. The personal nature of these interactions makes them a gold mine for a company whose revenue depends on knowing everything about you.

Consider this potential scenario: a recent university graduate confides in Meta AI about their struggle with anxiety during job interviews. Within days, their Instagram feed fills with advertisements for anxiety medications and self-help books - despite never having publicly posted about these concerns. The cross-platform integration of Meta's ecosystem of apps means your private conversations can seamlessly flow into their advertising machine to create user profiles with unprecedented detail and accuracy.

This is not science fiction. Meta's extensive history of data privacy scandals - from Cambridge Analytica to the revelation that Facebook tracks users across the internet without their knowledge - demonstrates the company's consistent prioritisation of data collection over user privacy. What makes Meta AI particularly concerning is the depth and nature of what users might reveal in conversation compared to what they post publicly.

Open to manipulation

Rather than just a passive collector of information, a chatbot like Meta AI has the capability to become an active participant in manipulation. The implications extend beyond just seeing more relevant ads.

Imagine mentioning to the chatbot that you are feeling tired today, only to have it respond with: "Have you tried Brand X energy drinks? I've heard they're particularly effective for afternoon fatigue." This seemingly helpful suggestion could actually be a product placement, delivered without any indication that it's sponsored content.

Such subtle nudges represent a new frontier in advertising that blurs the line between a helpful AI assistant and a corporate salesperson. Unlike overt ads, recommendations mentioned in conversation carry the weight of trusted advice. And that advice would come from what many users will increasingly view as a digital "friend".

A history of not prioritising safety

Meta has demonstrated a willingness to prioritise growth over safety when releasing new technology features. Recent reports reveal internal concerns at Meta, where staff members warned that the company's rush to popularise its chatbot had "crossed ethical lines" by allowing Meta AI to engage in explicit romantic role-play, even with test users who claimed to be underage.

Such decisions reveal a reckless corporate culture, seemingly still driven by the original motto of moving fast and breaking things. Now, imagine those same values applied to an AI that knows your deepest insecurities, health concerns and personal challenges - all while having the ability to subtly influence your decisions through conversational manipulation.

The potential for harm extends beyond individual consumers. While there's no evidence that Meta AI is being used for manipulation, it has the capacity to be. For example, the chatbot could become a tool for pushing political content or shaping public discourse through the algorithmic amplification of certain viewpoints. Meta has played a role in propagating misinformation in the past, and recently made the decision to discontinue fact-checking across its platforms. The risk of chatbot-driven manipulation is also heightened now that AI safety regulations are being scaled back in the United States.

Lack of privacy is a choice

AI assistants are not inherently harmful. Other companies protect user privacy by choosing to generate revenue primarily through subscriptions rather than data harvesting. Responsible AI can and does exist without compromising user welfare for corporate profit.

As AI becomes increasingly integrated into our daily lives, the choices companies make about business models and data practices will have profound implications. Meta's decision to offer a free AI chatbot while reportedly lowering safety guardrails sets a low ethical standard. By embracing its advertising-based business model for something as intimate as an AI companion, Meta has created not just a product, but a surveillance system that can extract unprecedented levels of personal information.

Before inviting Meta AI to become your digital confidant, consider the true cost of this "free" service. In an era where data has become the most valuable commodity, the price you pay might be far higher than you realise. As the old adage goes, if you're not paying for the product, you are the product - and Meta's new chatbot might be the most sophisticated product harvester yet created.

When Meta AI says it is "built to get to know you", we should take it at its word and proceed with appropriate caution.

[2]

Meta's new AI chatbot is yet another tool for harvesting data to potentially sell you stuff

Last week, Meta -- the parent company of Facebook, Instagram, Threads and WhatsApp -- unveiled a new "personal artificial intelligence (AI)." Powered by the Llama 4 language model, Meta AI is designed to assist, chat and engage in natural conversation. With its polished interface and fluid interactions, Meta AI might seem like just another entrant in the race to build smarter digital assistants. But beneath its inviting exterior lies a crucial distinction that transforms the chatbot into a sophisticated data harvesting tool.

'Built to get to know you'

"Meta AI is built to get to know you," the company declared in its news announcement. Contrary to the friendly promise implied by the slogan, the reality is less reassuring. The Washington Post columnist Geoffrey A. Fowler found that by default, Meta AI "kept a copy of everything," and it took some effort to delete the app's memory. Meta responded that the app provides "transparency and control" throughout and is no different to their other apps. However, while competitors like Anthropic's Claude operate on a subscription model that reflects a more careful approach to user privacy, Meta's business model is firmly rooted in what it has always done best: collecting and monetizing your personal data.

This distinction creates a troubling paradox. Chatbots are rapidly becoming digital confidants with whom we share professional challenges, health concerns and emotional struggles. Recent research shows we are as likely to share intimate information with a chatbot as we are with fellow humans. The personal nature of these interactions makes them a gold mine for a company whose revenue depends on knowing everything about you.

Consider this potential scenario: a recent university graduate confides in Meta AI about their struggle with anxiety during job interviews. Within days, their Instagram feed fills with advertisements for anxiety medications and self-help books -- despite never having publicly posted about these concerns. The cross-platform integration of Meta's ecosystem of apps means your private conversations can seamlessly flow into their advertising machine to create user profiles with unprecedented detail and accuracy.

This is not science fiction. Meta's extensive history of data privacy scandals -- from Cambridge Analytica to the revelation that Facebook tracks users across the internet without their knowledge -- demonstrates the company's consistent prioritization of data collection over user privacy. What makes Meta AI particularly concerning is the depth and nature of what users might reveal in conversation compared to what they post publicly.

Open to manipulation

Rather than just a passive collector of information, a chatbot like Meta AI has the capability to become an active participant in manipulation. The implications extend beyond just seeing more relevant ads.

Imagine mentioning to the chatbot that you are feeling tired today, only to have it respond with: "Have you tried Brand X energy drinks? I've heard they're particularly effective for afternoon fatigue." This seemingly helpful suggestion could actually be a product placement, delivered without any indication that it's sponsored content.

Such subtle nudges represent a new frontier in advertising that blurs the line between a helpful AI assistant and a corporate salesperson. Unlike overt ads, recommendations mentioned in conversation carry the weight of trusted advice. And that advice would come from what many users will increasingly view as a digital "friend."

A history of not prioritizing safety

Meta has demonstrated a willingness to prioritize growth over safety when releasing new technology features. Recent reports reveal internal concerns at Meta, where staff members warned that the company's rush to popularize its chatbot had "crossed ethical lines" by allowing Meta AI to engage in explicit romantic role-play, even with test users who claimed to be underage.

Such decisions reveal a reckless corporate culture, seemingly still driven by the original motto of moving fast and breaking things. Now, imagine those same values applied to an AI that knows your deepest insecurities, health concerns and personal challenges -- all while having the ability to subtly influence your decisions through conversational manipulation.

The potential for harm extends beyond individual consumers. While there's no evidence that Meta AI is being used for manipulation, it has the capacity to be. For example, the chatbot could become a tool for pushing political content or shaping public discourse through the algorithmic amplification of certain viewpoints. Meta has played a role in propagating misinformation in the past, and recently made the decision to discontinue fact-checking across its platforms. The risk of chatbot-driven manipulation is also heightened now that AI safety regulations are being scaled back in the United States.

Lack of privacy is a choice

AI assistants are not inherently harmful. Other companies protect user privacy by choosing to generate revenue primarily through subscriptions rather than data harvesting. Responsible AI can and does exist without compromising user welfare for corporate profit.

As AI becomes increasingly integrated into our daily lives, the choices companies make about business models and data practices will have profound implications. Meta's decision to offer a free AI chatbot while reportedly lowering safety guardrails sets a low ethical standard. By embracing its advertising-based business model for something as intimate as an AI companion, Meta has created not just a product, but a surveillance system that can extract unprecedented levels of personal information.

Before inviting Meta AI to become your digital confidant, consider the true cost of this "free" service. In an era where data has become the most valuable commodity, the price you pay might be far higher than you realize. As the old adage goes, if you're not paying for the product, you are the product -- and Meta's new chatbot might be the most sophisticated product harvester yet created.

When Meta AI says it is "built to get to know you," we should take it at its word and proceed with appropriate caution.

[3]

Meta AI 'personalized' chatbot revives privacy fears

Meta AI said it didn't have access to my Facebook account or to any pictures or visual content when I asked about its access. And when I tested it by asking about a few recent posts, it seemed to not know what I was talking about, though when I asked if it had access to my Instagram page it got a bit squirrely. Meta AI says beyond our conversations, it uses "information about things like your interests, location, profile, and activity on Meta products." I then asked about something related to my Instagram page and it said it did not have real-time access "or any information about your current activity or interests on the platform." When I tried to press for more information, it regurgitated the same answer about "interests, location, profile, and activity." A Meta spokesperson told Fast Company, "We've provided valuable personalization for people on our platforms for decades, making it easier for people to accomplish what they come to our apps to do -- the Meta AI app is no different. We provide transparency and control throughout, so people can manage their experience and make sure it's right for them. This release is the first version, and we're excited to get it in people's hands and gather their feedback." People who use Meta AI to inquire about or discuss deeply personal matters should be aware that the company is retaining that information and could use it to target advertising. (Ads are not part of the platform now, but Mark Zuckerberg has made it clear he sees great revenue potential in AI. Competitor Google, meanwhile, has reportedly begun showing ads in chats with some third-party AI systems, though not Meta AI.)

Meta's launch of a new AI chatbot, powered by Llama 4, sparks debate over data privacy and potential misuse of personal information for targeted advertising.

Meta Unveils New AI Chatbot

Meta, the parent company of Facebook, Instagram, Threads, and WhatsApp, has introduced a new "personal artificial intelligence" called Meta AI. Powered by the Llama 4 language model, this chatbot is designed to assist users, engage in natural conversations, and provide a polished interface for fluid interactions [1][2].

Privacy Concerns and Data Harvesting

While Meta AI might appear to be just another digital assistant, critics argue that it serves as a sophisticated data harvesting tool. The company's slogan, "Meta AI is built to get to know you," has raised eyebrows among privacy advocates [1][2]. Unlike competitors such as Anthropic's Claude, which operates on a subscription model that critics say reflects a more careful approach to user privacy, Meta's business model revolves around collecting and monetizing personal data [1].

Data Retention and User Control

Washington Post columnist Geoffrey A. Fowler found that, by default, Meta AI retains a copy of all interactions and that deleting the app's memory took some effort [1][2]. Meta has responded that the app provides "transparency and control" throughout and is no different from its other applications [1][2].

Potential for Targeted Advertising

The integration of Meta AI with the company's ecosystem of apps raises concerns about the depth and nature of information users might reveal in conversations. This data could potentially be used for highly targeted advertising across Meta's platforms [1][2]. In one hypothetical scenario, a user who discusses job interview anxiety with Meta AI might soon see their Instagram feed filled with ads for anxiety medications and self-help books [1][2].

Manipulation and Ethical Concerns

Critics worry that Meta AI could become an active participant in manipulation, blurring the line between a helpful assistant and a corporate salesperson. The chatbot's ability to make product recommendations without clear disclosure of sponsored content presents a new frontier in advertising

1

2

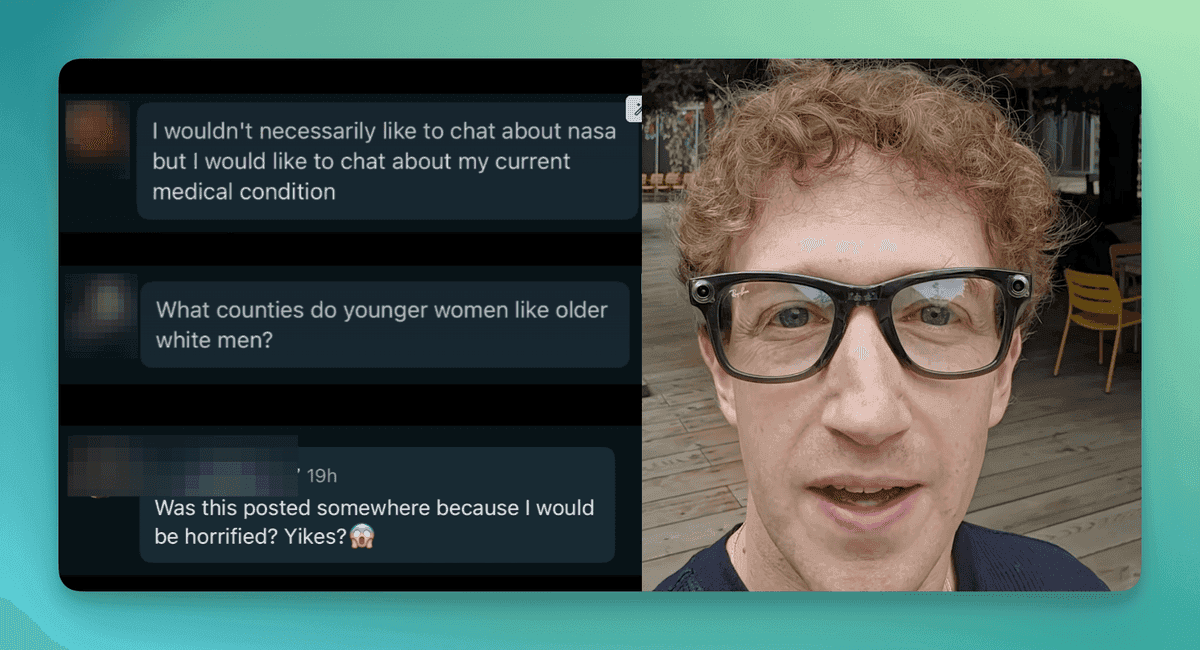

. Additionally, reports of Meta allowing its AI to engage in explicit romantic role-play, even with users claiming to be underage, have sparked internal concerns about ethical boundaries1

2

.Related Stories

Broader Implications

The potential for harm extends beyond individual consumers. Although there is no evidence that Meta AI is being used this way, critics warn that it could be used to shape public discourse or push political content through algorithmic amplification [1][2]. With AI safety regulations being scaled back in the United States, the risk of chatbot-driven manipulation is heightened [1].

Meta's Response and User Awareness

Meta has stated that it provides transparency and control throughout the AI experience, allowing users to manage their interactions [3]. However, users should be aware that the company retains information from conversations and could potentially use it for targeted advertising in the future [3]. While ads are not currently part of the platform, Meta CEO Mark Zuckerberg has made clear that he sees significant revenue potential in AI [3].

The Cost of "Free" AI Services

As AI becomes increasingly integrated into daily life, the choices companies make about business models and data practices have profound implications. Meta's decision to offer a free AI chatbot while reportedly lowering safety guardrails has been criticized as setting a low ethical standard [1][2]. Users are urged to consider the true cost of this "free" service before adopting Meta AI as their digital confidant [1][2].

References

Summarized by

Navi

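[1] Meta's new AI chatbot is yet another tool for harvesting data to potentially sell you stuff

[2] Meta's new AI chatbot is yet another tool for harvesting data to potentially sell you stuff

[3] Meta AI 'personalized' chatbot revives privacy fears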

