Meta releases SAM Audio AI model to isolate and edit sounds with simple text prompts

4 Sources

4 Sources

[1]

Meta introduces new SAM AI able to isolate and edit audio

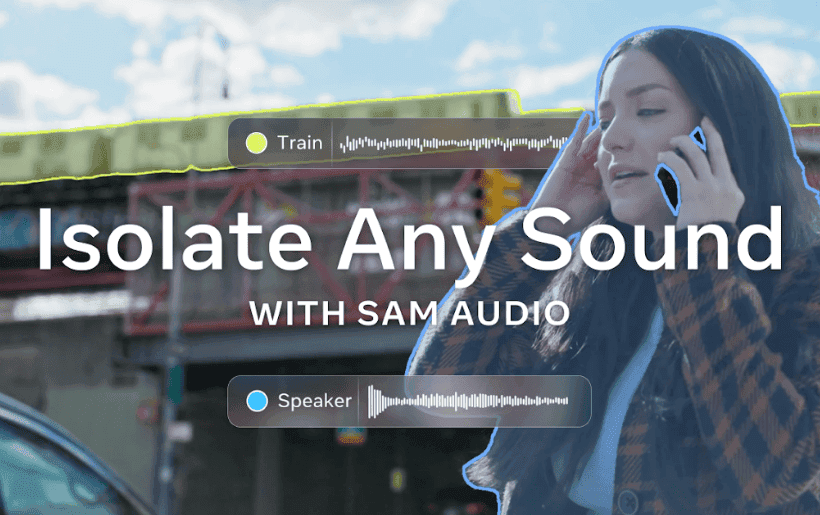

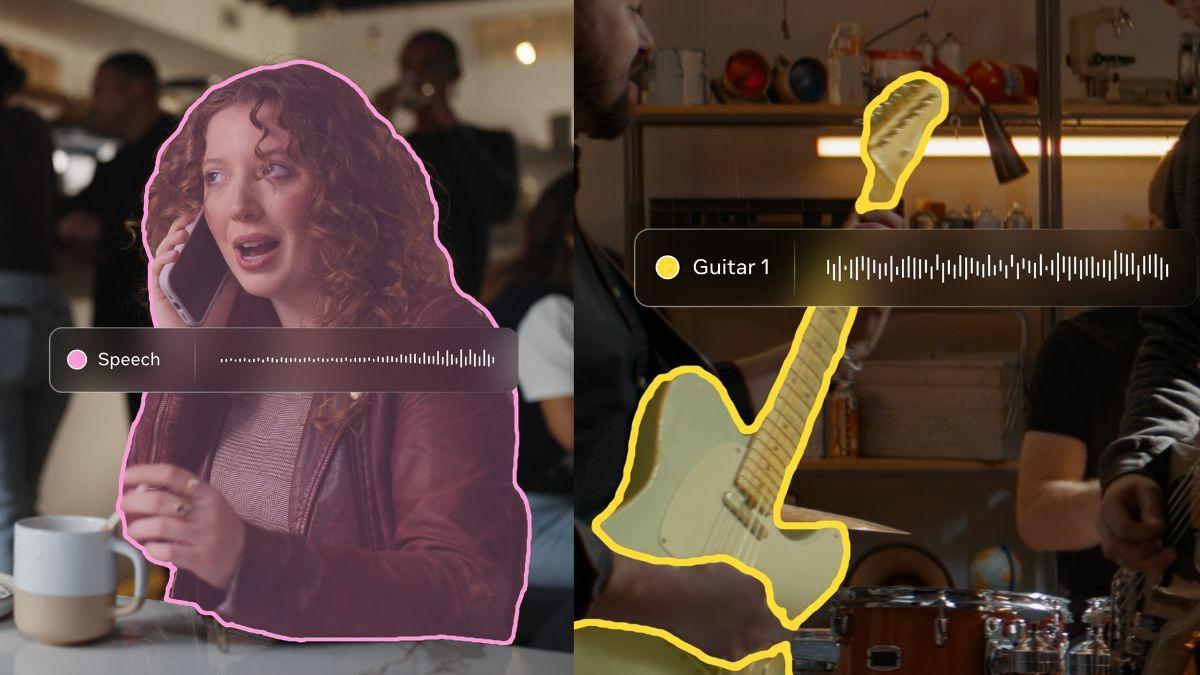

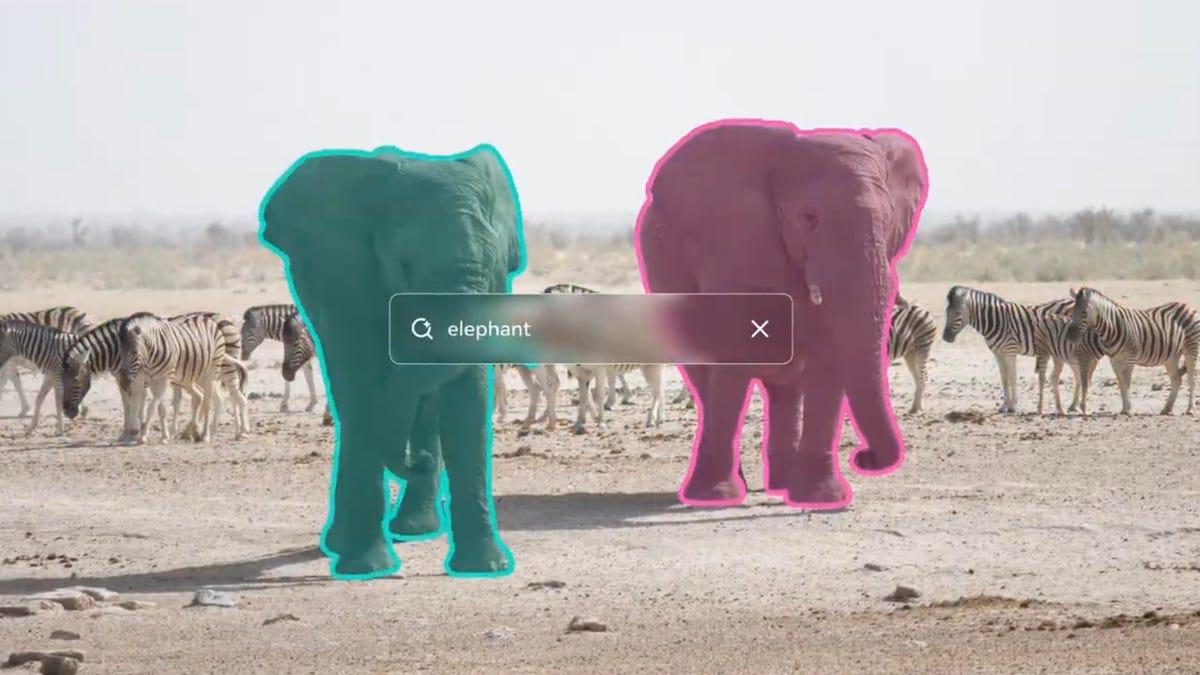

No mention of protections to stop it being used to snoop on people Want to hear just the guitar riff from a song? How about cutting out the train noise from a voice recording? Meta says its new SAM Audio model can separate and edit sounds using simple prompts, cutting down on the manual work typical of audio-editing tools. The release of the Segment Anything Model (SAM) Audio follows the previous release of Meta-made segmentation models for visual assets. Meta now claims that it has created "the first unified multimodal model for audio separation" in SAM Audio, which is available today on the company's Segment Anything Playground as well as for download. By "multimodal," Meta is referring to SAM Audio's ability to interpret three types of prompts for audio segmentation: text prompts, time-segment markings, and visual selections in video used to isolate or remove specific sounds. Take a video of a band playing, for example, and select the guitarist to have SAM Audio automatically isolate that player. Highlight the waveform of a barking dog in an outdoor recording, tell SAM to remove that sound, and it can trace and eliminate those interruptions throughout the entire file. "SAM Audio performs reliably across diverse, real-world scenarios -- using text, visual, and temporal cues," Meta said in its SAM Audio announcement. "This approach gives people precise and intuitive control over how audio is separated." The company said it sees a number of use cases for SAM Audio, like cleaning up an audio file, removing background noise, and other tasks that previously required hands-on work in audio-editing software or dedicated sound-mixing tools. That said, using AI to process audio isn't exactly a new idea - there are plenty of products out there that do what SAM Audio does, but Meta describes the space as a "fragmented" one, "with a variety of tools designed for single-purpose use cases," unlike SAM Audio's so-called unified model. Given its ability to isolate specific sounds based on user prompts, questions may naturally arise about the safety of such a model and whether it could be used to single out voices or conversations in public recordings, potentially creating a new avenue for snooping. We picked through Meta's SAM Audio page and an associated research paper to get more information on safety features built into the new model, but the company didn't cover that at all. When asked about safety, Meta only told us that if it's illegal without AI, you shouldn't use AI to do it. "As the SAM license notes, use of the SAM Materials must comply with applicable laws and regulations, including Trade Control Laws and applicable privacy and data protection laws," a Meta spokesperson told The Register, making it sound suspiciously like using SAM Audio for evil would be perfectly within its capabilities. Then again, it's possible Meta's own admission that SAM Audio has "some limitations" may mean that it's not exactly ready for those who want to use AI to reenact a modern version of The Conversation. It's still "a challenge" for SAM Audio to separate out "highly similar audio events," like picking out one voice among many or isolating a single instrument from an orchestra, Meta noted. SAM Audio also can't complete any audio separation without a prompt, and can't take audio as a prompt either, meaning feeding it a sound you want it to isolate is still outside of the scope of the bot. One area that SAM Audio could be useful for is in the accessibility space, which Meta said it's actively working toward. The company said it's partnered with US hearing aid manufacturer Starkey to look at potential integrations, as well as working with 2gether-International, an accelerator for disabled startup founders, to explore more accessibility possibilities that SAM Audio could serve. ®

[2]

Meta's new open-source AI tool helps you clean up noisy recordings just by typing

It supports text, visual, and time-based prompts for precise sound separation. Cleaning up audio usually means scrubbing timelines and tweaking filters, but Meta thinks it should be as easy as describing the sound you want. The company has released a new open-source AI model called SAM Audio that can isolate almost any sound from a complex recording using simple text prompts. Users can pull out specific noises like voices, instruments, or background sounds without digging through complicated editing software. The model is now available through Meta's Segment Anything Playground that houses other prompt-based image and video editing tools. Broadly speaking, SAM Audio is designed to understand what sound you want to work with and separate it cleanly from everything else. Meta says this opens the door to faster audio editing for use cases like music production, podcasting, film and television, accessibility tools, and research. Recommended Videos For example, a creator could isolate vocals from a band recording, remove traffic noise from a podcast, or delete a barking dog from an otherwise perfect recording, all by describing what they want the model to target. How SAM Audio works SAM Audio is a multimodal model that supports three different types of prompts. Users can describe a sound using text, click on a person or object in a video to visually identify the sound they want to isolate, or mark a time span where the sound first appears. These prompts can be used alone or combined, giving users fine-grained control over what gets separated. Under the hood, the system relies on Meta's Perception Encoder Audiovisual engine. It acts as the model's ability to recognize and understand sounds before slicing them out of the mix. To improve audio separation evaluation, Meta has also introduced SAM Audio-Bench, a benchmark for measuring how well models handle speech, music, and sound effects. It is accompanied by SAM Audio Judge, which evaluates how natural and accurate the separated audio sounds to human listeners, even without reference tracks to compare against. Meta claims these evaluations show SAM Audio performs best when different prompt types are combined and can handle audio faster than real-time, even at scale. That said, the model has clear limitations. It does not support audio-based prompts, cannot perform full separation without any prompting, and struggles with similar overlapping sounds, such as isolating a single voice from a choir. Meta says it plans to improve these areas and is already exploring real-world applications, including accessibility work with hearing-aid makers and organizations supporting people with disabilities. The launch of SAM Audio ties into Meta's broader AI push. The company is improving voice clarity on its AI glasses for noisy environments, working toward next-generation mixed reality glasses expected to arrive in 2027, and developing a conversational AI that could rival ChatGPT, signaling a wider focus on AI models that understands sound, context, and interaction.

[3]

Meta Platforms transforms audio editing with prompt-based sound separation - SiliconANGLE

Meta Platforms transforms audio editing with prompt-based sound separation Meta Platforms Inc. is bringing prompt-based editing to the world of sound with a new model called SAM Audio that can segment individual sounds from complex audio recordings. The new model, available today through Meta's Segment Anything Playground, has the potential to transform audio editing into a streamlined process that's far more fluid than the cumbersome tools used today to achieve the same goal. Just as the company's earlier Segment Anything models dramatically simplified video and image editing with prompts, SAM Audio is doing the same for sound editing. The company said in a blog post that SAM Audio has incredible potential for tasks such as music creation, podcasting, television, film, scientific research, accessibility and just about any other use case that involves sound. For instance, it makes it possible to take a recording of a band and isolate the vocals or the guitar with a single, natural language prompt. Alternatively, someone recording a podcast in a city might want to filter out the noise of the traffic - they can either turn down the volume of the passing cars or eliminate their sound entirely. The model could also be used to delete that inopportune moment when a dog starts barking in an otherwise perfect video presentation someone has just recorded. SAM Audio is the latest addition to Meta's Segment Anything model collection. Its earlier models, such as SAM 3 and SAM 3D, were all focused on using prompts to manipulate images, but until now the task of editing sound has always been much more complex. Typically, content creators have had no choice but to work with various clunky and difficult-to-use tools that can often only be applied to single-purpose use cases, Meta explained. As a unified model, SAM Audio is able to identity and edit out any kind of sound. The core innovation in SAM Audio is the Perception Encoder Audiovisual engine, which is built on Meta's open-source Perception Founder model that was released earlier this year. PE-AV can be thought of as SAM Audio's "ears," Meta explained, allowing it to comprehend the sound the user has described in the prompt, isolate it in the audio file, and then slice it out without affecting any of the other sounds. SAM Audio is a multimodal model that's able to support three kinds of prompts. The most standard way people will use it is through text prompting - for instance, someone might type "dog barking" or "singing voice" to identify a specific sound within their audio track. It also supports visual prompting, so when a user is editing the audio in a video, they can click on the person or object that's generating sound to have the model isolate or remove it, without having to go through the trouble of typing it. That could be useful in those situations where the user struggles to articulate the exact nature of the sound in question. Finally, the model also supports "span prompting," which is an entirely new kind of mode that allows users to mark the time segment where a certain sound first occurs. Meta said the three prompts can be used individually or in any combination, meaning users will have extremely precise control over how they isolate and separate different sounds. "We see so many potential use cases, including sound isolation, noise filtering, and more to help people bring their creative visions to life, and we're already using SAM Audio to help build more creative tools in our apps," Meta wrote in a blog post. Although SAM Audio isn't the first AI model focused on sound editing, the audio separation discipline is nascent. But it's something Meta hopes to grow, and to encourage further innovation in this are,a it has created a new benchmark for models of this type, called SAM Audio-Bench. The benchmark covers all major audio domains, including speech, music and general sound effects, together with text, visual and span-prompt types, Meta said. The purpose is to fairly assess all audio separation models and provide developers with a way to accurately measure how effective they are. Going by the results, Meta said SAM Audio represents a significant advance in audio separation AI, outperforming its competitors on a wide range of tasks: "Performance evaluations show that SAM Audio achieves state-of-the-art results in modality-specific tasks, with mixed-modality prompting (such as combining text and span inputs) delivering even stronger outcomes than single-modality approaches. Notably, the model operates faster than real-time (RTF ≈ 0.7), processing audio efficiently at scale from 500M to 3B parameters." Meta's claims that SAM Audio is the best model in its class aren't really a surprise, but the company did admit some limitations. For instance, it does not support audio-based prompts, which seems like a necessary capability for such models, and it also cannot perform complete audio separation without any prompting. It also struggles with "similar audio events," such as isolating an individual voice from a choir or an instrument from an orchestra, meaning there's still lots of room for improvement. SAM Audio is available to try out now in Segment Anything Playground, along with all of the company's earlier Segment Anything models for image and video editing. Meta said it's hoping to have a real-world impact with SAM Audio, particularly in terms of accessibility. To that end, it's working with the hearing-aid manufacturer Starkey Laboratories Inc. to explore how SAM Audio can be used to enhance the capabilities of its devices for people who are hard of hearing. It's also partnering with 2gether-International, a startup accelerator for disabled founders, to explore other ways SAM Audio might be used.

[4]

Meta's New AI Model Will Let You Isolate Any Sound in an Audio File

Meta says the model can be used for noise filtering and isolating sounds Meta has released another new artificial intelligence (AI) model in the Segment Anything Model (SAM) family. On Tuesday, the Menlo Park-based tech giant released SAM Audio, a large language model (LLM) that can identify, separate, and isolate particular sounds in an audio mixture. The model can handle audio editing based on either text prompts, visual signals, or time stamps, automating the entire workflow. Like the other models in the SAM series, it is also an open-source model that comes with a permissive licence. Meta Introduces SAM Audio AI Model In a newsroom post, the tech giant announced and detailed its new audio-focused AI model. SAM Audio is currently available to download either via Meta's website, GitHub listing, or Hugging Face. Those users who would prefer to use the model's capabilities without running it locally can visit the Segment Anything Playground to test it out. The website also allows users to access all the other SAM models. Notably, it is available under the SAM Licence, a custom, Meta-owned licence that allows both research-related and commercial usage. Meta describes SAM Audio as a unified AI audio model that uses text-based commands, visual cues, and time-based instructions to identify and separate sounds from a complex mixture. Traditionally, audio editing, especially isolating individual sound elements, has required specialised tools and manual work, often with limited precision. Meta's latest entry in the SAM series addresses this gap. The model supports three types of prompting. With text prompts, users can type descriptions, such as "drum beat" or "background noise." Visual prompting allows users to click on an object or a human in a video, and if a sound is being produced from there, it will be isolated. Finally, time span prompting lets anyone mark a segment of the timeline to target a sound. To highlight an example, imagine there is an audio file of a person speaking on the phone while music plays in the background, and children's voices can be heard playing at a distance. Users can isolate any of these audio sources, be it the primary voice, the music, or the ambient noise made by the children, with a single command. Gadgets 360 staff members briefly tested the model and found it to be both fast and efficient. However, we were not able to test it in real-world situations. Under the hood, SAM Audio is a generative separation model that extracts both target and residual stems from an audio mixture. It is equipped with a flow-matching Diffusion Transformer and operates in a Descript Audio Codec - Variational Autoencoder Variant (DAC-VAE) space.

Share

Share

Copy Link

Meta has launched SAM Audio, an open-source AI model that simplifies audio editing by isolating specific sounds from complex recordings using text, visual, or time-based prompts. The unified multimodal model can separate voices, instruments, and background noise without manual editing, though it raises questions about privacy safeguards and struggles with similar overlapping sounds.

Meta Unveils Open-Source AI Model for Prompt-Based Sound Separation

Meta has released SAM Audio, a new AI model designed to isolate and edit audio through simple prompts, marking the company's expansion of its Segment Anything Model family into the audio domain

1

. The open-source AI model is now available through Meta's Segment Anything Playground and for download via the company's website, GitHub, and Hugging Face4

. Meta describes SAM Audio as "the first unified multimodal model for audio separation," capable of interpreting text prompts, visual selections in video, and time-segment markings to isolate specific sounds1

.

Source: SiliconANGLE

How the Unified Multimodal Model Works with Audio Editing

SAM Audio supports three distinct prompting methods that can be used individually or combined for precise control. Users can describe sounds using text prompts like "drum beat" or "background noise," click on people or objects in videos to visually identify sounds, or mark time spans where specific sounds first appear

4

. The core technology relies on the Perception Encoder Audiovisual engine, built on Meta's open-source Perception Founder model released earlier this year3

. This engine acts as the model's "ears," allowing it to comprehend described sounds, isolate them in audio files, and extract them without affecting other audio elements3

.

Source: Gadgets 360

Applications Span Music Production, Podcasting, and Accessibility

The AI model addresses use cases across multiple industries, including music production, podcasting, film and television, and scientific research

2

. Creators can clean up noisy recordings by removing traffic sounds from podcasts, isolate vocals from band recordings, or delete unwanted barking dogs from video presentations3

. Meta has partnered with US hearing aid manufacturer Starkey to explore potential integrations and is working with 2gether-International, an accelerator for disabled startup founders, to develop accessibility solutions1

. The model operates faster than real-time with RTF ≈ 0.7, processing audio efficiently at scale from 500M to 3B parameters3

.

Source: Digital Trends

Related Stories

Privacy Concerns and Technical Limitations Emerge

Questions about safety features have surfaced given the model's ability to isolate specific sounds based on user prompts, potentially creating avenues for surveillance. When asked about safeguards, Meta only stated that use of SAM Audio must comply with applicable laws and regulations, including data protection laws, without detailing built-in protections

1

. The company acknowledged several limitations: SAM Audio cannot perform complete audio separation without prompting, does not support audio-based prompts, and struggles with highly similar audio events like isolating one voice from a choir or a single instrument from an orchestra1

2

.New Benchmark Measures Audio Separation Performance

To advance the field of audio separation, Meta introduced SAM Audio-Bench, a benchmark covering speech, music, and sound effects across text, visual, and span-prompt types

3

. The company also released SAM Audio Judge, which evaluates how natural and accurate separated audio sounds to human listeners without requiring reference tracks2

. Performance evaluations show SAM Audio achieves state-of-the-art results in modality-specific tasks, with mixed-modality prompting delivering stronger outcomes than single-modality approaches3

. The launch connects to Meta's broader AI strategy, including improving voice clarity on AI-powered glasses for noisy environments and developing conversational AI to rival ChatGPT2

.References

Summarized by

Navi

[1]

[3]

Related Stories

Meta Unveils SAM 2: A Revolutionary AI Model for Video Object Manipulation

31 Jul 2024

Meta Unveils SAM 3 and SAM 3D: Advanced AI Models for Visual Intelligence and 3D Reconstruction

20 Nov 2025•Technology

Meta Unveils Suite of Advanced AI Models and Tools, Emphasizing Open-Source Collaboration

20 Oct 2024•Technology

Recent Highlights

1

ByteDance's Seedance 2.0 AI video generator triggers copyright infringement battle with Hollywood

Policy and Regulation

2

Demis Hassabis predicts AGI in 5-8 years, sees new golden era transforming medicine and science

Technology

3

Nvidia and Meta forge massive chip deal as computing power demands reshape AI infrastructure

Technology