Meta Unveils Video Seal: An Open-Source AI Watermarking Tool to Combat Deepfakes

4 Sources

[1]

Meta's deepfake-fighting AI video watermarking tool is here, and for some reason it's decided to call it the Video Seal

Look, forgive me, all right? It's Friday afternoon as I write this, and I know I've picked a silly image to go with an article on what is quite an important topic. But Meta has called its new AI-generated video watermarking tool the Video Seal, and sometimes the header image picks itself. Let's get down to brass tacks. Or beach balls, one of the two. (Stop it now - Ed).

Deepfakes are a serious concern, with a recent Ofcom survey reporting that two in five participants said they'd seen at least one AI-generated deepfake in the last six months. Deepfake content has the potential to harm and spread disinformation, so Meta releasing a tool to watermark AI-generated videos is probably a net benefit for the world (via TechCrunch).

Meta Video Seal is open source, and designed to be integrated into existing software to apply imperceptible watermarks to AI-generated video clips.

Speaking to TechCrunch, Pierre Fernandez, an AI research scientist at Meta, said: "We developed Video Seal to provide a more effective video watermarking solution, particularly for detecting AI-generated videos and protecting originality.

"While other watermarking tools exist, they don't offer sufficient robustness to video compression, which is very prevalent when sharing content through social platforms; weren't efficient enough to run at scale; weren't open or reproducible; or were derived from image watermarking, which is suboptimal for videos."

Meta has already released a non-video specific watermarking tool, Watermark Anything, and a tool specifically for audio, called, you guessed it, Audio Seal. This latest, video-focussed effort is designed to be much more resilient than similar software from DeepMind and Microsoft, although Fernandez admits that heavy compression and significant edits may alter the watermarks or "render them unrecoverable."
Still, anything more resistant to removal than the current options strikes me as a good thing, as a quick Google reveals multiple methods of AI-generated content watermark removal, which I won't link to here. The info is out there, and that means potentially harmful content is currently going unchecked. With a watermark in place, news outlets and fact checkers the world over can correctly determine whether a video is potentially real, or created by AI.

The real uphill battle now is widespread adoption, as no matter how effective the tool is at flagging AI videos, it'll matter not a jot if no-one uses it. To that end, Meta also has plans to launch a public leaderboard, called the Meta Omni Seal Bench, which will compare the performance of watermarking methods, and is currently organising workshops at next year's ICLR AI conference. Fernandez says the team hopes more AI researchers and developers will integrate watermarking into their work, and that they want to collaborate with the industry and academic community to "progress faster in the field."

So come for the seals, stay for the fight against AI-generated misinformation. Sometimes it can feel like we're drowning under a wave of an AI industry moving incredibly quickly into the future -- with minimal checks and balances -- so it's difficult not to applaud Meta for making attempts to rein in some of the more egregious side effects.

[2]

Meta debuts a tool for watermarking AI-generated videos | TechCrunch

Throw a stone and you'll likely hit a deepfake. The commoditization of generative AI has led to an absolute explosion of fake content online: According to ID verification platform Sumsub, there's been a 4x increase in deepfakes worldwide from 2023 to 2024. In 2024, deepfakes accounted for 7% of all fraud, per Sumsub, ranging from impersonations and account takeovers to sophisticated social engineering campaigns. In what it hopes will be a meaningful contribution to the fight against deepfakes, Meta is releasing a tool to apply imperceptible watermarks to AI-generated video clips. Announced on Thursday, the tool, called Meta Video Seal, is available in open source and designed to be integrated into existing software. The tool joins Meta's other watermarking tools, Watermark Anything (re-released today under a permissive license) and Audio Seal. "We developed Video Seal to provide a more effective video watermarking solution, particularly for detecting AI-generated videos and protecting originality," Pierre Fernandez, AI research scientist at Meta, told TechCrunch in an interview. Video Seal isn't the first technology of its kind. DeepMind's SynthID can watermark videos, and Microsoft has its own video watermarking methodologies. But Fernandez asserts that many existing approaches fall short. "While other watermarking tools exist, they don't offer sufficient robustness to video compression, which is very prevalent when sharing content through social platforms; weren't efficient enough to run at scale; weren't open or reproducible; or were derived from image watermarking, which is suboptimal for videos," Fernandez said. In addition to a watermark, Video Seal can add a hidden message to videos that can later be uncovered to determine their origins. Meta claims that Video Seal is resilient against common edits like blurring and cropping, as well as popular compression algorithms. 
Fernandez admits that Video Seal has certain limitations, mainly the trade-off between how perceptible the tool's watermarks are and their overall resilience to manipulation. Heavy compression and significant edits may alter the watermarks or render them unrecoverable, he added. Of course, the bigger problem facing Video Seal is that devs and industry won't have much reason to adopt it, particularly those already using proprietary solutions. In a bid to address that, Meta is launching a public leaderboard, Meta Omni Seal Bench, dedicated to comparing the performance of various watermarking methods, and organizing a workshop on watermarking this year at ICLR, a major AI conference. "We hope that more and more AI researchers and developers will integrate some form of watermarking into their work," Fernandez said. "We want to collaborate with the industry and the academic community to progress faster in the field."

[3]

Meta to Combat Deepfakes With This Open-Source AI Watermarking Tool

The watermark is said to be resilient against blurring and cropping

Meta is releasing a new tool that can add an invisible watermark to videos generated using artificial intelligence (AI). Dubbed Video Seal, the new tool joins the company's existing watermarking tools, Audio Seal and Watermark Anything. The company suggested that the tool will be open-sourced; however, it has yet to publish the code. Interestingly, the company claims that the watermarking technique will not affect the video quality, yet will be resilient against the common methods of removing watermarks from videos.

Deepfakes have flooded the Internet ever since the rise of generative AI. Deepfakes are synthetic content, usually generated using AI, that shows false and misleading objects, people, or scenarios. Such content is often used to spread misinformation about a public figure, create fake sexual content, or carry out fraud and scams. Additionally, as AI systems get better, deepfake content will become harder to recognise, making it even more difficult to differentiate from real content.

According to a McAfee survey, 70 percent of people already feel that they are not confident in telling the difference between a real voice and an AI-generated voice. As per internal data by Sumsub, deepfake frauds increased by 1,740 percent in North America and by 1,530 percent in the Asia-Pacific region in 2022. The number was found to increase tenfold between 2022 and 2023.

As the concerns about deepfakes rise, many companies developing AI models have started releasing watermarking tools that can distinguish synthetic content from real content. Earlier this year, Google released SynthID to watermark AI-generated text and videos. Microsoft has also released similar tools. In addition, the Coalition for Content Provenance and Authenticity (C2PA) is also working on new standards to identify AI-generated content. Now, Meta has released its own Video Seal tool to watermark AI videos.

The researchers highlight that the tool can watermark every frame of a video with an imperceptible tag that cannot be tampered with. It is said to be resilient against techniques such as blurring, cropping, and compression software. However, despite adding the watermark, the researchers claim that the quality of the video will not be compromised. Meta has announced that Video Seal will be open-sourced under a permissive licence; however, it has yet to release the tool and its codebase publicly.

[4]

Meta unveils watermarking tool for AI videos

The business' latest announcement coincides with the release of its new AI model, Meta Motivo. Social media giant Meta has revealed a new watermarking tool for detecting videos generated by artificial intelligence (AI). The company, which counts Facebook, Instagram, Threads and WhatsApp among its ranks, has unveiled Meta Video Seal, which is open source and designed to be integrated into existing software. This latest invention echoes similar watermarking tools by DeepMind, Microsoft and OpenAI. Meta Video Seal joins Meta's other watermarking tools, Audio Seal and Watermark Anything (which was also recently re-released under a permissive license). The company claims that its new tool possesses "a comprehensive framework for neural video watermarking and a competitive open-sourced model". "Our approach jointly trains an embedder and an extractor, while ensuring the watermark robustness by applying transformations in-between, for example video codecs." According to Meta, the training for the tool is multistage, and includes image pre-training, hybrid post-training and extractor fine-tuning. "We also introduce temporal watermark propagation, a technique to convert any image watermarking model to an efficient video watermarking model without the need to watermark every high-resolution frame," Meta explained. "We present experimental results demonstrating the effectiveness of the approach in terms of speed, imperceptibility and robustness." In addition, Meta said that contributions in this work - including the codebase, models and a public demo - are open-sourced under permissive licenses. Meta's latest announcement coincides with the business today (13 December) releasing an AI model called Meta Motivo, which can control the movements of a human-like virtual agent. Meta has turned its focus towards AI in recent months. In October, it announced it would be increasing its AI expenditure as its revenue soars. 
The company revealed a significant net income increase of 35pc to $15.68bn, raising the diluted earnings per share to $6.03. CFO Susan Li expects Meta's fourth quarter revenue to be up by a further $5bn, taking it to the $45bn to $48bn range. Meta has also been keeping a close eye when it comes to AI-generated content popping up on its platforms. Earlier this year, the business revealed plans to set up a dedicated team to combat disinformation and AI misuse ahead of the EU elections, which took place in June. And last week, Meta claimed that it found AI content made up less than 1pc of election-related misinformation on its apps this year.
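Meta's description of temporal watermark propagation, quoted in the article above, says only that it avoids watermarking every high-resolution frame. One plausible reading, sketched below in Python, is to compute the watermark signal on every few frames and reuse it on the frames in between. This is an illustrative assumption, not Meta's published implementation: `propagate_watermark`, `residual_fn`, and `step` are hypothetical names, and frames are simplified to flat lists of pixel values.

```python
from typing import Callable, List, Sequence

Frame = List[float]


def propagate_watermark(
    frames: Sequence[Frame],
    residual_fn: Callable[[Frame], Frame],
    step: int = 4,
) -> List[Frame]:
    """Watermark a clip while calling the (expensive) embedder only every `step` frames.

    `residual_fn` stands in for an image watermarking model: given a frame, it
    returns the per-pixel signal to add. The signal computed on a key frame is
    reused, unchanged, on the following `step - 1` frames.
    """
    if step < 1:
        raise ValueError("step must be at least 1")
    marked: List[Frame] = []
    residual: Frame = []
    for index, frame in enumerate(frames):
        if index % step == 0:
            residual = residual_fn(frame)  # key frame: run the embedder
        marked.append([p + r for p, r in zip(frame, residual)])
    return marked


if __name__ == "__main__":
    # Stand-in embedder: a fixed alternating pattern, counted to show the saving.
    calls = 0

    def toy_residual(frame: Frame) -> Frame:
        global calls
        calls += 1
        return [0.5 if i % 2 == 0 else -0.5 for i in range(len(frame))]

    clip = [[float(t)] * 8 for t in range(10)]
    propagate_watermark(clip, toy_residual, step=4)
    print("frames:", len(clip), "embedder calls:", calls)
```

With `step=1` the sketch falls back to watermarking every frame, so the parameter trades embedding cost against how closely the signal tracks each frame's content.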

Meta has introduced Video Seal, an open-source tool designed to watermark AI-generated videos. This technology aims to combat the rising threat of deepfakes and misinformation by providing a robust method to identify AI-created content.

Meta Introduces Video Seal to Combat Deepfakes

Meta, the parent company of Facebook and Instagram, has unveiled a new open-source tool called Video Seal, designed to watermark AI-generated videos. This development comes as part of Meta's ongoing efforts to address the growing concern of deepfakes and misinformation in the digital landscape [1][2].

The Rising Threat of Deepfakes

The proliferation of AI-generated content has led to a significant increase in deepfakes. According to ID verification platform Sumsub, there has been a 4x increase in deepfakes worldwide from 2023 to 2024, with deepfakes accounting for 7% of all fraud in 2024 [2]. This surge in synthetic content poses serious risks, including impersonations, account takeovers, and sophisticated social engineering campaigns.

Video Seal: A New Approach to Watermarking

Video Seal is designed to apply imperceptible watermarks to AI-generated video clips. The tool is open-source and can be integrated into existing software, making it accessible to developers and content creators [1][2]. Key features of Video Seal include:

- Resilience against common edits like blurring and cropping

- Robustness to popular compression algorithms

- Ability to add a hidden message to videos for origin verification
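To make the hidden-message idea concrete, here is a minimal Python sketch of a classical spread-spectrum watermark on a single grayscale frame. It is not Video Seal's method, which the sources describe as a jointly trained neural embedder and extractor. The function names, the secret `seed`, and the `strength` value are all assumptions for illustration. Each message bit adds or subtracts a pseudo-random pattern, and the extractor recovers the bit from the sign of the correlation with that pattern.

```python
import random


def make_carriers(n_bits, n_pixels, seed):
    """One pseudo-random +/-1 pattern per message bit, derived from a shared secret seed."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(n_pixels)] for _ in range(n_bits)]


def embed(frame, bits, carriers, strength=2.0):
    """Add each bit's carrier to the frame; the sign of the addition encodes the bit."""
    marked = list(frame)
    for bit, carrier in zip(bits, carriers):
        sign = 1.0 if bit else -1.0
        for i, c in enumerate(carrier):
            marked[i] += sign * strength * c
    return marked  # kept as floats and not clipped to 0..255 in this toy


def extract(frame, carriers):
    """Recover each bit from the sign of the frame's correlation with its carrier."""
    mean = sum(frame) / len(frame)
    bits = []
    for carrier in carriers:
        score = sum((p - mean) * c for p, c in zip(frame, carrier))
        bits.append(1 if score > 0 else 0)
    return bits


def demo():
    rng = random.Random(0)
    frame = [float(rng.randint(0, 255)) for _ in range(256 * 256)]  # synthetic frame
    message = [1, 0, 1, 1, 0, 0, 1, 0]
    carriers = make_carriers(len(message), len(frame), seed=1234)
    marked = embed(frame, message, carriers)
    noisy = [p + rng.gauss(0.0, 5.0) for p in marked]  # crude stand-in for compression noise
    return message, extract(marked, carriers), extract(noisy, carriers)


if __name__ == "__main__":
    sent, clean, noisy = demo()
    print("sent:             ", sent)
    print("recovered (clean):", clean)
    print("recovered (noisy):", noisy)
```

Raising `strength` makes the message survive heavier distortion but also makes the pattern more visible, which mirrors the trade-off between perceptibility and resilience that Fernandez describes.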

Pierre Fernandez, an AI research scientist at Meta, emphasized that Video Seal offers a more effective solution compared to existing watermarking tools, particularly in terms of robustness against video compression and efficiency at scale [1][2].

Complementing Existing Tools

Video Seal joins Meta's suite of watermarking tools, including Watermark Anything and Audio Seal [2]. This comprehensive approach aims to provide solutions for various types of AI-generated content, from images to audio and now video.

Challenges and Limitations

While Video Seal represents a significant step forward, it's not without limitations. Fernandez acknowledges that heavy compression and significant edits may alter the watermarks or render them unrecoverable [1]. Additionally, the tool faces the challenge of widespread adoption, as its effectiveness relies on industry-wide implementation.

Promoting Adoption and Collaboration

To encourage adoption and further development in the field, Meta is taking several steps:

- Launching a public leaderboard called Meta Omni Seal Bench to compare watermarking methods' performance [1]

- Organizing workshops at major AI conferences, such as ICLR [2]

- Collaborating with industry and academic communities to advance watermarking technology [1][2]

The Broader Context of AI Content Detection

Meta's efforts align with similar initiatives from other tech giants. Google's SynthID and Microsoft's watermarking methodologies also aim to address the challenge of identifying AI-generated content [3]. These tools collectively represent the tech industry's response to the growing concerns about the potential misuse of AI-generated media.

Implications for the Future

As AI-generated content becomes increasingly sophisticated, tools like Video Seal play a crucial role in maintaining trust in digital media. However, the effectiveness of these solutions will depend on their widespread adoption and continuous improvement to stay ahead of potential circumvention techniques [4].

Meta's commitment to open-sourcing Video Seal under a permissive license demonstrates a collaborative approach to tackling the challenges posed by AI-generated content, potentially setting a precedent for future developments in this critical area of technology and information integrity [3][4].

Summarized by Navi

[4]

Related Stories

UnMarker: Canadian Researchers Develop Tool to Remove AI Image Watermarks, Challenging Deepfake Defense Strategies

24 Jul 2025•Technology

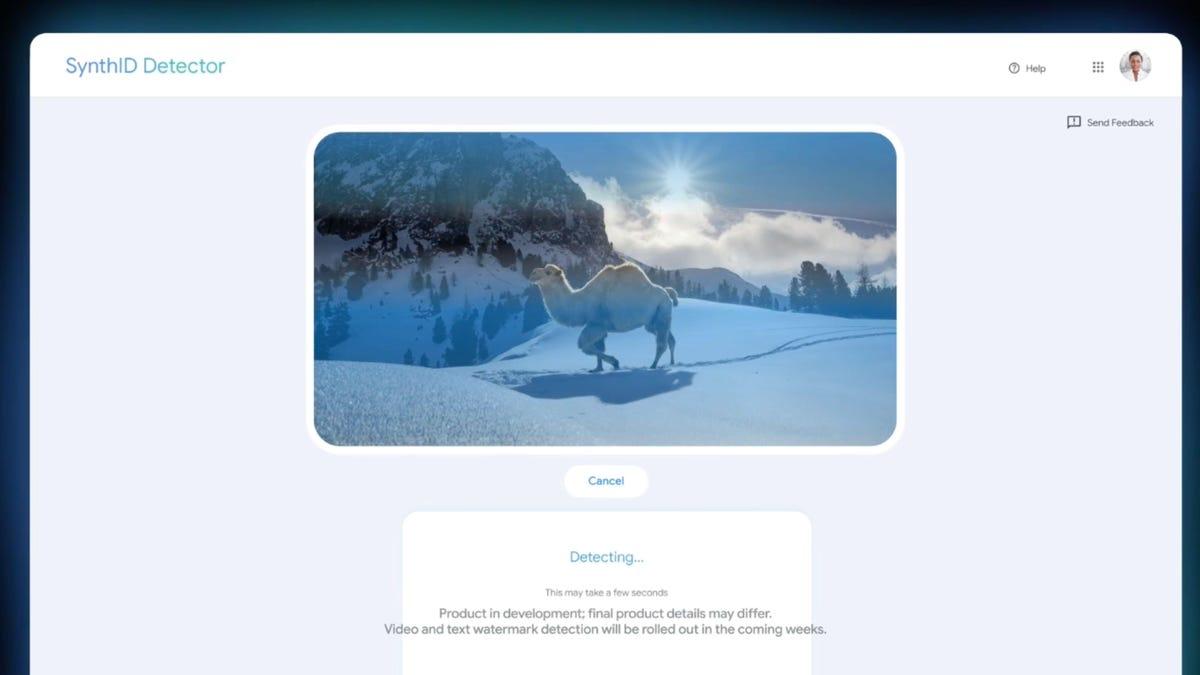

Google Unveils SynthID Text: A Breakthrough in AI-Generated Content Watermarking

24 Oct 2024•Technology

Google Unveils SynthID Detector: A New Tool to Identify AI-Generated Content

21 May 2025•Technology

Recent Highlights

1

OpenAI secures $110 billion funding round from Amazon, Nvidia, and SoftBank at $730B valuation

Business and Economy

2

Anthropic stands firm against Pentagon's demand for unrestricted military AI access

Policy and Regulation

3

Pentagon Clashes With AI Firms Over Autonomous Weapons and Mass Surveillance Red Lines

Policy and Regulation