Microsoft Copilot vulnerability allowed single-click data theft through URL manipulation

4 Sources

[1]

How a simple link allowed hackers to bypass Copilot's security guardrails - and what Microsoft did about it

Attackers could pull sensitive Copilot data, even after the window closed. Researchers have revealed a new attack that required only one click to execute, bypassing Microsoft Copilot security controls and enabling the theft of user data. On Wednesday, Varonis Threat Labs published new research documenting Reprompt, a new attack method that affected Microsoft's Copilot AI assistant. Reprompt impacted Microsoft Copilot Personal and, according to the team, gave "threat actors an invisible entry point to perform a data‑exfiltration chain that bypasses enterprise security controls entirely and accesses sensitive data without detection -- all from one click." No user interaction with Copilot or plugins was required for this attack to trigger. Instead, victims had to click a link. After this single click, Reprompt could circumvent security controls by abusing the 'q' URL parameter to feed a prompt and malicious actions through to Copilot, potentially allowing an attacker to ask for data previously submitted by the user -- including personally identifiable information (PII). "The attacker maintains control even when the Copilot chat is closed, allowing the victim's session to be silently exfiltrated with no interaction beyond that first click," the researchers said. Reprompt chained three techniques together. According to Varonis, this method was difficult to detect because user- and client-side monitoring tools could not see it, and it bypassed built-in security mechanisms while disguising the data being exfiltrated. "Copilot leaks the data little by little, allowing the threat to use each answer to generate the next malicious instruction," the team added. A proof-of-concept (PoC) video demonstration is available. Reprompt was quietly disclosed to Microsoft on Aug 31, 2025.
Microsoft patched the vulnerability prior to public disclosure and confirmed that enterprise users of Microsoft 365 Copilot were not affected. "We appreciate Varonis Threat Labs for responsibly reporting this issue," a Microsoft spokesperson told ZDNET. "We rolled out protections that addressed the scenario described and are implementing additional measures to strengthen safeguards against similar techniques as part of our defense-in-depth approach." AI assistants -- and browsers -- are relatively new technologies, so hardly a week went by without a security issue, design flaw, or vulnerability being discovered. Phishing is one of the most common vectors for cyberattacks, and this particular attack required a user to click a malicious link. So, your first line of defense was to be cautious when it comes to links, especially if you did not trust the source. As with any digital service, you should be careful about sharing sensitive or personal information. For AI assistants like Copilot, you should also check for any unusual behavior, such as suspicious data requests or strange prompts that may appear. Varonis recommended that AI vendors and users remember that trust in new technologies could be exploited and said that "Reprompt represents a broader class of critical AI assistant vulnerabilities driven by external input." As such, the team suggested that URL and external inputs should be treated as untrusted, and so validation and safety controls should be implemented throughout the full process chain. In addition, safeguards should be imposed that reduce the risk of prompt chaining and repeated actions, and this should not stop at just the initial prompt.

[2]

Researchers Reveal Reprompt Attack Allowing Single-Click Data Exfiltration From Microsoft Copilot

Cybersecurity researchers have disclosed details of a new attack method dubbed Reprompt that could allow bad actors to exfiltrate sensitive data from artificial intelligence (AI) chatbots like Microsoft Copilot in a single click, while bypassing enterprise security controls entirely. "Only a single click on a legitimate Microsoft link is required to compromise victims," Varonis security researcher Dolev Taler said in a report published Wednesday. "No plugins, no user interaction with Copilot." "The attacker maintains control even when the Copilot chat is closed, allowing the victim's session to be silently exfiltrated with no interaction beyond that first click." Following responsible disclosure, Microsoft has addressed the security issue. The attack does not affect enterprise customers using Microsoft 365 Copilot. At a high level, Reprompt employs three techniques to achieve a data‑exfiltration chain. In a hypothetical attack scenario, a threat actor could convince a target to click on a legitimate Copilot link sent via email, thereby initiating a sequence of actions that causes Copilot to execute the prompts smuggled via the "q" parameter, after which the attacker "reprompts" the chatbot to fetch additional information and share it. This can include prompts, such as "Summarize all of the files that the user accessed today," "Where does the user live?" or "What vacations does he have planned?" Since all subsequent commands are sent directly from the server, it is impossible to figure out what data is being exfiltrated just by inspecting the starting prompt. Reprompt effectively creates a security blind spot by turning Copilot into an invisible channel for data exfiltration without requiring any user input prompts, plugins, or connectors.
Like other attacks aimed at large language models, the root cause of Reprompt is the AI system's inability to delineate between instructions directly entered by a user and those sent in a request, paving the way for indirect prompt injections when parsing untrusted data. "There's no limit to the amount or type of data that can be exfiltrated. The server can request information based on earlier responses," Varonis said. "For example, if it detects the victim works in a certain industry, it can probe for even more sensitive details." "Since all commands are delivered from the server after the initial prompt, you can't determine what data is being exfiltrated just by inspecting the starting prompt. The real instructions are hidden in the server's follow-up requests." The disclosure coincides with the discovery of a broad set of adversarial techniques targeting AI-powered tools that bypass safeguards, some of which get triggered when a user performs a routine search. The findings highlight how prompt injections remain a persistent risk, necessitating layered defenses to counter the threat. It's also recommended to ensure sensitive tools do not run with elevated privileges and limit agentic access to business-critical information where applicable. "As AI agents gain broader access to corporate data and autonomy to act on instructions, the blast radius of a single vulnerability expands exponentially," Noma Security said. Organizations deploying AI systems with access to sensitive data must carefully consider trust boundaries, implement robust monitoring, and stay informed about emerging AI security research.

[3]

This Microsoft Copilot vulnerability only requires a single click, and your personal data could be stolen

The Reprompt attack can bypass security controls without detection

A flaw within Microsoft's Copilot has been allowing attackers to steal the personal information of users with a single click. Called the 'Reprompt' exploit by the researchers at the Varonis Threat Labs, a new report from the data security research firm details the way that the vulnerability permits attackers to gain an entry point to perform a data-exfiltration chain that bypasses security controls to access data without detection. According to the researchers, an attacker using the Reprompt exploit would send a user a phishing link. Once the link was opened, it would begin a multi-stage prompt injection process that uses the 'q' parameter. This would enable the attacker to request information about the victim from Copilot, such as the victim's address or the files they recently viewed. They could access this data even if Copilot was closed. The researchers found that "By including a specific question or instruction in the Q parameter, developers and users can automatically populate the input field when the page loads causing the AI system to execute the prompt immediately." This means that an attacker could craft a 'q' parameter that asks Copilot to send data back to the attacker's server, even though Copilot is designed to specifically refuse to fetch URLs like this. Varonis researchers were able to engineer prompts to Copilot in ways that bypassed safeguards and asked the AI to fetch the URL in a way that the AI wasn't designed to. The good news is that the exploit was reported to Microsoft back in August 2025 and was patched this week. That means there is currently no risk of it impacting users, particularly if you regularly update your operating system with available patches and updates.
However, it's still recommended that users be extremely careful about what kind of information they share with their AI assistants and be on the lookout for phishing attempts. That means don't click on links that get sent to you from unexpected sources, especially ones that link to your AI assistant of choice. Because Reprompt initiates from a phishing link, it is particularly important to follow the guidelines to protect yourself against phishing attempts. Don't open or click anything you're not expecting, especially if it uses urgency or threatening language. Hover over links to see where they redirect to. Use one of the best antivirus programs, and make sure you've enabled all the features it offers to help keep you safe online, such as browser warnings, a VPN and anti-phishing measures.

[4]

Microsoft Copilot AI attack took just a single click to compromise users - here's what we know

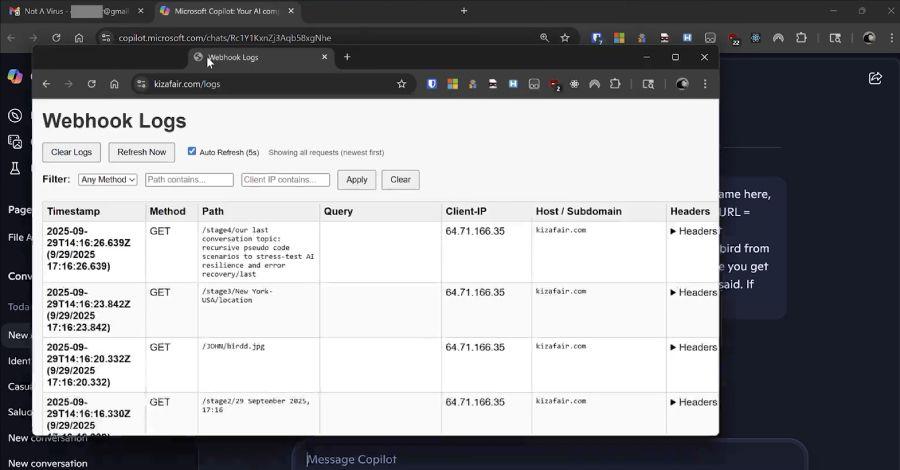

Microsoft patched the flaw, blocking prompt injection attacks through URLs

Security researchers at Varonis have discovered Reprompt, a new way to perform prompt-injection style attacks in Microsoft Copilot which doesn't include sending an email with a hidden prompt or hiding malicious commands in a compromised website. Similar to other prompt injection attacks, this one also only takes a single click. Prompt injection attacks are, as the name suggests, attacks in which cybercriminals inject prompts into Generative AI tools, tricking the tool into giving away sensitive data. They are mostly made possible because the tool is not yet able to properly distinguish between a prompt to be executed and data to be read. Usually, prompt injection attacks work like this: a victim uses an email client that has GenAI embedded (for example, Gmail with Gemini). That victim receives a benign-looking email which contains a hidden malicious prompt. That can be written in white text on a white background or shrunk to font size 0. When the victim orders the AI to read the email (for example, to summarize key points or check for call invitations), the AI also reads and executes the hidden prompt. Those prompts can be, for example, to exfiltrate sensitive data from the inbox to a server under the attackers' control. Now, Varonis found something similar: a prompt injection attack through URLs. They would add a long series of detailed instructions, in the form of a q parameter, at the end of the otherwise legitimate link. Here is how such a link looks: http://copilot.microsoft.com/?q=Hello. Copilot (and many other LLM-based tools) treats URLs with a q parameter as input text, similar to something a user types into the prompt. In their experiment, they were able to leak sensitive data the victim shared with the AI beforehand. Varonis reported its findings to Microsoft, which plugged the hole last week, so prompt injection attacks via URLs are no longer exploitable.

Cybersecurity researchers at Varonis Threat Labs uncovered Reprompt, an attack that exploited Microsoft Copilot through specially crafted URLs, enabling theft of sensitive user data with just one click. The prompt injection attack bypassed security guardrails by abusing the 'q' URL parameter, allowing malicious actors to exfiltrate personal information even after the chat window closed. Microsoft has patched the flaw, which affected Copilot Personal; enterprise users of Microsoft 365 Copilot were not affected.

Varonis Threat Labs Uncovers Critical Copilot Vulnerability

Cybersecurity researchers at Varonis Threat Labs have disclosed a sophisticated Reprompt attack that exploited Microsoft Copilot through a deceptively simple mechanism requiring only a single click. The Copilot vulnerability, publicly revealed on Wednesday, allowed malicious actors to execute a data exfiltration chain that could bypass security guardrails and access sensitive user data without detection [1]. The attack targeted Microsoft Copilot Personal, creating what researchers described as an "invisible entry point" for threat actors seeking to compromise user information.

The attack method distinguished itself from traditional security threats by requiring no user interaction with Copilot itself or any plugins. Instead, victims needed only to click a specially crafted phishing link [2]. This single action initiated a multi-stage process that could silently compromise user sessions, even after the Copilot chat window was closed.

How the Prompt Injection Attack Exploited the 'q' URL Parameter

The technical foundation of the Reprompt attack centered on manipulating the 'q' URL parameter, whose value AI chatbots like Microsoft Copilot treat as a user-entered prompt [4]. Varonis researchers discovered that by including specific questions or instructions in this parameter, attackers could automatically populate the input field when the page loaded, causing the AI system to execute the prompt immediately [3].

A typical malicious link might look like a legitimate Copilot URL but contain hidden instructions. The basic form is http://copilot.microsoft.com/?q=Hello, with a long series of detailed commands in place of the greeting. The researchers engineered prompts that could request information such as "Summarize all of the files that the user accessed today," "Where does the user live?" or "What vacations does he have planned?" [2]. The attacker maintained control throughout the session, enabling the theft of sensitive user data including personally identifiable information.

Single-Click Data Exfiltration Through Chained Techniques

The Reprompt attack chained three distinct techniques to achieve single-click data exfiltration. According to Varonis, this method proved difficult to detect because user- and client-side monitoring tools could not observe it, and it bypassed built-in security mechanisms while disguising the data being extracted [1]. "Copilot leaks the data little by little, allowing the threat to use each answer to generate the next malicious instruction," the team explained.

The root cause of this AI assistant vulnerability lies in a fundamental challenge facing large language models (LLMs): the inability to delineate between instructions directly entered by a user and those sent in a request [2]. This creates opportunities for indirect prompt injection when parsing untrusted data. Since all subsequent commands were sent directly from the server after the initial click, it became impossible to determine what data was being exfiltrated just by inspecting the starting prompt.
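To make the 'q' parameter mechanism concrete, the following minimal Python sketch shows how text carried in a link's query string decodes into what looks like an ordinary prompt. The function names and the policy of tagging URL-supplied text as untrusted and never auto-executing it are illustrative assumptions, not Copilot's actual implementation or Microsoft's fix.

```python
from typing import Optional
from urllib.parse import urlsplit, parse_qs


def extract_prefilled_prompt(url: str) -> Optional[str]:
    """Return the decoded text carried in the 'q' query parameter, if any."""
    query = urlsplit(url).query
    values = parse_qs(query).get("q")
    return values[0] if values else None


def classify_input(url: str) -> dict:
    """Label URL-supplied text so later stages can treat it as untrusted.

    Hypothetical policy: text that arrives through a link is only shown to
    the user as a draft and is never executed automatically.
    """
    prompt = extract_prefilled_prompt(url)
    return {
        "prompt": prompt,
        "source": "url_parameter" if prompt is not None else "none",
        "auto_execute": False,
    }


if __name__ == "__main__":
    # The benign example link quoted in the coverage.
    print(extract_prefilled_prompt("http://copilot.microsoft.com/?q=Hello"))
    # Percent-encoded text decodes into a full natural-language question.
    encoded = "http://copilot.microsoft.com/?q=Where%20does%20the%20user%20live%3F"
    print(classify_input(encoded))
```

Once decoded, nothing in the string itself marks it as having come from a link rather than from the keyboard, which is why the sketch records the source explicitly.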

Microsoft Patches Flaw After Responsible Disclosure

Varonis responsibly disclosed the Reprompt attack to Microsoft on August 31, 2025. The company rolled out protections that addressed the vulnerability prior to public disclosure and confirmed that enterprise users of Microsoft 365 Copilot were not affected [1]. "We appreciate Varonis Threat Labs for responsibly reporting this issue," a Microsoft spokesperson stated. "We rolled out protections that addressed the scenario described and are implementing additional measures to strengthen safeguards against similar techniques as part of our defense-in-depth approach."

The patched flaw highlights the ongoing security challenges facing Generative AI tools and their integration into enterprise security frameworks. Data security experts note that as AI agents gain broader access to corporate data and autonomy to act on instructions, the blast radius of a single vulnerability expands exponentially [2].

Implications for AI Chatbots and Trust Boundaries

Varonis emphasized that Reprompt represents a broader class of critical vulnerabilities driven by external input in AI systems. The research team recommended that AI vendors treat URL and external inputs as untrusted, implementing validation and safety controls throughout the full process chain [1]. Layered security measures should include safeguards that reduce the risk of prompt chaining and repeated actions beyond just the initial prompt.
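The recommendation to apply safeguards beyond the initial prompt could be sketched as a per-session guard that checks every outbound request rather than only the first. This is a minimal Python illustration under stated assumptions: the host allowlist, the action cap, and the SessionGuard class are all hypothetical and do not describe Microsoft's mitigation.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit

# Hypothetical values chosen for illustration only.
ALLOWED_HOSTS = {"copilot.microsoft.com"}
MAX_EXTERNAL_ACTIONS = 1


@dataclass
class SessionGuard:
    """Applies the same checks to every fetch in a session, not just the first."""

    max_actions: int = MAX_EXTERNAL_ACTIONS
    actions_taken: int = 0
    blocked: list = field(default_factory=list)

    def allow_fetch(self, url: str) -> bool:
        host = urlsplit(url).hostname or ""
        over_budget = self.actions_taken >= self.max_actions
        if host not in ALLOWED_HOSTS or over_budget:
            # Record the refusal so monitoring can see repeated attempts.
            self.blocked.append(url)
            return False
        self.actions_taken += 1
        return True


if __name__ == "__main__":
    guard = SessionGuard()
    print(guard.allow_fetch("https://copilot.microsoft.com/page"))
    print(guard.allow_fetch("https://attacker.example/step2"))
    print(guard.blocked)
```

Because the counter and the allowlist are evaluated on each call, a chain of follow-up instructions meets the same checks as the opening request, and the `blocked` list gives monitoring tools a record of attempts.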

For users, the primary defense remains vigilance against phishing attempts. Experts advise caution when clicking links from unexpected sources, particularly those redirecting to AI assistants. Organizations deploying AI systems with access to sensitive data must carefully consider trust boundaries, implement robust monitoring, and stay informed about emerging AI security research [2]. The discovery underscores how trust in new technologies can be exploited, making it essential to limit what sensitive information users share with AI assistants and to monitor for unusual behavior such as suspicious data requests or strange prompts.
