Microsoft Revamps Controversial AI-Powered Recall Feature with Enhanced Security Measures

15 Sources


[1]

Microsoft's beefed up Recall security model includes a blessed uninstall option

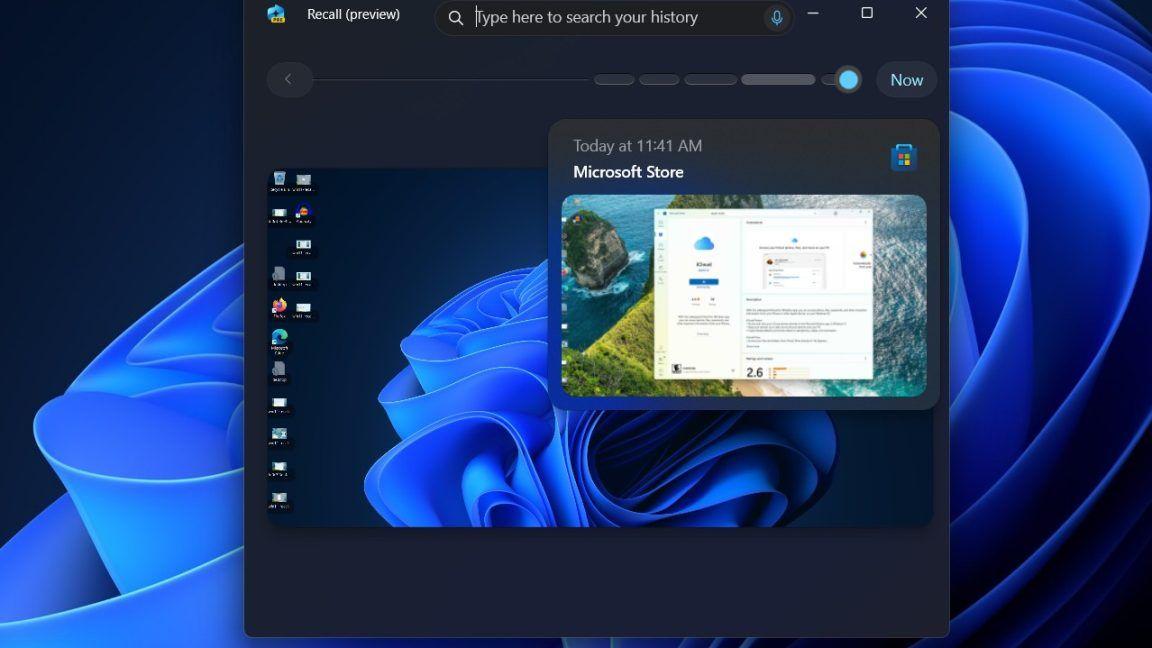

Recall will finally be available for testing on Copilot Plus PCs in October. Big companies don't need to listen to the little guy, right? Progress is coming for us all, whether we like it or not? One of the many AI-adjacent controversies to grab headlines this year has been Microsoft's announcement of Recall, a feature for Copilot Plus PCs that uses AI to process automatically captured screenshots and build a record of your past activity. After delays and rumors of an uninstall option, Microsoft is now explaining that it has heard the criticism, that it has made changes to Recall in the interest of security, and that you really can uninstall it.

[2]

Microsoft will let Windows Copilot+ PC users completely uninstall new Recall AI feature

In its latest attempt to address AI security and privacy concerns, Microsoft provided new details about the underlying architecture for its upcoming Recall feature, which promises to let Windows users quickly locate information they've previously viewed on their PCs with help from artificial intelligence. Snapshots taken in Recall will be encrypted and accessible only if the user has been authenticated using the Windows Hello biometric sign-in feature, the company said. In addition to making Recall opt-in, the company now says it will give users the ability to completely remove Recall from their machines if they choose. Those were among a series of details in a Microsoft post Friday morning. Recall, which takes advantage of advanced Neural Processing Units (NPUs) in new Windows PCs, was announced in May as the flagship feature in Copilot+ PCs from Microsoft and other computer makers. The first Copilot+ PCs were released in June without Recall, pending future software updates. Recall has drawn close scrutiny from security researchers and legislators, especially in light of Microsoft's pledge to prioritize security over new features following a series of high-profile breaches exploiting flaws in its software and cloud services. Microsoft gave a broader update about its security initiatives earlier this week. "Recall's secure design and implementation provides a robust set of controls against known threats," wrote David Weston, Microsoft's VP of Enterprise and OS Security, on Friday. "Microsoft is committed to making the power of AI available to everyone while retaining security and privacy against even the most sophisticated attacks." Microsoft said previously that it plans to release the Recall feature initially through its Windows Insider program in October.

[3]

Microsoft's Maligned AI-Enabled 'Recall' Gets a Security Reboot

Microsoft Corp. has announced upgrades for Recall, an artificial intelligence feature that creates a record of everything users do on their PCs, following criticism that the tool created an attractive target for hackers. In an interview Thursday, David Weston, a vice president for enterprise and operating system security, said the company heard the critiques "loud and clear" and set about devising layers of security safeguards for Recall designed to thwart even the world's most sophisticated hackers.

[4]

Microsoft Gives Controversial Recall AI Feature Some Extra Security

After security experts raised concerns about Microsoft's upcoming Recall AI feature, the tech giant has added a few more security features to help Windows users protect their data and browsing history from attackers or snoops. Microsoft will now require Recall users to biometrically authenticate their identities via Windows Hello whenever they try to access the feature. Personally identifiable information -- like your name, address, Social Security number, or credit card info -- will no longer be screenshotted by the AI, says Microsoft EVP and Consumer CMO Yusuf Mehdi. Health or financial websites will also not be captured. Screenshots will be stored on the device itself, so they won't be sent to any Microsoft servers or data centers. Sensitive information is filtered out by default, though you can turn off the "filter sensitive info" toggle. Microsoft has also added more Recall settings so users can purge all of their snapshots in one click. They can also choose to delete screenshots from the past hour or a longer period with the click of a button. Microsoft previously announced that it's also possible to filter out specific apps or websites at the user's discretion. Recall is an upcoming, opt-in AI feature for compatible Windows 11 PCs, including Copilot+ PCs, that will let you search your device to pull up any moment in your PC's history. For Recall to work, your device will need a Windows on ARM CPU as well as a minimum amount of RAM and disk storage. Recall will work with Microsoft Edge, Google Chrome, Firefox, Opera, and other Chromium-based web browsers and will not track or screenshot any "private browsing" activity. Recall uses AI to search for things that might match a search term. For example, if you type "red dress" into Recall, the AI will search your computer for any image of a red dress even if "red dress" isn't actually written on that page. 
In other words, the AI attempts to match words to images and videos to help you "recall" a previous moment in time. The AI is able to do this by taking constant screenshots of your computer and using them to take you back and re-open those apps or web pages. Though it's opt-in, it doesn't appear you can remove Recall entirely from your PC. A bug in an early-access version of Windows' anticipated 24H2 update previously showed Recall as something that could be uninstalled via the Windows Control Panel. But a Microsoft product manager said that was an error and the option to remove it would not be in the official release.
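The "red dress" example describes semantic search: matching by meaning rather than exact words. Other reports describe Recall's searchable data as living in a vector database. As a rough illustration only, the sketch below ranks stored items by cosine similarity between embedding vectors. The three-dimensional vectors and the `QUERY_VECTORS` lookup are invented stand-ins for a real AI model, and nothing here reflects how Microsoft actually implements Recall.

```python
import math

# Invented 3-d "embeddings". A real system would obtain much larger vectors
# from an AI model; here the axes loosely mean (clothing, red, finance).
SNAPSHOTS = {
    "shop page: crimson evening gown": [0.9, 0.8, 0.0],
    "bank statement PDF": [0.0, 0.1, 0.9],
    "blog post: blue denim jacket": [0.8, 0.0, 0.1],
}

# Hypothetical stand-in for embedding the user's query text.
QUERY_VECTORS = {"red dress": [0.85, 0.9, 0.0]}


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query, k=2):
    """Return the labels of the k snapshots whose vectors best match the query."""
    q = QUERY_VECTORS[query]
    ranked = sorted(SNAPSHOTS, key=lambda label: cosine(q, SNAPSHOTS[label]),
                    reverse=True)
    return ranked[:k]


print(search("red dress"))
```

The top hit is the "crimson evening gown" page even though the words "red dress" never appear in its label, which is the behavior the article describes. A production vector database would typically use an approximate nearest-neighbor index instead of scoring every entry.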

[5]

Microsoft Makes Security Case for Much-Maligned Copilot Plus Recall Feature

Microsoft says the controversial Recall search feature will only be on Copilot Plus PCs that meet its security standards. In a detailed blog post on Friday, Microsoft vice president for enterprise and OS security David Weston gave an update on how security will work on the company's controversial Recall search feature, to be available on new Copilot Plus PCs. The feature, which uses AI to help users visually search through snapshots of their past PC activity, was met with a significant backlash after it was announced in May. In the blog post, Weston details some of the security features that Recall will have when it begins rolling out, apparently in an attempt to make the case that the concerns about its underlying security and privacy controls have been overblown. Weston stresses early in the post that Recall is opt-in and that snapshots are not taken or stored unless a user enables Recall. "You are always in control, and you can delete snapshots, pause or turn them off at any time," Weston writes. "Any future options for the user to share data will require fully informed explicit action by the user." He also writes that snapshots are not shared with Microsoft, third parties or even other users on the same PC. The post does not, however, mention any option to uninstall the feature completely from a Copilot Plus PC. In an interview with The Verge, Weston confirmed that this option will be available. "If you choose to uninstall this, we remove the bits from your machine," Weston said. The uninstall would include the AI models that power Recall. Weston also says that sensitive data is always encrypted in Recall and that screenshots and associated data are isolated and local and are only accessible through a Windows Hello Enhanced Sign-in Security login.
It also only runs on Copilot Plus PCs that meet Microsoft's "Secured-core standard." The post contains illustrations of Recall's security architecture. According to the post, an internal team has conducted design reviews and penetration testing, a third-party vendor has done the same, and the company has completed a Responsible AI Impact Assessment.

[6]

Microsoft: Windows Recall now can be removed, is more secure

Microsoft has announced security and privacy upgrades to its AI-powered Windows Recall feature, which can now be removed and has stronger default protection for user data and tighter access controls. Today's announcement comes in response to customer pushback requesting stronger default data privacy and security protections, which prompted the company to delay its public release by making it first available for preview with Windows Insiders. Redmond also previously revealed that customers would have to opt in to enable Recall on their computers and that authentication via Windows Hello would be required to confirm the user's presence in front of the PC. Recall takes screenshots of active windows on your PC every few seconds, analyzes them on-device using a Neural Processing Unit (NPU) and an AI model, and adds the information to an SQLite database. You can later search this data using natural language, prompting Windows Recall to retrieve relevant screenshots. Since Microsoft announced this feature in May, cybersecurity experts and privacy advocates have warned that Windows Recall is a privacy nightmare and would likely be abused by malware and threat actors to steal users' data. In response to negative feedback from customers and privacy and security experts, David Weston, Microsoft's vice president for Enterprise and OS Security, revealed today that Recall is always opt-in, automatically filters sensitive content, allows users to exclude specific apps, websites, or in-private browsing sessions, and can be removed if needed. "If a user doesn't proactively choose to turn it on, it will be off, and snapshots will not be taken or saved. Users can also remove Recall entirely by using the optional features settings in Windows," Weston said. Recall now also comes with a sensitive information filter designed to protect confidential data, such as passwords, credit card numbers, and personal identification details, by automatically applying filters over this content. 
Weston assured users that they retain complete control over their data, as Recall will allow them to delete snapshots, pause them, or turn them off at any time. "Any future option to share data will require fully informed, explicit action by the user," he added. Recall has also been redesigned to operate on four core principles: user control, encryption of sensitive data, isolation of services, and intentional use. Weston says snapshots and associated data are encrypted, with the encryption keys protected by the device's Trusted Platform Module (TPM). The keys are tied to the user's Windows Hello credentials and biometric identity, and no data leaves the system without the user's explicit request. "Recall snapshots are only available after users authenticate using Windows Hello credentials. Windows Hello's Enhanced Sign-In Security ensures privacy and actively authenticates users before allowing access to their data," he said. "Using VBS Enclaves with Windows Hello Enhanced Sign-in Security allows data to be briefly decrypted while you use the Recall feature to search. Authorization will time out and require the user to authorize access for future sessions. This restricts attempts by latent malware trying to 'ride along' with a user authentication to steal data." Recall also includes malware protection features such as rate-limiting and anti-hammering measures. "Recall is always opt-in. Snapshots are not saved unless you choose to use Recall, and everything is stored locally," Weston concluded. "Recall does not share snapshots or data with Microsoft or third parties, nor between different Windows users on the same device. Windows will ask for permission before saving any snapshots."
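Weston's description combines two ideas: an authorization that expires and forces re-authentication, and rate limiting plus anti-hammering to blunt repeated guessing. The sketch below models both in a hypothetical `RecallGate` class. The 5-minute timeout, the 3-failure threshold, and the doubling lockout are invented parameters, and in Microsoft's described design these checks are enforced inside a VBS Enclave, not in application code like this.

```python
import time


class RecallGate:
    """Toy model of a time-limited authorization with anti-hammering.

    Every parameter here is hypothetical, not a value Microsoft has published.
    """

    def __init__(self, session_ttl=300.0, max_failures=3, base_lockout=30.0,
                 clock=time.monotonic):
        self.session_ttl = session_ttl    # seconds an authorization stays valid
        self.max_failures = max_failures  # failures allowed before a lockout
        self.base_lockout = base_lockout  # length of the first lockout, seconds
        self.clock = clock                # injectable clock, handy for testing
        self.authorized_at = None
        self.failures = 0
        self.locked_until = 0.0

    def authenticate(self, verified):
        """Record one sign-in attempt; `verified` stands in for a Windows Hello result."""
        now = self.clock()
        if now < self.locked_until:
            return False                  # still locked out: refuse outright
        if verified:
            self.failures = 0
            self.authorized_at = now
            return True
        self.failures += 1
        if self.failures >= self.max_failures:
            # Each further failure doubles the lockout (anti-hammering back-off).
            extra = self.failures - self.max_failures
            self.locked_until = now + self.base_lockout * (2 ** extra)
        return False

    def can_decrypt(self):
        """True only while the most recent authorization has not timed out."""
        if self.authorized_at is None:
            return False
        return self.clock() - self.authorized_at < self.session_ttl


fake_now = [0.0]
gate = RecallGate(clock=lambda: fake_now[0])
gate.authenticate(True)
print(gate.can_decrypt())   # True
fake_now[0] = 301.0
print(gate.can_decrypt())   # False: the session expired, so re-authenticate
```

The expiring session is what limits a "ride along": malware that waits for the user to sign in only gets a short window before a fresh biometric check is needed, while the lockout makes rapid repeated attempts progressively slower.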

[7]

Microsoft describes Recall's new security features, says the feature is opt-in

After a major PR blunder, Microsoft is detailing Recall's new security model. Microsoft is set to bring back Recall, the Copilot+ feature it, well, recalled back in June just before its release due to security concerns and negative feedback. Today, the company released a detailed blog post authored by David Weston, Microsoft's vice president of enterprise and OS security, explaining the security changes it made to Recall. The blog post doesn't list a specific release date for the feature, but Microsoft previously said Insiders would see Recall come back in October. Weston reiterated that Recall "is an opt-in experience" that you decide on when first setting up a Copilot+ PC. "If a user doesn't proactively choose to turn it on, it will be off, and snapshots will not be taken or saved," he wrote. "Users can also remove Recall entirely by using the optional features settings in Windows." (This is seemingly a reversal of what Microsoft said earlier this month when Recall was found in a list of features you could disable.) The snapshots that Recall takes will be encrypted, with keys protected by the Trusted Platform Module, and tied to your account through Windows Hello. Weston states that the snapshots "can only be used by operations within a secure environment called a Virtualization-based security Enclave (VBS Enclave)," which prevents other users on your PC from decrypting and seeing your information. The only data that ever leaves the enclave is what you specifically request while using Recall. Recall also uses Windows Hello as authorization to change settings, with your Windows PIN as a fallback in case your camera or fingerprint reader is damaged. Microsoft says Recall will guard against malware attacks with "rate-limiting and anti-hammering measures." Not all of this is brand new, however. Some of it was detailed in earlier blog posts. 
The VBS Enclave, which Weston describes as a "locked box" that uses Windows Hello authorization as the key, is central to Microsoft's security approach for Recall and serves as an "isolation boundary" from both users with administrative privileges and the Windows kernel. This means that you need biometrics enabled to use Recall, and you'll need to reauthenticate periodically because the authorization expires. Weston reiterated that Recall only takes snapshots when you have turned the feature on, and that the data isn't shared with Microsoft or third-party companies. "You are always in control, and you can delete snapshots, pause, or turn them off at any time," Weston wrote. "Any future options for the user to share data will require fully informed explicit action by the user." Weston also shared a list of customization tools that you can use to adjust what Recall saves. He also notes, however, that some diagnostic data may end up going back to Microsoft based on settings, "like any Windows feature." Microsoft detailed three sets of tests and assessments, some of which sound like they will be ongoing, for Recall's security. They include "months" of penetration testing and reviews by Microsoft's Offensive Research and Security Engineering team, work with an unnamed third-party security company, and a "Responsible AI Impact Assessment." It isn't yet clear when Recall will get a wide rollout, but considering the strong messaging from Microsoft and Weston, I do wonder if it will be soon. The initial Recall announcement was met with surprise, especially among the security community. It may not be until the new Recall is inspected by the same people who met it with such shock that people using Windows start to trust it -- or they try it and find out whether or not they think it's useful. 
But for now, it's good to see Microsoft being transparent about changes -- and that it's making Recall optional for those who are still wary about the AI feature.

[8]

Microsoft has some thoughts about Windows Recall security

AI screengrab service to be opt-in, features encryption, biometrics, enclaves, more

Microsoft has revised the Recall feature for its Copilot+ PCs and insists that the self-surveillance system is secure. "Recall," as Microsoft describes it, "is designed to help you instantly and securely find what you've seen on your PC." You may not recall what you were doing on your PC, but rest assured that Microsoft's Copilot AI can remember it for you wholesale, to borrow the title of the Philip K. Dick story that inspired the film Total Recall. Microsoft Recall works by capturing snapshots of your Windows desktop every few seconds, recording what you're doing in applications, and storing the results so they can be, well, recalled with text searches or by visually sliding back through the timeline. It's basically a visual activity log with associated data that can be queried using an AI model. When Recall was announced in May at Microsoft Build 2024, it was pilloried as a privacy and security horror show. Security researcher and pundit Kevin Beaumont described it as a keylogger for Windows. And author Charlie Stross flagged the tool as a magnet for legal discovery demands. Users were warned that Recall could record sensitive info, such as banking details, as well as communications, app usage, and file updates, all while they used their PCs. So in June, after Microsoft Research's chief scientist brushed off questions at an AI conference about the Recall backlash, Microsoft delayed its Recall rollout to rethink things. By August, Microsoft determined that Recall had been sufficiently rethought and declared that the system monitoring software would be released this October to Windows Insiders. 
Laying the groundwork for that happy occasion, David Weston, VP of enterprise and OS security at Microsoft, took a moment on Friday to explain in a blog post that Windows users have nothing to fear from the "unique security challenges" that Microsoft created with Recall and had to solve. First, there's the fact that "Recall is designed with security and privacy in mind," which presumably makes it no different from any other Microsoft software. It's not as if the IT giant openly markets a separate line of vulnerable, data-broadcasting apps. OK, let's not go there. Next, you don't even have to use Recall, assuming you have some say in such matters. Recall is opt-in. And Recall can be removed entirely via optional features settings in Windows. But why would you want to exorcise Recall when it encrypts its snapshots in a vector database and locks the encryption keys away, under the protection of the associated PC's Trusted Platform Module? Access requires the user's Windows Hello Enhanced Sign-in Security identity (tied to fingerprint or face biometrics) and is limited to operations executed within a Virtualization-based Security Enclave (VBS Enclave). Beyond that, authorization to Recall data is set to time out so re-authentication is required for future sessions, a safeguard designed to prevent malware from leveraging user authentication to steal data. Enclaves also have rate limiting and anti-hammering protections to mitigate the risk of brute-force attacks. "Recall is always opt-in," says Weston. "Snapshots are not taken or saved unless you choose to use Recall. Snapshots and associated data are stored locally on the device. Recall does not share snapshots or associated data with Microsoft or third parties, nor is it shared between different Windows users on the same device. Windows will ask for your permission before saving snapshots. You are always in control, and you can delete snapshots, pause or turn them off at any time. 
Any future options for the user to share data will require fully informed explicit action by the user." In defiance of its name, Recall won't recall certain things. Private browsing in supported browsers (Edge, Chrome and Chromium, Firefox, Opera) isn't saved. Nor are activities within user-designated apps and websites (blocking websites is available for Edge, Chrome, Firefox, and Opera, but not for all Chromium-based browsers). Sensitive content filtering, active by default, tries to prevent passwords, national ID numbers, and credit card numbers from being recorded. And the user has controls for Recall content retention time, disk space allocation for snapshot storage, and record deletion by time, app, website, or the entirety of what Recall can search. And what is saved will be accessible via an AI agent. "Recall's secure design and implementation provides a robust set of controls against known threats," says Weston. "Microsoft is committed to making the power of AI available to everyone while retaining security and privacy against even the most sophisticated attacks."
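The retention and deletion controls listed above map naturally onto a local database. BleepingComputer reports that Recall stores its data in SQLite, so this sketch uses Python's built-in `sqlite3` module to show deletion by time range, app, or website, plus a retention period and a disk budget. The `snapshots` table, its columns, and every function name are hypothetical; the sources do not describe Recall's real schema.

```python
import sqlite3

# Hypothetical schema; Recall's real database layout is not described in the sources.
SCHEMA = """
CREATE TABLE snapshots (
    id    INTEGER PRIMARY KEY,
    taken REAL NOT NULL,     -- capture time, in seconds
    app   TEXT NOT NULL,     -- foreground application
    site  TEXT,              -- website, if a browser was in front
    size  INTEGER NOT NULL   -- bytes used on disk
)
"""


def delete_range(db, start, end):
    """Delete snapshots captured in [start, end); return how many were removed."""
    cur = db.execute("DELETE FROM snapshots WHERE taken >= ? AND taken < ?",
                     (start, end))
    return cur.rowcount


def delete_app(db, app):
    """Delete every snapshot of one application."""
    return db.execute("DELETE FROM snapshots WHERE app = ?", (app,)).rowcount


def delete_site(db, site):
    """Delete every snapshot of one website."""
    return db.execute("DELETE FROM snapshots WHERE site = ?", (site,)).rowcount


def enforce_limits(db, now, max_age, max_bytes):
    """Apply a retention period, then evict oldest-first to fit a disk budget.

    Returns the number of bytes still in use.
    """
    db.execute("DELETE FROM snapshots WHERE taken < ?", (now - max_age,))
    total = db.execute("SELECT COALESCE(SUM(size), 0) FROM snapshots").fetchone()[0]
    oldest_first = db.execute(
        "SELECT id, size FROM snapshots ORDER BY taken").fetchall()
    for snap_id, size in oldest_first:
        if total <= max_bytes:
            break
        db.execute("DELETE FROM snapshots WHERE id = ?", (snap_id,))
        total -= size
    return total


db = sqlite3.connect(":memory:")
db.execute(SCHEMA)
db.executemany(
    "INSERT INTO snapshots (taken, app, site, size) VALUES (?, ?, ?, ?)",
    [(100, "msedge.exe", "example.com", 40), (200, "notepad.exe", None, 30),
     (300, "msedge.exe", "bank.example", 50), (400, "notepad.exe", None, 20)],
)
print(delete_site(db, "bank.example"))                          # 1
print(enforce_limits(db, now=450, max_age=300, max_bytes=40))   # 20
```

Applying the age limit before the size limit means the disk budget only ever evicts data the user chose to keep, oldest first.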

[9]

Microsoft AI PC Recall Feature Gets Security Update

'We've taken some important steps to secure your snapshots and then put you in complete control,' Microsoft Consumer CMO Yusuf Mehdi says.

Microsoft has a new security architecture for its controversial Recall feature powering improved search on artificial intelligence PCs, set to become available for Windows Insider Program members in October. In a video announcing the changes to Recall, Yusuf Mehdi, Microsoft's executive vice president and consumer chief marketing officer, said that the changes include requiring users to opt in to turn on and use Recall. If users don't proactively turn Recall on, no snapshots are taken or saved. Windows settings will even have an option for removing Recall entirely. "We've taken some important steps to secure your snapshots and then put you in complete control," Mehdi said. John Snyder, CEO of Durham, N.C.-based solution provider Net Friends, told CRN in a recent interview that he looks forward to Microsoft adding more AI-powered security to its Defender for Endpoint and the extended detection and response (XDR) space, a space where Microsoft partners including Snyder's business participate. "There's so much potential to aggregate all the security alerts and actionable tasks" within Defender, Snyder said. Recall will only work on Copilot+ PCs that Windows verifies have BitLocker (Windows 11 Pro) or Device Encryption (Windows 11 Home) enabled, along with a Trusted Platform Module (TPM), according to Microsoft. The vendor has previously said that hardware for its latest Windows 11 operating system (OS) needs TPM 2.0 security chips. The devices also need virtualization-based security (VBS), Hypervisor-Protected Code Integrity (HVCI), Measured Boot, System Guard Secure Launch, and Kernel Direct Memory Access (DMA) Protection, according to the vendor. 
Microsoft said that it has conducted security assessments of Recall before its launch, including months of design reviews and penetration testing by the Microsoft Offensive Research and Security Engineering team (MORSE), an independent security design review and pen test by an unnamed third-party security vendor, and a Responsible AI Impact (RAI) Assessment, which measured fairness, reliability, security, inclusion, accountability and other factors. Recall users will also need to biometrically authenticate themselves with Windows Hello every time they use Recall to increase security, according to Microsoft. Recall will detect sensitive data and personal information, including passwords and credit card numbers, to avoid capturing the information. The feature leverages the same library that powers Microsoft Purview information protection. Recall users will have privacy and security settings for opting in to saving snapshots and for deleting them. Users can delete individual snapshots, delete in bulk, or delete snapshots from specific periods of time or involving specific applications or websites, even after the snapshots are taken. Microsoft will use an icon in the system tray to notify users when snapshots are being saved and to let users pause snapshots. Recall doesn't save snapshots when users leverage private browsing in supported browsers. Users can set how long Recall retains content and the amount of disk space allotted for snapshots, according to Microsoft. Microsoft reiterated that Recall snapshots are stored locally on devices without access by Microsoft, third parties or different Windows users on the same device. In Recall, users can use the timeline or search box to find a previously viewed item with a keyword that is even partially related to it, and Recall will return related results for that keyword. Recall snapshots and associated information in the vector database are encrypted with keys protected by the TPM. 
Encryption keys are tied to users' Windows Hello Enhanced Sign-In Security identity and used only by operations in a secure environment. That secure environment is the VBS Enclave. Enclaves use the same hypervisor as Azure to segment computer memory into special protected areas where information is processed. No other user can access the keys. Enclaves use zero-trust principles and cryptographic attestation protocols and are accessed through Windows Hello permission. They are an isolation boundary from the kernel and administrative users, and authorization times out and must be granted again for future sessions to prevent latent malware ride-alongs. Recall services that operate on screenshots and associated data and perform decryption run within a secure VBS Enclave. Information leaves the enclave only when users request it while actively using Recall, according to Microsoft. Enclaves also use concurrency protection and monotonic counters to prevent system overload from too many requests. The Recall feature leverages rate-limiting and anti-hammering to protect against malware. It supports users' personal identification numbers (PINs) as a fallback after Recall is configured to avoid data loss if a secure sensor is damaged, according to the vendor. Recall was first introduced in May during an unveiling of the Redmond, Wash.-based vendor's Copilot+ PC line of AI devices. Backlash from cybersecurity professionals over the devices constantly taking and storing screenshots of user activity resulted in Microsoft pushing Recall back from a preview experience for Copilot+ PCs in June to a Windows Insider Program (WIP) preview in October. Originally, Microsoft said Recall wouldn't hide any sensitive or confidential information captured in the screenshots unless a user filtered out specific applications or websites or browsed privately on supported browsers such as Microsoft Edge, Firefox, Opera and Google Chrome. 
Microsoft had also confirmed at the time that Recall would be on by default for Copilot+ PCs. The Recall updates come days after Microsoft introduced a series of new product capabilities aimed at making AI systems more secure, including a correction capability in Azure AI Content Safety for fixing hallucination issues in real time and a preview of confidential inferencing for the Whisper model in Azure OpenAI Service.
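The CRN report describes Recall as all-or-nothing: it runs only if every platform protection is present. The sketch below expresses that gate as a simple check over a hypothetical `DeviceSecurity` record. The field names are invented and this is not how Windows actually queries device state; it only makes explicit that any single missing prerequisite (drive encryption, TPM 2.0, VBS, HVCI, Measured Boot, System Guard Secure Launch, Kernel DMA Protection) blocks the feature.

```python
from dataclasses import dataclass


@dataclass
class DeviceSecurity:
    """Hypothetical summary of a PC's security state; field names are invented."""
    copilot_plus_pc: bool
    drive_encrypted: bool         # BitLocker (Pro) or Device Encryption (Home)
    tpm_version: float
    vbs: bool                     # virtualization-based security
    hvci: bool                    # Hypervisor-Protected Code Integrity
    measured_boot: bool
    secure_launch: bool           # System Guard Secure Launch
    kernel_dma_protection: bool


# Boolean prerequisites as listed in the reporting.
REQUIRED_FLAGS = ("copilot_plus_pc", "drive_encrypted", "vbs", "hvci",
                  "measured_boot", "secure_launch", "kernel_dma_protection")


def missing_requirements(dev):
    """Return the names of prerequisites this device does not meet."""
    missing = [name for name in REQUIRED_FLAGS if not getattr(dev, name)]
    if dev.tpm_version < 2.0:
        missing.append("tpm_2_0")
    return missing


def recall_allowed(dev):
    """All-or-nothing gate: any single missing prerequisite blocks Recall."""
    return not missing_requirements(dev)


laptop = DeviceSecurity(True, True, 2.0, True, False, True, True, True)
print(missing_requirements(laptop))   # ['hvci']
```

Returning the list of failures, rather than a bare yes/no, mirrors how an administrator would want to see why a machine is ineligible.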

[10]

Microsoft explains how it's tackling security and privacy for Recall

The company is being more careful after Recall's botched launch alongside Copilot+ AI PCs.

The condemnation of Microsoft's Recall feature for Copilot+ AI PCs was swift and damning. While it's meant to let you find anything you've ever done on your PC, it also involves taking constant screenshots of your PC, and critics noticed that information wasn't being stored securely. Microsoft ended up delaying its rollout for Windows Insider beta testers, and in June it announced more stringent security measures: it's making Recall opt-in, it will require Windows Hello biometric authentication, and it will encrypt the screenshot database. Today, ahead of the next major Windows 11 release in November, Microsoft offered up more details about Recall's security and privacy measures. The company says Recall's snapshots and related data will be protected by VBS Enclaves, which it describes as a "software-based trusted execution environment (TEE) inside a host application." Users will have to actively turn Recall on during Windows setup, and they can also remove the feature entirely. Microsoft also reiterated that encryption will be a major part of the entire Recall experience, and that it will require Windows Hello authentication for every aspect of the feature, including changing settings. "Recall also protects against malware through rate-limiting and anti-hammering measures," David Weston, Microsoft's VP of OS and enterprise security, wrote in a blog post today. "Recall currently supports PIN as a fallback method only after Recall is configured, and this is to avoid data loss if a secure sensor is damaged." When it comes to privacy controls, Weston reiterates that "you are always in control." By default, Recall won't save private browsing data across supported browsers like Edge, Chrome and Firefox. The feature will also have sensitive content filtering on by default to keep things like passwords and credit card numbers from being stored. 
Microsoft says Recall has also been reviewed by an unnamed third-party vendor, which performed a penetration test and security design review. The Microsoft Offensive Research and Security Engineering team (MORSE) has also been testing the feature for months. Given the near-instant backlash, it's not too surprising to see Microsoft being extra cautious with Recall's eventual rollout. The real question is how the company didn't foresee the initial criticisms, which included the Recall database being easily accessible from other local accounts. Thanks to the use of encryption and additional security, that should no longer be an issue, but it makes me wonder what else Microsoft missed early on.

[11]

Microsoft announces sweeping changes to controversial Recall feature for Windows 11 Copilot+ PCs

Microsoft's Recall was supposed to be the marquee feature for the new Copilot+ PCs Microsoft announced in May 2024. Its stated goal was to give Windows 11 users an AI-powered "photographic memory" to help them instantly find something they'd previously seen on their PC. In theory, Recall offers a clever solution to a classic problem of information overload, tapping powerful neural processing units to turn a vague search into a specific result. However, the initial design created the potential for serious privacy and security issues and unleashed a torrent of criticism from security experts who called it a "privacy nightmare." The criticism was so intense, in fact, that the company scrapped its plans to launch a preview of the feature as part of the Copilot+ PC launch, instead sending the entire codebase back to the developers for a major overhaul. So, what have they been doing for the past four months? Today's blog post from David Weston, VP of Enterprise and OS Security at Microsoft, has the answers. In a remarkable departure from typical corporate pronouncements from Redmond, this one reads like it was written by engineers rather than lawyers, and it contains an astonishing level of detail about sweeping changes to the security architecture of Recall. Here are the highlights. The Recall feature will only be available on Copilot+ PCs, Microsoft says. Those devices must meet the secured-core standard, and the feature will only be enabled if Windows can verify that the system drive is encrypted and a Trusted Platform Module (TPM version 2.0) is enabled. The TPM, Microsoft says, provides the root of trust for the secure platform and manages the keys used for the encryption and decryption of data. 
In addition, the feature as it will ship takes advantage of some core security features of Windows 11, including Virtualization-Based Security, Hypervisor-enforced Code Integrity, and Kernel DMA Protection. It will also use the Measured Boot and System Guard Secure Launch features to block the use of Recall if a machine is not booted securely (so-called "early boot" attacks). Although it might be possible for security researchers to find hacks that allow them to test Recall on incompatible hardware, those workarounds should be significantly more difficult than they were in the leaked May preview that was the subject of the initial disclosures. One of the critics' biggest concerns was that Microsoft would try to push Windows users into adopting the feature. Today's announcement says, "Recall is an opt-in experience," and in a separate interview, Weston emphasized that the feature will remain off unless you specifically choose to turn it on. The blog post says, "During the set-up experience for Copilot+ PCs, users are given a clear option whether to opt-in to saving snapshots using Recall. If a user doesn't proactively choose to turn it on, it will be off, and snapshots will not be taken or saved." In addition, customers running OEM and retail versions of Windows 11 (Home and Pro) will be able to completely remove Recall by using the Optional Features settings in Windows 11. (That's a change from previous reports based on leaked builds.) On PCs running Windows 11 Enterprise, the feature will not be available as part of a standard installation, Weston told me. Administrators who want to use Recall in their organizations must deploy the feature separately and enable it using Group Policy or other management tools. Even then, individual users would have to use Windows Hello biometrics on supported hardware to enable the feature.
Microsoft says an icon in the system tray will notify users each time a Recall snapshot is saved and also provide the option to pause the feature. Some types of content will never be saved as a Recall snapshot. Any browsing done in a private session within a supported browser (Edge, Chrome, Firefox, and Opera) is blocked by default, and you can filter out specific apps and websites as well. Recall also filters out sensitive information types, such as passwords, credit card numbers, and national ID numbers. The library that powers this feature is the same one used by enterprises that subscribe to Microsoft's Purview information protection product. If the Recall analysis phase determines that a snapshot contains sensitive information or content from a filtered app or website, the entire snapshot is discarded and its contents aren't saved to the Recall database. Additional configuration tools allow users to retroactively delete a time range, all content from an app or website, or the contents of a Recall search. The biggest concern with the initial announcement of Recall was that it offered a prime target for attackers, with scenarios that included local attacks (another user on the same Windows 11 PC) and remote (via malware or remote access). The revised architecture offers multiple layers of protection against those scenarios. First, setting up Recall requires biometric authentication to the user's account, and additional operations are tied to that account using the Windows Hello Enhanced Sign-in Security identity. That ensures that Recall searches and other operations are only possible when the user is physically present and confirmed by biometrics. Next, snapshot data is encrypted, as is the so-called vector database that contains the information used to search through stored snapshots.
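The filtering rule described above is all-or-nothing: a snapshot that trips any filter is discarded whole rather than partially redacted. It can be sketched in a few lines of Python. This is a hypothetical illustration, not Microsoft's code. The `Snapshot` type, the example block lists, and the regex-plus-Luhn card detector are stand-ins chosen here for the Purview classification library, whose real interface the sources do not describe.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical user-configured filters; the names are illustrative only.
BLOCKED_APPS = {"passwordmanager.exe"}
BLOCKED_SITES = {"bank.example.com"}

# Toy stand-in for one "sensitive information type": 13-19 digits, optionally
# separated by spaces or hyphens, that also pass the Luhn checksum.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text: str) -> bool:
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            return True
    return False


@dataclass
class Snapshot:
    app: str
    site: Optional[str]
    ocr_text: str
    private_browsing: bool = False


def should_save(snap: Snapshot) -> bool:
    """All-or-nothing: any filter hit discards the whole snapshot."""
    if snap.private_browsing:
        return False
    if snap.app in BLOCKED_APPS:
        return False
    if snap.site is not None and snap.site in BLOCKED_SITES:
        return False
    if contains_card_number(snap.ocr_text):
        return False
    return True
```

Discarding the entire snapshot avoids the harder problem of proving that a partial redaction removed every sensitive region of the image.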
Decrypting those databases also requires biometric authentication, and any operations on those data (saving, searching, and so on) take place within a secure environment called a Virtualization-based security Enclave (VBS Enclave). This design ensures that other users can't access the decryption keys and thus can't access the contents of the database. The Recall services that operate on snapshots and the associated database are isolated, making it nearly impossible for other processes, including malware, to take over those services. Other protections against malware include rate-limiting and anti-hammering measures designed to stop brute-force attacks. Under the heading "Recall Security Reviews," the company claims that it has conducted multiple reviews of the new security architecture. Internally, it's been red-team tested by the Microsoft Offensive Research and Security Engineering team (MORSE). In addition, the company says it hired an unnamed third-party security vendor to perform an independent security design review and penetration test. Finally, Redmond says they've done a "Responsible AI Impact Assessment (RAI)" covering "risks, harms, and mitigations analysis across our six RAI principles (Fairness, Reliability & Safety, Privacy & Security, Inclusion, Transparency, Accountability)." And, of course, the company says it will pay bug bounties for anyone who reports a serious security issue that can be verified. The botched initial rollout of Recall squandered a lot of goodwill, so security experts have a right to be skeptical. Still, today's announcement contains a wealth of detail, and the Insider testing that will start in October should provide an ample opportunity for additional feedback. That feedback will have a huge impact on Microsoft's AI plans, so I expect that everyone up to and including CEO Satya Nadella will be paying close attention.
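Microsoft does not say how its anti-hammering protection works. One common pattern is to allow a few free attempts and then impose an exponentially growing lockout after each further failure. The sketch below assumes that pattern; the class name, thresholds, and delays are hypothetical. An injectable clock makes the behavior easy to check.

```python
import time
from typing import Callable


class AntiHammering:
    """Hypothetical lockout policy: a few free failures, then exponential back-off."""

    def __init__(self, free_attempts: int = 3, base_delay_s: float = 1.0,
                 max_delay_s: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.free_attempts = free_attempts
        self.base_delay_s = base_delay_s
        self.max_delay_s = max_delay_s
        self._clock = clock
        self.failures = 0
        self.locked_until = float("-inf")  # no lockout initially

    def can_attempt(self) -> bool:
        """True if the caller may try to authenticate right now."""
        return self._clock() >= self.locked_until

    def record(self, success: bool) -> None:
        """Update state after an authentication attempt."""
        if success:
            self.failures = 0
            self.locked_until = float("-inf")
            return
        self.failures += 1
        excess = self.failures - self.free_attempts
        if excess > 0:
            # The delay doubles with every failure beyond the free allowance, up to a cap.
            delay = min(self.base_delay_s * 2 ** (excess - 1), self.max_delay_s)
            self.locked_until = self._clock() + delay
```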

[12]

The controversial Windows Recall is returning to Copilot+ PCs -- here's how Microsoft says it will keep your data safe

Here's how Microsoft plans to keep its AI-powered snapshots secure on your Copilot+ PC

Ahead of its October relaunch, Microsoft is highlighting the security and privacy features baked into Windows Recall that are designed to help keep your private data and the AI-powered snapshots it takes safe from prying eyes. Back in June, we got our first taste of the software giant's new Copilot+ PCs. Powered by Snapdragon X Elite and Snapdragon X Plus, these AI laptops offer significantly improved battery life, strong performance and are available at a relatively affordable price despite their high-end features. While Copilot+ PCs have been met with critical acclaim, one of their most distinctive features, Windows Recall, has remained controversial since it was first announced back at Microsoft Build 2024. For those unfamiliar, Windows Recall is intended to help you find things you've done on your PC more easily and faster by letting you search for anything that has graced your laptop's screen. This is done by taking screenshots or "snapshots" of your screen at regular intervals which are stored locally on your computer and then analyzed and indexed to make searching for what they show easier. As you can imagine, this feature was met with controversy when it was first announced and was itself recalled by Microsoft after briefly launching in preview over the summer. Now though, Windows Recall is set for a return next month. To reassure Copilot+ PC users, Microsoft has released a new blog post detailing its security and privacy protections. For starters, Windows Recall on Copilot+ PCs is an opt-in feature meaning that it won't be enabled by default. Instead, you'll have to manually enable it when setting up a new Copilot+ PC for the first time. If you don't though, Windows Recall will be turned off and no snapshots will be taken or saved. Likewise, you also have the option to remove it entirely using the optional features settings in Windows 11.
For those who do decide to enable Windows Recall, you are in full control of snapshots. You can delete them after they've been taken but you also have the option to pause Windows Recall or to turn it off at any time. It's also worth noting that Windows Recall is limited to Copilot+ PCs as they have extra security features built in that you won't find in other Windows laptops or desktops. These include a Microsoft Pluton security processor, Windows Hello Enhanced Sign-in Security and a TPM or Trusted Platform Module 2.0 chip among others. Since a screenshot of what's on your laptop's screen can contain loads of sensitive info, sensitive content filtering is turned on by default with Windows Recall. This helps prevent your passwords, national ID numbers, credit card numbers and other sensitive data from being stored in Recall. If you do come across something you didn't mean to save, you can delete a time range, all of the content from a particular app or website or anything else found in a Recall search. You also have the ability to control how long Recall content is kept on your Copilot+ PC along with how much disk space is allocated for snapshots. When it comes to hackers and malware, all of Recall's storage resides in a VBS Enclave to help protect your data and keys as well as to avoid any tampering on your laptop by a hacker. In order to search Recall content, you also need to use biometric credentials. While searching, Microsoft's VBS Enclaves and Windows Hello Enhanced Sign-in Security allow for data to be briefly decrypted. However, a Recall search will time out and you'll need to reauthorize for future searches. This is done to restrict any latent malware on your PC from trying to "ride along" with you during authentication to steal data. Now that Microsoft has outlined how it will keep snapshots and other Recall data both secure and private, the software giant's next battle is ahead of it: selling this AI-powered feature to consumers. 
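The retention controls mentioned above govern how long content is kept and how much disk space snapshots may use. Together they amount to a two-step eviction policy. The sketch below is a hypothetical illustration and Microsoft has not published the actual policy. It assumes that age is enforced first and the disk quota second, and that the oldest snapshots are evicted first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoredSnapshot:
    snapshot_id: str
    taken_at: float  # seconds since some fixed epoch
    size_bytes: int


def apply_retention(snaps: List[StoredSnapshot], now: float,
                    max_age_s: float, max_bytes: int) -> List[StoredSnapshot]:
    """Return the snapshots to keep, oldest first (hypothetical policy)."""
    # Step 1: drop anything older than the retention window.
    kept = sorted((s for s in snaps if now - s.taken_at <= max_age_s),
                  key=lambda s: s.taken_at)
    # Step 2: evict the oldest snapshots until the total size fits the disk quota.
    total = sum(s.size_bytes for s in kept)
    while kept and total > max_bytes:
        total -= kept.pop(0).size_bytes
    return kept
```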
The controversy around this feature was so strong at launch that Microsoft's efforts to sell us on it didn't quite land. I expect that over the coming months and going into the holidays the company will try to show us all of the ways in which Windows Recall can actually be useful for both consumers and employees. Only time will tell whether Windows Recall's relaunch will be a success, and once this feature does roll out to Copilot+ PCs, we're going to put it through extensive testing to see whether it really does help us 'recall' useful info and things we've done on our PCs.

[13]

Microsoft now confirms you can opt out of, and remove, Windows Recall

If you don't want to deal with Windows Recall, Microsoft says that you won't have to. Microsoft has released a white paper of sorts outlining what the company is doing to secure user data within Windows Recall, the controversial Windows feature that takes snapshots of your activity for later searching. As of late last night, Microsoft still hasn't said whether they will release Recall to the Windows Insider channels for further testing as originally planned. In fact, Microsoft's paper says very little about Recall as a product or when they will push Recall live to the public. Recall was first announced back in May as part of the Windows 11 24H2 update and it uses the local AI capabilities of Copilot+ PCs. The idea is that Recall captures periodic snapshots of your screen, then uses optical character recognition plus AI-driven techniques to translate and understand your activity. If you need to revisit something from earlier but don't remember what it was or where it was stored, Recall steps in. However, Recall was seen as a privacy risk and was subsequently withdrawn from its intended public launch, with Microsoft saying that Windows Recall would later re-release in October. Microsoft's latest post, authored by vice president of OS and enterprise security David Weston, details how the company intends to protect data within Recall. Though journalists previously found that Recall was "on" by default with an opt out, it will now be opt in for everyone. Users will be offered a "clear" choice on whether they want to use Recall, said Weston, and even after Recall is opted into, you can later opt out or even remove it entirely from Windows. The post goes into more detail about how data is stored within Windows, which has become one of the focal points of Recall's controversy. In May, cybersecurity researcher Kevin Beaumont tweeted that Recall stored snapshots in "plain text," and he published screenshots of what the database looked like within Windows.
Beaumont has since deleted his tweet and removed the image of the database from his blog post outlining his findings. Alex Hagenah then published "TotalRecall," a tool designed to extract information from the Recall stored files, as reported by Wired. That tool, stored on a GitHub page, still includes screenshots of the Recall database, or at least what was accessible at the time. Microsoft never publicly confirmed Beaumont's findings, and doesn't refer to them in its latest post. The company's representatives also did not return written requests for further comment. Microsoft now says that Recall data is always encrypted and stored within what it calls the Virtualization-based Security Enclave, or VBS Enclave. That VBS Enclave can only be unlocked via Windows Hello Enhanced Sign-in Security, and the only data that ever leaves the VBS Enclave is whatever the user explicitly asks for. (PIN codes are only accepted after the user has added a Windows Hello key.) "Biometric credentials must be enrolled to search Recall content. Using VBS Enclaves with Windows Hello Enhanced Sign-in Security allows data to be briefly decrypted while you use the Recall feature to search," Microsoft said. "Authorization will time out and require the user to authorize access for future sessions. This restricts attempts by latent malware trying to 'ride along' with a user authentication to steal data." Weston also strove to make it clear that if you use "private browsing," Recall never captures screenshots. (It's not clear whether this applies only to Edge or to other browsers as well.) Recall snapshots can be deleted, including by a range of dates. And an icon in the system tray will flash when a screenshot is saved. What we don't know is whether Recall will store deeply personal data (like passwords) within Recall. "Sensitive content filtering is on by default and helps reduce passwords, national ID numbers, and credit card numbers from being stored in Recall." (Emphasis mine.)
Microsoft said they have designed Recall to be part of a "zero trust" environment, where the VBS Enclave can only be unlocked after it's deemed secure. But trust will be an issue for consumers, too. It appears that Microsoft is at least offering controls to turn off Recall if users are worried about what the feature will track.

[14]

Microsoft finally details how Recall on Windows 11 will keep your data safe

Microsoft has been dealing with a lot of blowback ever since the reveal of its Recall software, powered by Copilot+. As a recap, every few seconds, Windows will take snapshots of whatever you're doing on your computer. These snapshots can then be searched, and your device will profile what's going on in those photos. As an example, you may be looking for the time you were looking at a specific image, and Recall can find that image using natural language. On the surface, Recall seemed to be an interesting feature. All of your data is stored on-device, and the AI that analyzes those snapshots is running locally. You can exclude apps, set the maximum amount of storage that it can use, and even pause it if you're doing something you don't want Recall to see and save. While all of that was a little creepy to some people, the damning evidence came once people got Recall working on Windows machines early. The data was stored in a plaintext database and wasn't encrypted, meaning that any program on your PC with elevated permissions could see what was in there. That could include the time you logged into your bank, the time you sent some personal messages or the time you paid for something online. As a result, it was back to the drawing board for Microsoft, and Recall was delayed for an unknown length of time. At the time, Microsoft committed to making Recall an opt-in experience, rather than an opt-out experience, but gave no further information. Now it's coming back to Copilot+ PCs, and Microsoft has outlined how exactly it will keep your data safe.

How Recall will keep your data secure: for real, this time

Recall's data, first and foremost, is encrypted and makes use of secure key management.
Every snapshot taken by Recall is encrypted, with encryption keys stored securely with assistance from the Trusted Platform Module (TPM). These keys are tied to a user's Windows Hello Enhanced Sign-in Security and require biometric credentials to be enrolled in order to search Recall content. Additionally, the sensitive operations that manage this encrypted data are performed within a Virtualization-based Security (VBS) Enclave, which functions as a secure environment isolated from the rest of the system, preventing unauthorized access. A VBS Enclave is a software-based trusted execution environment (TEE) inside a host application and utilizes Hyper-V to create an environment that's higher-privileged than the rest of the system kernel. VBS is a core feature of Windows and is used by security features like Credential Guard, too. It prevents data from being accessed outside the contexts where it is meant to be used, and it is a long-standing Windows feature that can be trusted. Thanks to the VBS Enclave, all services that handle snapshots and associated data, including decryption and indexing processes, are isolated from the rest of the system. Programs within an Enclave can also use cryptographic attestation protocols to check that the environment is secure before performing sensitive operations. Finally, when a user wants to access their stored snapshots, they must authenticate through Windows Hello. This is the built-in biometric system in Windows that's aimed to ensure that only the true owner of the device can access their data. As a fallback, a PIN is accepted only after Recall has been configured, so that a user can avoid data loss if a sensor is damaged. Recall sessions are also designed to time out, requiring the user to re-authenticate, which prevents malware from leveraging a previously open session to steal information. This reduces the window of opportunity for unauthorized access, be it through malware or even physical access to the machine.
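The time-limited authorization described above can be modeled as a session that records when the user last passed a biometric check and refuses searches once a timeout elapses. This is a minimal sketch under stated assumptions. The `RecallSession` name and the substring search are hypothetical, and the timeout is a parameter because Microsoft has not published the real value. In the real system, both the check and the search happen inside the VBS Enclave.

```python
import time
from typing import Callable, Dict, List, Optional


class RecallSession:
    """Hypothetical model of a time-limited, biometric-gated search session."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self._clock = clock
        self._authorized_at: Optional[float] = None

    def authorize(self, biometric_ok: bool) -> None:
        """Start a session only if the (simulated) Windows Hello check passed."""
        if not biometric_ok:
            raise PermissionError("biometric verification failed")
        self._authorized_at = self._clock()

    def is_active(self) -> bool:
        return (self._authorized_at is not None
                and self._clock() - self._authorized_at < self.timeout_s)

    def search(self, query: str, index: Dict[str, str]) -> List[str]:
        """Return IDs of snapshots whose text contains the query (case-insensitive)."""
        if not self.is_active():
            # Expired sessions cannot be reused; a fresh authorization is required.
            self._authorized_at = None
            raise PermissionError("session expired; re-authenticate")
        q = query.lower()
        return [sid for sid, text in index.items() if q in text.lower()]
```

The short session lifetime is what limits "ride along" attacks. A malicious process that waits for the user to authenticate gets at most one timeout window before it needs a fresh biometric check.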
Recall's new architecture: security-first

Microsoft has outlined Recall's new security architecture, separated into five core components: Secure Settings, the Semantic Index, the Snapshot Store, the Recall User Experience, and the Snapshot Service. First and foremost, Microsoft says that settings are secure by default, meaning if tampering is detected, they automatically revert to their secure state. This prevents another application from modifying those settings and potentially trying to take the machine over. Also, data in Recall is managed through a semantic index that converts images and text into vectors, which are encrypted with keys protected within the VBS Enclave. The snapshot store holds these snapshots along with metadata such as timestamps, app dwell times, and launch URIs. All search queries are performed within the Enclave, too. Finally, the Snapshot Service, which saves, queries, and processes data returned by the Enclave, ensures that only authorized users can retrieve data. To prevent unauthorized access, data from snapshots is only released after proof of human presence has been acquired, and access sessions are time-limited. The system includes protections such as anti-hammering mechanisms and concurrency limits to prevent malicious overloading or tampering attempts.

Recall might be good this time

All of this seems promising, and with the usage of security features that safeguard other data in Windows, it seems that Microsoft is thinking the right way around this time. Whether or not that holds up once Recall rolls out to devices obviously remains to be seen, but given the PR nightmare that was Recall when it first launched, the company would want to get it right to avoid a repeat.
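Microsoft's architecture description says Recall's settings are "secure by default": if tampering is detected, they revert to a secure state. One way to illustrate that behavior is with a message authentication code. Settings are stored alongside an HMAC tag, and if the tag no longer matches, the loader falls back to secure defaults instead of trusting the modified values. This is a hypothetical sketch, since Microsoft has not said how Recall detects tampering. In a real system the key would be protected (for example, by the enclave) rather than passed around as bytes. The default values mirror two documented behaviors: snapshots are off unless the user opts in, and sensitive content filtering is on. The setting names themselves are invented for illustration.

```python
import hashlib
import hmac
import json
from typing import Any, Dict

# Secure defaults mirroring documented behavior: snapshots off until the user
# opts in, sensitive content filtering on. Key names are hypothetical.
SECURE_DEFAULTS: Dict[str, Any] = {
    "snapshots_enabled": False,
    "sensitive_filtering": True,
}


def sign_settings(settings: Dict[str, Any], key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the settings."""
    payload = json.dumps(settings, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def load_settings(settings: Dict[str, Any], tag: str, key: bytes) -> Dict[str, Any]:
    """Return the stored settings if the tag verifies; otherwise revert to secure defaults."""
    expected = sign_settings(settings, key)
    if hmac.compare_digest(expected, tag):
        return dict(settings)
    # Tampering detected (or the tag is stale): fall back to the secure state.
    return dict(SECURE_DEFAULTS)
```

Failing closed (reverting to defaults) rather than failing open is the design choice that matters here. A program that edits the settings without the key can only ever push Recall back toward its most conservative configuration.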
Of course, Microsoft is also working on putting this all together in time for other Copilot+ CPUs arriving, like AMD's Ryzen AI 300 series and Intel's Core Ultra 200V series. AI is becoming more and more important on PCs (according to the tech industry, anyway), and features like Recall are ones that may actually prove to be useful going forward. As for Recall, with the steps Microsoft is taking to ensure that Recall is safe and secure, it might actually be worth giving it a shot once it launches.

[15]

Microsoft to re-launch 'privacy nightmare' AI screenshot tool

"We will be continuing to assess Recall as Microsoft moves toward launch", it said in a statement. When it initially announced the tool at its developer conference in May, Microsoft said it used AI "to make it possible to access virtually anything you have ever seen on your PC", and likened it to having photographic memory. It said Recall could search through a user's past activity, including their files, photos, emails and browsing history. It was designed to help people find things they had looked at or worked on previously by searching through desktop screenshots taken every few seconds. But critics quickly raised concerns, given the quantity of sensitive data the system would harvest, with one expert labelling it a potential "privacy nightmare." Recall was never made publicly available. A version of the tool was set to be rolled out with Copilot+ computers - which Microsoft billed as the fastest, most intelligent Windows PCs ever built - when they launched in June, after Microsoft told users it had made changes to make it more secure. But its launch was delayed further and has now been pushed back to the autumn. The company has also announced extra security measures for it. "Recall is an opt-in experience. Snapshots and any associated information are always encrypted," said Pavan Davuluri, Microsoft's corporate vice president of Windows and devices. He added that "Windows offers tools to help you control your privacy and customise what gets saved for you to find later". However, a technical blog about it states that "diagnostic data" from the tool may be shared with the firm depending on individual privacy settings. The firm added that screenshots can only be accessed with a biometric login, and sensitive information such as credit card details will not be snapped by default. Recall is only available on the Copilot+ range of bespoke laptops featuring powerful inbuilt AI chips.
Professor Alan Woodward, a cybersecurity expert at Surrey University, said the new measures were a significant improvement. "Before any functionality like Recall is deployed the security and privacy aspects will need to be comprehensively tested," he said. However, he added that he would not be rushing to use it. "Personally I would not opt-in until this has been tested in the wild for some time."


Microsoft addresses privacy concerns surrounding Recall, the AI-enabled feature for Copilot+ PCs, implementing new security measures to regain user trust and improve functionality.

Microsoft's Recall Feature: A Rocky Start

Microsoft's AI-powered Recall feature, announced in May 2024 as the flagship feature of Copilot+ PCs, faced significant backlash after it was unveiled. The feature, designed to help users retrieve information from their digital history, raised serious privacy concerns among users and cybersecurity experts alike [1]. Many users were alarmed by the potential for unauthorized access to sensitive personal data, and the criticism led Microsoft to pull the planned preview and delay the feature.

Security Overhaul and New Measures

In response to the widespread concerns, Microsoft has announced a comprehensive security overhaul of the Recall feature [2]. The tech giant has implemented several new security measures aimed at addressing user privacy and data protection:

- Enhanced encryption: Snapshots and the vector database used to search them are always encrypted, with keys protected by the TPM and tied to Windows Hello Enhanced Sign-in Security; decryption and search happen inside an isolated VBS Enclave [3].
- Granular controls: Users can filter out specific apps and websites, delete snapshots by time range, app, or website, and set how long content is kept and how much disk space it may use [4].
- Transparency: A system tray icon notifies users each time a snapshot is saved and offers the option to pause Recall.

Rebuilding Trust and Improving Functionality

Microsoft's efforts to address privacy concerns go beyond just security measures. The company has also focused on user control, independent review, and the overall functionality of the Recall feature:

- Improved accuracy: The AI algorithms powering Recall have been refined to provide more relevant and accurate information retrieval [5].
- User choice: Recall is opt-in and stays off unless users turn it on during setup, and Windows 11 Home and Pro users can remove it entirely through Optional Features.
- Independent review: An unnamed third-party security vendor performed a penetration test and security design review, in addition to months of internal red-team testing by Microsoft's MORSE team.


Industry Implications and Future Outlook

The controversy surrounding Microsoft's Recall feature and the subsequent security overhaul have broader implications for the AI industry. As AI-powered tools become more integrated into our daily lives, the incident highlights the critical importance of prioritizing user privacy and security from the outset.

Microsoft's response to the Recall backlash may set a precedent for how tech companies handle similar challenges in the future. The incident serves as a reminder that even tech giants must remain vigilant and responsive to user concerns, especially when dealing with sensitive personal data in AI applications.
