Microsoft's Recall Feature: A Controversial AI Tool Revamped for Security and Privacy

6 Sources

[1]

I just demoed Windows 11 Recall: 3 useful features that may surprise you

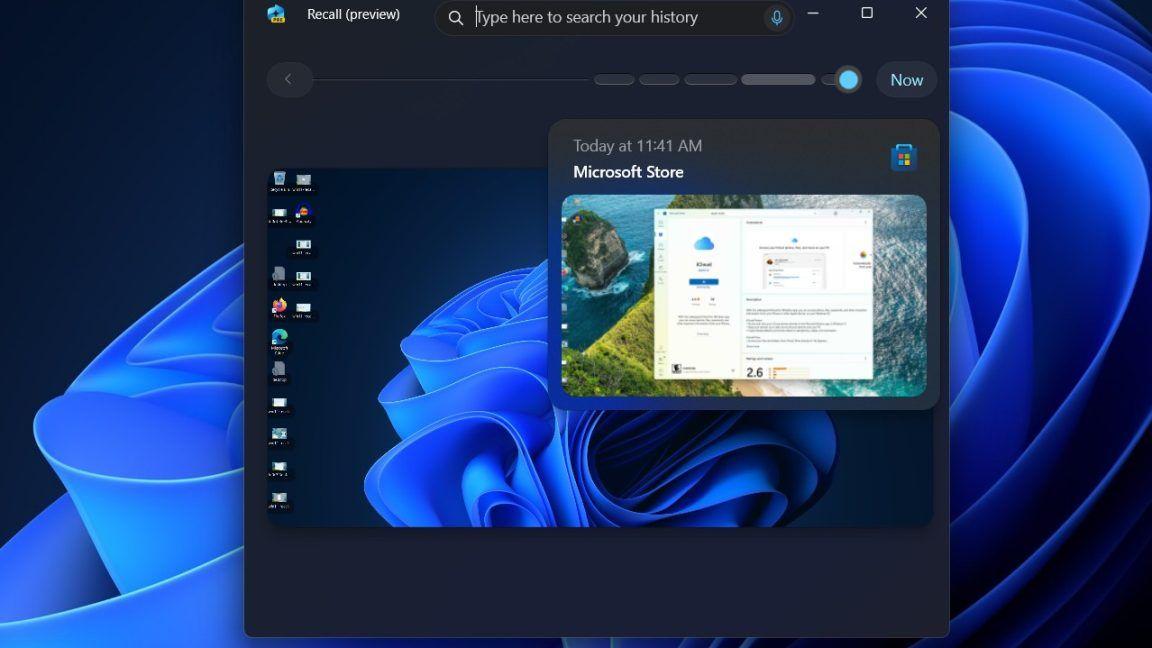

What is Recall? Only the most controversial AI-powered feature that Microsoft has dropped this year. Long story short, it's like your own personal digital scrapbook. Using frequently taken screenshots, it saves snapshots of your PC activity, allowing you to go back and revisit them whenever you want. Thanks to a timeline scrubber, you can scroll through everything that happened each day. Plus, you can use natural language to search for things within your Recall history. However, some security experts expressed concerns about Recall, fearing that hackers would see it as a gateway for stealing user data. In response, Microsoft delayed Recall's rollout and addressed fears and anxieties by, in part, requiring Windows Hello (e.g., biometric authentication like fingerprint scanning and facial recognition) to access Recall. Now that Recall is more secure, Microsoft wants Windows users to give it a chance. I had a Microsoft rep demo Recall at a recent press event. Demonstrating a practical use case, the rep pretended that he was interested in running the Boston Marathon and discovered a PowerPoint-based itinerary for it -- but never saved it. He launched Recall and searched for the word "itinerary" -- and voilà -- it appeared in his search results because the AI recognized the word among some screenshots in his timeline. The Microsoft rep boasted that Recall can even identify images. After typing, "Chart with purple arrow," Recall was able to find a document with -- you guessed it -- a purple arrow, even though the words "chart with purple arrow" never appeared in the timeline. While these perks are pretty impressive, it's the following three features that won me over. Once search results populate in Recall, you can click on a screenshot and a button below it will allow you to access the URL associated with it. 
For example, if you want to find that Mashable article about the "Lover Girl" dating trend, but you forgot to bookmark it, you can lean on Recall and type in the words "couple in love." Not only will you see the screenshot of the article (thanks to the AI-based image recognition), but you'll be able to access it again via Microsoft Edge. Recall can pull up files stored locally, too, if you click on a screenshot of a document you saved on your computer. You can interact with screenshots of documents, webpages, and more without ever leaving Recall. For example, if a screenshot captures a PDF you opened during your PC activity history, you don't need to pull up the actual document to interact with it. If there is text, your PC will recognize it and allow you to copy and paste it elsewhere. You can click on URLs, too. I asked the Microsoft rep, "Wouldn't Recall destroy my storage?" As it turns out, the Settings app offers a way to limit how much storage Recall uses. Depending on your preference, you can cap Recall at one of several storage thresholds. The Microsoft rep explained that 150GB is "over a year's worth of snapshots" while 25GB will save about "several months" of data. As we reported last week, Microsoft announced a slew of updates to Recall to make it more secure. In addition to requiring a Windows Hello login, Recall requires an opt-in process. It's not on by default. Secondly, users can uninstall Recall from their system. Thirdly, Microsoft said that Recall data is encrypted and isolated in something called a "VBS Enclave." In layman's terms, this means that your screenshots will be secured in a contained environment that is safe and unreadable from third-party apps and users. Plus, Microsoft says that AI for Recall is processed on-device and Microsoft never uploads user data to the cloud. Keep in mind that only Copilot+ PCs, like the Surface Laptop 7, support Recall. 
Laptops with this branding can handle on-device AI processing due to their NPUs (a processor that is dedicated to running AI tasks). I tried to get Recall on my own PC (i.e., Surface Laptop 7), but it required me to jump through several hoops. Firstly, I had to sign up for a free Windows Insider membership. Secondly, I had to go through a wave of updates to make sure that my system is on the latest Windows version available. But even then, because Microsoft is doing a staggered rollout of Recall to Windows Insiders, I haven't seen Recall appear on my machine yet. Bummer! Microsoft has a tough challenge on its hands: redeeming Recall from a sullied reputation. Recall has been called creepy, dystopian, controversial, and gimmicky. However, there are still some users who are optimistic about its usefulness. I fall in the latter camp; I've been in countless situations where I browse the internet, neglect to bookmark or save something, and end up pulling my hair out trying to rediscover it. As someone who struggles with forgetfulness, I can see Recall playing the hero in moments when my mind fails me.
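The rep's storage figures invite some back-of-the-envelope arithmetic. The sketch below is only that: it treats "over a year" as exactly 365 days (an assumption), derives the average daily growth that would imply, and applies the same rate to the smaller cap. The function names are made up for illustration and are not part of any Windows API.

```python
# Rough arithmetic only. The 150 GB figure comes from the rep's quote;
# treating "over a year" as exactly 365 days is an assumption.
QUOTED_CAP_GB = 150
ASSUMED_DAYS_AT_CAP = 365


def implied_daily_gb(cap_gb: float = QUOTED_CAP_GB,
                     days: int = ASSUMED_DAYS_AT_CAP) -> float:
    """Upper bound on average snapshot growth per day implied by the quote."""
    return cap_gb / days


def estimated_days(cap_gb: float, daily_gb: float) -> float:
    """Days of history a cap would hold at a constant daily growth rate."""
    return cap_gb / daily_gb


rate = implied_daily_gb()
for cap in (25, 150):
    print(f"{cap} GB -> about {estimated_days(cap, rate):.0f} days")
```

Because "over a year" makes the derived rate an upper bound, the roughly two months this gives for 25GB is a floor, which is at least compatible with the rep's "several months."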

[2]

Microsoft's controversial Recall feature is back -- but is it safe to use?

Microsoft finally released an update on its controversial Recall AI feature that was put on hold earlier this year due to security concerns. Microsoft Recall had a bumpy road from the start. It faced backlash over security concerns before it was even released due to the nature of what the feature does. Recall uses AI to track everything you do on your Windows 11 device so Copilot can "remember" activity or items if you ask about them (e.g., "Where did I store that email draft I was writing the other day?"). The idea of an AI watching everything you do on your device could be off-putting all on its own, but the situation got worse for Microsoft when users realized that all that activity data from Recall was being stored as plain text files, making it extremely vulnerable to hacking and data theft. This prompted Microsoft to change Recall to an opt-in feature (rather than something that was activated by default) on Copilot+ PCs. Microsoft also pushed back Recall's broad release to October, meaning we're now just days away from finally having access to it. Now, after months of reworking the feature, Microsoft is bringing Recall back to Copilot+ PCs, which raises the question: Is Recall safe to use now? On September 27, Microsoft finally released an update on Recall outlining some extensive security changes. The feature is slated to launch sometime in October, so this is our first look at what Microsoft has been up to since Recall's turbulent start this summer. It looks like Microsoft took users' security concerns to heart. Recall will now be an opt-in-only feature, meaning Microsoft will not have Recall running by default on Copilot+ PCs. You will have to manually turn it on if you want to use it. Users will also have the option to completely remove Recall from their devices, going a step further than simply leaving it turned off. Microsoft revised the way data from Recall is stored, which was a huge point of contention originally. 
Storing so much personal data as plain text files was clearly not acceptable, so this change was a must-have for Recall to have any chance of being secure. Now sensitive data and screenshots from Recall are encrypted. Basically, data from Microsoft Recall will now be stored in an isolated, locked section of memory on your device. Think of it like a deserted island you can only access if you have a map and a password. Recall data isn't going to be freely accessible like Word documents, photos, or other everyday files on your device. The new and improved version of Recall also includes measures to prevent unauthorized access to users' data. You will need to use Windows Hello sign-in for "Recall-related operations," such as changing your Recall settings. Microsoft is also using rate-limiting and anti-hammering to prevent malware from accessing Recall data. Additionally, Recall now has more privacy-focused features and settings. For example, if you're using private browsing, Recall will automatically not save any snapshots. You can also manually block specific websites and apps from Recall, control how long Recall data is saved for, and delete unwanted Recall data. Finally, Recall now has automatic sensitive content filtering, which will help block sensitive data like passwords from being stored in snapshots. According to Microsoft's blog post, "Snapshots and associated data are stored locally on the device. Recall does not share snapshots or associated data with Microsoft or third parties, nor is it shared between different Windows users on the same device. Windows will ask for your permission before saving snapshots." Microsoft made some extensive changes to Recall and it looks like there was a good faith effort to address users' security concerns. Does that really mean you should use Recall now, though? If you're unfamiliar with cybersecurity terminology or how data is stored on laptops, all the info in Microsoft's blog post might be a bit hard to digest. 
The first main takeaway is that Recall will not run or record snapshots without users manually turning it on. So, you don't need to worry about the feature running without your knowledge and if you want to you can even completely delete it from your device. If Recall sounds like it might be useful to you, it's definitely safer to use now than it was before, although that's not saying a whole lot. That being said, considering all the new layers of security and additional privacy filters and settings, Recall is generally safer to use now than it was when it originally launched as a preview feature in June. If you're going to use it, it's a good idea to leave snapshot notifications turned on and set Recall to ignore any sensitive websites and apps. It's also a good idea to periodically clear your Recall data so you're not keeping a massive history of your activity stored on your device.
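For readers wondering what "rate-limiting and anti-hammering" means in practice, here is a minimal sketch of the general idea: after a few failed attempts, further tries are locked out, and the wait doubles with each additional failure. This is a generic illustration, not Microsoft's implementation; the class name, thresholds, and lockout durations are all assumptions.

```python
import time


class AttemptLimiter:
    """Generic anti-hammering sketch: lock out callers after repeated failures.

    The thresholds below are illustrative assumptions, not values from Recall.
    """

    def __init__(self, max_failures=3, base_lockout_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.base_lockout_s = base_lockout_s
        self.clock = clock          # injectable so the logic can be tested
        self.failures = 0
        self.locked_until = 0.0

    def allowed(self) -> bool:
        """True if a new authentication attempt may be made right now."""
        return self.clock() >= self.locked_until

    def record(self, success: bool) -> None:
        """Record the outcome of an attempt and update the lockout window."""
        if success:
            self.failures = 0
            self.locked_until = 0.0
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            # Each failure past the threshold doubles the lockout period.
            extra = self.failures - self.max_failures
            self.locked_until = self.clock() + self.base_lockout_s * (2 ** extra)
```

A caller would check `allowed()` before prompting for sign-in and call `record()` with the result. The point of the doubling is that guessing becomes slower with every miss, which is what makes brute-force attempts by malware impractical.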

[3]

Microsoft brings back its most controversial Windows AI feature, with beefed up security

Microsoft Recall, the AI-powered search tool for Copilot+ PCs, was quickly delayed after people realized what it did and how it worked. For those who needed a reminder, Recall was touted as the big AI feature for Copilot+. It would continuously take screenshots of your PC, index them, and use generative AI to turn them into a searchable database of your PC history. "Hey Recall, what was that funny video I watched last night on YouTube," or "Hey Recall, can you put together a list of all the new sneakers I was looking at a few weeks ago." These are two examples of a potential use; however, early hands-on with Recall showed that its screenshots were not only unencrypted but would contain sensitive material like banking information, and the database itself was an indexed wet dream for hackers. Well, Recall is coming back, and in a new Microsoft blog post, the company has outlined how this latest version is designed with "security and privacy in mind" and core principles like ensuring sensitive data is always encrypted and that other users won't be able to access encryption keys. It pairs with Windows Hello Enhanced Sign-in Security for all access, with a PIN as a fallback method in case a sensor like a camera is damaged. Recall is now also opt-in, won't be enabled by default, and can be removed entirely from Windows. Recall screenshots or snapshots and all associated data are now stored within a "secure VBS Enclave" and are only accessible when a user is actively using the Recall feature. Microsoft describes VBS Enclaves as "a revolutionary change in our security model for [an] application, allowing an app to protect its secrets using the power of VBS from admin-level attacks." 
All in all, this new version of Recall sounds a lot better - and if you're interested in the technical details, be sure to read the full post by David Weston, Vice President of Enterprise and OS Security at Microsoft. However, you do have to wonder why Recall wasn't like this in the first place.

[4]

Microsoft explains how Windows 11's controversial Recall feature is now ready for release - it's coming to Copilot+ PCs in November

Recall retooled: major security and privacy tweaks have been made

Microsoft has provided an update on Windows 11's Recall feature - which has been on ice for some time now, since its revelation caused a massive stir due to security and privacy worries - and when it plans to forge ahead with the feature and bring it to Copilot+ PCs. As the BBC reports, Microsoft said in a statement that the plan is to launch Recall on Copilot+ laptops in November, with a bunch of measures being implemented to ensure the feature is secure enough, as detailed in a separate blog post. So, what are these measures designed to placate the critics of Recall - a capability which is a supercharged AI-powered search in Windows 11 that leverages regular screenshots ('snapshots' as Microsoft calls them) of the activity on your PC - as it was originally envisioned? One of the key changes is that Recall will be strictly opt-in, as Microsoft had told us before, as opposed to the default-on approach that was taken when the feature was first unveiled. Microsoft notes: "During the set-up experience for Copilot+ PCs, users are given a clear option whether to opt-in to saving snapshots using Recall. If a user doesn't proactively choose to turn it on, it will be off, and snapshots will not be taken or saved." Also, as Microsoft previously told us, snapshots - and other Recall-related data - will be fully encrypted, and Windows Hello authentication will be a requirement to use the feature. In other words, you'll need to sign in via Hello to ensure that it's you actually using Recall (and not someone else on your PC). Furthermore, Recall will use a secure environment called a Virtualization-based Security Enclave, or VBS Enclave, which is a fully secure virtual machine isolated from the Windows 11 system, that only the user can access with a decryption key (provided with that Windows Hello sign-in). 
David Weston, who wrote Microsoft's blog post and is VP of Enterprise and OS Security, explained to Windows Central: "All of the sensitive Recall processes, so screenshots, screenshot processing, vector database, are now in a VBS Enclave. We basically took Recall and put it in a virtual machine [VM], so even administrative users are not able to interact in that VM or run any code or see any data." For that matter, Microsoft can't get in to look at your Recall data, either. And as the software giant has made clear before, all this data is kept locally on your machine - none of it is sent to the cloud (that could be a big security worry if it was). This is why Recall is a Copilot+ PC exclusive, by the way - because it needs a powerful NPU for acceleration and local processing for Recall to work responsively enough (as the cloud can't be leveraged to speed up the AI grunt work). Finally, Microsoft combats a previous concern about Recall taking screenshots of, for example, your online banking site and perhaps sensitive financial info - the feature now filters out things like passwords, credit card numbers and so on. Other privacy tightening measures include the ability to exclude specific apps or websites from ever having snapshots taken by Recall (and we should note that private browsing sessions, such as Chrome's Incognito mode, are never subject to being screenshotted - at least in supported web browsers). An icon will appear in the taskbar when a Recall snapshot is being saved, incidentally, and it'll be easy to pause these screenshots from there if you wish to do so. Microsoft has basically taken Recall back to the drawing board on the security and privacy fronts over the past few months, and in broad terms, the results deserve a thumbs-up. (Although let's be honest, elements like the tight encryption should have been in place to begin with - and it's a bit frightening that they weren't). 
If you're still concerned about Recall despite these measures, you simply don't have to enable it. And with it being off by default in a clear manner now, there's no danger of less tech-savvy folks ending up using the feature by accident, without realizing what it is. The path Recall is on now is that it's returning to testing in October, so very soon, and with the release coming to Copilot+ PCs in November, it's on something of a fast track to arrive with the computing public - well, those who've invested in a Copilot+ laptop anyway. We're sure that for those folks, Recall will still be marked as in 'preview' and it's debatable whether you should be taking the plunge with an ability like this when it's not quite fully finished. Of course, we're getting a bit ahead of ourselves here - the next step is for Recall to arrive in Windows 11 test builds, and see what Windows Insiders make of it. If problems crop up in those preview builds, we may yet see Recall delayed for release to Copilot+ PCs. Microsoft is talking a much bigger security game for Recall here, without a doubt, and let's hope there are no setbacks or mistakes in terms of actually implementing all of this. Given how the initial incarnation of Recall was put together - with a worrying lack of attention to detail - it's easy to be cynical here, but presumably Microsoft is not going to fall into this trap again.

[5]

Microsoft Recall Security and Privacy Update - Sept 2024

If you have been worried about installing the new Recall feature, which captures screenshots of your screen intermittently to help you manage your computer, you will be pleased to know that Microsoft is making strides in security and privacy with the feature, which is built into Copilot+ PCs. As AI becomes more deeply integrated into Windows, there are new demands on the security and privacy architecture to ensure that user data is handled safely. At the heart of this evolution is the concept of moving AI processing to the device, allowing for lower latency, better battery life, and enhanced privacy. While this shift brings many benefits, it also introduces fresh challenges that must be addressed. Specifically, how can Microsoft ensure that sensitive user data processed locally remains secure and that privacy is respected? By placing AI processing on the device itself, Microsoft eliminates the need for constant internet connectivity, reducing data exposure. However, processing this data locally on a device opens up potential security risks -- such as access by malware or unauthorized users -- that must be mitigated. This is where Recall's architecture comes into play. It is designed to protect users by leveraging a secure environment that ensures any data saved or processed locally is encrypted, isolated, and strictly controlled. In recent updates, Microsoft introduced robust technical controls that enhance both privacy and security. These measures focus on keeping users in control, protecting sensitive data with advanced encryption, and ensuring that any operations involving this data are contained within a secure enclave. Recall also offers transparency and accountability, allowing users to know exactly when snapshots of their activities are being saved and giving them the option to pause or delete this data. 
But even with all of these safeguards, there are still important risks that need careful management to ensure a balance between functionality and security. Microsoft's Recall tackles a significant problem: how to handle sensitive data while ensuring privacy in an environment where AI processing happens directly on the device. AI tasks traditionally required cloud-based solutions, which pose privacy risks by transmitting data back and forth over the internet. With Recall, data stays on the device, but the challenge is to ensure this local processing doesn't create security vulnerabilities. To address this, Microsoft developed Recall as a privacy-first feature where the user remains in complete control, and their data is encrypted and safeguarded within secure, isolated environments. Agitating this issue further is the nature of personal data itself. Users today are more aware than ever of the potential misuse of their private information. From national identification numbers to browsing habits, the modern PC user wants assurances that this information is not vulnerable to hacking or unauthorized access. In the case of Recall, users must feel confident that their snapshots -- essentially records of what they have done on their devices -- are safe from malicious actors or malware attempting to extract this data. The solution to these concerns lies in the architecture of Recall. Built on four core principles, Recall offers a security model that is deeply integrated with the PC's hardware. The first principle is that the user is always in control. During setup, Recall is an entirely opt-in experience, and it can only be activated if the user chooses to do so. Users are informed upfront about what Recall will save and are given clear instructions on how to manage or delete this data. The second principle centers on encryption. 
Any data captured by Recall -- whether it's a screenshot or associated metadata -- is encrypted, and the encryption keys are protected by the Trusted Platform Module (TPM). These keys are accessible only through operations within a Virtualization-based Security (VBS) Enclave, meaning that even administrators or other users on the same device cannot access these snapshots. The third principle focuses on the isolation of services. The services that operate on Recall data are isolated within the VBS Enclave, ensuring that no data leaves this secure environment unless explicitly requested by the user. This adds an additional layer of protection, particularly against malware that might attempt to intercept data. The final principle of Recall's architecture is ensuring that users are always present and intentional in their use of the feature. Biometric authentication using Windows Hello is mandatory to access Recall. Once a user authenticates, they can search through their saved snapshots, but any session will time out to prevent unauthorized access. Microsoft has even built anti-hammering measures into Recall, limiting the number of attempts malware or hackers can make to access data. Recall's architecture is not without its complexity, but it is this complexity that enables its robustness. For example, the secure enclave model used in Recall is similar to what Azure employs for protecting sensitive cloud-based data. By using cryptographic attestation protocols and zero trust principles, Microsoft ensures that sensitive operations, such as searching through snapshots, are performed in a secure and isolated environment. Furthermore, Recall gives users full control over the data stored locally on their devices. They can filter out specific applications or websites, manage how long content is retained, and even decide how much disk space Recall can use for storing snapshots. 
Sensitive content, like passwords or credit card numbers, is automatically filtered out by default, thanks to Microsoft's Purview information protection technology. While Recall provides a high level of privacy and security, Microsoft also backs its architecture with rigorous testing and assessments. Penetration testing by the Microsoft Offensive Research & Security Engineering team, third-party audits, and Responsible AI Impact Assessments ensure that Recall is designed not only to protect user data but to meet the evolving needs of security in an AI-driven world. Ultimately, Microsoft's Recall presents a solution to the modern challenge of secure local AI data processing. It allows users to benefit from AI experiences without compromising on privacy or security, and it offers them the tools to stay in control of their data at all times. In doing so, Recall stands as a significant step forward in how we think about the future of AI, privacy, and security.

[6]

Microsoft Is Finally Letting You Take Control and Uninstall Windows Recall

Microsoft has also significantly improved the security model of Recall. All data is now stored in the VBS Enclave. Microsoft has been under fire ever since its rocky announcement of the much-anticipated and equally feared Windows Recall AI feature back in May. Security researchers called Windows Recall a privacy nightmare due to unencrypted data stored in the AppData folder. Later in June, Microsoft addressed Windows Recall concerns and said that major security changes will be implemented before a wider rollout. Later in September, some reports suggested that you could actually uninstall the feature on the Windows 11 24H2 build. However, Microsoft killed all hope by calling it a bug at that time. Now, the US tech giant has officially announced that users will actually be able to uninstall Windows Recall completely if they don't want to use it. And it will be turned off by default. In its official confirmation, Microsoft states: "Recall is an opt-in experience. During the set-up experience for Copilot+ PCs, users are given a clear option whether to opt-in to saving snapshots using Recall. If a user doesn't proactively choose to turn it on, it will be off, and snapshots will not be taken or saved." Users can also remove Recall entirely by using the optional features settings in Windows. But that's not the end of it, as Microsoft has also implemented a bunch of security and privacy measures to mitigate the risks. In an interview with The Verge, David Weston, VP of enterprise and OS security at Microsoft, said, "I'm actually really excited about how nerdy we got on the security architecture. I'm excited because I think the security community is going to get how much we've pushed [into Recall]." In addition to letting users uninstall Windows Recall, Microsoft is taking the security of the feature a step further by taking advantage of the TPM (Trusted Platform Module) chip. 
For the curious, TPM is a security chip that creates, stores, and attests cryptographic keys. Services like Windows Hello and BitLocker drive encryption rely on it. Anyway, Microsoft states that to access Recall, Windows users will need to use Hello sign-in; only after that will the tool start working. This is definitely a better approach than the earlier version that allowed users to access the Recall timeline without any authentication. Most importantly, Recall will operate in a secure environment called VBS Enclave, aka Virtualization-based Security Enclave. All associated data and operations will be processed in the VBS Enclave, a special protected environment. So, when a user gets into Recall and drops a query, the VBS returns that data to the memory. Then, once the information is extracted and the user exits Recall, all processed data is wiped off as well. And, as Microsoft states, "The only information that leaves the VBS Enclave is what is requested by the user when actively using Recall." In addition to all that, Recall also has anti-hammering protocols in place, further securing it against malware attacks. Finally, Microsoft has made it very clear that Recall will only work on Copilot+ PCs. So, all those reports about being able to sideload the Recall app are nullified now. As for Recall's availability, the first Windows 11 Preview builds with Recall will start rolling out to Insiders sometime in October. Regular users will get it gradually, following Insider testing. While I'm quite happy that we will be able to uninstall Windows Recall, I'm honestly surprised that it took them this long in the first place. In my opinion, this should have been the security model in the first place. Data privacy is everything, and even the smallest leak of sensitive data can be incredibly detrimental to users. Well, better late than never, I guess. What do you think about Microsoft's new update to its Recall feature? Drop your thoughts in the comments below!

Microsoft reintroduces its AI-powered Recall feature for Windows 11 with enhanced security measures, addressing previous privacy concerns and preparing for a November launch on Copilot+ PCs.

The Return of Microsoft Recall

Microsoft is set to relaunch its controversial AI-powered feature, Recall, for Windows 11 in November. This tool, designed for Copilot+ PCs, allows users to search through their PC activity history using AI-powered screenshots and natural language processing [1]. Recall was initially met with skepticism due to security concerns, and Microsoft has since addressed these issues with significant updates to the feature's privacy and security measures [2].

Enhanced Security Measures

Microsoft has implemented several key changes to improve Recall's security:

- Opt-in only: Users must manually activate Recall during setup [4].
- Data encryption: All snapshots and associated data are now fully encrypted [2].
- Windows Hello authentication: Biometric verification is required to access Recall [4].
- VBS Enclave: Data is stored in an isolated, secure virtual environment [3].
- Local processing: All data remains on the device, with no cloud uploads [1].

Privacy Controls

To address privacy concerns, Microsoft has added several user controls:

- Automatic filtering of sensitive content like passwords and credit card numbers [4].
- Option to exclude specific apps or websites from Recall [2].
- Control over data retention periods and storage limits [5].
- Ability to pause or delete Recall data [4].
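To make these controls concrete, the sketch below shows how such rules could combine into a single capture decision. It is an illustration under stated assumptions, not Recall's actual logic: `SnapshotPolicy`, its fields, the 90-day default, and the crude card-number pattern are all hypothetical, and Microsoft's real sensitive-content filter is certainly more sophisticated than a regular expression.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional

# Deliberately crude pattern for 13-16 digit card-like numbers.
# This is an assumption for illustration, not Microsoft's filter.
CARD_LIKE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


@dataclass
class SnapshotPolicy:
    """Hypothetical user-configured privacy rules for snapshot capture."""
    excluded_apps: set = field(default_factory=set)
    excluded_sites: set = field(default_factory=set)
    retention: timedelta = timedelta(days=90)  # assumed default

    def should_capture(self, app: str, site: Optional[str],
                       private_browsing: bool, visible_text: str) -> bool:
        """Return False if any privacy rule says this screen must be skipped."""
        if private_browsing:
            return False
        if app in self.excluded_apps:
            return False
        if site is not None and site in self.excluded_sites:
            return False
        if CARD_LIKE.search(visible_text):
            return False
        return True

    def expired(self, taken_at: datetime, now: datetime) -> bool:
        """True once a snapshot is older than the retention period."""
        return now - taken_at > self.retention
```

Note that every rule here fails closed: a match on any exclusion skips the snapshot entirely, which mirrors the spirit of the controls listed above.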

How Recall Works

Recall functions as a personal digital scrapbook, taking frequent screenshots of PC activity and allowing users to search through their history using natural language [1]. It can identify images, text, and even contextual information within screenshots, making it easier for users to find specific information or documents they may have forgotten to save [1].

Availability and Requirements

Recall will be available exclusively on Copilot+ PCs, which are equipped with NPUs (Neural Processing Units) capable of handling on-device AI processing [1]. The feature is expected to launch in November, following a testing phase in October [4].

Ongoing Concerns and Future Outlook

While Microsoft has made significant strides in addressing security and privacy concerns, some experts remain cautious about the potential risks associated with such a comprehensive data collection tool [2]. As Recall moves into its testing phase, it remains to be seen how users and security professionals will respond to these new measures and whether further adjustments will be necessary before its full release [4].

Summarized by Navi
