MIT's "Relevance" System Enables Robots to Intuitively Assist Humans

2 Sources

[1]

Robotic system zeroes in on objects most relevant for helping humans

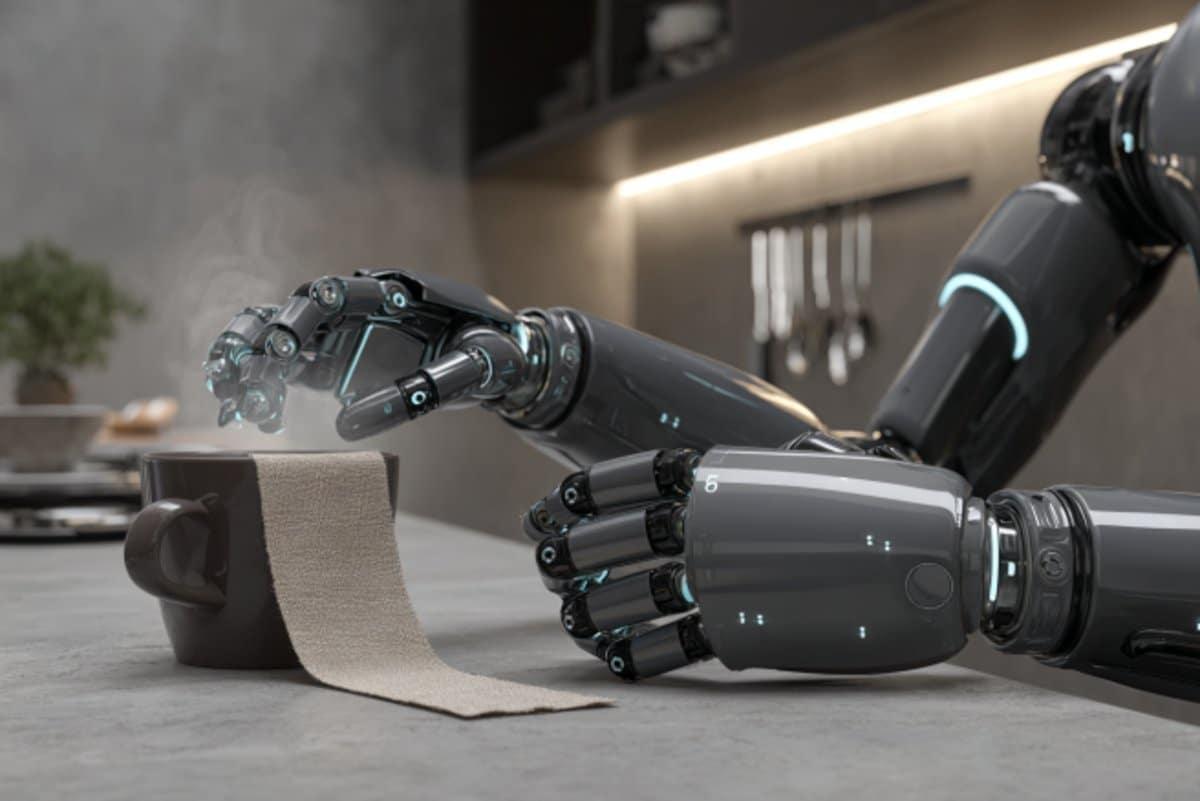

Caption: Using a novel relevance framework developed at MIT, the robot identifies and prioritizes objects in the scene to autonomously assist humans in a seamless, intelligent, and safe manner.

For a robot, the real world is a lot to take in. Making sense of every data point in a scene can take a huge amount of computational effort and time. Using that information to then decide how to best help a human is an even thornier exercise.

Now, MIT roboticists have a way to cut through the data noise, to help robots focus on the features in a scene that are most relevant for assisting humans. Their approach, which they aptly dub "Relevance," enables a robot to use cues in a scene, such as audio and visual information, to determine a human's objective and then quickly identify the objects that are most likely to be relevant in fulfilling that objective. The robot then carries out a set of maneuvers to safely offer the relevant objects or actions to the human.

The researchers demonstrated the approach with an experiment that simulated a conference breakfast buffet. They set up a table with various fruits, drinks, snacks, and tableware, along with a robotic arm outfitted with a microphone and camera. Applying the new Relevance approach, they showed that the robot was able to correctly identify a human's objective and appropriately assist them in different scenarios.

In one case, the robot took in visual cues of a human reaching for a can of prepared coffee, and quickly handed the person milk and a stir stick. In another scenario, the robot picked up on a conversation between two people talking about coffee, and offered them a can of coffee and creamer.

Overall, the robot was able to predict a human's objective with 90 percent accuracy and to identify relevant objects with 96 percent accuracy. The method also improved a robot's safety, reducing the number of collisions by more than 60 percent, compared to carrying out the same tasks without applying the new method.

"This approach of enabling relevance could make it much easier for a robot to interact with humans," says Kamal Youcef-Toumi, professor of mechanical engineering at MIT. "A robot wouldn't have to ask a human so many questions about what they need. It would just actively take information from the scene to figure out how to help."

Youcef-Toumi's group is exploring how robots programmed with Relevance can help in smart manufacturing and warehouse settings, where they envision robots working alongside and intuitively assisting humans.

Youcef-Toumi, along with graduate students Xiaotong Zhang and Dingcheng Huang, will present their new method at the IEEE International Conference on Robotics and Automation (ICRA) in May. The work builds on another paper presented at ICRA the previous year.

Finding focus

The team's approach is inspired by our own ability to gauge what's relevant in daily life. Humans can filter out distractions and focus on what's important, thanks to a region of the brain known as the Reticular Activating System (RAS). The RAS is a bundle of neurons in the brainstem that acts subconsciously to prune away unnecessary stimuli, so that a person can consciously perceive the relevant stimuli. The RAS helps to prevent sensory overload, keeping us, for example, from fixating on every single item on a kitchen counter, and instead helping us to focus on pouring a cup of coffee.

"The amazing thing is, these groups of neurons filter everything that is not important, and then it has the brain focus on what is relevant at the time," Youcef-Toumi explains. "That's basically what our proposition is."

He and his team developed a robotic system that broadly mimics the RAS's ability to selectively process and filter information. The approach consists of four main phases. The first is a watch-and-learn "perception" stage, during which a robot takes in audio and visual cues, for instance from a microphone and camera, that are continuously fed into an AI "toolkit." This toolkit can include a large language model (LLM) that processes audio conversations to identify keywords and phrases, and various algorithms that detect and classify objects, humans, physical actions, and task objectives. The AI toolkit is designed to run continuously in the background, similarly to the subconscious filtering that the brain's RAS performs.

The second stage is a "trigger check" phase, which is a periodic check that the system performs to assess if anything important is happening, such as whether a human is present or not. If a human has stepped into the environment, the system's third phase will kick in. This phase is the heart of the team's system, which acts to determine the features in the environment that are most likely relevant to assist the human.

To establish relevance, the researchers developed an algorithm that takes in real-time predictions made by the AI toolkit. For instance, the toolkit's LLM may pick up the keyword "coffee," and an action-classifying algorithm may label a person reaching for a cup as having the objective of "making coffee." The team's Relevance method would factor in this information to first determine the "class" of objects that have the highest probability of being relevant to the objective of "making coffee." This might automatically filter out classes such as "fruits" and "snacks," in favor of "cups" and "creamers." The algorithm would then further filter within the relevant classes to determine the most relevant "elements." For instance, based on visual cues of the environment, the system may label a cup closest to a person as more relevant -- and helpful -- than a cup that is farther away.

In the fourth and final phase, the robot would then take the identified relevant objects and plan a path to physically access and offer the objects to the human.

Helper mode

The researchers tested the new system in experiments that simulate a conference breakfast buffet. They chose this scenario based on the publicly available Breakfast Actions Dataset, which comprises videos and images of typical activities that people perform during breakfast time, such as preparing coffee, cooking pancakes, making cereal, and frying eggs. Actions in each video and image are labeled, along with the overall objective (frying eggs, versus making coffee).

Using this dataset, the team tested various algorithms in their AI toolkit, such that, when receiving actions of a person in a new scene, the algorithms could accurately label and classify the human tasks and objectives, and the associated relevant objects.

In their experiments, they set up a robotic arm and gripper and instructed the system to assist humans as they approached a table filled with various drinks, snacks, and tableware. They found that when no humans were present, the robot's AI toolkit operated continuously in the background, labeling and classifying objects on the table.

When, during a trigger check, the robot detected a human, it snapped to attention, turning on its Relevance phase and quickly identifying objects in the scene that were most likely to be relevant, based on the human's objective, which was determined by the AI toolkit.

"Relevance can guide the robot to generate seamless, intelligent, safe, and efficient assistance in a highly dynamic environment," says co-author Zhang.

Going forward, the team hopes to apply the system to scenarios that resemble workplace and warehouse environments, as well as to other tasks and objectives typically performed in household settings.

"I would want to test this system in my home to see, for instance, if I'm reading the paper, maybe it can bring me coffee. If I'm doing laundry, it can bring me a laundry pod. If I'm doing repair, it can bring me a screwdriver," Zhang says. "Our vision is to enable human-robot interactions that can be much more natural and fluent."

This research was made possible by the support and partnership of King Abdulaziz City for Science and Technology (KACST) through the Center for Complex Engineering Systems at MIT and KACST.

[2]

Robotic system zeroes in on objects most relevant for helping humans

For a robot, the real world is a lot to take in. Making sense of every data point in a scene can take a huge amount of computational effort and time. Using that information to then decide how to best help a human is an even thornier exercise.

Now, MIT roboticists have a way to cut through the data noise, to help robots focus on the features in a scene that are most relevant for assisting humans. Their approach, which they aptly dub "Relevance," enables a robot to use cues in a scene, such as audio and visual information, to determine a human's objective and then quickly identify the objects that are most likely to be relevant in fulfilling that objective. The robot then carries out a set of maneuvers to safely offer the relevant objects or actions to the human.

The paper is available on the arXiv preprint server.

The researchers demonstrated the approach with an experiment that simulated a conference breakfast buffet. They set up a table with various fruits, drinks, snacks, and tableware, along with a robotic arm outfitted with a microphone and camera. Applying the new Relevance approach, they showed that the robot was able to correctly identify a human's objective and appropriately assist them in different scenarios.

In one case, the robot took in visual cues of a human reaching for a can of prepared coffee, and quickly handed the person milk and a stir stick. In another scenario, the robot picked up on a conversation between two people talking about coffee, and offered them a can of coffee and creamer.

Overall, the robot was able to predict a human's objective with 90% accuracy and to identify relevant objects with 96% accuracy. The method also improved a robot's safety, reducing the number of collisions by more than 60%, compared to carrying out the same tasks without applying the new method.

"This approach of enabling relevance could make it much easier for a robot to interact with humans," says Kamal Youcef-Toumi, professor of mechanical engineering at MIT. "A robot wouldn't have to ask a human so many questions about what they need. It would just actively take information from the scene to figure out how to help."

Youcef-Toumi's group is exploring how robots programmed with Relevance can help in smart manufacturing and warehouse settings, where they envision robots working alongside and intuitively assisting humans.

Youcef-Toumi, along with graduate students Xiaotong Zhang and Dingcheng Huang, will present their new method at the IEEE International Conference on Robotics and Automation (ICRA 2025) in May. The work builds on another paper presented at ICRA the previous year.

Finding focus

The team's approach is inspired by our own ability to gauge what's relevant in daily life. Humans can filter out distractions and focus on what's important, thanks to a region of the brain known as the Reticular Activating System (RAS). The RAS is a bundle of neurons in the brainstem that acts subconsciously to prune away unnecessary stimuli, so that a person can consciously perceive the relevant stimuli. The RAS helps to prevent sensory overload, keeping us, for example, from fixating on every single item on a kitchen counter, and instead helping us to focus on pouring a cup of coffee.

"The amazing thing is, these groups of neurons filter everything that is not important, and then it has the brain focus on what is relevant at the time," Youcef-Toumi explains. "That's basically what our proposition is."

He and his team developed a robotic system that broadly mimics the RAS's ability to selectively process and filter information. The approach consists of four main phases. The first is a watch-and-learn "perception" stage, during which a robot takes in audio and visual cues, for instance from a microphone and camera, that are continuously fed into an AI "toolkit." This toolkit can include a large language model (LLM) that processes audio conversations to identify keywords and phrases, and various algorithms that detect and classify objects, humans, physical actions, and task objectives. The AI toolkit is designed to run continuously in the background, similarly to the subconscious filtering that the brain's RAS performs.

The second stage is a "trigger check" phase, which is a periodic check that the system performs to assess if anything important is happening, such as whether a human is present or not. If a human has stepped into the environment, the system's third phase will kick in. This phase is the heart of the team's system, which acts to determine the features in the environment that are most likely relevant to assist the human.

To establish relevance, the researchers developed an algorithm that takes in real-time predictions made by the AI toolkit. For instance, the toolkit's LLM may pick up the keyword "coffee," and an action-classifying algorithm may label a person reaching for a cup as having the objective of "making coffee." The team's Relevance method would factor in this information to first determine the "class" of objects that have the highest probability of being relevant to the objective of "making coffee." This might automatically filter out classes such as "fruits" and "snacks," in favor of "cups" and "creamers." The algorithm would then further filter within the relevant classes to determine the most relevant "elements." For instance, based on visual cues of the environment, the system may label a cup closest to a person as more relevant -- and helpful -- than a cup that is farther away.

In the fourth and final phase, the robot would then take the identified relevant objects and plan a path to physically access and offer the objects to the human.

Helper mode

The researchers tested the new system in experiments that simulate a conference breakfast buffet. They chose this scenario based on the publicly available Breakfast Actions Dataset, which comprises videos and images of typical activities that people perform during breakfast time, such as preparing coffee, cooking pancakes, making cereal, and frying eggs. Actions in each video and image are labeled, along with the overall objective (frying eggs, versus making coffee).

Using this dataset, the team tested various algorithms in their AI toolkit, such that, when receiving actions of a person in a new scene, the algorithms could accurately label and classify the human tasks and objectives, and the associated relevant objects.

In their experiments, they set up a robotic arm and gripper and instructed the system to assist humans as they approached a table filled with various drinks, snacks, and tableware. They found that when no humans were present, the robot's AI toolkit operated continuously in the background, labeling and classifying objects on the table.

When, during a trigger check, the robot detected a human, it snapped to attention, turning on its Relevance phase and quickly identifying objects in the scene that were most likely to be relevant, based on the human's objective, which was determined by the AI toolkit.

"Relevance can guide the robot to generate seamless, intelligent, safe, and efficient assistance in a highly dynamic environment," says co-author Zhang.

Going forward, the team hopes to apply the system to scenarios that resemble workplace and warehouse environments, as well as to other tasks and objectives typically performed in household settings.

"I would want to test this system in my home to see, for instance, if I'm reading the paper, maybe it can bring me coffee. If I'm doing laundry, it can bring me a laundry pod. If I'm doing repair, it can bring me a screwdriver," Zhang says. "Our vision is to enable human-robot interactions that can be much more natural and fluent."

MIT researchers have developed a novel robotic system called "Relevance" that allows robots to focus on the most important aspects of their environment to assist humans more effectively and safely.

MIT Develops "Relevance" System for Intuitive Robot Assistance

Researchers at the Massachusetts Institute of Technology (MIT) have created a groundbreaking robotic system called "Relevance," designed to enhance human-robot interaction by enabling robots to focus on the most pertinent aspects of their environment [1][2].

Inspiration from Human Cognition

The system draws inspiration from the human brain's Reticular Activating System (RAS), which helps filter out unnecessary stimuli and focus on relevant information. Professor Kamal Youcef-Toumi of MIT's mechanical engineering department explains, "The amazing thing is, these groups of neurons filter everything that is not important, and then it has the brain focus on what is relevant at the time. That's basically what our proposition is" [1].

The Four-Phase Approach

The "Relevance" system operates in four main phases:

- Perception: The robot continuously gathers audio and visual data from its environment.

- Trigger Check: The system periodically assesses if any significant events, such as human presence, are occurring.

- Relevance Determination: This core phase identifies the most relevant environmental features for assisting humans.

- Action Planning: The robot plans and executes actions to offer relevant objects or assistance to humans [1][2].
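The four phases above can be sketched as a single control step. This is a minimal illustration, not the researchers' implementation: the `Scene` type, the function names, the dictionary-shaped input frame, and the keyword-matching rule are all hypothetical stand-ins for the AI toolkit and Relevance algorithm described in the sources.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical summary of what the perception phase has observed."""
    human_present: bool
    keywords: list[str] = field(default_factory=list)
    objects: list[str] = field(default_factory=list)


def perceive(frame: dict) -> Scene:
    """Phase 1: turn raw cues into a scene summary (stand-in for the AI toolkit)."""
    return Scene(
        human_present=frame.get("human_present", False),
        keywords=list(frame.get("keywords", [])),
        objects=list(frame.get("objects", [])),
    )


def trigger_check(scene: Scene) -> bool:
    """Phase 2: check whether something important is happening (here, a human is present)."""
    return scene.human_present


def determine_relevance(scene: Scene) -> list[str]:
    """Phase 3: toy rule that keeps objects whose name contains a heard keyword."""
    return [obj for obj in scene.objects if any(k in obj for k in scene.keywords)]


def plan_actions(relevant: list[str]) -> list[str]:
    """Phase 4: produce one 'offer' action per relevant object."""
    return [f"offer {obj}" for obj in relevant]


def step(frame: dict) -> list[str]:
    """Run one pass: perception always runs; later phases run only if triggered."""
    scene = perceive(frame)
    if not trigger_check(scene):
        return []
    return plan_actions(determine_relevance(scene))
```

In this sketch, calling `step` on a frame with no human returns an empty action list, which mirrors the description of the toolkit running in the background until a trigger check detects a person.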

AI Toolkit and Relevance Algorithm

The system uses an AI toolkit that can include a large language model (LLM) that processes audio conversations to identify keywords and phrases, along with algorithms that detect and classify objects, humans, physical actions, and task objectives. The researchers developed an algorithm that takes in the toolkit's real-time predictions and determines first the classes of objects most likely to be relevant to the human's objective, then the most relevant elements within those classes [1][2].
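The sources describe relevance as a two-stage filter: first pick the object classes most likely to matter for the objective, then rank individual elements within those classes, for example by closeness to the person. A minimal sketch of that idea follows. The probability table, the 0.5 threshold, the `Item` type, and the use of straight-line distance are assumptions made for illustration and do not come from the paper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    cls: str                       # object class, e.g. "cups"
    position: tuple[float, float]  # hypothetical table coordinates


# Hypothetical class-relevance probabilities for one objective (illustrative values only).
CLASS_RELEVANCE = {
    "making coffee": {"cups": 0.9, "creamers": 0.8, "fruits": 0.1, "snacks": 0.2},
}


def relevant_classes(objective: str, threshold: float = 0.5) -> set[str]:
    """Stage 1: keep classes whose relevance probability meets the threshold."""
    scores = CLASS_RELEVANCE.get(objective, {})
    return {cls for cls, p in scores.items() if p >= threshold}


def rank_elements(items: list[Item], objective: str,
                  human_pos: tuple[float, float],
                  threshold: float = 0.5) -> list[Item]:
    """Stage 2: within relevant classes, order elements from nearest to farthest."""
    keep = relevant_classes(objective, threshold)
    candidates = [item for item in items if item.cls in keep]
    return sorted(candidates, key=lambda item: math.dist(item.position, human_pos))
```

With a near cup, a far cup, a creamer, and an apple on the table, this sketch drops the apple at the class stage and places the near cup first at the element stage, matching the cup example in the sources.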

Impressive Performance in Experiments

The team demonstrated the system's effectiveness through experiments simulating a conference breakfast buffet. The robot, a robotic arm equipped with a microphone and camera, predicted human objectives with 90% accuracy and identified relevant objects with 96% accuracy. The system also improved safety, reducing the number of collisions by more than 60% compared with carrying out the same tasks without the method [1][2].

Potential Applications and Future Development

Professor Youcef-Toumi and his team are exploring applications for the "Relevance" system in smart manufacturing and warehouse settings. They envision robots working alongside humans and assisting them intuitively, without having to ask so many questions about what they need [1][2].

The researchers, including graduate students Xiaotong Zhang and Dingcheng Huang, will present their findings at the IEEE International Conference on Robotics and Automation (ICRA) in May 2025 [1][2].

As robotics continues to advance, systems like "Relevance" promise to make human-robot interactions more natural, efficient, and safe, potentially revolutionizing various industries and daily life scenarios.
