MobilePoser: Revolutionary AI-Powered Motion Capture App for Smartphones

3 Sources


[1]

New app performs real-time, full-body motion capture with a smartphone

Northwestern University engineers have developed a new system for full-body motion capture -- and it doesn't require specialized rooms, expensive equipment, bulky cameras or an array of sensors. Called MobilePoser, the new system leverages sensors already embedded within consumer mobile devices, including smartphones, smart watches and wireless earbuds. Using a combination of sensor data, machine learning and physics, MobilePoser accurately tracks a person's full-body pose and global translation in space in real time.

"Running in real time on mobile devices, MobilePoser achieves state-of-the-art accuracy through advanced machine learning and physics-based optimization, unlocking new possibilities in gaming, fitness and indoor navigation without needing specialized equipment," said Northwestern's Karan Ahuja, who led the study. "This technology marks a significant leap toward mobile motion capture, making immersive experiences more accessible and opening doors for innovative applications across various industries."

Ahuja's team will unveil MobilePoser on Oct. 15 at the 2024 ACM Symposium on User Interface Software and Technology in Pittsburgh. "MobilePoser: Real-time full-body pose estimation and 3D human translation from IMUs in mobile consumer devices" will be presented as part of a session on "Poses as Input."

An expert in human-computer interaction, Ahuja is the Lisa Wissner-Slivka and Benjamin Slivka Assistant Professor of Computer Science at Northwestern's McCormick School of Engineering, where he directs the Sensing, Perception, Interactive Computing and Experience (SPICE) Lab.

Limitations of current systems

Most movie buffs are familiar with motion-capture techniques, which are often revealed in behind-the-scenes footage. To create CGI characters -- like Gollum in "Lord of the Rings" or the Na'vi in "Avatar" -- actors wear form-fitting suits covered in sensors, as they prowl around specialized rooms.
A computer captures the sensor data and then displays the actor's movements and subtle expressions.

"This is the gold standard of motion capture, but it costs upward of $100,000 to run that setup," Ahuja said. "We wanted to develop an accessible, democratized version that basically anyone can use with equipment they already have."

Other motion-sensing systems, such as Microsoft Kinect, rely on stationary cameras that view body movements. If a person is within the camera's field of view, these systems work well. But they are impractical for mobile or on-the-go applications.

Predicting poses

To overcome these limitations, Ahuja's team turned to inertial measurement units (IMUs), which use a combination of sensors -- accelerometers, gyroscopes and magnetometers -- to measure a body's movement and orientation. These sensors already reside within smartphones and other devices, but their fidelity is too low for accurate motion-capture applications. To enhance their performance, Ahuja's team added a custom-built, multi-stage artificial intelligence (AI) algorithm, which they trained using a large, publicly available dataset of synthesized IMU measurements generated from high-quality motion capture data.

With the sensor data, MobilePoser gains information about acceleration and body orientation. Then, it feeds this data through an AI algorithm, which estimates joint positions and joint rotations, walking speed and direction, and contact between the user's feet and the ground. Finally, MobilePoser uses a physics-based optimizer to refine the predicted movements to ensure they match real-life body movements. In real life, for example, joints cannot bend backward, and a head cannot rotate 360 degrees. The physics optimizer ensures that captured motions also cannot move in physically impossible ways.

The resulting system has a tracking error of just 8 to 10 centimeters.
For comparison, the Microsoft Kinect has a tracking error of 4 to 5 centimeters, assuming the user stays within the camera's field of view. With MobilePoser, the user has freedom to roam.

"The accuracy is better when a person is wearing more than one device, such as a smartwatch on their wrist plus a smartphone in their pocket," Ahuja said. "But a key part of the system is that it's adaptive. Even if you don't have your watch one day and only have your phone, it can adapt to figure out your full-body pose."

Potential use cases

While MobilePoser could give gamers more immersive experiences, the new app also presents new possibilities for health and fitness. It goes beyond simply counting steps to enable the user to view their full-body posture, so they can ensure their form is correct when exercising. The new app could also help physicians analyze patients' mobility, activity level and gait. Ahuja also imagines the technology could be used for indoor navigation -- a current weakness for GPS, which only works outdoors.

"Right now, physicians track patient mobility with a step counter," Ahuja said. "That's kind of sad, right? Our phones can calculate the temperature in Rome. They know more about the outside world than about our own bodies. We would like phones to become more than just intelligent step counters. A phone should be able to detect different activities, determine your poses and be a more proactive assistant."

To encourage other researchers to build upon this work, Ahuja's team has released its pre-trained models, data pre-processing scripts and model training code as open-source software. Ahuja also says the app will soon be available for iPhone, AirPods and Apple Watch.

[2]

New app performs real-time, full-body motion capture with a smartphone

Northwestern University engineers have developed a new system for full-body motion capture -- and it doesn't require specialized rooms, expensive equipment, bulky cameras or an array of sensors. Instead, it requires a simple mobile device. Called MobilePoser, the new system leverages sensors already embedded within consumer mobile devices, including smartphones, smart watches and wireless earbuds. Using a combination of sensor data, machine learning and physics, MobilePoser accurately tracks a person's full-body pose and global translation in space in real time.

"Running in real time on mobile devices, MobilePoser achieves state-of-the-art accuracy through advanced machine learning and physics-based optimization, unlocking new possibilities in gaming, fitness and indoor navigation without needing specialized equipment," said Northwestern's Karan Ahuja, who led the study. "This technology marks a significant leap toward mobile motion capture, making immersive experiences more accessible and opening doors for innovative applications across various industries."

Ahuja's team will unveil MobilePoser on Oct. 15 at the 2024 ACM Symposium on User Interface Software and Technology in Pittsburgh. "MobilePoser: Real-time full-body pose estimation and 3D human translation from IMUs in mobile consumer devices" will be presented as part of a session on "Poses as Input."

An expert in human-computer interaction, Ahuja is the Lisa Wissner-Slivka and Benjamin Slivka Assistant Professor of Computer Science at Northwestern's McCormick School of Engineering, where he directs the Sensing, Perception, Interactive Computing and Experience (SPICE) Lab.

Limitations of current systems

Most movie buffs are familiar with motion-capture techniques, which are often revealed in behind-the-scenes footage. To create CGI characters -- like Gollum in "Lord of the Rings" or the Na'vi in "Avatar" -- actors wear form-fitting suits covered in sensors, as they prowl around specialized rooms.
A computer captures the sensor data and then displays the actor's movements and subtle expressions.

"This is the gold standard of motion capture, but it costs upward of $100,000 to run that setup," Ahuja said. "We wanted to develop an accessible, democratized version that basically anyone can use with equipment they already have."

Other motion-sensing systems, such as Microsoft Kinect, rely on stationary cameras that view body movements. If a person is within the camera's field of view, these systems work well. But they are impractical for mobile or on-the-go applications.

Predicting poses

To overcome these limitations, Ahuja's team turned to inertial measurement units (IMUs), which use a combination of sensors -- accelerometers, gyroscopes and magnetometers -- to measure a body's movement and orientation. These sensors already reside within smartphones and other devices, but their fidelity is too low for accurate motion-capture applications. To enhance their performance, Ahuja's team added a custom-built, multi-stage artificial intelligence (AI) algorithm, which they trained using a large, publicly available dataset of synthesized IMU measurements generated from high-quality motion capture data.

With the sensor data, MobilePoser gains information about acceleration and body orientation. Then, it feeds this data through an AI algorithm, which estimates joint positions and joint rotations, walking speed and direction, and contact between the user's feet and the ground. Finally, MobilePoser uses a physics-based optimizer to refine the predicted movements to ensure they match real-life body movements. In real life, for example, joints cannot bend backward, and a head cannot rotate 360 degrees. The physics optimizer ensures that captured motions also cannot move in physically impossible ways.

The resulting system has a tracking error of just 8 to 10 centimeters.
For comparison, the Microsoft Kinect has a tracking error of 4 to 5 centimeters, assuming the user stays within the camera's field of view. With MobilePoser, the user has freedom to roam.

"The accuracy is better when a person is wearing more than one device, such as a smartwatch on their wrist plus a smartphone in their pocket," Ahuja said. "But a key part of the system is that it's adaptive. Even if you don't have your watch one day and only have your phone, it can adapt to figure out your full-body pose."

Potential use cases

While MobilePoser could give gamers more immersive experiences, the new app also presents new possibilities for health and fitness. It goes beyond simply counting steps to enable the user to view their full-body posture, so they can ensure their form is correct when exercising. The new app could also help physicians analyze patients' mobility, activity level and gait. Ahuja also imagines the technology could be used for indoor navigation -- a current weakness for GPS, which only works outdoors.

"Right now, physicians track patient mobility with a step counter," Ahuja said. "That's kind of sad, right? Our phones can calculate the temperature in Rome. They know more about the outside world than about our own bodies. We would like phones to become more than just intelligent step counters. A phone should be able to detect different activities, determine your poses and be a more proactive assistant."

To encourage other researchers to build upon this work, Ahuja's team has released its pre-trained models, data pre-processing scripts and model training code as open-source software. Ahuja also says the app will soon be available for iPhone, AirPods and Apple Watch.

[3]

A new system for real-time full-body motion capture using consumer devices

Northwestern University, Oct 16 2024

Northwestern University engineers have developed a new system for full-body motion capture -- and it doesn't require specialized rooms, expensive equipment, bulky cameras or an array of sensors. Instead, it requires a simple mobile device. Called MobilePoser, the new system leverages sensors already embedded within consumer mobile devices, including smartphones, smart watches and wireless earbuds. Using a combination of sensor data, machine learning and physics, MobilePoser accurately tracks a person's full-body pose and global translation in space in real time.

"Running in real time on mobile devices, MobilePoser achieves state-of-the-art accuracy through advanced machine learning and physics-based optimization, unlocking new possibilities in gaming, fitness and indoor navigation without needing specialized equipment," said Northwestern's Karan Ahuja, who led the study. "This technology marks a significant leap toward mobile motion capture, making immersive experiences more accessible and opening doors for innovative applications across various industries."

Ahuja's team will unveil MobilePoser on Oct. 15 at the 2024 ACM Symposium on User Interface Software and Technology in Pittsburgh. "MobilePoser: Real-time full-body pose estimation and 3D human translation from IMUs in mobile consumer devices" will be presented as part of a session on "Poses as Input."

An expert in human-computer interaction, Ahuja is the Lisa Wissner-Slivka and Benjamin Slivka Assistant Professor of Computer Science at Northwestern's McCormick School of Engineering, where he directs the Sensing, Perception, Interactive Computing and Experience (SPICE) Lab.

Limitations of current systems

Most movie buffs are familiar with motion-capture techniques, which are often revealed in behind-the-scenes footage.
To create CGI characters -- like Gollum in "Lord of the Rings" or the Na'vi in "Avatar" -- actors wear form-fitting suits covered in sensors, as they prowl around specialized rooms. A computer captures the sensor data and then displays the actor's movements and subtle expressions.

"This is the gold standard of motion capture, but it costs upward of $100,000 to run that setup," Ahuja said. "We wanted to develop an accessible, democratized version that basically anyone can use with equipment they already have."

Other motion-sensing systems, such as Microsoft Kinect, rely on stationary cameras that view body movements. If a person is within the camera's field of view, these systems work well. But they are impractical for mobile or on-the-go applications.

Predicting poses

To overcome these limitations, Ahuja's team turned to inertial measurement units (IMUs), which use a combination of sensors -- accelerometers, gyroscopes and magnetometers -- to measure a body's movement and orientation. These sensors already reside within smartphones and other devices, but their fidelity is too low for accurate motion-capture applications. To enhance their performance, Ahuja's team added a custom-built, multi-stage artificial intelligence (AI) algorithm, which they trained using a large, publicly available dataset of synthesized IMU measurements generated from high-quality motion capture data.

With the sensor data, MobilePoser gains information about acceleration and body orientation. Then, it feeds this data through an AI algorithm, which estimates joint positions and joint rotations, walking speed and direction, and contact between the user's feet and the ground. Finally, MobilePoser uses a physics-based optimizer to refine the predicted movements to ensure they match real-life body movements. In real life, for example, joints cannot bend backward, and a head cannot rotate 360 degrees.
The physics optimizer ensures that captured motions also cannot move in physically impossible ways.

The resulting system has a tracking error of just 8 to 10 centimeters. For comparison, the Microsoft Kinect has a tracking error of 4 to 5 centimeters, assuming the user stays within the camera's field of view. With MobilePoser, the user has freedom to roam.

"The accuracy is better when a person is wearing more than one device, such as a smartwatch on their wrist plus a smartphone in their pocket," Ahuja said. "But a key part of the system is that it's adaptive. Even if you don't have your watch one day and only have your phone, it can adapt to figure out your full-body pose."

Potential use cases

While MobilePoser could give gamers more immersive experiences, the new app also presents new possibilities for health and fitness. It goes beyond simply counting steps to enable the user to view their full-body posture, so they can ensure their form is correct when exercising. The new app could also help physicians analyze patients' mobility, activity level and gait. Ahuja also imagines the technology could be used for indoor navigation -- a current weakness for GPS, which only works outdoors.

"Right now, physicians track patient mobility with a step counter," Ahuja said. "That's kind of sad, right? Our phones can calculate the temperature in Rome. They know more about the outside world than about our own bodies. We would like phones to become more than just intelligent step counters. A phone should be able to detect different activities, determine your poses and be a more proactive assistant."

To encourage other researchers to build upon this work, Ahuja's team has released its pre-trained models, data pre-processing scripts and model training code as open-source software. Ahuja also says the app will soon be available for iPhone, AirPods and Apple Watch.

Source: Northwestern University


Northwestern University engineers develop MobilePoser, a groundbreaking app that performs real-time, full-body motion capture using everyday mobile devices, potentially revolutionizing gaming, fitness, and healthcare applications.

Northwestern Engineers Unveil MobilePoser: A Game-Changing Motion Capture App

Engineers at Northwestern University have developed MobilePoser, a system for full-body motion capture that runs on everyday mobile devices. Because it requires no specialized rooms, expensive equipment or bulky cameras, the technology could make motion capture far more accessible and affordable, with potential uses in industries from gaming to healthcare [1].

How MobilePoser Works

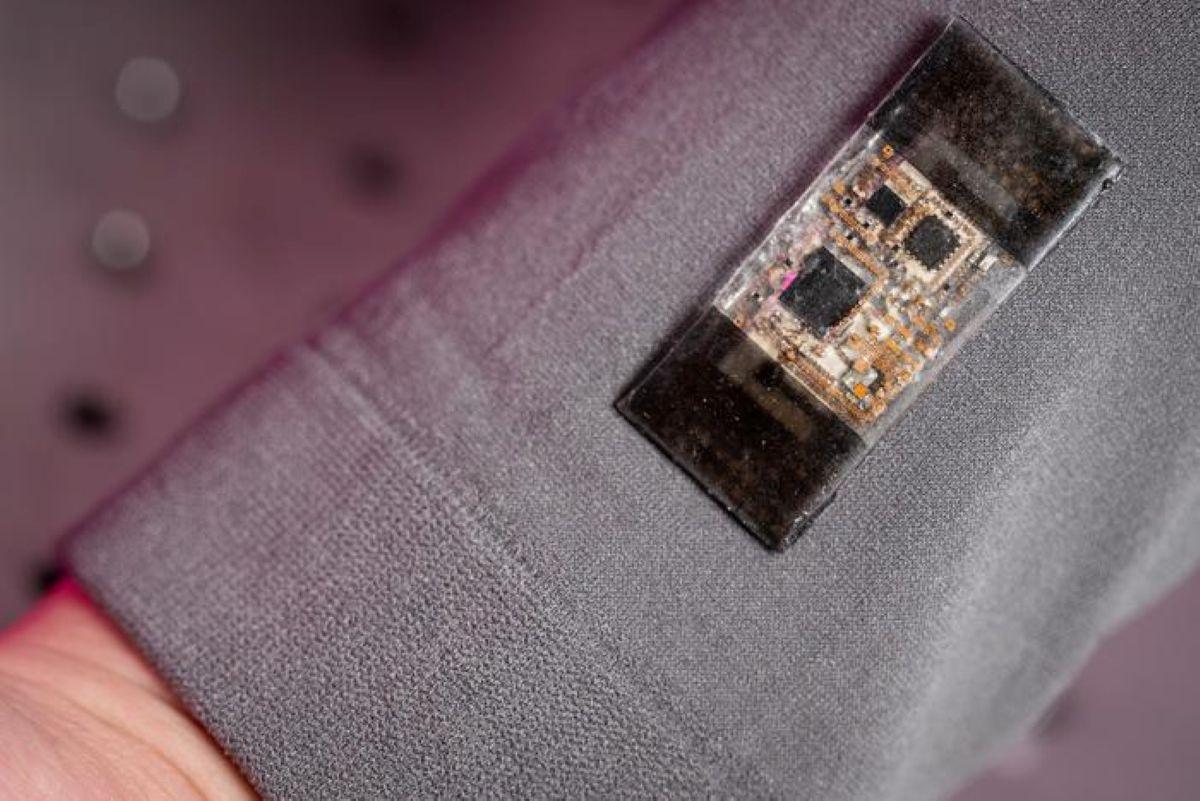

MobilePoser leverages inertial measurement units (IMUs) already present in consumer devices such as smartphones, smartwatches, and wireless earbuds. The system combines this sensor data with machine learning and physics-based optimization to track a person's full-body pose and movement through space in real time [2].
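The sources describe IMUs as combining accelerometers, gyroscopes and magnetometers to measure orientation, but they only say that MobilePoser gains acceleration and orientation information from them. As a rough illustration of that kind of sensor fusion (not MobilePoser's own code), the sketch below applies a simple single-axis complementary filter: the gyroscope gives smooth but drifting angle updates, the accelerometer's view of gravity gives a noisy but drift-free reference, and blending the two keeps the estimate stable. The function name, the `alpha` default and the two-component accelerometer model are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def complementary_filter(
    gyro_rates: Sequence[float],
    accels: Sequence[Tuple[float, float]],
    dt: float,
    alpha: float = 0.98,  # hypothetical weight on the integrated gyro estimate
) -> List[float]:
    """Estimate a single tilt angle (radians) from gyro and accelerometer samples.

    gyro_rates: angular velocity about one axis for each sample (rad/s)
    accels: (a_x, a_z) accelerometer readings for each sample (m/s^2)
    dt: sample period (s)
    """
    if not accels or len(gyro_rates) != len(accels):
        raise ValueError("need matching, non-empty gyro and accelerometer streams")
    # Initialise from the direction of gravity seen by the accelerometer.
    angle = math.atan2(accels[0][0], accels[0][1])
    estimates = []
    for rate, (a_x, a_z) in zip(gyro_rates, accels):
        gyro_angle = angle + rate * dt       # smooth, but drifts if the gyro is biased
        accel_angle = math.atan2(a_x, a_z)   # noisy, but anchored to gravity
        angle = alpha * gyro_angle + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates
```

For a device held still at a 30 degree tilt with a constant gyro bias of 0.1 rad/s, sampled at 100 Hz for 10 seconds, pure gyro integration would drift by about 1 radian, while this blended estimate settles within about 0.05 radian of the true tilt.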

Key components of the technology include:

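- Acceleration and orientation data collected from the devices' IMUs

- A custom-built, multi-stage AI algorithm that estimates joint positions and rotations, walking speed and direction, and foot-ground contact

- A physics-based optimizer that refines the predicted movements so they stay physically possible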
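Per the sources, the physics-based optimizer's job is to rule out impossible motion, such as a knee bending backward or a head turning 360 degrees. The sketch below shows only the simplest form of that idea, clamping each predicted joint angle into an anatomical range. It is a stand-in, not MobilePoser's optimizer, which the sources say refines the predicted movements as a whole. The joint names, angle ranges and the `PoseEstimate` structure are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical single-axis limits in degrees; real skeletons use multi-axis limits.
JOINT_LIMITS_DEG: Dict[str, Tuple[float, float]] = {
    "knee_flexion": (0.0, 150.0),   # a knee cannot bend backward
    "elbow_flexion": (0.0, 145.0),
    "neck_yaw": (-80.0, 80.0),      # a head cannot rotate 360 degrees
}


@dataclass
class PoseEstimate:
    """Hypothetical per-frame output of the learned stages."""
    joint_angles_deg: Dict[str, float]   # predicted joint rotations
    root_velocity: Tuple[float, float]   # predicted walking velocity on the floor (m/s)
    foot_contact: Tuple[float, float]    # (left, right) foot-ground contact probability


def enforce_joint_limits(pose: PoseEstimate) -> PoseEstimate:
    """Project every predicted angle into its feasible range; other fields pass through."""
    refined = {}
    for joint, angle in pose.joint_angles_deg.items():
        lo, hi = JOINT_LIMITS_DEG.get(joint, (-180.0, 180.0))
        refined[joint] = min(max(angle, lo), hi)
    return PoseEstimate(refined, pose.root_velocity, pose.foot_contact)
```

A clamp treats each joint independently and returns a new estimate rather than mutating the input. An optimization-based refiner can instead balance constraint satisfaction against staying close to the network's prediction across the whole body.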

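The resulting system achieves a tracking error of 8 to 10 centimeters. The stationary, camera-based Microsoft Kinect is more accurate, at 4 to 5 centimeters, but only while the user stays within the camera's field of view; MobilePoser lets users roam freely [3].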
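The sources do not say exactly how the 8 to 10 centimeter figure is computed. A common metric in pose estimation is mean per-joint position error: the average straight-line distance between each predicted joint and its ground-truth position. The sketch below computes it under that assumption; the function name and the metres-in, centimetres-out convention are illustrative choices.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def mean_joint_error_cm(predicted: Sequence[Point3], ground_truth: Sequence[Point3]) -> float:
    """Mean Euclidean distance between matching joints.

    Positions are in metres; the result is in centimetres.
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("need the same, non-zero number of joints in both poses")
    total_m = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return 100.0 * total_m / len(predicted)
```

For example, a two-joint pose in which each predicted joint sits 10 cm from its true position yields 10.0, the upper end of the range reported for MobilePoser.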

Advantages Over Traditional Motion Capture

Traditional motion capture systems, such as those used in film production, can cost upwards of $100,000 and require specialized equipment and environments. MobilePoser aims to democratize this technology, making it accessible to anyone with a smartphone or other mobile devices [1].

Unlike stationary camera-based systems, MobilePoser allows users the freedom to move without being confined to a specific area. The system's adaptive nature also means it can function with varying combinations of devices, from a single smartphone to multiple wearables, with accuracy improving as more devices are worn [2].
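The sources say the system adapts to whichever devices are available but do not describe how. One plausible approach, assumed here rather than taken from the sources, is to give every possible device location a fixed slot in the model's input and fill the slots of absent devices with zeros, so that a model trained on randomly masked combinations can learn to work with any subset. The slot names, the seven features per device and `build_model_input` are hypothetical.

```python
from typing import Dict, List, Sequence

# Hypothetical fixed slot order, so the model input always has the same length.
DEVICE_SLOTS = ["left_wrist", "right_wrist", "left_pocket", "right_pocket", "head"]
FEATURES_PER_DEVICE = 7  # assumed: 3 acceleration values + 4 orientation quaternion values


def build_model_input(readings: Dict[str, Sequence[float]]) -> List[float]:
    """Concatenate per-device features in slot order, zero-filling devices not worn."""
    unknown = set(readings) - set(DEVICE_SLOTS)
    if unknown:
        raise KeyError(f"unknown device slots: {sorted(unknown)}")
    vector: List[float] = []
    for slot in DEVICE_SLOTS:
        features = readings.get(slot)
        if features is None:
            vector.extend([0.0] * FEATURES_PER_DEVICE)  # e.g. the watch was left at home
        elif len(features) != FEATURES_PER_DEVICE:
            raise ValueError(f"{slot}: expected {FEATURES_PER_DEVICE} values")
        else:
            vector.extend(float(x) for x in features)
    return vector
```

With only a phone in the right pocket, the 35-value input carries the phone's readings in one slot and zeros everywhere else; adding a watch fills a second slot without changing the input's shape.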


Potential Applications

MobilePoser's versatility opens up a wide range of potential applications:

- Gaming: Enhanced immersive experiences for players

- Fitness: Accurate tracking of full-body posture for improved exercise form

- Healthcare: Assisting physicians in analyzing patient mobility, activity levels, and gait

- Indoor Navigation: Potential solution for GPS limitations in indoor spaces
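The sources say MobilePoser's AI estimates walking speed and direction in addition to the pose. Integrating such estimates over time (dead reckoning) yields a path through space, which is the basic idea behind using a system like this for indoor navigation where GPS falls short. The sketch below assumes per-frame speed in metres per second and heading in radians on the floor plane; `integrate_translation` is a hypothetical name. Small per-frame errors accumulate with this approach, so a practical system needs some way to limit drift.

```python
import math
from typing import Iterable, List, Tuple


def integrate_translation(
    steps: Iterable[Tuple[float, float]],
    dt: float,
    start: Tuple[float, float] = (0.0, 0.0),
) -> List[Tuple[float, float]]:
    """Dead-reckon a 2D floor-plane path from per-frame (speed m/s, heading rad)."""
    x, y = start
    path = [(x, y)]
    for speed, heading in steps:
        # Advance along the current heading for one frame.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        path.append((x, y))
    return path
```

Walking at 1 m/s along the x axis for one second and then along the y axis for one second (10 frames each at `dt=0.1`) ends about 1 m along each axis from the start.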

Future Developments and Availability

To foster further innovation, the research team has released their pre-trained models, data pre-processing scripts, and model training code as open-source software. The app is expected to be available soon for iPhone, AirPods, and Apple Watch [3].

As mobile devices continue to evolve, technologies like MobilePoser could transform how we interact with our devices and monitor our physical activities. This development represents a significant step towards more intelligent and proactive mobile assistants that can understand and respond to human movement in real time.

Summarized by Navi
