New AI browsers run local LLMs on your device, even without an internet connection

2 Sources

[1]

This browser lets you use AI locally on your phone, even offline - here's how

Puma Browser is a free mobile AI-centric web browser. Puma Browser allows you to make use of Local AI. You can select from several LLMs, ranging in size and scope.

On the rare occasion that I use AI, I always opt for a local version. Most often, that comes in the form of Ollama installed on a desktop or laptop. I have been leery of using cloud-based AI for some time now for several reasons: Those three reasons alone have me always opting for local AI.

That's all fine and good on the desktop, but what about mobile devices? Is there a way to use AI in a browser that doesn't depend on a third party? There is now, thanks to Puma Browser. It's available for both Android and iOS and can use local LLMs such as Qwen 3 1.5b, Qwen 3 4B, LFM2 1.2, LFM2 700M, Google Gemma 3n E2B, and more.

I installed Puma Browser on my Pixel 9 Pro and then downloaded Qwen 3 1.5b to see how well it fared. Given I've used local AI plenty of times (on various hardware), I know how it can be a drain on system resources, so I assumed local AI on a phone would be dreadfully slow. I also know how much storage those LLMs can take up, so I downloaded the LLM with a light bit of trepidation (unsure if uninstalling Puma Browser would also remove the LLM).

Keep in mind that local AI on Puma Browser is still in the experimental phase, so it's going to have issues. As well, downloading an LLM will take some time. You should also make sure that you are on a wireless network; otherwise, the download will gobble up your data plan and will take forever. Even on wireless, the download of Qwen 3 1.5b took over 10 minutes.

But how does it perform? I was shocked. After downloading an LLM, I ran my usual test query: What is Linux? To my surprise, Puma Browser responded immediately with its answer. Honestly, the local LLM on my Pixel 9 Pro performed as well as Ollama on my System76 Thelio desktop PC (running Pop!_OS) and my MacBook Pro. I couldn't believe it.

To verify Puma was actually using the local LLM, I disabled both internet and wireless connectivity on my Pixel 9 Pro and ran a different query. Again, the AI responded with an answer and did so very quickly. Color me impressed.

I had very low expectations of this browser and am so glad my assumptions were proved to be wrong. I expected slow performance or even no performance via local LLM, but Puma Browser delivered.

First off, it means you can use AI on your phone, even when you don't have a network connection. It also means you won't be placing even more strain on already strained power grids. Both of those are big pluses in my book.

The only thing you have to consider (as I mentioned earlier) is that LLMs can consume a lot of storage on your phone. If you're someone who tends to regularly run out of space and has to delete photos and videos to make room, using local AI is not really an option for you.

You need plenty of storage on your device, especially if you plan on switching between LLMs for different purposes. I've downloaded LLMs on my desktop that were nearly 20GB in size, so you want to be very careful about which LLM you use. For example, Qwen 3 1.5b is nearly 6GB in size. Do you have space for that?

If you'd like to give local AI a try on your phone, Puma Browser is a fantastic option. It's fast, easy to use, and allows you to select from several LLMs. Give this new browser a go and see if it doesn't become your default for mobile AI queries.

[2]

Move over Perplexity: BrowserAI is the agentic browser that uses your local LLM

If you've been keeping an eye on the sheer number of AI-based agentic browsers releasing this year, you know where the browser market is heading. Between Perplexity, Arc and a plethora of extensions that promise to make browsing easier by pulling answers from the internet, shopping on your behalf, summarizing pages or helping you research, there's a lot happening in the space. All these browsers, of course, depend on cloud-hosted models and remote servers with questionable data privacy policies.

BrowserAI goes in the opposite direction. It brings the power of large language models right into your browser tab, except, this time, everything runs on your computer. The tool makes use of WebGPU acceleration to give you a speedy interface, privacy and complete control without relying on external systems.

As someone who has been following the AI browser wars right from the beginning, this move is particularly exciting. BrowserAI treats the browser like a platform for intelligent tools rather than just showing you a simplified version of a web page through summarization. Here's what BrowserAI offers, what makes it stand out, and how it can fit into your workflow.

What BrowserAI brings to your browser

Local AI models, flexibility, and features that work like a full AI engine

BrowserAI is built around a pretty simple concept. It lets you load up an LLM on your own machine and the browser takes over from there. It can work with pretty much any open-source LLM like Llama 3.2, Gemma, or DeepSeek. Since the models run locally, nothing you feed into it leaves your personal device. Once you've downloaded the model, you can work with it offline, making it a great tool for remote work or while traveling, perhaps on a flight.

This is a pretty dramatic shift from most AI browsers that come to a screeching halt with limited internet connectivity. There's no data sharing, no logging, and no dependency on a cloud server. Nor are you limited by rate caps. So you have full end-to-end control over the pipeline.

BrowserAI goes a step further by letting you swap engines depending on what you are doing. You could run a lightweight model for basic tasks like, say, grammar checking. Or you could switch over to DeepSeek Coder for code explainers. You've got options. The tool adapts to whatever you want to work with without forcing you into a single model.

Of course, BrowserAI isn't just about running models. It comes with a range of features that make it work more like a traditional AI platform within the browser. It supports structured responses, so you can extract clean data without working with messy text. There's support for text-to-speech and speech-to-text, which lets you convert speech to text or have it read content back to you. It stores your conversations locally, of course, and you can build more advanced workflows on top of it.

How BrowserAI fits into your daily workflow

A great fit for developers, students, and tinkerers

The more time you spend with BrowserAI, the more you can see how it can slot into different kinds of work without the overhead or disadvantages of a cloud-based model. All the APIs are exposed, so you can build a web page including whatever tools you need for your own use case. For the sake of testing, I've only spun up a basic web page that lets me interact with the model and get immediate responses. But for developers, it opens up options like giving you an easy way to try ideas without spinning up servers. You can use it to perform all the usual use cases, like summarizing texts, use it as a small assistant that can answer queries, or have it give you quick code information, all without worrying about accidental data leaks.

BrowserAI even lets you build browser-based tools that rely entirely on local models, which opens the door for private AI features in simple websites and local tools. If you are the kind of person who likes experimenting with ideas, you can use BrowserAI to build small helper agents or set up quick automations, all of which run locally. Think of an extension that can invoke a local AI to clean up your notes, organize content you read online, generate summaries, and more. The sky is the limit. Those benefits extend to teams and organizations, too, who can further build internal tools on top of BrowserAI while retaining benefits such as privacy and local control.

The smarter way to use AI in your browser

I've used pretty much every AI browser that's been released this year, and I get how they try to weave AI directly into your tabs and workflow. But that's not for me. I'm not open to the idea of an LLM learning from everything I do on the internet. BrowserAI works differently. It turns your browser into a small AI environment powered by the hardware that you already own and gives you privacy, full control, flexibility, and a powerful feature set that runs entirely locally. Whether you're a developer trying out new features, a privacy-conscious user, or just someone looking to experiment with AI running in your browser, the locally-hosted open-source BrowserAI enables all of that and more.

BrowserAI lets you run AI models within your browser environment using WebGPU.

Two new AI browser solutions are challenging cloud-based AI dominance by bringing local LLM capabilities directly to your devices. Puma Browser enables offline AI on mobile phones, while BrowserAI turns desktop browsers into private AI environments. Both eliminate cloud dependency and data privacy concerns while delivering surprisingly fast performance.

AI Browser Solutions Shift Processing Power to Your Device

A new wave of AI browser technology is challenging the cloud-based model that has dominated artificial intelligence applications. Puma Browser and BrowserAI represent a fundamental shift in how users interact with AI, enabling them to run large language models locally on their own hardware rather than relying on remote servers [1][2]. This approach addresses growing concerns about data privacy, internet dependency, and the environmental impact of cloud computing. For users who want AI without an internet connection, these tools offer functionality that has been hard to come by on mobile devices and demanding on desktops.

Puma Browser brings local LLM capabilities to both Android and iOS devices, supporting models like Qwen 3 1.5b, Qwen 3 4B, LFM2 1.2, LFM2 700M, and Google Gemma 3n E2B [1]. In the reviewer's testing on a Pixel 9 Pro, the browser responded immediately to queries, even with all network connectivity disabled. The Qwen 3 1.5b model, which requires nearly 6GB of storage, took over 10 minutes to download on a wireless network, but by the reviewer's account it performed as well as Ollama running on a desktop PC and a MacBook Pro.

Source: ZDNet

Private AI Environment Without Cloud Dependency

BrowserAI takes a different approach by transforming desktop browsers into an agentic browser platform powered by WebGPU acceleration [2]. Unlike Perplexity and other cloud-dependent solutions, BrowserAI works with open-source models including Llama 3.2, Gemma, and DeepSeek, all running entirely on user hardware. This architecture ensures nothing leaves the personal device, eliminating data privacy concerns and rate limitations that plague cloud services. Users can swap between lightweight models for basic tasks like grammar checking and more robust options like DeepSeek Coder for technical work.

The platform includes structured response support, text-to-speech and speech-to-text capabilities, and local conversation storage [2]. For developers, all APIs are exposed, enabling custom browser-based tools and workflows without server infrastructure. This opens possibilities for building private helper agents, automating content organization, and creating internal tools that maintain complete data control.

On-Device AI Delivers Performance and Independence

The shift to on-device AI addresses three critical concerns driving adoption: data privacy risks, environmental impact from energy-intensive data centers, and dependency on internet connectivity [1]. Both solutions eliminate the need to transmit sensitive queries to third-party servers, a significant advantage for professionals handling confidential information. The offline AI capability proves particularly valuable for remote work scenarios, travel situations, and areas with unreliable connectivity.

Storage requirements remain the primary constraint for mobile implementations. While desktop users routinely work with LLMs approaching 20GB, mobile users must carefully select models that fit available space. Puma Browser's experimental phase means users should expect occasional issues, though initial testing suggests the technology has matured beyond proof-of-concept stage [1].
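
The storage figures quoted above can be sanity-checked with simple arithmetic. The sketch below estimates the size of a model's weights as parameter count times bits per weight, then checks the result against free space. It is an illustration only: the function names, the 2 GB reserve, and the 8 GB free-space figure are hypothetical, and neither source says what precision Puma Browser's models are stored in. At 32 bits per weight, a 1.5-billion-parameter model works out to about 6 GB, which is consistent with the reported size but does not confirm the format.

```typescript
// Rough estimate of on-disk size for a model's weights.
// Assumption: weights dominate the download; tokenizer files and metadata are ignored.
const BYTES_PER_GB = 1e9; // decimal gigabytes, as storage is usually advertised

function estimateModelSizeGB(parameterCount: number, bitsPerWeight: number): number {
  if (parameterCount <= 0 || bitsPerWeight <= 0) {
    throw new RangeError("parameterCount and bitsPerWeight must be positive");
  }
  // parameters × bits per weight ÷ 8 gives bytes; divide again for gigabytes.
  return (parameterCount * bitsPerWeight) / 8 / BYTES_PER_GB;
}

// Hypothetical helper: keep some space in reserve for photos, apps, and updates.
function fitsOnDevice(modelSizeGB: number, freeSpaceGB: number, reserveGB: number = 2): boolean {
  return modelSizeGB + reserveGB <= freeSpaceGB;
}

const parameterCount = 1.5e9; // the "1.5b" in the model name, read as 1.5 billion
const freeSpaceGB = 8;        // illustrative figure, not from the article

for (const bits of [32, 16, 4]) {
  const sizeGB = estimateModelSizeGB(parameterCount, bits);
  console.log(
    `${bits}-bit weights: ~${sizeGB.toFixed(2)} GB, fits with reserve: ${fitsOnDevice(sizeGB, freeSpaceGB)}`
  );
}
```

The same calculation shows why lower-precision (quantized) weights matter on phones: halving the bits per weight halves the storage needed for the same parameter count.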

What This Means for AI Browser Development

These developments signal a broader trend in browser technology, where AI integration moves beyond simple summarization to become a platform for intelligent tools. The ability to work with local LLMs without external systems gives users, developers, and organizations direct control over their AI workflows. Students can experiment without data concerns, developers can prototype without spinning up servers, and teams can build internal tools while maintaining privacy standards [2].

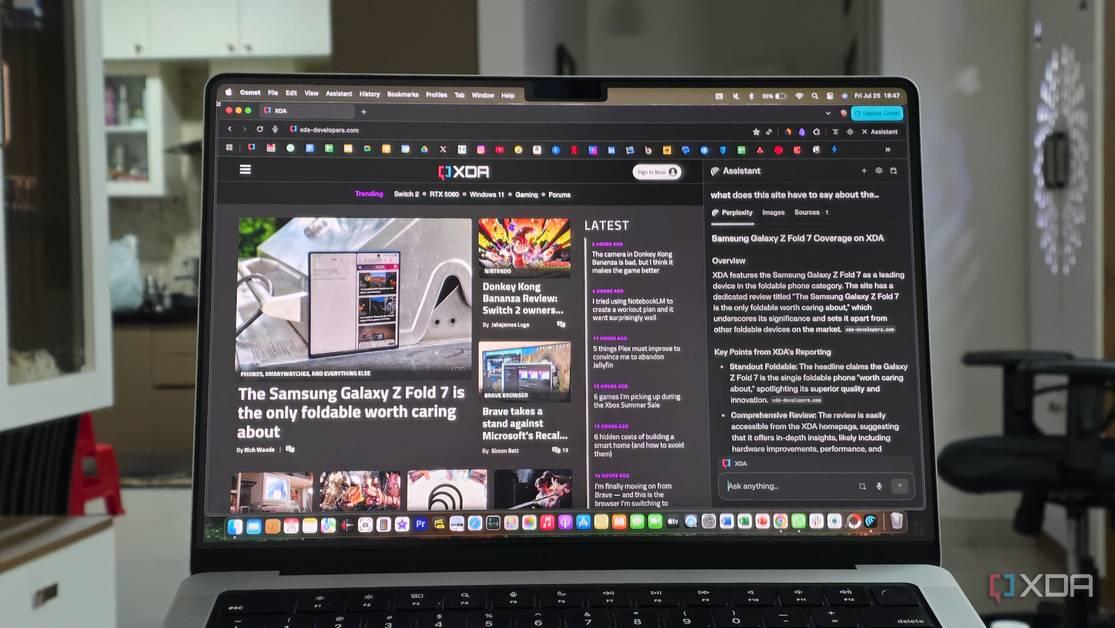

Source: XDA-Developers

As WebGPU support expands and mobile processors grow more capable, the performance gap between local and cloud-based AI is likely to keep narrowing. Watch for increased model optimization specifically targeting mobile hardware, expanded LLM options designed for constrained storage environments, and deeper integration between local AI capabilities and existing browser extensions. For privacy-conscious users, the question is shifting from whether local AI is usable at all to how quickly it will become the preferred option.
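
Because WebGPU support still varies by browser and device, a page that relies on local inference would typically check for it before trying to load a model. The following is a minimal sketch of such a check, not BrowserAI's own code. `navigator.gpu` and `requestAdapter()` are part of the standard WebGPU API; the `hasWebGPU` function and the two small interfaces are hypothetical names, introduced so the sketch compiles without extra type packages and can be exercised outside a browser.

```typescript
// Minimal structural types so the sketch does not depend on WebGPU type definitions.
interface GpuLike {
  requestAdapter(): Promise<unknown>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

// Resolves to true only if the WebGPU entry point exists and an adapter is returned.
// requestAdapter() resolves to null when no suitable GPU adapter is available.
async function hasWebGPU(nav: NavigatorLike): Promise<boolean> {
  if (!nav.gpu) {
    return false; // browser does not expose WebGPU at all
  }
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null && adapter !== undefined;
  } catch {
    return false; // treat any failure as "not available"
  }
}

// In a browser, the call would look something like:
//   hasWebGPU(navigator as unknown as NavigatorLike).then((ok) => { /* load model or show fallback */ });
```

Passing the navigator object in as a parameter, rather than reading the global directly, keeps the check easy to test with stand-in objects.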

Summarized by Navi
