Nvidia CEO Jensen Huang Unveils "Agentic AI" Vision at CES 2025, Predicting Multi-Trillion Dollar Industry Shift

37 Sources

[1]

Jensen Huang Declares the Age of "Agentic AI" at CES 2025 - A Multi-Trillion-Dollar Shift in Work and Industry

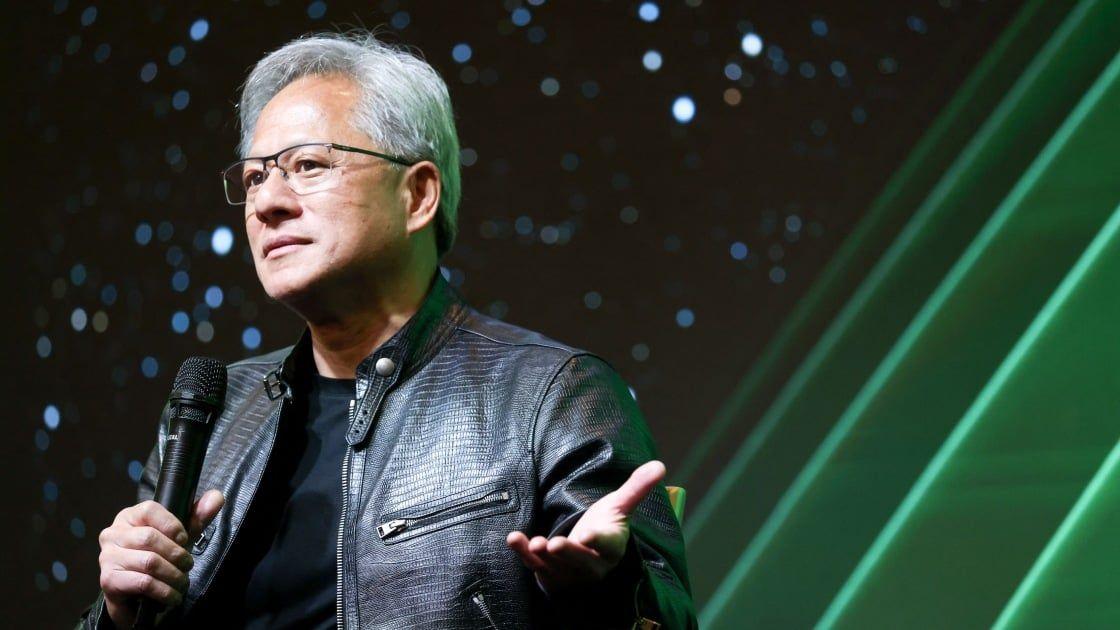

The Consumer Electronics Show (CES) 2025 kicked off with a bang as Nvidia CEO Jensen Huang took the stage to unveil the company's latest innovations. Sporting a flashy jacket he joked was perfect for Vegas, Huang took the audience through Nvidia's past, present and ambitious plans for the future. The spotlight? The rise of Agentic AI. "The age of AI Agentics is here," Huang announced, describing a new wave of artificial intelligence. Unlike generative AI, which creates content and tools, Agentic AI revolves around intelligent agents capable of assisting with tasks across industries. Huang called this shift a "multi-trillion-dollar opportunity," painting a bold picture of AI-driven workflows in medicine, human resources and software engineering. "AI agents are the new digital workforce," he said, predicting a future where every company's IT department will effectively become HR for AI agents. The keynote wasn't all talk. Huang revealed Nvidia's latest GPU lineup, the RTX Blackwell series, which he described as a game-changer. The GeForce RTX 5070 leads the pack, offering the same performance as the previous generation's flagship RTX 4090 but at a jaw-dropping $549 - far below the 4090's $1,599 price tag. "This is impossible without artificial intelligence," Huang explained, crediting AI-driven engineering for the efficiency boost. 
Other models include the RTX 5070 Ti, which costs $749, the midrange RTX 5080, which costs $999 and the flagship RTX 5090, which costs $1,999. With features like RTX Neural Shaders for lifelike rendering and DLSS 4 upscaling technology, these GPUs are designed to handle everything from gaming to complex AI workloads. In a demonstration, DLSS 4 rendered a scene at 238 frames per second, over eight times faster than traditional methods, all while keeping latency to just 34 milliseconds. Nvidia didn't stop there. Huang unveiled Project Digits, a personal AI supercomputer powered by the Grace Blackwell Superchip. It promises a petaflop of computing power, bringing supercomputing capabilities to AI researchers, students and developers right at their desks. Priced starting at $3,000, Project Digits is scheduled for release in May 2025. "AI will be mainstream in every application for every industry," Huang said, describing Project Digits as a tool to empower millions to innovate in the age of AI. Autonomous machines were also a key highlight. Huang introduced "Cosmos," a foundation model designed to accelerate training for AI systems in robotics and autonomous vehicles. Toyota will integrate Nvidia's Orin chips and DriveOS operating system into its next-generation autonomous vehicles to improve their advanced driver assistance systems. Huang boldly predicted that "a trillion miles driven each year will soon be either highly or fully autonomous," calling it the first "multi-trillion-dollar robotics industry."

[2]

Jensen Huang Says Nvidia Is a 'Technology Company,' but It's Really an AI Company

Nvidia and Jensen Huang took over CES 2025 with the RTX 50-series debut, but the hardware is a vehicle for AI ambitions. Nvidia is a "technology company," not a "consumer" or "enterprise" company, as emphasized by CEO Jensen Huang. What does he mean, exactly? Doesn't Nvidia want consumers to spend hundreds or thousands of dollars on the new, expensive RTX 50-series GPUs? Don't they want more companies to buy their AI training chips? Nvidia is the kind of company with a lot of fingers in a lot of pies. To hear Huang tell it, if the crust of those pies is the company's chips, then AI is the filling. "Our technology influence is going to impact the future of consumer platforms," Huang, clad in his typical black jacket and the warm bosom of AI hype, said in a Q&A with reporters a day after his blowout opening CES keynote. But how does a company like Nvidia fund all those epic AI experiments? The H100 AI training chips made Nvidia such a tech powerhouse over the past two years, with a few stumbles along the way. But Amazon and other companies are trying to create alternatives to break Nvidia's near-monopoly. What should happen if competition cuts the spree short? "We're going to respond to customers wherever they are," Huang said. Part of that is helping companies build "agentic AI," AKA multiple AI models able to complete complex tasks. That includes several AI toolkits made to throw a bone to businesses. While the H100 has made Nvidia big, and RTX keeps gamers coming back, it wants its new $3,000 "Project Digits" AI processing hub to open up "a whole new universe" for those who can use it. Who will use it? Nvidia said it's a tool for researchers, scientists, and maybe students -- or at least those who stumble across $3,000 in their cup of $1.50 instant ramen they're eating for dinner for the fifth night in a row. Nvidia made sure you knew about the RTX 5090's 3,352 TOPS of AI performance. 
Then, Huang's company dropped details on several software initiatives, both gaming and non-gaming related. None of those announcements was more confusing than the company's "world foundation" AI models. These models should be able to train on real-life environments, which could be used for helping autonomous vehicles or robots navigate their environment. It's a lot of future tech, and Huang admitted he could have articulated it better to a crowd who had mostly come to see cool new GPUs. "[The world foundation model] understands things like friction, inertia, grabbing, object presence, and elements, geometric and spatial understanding," he said. "You know, the things that children know. They understand the physical world in a way that language models didn't know." Huang opened up CES 2025 on Jan. 6 with a keynote that packed the Michelob Ultra arena in Las Vegas' Mandalay Bay casino. There was certainly a huge portion of gamers who'd come to see the latest RTX 50-series cards in the flesh, but more were there to see how a company as lucrative as Nvidia moves forward. RTX and Project Digits drew hollers and shouts from the crowd. But when Huang spent half his time talking about his world foundation model, the audience didn't seem nearly as enthused. It points to how awkward AI messaging can be, especially for a company that owes much of its popularity to the attentive population of PC gamers. There has been so much talk about AI that it's easy to forget Nvidia was in this game years before ChatGPT came on the scene. Nvidia's in-game AI upscaling tech, DLSS, has been around for close to six years, improving all the time, and it's now one of the best AI upscalers in games, though limited by its exclusivity to Nvidia's cards. It was good before the advent of generative AI. Now, Nvidia promises Transformer models will further enhance upscaling and ray reconstruction. 
To top it off, the touted multi-frame generation could possibly grant four times the performance for 50-series GPUs, at least if the game supports it. That is a boon for those who can afford the new RTX 50-series. The RTX 5090 tops out at $2,000. Yet the gamers who would most benefit from frame gen are those who may only afford a lower-end GPU. Huang declined to offer any hints about an RTX 5050 or 5060, joking, "We announced four cards, and you want more?" The world foundation model is just a prototype, just like much of Nvidia's new AI software on display to the public. The real questions are when it will be ready for primetime, and who will end up using it. Nvidia showed off oddball AI NPCs, in-game chatbots, AI nurses, and an audio generator last year. This year, it wants to bloom with its world foundation model, plus a host of AI "microservices," including a weird animated talking head that's supposed to serve as your PC's always-on assistant. Perhaps some of these will stick. In the cases where Nvidia hopes AI replaces nurses or audio engineers, we hope that doesn't happen. Huang considers Nvidia "a small company" with 32,000 employees worldwide. Yes, that's fewer than half of the staff Meta has, but you can't think of it as small in terms of the market influence for AI training chips. Because of its market position, it holds an outsized influence on the tech industry. The more people using AI, the more people will need to buy its AI-specific GPUs, plus any of its other AI software. If everybody buys their own at-home AI processing chip, they don't have to rely on outside data centers and external chatbots. Nvidia, just like every tech company, just needs to find a use for AI beyond replacing all our jobs.

[3]

Nvidia goes all in on AI agents and humanoid robots at CES

As the AI world races toward next-generation breakthroughs, Nvidia (NVDA) fortified its position with a flood of new chips, software and services designed to keep the industry plugged into its expanding tech ecosystem. Nvidia chief executive Jensen Huang announced a suite of AI tools and updates during the first keynote speech at the Consumer Electronics Show (CES) on Monday, focusing on AI agents -- systems that can complete tasks autonomously. Huang demonstrated these capabilities through animated versions of himself in different outfits, showing how AI agents could handle roles like customer service, coding, and research assistance. "The IT department of every company is going to be the HR department of AI agents in the future," Huang said in the keynote. The chipmaker unveiled AI Blueprints, which will help companies build and deploy these AI agents using technology built on Meta's Llama models. These "knowledge robots," as Nvidia describes them, can analyze large amounts of data, summarize information from videos and PDFs, and take actions based on what they learn. To make this happen, Nvidia partnered with five leading AI companies -- CrewAI, Daily, LangChain, LlamaIndex, and Weights & Biases -- who will help integrate Nvidia's technology into usable tools for businesses. "These AI agents act like 'knowledge robots' that can reason, plan and take action to quickly analyze large quantities of data, summarize and distill real-time insights from video, PDF and other images," Justin Boitano, Nvidia's vice president of enterprise AI software products, said in a statement. Nvidia also made a major push into robotics, unveiling new tools to help companies simulate and deploy robot workforces. The centerpiece is "Mega," a new Omniverse Blueprint that lets companies develop, test, and optimize robot fleets in virtual environments before deploying them in real warehouses and factories. 
"The ChatGPT moment for general robotics is just around the corner," Huang said during his keynote. To back this claim, Nvidia announced a collection of robot foundation models, including new capabilities for generating synthetic motion data for training humanoid robots. The company says these pre-trained models were developed using massive amounts of data, including millions of hours of autonomous driving and drone footage. A key part of this effort is the new Isaac GR00T Blueprint, which helps solve a major challenge in robotics: generating the massive amounts of motion data needed to train humanoid robots. Instead of the expensive and time-consuming process of collecting real-world data, developers can use GR00T to generate large synthetic datasets from just a small number of human motions. "Collecting these extensive, high-quality datasets in the real world is tedious, time-consuming, and often prohibitively expensive," Nvidia said in a press release. The new tools are already attracting major industry players. KION Group, a supply chain solutions company, is working with Accenture (ACN) to use Nvidia's Mega blueprint to optimize warehouse operations that involve both human workers and robots. Nvidia's push into the physical world didn't stop there: it announced an expanded roster of automotive partners, including the world's largest automaker, which will use Nvidia's accelerated computing and AI for driving assistance capabilities and autonomous vehicles. Toyota (TM) will use Nvidia's DRIVE AGX Orin system-on-a-chip (SoC) technology for its next-generation vehicles for driving assistance. Meanwhile, the chipmaker said Aurora (AUR) and Continental will both use Nvidia's DRIVE accelerated compute to deploy driverless trucks through a long-term strategic partnership. 
On the consumer front -- it is CES, after all -- Huang showed off the next generation of its RTX Blackwell GPUs, with the four versions priced between $549 and $1,999. The popular gaming chips will be released later this month and in February. And to cap off the keynote, Huang lifted the curtain on Project DIGITS, a palm-sized personal AI supercomputer powerful enough to run AI models with up to 200 billion parameters - for comparison, GPT-3 has 175 billion parameters while GPT-4 is rumored to have 1.76 trillion parameters. The device, which will be available starting at $3,000 from Nvidia and its partners in May, aims to put AI development in more hands. "AI will be mainstream in every application for every industry," Huang said in a statement. "With Project DIGITS, the Grace Blackwell Superchip comes to millions of developers. Placing an AI supercomputer on the desks of every data scientist, AI researcher and student empowers them to engage and shape the age of AI."

[4]

Nvidia Is Officially on the A.I. Agents Train With New Family of LLM Models

"The IT department of every company is going to be the HR department of A.I. agents in the future," Jensen Huang said. Nvidia (NVDA) is going all-in on A.I. agents as it seeks to cash in on what CEO Jensen Huang calls a "multi-trillion dollar opportunity" that could change how IT teams operate. During his keynote speech at the 2025 Consumer Electronics Show (CES) yesterday (Jan. 6) in Las Vegas, Huang unveiled Nvidia's Nemotron family of large language models that developers can use to build A.I. agents -- autonomous bots that can perform complex tasks without human control. Built with Meta's (META) Llama model, the open-sourced Nemotron models, including Nano, Super, and Ultra, allow developers to "create and deploy" A.I. agents they can fine-tune to specific business needs, Nvidia said. Applications include customer support, fraud detection, and inventory management optimization. By deploying A.I. agents, companies can "achieve unprecedented productivity," according to the company. "In the future, these A.I. agents are essentially a digital workforce that are working alongside your employees doing things for you on your behalf," Huang said during his keynote. "The way you would bring these specialized agents into your company is to onboard them just like you would on-board an employee." In a live demonstration, Huang showcased examples of A.I. agents with digital replicas of his face. The A.I. research assistant agent for students, for instance, is designed to take documents like lectures, academic journals and financial results and synthesize them into an interactive podcast for "easy learning." 
The software security agent can scan software and alert developers if vulnerabilities are detected so they can take immediate action. Other A.I. agents displayed included roles like financial analyst, employee support and factory operations. Major companies are already lining up to use Nvidia's Nemotron models. One early user is the enterprise software giant SAP. Philipp Herzig, chief A.I. officer at SAP, said in a release the company expects "hundreds of millions of enterprise users" to interact with Nvidia's A.I. agents to "accomplish their goals faster than ever before." Another enterprise software giant, ServiceNow, will use Nvidia's new models to "build advanced A.I. agent services" that can solve a complex range of problems in a move to "achieve more with less effort," Jeremy Barnes, the company's vice president of platform A.I., said in a release. Nemotron is just one of many products Huang announced during his nearly two-hour-long keynote. The CEO also unveiled a new family of its latest Blackwell chips, which the company claims are three times more powerful than previous generations of GPUs. The Blackwell family is expected to create a $100 billion market opportunity for Nvidia, Stifel analyst Ruben Roy wrote in a note last November. On top of that, Huang announced the GB200 NVL72, a rack-scale system that the company claims will supercharge the capacity for data centers to run A.I. workloads. Nvidia isn't just betting big on its highly sought-after chips. Huang also announced the Cosmos foundation model to advance physical A.I. like humanoid robots, the DRIVE Hyperion platform to train self-driving vehicles, and Project Digits, a $3,000 personal supercomputer that Nvidia says is 1,000 times more powerful than a typical laptop. Nvidia also announced two autonomous driving partnerships, one with Toyota and the other with Aurora, a self-driving truck startup. 
The latest A.I. developments cement Nvidia's position as a global leader in the A.I. revolution. Prior to Huang's speech, Nvidia's stock jumped more than 3.4 percent to close at a record high of $149.43 per share, in anticipation of the CES announcements. That surge sent Nvidia's market value up to $3.47 trillion, briefly surpassing Apple's. Nvidia's market value grew by over $2 trillion last year.

[5]

Nvidia CEO Jensen Huang: "AI Agents Likely to Be a Multitrillion-Dollar Opportunity" | The Motley Fool

Last Monday night, Nvidia (NVDA) CEO Jensen Huang gave the opening keynote speech to kick off CES 2025, which ran until Friday in Las Vegas. Nvidia is the leader in providing chips -- primarily graphics processing units (GPUs) -- and related technology to enable artificial intelligence (AI) capabilities. So, Huang naturally spent much of his approximately 1.5-hour presentation on the topic of AI. He covered Nvidia's new AI-related products and partnerships along with how he sees the AI industry evolving. There was a lot of great content in Huang's speech, but one of the most exciting things for Nvidia stock investors was this comment: "AI agents [are] likely to be a multitrillion-dollar opportunity." A group of AI agents is a "digital workforce," as Huang said last Tuesday at Nvidia's CES financial analyst conference. The development of AI agents is now possible due to generative AI, a relatively new technology that greatly increases the possible use cases of AI. Generative AI's amazing capabilities were first demonstrated to consumers in late 2022 with OpenAI's release of its ChatGPT chatbot. What differentiates a chatbot, which can be extremely useful in some situations, from an AI agent is the degree of autonomy. A chatbot can do things like answer questions, generate text, and help solve problems. But it is not capable of working independently or taking initiative, as an AI agent can do. On last quarter's earnings call, CFO Colette Kress summarized Nvidia's involvement in AI agents: Nvidia AI Enterprise, which includes Nvidia NeMo and NIM microservices, is an operating platform of agentic AI. Industry leaders are using Nvidia AI to build copilots and agents. Working with Nvidia, Cadence [Design Systems], Cloudera, Cohesity, NetApp, Salesforce, SAP, and ServiceNow are racing to accelerate development of these applications with the potential for billions of agents to be deployed in the coming years. 
Consulting leaders like Accenture and Deloitte are taking Nvidia AI to the world's enterprises. A couple of comments about this quote: First, while Nvidia AI Enterprise is the company's operating platform to create AI agents, it's not exclusively devoted to agentic AI. Second, the list of companies using Nvidia's technology to develop AI agents is not meant to be all-inclusive. The process varies, but how it generally works is that large enterprises use Nvidia AI Enterprise to create AI agents for their tool or tools. The enterprises will then rent out these agents to their customers via the cloud. Moreover, companies across various industries are expected to use AI agents to improve their own operations. Management expects "Nvidia AI Enterprise full-year revenue to increase over 2x from last year," Kress said on last quarter's earnings call. This fast growth is being driven partly by the rush among enterprises to develop AI agents. There is indeed a rush because top execs in many industries know that their businesses will suffer if they are slower than their competitors to use AI agents to improve their own businesses, and -- in the case of cloud-based software companies -- to offer AI agents to their customers. At Nvidia's CES financial analyst conference, Huang made this comment that highlights how fast he expects AI agents to be used in certain fields: "There are 30 million software engineers [globally]. Starting next year, if a software engineer in your company is not assisted with an AI [agent] you are losing already fast." Along with software engineers, Huang expects that folks who develop marketing campaigns will be among early adopters of AI agents. Moreover, he added that all knowledge workers -- a number he pegged at about 1 billion worldwide -- will eventually be assisted by AI agents. Huang didn't expand on what he meant by "multitrillion" dollars, but "multi" is generally defined as more than two. So, multitrillion should mean at least $3 trillion. 
Moreover, he didn't share how long he thought it would take the agentic AI market to reach that sum in annual revenue. But we know from his remarks that he expects this market to grow very rapidly. Let's go with "multitrillion" referring to $3 trillion, which is a conservative assumption. Here is some data to help put this massive number in context: Let's assume that Nvidia captures just 5% of what I'm estimating to be a $3 trillion agentic AI market. This is another conservative estimate, given Nvidia's dominance of AI-enabling chip and related technology. Five percent of $3 trillion is $150 billion. This would be nearly all new revenue since Nvidia is in the very early stages of making money from AI agents. In its third quarter of fiscal year 2025 (ended Oct. 27, 2024), Nvidia generated revenue of $35.1 billion, and Wall Street expects its fiscal year 2025 (ends late January) revenue will grow 112% year over year to $129.1 billion. These numbers illustrate how the AI agent market has the potential to absolutely turbocharge Nvidia's revenue growth, which is already impressive. Much higher revenue should lead to much higher earnings, which in turn, should help power Nvidia stock higher over the long term.
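To make the scenario above concrete, here is a minimal back-of-the-envelope sketch of the same arithmetic. The $3 trillion market size and the 5% share are the author's illustrative assumptions, not Nvidia guidance; the "implied fiscal 2024 revenue" line is simply worked backward from the $129.1 billion estimate and the 112% growth rate cited above, and the variable names are hypothetical.

```python
# Back-of-the-envelope version of the scenario described above.
# The $3 trillion market and 5% share are the author's illustrative
# assumptions, not Nvidia guidance or a forecast.

AGENTIC_AI_MARKET = 3e12      # "multitrillion" read as $3 trillion
NVIDIA_SHARE = 0.05           # hypothetical 5% capture
FY2025_REVENUE_EST = 129.1e9  # Wall Street estimate cited in the article
FY2025_GROWTH = 1.12          # expected 112% year-over-year growth

agent_revenue = AGENTIC_AI_MARKET * NVIDIA_SHARE

# Working backward from the growth rate gives the prior-year base it implies.
implied_fy2024_revenue = FY2025_REVENUE_EST / (1 + FY2025_GROWTH)

# How the hypothetical agent revenue compares with a full year of current sales.
ratio_to_fy2025 = agent_revenue / FY2025_REVENUE_EST

print(f"Hypothetical annual agent revenue: ${agent_revenue / 1e9:.1f}B")
print(f"Implied fiscal 2024 revenue: ${implied_fy2024_revenue / 1e9:.1f}B")
print(f"Agent revenue vs. fiscal 2025 estimate: {ratio_to_fy2025:.2f}x")
```

Under these assumptions, the hypothetical agent business alone would be about 1.16 times the fiscal 2025 revenue estimate, which is the sense in which the author calls it potentially "turbocharging."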

[6]

AI Agents in 2025: Nvidia's Vision for a Machine-Led Economy

Nvidia's CEO, Jensen Huang, has declared 2025 as the "Year of AI Agents," emphasizing their potential to automate tasks across industries. These agents are designed to execute simple instructions and complex multistep tasks, enhancing efficiency and productivity. Nvidia has already integrated AI agents into its chip design processes, showcasing their practical applications and benefits. At the Consumer Electronics Show (CES) 2025, Nvidia unveiled the GeForce RTX 50-series GPUs, powered by the Blackwell architecture. These GPUs are engineered to handle the intensive computational demands of AI applications, facilitating the development and deployment of sophisticated AI agents. The RTX 5090, priced at $1,999, and the RTX 5070, at $549, offer enhanced performance and cost-efficiency, making AI technology more accessible to a broader audience.

[7]

What You Need to Know About Nvidia's AI Announcements at CES 2025 - Decrypt

After a record-breaking 2024, Nvidia is kicking off 2025 with a bang, unveiling a slate of products that could solidify its dominance in the fields of AI development and gaming. CEO Jensen Huang took the stage at CES in Las Vegas to showcase new hardware and software offerings that span everything from personal AI supercomputers to next-generation gaming cards. Nvidia's biggest announcement: Project DIGITS, a $3,000 personal AI supercomputer that packs a petaflop of computing power into a desktop-sized box. Built around the new -- and up until now, secret -- GB10 Grace Blackwell Superchip, this machine can handle AI models with up to 200 billion parameters while drawing power from a standard outlet. For heavier workloads, users can link two units to tackle models up to 405 billion parameters. For context, the largest Llama 3.1 model, the most advanced open-source LLM from Meta, has 405 billion parameters and cannot be run on consumer hardware. Up until now, it required around 8 Nvidia A100/H100 GPUs, each one costing around $30K, totaling roughly $240K just in processing hardware. Two of Nvidia's new consumer-grade AI supercomputers would cost $6K and be capable of running the same model in quantized form. "AI will be mainstream in every application for every industry. With Project DIGITS, the Grace Blackwell Superchip comes to millions of developers," Jensen Huang, CEO of Nvidia, said in an official blog post. "Placing an AI supercomputer on the desks of every data scientist, AI researcher, and student empowers them to engage and shape the age of AI." For those who love technical details, the GB10 chip represents a significant engineering achievement born from a collaboration with MediaTek. The system-on-chip combines Nvidia's latest GPU architecture with 20 power-efficient ARM cores connected via NVLink-C2C interconnect. Each DIGITS unit sports 128GB of unified memory and up to 4TB of NVMe storage. 
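The memory figures above can be sanity-checked with simple arithmetic. The sketch below assumes weights quantized to 4 bits per parameter and counts only weight storage (no KV cache, activations, or OS overhead), so real requirements would be somewhat higher; the 4-bit choice is an assumption for illustration, not a documented DIGITS configuration, and the helper name is hypothetical.

```python
# Rough check of the Project DIGITS memory claims.
# Assumptions (not from Nvidia): 4 bits per parameter, and only the
# weights are counted, with no KV cache, activations, or OS overhead.

DIGITS_MEMORY_GB = 128  # unified memory per DIGITS unit, per the article


def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GB (10**9 bytes)."""
    return params * bits_per_param / 8 / 1e9


# (parameter count, number of linked DIGITS units) as described above
scenarios = [(200e9, 1), (405e9, 2)]

for params, units in scenarios:
    needed = weight_memory_gb(params, bits_per_param=4)
    available = DIGITS_MEMORY_GB * units
    print(
        f"{params / 1e9:.0f}B params at 4-bit: ~{needed:.1f} GB of weights; "
        f"{available} GB across {units} unit(s); fits: {needed <= available}"
    )

# The article's hardware-cost comparison, using its rounded prices.
gpu_cluster_cost = 8 * 30_000  # ~8 data-center GPUs at ~$30K each
digits_pair_cost = 2 * 3_000   # two DIGITS units at $3,000 each
print(f"GPU cluster: ~${gpu_cluster_cost:,} vs. two DIGITS units: ${digits_pair_cost:,}")
```

The quantization assumption does most of the work here: at 16 bits per parameter, the same 405B-parameter model would need roughly 810 GB for its weights alone, far beyond two linked units.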
Again, for context, the most powerful consumer GPUs to date pack around 24GB of VRAM (the memory required to run AI models) each, and the H100 data-center GPU starts at 80GB. Companies are rushing to deploy AI agents, and Nvidia knows it, which is probably why it developed Nemotron, a new family of models that comes in three sizes, and announced its expansion today with two new models: Nvidia NIM for video summarization and understanding and Nvidia Cosmos to give Nemotron vision capabilities -- the ability to understand visual instructions. Until now, the LLMs were only text-based. The models excel at instruction following, chat, function calling, coding, and math tasks. They're available through both Hugging Face and Nvidia's website, with enterprise access through the company's AI Enterprise software platform. For context, in the LLM Arena, Nvidia's Llama Nemotron 70B ranks higher than the original Llama 405B developed by Meta. It also beats different versions of Claude, Gemini Advanced, Grok-2 mini and GPT-4o. Nvidia's agent push now also extends to infrastructure. The company announced partnerships with major agentic tech providers like LangChain, LlamaIndex, and CrewAI to build blueprints on Nvidia AI Enterprise. These ready-to-deploy templates tackle specific tasks, making it easier for developers to build highly specialized agents. A new PDF-to-podcast blueprint aims to compete with Google's NotebookLM, while another blueprint helps build video search and summary agents. Developers can test these blueprints through the new Nvidia Launchables platform, which enables one-click prototyping and deployment. Nvidia saved its gaming announcements for last, unveiling the much-expected GeForce RTX 5000 Series. The flagship RTX 5090 houses 92 billion transistors and delivers 3,352 trillion AI operations per second -- double the performance of the current RTX 4090. 
The entire lineup features fifth-generation Tensor Cores and fourth-generation RT Cores. The new cards introduce DLSS 4, which can boost frame rates up to 8x by using AI to generate multiple frames per render. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," Jensen Huang said. "Fusing AI-driven neural rendering and ray tracing, Blackwell is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." The new cards also employ transformer models for super-resolution, promising highly realistic graphics and a lot more performance for their price -- which is not cheap, btw: $549 for the RTX 5070, with the 5070 Ti at $749, the 5080 at $999, and the 5090 at $1,999. If you don't have that kind of money and want to game, don't worry. AMD also announced today its Radeon RX 9070 series. The cards are built on the new RDNA 4 architecture using a 4nm manufacturing process and feature dedicated AI accelerators to compete with Nvidia's Tensor Cores. While full specifications remain under wraps, AMD's latest Ryzen AI chips already achieve 50 TOPS at peak performance. Sadly, Nvidia is still the king of AI applications thanks to CUDA, its proprietary parallel-computing platform. To tackle this, AMD has secured partnerships with HP and Asus for system integration, and over 100 enterprise platform brands will use AMD Pro technology through 2025. The Radeon cards are expected to hit the market in Q1 2025, giving Nvidia an interesting battle in both gaming and AI acceleration.

[8]

Nvidia's secret weapon: It's the software, stupid

The big picture: Nvidia's Jensen Huang kickstarted CES this year with his keynote. We listened to it and read a fair amount of analysis since. If you don't have 90 minutes to spare, we can sum it up for you: Nvidia has all the software. Or at least it has more than you do. Want to build a robot? They have software for that. Design a factory? Check. Autonomous cars, drug discovery, video games - they have that too. And it is not just a basic application on offer; it has multiple layers - for designing a robot, modeling out its physical world interactions, and then putting it into production. Nvidia has software for all of those. Editor's Note: Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries. This is not exactly news (we have written about this before), but the point of all this is to drive home the message that most companies will get a big leg up by starting with Nvidia's offerings. In fairness, we have no idea how successful any of these will be. We are fairly certain that Nvidia is not too clear on that either. Their superpower is the ability to take chances without fear of failure, and our suspicion is that many of this week's announcements will face mixed results. That being said, the sheer quantity and depth of their offerings should be sobering for everyone else. Put simply, for companies who do not plan to train their own foundational models, working with Nvidia's tools is going to be the easiest way to develop AI applications. This is especially true for other semiconductor companies. Here, Nvidia's lead is doubly formidable. First, competing with Nvidia in selling AI semis requires a massive investment in software, and probably half a decade to build it out. 
AMD is a year or two along that journey, and they are way ahead of number three. Broadcom does not have the software offerings, but they are going to do just fine selling to that handful of companies building their own foundational models. Everyone else has a long journey just to reach table stakes. The other item we pulled out of Huang's remarks was the level to which Nvidia is 'eating its own dog food.' They seem to be using AI tools to accelerate the development of their own chips. We think it is too soon to tell how much of the semiconductor design cycle can benefit from transformer-based AI models, but if even half of the workflow can be improved (dare we say 'accelerated') by AI, then Nvidia is going to have a meaningful productivity advantage over its competitors. Last year, Nvidia added $70 billion of revenue and $52 billion in operating profit, while only adding $6 billion in operating expenses. And now there is a risk that they are going to get even more productive?

[9]

Biggest Nvidia Takeaways From Jensen Huang's CES 2025 Keynote

LAS VEGAS (AP) -- Nvidia CEO Jensen Huang unveiled a suite of new products, services and partnerships at CES 2025. In a packed Las Vegas arena, Huang kicked off CES this week with his vision for how his company's products will drive gaming, robotics, personal computing and even self-driving vehicles forward. Here's a look at the biggest announcements to come out of his appearance.

New graphics cards and AI chips

Going back to its roots in gaming, the chipmaker and AI darling unveiled its GeForce RTX 50 Series desktop and laptop GPUs -- its consumer graphics processing units for gamers, creators and developers. Huang said the GPUs, which use the company's next-generation artificial intelligence chip Blackwell, can deliver breakthroughs in AI-driven rendering. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," Huang said, adding that Blackwell "is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." Blackwell technology is now in full production, he said. The flagship RTX 5090 model will be available in January for $1,999. The RTX 5070 will launch in February for $549.

AI models to help with robotics and vehicles

Huang also introduced a series of new AI models -- dubbed Cosmos -- that can generate cost-efficient photo-realistic video that can then be used to train robots and other automated services. The open-source model, which works with Nvidia's Omniverse -- a physics simulation tool -- to create more realistic video, promises to be much cheaper than traditional ways of gathering training data, such as having cars record road experiences or having people teach robots repetitive tasks. Central to this is Nvidia's new partnership with Japanese automaker Toyota to build its next-generation autonomous vehicles, and its announced partnership with Aurora to power its autonomous shipping trucks. Nvidia's DriveOS operating system, which Huang said meets the highest standard of safety, will power the new cars. "I predict that this will likely be the first multi-trillion dollar robotics industry," Huang said. Aurora, based in Pittsburgh, plans to launch its driverless trucks -- with Nvidia's hardware -- commercially in April 2025.

And a supercomputer on your desk

And finally, Huang announced Project DIGITS, a $3,000 desktop computer targeted at developers or gen AI enthusiasts who want to experiment with AI models at home. The machine will launch in May and is powered by the new Blackwell chip. In all, Project DIGITS will allow users to run AI models with up to 200 billion parameters, which means models that previously required expensive cloud infrastructure can run on your desktop.

Copyright 2025 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed.

[12]

Nvidia founder Jensen Huang unveils next generation of AI and gaming chips at CES 2025

In a packed Las Vegas arena, Nvidia founder Jensen Huang stood on stage and marveled over the crisp real-time computer graphics displayed on the screen behind him. He watched as a dark-haired woman walked through ornate gilded double doors and took in the rays of light that poured in through stained glass windows. "The amount of geometry that you saw was absolutely insane," Huang told an audience of thousands at CES 2025 Monday night. "It would have been impossible without artificial intelligence." The chipmaker and AI darling unveiled its GeForce RTX 50 Series desktop and laptop GPUs -- its most advanced consumer graphics processor units for gamers, creators and developers. The tech is designed for use on both desktop and laptop computers. Ahead of Huang's speech, Nvidia stock climbed 3.4% to top its record set in November. Nvidia and other AI stocks keep climbing even as criticism rises that their stock prices have already shot too high, too fast. Despite worries about a potential bubble, the industry continues to talk up its potential. Huang said the GPUs, which use the company's next-generation artificial intelligence chip Blackwell, can deliver breakthroughs in AI-driven rendering. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," Huang said, adding that Blackwell "is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." Blackwell technology is now in full production, he said. Building on the tech Nvidia released 25 years ago, the company announced that it would also introduce "RTX Neural Shaders," which use AI to help render game characters in deep detail -- a task that's notoriously tricky because people can easily spot a small error on digital humans. Huang said Nvidia is also introducing a new suite of technologies that enable "autonomous characters" to perceive, plan and act like human players. 
Those characters can help players plan strategies, or adapt their tactics to challenge players and create more dynamic battles. In addition to Nvidia, tech giants such as AMD, Google and Samsung are at CES 2025 to unveil artificial intelligence tools aimed at both content creators and consumers in their quest for entertainment.

[13]

Nvidia Hands-Down Won AI At CES 2025, And Also The Show Itself. Here's Why That Matters

There were plenty of AI announcements at CES 2025, and while some were charming -- like the robot vacuum that can pick up dirty socks or TVs that can generate recipes -- none of them has the power to transform society like Nvidia's. Calling Nvidia the chipmaker powering the AI revolution is no overstatement, which is why its Cosmos AI model won the official Best of CES award not only for AI, but for the entire show. CNET Group, which is made up of CNET, PCMag, ZDNET, Mashable and Lifehacker, is the official awards partner for CTA, which puts on the annual mega tech show. Back to the robots for a moment: if Roborock and Dreame are making home cleaning helpers that use AI to identify clutter that doesn't belong and ledges to "climb" over with their respective robot arms and legs, then Nvidia is the engine that is openly releasing models to allow robots like these to function in the real world. It's not solely robots, either: the same goes for smartglasses that process speech and images of the surrounding world, and for cars -- Nvidia and Toyota have already inked a deal to use the Cosmos AI model to train cars. (The company also released powerful new graphics cards.) As ZDNET Editor-in-Chief Jason Hiner put it, "Nvidia Cosmos demonstrates the biggest and boldest ambition we've seen at CES 2025 for how technology could help people and communities in the years ahead." Earlier this week, Nvidia CEO Jensen Huang took the stage to unveil Cosmos, the foundational AI model that helps robots and autonomous vehicles understand the physical world, calling it "the ChatGPT moment for robotics." Huang also announced a new chip named Thor for cars and trucks that uses AI to process visual information coming in from cameras and lidar sensors to lead the way in level 4 autonomous driving. And he revealed the 50-series lineup of desktop and laptop gaming GPUs that promises to deliver massive leaps in performance and "breakthroughs in AI-driven rendering" at a lower cost than the 40 series (in most cases).
CES is the largest consumer technology show in North America. Held annually in Las Vegas, it brings in the world's top tech makers to show off devices and concepts that may or may not ever reach consumers. It's also a way for smaller companies to get in front of the press and fans to demo what they've been working on. AI was the major trend last year. When OpenAI launched ChatGPT in late 2022, it showed general consumers what generative AI was capable of. What followed was a rush of major tech companies releasing AI products of their own and seeing their stock valuations jump in the process. Now, seemingly every company wants to integrate AI into its products in some fashion as a way to court investor and consumer interest, even as consumers shrug at AI-powered iPhones. Amid the miasma of AI goop flowing through the showrooms in Las Vegas, iterative remixes of existing AI tech can sometimes take on a snake-oil-like quality. But people chose to stand in long lines to see Huang, who has achieved tech celebrity status in his own right. His quirky announcement videos attract millions of views, and his down-to-earth demeanor, plus his trademark leather jackets, make him a likable hawker of cutting-edge gaming graphics. And it's paying off for Nvidia. In early January 2023, Nvidia stock hovered around $15. With the AI revolution, companies have been scrambling to buy Nvidia chips over the past two years to power their servers. After Huang's keynote on Monday, the stock hit record highs above $150 before cooling off a bit, still about a tenfold increase in just two years. It's also worth noting how Nvidia's tech has seemingly pushed aside other players in the GPU space. Nvidia dominates the GPU market so heavily that AMD and Intel have been relegated to competing in the midrange category. AMD did announce a series of new midrange GPUs, and it changed its naming convention to better match Nvidia's.
For example, the AMD RX 9070 is taking clear shots at the RTX 5070 cards, making it easier for consumers to compare the two. Intel only recently entered the dedicated graphics card market after failing to meet the moment in the CPU space against Qualcomm, AMD and Nvidia, and it's only trying to carve out a space in the budget GPU category. Thankfully, this past year has shown that AI hype can only go so far. AI wearables failed to impress, and the market cooled on throwing billions at companies releasing AI-polka-dotted press releases. Next year's CES will likely have its fair share of AI bloat, most of which will be met with yawns -- maybe it should be renamed Nvidia Greenlight.

[14]

Nvidia founder Jensen Huang unveils next generation of AI and gaming chips at CES 2025

In a packed Las Vegas arena, Nvidia founder Jensen Huang stood on stage and marveled over the crisp real-time computer graphics displayed on the screen behind him. He watched as a dark-haired woman walked through ornate gilded double doors and took in the rays of light that poured in through stained glass windows. "The amount of geometry that you saw was absolutely insane," Huang told an audience of thousands at CES 2025 Monday night. "It would have been impossible without artificial intelligence." The chipmaker and AI darling unveiled its GeForce RTX 50 Series desktop and laptop GPUs -- its most advanced consumer graphics processor units for gamers, creators and developers. The tech is designed for use on both desktop and laptop computers. Ahead of Huang's speech, Nvidia stock climbed 3.4% to top its record set in November. Nvidia and other AI stocks keep climbing despite concerns over Nvidia's share price being bloated and the company being overvalued. Huang said the GPUs, which use the company's next-generation artificial intelligence chip Blackwell, can deliver breakthroughs in AI-driven rendering. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," Huang said, adding that Blackwell "is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." Blackwell technology is now in full production, he said. Building on the tech Nvidia released 25 years ago, the company announced that it would also introduce "RTX Neural Shaders," which use AI to help render game characters in deep detail - a task that's notoriously tricky because people can easily spot a small error on digital humans. Huang said Nvidia is also introducing a new suite of technologies that enable "autonomous characters" to perceive, plan and act like human players. Those characters can help players plan strategies or adapt tactics to challenge players and create more dynamic battles. 
In addition to Nvidia, tech giants such as AMD, Google and Samsung are at CES 2025 to unveil artificial intelligence tools aimed at helping both content creators and consumers alike in their quest for entertainment. © 2025 The Associated Press. All rights reserved. This material may not be published, broadcast, rewritten or redistributed without permission.

[15]

CES 2025: AI Advancing at 'Incredible Pace,' NVIDIA CEO Says

Jensen Huang unveils NVIDIA Cosmos, Blackwell RTX 50 Series GPUs, and AI tools for PCs

NVIDIA founder and CEO Jensen Huang kicked off CES 2025 with a 90-minute keynote that included new products to advance gaming, autonomous vehicles, robotics, and agentic AI. AI has been "advancing at an incredible pace," he said before an audience of more than 6,000 packed into the Michelob Ultra Arena in Las Vegas. "It started with perception AI -- understanding images, words, and sounds. Then generative AI -- creating text, images and sound," Huang said. Now, he said, we're entering the era of "physical AI, AI that can perceive, reason, plan and act." NVIDIA GPUs and platforms are at the heart of this transformation, Huang explained, enabling breakthroughs across industries, including gaming, robotics and autonomous vehicles (AVs). Huang's keynote showcased how NVIDIA's latest innovations are enabling this new era of AI, with several groundbreaking announcements.

Huang started off his talk by reflecting on NVIDIA's three-decade journey. In 1999, NVIDIA invented the programmable GPU. Since then, modern AI has fundamentally changed how computing works, he said. "Every single layer of the technology stack has been transformed, an incredible transformation, in just 12 years."

Revolutionizing Graphics With GeForce RTX 50 Series

"GeForce enabled AI to reach the masses, and now AI is coming home to GeForce," Huang said. With that, he introduced the NVIDIA GeForce RTX 5090 GPU, the most powerful GeForce RTX GPU so far, with 92 billion transistors and 3,352 trillion AI operations per second (TOPS). "Here it is -- our brand-new GeForce RTX 50 series, Blackwell architecture," Huang said, holding the blacked-out GPU aloft and noting how it's able to harness advanced AI to enable breakthrough graphics. "The GPU is just a beast." "Even the mechanical design is a miracle," Huang said, noting that the graphics card has two cooling fans. More variations in the GPU series are coming.
The GeForce RTX 5090 and GeForce RTX 5080 desktop GPUs are scheduled to be available Jan. 30. The GeForce RTX 5070 Ti and GeForce RTX 5070 desktop GPUs are slated to be available starting in February. Laptop GPUs are expected in March.

DLSS 4 introduces Multi Frame Generation, working in unison with the complete suite of DLSS technologies to boost performance by up to 8x. NVIDIA also unveiled NVIDIA Reflex 2, which can reduce PC latency by up to 75%. The latest generation of DLSS can generate three additional frames for every frame that is calculated, Huang explained. "As a result, we're able to render at incredibly high performance, because AI does a lot less computation." RTX Neural Shaders use small neural networks to improve textures, materials and lighting in real-time gameplay. RTX Neural Faces and RTX Hair advance real-time face and hair rendering, using generative AI to animate the most realistic digital characters ever. RTX Mega Geometry increases the number of ray-traced triangles by up to 100x, providing more detail.

Advancing Physical AI With Cosmos

In addition to advancements in graphics, Huang introduced the NVIDIA Cosmos world foundation model platform, describing it as a game-changer for robotics and industrial AI. The next frontier of AI is physical AI, Huang explained. He likened this moment to the transformative impact of large language models on generative AI. "The ChatGPT moment for general robotics is just around the corner," he explained. Like large language models, world foundation models are fundamental to advancing robot and AV development, yet not all developers have the expertise and resources to train their own, Huang said. Cosmos integrates generative models, tokenizers, and a video processing pipeline to power physical AI systems like AVs and robots. Cosmos aims to bring the power of foresight and multiverse simulation to AI models, enabling them to simulate every possible future and select optimal actions.
Cosmos models ingest text, image or video prompts and generate virtual world states as videos, Huang explained. "Cosmos generations prioritize the unique requirements of AV and robotics use cases like real-world environments, lighting and object permanence." Leading robotics and automotive companies, including 1X, Agile Robots, Agility, Figure AI, Foretellix, Fourier, Galbot, Hillbot, IntBot, Neura Robotics, Skild AI, Virtual Incision, Waabi and XPENG, along with ridesharing giant Uber, are among the first to adopt Cosmos. In addition, Hyundai Motor Group is adopting NVIDIA AI and Omniverse to create safer, smarter vehicles, supercharge manufacturing and deploy cutting-edge robotics. Cosmos is available under an open license on GitHub.

Empowering Developers With AI Foundation Models

Beyond robotics and autonomous vehicles, NVIDIA is empowering developers and creators with AI foundation models. Huang introduced AI foundation models for RTX PCs that supercharge digital humans, content creation, productivity and development. "These AI models run in every single cloud because NVIDIA GPUs are now available in every single cloud," Huang said. "It's available in every single OEM, so you could literally take these models, integrate them into your software packages, create AI agents and deploy them wherever the customers want to run the software." These models -- offered as NVIDIA NIM microservices -- are accelerated by the new GeForce RTX 50 Series GPUs. The GPUs have what it takes to run these swiftly, adding support for FP4 computing, boosting AI inference by up to 2x and enabling generative AI models to run locally in a smaller memory footprint compared with previous-generation hardware. Huang explained the potential of new tools for creators: "We're creating a whole bunch of blueprints that our ecosystem could take advantage of. All of this is completely open source, so you could take it and modify the blueprints."
Top PC manufacturers and system builders are launching NIM-ready RTX AI PCs with GeForce RTX 50 Series GPUs. "AI PCs are coming to a home near you," Huang said. While these tools bring AI capabilities to personal computing, NVIDIA is also advancing AI-driven solutions in the automotive industry, where safety and intelligence are paramount.

Innovations in Autonomous Vehicles

Huang announced the NVIDIA DRIVE Hyperion AV platform, built on the new NVIDIA AGX Thor system-on-a-chip (SoC), designed for generative AI models and delivering advanced functional safety and autonomous driving capabilities. "The autonomous vehicle revolution is here," Huang said. "Building autonomous vehicles, like all robots, requires three computers: NVIDIA DGX to train AI models, Omniverse to test drive and generate synthetic data, and DRIVE AGX, a supercomputer in the car." DRIVE Hyperion, the first end-to-end AV platform, integrates advanced SoCs, a sensor suite, and an active safety and level 2 driving stack for next-gen vehicles, and it has been adopted by automotive safety pioneers such as Mercedes-Benz, JLR and Volvo Cars. Huang highlighted the critical role of synthetic data in advancing autonomous vehicles. Real-world data is limited, he explained, so synthetic data is essential for training autonomous vehicles. Powered by NVIDIA Omniverse AI models and Cosmos, this approach "generates synthetic driving scenarios that enhance training data by orders of magnitude." Using Omniverse and Cosmos, NVIDIA's AI data factory can scale "hundreds of drives into billions of effective miles," Huang said, dramatically expanding the datasets needed for safe and advanced autonomous driving. "We are going to have mountains of training data for autonomous vehicles," he added. Toyota, the world's largest automaker, will build its next-generation vehicles on the NVIDIA DRIVE AGX Orin, running the safety-certified NVIDIA DriveOS operating system, Huang said. "Just as computer graphics was revolutionized at such an incredible pace, you're going to see the pace of AV development increasing tremendously over the next several years," Huang said. These vehicles will offer functionally safe, advanced driving assistance capabilities.

Agentic AI and Digital Manufacturing

NVIDIA and its partners have launched AI Blueprints for agentic AI, including PDF-to-podcast for efficient research and video search and summarization for analyzing large quantities of video and images -- enabling developers to build, test and run AI agents anywhere. AI Blueprints empower developers to deploy custom agents for automating enterprise workflows. This new category of partner blueprints integrates NVIDIA AI Enterprise software, including NVIDIA NIM microservices and NVIDIA NeMo, with platforms from leading providers like CrewAI, Daily, LangChain, LlamaIndex and Weights & Biases. Additionally, Huang announced the new Llama Nemotron family of models. Available as NVIDIA NIM microservices, the models can supercharge AI agents on any accelerated system. Developers can use NVIDIA NIM microservices to build AI agents for tasks like customer support, fraud detection, and supply chain optimization. NVIDIA NIM microservices also streamline video content management, boosting efficiency and audience engagement in the media industry.

Moving beyond digital applications, NVIDIA's innovations are paving the way for AI to revolutionize the physical world with robotics. "All of the enabling technologies that I've been talking about are going to make it possible for us in the next several years to see very rapid breakthroughs, surprising breakthroughs, in general robotics," Huang said. In manufacturing, the NVIDIA Isaac GR00T Blueprint for synthetic motion generation will help developers generate exponentially large synthetic motion datasets to train their humanoids using imitation learning.
Huang emphasized the importance of training robots efficiently, using NVIDIA's Omniverse to generate millions of synthetic motions for humanoid training. The Mega blueprint enables large-scale simulation of robot fleets and has been adopted by leaders like Accenture and KION for warehouse automation. These AI tools set the stage for NVIDIA's latest innovation: a personal AI supercomputer called Project DIGITS.

NVIDIA Unveils Project DIGITS

Putting NVIDIA Grace Blackwell on every desk and at every AI developer's fingertips, Huang unveiled NVIDIA Project DIGITS. "I have one more thing that I want to show you," Huang said. "None of this would be possible if not for this incredible project that we started about a decade ago. Inside the company, it was called Project DIGITS -- deep learning GPU intelligence training system." Huang highlighted the legacy of NVIDIA's AI supercomputing journey, telling the story of how in 2016 he delivered the first NVIDIA DGX system to OpenAI. "And obviously, it revolutionized artificial intelligence computing." The new Project DIGITS takes this mission further. "Every software engineer, every engineer, every creative artist -- everybody who uses computers today as a tool -- will need an AI supercomputer," Huang said. Huang revealed that Project DIGITS, powered by the GB10 Grace Blackwell Superchip, represents NVIDIA's smallest yet most powerful AI supercomputer. "This is NVIDIA's latest AI supercomputer," Huang said, showcasing the device. "It runs the entire NVIDIA AI stack -- all of NVIDIA software runs on this. DGX Cloud runs on this." The compact yet powerful Project DIGITS is expected to be available in May.

A Year of Breakthroughs

"It's been an incredible year," Huang said as he wrapped up the keynote. Huang highlighted NVIDIA's major achievements: Blackwell systems, physical AI foundation models, and breakthroughs in agentic AI and robotics. "I want to thank all of you for your partnership," Huang said.
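Huang's description of DLSS 4 in the keynote recap above, three AI-generated frames for every conventionally rendered frame, reduces to simple arithmetic. The sketch below is a simplified model of our own, not Nvidia's method: it ignores the cost of generating frames, frame pacing and latency, and the function names are hypothetical. Multi Frame Generation on its own accounts for up to 4x more displayed frames; the "up to 8x" figure Nvidia quotes combines it with the rest of the DLSS suite (for example, super resolution upscaling), which this sketch does not model.

```python
def displayed_frame_rate(rendered_fps: float, generated_per_rendered: int = 3) -> float:
    """Frames shown per second when every rendered frame is followed by
    `generated_per_rendered` AI-generated frames. Simplified model: ignores
    the cost of generating frames and any pacing or latency effects."""
    if rendered_fps < 0 or generated_per_rendered < 0:
        raise ValueError("inputs must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_share(generated_per_rendered: int = 3) -> float:
    """Fraction of displayed frames that the GPU renders conventionally."""
    return 1 / (1 + generated_per_rendered)


# Hypothetical example: a game the GPU renders at 30 frames per second.
print(displayed_frame_rate(30))  # 120
print(rendered_share())          # 0.25
```

Under these assumptions, only one in four displayed frames is fully rendered, which is the sense in which Huang says "AI does a lot less computation."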

[16]

Roundup: Nvidia's impressive list of new and upgraded products at CES - SiliconANGLE

Although the holiday gift-giving season may be over, Nvidia Corp. co-founder and Chief Executive Jensen Huang was in a very generous mood during his Monday keynote address at the CES consumer electronics show in Las Vegas. The leader in accelerated computing, which invented the graphics processing unit more than 25 years ago, still has an insatiable appetite for innovation. Huang, dressed in a more Vegas version of his customary black leather jacket, kicked off his keynote with a history lesson on how Nvidia went from a company that made video games better to the AI powerhouse it is today. He then shifted into product mode and showcased his company's continuing leadership in the AI revolution by announcing several new and enhanced products for AI-based robotics, autonomous vehicles, agentic AI and more. Here are the five I felt were most meaningful.

Nvidia's Cosmos platform consists of what the company calls "state-of-the-art generative world foundation models, advanced tokenizers, guardrails and an accelerated video processing pipeline" for advancing the development of physical AI capabilities, including autonomous vehicles and robots. Using Nvidia's world foundation models, or WFMs, Cosmos makes it easy for organizations to produce vast amounts of "photoreal, physics-based synthetic data" for training and evaluating their existing models. Developers can also fine-tune Cosmos WFMs to build custom models. Physical AI can be very expensive to implement, requiring robots, cars and other systems to be built and trained in real-life scenarios. Cars crash and robots fall, adding cost and time to the process. With Cosmos, everything can be simulated virtually, and when the training is complete, the information is uploaded into the physical device. Nvidia is providing Cosmos models under an open model license to help the robotics and AV community work faster and more effectively.
Many of the world's leading physical AI companies use Cosmos to accelerate their work. Huang also announced new generative AI models and blueprints that expand and further integrate Nvidia Omniverse into physical AI applications. The company said leading software development and professional services firms are leveraging Omniverse to drive the growth of new products and services designed to "accelerate the next era of industrial AI." Companies such as Accenture, Microsoft and Siemens are integrating Omniverse into their next-generation software products and professional services. Siemens announced at CES the availability of Teamcenter Digital Reality Viewer, its first Xcelerator application powered by Nvidia's Omniverse libraries. Nvidia debuted four new blueprints for developers to use in building Universal Scene Description (OpenUSD)-based Omniverse digital twins for physical AI.
Nvidia announced the GeForce RTX 50 series of desktop and laptop graphics processing units. The RTX 50 series is powered by Nvidia's Blackwell architecture and the latest Tensor Cores and RT Cores. Huang said it delivers breakthroughs in AI-driven rendering. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," he said. "Fusing AI-driven neural rendering and ray tracing, Blackwell is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." The pricing of the new GPUs drew a loud cheer from the crowd. The previous-generation flagship, the RTX 4090, retailed for $1,599. The entry-level model of the 50 series, the RTX 5070, offers 1,000 AI TOPS (trillion AI operations per second), performance comparable to the RTX 4090, for just $549. The RTX 5070 Ti (1,400 AI TOPS) is $749, the RTX 5080 (1,800 AI TOPS) sells for $999, and the RTX 5090, which offers a whopping 3,400 AI TOPS, is $1,999.
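Because the roundup lists each card's AI TOPS next to its price, a quick calculation shows how much compute a dollar buys across the lineup. The sketch below is illustrative only: it takes Nvidia's marketing "AI TOPS" figures at face value (the article does not say at what precision or sparsity they are measured) and uses the launch prices quoted above; the names `RTX_50_SERIES` and `tops_per_dollar` are hypothetical.

```python
# Desktop RTX 50 series figures quoted in the roundup:
# (model, Nvidia-quoted AI TOPS, launch price in USD).
RTX_50_SERIES = [
    ("RTX 5070", 1_000, 549),
    ("RTX 5070 Ti", 1_400, 749),
    ("RTX 5080", 1_800, 999),
    ("RTX 5090", 3_400, 1_999),
]


def tops_per_dollar(tops: int, price_usd: int) -> float:
    """AI TOPS delivered per US dollar of launch price."""
    return tops / price_usd


# Print one row per card: model, TOPS, price, and TOPS per dollar.
for model, tops, price in RTX_50_SERIES:
    print(f"{model:<12} {tops:>5} TOPS  ${price:>5,}  {tops_per_dollar(tops, price):.2f} TOPS/$")
```

By this measure the four cards land between roughly 1.7 and 1.9 TOPS per dollar, with the RTX 5070 Ti slightly ahead and the RTX 5090 lowest, so the flagship buys headroom rather than better value per TOPS.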
The company also announced a family of laptops in which the massive RTX processor has been shrunk down to fit a small form factor. Huang explained that Nvidia used AI to accomplish this, generating most of the pixels with Tensor Cores: only the required pixels are ray traced, and AI generates all the others, creating a significantly more energy-efficient system. "The future of computer graphics is neural rendering, which fuses AI with traditional graphics," Huang explained. Laptop pricing ranges from $1,299 for the RTX 5070 model to $2,899 for the RTX 5090.
Huang introduced a small desktop computer system called Project DIGITS, powered by Nvidia's new GB10 Grace Blackwell Superchip. The system is small but powerful. It will provide a petaflop of AI performance with 128 gigabytes of coherent, unified memory. The company said it will enable developers to work with AI models of up to 200 billion parameters at their desks. The system is designed for AI developers, researchers, data scientists and students working with AI workloads. Nvidia lists AI model experimentation and prototyping among its key workloads.
Rev Lebaredian, vice president of Omniverse and simulation technology at Nvidia, told analysts in a briefing before Huang's keynote that the massive shift in computing now occurring represents software 2.0, which is machine learning AI that is "basically software writing software." To meet this need, Nvidia is introducing new products to enable agentic AI, including the Llama Nemotron family of open large language models. The models can help developers create and deploy AI agents across various applications, including customer support, fraud detection, and supply chain and inventory management optimization. Huang explained that the Llama models could be "better fine-tuned for enterprise use," so Nvidia used its expertise to create the Llama Nemotron suite of open models.
There are currently three models: Nano is small and low-latency, with fast response times for PCs and edge devices; Super is balanced for accuracy and compute efficiency; and Ultra is the highest-accuracy model, for data center-scale applications. If it's not clear by now, the AI era has arrived. Many industry watchers believe AI is currently overhyped, but I believe the opposite. AI will eventually be embedded into every application, device and system we use. The internet has changed how we work, live and learn, and AI will have the same impact. Huang did an excellent job of explaining the relevance of AI to all of us today and what an AI-infused world will look like. It was a great way to kick off CES 2025.
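The claim that a single Project DIGITS unit can handle models of up to 200 billion parameters is easiest to see as weight-memory arithmetic. The sketch below assumes 4-bit (FP4) weights at half a byte per parameter and takes 128 GB as the per-unit memory capacity Nvidia announced. It deliberately ignores the KV cache, activations and operating-system overhead, so it gives a lower bound rather than a sizing guide. The helper names are hypothetical.

```python
# Bytes needed to store one model weight at common inference precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}

DIGITS_MEMORY_GB = 128.0  # assumed per-unit unified memory (Nvidia's announced figure)


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """GB (1 GB = 1e9 bytes) needed just to hold the weights.

    1e9 params * bytes/param / 1e9 bytes/GB cancels out, so
    billions of params times bytes per param is GB directly.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def weights_fit(params_billion: float, precision: str, units: int = 1) -> bool:
    """True if the weights alone fit in the memory of `units` linked systems."""
    return weight_memory_gb(params_billion, precision) <= units * DIGITS_MEMORY_GB


for params, precision, units in [(200, "fp4", 1), (200, "fp8", 1), (405, "fp4", 2)]:
    need = weight_memory_gb(params, precision)
    print(f"{params}B @ {precision}: {need:.1f} GB of weights, "
          f"fits in {units} unit(s): {weights_fit(params, precision, units)}")
```

At FP4, 200 billion parameters need about 100 GB, which fits; the same model at FP8 would need about 200 GB and would not. Two linked units, the configuration the next piece in this roundup describes for Llama 3.1 405B, would offer 256 GB against roughly 202.5 GB of FP4 weights.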

[17]

I am thrilled by Nvidia's cute petaflop mini PC wonder, and it's time for Jensen's law: it takes 100 months to get equal AI performance for 1/25th of the cost

Nvidia's desktop supercomputer is probably the greatest revolution in tech hardware since the IBM PC
Nobody really expected Nvidia to release something like the GB10. After all, why would a tech company that transformed itself into the most valuable firm ever by selling parts that cost hundreds of thousands of dollars suddenly decide to sell an entire system for a fraction of the price? I believe that Nvidia wants to revolutionize computing the way IBM did almost 45 years ago with the original IBM PC. Project DIGITS, as a reminder, is a fully formed, off-the-shelf supercomputer built into something the size of a mini PC. It is essentially a smaller version of the DGX-1, the first of its kind, launched almost a decade ago in April 2016. That system sold for $129,000 with a 16-core Intel Xeon CPU and eight P100 GPGPU cards; DIGITS costs $3,000. Nvidia confirmed an AI performance of 1,000 teraflops at FP4 precision, though it is unclear whether that is a dense or sparse figure. Although there's no direct comparison, one can estimate that the diminutive supercomputer has roughly half the processing power of a fully loaded 8-card Pascal-based DGX-1. At the heart of DIGITS is the GB10 SoC, which has 20 Arm cores (10 Arm Cortex-X925 and 10 Cortex-A725). Other than the confirmed presence of a Blackwell GPU (a lite version of the B100), one can only infer the power consumption (100W) and the bandwidth (825GB/s, according to The Register). You should be able to connect two of these devices (but not more) via Nvidia's proprietary ConnectX technology to tackle larger LLMs such as Meta's Llama 3.1 405B. Shoving these tiny mini PCs into a 42U rack seems all but ruled out for now, as it would encroach on Nvidia's far more lucrative DGX GB200 systems. Why did Nvidia embark on Project DIGITS? I think it is all about reinforcing its moat.
Making your products so sticky that it becomes near impossible to move to the competition is something that worked very well for others: Microsoft and Windows, Google and Gmail, Apple and the iPhone. The same happened with Nvidia and CUDA; being in the driving seat allowed Nvidia to do things such as shifting the goalposts and wrongfooting the competition. The move to FP4 for inference allowed Nvidia to deliver impressive benchmark claims such as "Blackwell delivers 2.5x its predecessor's performance in FP8 for training, per chip, and 5x with FP4 for inference". Of course, AMD doesn't offer FP4 computation in the MI300X/325X series, and we will have to wait until later this year for it to roll out in the Instinct MI350X/355X. Nvidia is therefore laying the groundwork to fend off future incursions, for lack of a better word or analogy, from existing and future competitors, including its own customers (think Microsoft and Google). Nvidia CEO Jensen Huang's ambition is clear: he wants to expand the company's domination beyond the realm of the hyperscalers. "AI will be mainstream in every application for every industry. With Project DIGITS, the Grace Blackwell Superchip comes to millions of developers, placing an AI supercomputer on the desks of every data scientist, AI researcher and student empowers them to engage and shape the age of AI," Huang recently commented. Short of renaming Nvidia as Nvid-ai, this is as close as it gets to Huang acknowledging his ambition to make his company's name synonymous with AI, just as Tarmac and Hoover became synonymous with their products (albeit in more niche verticals). Like many, I was also perplexed by the MediaTek link; the rationale for the tie-up can be found in MediaTek's press release. The Taiwanese company "brings its design expertise in Arm-based SoC performance and power efficiency to [a] groundbreaking device for AI researchers and developers," it noted.
The partnership, I believe, benefits MediaTek more than Nvidia, and in the short run I can see Nvidia quietly going solo. Reuters reported that Huang dismissed the idea of Nvidia going after AMD and Intel, saying, "Now they [MediaTek] could provide that to us, and they could keep that for themselves and serve the market. And so it was a great win-win". This doesn't mean Nvidia won't deliver more mainstream products, though; they would simply be aimed at businesses and professionals rather than consumers, where cut-throat competition makes things more challenging (and margins wafer-thin). The Reuters article quotes Huang saying, "We're going to make that a mainstream product, we'll support it with all the things that we do to support professional and high-quality software, and the PC (manufacturers) will make it available to end users." One theory I came across while researching this feature is that more data scientists are embracing Apple's Mac platform because it offers a balanced approach: good-enough performance, thanks to its unified memory architecture, at a 'reasonable' price. The Mac Studio with 128GB of unified memory and a 4TB SSD currently retails for $5,799. So where does Nvidia go from there? An obvious move would be to integrate the memory on the SoC, similar to what Apple has done with its M-series SoCs (and AMD with its HBM-fuelled Epyc). This would not only save on costs but also improve performance, something its bigger sibling, the GB200, already does. Then it will depend on whether Nvidia wants to offer more at the same price or the same performance at a lower price point (or a bit of both). Nvidia could go Intel's way and use the GB10 as a prototype to encourage other key partners (PNY, Gigabyte, Asus) to launch similar projects (Intel did that with the Next Unit of Computing, or NUC). I am also particularly interested to know what will happen to the Jetson Orin family; the NX 16GB version was upgraded just a few weeks ago to offer 157 TOPS of INT8 performance.
This platform is aimed more at DIY/edge use cases than at pure training/inference tasks, but I can't help thinking about "what if" scenarios. Nvidia is clearly disrupting itself before others attempt to do so; the question is how far it will go.
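The headline's "Jensen's law" (100 months to get equal AI performance for 1/25th of the cost) can be roughly reconstructed from the figures in the piece. The sketch below is one plausible reading, not the author's stated method. It assumes the comparison runs from the DGX-1's April 2016 launch to the January 2025 DIGITS announcement, and it normalizes price by the author's estimate that DIGITS has about half a DGX-1's processing power. Variable and function names are hypothetical.

```python
from datetime import date


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end (day of month ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def cost_per_performance(price_usd: float, relative_performance: float) -> float:
    """Price per unit of performance, where the DGX-1 baseline is 1.0."""
    return price_usd / relative_performance


DGX1_LAUNCH = date(2016, 4, 1)    # DGX-1 launch (April 2016, per the article)
DIGITS_REVEAL = date(2025, 1, 1)  # assumed end point: DIGITS unveiled at CES 2025
DGX1_PRICE_USD = 129_000
DIGITS_PRICE_USD = 3_000
DIGITS_RELATIVE_PERF = 0.5        # author's "roughly half the processing power" estimate

months = months_between(DGX1_LAUNCH, DIGITS_REVEAL)
# How many times cheaper one unit of DGX-1-class performance has become.
cost_ratio = (cost_per_performance(DGX1_PRICE_USD, 1.0)
              / cost_per_performance(DIGITS_PRICE_USD, DIGITS_RELATIVE_PERF))
print(f"{months} months; equal performance at about 1/{cost_ratio:.1f} of the cost")
```

Under these assumptions the inputs give 105 months and a cost ratio of about 1/21.5, which the headline rounds to 100 months and 1/25th. It is a rule of thumb, and the ratio shifts noticeably if DIGITS turns out to be more or less than half as fast as a DGX-1.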

[18]

Nvidia founder unveils new technology for gamers and creators at CES 2025

LAS VEGAS -- In a packed Las Vegas arena, Nvidia founder Jensen Huang stood on stage and marveled at the crisp real-time computer graphics displayed on the screen behind him. He watched as a dark-haired woman walked through ornate gilded double doors and took in the rays of light that poured in through stained glass windows. "The amount of geometry that you saw was absolutely insane," Huang told an audience of thousands at CES 2025 Monday night. "It would have been impossible without artificial intelligence." The chipmaker and AI darling unveiled its GeForce RTX 50 Series desktop and laptop GPUs, its most advanced consumer graphics processing units for gamers, creators and developers. Ahead of Huang's speech, Nvidia stock climbed 3.4% to top its record set in November. Nvidia and other AI stocks keep climbing even as criticism rises that their stock prices have already shot too high, too fast. Despite worries about a potential bubble, the industry continues to talk up its potential. Huang said the GPUs, which are built on Blackwell, the company's next-generation artificial intelligence chip architecture, can deliver breakthroughs in AI-driven rendering. "Blackwell, the engine of AI, has arrived for PC gamers, developers and creatives," Huang said, adding that Blackwell "is the most significant computer graphics innovation since we introduced programmable shading 25 years ago." Blackwell technology is now in full production, he said. Building on the programmable shading Nvidia introduced 25 years ago, the company announced that it would also introduce "RTX Neural Shaders," which use AI to help render game characters in fine detail, a task that's notoriously tricky because people can easily spot small errors in digital humans. Huang said Nvidia is also introducing a new suite of technologies that enable "autonomous characters" to perceive, plan and act like human players.
These characters can help players plan strategies, or adapt their tactics to challenge players and create more dynamic battles. In addition to Nvidia, tech giants such as AMD, Google and Samsung are at CES 2025 to unveil artificial intelligence tools aimed at helping content creators and consumers alike in their quest for entertainment.

[19]

Nvidia founder Jensen Huang unveils new technology for gamers and creators at CES 2025