RTX 5090 Mobile GPU: A Powerful but Pricey Leap in Laptop Gaming

5 Sources

[1]

I Tested the GeForce RTX 5090 for Laptops. It's the New Fastest Mobile GPU

I'm one of the consumer PC experts at PCMag, with a particular love for PC gaming. I've played games on my computer for as long as I can remember, which eventually (as it does for many) led me to building and upgrading my own desktop. Through my years here, I've tested and reviewed many, many dozens of laptops and desktops, and I'm always happy to recommend a PC for your needs and budget.
Hot on the heels of their desktop-card counterparts, which launched this January after their announcement at CES 2025, Nvidia's GeForce RTX 50-series GPUs have officially arrived inside laptops. Mobile gamers who have been patiently waiting to see what these chips can do while mulling over a new laptop purchase can finally get some definitive answers. GeForce RTX 50 cards for desktops may be pricey and scarce at the moment, but laptops equipped with the mobile versions of these chips go on sale March 28.
To carry out my first performance tests on this new platform, Nvidia loaned me a brand-new Razer Blade 16 laptop outfitted with a GeForce RTX 5090 GPU. This implementation combines high-end laptop hardware with top-end silicon, demonstrating the potential of such top-of-the-line systems before more-affordable midrange and budget solutions emerge later. (The first RTX 50-series laptops to hit the street will have RTX 5090, RTX 5080, and RTX 5070 Ti GPUs, with RTX 5070s coming later.) On the desktop side, the RTX 5090 is the tip-top card of Nvidia's latest generation, so I was excited to put the mobile version through its paces and see what the RTX 50 series and its new "Blackwell" architecture can do for laptops. First, it's important to explain what Blackwell brings to laptop graphics.
Nvidia 'Blackwell' Explained: RTX 50 GPUs Bring DLSS 4 to Laptops
We covered the initial announcement of RTX 50-series laptop GPUs during CES 2025, so while we don't need a complete rehash of the platform here, I have several relevant aspects to touch on before getting into the testing.
On desktop and mobile, the RTX 50 series employs Blackwell-based graphics processors. Blackwell is Nvidia's latest microarchitecture, succeeding the "Lovelace" design of the RTX 40 series. Blackwell's design includes fifth-generation machine-learning Tensor cores, fourth-generation ray-tracing (RT) cores, and newly added support for GDDR7 memory. The platform is also more efficient, allowing these improvements to be incorporated even into thin-and-light laptops. (Indeed, Nvidia sending over the always-thin Razer Blade as the test sample is something of a vote of confidence in Blackwell's ability to perform in a slimmer machine.)
Blackwell doubles down on Nvidia's focus on AI-empowered GPUs, emphasizing advances in neural rendering over raw transistor-count and horsepower increases. For gaming, this shows up primarily in DLSS 4, introduced with these GPUs, though you'll find other related technologies at play during benchmarking that we'll touch on, too. Deep Learning Super Sampling (DLSS) has been around for years at this point (read our DLSS explainer to catch up, if you need to), and we've already done a deep DLSS 4 performance dive on the RTX 50 desktop graphics cards to gauge its impact.
In a nutshell, DLSS (driven by the Tensor cores on local hardware) is a machine-learning technology that can do two things. First, DLSS can turn lower-resolution frames into higher-resolution images without rendering them natively at the higher resolution; instead, the extra detail is inferred algorithmically from the original frame data. Second, the technology can apply similar algorithmic techniques to generate artificial frames between traditionally rendered frames, boosting the effective number of frames displayed per second. DLSS 4 has become incredibly adept at the latter, thanks to Multi Frame Generation (MFG) in supported games and Blackwell's more efficient Tensor cores.
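Because upscaling and frame generation each replace a share of traditionally rendered pixels, their effects multiply. As a minimal sketch, assuming an upscaling mode that renders at half the output resolution on each axis (one quarter of the pixels, as when 1080p is upscaled to 4K) combined with Multi Frame Generation at 4X (one rendered frame plus three generated ones), the arithmetic lands on a figure like Nvidia's "15 out of every 16 pixels." The function and parameter names are hypothetical, chosen only for illustration, and the sketch counts pixels, not GPU work:

```python
from fractions import Fraction


def generated_pixel_fraction(render_scale_per_axis: Fraction, frames_per_rendered: int) -> Fraction:
    """Share of displayed pixels that are AI-generated rather than traditionally rendered.

    render_scale_per_axis: internal render resolution divided by output resolution,
        per axis (hypothetical parameter; 1/2 models a 1080p-to-4K upscale).
    frames_per_rendered: displayed frames per rendered frame (4 models MFG at 4X:
        one rendered frame plus three generated frames).
    """
    # Pixel area scales with the square of the per-axis scale factor.
    rendered_share_of_one_frame = render_scale_per_axis ** 2
    # Only one displayed frame in every `frames_per_rendered` is rendered at all.
    rendered_share_overall = rendered_share_of_one_frame / frames_per_rendered
    return 1 - rendered_share_overall


print(generated_pixel_fraction(Fraction(1, 2), 4))  # 15/16: upscaling plus 4X MFG
print(generated_pixel_fraction(Fraction(1, 2), 2))  # 7/8: upscaling plus 2X frame generation
print(generated_pixel_fraction(Fraction(1, 2), 1))  # 3/4: upscaling alone
```

Exact fractions keep the result readable; swapping in a different per-axis scale (a higher-quality upscaling mode renders more pixels) shows how quickly the generated share shrinks.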
MFG can generate up to three additional frames for every traditionally rendered frame, significantly boosting frame rates. According to Nvidia, the new AI model also does this while running 40% faster and using 30% less VRAM than the previous version. The technology works via a newer AI "Transformer" model, moving on from the preceding Convolutional Neural Network (CNN) design, which Nvidia believes has reached its limits. This more capable architecture generates higher-quality, more stable pixels via supplemental DLSS 4 features such as RTX Neural Shaders, Ray Reconstruction, and Super Resolution. Together, the new hardware and software improvements can generate up to 15 out of every 16 pixels if your goal is as many frames (rendered or generated) as possible. (For more on some of these underlying technologies, check out our rundown of the GeForce RTX 50-series-related tech that Nvidia outlined at CES 2025.)
Schools of Thought on DLSS
Those are the more technical aspects of Blackwell and its new technologies; if you're not as interested in this side of things, suffice it to say that DLSS 4 aims to improve gaming frame rates well beyond its predecessor while preserving as much picture quality and as many visual settings as possible. These factors, though, will affect real-world performance and the benchmark results in this article.
I should acknowledge that, between resolution upscaling and artificially generated frames, gamers feel differently about whether these improvements really "count" as generational performance, not to mention whether access to these artificial frame-rate improvements merits buying a new GPU. (Bear in mind that a game has to actually support the relevant DLSS flavor for you to make use of it.) Other gamers, especially those on lower-end systems, will take any practical frame-rate improvement.
Regardless of where your opinion falls, this technology can be key to getting high-fidelity games (or demanding features like path tracing) to run smoothly, so even if you see a quality trade-off, it may be worth it. I don't have a philosophical objection to these methods of attaining higher frame rates, but they're not without caveats or downsides, which I'll get into later.
There are objective (rendered versus inserted frames) and subjective (how one feels about the image quality of DLSS upscaling) aspects to all of this, but the calculus is also a bit different on laptops than on desktops. You can't un-bake a laptop GPU from the laptop itself, so to speak: You're not buying the GPU by itself; it's only one part of a laptop's total cost. Additionally, unlike on a desktop, most laptop components aren't replaceable as they wear down or age out, so you'll need to buy a whole new system, and thus a new GPU, anyway. When you're ready for a new machine, laptops with the most recent GPU generation are what you'll find on the market.
Generation-over-generation improvements still matter to the enthusiast crowd, and I have those comparisons below. However, the reality for most shoppers is a bit different. Nvidia shared the stat that 70% of laptop owners are currently running systems with the RTX 30 series or older, so the question of whether 40-series owners should upgrade to the latest generation is only a tiny piece of the picture. I'll look at both the generational improvement and the concrete results the RTX 50 series can achieve, regardless of what GPU you're currently running.
GeForce RTX 5090 Performance Testing: The New Graphics King Arrives
Again, Nvidia sent over the slick Razer Blade 16 with an RTX 5090 GPU to run our first tests of this new generation. Currently, Nvidia has announced the RTX 5090, RTX 5080, RTX 5070 Ti, and RTX 5070 mobile GPUs, in descending order of power.
As mentioned, this first wave of laptops will include the three higher-tier GPUs, with RTX 5070 laptops coming a bit later in 2025. For those with smaller budgets, benchmark results for the rest of the 50-series GPUs will take longer to publish, since the RTX 5090 and 5080 arrived first.
As for the Blade 16 in particular, this laptop line is always premium, so even Razer's "least" expensive RTX 5070 Ti model starts at $2,999.99 with an AMD Ryzen AI 9 365 processor, 32GB of memory, and a 1TB SSD. Upgrading to the RTX 5080 costs $3,499.99 and keeps the other specs the same (though you're free to upgrade RAM and storage to as much as 64GB and 4TB, respectively). Choosing the RTX 5090 configuration I've tested here forces a bump to the Ryzen AI 9 HX 370 CPU and a doubling of storage to a 2TB SSD, all for a whopping $4,499.99. All models include a 240Hz QHD+ (2,560-by-1,600-pixel) display.
All the other RTX 5090 laptops I've seen available to order before launch are priced above $4,000, too; some may drop below that mark, and other RTX 50-series GPUs will come in less expensive systems. But the RTX 5090 will almost always be paired with top-end parts and features because of its own costly nature and high throughput.
The new Blade I have in hand runs at 135 watts TGP (plus 25W of Dynamic Boost, for 160W total) in Razer's Performance Mode, which is what I tested. Below, you'll note the new GDDR7 VRAM debuting, versus the RTX 4090's GDDR6 memory, plus the RTX 5090's larger memory allotment (24GB).
To test Nvidia's latest top-end GPU, I put it through our usual graphics and gaming benchmark suite and followed up with some anecdotal testing to account for the new features. Here are the systems I'll compare the results against. These are ordered by relevance as comparisons to the Blade 16 and its RTX 5090, starting with the 2024 Razer Blade with its RTX 4090, a near-perfect generational battle.
Next, I've included the hulking MSI Titan 18 HX as a best-case scenario for the RTX 4090 (this is a thick, super-powered laptop with loads of thermal headroom), and then a slightly older (13th Gen) Razer Blade 18 with an RTX 4080. Finally, I included the Asus ROG Zephyrus G16 to show how an RTX 4070 stacks up against the higher-end chips in other thin 16-inch systems. Most of our tested gaming laptops are Intel-based, so the processor matchups aren't 1:1 (except for the Zephyrus), but these are all top-of-the-line, performant chips.
Additionally, while I wish we could directly compare performance against the top RTX 30-series laptop GPU (the RTX 3080 Ti; there was no laptop RTX 3090), we have long since updated our benchmark suite from when we were testing RTX 30-series laptops, and I no longer have any on hand to retest with the new games. Those looking to upgrade from the 30 series or older must judge the RTX 5090's performance on its own terms to see whether the frame rates are enough to make the jump.
Graphics and Gaming Tests
Here are the benchmark results, with descriptions of each test preceding them, starting with our usual graphics test suite for laptop reviews. We challenge all test laptops' graphics with a quartet of animations or gaming simulations from UL's 3DMark test suite. Wild Life (1440p) and Wild Life Extreme (4K) use the Vulkan graphics API to measure GPU speeds. Steel Nomad's regular and Light subtests focus on APIs more commonly used for game development, like Metal and DirectX 12, to assess gaming geometry and particle effects. We also turn to 3DMark's Solar Bay to measure ray-tracing performance in a synthetic environment. This benchmark works with native APIs, subjecting 3D scenes to increasingly intense ray-traced workloads at 1440p.
Our real-world gaming testing comes from in-game benchmarks within Call of Duty: Modern Warfare 3, Cyberpunk 2077, and F1 2024.
These three games, all benchmarked at the system's full HD (1080p or 1200p) resolution, represent competitive shooter, open-world, and simulation games, respectively. If the screen is capable of a higher resolution, we rerun the tests at the QHD equivalent of 1440p or 1600p. Each game runs at two sets of graphics settings per resolution, for up to four runs total on each game. We run the Call of Duty benchmark at the Minimum graphics preset (aimed at maximizing frame rates to test display refresh rates) and again at the Extreme preset. Our Cyberpunk 2077 test settings aim to push PCs fully, so we run it on the Ultra graphics preset and again at the all-out Ray Tracing Overdrive preset, without DLSS or FSR. Finally, F1 serves as our DLSS (or, on AMD systems, FSR) effectiveness test, demonstrating a GPU's capacity for frame-boosting upscaling technologies.
Starting with the synthetic 3DMark tests, the RTX 5090 comes off well. For those concerned about a lack of raw power gains without DLSS active, the RTX 5090 scored the highest on almost all of these tests, even over the much larger, RTX 4090-bearing Titan 18 HX. The gains are moderate, but all things considered, more performance is more performance, especially in a thin 16-inch laptop.
This trend mostly continues into real game testing. I'll focus on percentage gains over the RTX 4090, since the actual frame rates vary widely and that's the most relevant GPU comparison. In Cyberpunk 2077 at 1200p, the RTX 5090's 109fps and 41fps results were 6% and 11% increases over the RTX 4090 on the Ultra and Ray Tracing Overdrive settings, respectively. Cranked up to native 1600p resolution, the RTX 5090 saw 16% and 4% increases on these same runs. In Modern Warfare 3 at 1200p, the RTX 5090's 225fps score was 4% lower than the RTX 4090's Minimum settings run, just about within the margin of error, while its 173fps was a 9% increase on the RTX 4090's Extreme settings run.
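Each percentage above compares the RTX 5090's result with the RTX 4090's on the same run, so the older card's frame rate can be roughly back-solved from the two numbers given. A small sketch with hypothetical helper names; because the percentages are rounded, the back-solved RTX 4090 figures are approximations, not measured results:

```python
def percent_change(new_fps: float, old_fps: float) -> float:
    """Percentage change from old_fps to new_fps (positive means the new GPU is faster)."""
    return (new_fps - old_fps) / old_fps * 100


def implied_old_fps(new_fps: float, pct_change: float) -> float:
    """Back-solve the older GPU's frame rate from the newer result and a stated % change."""
    return new_fps / (1 + pct_change / 100)


# (run, RTX 5090 fps, stated change vs. RTX 4090 in %) at 1200p, as quoted above
runs = [
    ("Cyberpunk 2077, Ultra", 109, 6),
    ("Cyberpunk 2077, RT Overdrive", 41, 11),
    ("Modern Warfare 3, Minimum", 225, -4),
    ("Modern Warfare 3, Extreme", 173, 9),
]

for name, fps_5090, pct in runs:
    est_4090 = implied_old_fps(fps_5090, pct)
    # Round-trip check: recomputing the change recovers the stated percentage.
    print(f"{name}: RTX 4090 ~{est_4090:.0f} fps ({percent_change(fps_5090, est_4090):+.0f}%)")
```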
At 1600p, the improvements grouped more tightly regardless of visual setting: The RTX 5090 scored about 8.5% higher than the RTX 4090 on Minimum settings and 8% higher on Extreme settings.
The F1 2024 results are not as cut-and-dried, with some CPU-bound limitations of this system capping the efficacy of DLSS on its Ultra performance setting. Other processors may be able to open this up further, as we see on the other systems, but the RTX 5090 still pushes higher traditionally rendered frame rates than the RTX 4090 at both resolutions in this game, too.
Additional Upscaling and Frame Generation Tests
Outside our usual test suite, I ran additional DLSS tests on the Razer machine, focusing on Frame Generation. These were all run at 1600p and at the higher of the two visual-setting presets from the previous batch of tests; the first result for each game here is carried over from those tests to serve as the new baseline. Pay special attention to Cyberpunk 2077, as it's the only game here that supports DLSS 4 and Multi Frame Generation. More than 100 titles support DLSS 4 as of now, but few have a built-in benchmark test, and Cyberpunk is already our system crusher of choice. The other two games here show how the RTX 5090 performs with the previous version of DLSS (without Multi Frame Generation), which will be the reality for many games for the time being.
As we saw before, Cyberpunk is practically unplayable at 1600p and maximum settings with no DLSS active. The upscaling technology saves the day here, more than tripling the score to 77fps. Despite some qualms about artifacts or ghosting (of which I saw a little, but less than with previous DLSS generations), at the end of the day, DLSS takes the game from sub-30fps to comfortably above 60fps. That's only half the story, though. Turning on Frame Generation (at "2X" in Cyberpunk's settings) nearly doubles the score again, to 147fps, roughly as expected given how the technology works.
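Because generated frames are slotted in between rendered ones, a frame-generated result can be divided by its multiplier to estimate how many frames the GPU still renders traditionally. A rough sketch with hypothetical helper names, assuming every displayed frame is either rendered or generated and ignoring frame pacing; the 4X value is approximated from the roughly 73% uplift over 147fps reported for Multi Frame Generation:

```python
def rendered_fps(displayed_fps: float, multiplier: int) -> float:
    """Traditionally rendered frames per second behind a frame-generated result.

    Assumes (multiplier - 1) generated frames per rendered frame.
    """
    return displayed_fps / multiplier


def scaling_efficiency(baseline_fps: float, displayed_fps: float, multiplier: int) -> float:
    """Share of the ideal multiplier actually realized (1.0 would be perfect scaling)."""
    return displayed_fps / (baseline_fps * multiplier)


dlss_only = 77            # Cyberpunk 2077, 1600p, DLSS upscaling, no Frame Generation
fg_2x = 147               # Frame Generation at "2X"
fg_4x = fg_2x * 1.73      # approximation: about 73% above the 2X result (Multi Frame Generation "4X")

for label, fps, mult in [("2X", fg_2x, 2), ("4X", fg_4x, 4)]:
    print(
        f"{label}: ~{fps:.0f} fps shown, ~{rendered_fps(fps, mult):.1f} rendered, "
        f"{scaling_efficiency(dlss_only, fps, mult):.0%} of ideal"
    )
```

Read this way, the implied rendered rate dips as the multiplier rises, which is consistent with frame generation carrying some overhead of its own; that is an inference from three data points, not something measured directly.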
Finally, Multi Frame Generation set to "4X" raises the Frame Generation result by about 73%.
Nvidia Battery Boost and Off-Plug Battery Life
One last area I tested was battery life, using Nvidia's new Battery Boost feature. Activated in the Nvidia App, this feature dynamically balances your CPU and GPU output while monitoring battery discharge when you're gaming off the charger. While playing Avowed (another DLSS 4 game, with a big open world and high visual fidelity) on High visual settings with DLSS active, the Razer Blade 16 ran for almost exactly an hour and a half off the plug. That was sustained, active gameplay, with nary a pause. Most of us would be hard-pressed to find ourselves often in a situation where we could game for that long, uninterrupted and away from a power outlet. I also ran the Blade 16 through our usual battery life test (playing a local video file at 50% brightness, with airplane mode on, until the laptop dies), and it lasted 10 hours and 12 minutes, so you can definitely expect much more runtime when you're not playing a demanding game.
The Takeaway: RTX 5090 Makes DLSS 4 Dazzle
On the high end, those DLSS and Frame Generation results are fantastic for letting you keep all the visual details active with minimal downsides; extrapolate that to lower-end hardware, and unplayable games become playable on a budget. Frame Generation isn't perfect, and some gamers may be wary of the added input lag, or latency, particularly in competitive multiplayer games; only you can say how much it bothers you. Others will be glad a given demanding game runs at all, or at high frame rates, on their systems. DLSS 4 is especially useful to gamers who would rather run games at maximum settings and extra-high resolutions, and less so for 1080p gamers. From experience, I think the visual clarity is worth the trade-off: DLSS 4 looks sharp and cuts down on the visual artifacts and fuzziness that I've seen from past editions.
Playing Avowed outside of our testing suite and tweaking different visual settings, I found some detectable differences in look and feel, but they were pretty minor, and the game looked crisp even with DLSS running. You can mitigate any detectable latency via the settings, and when I felt like trying the game with Frame Generation off, it wasn't hard to find a playable frame rate with standard DLSS active. The other option is dialing down the visual settings; I don't need to play Cyberpunk 2077 with path tracing active, but it looks slick, and DLSS and/or Frame Generation make it possible while running smoothly. You'll also find levels of granularity for DLSS quality versus performance; it's all a trade-off.
Personally, I'm not dismissive of the DLSS upscaling gains as somehow false, as they demonstrably improve the experience. At the same time, pure hardware gains these days are producing diminishing returns. I'm more sympathetic to concerns over Frame Generation, since I recognize the potential perceived-latency issue in some game genres, which you can try to combat with Nvidia Reflex, a feature that can reduce system latency in games.
We'll have many more months and years with the RTX 50 series to dig deeper into the results, upsides, and trade-offs, compared with my relatively short time with the RTX 5090 so far. I look forward to testing the other GPUs in the lineup and exploring more scenarios and features than I could here. Check back soon for full RTX 50-series laptop reviews and additional testing. (Indeed, we've got an RTX 5080 machine on the bench right now.)

[2]

Razer Blade 16 Review: A Toasty Powerhouse

I’m having a love/hate relationship with the Razer Blade 16 right now. There’s so much to love about this system. It’s got two of the most powerful mobile components on the block: an AMD Strix Point CPU and an Nvidia Blackwell RTX 50-series GPU. Together, they make the Blade 16 an outright AI-centric juggernaut whether you’re gaming, creating content, or working. The OLED panel is stunning to look at, as is the laptop’s bright, comfortable keyboard, and it’s got a solid suite of ports.
And then there’s the hate. The audio could use some work: With a six-speaker system, I was expecting a much louder performance. Speaking of loud, the fans can become a bit of a distraction while they fight to keep the system cool, but with an all-metal chassis, it’s a losing battle. And naturally, the battery life is short-lived whether you’re working or gaming. But the biggest offender is the price. The entry model of the Blade 16 costs $2,999, while my review unit, decked out to the nines, will set you back a hurtful $4,499. I get that it’s a premium gaming laptop, but damn, that price is eye-watering. Still, if you want a thin-and-light gaming laptop with the works, including the AI sprinkles on top, the Razer Blade 16 is a prime contender.
When it comes to design, only a few things in this life are certain. The sun rises in the east and sets in the west, pi is irrational and its digits go on forever, and the Razer Blade, in all but a few cases, is a beautiful piece of black CNC aluminum. I stand behind the claim that the Blade is the gamer’s MacBook Pro. But instead of the fruit logo, there’s that acid-green, tri-headed snake emblem in the center with an enticing glow, daring you to open the lid. And when you finally open it, you’re greeted with one of the prettiest RGB keyboards in the game, by way of Razer’s Chroma lighting.
Affirming its chops as a creator laptop, the notebook has a full SD card reader along with two USB-C ports, a pair of USB-A 3.2 ports, HDMI 2.1, a headset jack, a Kensington lock, and a proprietary power port.
The Blade 16 (4.6 pounds) currently holds the title of “thinnest Blade ever” at 0.59 inches. The notebook is 30% smaller and 15% lighter than its predecessor. It’s slimmer than the MacBook Pro 16 (0.61 inches). However, the Blade 16 is heavier than the weightiest version of the MacBook Pro 16 (3.4 pounds).
But there’s a beast beneath that beautiful chassis. The Blade 16 has an Nvidia GeForce RTX 5090 GPU under the hood. Currently one of the most powerful mobile graphics chips on the market, the RTX 5090 can handle just about anything you throw at it. Built on Nvidia’s Blackwell architecture, the chip is designed for both power efficiency and AI neural rendering. With the exception of Black Myth: Wukong and Cyberpunk 2077, every game I benchmarked averaged well above 100 frames per second, even on the highest settings at native resolution. Those two games soon caught up with the others once Nvidia’s DLSS technology, which uses AI to generate extra frames, kicked in. Just keep in mind that the fans kick in something fierce when you’re gaming or doing something graphics-intensive like video editing. And despite a vapor chamber, plus fans working as hard as they can, that all-aluminum chassis can get uncomfortably hot.
Although it’s natural to zone in on the GPU in a gaming laptop, the 2.0-GHz AMD Ryzen AI 9 HX 370 CPU with its 32GB of RAM is a force in its own right. One of AMD’s new Strix Point processors, the chip is designed for thin-and-light laptops. And just like the laptop’s GPU, the CPU is AI-centric, but it doesn’t skimp on power efficiency or performance. The Blade 16 chewed through my 75 open Google Chrome tabs with their menagerie of G-Suite docs, social media, and videos.
Batch resizing large caches of photos was a breeze, as was cutting together video. I know I’ve talked a lot about gaming, but the Blade is equally a creator-class system, capable of delivering a stellar visual experience. Colors on the Blade’s 16-inch, 2560 x 1600 OLED display are so vivid that sometimes it felt like the notebook was trying to sear them into my retinas. Whether I watched Sakamoto Days, Paradise, or Found, the contrast and colors were excellent. It’s also great for photo and video editing. And thanks to the 240Hz refresh rate and Nvidia G-Sync, I never encountered any unsightly tearing or latency as I played Assassin’s Creed Shadows.
However, with all the power the Blade 16 wields, there’s got to be a weakness, and like most gaming laptops, its Achilles’ heel is battery life. I ran the PCMark 10 Modern Office test, which mimics typical office use (spreadsheets, social media scrolling, video conferencing, web surfing, etc.), with the display set to 50% brightness, and the 90Wh battery lasted only 3 hours and 36 minutes. The time dropped to 1:24 on the gaming test. I managed to squeeze out four hours in actual use, but the battery tapped out after an hour and ten minutes of Assassin’s Creed Shadows.
And what I could hear while gaming wasn’t impressive. Similar to the MacBook Pro 16, the Blade 16 has six speakers and a smart amp. It also has spatial audio courtesy of THX. However, the speakers lack volume, especially compared with last year’s Razer Blade 18 or my 2023 MacBook Pro 16. And the bass is all but nonexistent, even with the four audio presets. It’s disappointing, but it’s nothing a good gaming headset can’t fix.
So yes, the battery life is fleeting, but that’s to be expected with a gaming laptop! They’re all bad at battery life! But I do wish the audio were a bit louder and the fans a bit quieter, and we can agree the price will do a number on many a budget.
But despite the minor flaws, this is still a premium system, and man, I love a premium system. They’re a showcase of the best the industry has to offer, and that is the story of the 2025 Razer Blade, too. Powered by some of the most powerful mobile components from AMD and Nvidia, the Blade is all but guaranteed to push through most workloads like Saquon Barkley cuts through D-lines.
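The review’s battery figures translate into an average power draw: divide the pack’s capacity by how long it lasted. A minimal sketch using the review’s 90Wh capacity and its two benchmark runtimes; the helper name is hypothetical, and it assumes the full rated capacity is usable, so the real draw is likely a bit lower than these estimates:

```python
def average_draw_watts(capacity_wh: float, hours: int, minutes: int = 0) -> float:
    """Average system power implied by draining capacity_wh in the given runtime.

    Assumes the full rated capacity is usable, which slightly overstates the draw.
    """
    return capacity_wh / (hours + minutes / 60)


BLADE_16_CAPACITY_WH = 90  # rated capacity quoted in the review

for test, h, m in [("PCMark 10 Modern Office", 3, 36), ("Gaming test", 1, 24)]:
    watts = average_draw_watts(BLADE_16_CAPACITY_WH, h, m)
    print(f"{test}: ~{watts:.0f} W average")
```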

[3]

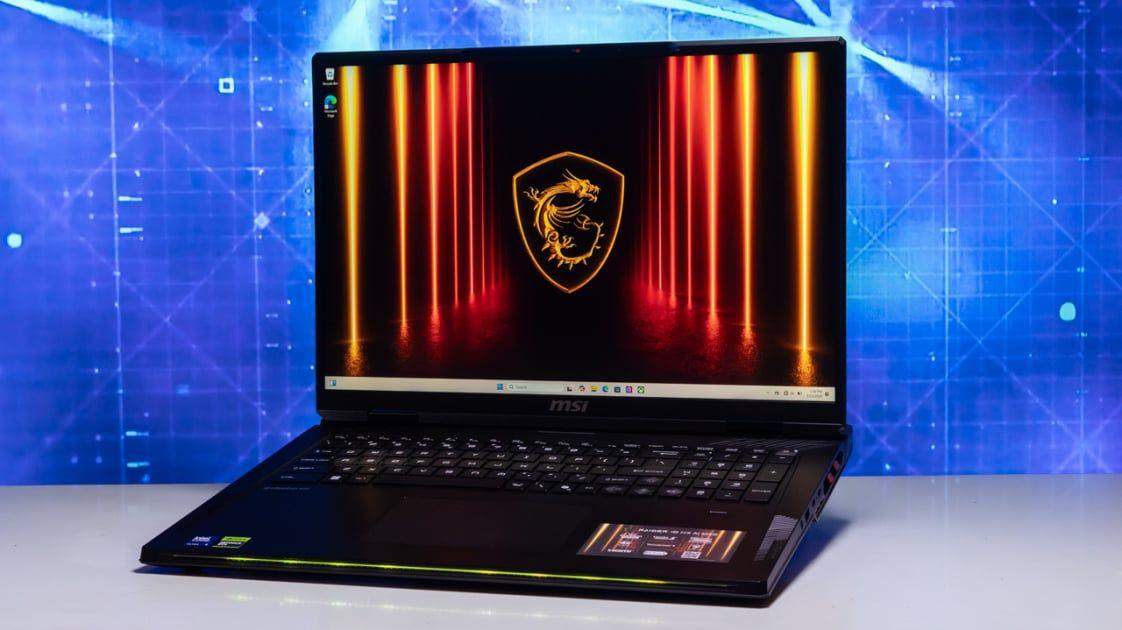

I tested an RTX 5090 gaming laptop -- it's a beast, but I can't ignore this problem

You may remember that I was one of the lucky few who first got to try gaming on the RTX 5090 at CES 2025. I've got a lot of love for the desktop GPUs (though slightly less, given the price increases). But with Nvidia's new DLSS 4 trickery pulling off some real magic, Team Green is on the right path.
Now, RTX 5090 laptops are here, and just like before, I've been one of a few to play some games on them, namely on the MSI Raider 18 HX and the oh-so-eye-catching Titan 18 HX Dragon Edition Norse Myth. (Yes, I do love that artwork on the lid.) But while I'm almost as gobsmacked playing games on 5090 laptops as I was with the desktop counterpart, there is one thing that does give me pause. Let me tell you about it.
Allow me to introduce you to the RTX 5090 laptop GPU. Nvidia clearly understood the brief about where the RTX 4090 fell short compared with simply picking up the 4080, and this is an absolute monster. Based on the numbers, it falls largely in line with the difference you'd expect between desktop and laptop GPUs, with the notebook getting about a third of the power going to roughly half the number of cores. There are other Max-Q optimizations too, such as voltage-optimized GDDR7 video memory, low-latency sleep to shut down the GPU quickly when the lid is closed, accelerated frequency switching to adaptively optimize clock speeds, and Battery Boost for finding the right balance between CPU and GPU for stamina. But with all these test models plugged in, I wasn't able to really see what these did to performance and power efficiency when taken off the mains. I just went in on the frame rates here.
To test this, I fired up Cyberpunk 2077 and Indiana Jones and the Great Circle in a whole array of modes on the MSI Raider 18 HX AI, a monster of a portable machine packing all the latest internals, alongside a 4K 120Hz mini LED display and a hefty 64GB of DDR5 RAM.
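The "about a third of the power going to roughly half the number of cores" comparison is a pair of ratios. A quick sketch, assuming Nvidia's published specifications as we understand them (21,760 CUDA cores and 575W for the desktop RTX 5090; 10,496 CUDA cores and up to 175W, including Dynamic Boost, for the laptop part); these figures don't come from the article and are worth double-checking against Nvidia's spec pages:

```python
# Assumed spec figures (not from the article): verify against Nvidia's spec pages.
DESKTOP_RTX_5090 = {"cuda_cores": 21_760, "max_power_w": 575}
LAPTOP_RTX_5090 = {"cuda_cores": 10_496, "max_power_w": 175}  # top of range, incl. Dynamic Boost


def laptop_to_desktop(key: str) -> float:
    """Ratio of the laptop GPU's spec to the desktop GPU's spec for one key."""
    return LAPTOP_RTX_5090[key] / DESKTOP_RTX_5090[key]


power_share = laptop_to_desktop("max_power_w")
core_share = laptop_to_desktop("cuda_cores")
print(f"Power: {power_share:.0%} of the desktop card")  # roughly a third
print(f"Cores: {core_share:.0%} of the desktop card")   # roughly half
# Power budget per core relative to the desktop card; less power per core
# generally means the laptop chip runs its cores at lower clocks.
print(f"Power per core vs. desktop: {power_share / core_share:.2f}x")
```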
Of course, I went through all the usual motions of testing something like this, and I'll go in depth on what settings I used. But just so I don't have to keep repeating the resolution, each test was done in 4K.
Let's start with the raw rendering capability, with no AI trickery whatsoever. At Ultra settings with ray tracing, path tracing, the whole shebang, you're looking at an average of 28.7 FPS when combining the scores of Cyberpunk and Indiana Jones. Given that some dedicated desktop GPUs struggle with these games at these graphics settings while having twice the wattage pumped through them, that is a seriously impressive number to build on.
But that's only half the story, maybe even a smaller fraction than that, as Nvidia's architecture shows a clear intention to bet big on AI being the next big thing in game fidelity and smoothness. You can see it in the near-3X increase in total AI TOPS for the 5090. And how is Team Green using all that additional power? DLSS 4 and neural rendering techniques. While we wait for developers to get to work with the latter, the former is very much here, and the jump to anywhere between 130 and 150 FPS for a 4K Ultra-settings game is simply mesmerizing.
In the immediate aftermath of visiting MSI, I was still reeling. To see this from a laptop of all things is a powerful feat, and seeing how DLSS 4 has come along to the point where it produces only the tiniest moments of ghosting, with image bugs that don't distract whatsoever, makes it all the sweeter.
However, once I saw the $5,000-plus price tags these were going for (up to $500 more than the RTX 4090 models before them), the pause set in. Once you slap a gigantic price on something, you start to look at some of those elements in a different light. Instead of thinking "RTX 5090 is around 20% faster than 4090? Great," your mind flips it to "Oh, it's only 20% faster."
And at up to six stacks for the Titan 18 HX, that's a fair cop. Then you start to pore over the rest of the spec list, such as the 18-inch 4K mini LED panel you get in these models. MSI chose to stick with this panel, and it comes down to a weighing of priorities. In the company's testing, games were easily clearing the 4K 120FPS hurdle but not quite reaching 240FPS on a QHD display, which would leave a QHD 240Hz panel in a bit of a no man's land. So as logic goes, that makes sense (though it will be interesting to see how other laptop makers tackle this with their own QHD panels).
But my issue is with the need for 4K at all on an 18-inch screen. Don't get me wrong, you can see the slightly improved sharpness on a laptop screen at the bigger end of the scale. But 4K resolution really comes into its own on larger monitors. On a screen like this, it can be very much "blink and you miss it," to the point of needing to get up close to see the path-traced particles in a sunbeam.
And therein lies the nugget of the problem: overkill. If there's one thing this hugely versatile industry of PC gaming has given us, it's adaptability: being able to keep your game looking good and running smoothly whatever the device. Sure, Cyberpunk 2077 looks and plays incredibly on the Raider 18, as it should with that huge amount of video memory, massive bandwidth, and DLSS 4. But CD Projekt Red has made it so that I can have almost as much fun on my RTX 4070-armed Lenovo Legion Pro 7i, my HP Victus 15, or even my Steam Deck. I still get a good enough experience from all of these, and at a time when we're all having to be a lot more careful with our money, that matters.
If I were to sum this up with a totally scientifically accurate fun-to-price XY graph, breaking down the return on investment in the form of fun, things start good, but you'll see there is a diminishing return on fun as the price goes up.
Meanwhile, thanks to whatever machinations are happening here (be they tariff fears or scalping problems), RTX 50-series laptops show a massive price increase in exchange for a small bump in fun. In fact, it's at the RTX 5090 where the fun dips below the trend line. This may be a silly example, using data plucked out of the thin air of vibes, but it's a visualization of what has me torn. The performance is incredible, but I can't ignore the fact that for gamers who want to play AAA titles on the go for the next few years, there are wiser choices to be made (at least until these get cheaper).

[4]

RTX 5090 Laptop GPU performance: The frame-gen future has arrived

Nvidia's RTX 50-series is arriving on laptops this week, and we got a chance to spend some time with an RTX 5090 gaming laptop to see how the Blackwell architecture handles on mobile. While the GPU's pure silicon performance is solid across the board, Nvidia's big push with the Blackwell mobile GPUs comes down to supersampling and AI frame generation, which can help smooth performance. This is especially helpful with games that are poorly optimized and highly demanding, like Black Myth: Wukong, Cyberpunk 2077, and Monster Hunter Wilds. Nvidia has also upgraded its power-efficiency tools with an updated Battery Boost system that offers up to 40% better battery life while gaming on the discrete RTX 50-series GPU. But is all that worth the RTX 5090 price tag? Let's take a look.
In our hardware testing lab, we benchmark GPUs on laptops and desktops with no software assists enabled: Supersampling, frame generation, and vertical sync are all toggled off, and we run games at high graphics settings at both 1080p and the native resolution. As we expected, on pure silicon power, the RTX 5090 struggled to surpass the 60-fps mark in some of the more demanding games. Our RTX 5090 laptop managed just 58 frames per second in Black Myth: Wukong on the Cinematic preset at 1080p, and 44 fps at its native 1600p resolution. Older and better-optimized titles like DiRT 5 and Grand Theft Auto V were well above 120 fps, hitting 170 fps and 165 fps at 1080p, respectively.
While most gamers won't upgrade their hardware every year, we did look at gen-to-gen performance just to see the pure power upgrade of the RTX 5090. We compared our 5090 laptop to the previous generation of the same model, since both featured 32GB of RAM, a 2TB SSD, and a 240Hz, 2560 x 1600 OLED display. The key differences are the GPUs and CPUs, though the 50-series version is also in a slimmer chassis.
Our RTX 5090 laptop is powered by an AMD Ryzen AI 9 HX 370 processor, while our RTX 4090 laptop used an Intel Core i9-14900HX. In most cases, the RTX 5090 outperformed the 4090, but in a few cases the non-gaming CPU and slimmed-down chassis of the RTX 5090 laptop left it trailing the previous generation. Assassin's Creed Mirage and Far Cry 6 were the most notable exceptions, with the RTX 4090 laptop outperforming its newer counterpart by up to 15 fps.

As a gamer, I tend to be skeptical of graphics upscaling and AI-generated frames. Frame generation is a client-side performance boost that can be helpful in some single-player games, especially if you have low system latency, and Nvidia's Reflex Low Latency can help smooth out the frame-gen experience. However, because it's a client-side AI feature, frame generation can be problematic for competitive multiplayer gaming. Graphics upscaling technology like DLSS 4, the fourth generation of Nvidia's deep learning super sampling tech, uses AI to increase performance and enhance visuals, but it may give you less graphic detail than playing without DLSS enabled.

For years now, Nvidia has invested a lot of time in the software side of its GPU architecture. After all, Nvidia's Jensen Huang is a known believer in the death of Moore's Law. Technologies like DLSS 4 and frame generation are intended to extend the performance of hardware past the physical limits of pure silicon. So for this Blackwell mobile deep-dive, I spent a solid amount of my game time testing Nvidia's DLSS and frame-generation tech on the RTX 5090.

With DLSS and frame generation, I saw massive performance improvements in Cyberpunk 2077 and Monster Hunter Wilds. Cyberpunk supports the latest version of DLSS 4 and thus saw the greatest improvement with frame generation enabled, scaling from 72 fps to 290 fps on RTX Medium settings at 1080p.
Monster Hunter supports only DLSS 3.7, and saw a jump from 71 fps to 148 fps with DLSS 3 and frame generation enabled. But benchmarks aren't everything. I also spent some time running around Night City and hunting monsters in the Forbidden Lands. While DLSS gaming can occasionally be clunky, both games were pretty darn smooth with DLSS and frame-gen enabled. I did have the occasional stutter in Monster Hunter Wilds, but that may have come from network fluctuations when answering SOS signal flares and entering my squad lobby rather than from the frame generation tech.

I had no trouble at all with Cyberpunk 2077. DLSS 4 offered smooth frame rates even when set to Quality, and easily hit 200 fps with DLSS 4 set to Balanced and frame-gen enabled. I also didn't notice much graphical degradation in Cyberpunk 2077 between running the game with DLSS off and with either DLSS 4 model, especially with ray reconstruction on for the ray-traced reflections, light diffusion, and shadows.

While Avowed doesn't have a benchmarking tool, I did run around and note my average frame rates on a fresh character. With the graphics set to Epic and the resolution at 1600p, I got frame rates in the 42 to 44 fps range. With DLSS 4 set to Quality, my frame rates jumped to 62 to 72 fps, and adding frame generation brought peaks of 110 to 120 fps.

Gaming laptops have poor battery life. That's just facts. Discrete GPUs are power-hungry pieces of hardware, and that's always going to come at the expense of power efficiency. With the RTX 50-series mobile GPUs, Nvidia revamped its Battery Boost optimization. The system has pre-optimized settings for the games installed on the laptop, aimed at giving you the best possible balance of performance and battery longevity. To enable the Battery Boost settings, disconnect the laptop from AC power and then launch the game you want to play.
This should automatically boot into the Battery Boost preset, though you can also adjust these settings in the Nvidia app or in the game settings. Nvidia's Battery Boost is designed to lower performance during idle times or when watching pre-rendered cutscenes, so I figured it was ideal for an MMORPG like Final Fantasy XIV: Dawntrail. I launched FFXIV at 1600p on the Nvidia Battery Boost optimized presets, which enabled FSR (because DLSS optimization in Final Fantasy XIV is notoriously terrible). My frame rates dropped to 30 fps during idle wait times and kicked up to 50 to 60 fps during gameplay. The transition wasn't always smooth, but it was playable. About an hour of my daily roulettes took me from 100% battery down to 45%, and I got about 2 hours of total game time. This isn't fantastic battery life, and some handheld gaming PCs do better while gaming, but it's a lot better than what we'd seen previously, when gaming laptops really couldn't game on battery power.

As a former lab tester and hardware enthusiast, I'm still selective about which games I play with supersampling and frame-gen tech enabled. But most of the games that are notoriously difficult benchmarks are single-player, and in that case, I think it's worth using the AI upscaling. I wouldn't recommend using DLSS 4 with the latest frame-generation technology on a MOBA like Smite. It can be helpful for Monster Hunter Wilds, but if you have an older Nvidia GPU, you may be better off using AMD's FSR (FidelityFX Super Resolution) instead of Nvidia's DLSS model.

That said, AI gaming software like DLSS and frame-gen is only getting better and smoother, and it can get you absolutely wild performance in a thin and lightweight gaming laptop. The RTX 5090's raw performance is, overall, better than the RTX 4090's in most cases, and there will be more powerful iterations of the GPU in other laptops soon. But access to the enhanced battery efficiency and software upscaling tech is nothing to scoff at. For all of my grouchiness about AI upscaling, DLSS has gotten better and better over the years and is a solid solution for many games. It can also help mobile GPUs keep up with desktop-level performance.
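Neither hands-on documents how Battery Boost decides what counts as low action. The review cited as [5] describes a 60 fps target on battery that drops to 30 fps in "scenes of low action", and the FFXIV run above showed the same split between idle time and gameplay. The Python sketch below models only that policy and the per-frame time budget each target implies; the `activity_score` input and its 0.2 threshold are hypothetical stand-ins for whatever signal Nvidia's scene-aware algorithm actually uses.

```python
# Sketch of a scene-aware frame-rate cap, modelled on the behaviour described
# for Battery Boost. The activity score and threshold are hypothetical.

BATTERY_TARGET_FPS = 60     # on-battery target described in [5]
LOW_ACTION_TARGET_FPS = 30  # target for "scenes of low action"


def frame_budget_ms(target_fps: int) -> float:
    """Milliseconds available to produce each frame at a given fps target."""
    return 1000.0 / target_fps


def pick_target_fps(activity_score: float, threshold: float = 0.2) -> int:
    """Choose a frame-rate target from a hypothetical 0-1 activity score.

    Scores below the threshold (map, dialogue, or inventory screens) are
    treated as low action and capped at 30 fps; everything else gets 60 fps.
    """
    if not 0.0 <= activity_score <= 1.0:
        raise ValueError("activity_score must be between 0 and 1")
    return LOW_ACTION_TARGET_FPS if activity_score < threshold else BATTERY_TARGET_FPS


if __name__ == "__main__":
    for scene, score in [("combat", 0.8), ("dialogue", 0.05), ("map screen", 0.0)]:
        fps = pick_target_fps(score)
        print(f"{scene}: {fps} fps target, {frame_budget_ms(fps):.1f} ms per frame")
```

A threshold rule like this would also explain the Football Manager complaint in [5]: a game whose screens rarely register as high activity never crosses the threshold, so it stays pinned at 30 fps.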

[5]

Nvidia RTX 5090 mobile tested: The needle hasn't moved on performance but this is the first time I'd consider ditching my desktop for a gaming laptop

With Nvidia's focus on efficiency over raw performance gains there will be pushback, but this is the mobile gaming experience I've been after. Nvidia has released the largest part of its RTX Blackwell desktop graphics card range now, so isn't it about time we had a swell of brand-new gaming laptops replete with RTX 50-series mobile chips? I think so, too, and so does Nvidia, which is why we're about to see a glut of new notebooks with shiny new GPUs and Intel and AMD's latest CPUs.

I think it's fair to say this year's launch of the RTX Blackwell architecture has been a mixed success, and that mix is heavily weighted toward the outright failures of the new GPU launches. On the one hand, we've got a super-efficient GPU architecture that brings neural shaders to consumer GPUs for the first time and offers ludicrous frame rates in Multi Frame Generation-compatible games. On the other, this generation has brought more reports of melting cables, black-screen issues, crashing PCs, and incomplete GPUs missing ROPs, and that's before mentioning the ephemeral MSRPs, frankly offensive retail pricing, and a dearth of GPU stock in the wild.

Here's hoping the launch of the mobile versions of the RTX 50-series chips is more successful. By rights, it ought to be -- there are no cables or adapters to mess around with, and the GPUs are built into finished systems that will have been through (hopefully) rigorous stability testing. So it should all just work together from the get-go, rather than having to find a fit within existing gaming PCs with myriad specs and different generations of mixed-and-matched hardware. What the mobile side of things can't necessarily deal with are pricing and stock issues. While Nvidia has given out an MSRP of sorts for notebooks featuring its different chips, there will undoubtedly be a new-generation tax tacked onto the gaming laptops released this month.
But will they be as egregious as the desktop add-in card market? An RTX 5090 laptop isn't going to be cheap, but laptop manufacturers have a certain set of price points that they rarely step away from, at least outside of sales.

So, what makes the mobile version of the RTX Blackwell tech tick, where does it differ, and how does it compare to the previous generation? Well, it's going to be an interesting one, because in terms of raw performance not a lot has really changed, but in terms of the actual experience, it's so much better than Ada.

At its heart, this is effectively the same GPU architecture as in the desktop graphics cards we've already seen. That means a graphics architecture that is more or less a refinement of the Ada Lovelace architecture from the RTX 40-series GPUs, with some extra features to bolster the Neural Rendering era we're now living in. But some parts of the design that existed in the desktop chips are more relevant for the mobile parts. Efficiency is the most important thing for any kind of mobile chip, and most especially for a discrete laptop GPU, which takes up the lion's share of any system's power budget. So that's where I'm going to start.

Nvidia calls it Advanced Power Gating, and it features in the desktop GPUs, too. It essentially allows individual parts of the chip to sleep when they're not being used, giving very fine-grained power control across the entire GPU. It can also shut these parts down very quickly, so idle periods that would traditionally have been too short to exploit, and so treated as active time, can now be used to save power. It's not just the GPU core itself, either: Nvidia has leaned into the memory system to drive power savings, too.
There are separate power rails for the GPU and the memory within RTX Blackwell, which gives more independent voltage control across the entire chip and means sections of the GPU can be shut down even for very short periods of idle time. Another feature of the desktop GPUs that makes more sense in the mobile iteration is the speed at which RTX Blackwell can switch between clock frequencies. Nvidia claims this switching is some 1000x faster with the new architecture, though honestly, that's not something I can verify. It says this speed of adjustment allows clock speeds to vary throughout the creation of a single game frame, rather than being locked at a certain level for the duration. That frame time may be less than 10 ms, but in that time many different parts of the GPU are used to create the final image, especially in these AI-accelerated times of ours. It should mean each part of the GPU can perform at its fullest, but also at its most efficient, hopefully saving you power while gaming on battery.

To aid that, Nvidia has made some changes to its BatteryBoost technology. Where once it was limited to 30 fps, it now uses in-game settings optimised via the Nvidia App with the aim of hitting 60 fps on battery. It also has a "scene-aware algorithm" that lowers this target to 30 fps in "scenes of low action", such as map screens, dialogue screens, or skill trees. Though for Football Manager players such as myself, it does currently seem to think the entire game is a scene of low action...

Those are the key technical features for laptops, but I would add that the expansion of Nvidia's Frame Generation to Multi Frame Generation (MFG) can also make a significant impact on laptop gaming, especially for a high-end GPU such as the RTX 5090.
It's the main selling point right now for the desktop RTX 50-series, and given how small the performance delta between Ada and Blackwell chips is on mobile, that's going to be even more the case for laptop frame rates. By way of a refresher, it's exactly what it says on the box: frame gen with multiple frames, up to 4x the traditionally rendered frames. But it's a little more complicated than the Lossless Scaling version of MFG; the enhanced Flip Metering hardware in the display engine of RTX Blackwell cards gives it twice the pixel processing capability and takes over from the CPU the job of pacing those extra frames smoothly. Yes, it's still interpolation -- the card renders two frames and then jams up to three extra frames in between -- but it uses a new AI model instead of the optical flow hardware of the RTX 40-series. This is what allows it to do the frame gen dance up to 40% faster (hence being able to create those extra frames), with a 30% reduction in the feature's VRAM demands.

The 5th Gen Tensor Cores have also been given more power to deal with the added load of MFG and the DLSS Transformer models (replacing the older Convolutional Neural Network method), and the AI Management Processor added to the silicon mix means this architecture can handle the extra AI processing expected to be part of graphics pipelines over the next few years. That is expected to be the future of game graphics, which is why the programmable shaders of the RTX Blackwell architecture are now called Neural Shaders. These now have far more direct access to a chip's Tensor Cores, without having to go via CUDA, and should give developers a whole heap of new toys, such as Mega Geometry, Neural Materials, and Neural Radiance Cache.
That last one is playable in the HL2: Remix demo, but for a more detailed rundown of these future technologies before they appear in any game we can stick on our laptops, our Nick checked out the Zorah demo in detail over at GDC.

As you would expect, the mobile and desktop GPUs don't translate 100% between platforms. There is no GB202 mobile chip -- last seen in the RTX 5090 desktop card -- so the top mobile chip uses the same GB203 GPU as the desktop RTX 5080. Even so, the mobile RTX 5090 has fewer CUDA cores than the desktop RTX 5080 built on that same GPU. Given this is a 150 W chip versus a 360 W desktop GPU, however, I think that's probably fair. It is at least a more powerful chip than the old RTX 4090, with another six SMs' worth of CUDA cores to call on (and therefore more Tensor and RT Cores, too). Thanks to GDDR7 memory, it also has a ton more memory bandwidth across its 256-bit bus: 896 GB/s for the RTX 5090 versus 576 GB/s for the RTX 4090, a 56% increase. It also has a lot more VRAM to call on, with a full 24 GB, the largest capacity of any consumer laptop GPU.

The RTX 5080 gets its own VRAM bump, up to 16 GB from the 12 GB of the RTX 4080 mobile, and has the same 256-bit bus as the RTX 5090. It is only a modest upgrade in terms of CUDA core count, though, with just another 2 SMs' worth of cores added to the mix. The RTX 5070 Ti has no direct match from the previous generation, and it honestly feels like Nvidia could have made a bit of a stir by designating it the straight RTX 5070, giving it near parity with the desktop RTX 5070. That would have felt like a tangible mid-range upgrade for the volume sector of the market and a real generational boost. But no, it's the RTX 5070 Ti, it has fewer cores than the desktop RTX 5070 (not a positively reviewed GPU), and it comes with 12 GB of GDDR7 on a 192-bit bus.
That leaves the mobile RTX 5070 looking for all the world like it really should have been the new RTX 5060, and leaves us all feeling a little sad that AMD isn't prepared to drop RDNA 4 GPUs into the laptop market to give us some much-needed competition.

I've only got the RTX 5090 mobile chip to play with today, and it paints a very similar picture to the previous generation in terms of performance. Given that, unless something catastrophic happens with RTX 5080 frame rates, it's possible that, yet again, the lower-spec Nvidia GPU might perform at the same level as the more constrained RTX 5090. It is a beefy GPU, but in the Razer laptop I've been testing, it's obviously not running at the same performance levels you will see the RTX 5090 hit in larger laptops and with something like the upcoming AMD X3D mobile CPUs. I castigated the mobile Ada lineup for this kind of similarity in performance in the last generation -- after all, why pay top dollar for the high-end laptop GPU when the next tier down is often just as capable?

For anyone who hasn't spent the past few weeks messing around with this seriously impressive gaming laptop, as I have, this is going to be hard to hear: with this generation of GPUs, it's the gaming experience that is really the big differentiator between the Blackwell and Ada lineups, not the overall performance. Frame rates, honestly, have been largely the same across the RTX 4090 and RTX 5090 gaming laptops I've been testing against each other. There may be more of a difference in other machines with an RTX 5090 inside, because the Lenovo Legion 9 comes with a higher-power Intel CPU that really makes a difference in some games, especially at 1080p.
But essentially, creator, AI, and Multi Frame Gen performance aside, Nvidia hasn't really moved the needle on the frame rates you can expect from its top mobile chips, and that's likely to cause a certain level of consternation and accusations of sandbagging. The difference, however, is that I would actually enjoy using the RTX 5090 as my main machine.

I've been using the RTX 4090-powered Lenovo as my mobile FC25 machine when I head over to my friend's place for an evening of virtual co-op kickball. But while it's more than capable of driving high frame rates on a 4K OLED TV, the noise it kicks out is abhorrent. The fan noise needed to keep an RTX 4090 running at the same level as the RTX 5090 is a distraction at best and an aural assault at worst. With the RTX 5090, however, it's far more restrained. Don't get me wrong, the fan noise is still noticeable, but it's nowhere near the same level. And such is the latent performance in the RTX Blackwell chip that I was happily running the Blade 16 in Silent mode (where it is much, much quieter, even if it's not exactly silent) and still getting great gaming performance out of it.

Which is also where Multi Frame Gen really makes a difference on the laptop scene. On your desktop you can throw MFG on an RTX 5080 or RTX 5090 and marvel at ludicrous triple-figure frame rates. But on the laptop side, you can use that extra frame rate legroom to dial back your system settings, chilling out the cooling or even the battery drain, and still game at excellent average frame rates without the sonically offensive fan-based jump scares. Ah yes, I mentioned battery drain, didn't I. This is the first gaming laptop I've used that I have happily gamed on without it plugged into the mains. And not just for some 40 minutes. That sounds like an exaggeration, but the RTX 4090 Lenovo will literally only run for 41 minutes when gaming if you're not attached to a socket.
The Blade 16 here, with a slightly smaller battery, will go on for over two hours. And it's not the rubbish experience laptop gaming has traditionally been on battery, either. This is a genuine feature of RTX Blackwell, something that honestly wasn't really possible with older gaming laptop GPUs. With the Blade 16 and its 90 Wh battery, the battery performance is markedly different. I'm seeing well over two hours of game time in the PCMark 10 gaming battery life test, which tallies perfectly with Nvidia's claims of 40% better battery life. My previous Blade 16 test runs, with an RTX 4090 inside, managed just over 90 minutes with a 99 Wh battery, so all told this is an improvement of over 43%. All of the efficiency improvements have absolutely borne fruit when it comes to getting legitimate gaming time away from a power source.

And it's not just a case of the BatteryBoost feature cratering a game's graphics settings to lighten the load -- even with BatteryBoost disabled I'm still seeing long battery life. That said, if you let the feature optimise your in-game settings via the Nvidia App, it will absolutely destroy them. For me, it's too aggressive, but it's not something you need to follow religiously. You still get the scene-aware algorithm, which drops performance to 30 fps in non-action scenes. Playing Kingdom Come Deliverance 2 on battery for a while, it was clear that it was accurately detecting the dialogue and inventory screens, quickly dropping to 30 fps in those scenes and just as quickly going back up to 60 fps. It's not perfect, though, certainly not for me, a Football Manager obsessive. I need my laptop to be able to run FM happily on battery for a good long while, something most gaming laptops aren't equipped to do.
But I can't run the RTX 5090 with BatteryBoost enabled, as even in the match highlights it doesn't deem anything on-screen action-y enough to warrant the 60 fps treatment, constantly restricting me to 30 fps no matter what. Luckily the Blade 16 has a decent integrated GPU, so I could shut the RTX 5090 down entirely when continuing my current long-term FM save. Still, I am seriously impressed with the battery life and gaming experience when playing away from the plug. This is the first gaming laptop, and especially the first high-performance one, that actually delivers on the promise of truly mobile gaming for an extended period of time. Sure, it ain't going to get you across the Atlantic on a flight, but neither will playing KCD2 on your ROG Ally X.

I can certainly see there being a rather negative response to the launch of Nvidia's new gaming laptop GPUs. There was certainly consternation on the desktop side, where gen-on-gen performance was only moderately ahead of the previous chips, but on the mobile side it looks like all you have to call on for higher frame rates now is MFG, and only when it's available in the game you want to play. If we were judging on frame rates alone, that would make the RTX Blackwell generation a bit of a bust. But even more than on desktop, gaming on a laptop is about the actual experience of using the device rather than the raw frames-per-second count. And this is where the extra efficiency of RTX Blackwell comes in, allowing for the same level of performance without the brutal aural assault of excessive fan noise. No longer will we have to recommend a good gaming headset alongside your gaming laptop purchase. And gaming on battery is truly a thing now, as is using something like the Blade with an RTX 5090 inside as your daily driver. It can come in a svelte chassis and still deliver long battery life whether gaming or just doing your daily work away from a plug.
It really is the first gaming laptop I've seen that would actually have me consider dropping my desktop. Plugged into a monitor, it's a great gaming machine; away from the plug, it's a stellar laptop setup. Well, it would be if it weren't for pricing. The pricing of RTX 5090 laptops is likely to remain around the $4,000 mark for a long while -- especially for one with the style of the Blade -- despite Nvidia's claims of $2,900 starting prices for RTX 5090 notebooks. In the end, that sort of money would still have me running to see what sort of desktop rig I could build, but if you're after a top-end laptop that can do everything from creative work, to daily work, to gaming fun times, this generation is going to deliver.
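The memory-bandwidth figures in the spec comparison above follow from a standard formula: bus width in bits times the per-pin data rate in Gbit/s, divided by 8 bits per byte. The Python sketch below inverts that formula to recover the per-pin rates implied by 576 GB/s and 896 GB/s on a 256-bit bus (derived here, not quoted in the review), and checks the 56% figure. It assumes both the RTX 4090 and RTX 5090 laptop GPUs use a 256-bit bus.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def implied_data_rate_gbps(bandwidth: float, bus_width_bits: int) -> float:
    """Invert the bandwidth formula to recover the per-pin data rate in Gbit/s."""
    return bandwidth * 8 / bus_width_bits


BUS_WIDTH_BITS = 256  # assumed for both laptop GPUs
RTX_4090_LAPTOP_GB_S = 576
RTX_5090_LAPTOP_GB_S = 896

print(implied_data_rate_gbps(RTX_4090_LAPTOP_GB_S, BUS_WIDTH_BITS))  # 18.0
print(implied_data_rate_gbps(RTX_5090_LAPTOP_GB_S, BUS_WIDTH_BITS))  # 28.0
gain = RTX_5090_LAPTOP_GB_S / RTX_4090_LAPTOP_GB_S - 1
print(f"{gain:.1%} more bandwidth")  # 55.6% more bandwidth
```

The review's 56% is this 55.6% rounded; with the bus width unchanged, the whole gain comes from the faster per-pin data rate.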


Nvidia's new RTX 5090 mobile GPU brings impressive performance and AI-enhanced features to gaming laptops, but at a steep price point.

Powerful Performance with AI Enhancements

Nvidia's RTX 5090 mobile GPU, based on the new Blackwell architecture, has arrived in high-end gaming laptops, promising significant performance improvements over its predecessors [1]. Early tests show the GPU delivering impressive frame rates, especially when paired with Nvidia's latest AI-powered technologies like DLSS 4 and frame generation [2][3].

In raw performance tests without AI enhancements, the RTX 5090 mobile GPU showed solid improvements over the previous generation in most games, though some saw only modest gains, and in a few titles, such as Assassin's Creed Mirage and Far Cry 6, an RTX 4090 laptop was actually faster [4]. However, the real leap comes when using DLSS 4 and frame generation, with some games seeing frame rates jump from around 70 fps to over 200 fps at high settings [3].

AI-Driven Features Take Center Stage

The Blackwell architecture heavily emphasizes AI capabilities, with a nearly 3x increase in AI TOPS (trillions of operations per second) compared to the previous generation [2]. This focus enables advanced features like:

- DLSS 4: The latest version of Nvidia's AI upscaling technology, which can significantly boost frame rates while maintaining image quality [3][4].
- Frame Generation: AI-powered technology that can generate additional frames, further increasing perceived smoothness [2][3].
- Neural Rendering: New techniques that leverage AI to enhance graphics quality and performance [1][2].
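Interpolation-based frame generation, as described in [5], can be pictured as a presentation-order problem: between each pair of rendered frames, the GPU slots in one generated frame (2x) or up to three (4x with Multi Frame Generation), and the later frame of the pair must already be rendered before the in-between frames can be built. The Python sketch below is a toy model of that ordering and of the resulting output rate only; the function names are hypothetical, and it says nothing about how Nvidia's AI model or Flip Metering hardware actually work.

```python
def presentation_order(rendered: list[str], factor: int) -> list[str]:
    """Interleave generated frames between consecutive rendered frames.

    factor is the output multiple: 1 = no generation, 2 = one generated frame
    per rendered pair, 4 = three generated frames (MFG's "up to 4x").
    """
    if not 1 <= factor <= 4:
        raise ValueError("factor must be between 1 and 4")
    shown: list[str] = []
    for prev, nxt in zip(rendered, rendered[1:]):
        shown.append(prev)
        # Interpolated frames depend on both neighbours, so they can only be
        # generated once `nxt` has finished rendering; waiting for it is why
        # frame generation adds latency.
        shown.extend(f"gen({prev}->{nxt}, {i}/{factor})" for i in range(1, factor))
    if rendered:
        shown.append(rendered[-1])
    return shown


def output_fps(rendered_fps: float, factor: int) -> float:
    """Steady-state displayed frame rate for a given generation factor."""
    return rendered_fps * factor


print(presentation_order(["F0", "F1", "F2"], 4))
print(output_fps(60, 4))  # 240
```

Note that only one in every `factor` displayed frames comes from the game itself, which is why the reviews treat frame generation as a smoothness aid rather than a responsiveness gain.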

Efficiency Improvements for Mobile Gaming

Nvidia has also made strides in power efficiency, crucial for laptop performance:

- Advanced Power Gating: Allows fine-grained control over which parts of the GPU are active, with separate power rails for the GPU and memory, improving efficiency [5].
- Faster Clock Speed Switching: Nvidia claims clock changes are some 1000x faster, letting frequencies adjust within a single frame [5].
- Updated Battery Boost: Aims to deliver 60 fps gaming on battery power when possible, dropping to 30 fps in low-action scenes [5].
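Power gating only pays off when a block stays idle long enough to recoup the energy spent switching it off and back on. The Python sketch below uses a generic textbook break-even model, not Nvidia's implementation, and every number in it is a made-up placeholder. It illustrates the point made in [5]: if gating transitions become cheaper and faster, much shorter idle windows become worth exploiting.

```python
from dataclasses import dataclass


@dataclass
class GatingModel:
    """Generic power-gating break-even model (illustrative, not Nvidia's design).

    leakage_mw: power the idle block would leak if left on, in milliwatts
    transition_energy_uj: energy to switch the block off and on again, in microjoules
    wake_latency_us: time to bring the block back up, in microseconds
    """

    leakage_mw: float
    transition_energy_uj: float
    wake_latency_us: float

    def break_even_us(self) -> float:
        # Idle time at which leakage saved equals the transition cost.
        # mW x us = nJ, so convert uJ to nJ (x1000) before dividing by mW.
        return self.transition_energy_uj * 1000 / self.leakage_mw

    def should_gate(self, predicted_idle_us: float) -> bool:
        # Gate only if the idle window beats both the energy break-even point
        # and the time needed to wake the block up again.
        return predicted_idle_us > max(self.break_even_us(), self.wake_latency_us)


# Hypothetical parameters: "fast" stands in for a design with cheaper, quicker gating.
slow = GatingModel(leakage_mw=50, transition_energy_uj=5, wake_latency_us=20)
fast = GatingModel(leakage_mw=50, transition_energy_uj=0.5, wake_latency_us=2)

idle_window_us = 30
print(slow.break_even_us(), fast.break_even_us())  # 100.0 10.0
print(slow.should_gate(idle_window_us), fast.should_gate(idle_window_us))  # False True
```

The same 30 µs gap is wasted by the slow design but saved by the fast one, which is the effect the review describes as turning formerly "active" idle time into power savings.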


High Price Tag and Potential Drawbacks

While the performance gains are impressive, the RTX 5090 mobile GPU comes with some caveats:

- Extremely High Cost: Laptops featuring the RTX 5090 are priced at $4,000 to $5,000+, representing a significant price increase over previous generations [2][3][4].
- Heat and Noise: Some reviewers noted that the powerful GPU can lead to high temperatures and loud fan noise during intense gaming sessions [2].
- Battery Life: Despite efficiency improvements, battery life remains a challenge for these high-performance laptops [2][3].
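The battery comparison in [5] mixes two variables: the RTX 5090 Blade 16 has a 90 Wh battery, while the earlier RTX 4090 Blade 16 had 99 Wh. The Python sketch below computes the gain two ways, raw runtime and runtime per watt-hour. The review gives only bounds ("just over 90 minutes", "well over two hours") and doesn't say how it reached its 43% figure, so the inputs are round lower bounds, not measured results.

```python
def runtime_gain(new_min: float, old_min: float) -> float:
    """Fractional improvement in raw battery runtime."""
    return new_min / old_min - 1


def per_wh_gain(new_min: float, new_wh: float, old_min: float, old_wh: float) -> float:
    """Fractional improvement in gaming minutes per watt-hour of battery capacity."""
    return (new_min / new_wh) / (old_min / old_wh) - 1


# Round lower bounds taken from the text, not exact test results:
#   RTX 5090 Blade 16: "well over two hours" on a 90 Wh battery
#   RTX 4090 Blade 16: "just over 90 minutes" on a 99 Wh battery
print(f"raw runtime gain: {runtime_gain(120, 90):.0%}")        # 33%
print(f"per-Wh gain:      {per_wh_gain(120, 90, 90, 99):.0%}")  # 47%
```

With these floor values, raw runtime alone shows about 33%; a 43% raw-runtime gain over 90 minutes would mean roughly 129 minutes, which still fits "well over two hours". Normalizing for the smaller battery pushes the gain higher still.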

Industry Impact and Future Outlook

The release of the RTX 5090 mobile GPU signals Nvidia's continued push towards AI-enhanced gaming experiences. While the raw performance gains may be incremental, the combination of traditional rendering with AI upscaling and frame generation appears to be the future direction for high-end gaming graphics [1][5].

This launch also highlights the growing importance of software optimizations and AI technologies in the gaming industry, as GPU manufacturers look beyond pure hardware improvements to deliver better gaming experiences [4][5].

References

Summarized by Navi

