NVIDIA RTX delivers 3x faster AI video generation and 35% boost for language models on PC

2 Sources


[1]

NVIDIA RTX Accelerates 4K AI Video Generation on PC With LTX-2 and ComfyUI Upgrades

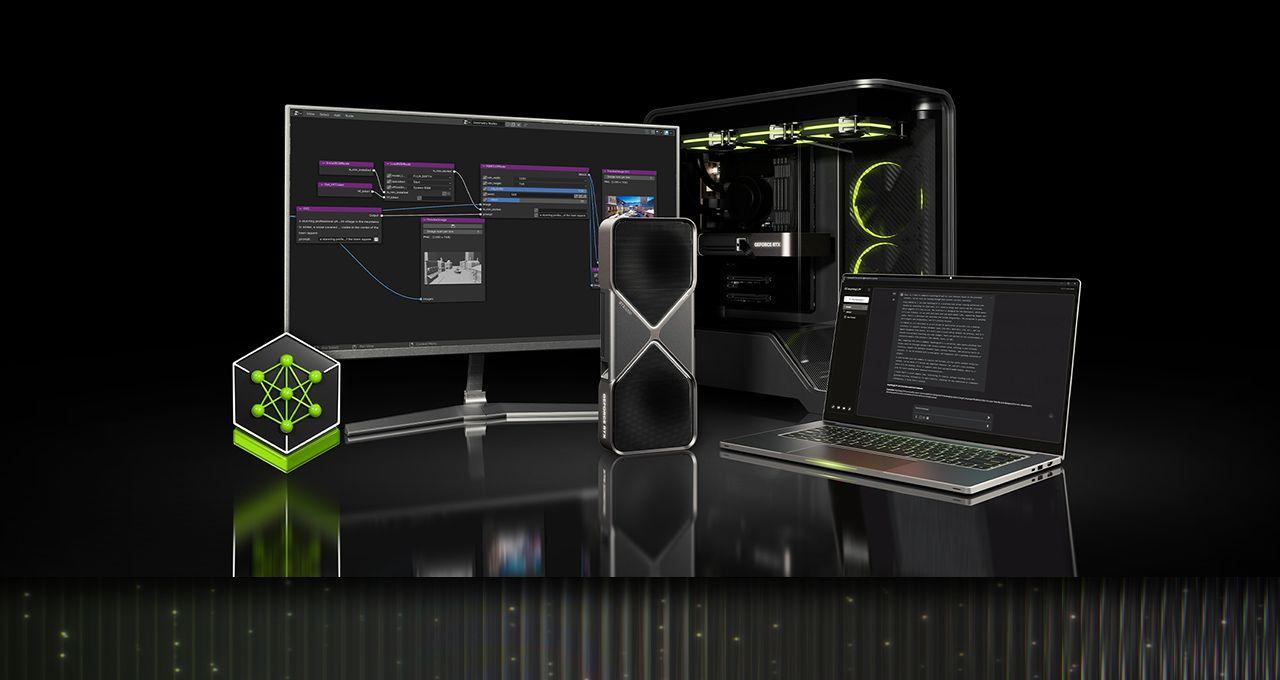

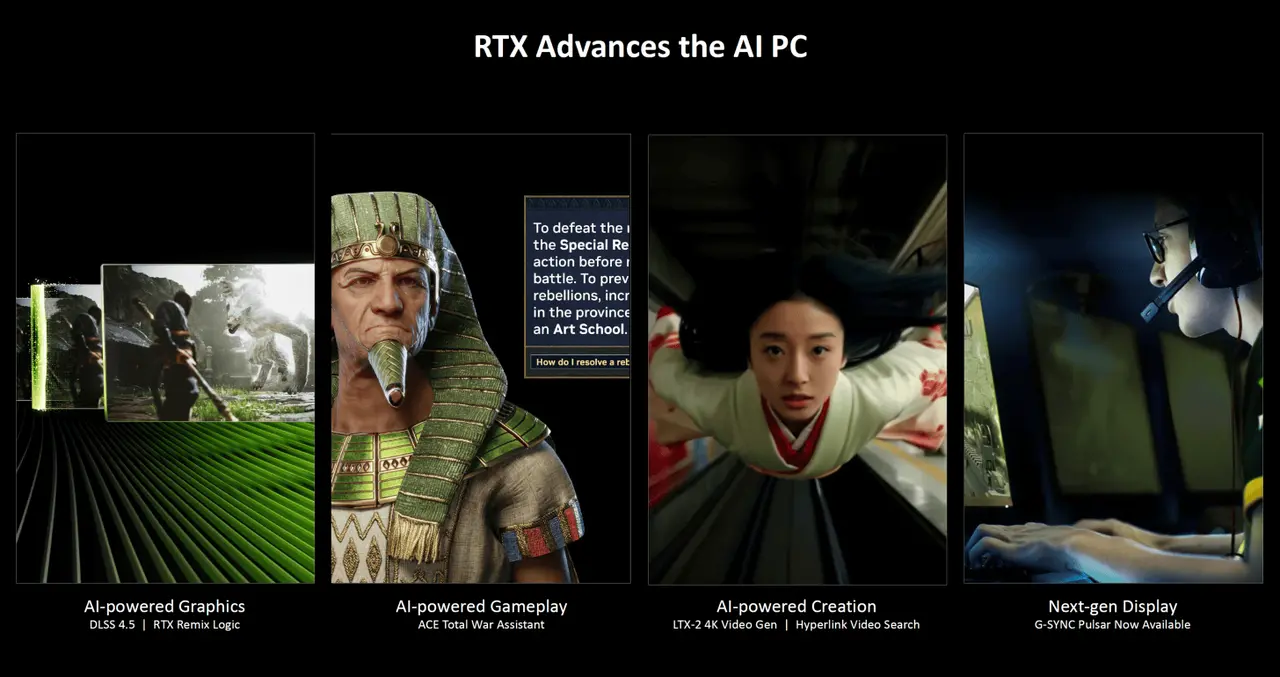

Major RTX accelerations across ComfyUI, LTX-2, llama.cpp, Ollama, Hyperlink and more unlock video, image and text generation use cases on AI PCs.

2025 marked a breakout year for AI development on PC. PC-class small language models (SLMs) improved accuracy by nearly 2x over 2024, dramatically closing the gap with frontier cloud-based large language models (LLMs). AI PC developer tools including Ollama, ComfyUI, llama.cpp and Unsloth have matured, their popularity has doubled year over year, and the number of users downloading PC-class models grew tenfold from 2024. These developments are paving the way for generative AI to gain widespread adoption among everyday PC creators, gamers and productivity users this year.

At CES this week, NVIDIA is announcing a wave of AI upgrades for GeForce RTX, NVIDIA RTX PRO and NVIDIA DGX Spark devices that unlock the performance and memory needed for developers to deploy generative AI on PC, including:

* Up to 3x faster performance and a 60% reduction in VRAM for video and image generative AI via PyTorch-CUDA optimizations and native NVFP4/FP8 precision support in ComfyUI.
* RTX Video Super Resolution integration in ComfyUI, accelerating 4K video generation.
* NVIDIA NVFP8 optimizations for the open weights release of Lightricks' state-of-the-art LTX-2 audio-video generation model.
* A new video generation pipeline for generating 4K AI video using a 3D scene in Blender to precisely control outputs.
* Up to 35% faster inference performance for SLMs via Ollama and llama.cpp.
* RTX acceleration for Nexa.ai Hyperlink's new video search capability.

These advancements will allow users to seamlessly run advanced video, image and language AI workflows with the privacy, security and low latency offered by local RTX AI PCs.

Generate Videos 3x Faster and in 4K on RTX PCs

Generative AI can make amazing videos, but online tools can be difficult to control with just prompts.
And generating 4K video is nearly impossible, as most models are too large to fit in PC VRAM. Today, NVIDIA is introducing an RTX-powered video generation pipeline that gives artists accurate control over their generations while generating videos 3x faster and upscaling them to 4K, using only a fraction of the VRAM.

This video pipeline allows emerging artists to create a storyboard, turn it into photorealistic keyframes and then turn these keyframes into a high-quality 4K video. The pipeline is split into three blueprints that artists can mix and match or modify to their needs:

* A 3D object generator that creates assets for scenes.
* A 3D-guided image generator that allows users to set their scene in Blender and generate photorealistic keyframes from it.
* A video generator that follows a user's start and end keyframes to animate their video, and uses NVIDIA RTX Video technology to upscale it to 4K.

This pipeline is made possible by the groundbreaking release of the new LTX-2 model from Lightricks, available for download today. A major milestone for local AI video creation, LTX-2 delivers results that stand toe-to-toe with leading cloud-based models while generating up to 20 seconds of 4K video with impressive visual fidelity. The model features built-in audio, multi-keyframe support and advanced conditioning capabilities enhanced with controllability low-rank adaptations (LoRAs), giving creators cinematic-level quality and control without relying on the cloud.

Under the hood, the pipeline is powered by ComfyUI. Over the past few months, NVIDIA has worked closely with ComfyUI to improve performance by 40% on NVIDIA GPUs, and the latest update adds support for the NVFP4 and NVFP8 data formats. All combined, performance is 3x faster and VRAM is reduced by 60% with the RTX 50 Series' NVFP4 format, and performance is 2x faster and VRAM is reduced by 40% with NVFP8.
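Most of these VRAM savings come from storing each weight in fewer bits. The following back-of-the-envelope Python sketch estimates weights-only storage. It assumes NVFP4 stores a 4-bit value per weight plus one 8-bit scale per 16-weight block (NVIDIA's published description of the format, ignoring the small per-tensor scale) and, as a labeled assumption, treats NVFP8 as a flat 8 bits per weight. The 12-billion-parameter model is hypothetical, and the results are weights-only estimates; the 60% and 40% figures above are end-to-end measurements, so they need not match.

```python
# Approximate storage cost per weight, in bits, for each precision format.
BITS_PER_WEIGHT = {
    "BF16": 16.0,
    # Assumption: 8 bits per weight; any scale-factor overhead is ignored.
    "NVFP8": 8.0,
    # 4-bit E2M1 value + one 8-bit scale shared by each 16-weight block.
    # The single per-tensor FP32 scale is negligible and ignored here.
    "NVFP4": 4.0 + 8.0 / 16,
}


def weight_gib(num_params: float, fmt: str) -> float:
    """Weights-only storage in GiB for a model stored in `fmt`."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 2**30


def reduction_vs_bf16(fmt: str) -> float:
    """Fraction of weight storage saved relative to BF16."""
    return 1.0 - BITS_PER_WEIGHT[fmt] / BITS_PER_WEIGHT["BF16"]


if __name__ == "__main__":
    params = 12e9  # hypothetical 12-billion-parameter model
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>5}: {weight_gib(params, fmt):5.1f} GiB, "
              f"{reduction_vs_bf16(fmt):.0%} smaller than BF16")
```

Under these assumptions, NVFP4 weights take about 6.3 GiB versus about 22.4 GiB in BF16, a roughly 72% saving for the weights alone.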
NVFP4 and NVFP8 checkpoints are now available for some of the top models directly in ComfyUI. These models include LTX-2 from Lightricks, FLUX.1 and FLUX.2 from Black Forest Labs, and Qwen-Image and Z-Image from Alibaba. Download them directly in ComfyUI, with additional model support coming soon.

Once a clip is generated, it is upscaled to 4K in just seconds using the new RTX Video node in ComfyUI. This upscaler works in real time, sharpens edges and cleans up compression artifacts for a clear final image. RTX Video will be available in ComfyUI next month.

To help users push beyond the limits of GPU memory, NVIDIA has collaborated with ComfyUI to improve its memory offload feature, known as weight streaming. With weight streaming enabled, ComfyUI can use system RAM when it runs out of VRAM, enabling larger models and more complex multistage node graphs on mid-range RTX GPUs.

The video generation workflow will be available for download next month, with the newly released open weights of the LTX-2 video model and the ComfyUI RTX updates available now.

A New Way to Search PC Files and Videos

File searching on PCs has been the same for decades. It still mostly relies on file names and spotty metadata, which makes tracking down that one document from last year far harder than it should be.

Hyperlink, Nexa.ai's local search agent, turns RTX PCs into a searchable knowledge base that can answer questions in natural language with inline citations. It can scan and index documents, slides, PDFs and images, so searches can be driven by ideas and content instead of file-name guesswork. All data is processed locally and stays on the user's PC for privacy and security. Plus, it's RTX-accelerated: on an RTX 5090 GPU, it takes 30 seconds per gigabyte to index text and image files and three seconds to return a response, compared with an hour per gigabyte to index files and 90 seconds per response on CPUs.
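The Hyperlink indexing and response times quoted above translate directly into speedup factors. A small Python sketch of that arithmetic, assuming indexing time scales linearly with data size; the 10 GB library is a hypothetical example.

```python
# Figures quoted for Hyperlink: RTX 5090 GPU vs. CPU.
GPU_INDEX_S_PER_GB = 30        # 30 seconds per gigabyte
CPU_INDEX_S_PER_GB = 60 * 60   # one hour per gigabyte
GPU_RESPONSE_S = 3
CPU_RESPONSE_S = 90


def index_time_s(size_gb: float, seconds_per_gb: float) -> float:
    """Indexing time, assuming it scales linearly with data size."""
    return size_gb * seconds_per_gb


index_speedup = CPU_INDEX_S_PER_GB / GPU_INDEX_S_PER_GB   # 120x
response_speedup = CPU_RESPONSE_S / GPU_RESPONSE_S        # 30x

if __name__ == "__main__":
    library_gb = 10  # hypothetical document library
    gpu = index_time_s(library_gb, GPU_INDEX_S_PER_GB)
    cpu = index_time_s(library_gb, CPU_INDEX_S_PER_GB)
    print(f"GPU: {gpu / 60:.0f} min, CPU: {cpu / 3600:.0f} h")
    print(f"Indexing {index_speedup:.0f}x faster, "
          f"responses {response_speedup:.0f}x faster")
```

For a 10 GB library, that works out to about 5 minutes of indexing on the GPU versus about 10 hours on CPUs.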
At CES, Nexa.ai is unveiling a new beta version of Hyperlink that adds support for video content, enabling users to search through their videos for objects, actions and speech. This is ideal for users ranging from video artists looking for B-roll to gamers who want to find that time they won a battle royale match to share with their friends. For those interested in trying the Hyperlink private beta, sign up for access on this webpage. Access will roll out starting this month.

Small Language Models Get 35% Faster

NVIDIA has collaborated with the open-source community to deliver major performance gains for SLMs on RTX GPUs and the NVIDIA DGX Spark desktop supercomputer using llama.cpp and Ollama. The latest changes are especially beneficial for mixture-of-experts models, including the new NVIDIA Nemotron 3 family of open models.

SLM inference performance has improved by 35% and 30% for llama.cpp and Ollama, respectively, over the past four months. These updates are available now, and a quality-of-life upgrade for llama.cpp also speeds up LLM loading times. These speedups will be available in the next update of LM Studio and are coming soon to agentic apps like the new MSI AI Robot app. The MSI AI Robot app, which also takes advantage of the llama.cpp optimizations, lets users control their MSI device settings and will incorporate the latest updates in an upcoming release.

NVIDIA Broadcast 2.1 Brings Virtual Key Light to More PC Users

The NVIDIA Broadcast app improves the quality of a user's PC microphone and webcam with AI effects, ideal for livestreaming and video conferencing. Version 2.1 updates the Virtual Key Light effect to improve performance (making it available on RTX 3060 desktop GPUs and higher), handle more lighting conditions, offer broader color temperature control and use an updated HDRI base map for a two-key-light style often seen in professional streams. Download the NVIDIA Broadcast update today.
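A percentage speedup is easy to misread as the same percentage cut in waiting time. Assuming the llama.cpp and Ollama SLM figures above describe token throughput (the announcement does not say), a 35% gain means each token takes about 26% less time, not 35% less. A minimal Python sketch; the 100 tokens/s baseline is hypothetical.

```python
def apply_speedup(tokens_per_s: float, speedup_pct: float) -> float:
    """New throughput after a percentage speedup."""
    return tokens_per_s * (1 + speedup_pct / 100)


def latency_saving(speedup_pct: float) -> float:
    """Fraction of per-token time saved by a throughput speedup."""
    return 1 - 1 / (1 + speedup_pct / 100)


if __name__ == "__main__":
    baseline = 100.0  # hypothetical tokens per second
    for name, pct in (("llama.cpp", 35), ("Ollama", 30)):
        print(f"{name}: {apply_speedup(baseline, pct):.0f} tok/s, "
              f"{latency_saving(pct):.1%} less time per token")
```

On that assumption, llama.cpp's 35% gain cuts per-token time by about 25.9%, and Ollama's 30% gain by about 23.1%.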
Transform an At-Home Creative Studio Into an AI Powerhouse With DGX Spark

As new and increasingly capable AI models arrive on PC each month, developer interest in more powerful and flexible local AI setups continues to grow. DGX Spark, a compact AI supercomputer that fits on users' desks and pairs seamlessly with a primary desktop or laptop, enables experimenting, prototyping and running advanced AI workloads alongside an existing PC.

Spark is ideal for those interested in testing out LLMs or prototyping agentic workflows, or for artists who want to generate assets in parallel with their workflow so that their main PC is still available for editing.

At CES, NVIDIA is unveiling major AI performance updates to Spark, delivering up to 2.6x faster performance since it launched just under three months ago.

New DGX Spark playbooks are also available, including one for speculative decoding and another to fine-tune models with two DGX Spark modules.

[2]

NVIDIA Boosts RTX AI PCs With 35% Faster LLM & 3x Faster Creative AI Performance, NVFP4 To Reduce VRAM Usage

NVIDIA continues to add more performance to its RTX AI PCs with features such as NVFP4 and further AI/RTX optimizations.

NVIDIA RTX PCs Now Enjoy Even More AI & Creator Performance With Latest NVFP4 Support, & More

NVIDIA has been accelerating its RTX AI PCs with major performance upgrades over the years. Back in 2023, NVIDIA introduced TensorRT-LLM for Windows 11, offering a 5x boost, followed by a 3x uplift for AI workloads the next year. The company also offers a wide range of AI-based solutions for its RTX platforms. All of these updates have made RTX the "Premium" AI PC platform.

This year, NVIDIA is starting with another major update for RTX AI PCs, which it has labeled a "Free RTX AI Performance Upgrade." The update has two parts. The first is faster LLM performance, offering up to 40% higher performance in LLMs such as GPT-OSS, Nemotron Nano V2 and Qwen3 30B. The second enables native NVFP4 support in ComfyUI for FLUX.1, FLUX.2 and Qwen-Image, yielding up to a 4.6x gain in performance. Even more interesting, the native NVFP4/NVFP8 support makes models up to 60% smaller, and ComfyUI can also offload work to system memory, freeing up graphics resources. The new formats also reduce VRAM usage significantly versus BF16 precision.

In addition, NVIDIA is announcing RTX acceleration for LTX-2, Lightricks' new audio-video generation model. It is the #1 open weights video model on the market right now and generates up to 20 seconds of 4K video. With NVFP8 support, users will see a 2.0x performance gain. Furthermore, NVIDIA is bringing Super Resolution to generative AI videos with RTX Video. This update lands in ComfyUI in February and upscales 720p generative AI videos to 4K, offering better quality and detail.
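To illustrate why NVFP4 weights are so much smaller than BF16 ones, here is a simplified Python sketch of block-scaled 4-bit "fake quantization" (quantize, then immediately dequantize). It follows NVIDIA's published description of NVFP4 (4-bit E2M1 values sharing one scale factor per 16-element block) but, as a simplification, keeps each block scale in full precision instead of encoding it as FP8 E4M3, and it omits the per-tensor scale. It shows the rounding behavior only; it is not how ComfyUI or NVIDIA's kernels implement the format.

```python
import math

# Non-negative magnitudes representable by a 4-bit E2M1 element;
# the sign bit is handled separately.
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # elements sharing one scale factor


def quantize_block(block):
    """Round one block to E2M1 values times a shared scale.

    Returns (dequantized values, scale). The scale maps the block's
    largest magnitude onto the largest E2M1 value (6.0).
    Simplification: the scale is kept as a Python float, not FP8.
    """
    amax = max(abs(v) for v in block)
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    out = []
    for v in block:
        # Nearest representable magnitude for the scaled value.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
        out.append(math.copysign(mag * scale, v))
    return out, scale


def fake_quantize(values):
    """Quantize then dequantize a flat list, block by block."""
    if len(values) % BLOCK_SIZE:
        raise ValueError("length must be a multiple of BLOCK_SIZE")
    result = []
    for start in range(0, len(values), BLOCK_SIZE):
        deq, _ = quantize_block(values[start:start + BLOCK_SIZE])
        result.extend(deq)
    return result


if __name__ == "__main__":
    weights = [0.01 * (i - 8) for i in range(16)]
    for w, q in zip(weights, fake_quantize(weights)):
        print(f"{w:+.3f} -> {q:+.4f}")
```

Each block ends up with at most eight distinct magnitudes, which is what lets the format spend only 4 bits per weight plus a small per-block scale.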
The whole process of generating a 10-second 4K video on an RTX AI PC with NVFP8 support and then applying Super Resolution takes 3 minutes, versus 15 minutes with the older method. Lastly, NVIDIA is bringing AI video search to Nexa Hyperlink. With this update, users will get RTX-optimized private search for videos, images and documents. Overall, this is a good list of updates for RTX AI PC owners, who keep getting free performance and better optimizations for the latest in AI.
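The Super Resolution step and the end-to-end timing above can be put in numbers. This Python sketch assumes "720p" means 1280×720 and "4K" means 3840×2160 UHD (the articles do not give exact resolutions) and uses the quoted 3-minute and 15-minute times for a 10-second clip.

```python
# Assumed frame sizes: 720p = 1280x720, 4K UHD = 3840x2160.
RES_720P = (1280, 720)
RES_4K_UHD = (3840, 2160)


def pixel_ratio(src, dst):
    """How many output pixels the upscaler produces per input pixel."""
    return (dst[0] * dst[1]) / (src[0] * src[1])


def speedup(old_minutes: float, new_minutes: float) -> float:
    """End-to-end speedup from two wall-clock times."""
    return old_minutes / new_minutes


if __name__ == "__main__":
    print(f"Upscale: {RES_4K_UHD[0] // RES_720P[0]}x per axis, "
          f"{pixel_ratio(RES_720P, RES_4K_UHD):.0f}x the pixels")
    print(f"10-second 4K clip: {speedup(15, 3):.0f}x faster end to end")
```

Under these assumptions, each 720p frame is upscaled 3x per axis, so the output has 9x as many pixels, and the quoted times amount to a 5x end-to-end speedup.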


NVIDIA announced major AI performance upgrades for RTX AI PCs at CES 2026, delivering 3x faster video generation and 35% faster small language model inference. The NVFP4 format reduces VRAM usage by 60%, while the new LTX-2 model enables local generation of up to 20 seconds of 4K AI video. These optimizations bring cloud-level generative AI capabilities to local PCs with enhanced privacy and control.

NVIDIA RTX Unlocks Major AI Performance Gains for Local PC Workflows

NVIDIA RTX has delivered a substantial free performance upgrade for AI PCs, introducing native NVFP4 and NVFP8 precision support that accelerates generative AI applications while dramatically cutting memory requirements [1]. The announcement at CES 2026 marks a turning point for creators and developers seeking to run advanced AI workflows locally without cloud dependencies.

Source: Wccftech

The new optimizations deliver up to 3x faster performance for video and image generation through PyTorch-CUDA enhancements and native precision format support in ComfyUI [1]. More importantly, these updates reduce VRAM usage by 60% with the RTX 50 Series' NVFP4 format, while NVFP8 achieves 2x faster performance with a 40% reduction in VRAM [2]. This memory efficiency allows mid-range GeForce RTX GPUs to handle larger models and more complex workflows that previously required high-end hardware.

4K AI Video Generation Comes to RTX AI PCs With LTX-2 Integration

The integration of Lightricks' LTX-2 audio-video generation model represents a major milestone for local AI video creation [1]. This state-of-the-art model generates up to 20 seconds of 4K video with built-in audio, multi-keyframe support, and advanced conditioning capabilities that rival cloud-based solutions. With NVFP8 optimizations, LTX-2 achieves a 2.0x performance gain, making professional-quality video generation accessible on consumer hardware [2].

NVIDIA introduced a complete RTX-powered video generation pipeline that gives artists precise control over their creations [1]. The pipeline includes three modular blueprints: a 3D object generator for scene assets, a 3D-guided image generator that uses Blender scenes to create photorealistic keyframes, and a video generator that animates between keyframes before upscaling to 4K. This approach allows creators to storyboard scenes, generate controlled outputs, and produce high-quality results without relying on prompt-based guesswork.

RTX Video Super Resolution Accelerates 4K Upscaling in ComfyUI

The new RTX Video node integration in ComfyUI, arriving next month, enables real-time upscaling of generated videos to 4K resolution [1]. This Super Resolution technology sharpens edges and removes compression artifacts in seconds, transforming 720p generative AI videos into crisp 4K output [2]. The complete workflow, from generating a video with NVFP8 support to upscaling it, now takes just 3 minutes for a 10-second 4K clip, compared to 15 minutes using previous methods.

ComfyUI has gained significant improvements through NVIDIA's collaboration, including 40% faster performance on NVIDIA GPUs and an enhanced memory offload feature [1]. The weight streaming capability allows ComfyUI to tap into system RAM when VRAM runs out, enabling larger models and complex multistage workflows on mid-range RTX GPUs. NVFP4 and NVFP8 checkpoints are now available for top models including LTX-2, FLUX.1, FLUX.2, Qwen-Image, and Z-Image directly within ComfyUI.

Small Language Models Get 35% Faster Inference on RTX Hardware

AI performance gains extend beyond creative applications, with small language models receiving up to 35% faster inference through llama.cpp and 30% through Ollama [1]. Some models see even higher gains, with up to 40% performance improvements for LLMs such as GPT-OSS, Nemotron Nano V2, and others [2]. Native NVFP4 support in ComfyUI for models like FLUX.1, FLUX.2, and Qwen-Image delivers up to 4.6x performance gains while reducing model sizes by up to 60%.

These RTX accelerations build on NVIDIA's continuous optimization efforts, following the 5x boost from TensorRT-LLM for Windows 11 introduced in 2023 and a subsequent 3x uplift in 2024 [2]. Alongside the faster, smaller precision formats, ComfyUI's weight streaming offloads work to system memory when VRAM runs out. Together, these address a critical bottleneck that previously limited the complexity of AI workflows on consumer hardware.

Local AI Gains Traction as PC-Class Models Close Gap With Cloud Services

The year 2025 marked a breakout period for AI development on PC, with small language models improving accuracy by nearly 2x over 2024 [1]. Developer tools including Ollama, ComfyUI, llama.cpp, and Unsloth have matured significantly, and the number of users downloading PC-class models grew tenfold from 2024. This rapid adoption signals growing demand for local AI solutions that offer privacy, security, and low latency without cloud dependencies.

NVIDIA also announced RTX acceleration for Nexa.ai's Hyperlink video search capability, bringing AI-powered private search for videos, images, and documents to RTX AI PCs [1][2]. These updates apply across GeForce RTX, NVIDIA RTX PRO, and NVIDIA DGX Spark devices, ensuring broad accessibility for developers deploying generative AI on PC. The video generation workflow will be available for download next month, with LTX-2 open weights and ComfyUI RTX updates already accessible.

Summarized by

Navi
