NVIDIA's Blackwell GPUs Break AI Performance Barriers, Achieving Over 1,000 TPS/User with Meta's Llama 4 Maverick

2 Sources

[1]

DGX B200 Blackwell node sets world record, breaking over 1,000 TPS/user

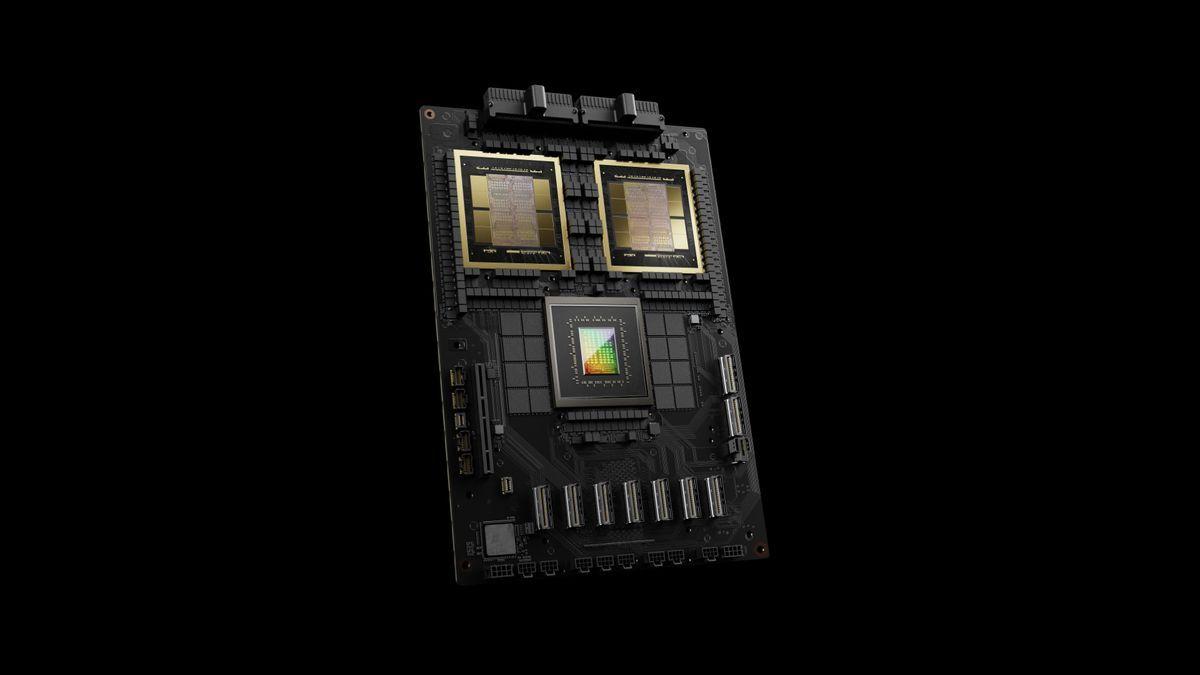

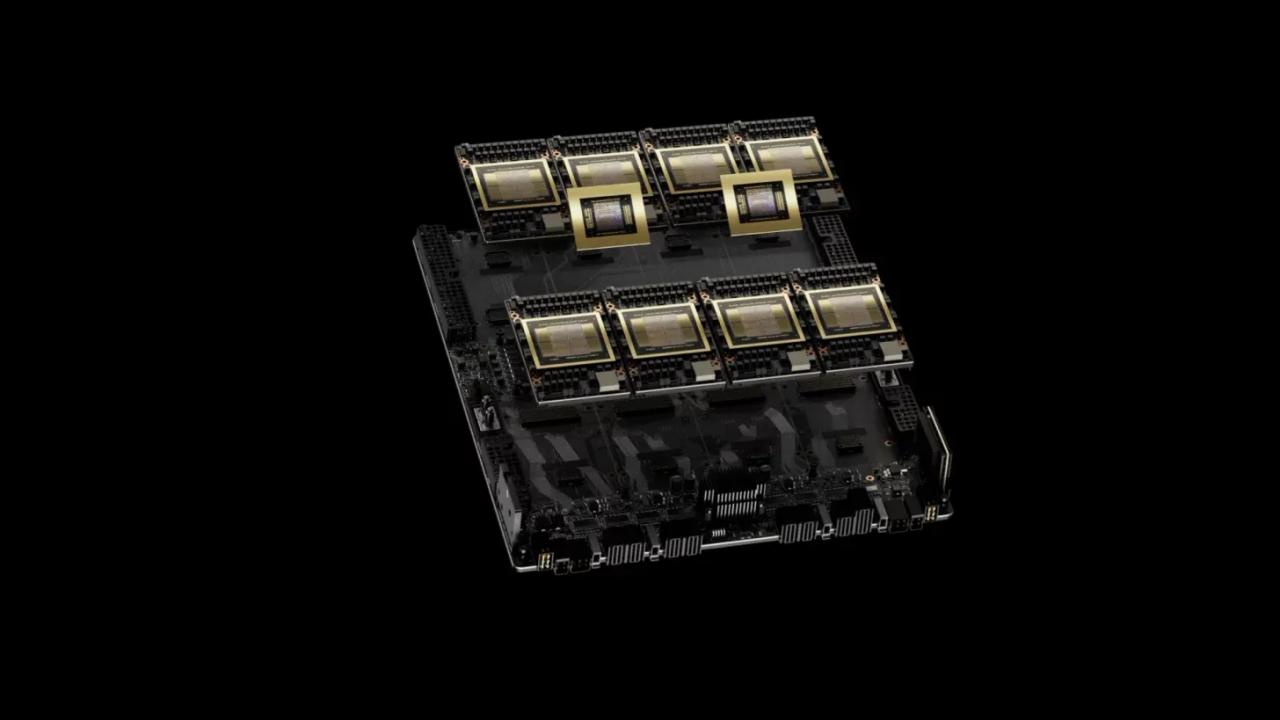

Nvidia has reportedly set another AI world record, surpassing 1,000 tokens per second (TPS) per user with Meta's Llama 4 Maverick large language model, according to a LinkedIn post from Artificial Analysis. The result was achieved on Nvidia's latest DGX B200 node, which features eight Blackwell GPUs. Nvidia beat the previous record holder, AI chipmaker SambaNova, by 31%, achieving 1,038 TPS/user compared to SambaNova's prior record of 792 TPS/user.

According to Artificial Analysis's benchmark report, Nvidia and SambaNova are well ahead of everyone else on this metric. Amazon and Groq achieved scores just shy of 300 TPS/user, while the rest (Fireworks, Lambda Labs, Kluster.ai, CentML, Google Vertex, Together.ai, Deepinfra, Novita, and Azure) all scored below 200 TPS/user.

Blackwell's record-breaking result relied on a range of performance optimizations tailored to the Llama 4 Maverick architecture. Nvidia says it made extensive software optimizations using TensorRT-LLM and trained a speculative decoding draft model using EAGLE-3 techniques, which accelerate LLM inference by predicting tokens ahead of time. These two optimizations alone delivered a 4x performance uplift compared to Blackwell's best prior results. Nvidia also used FP8 data types (rather than BF16) for Attention operations and the Mixture of Experts (MoE) layers, with accuracy Nvidia says is comparable to BF16; MoE is the AI technique that drew widespread attention with the DeepSeek R1 model. In addition, Nvidia detailed a variety of CUDA kernel optimizations its software engineers made to push performance further, including spatial partitioning and GEMM weight shuffling.

TPS/user is an AI performance metric that stands for tokens per second per user. Tokens are the foundation of LLM-powered software such as Copilot and ChatGPT; when you type a question into ChatGPT or Copilot, your text is split into tokens, which are whole words or fragments of words.
The LLM processes these tokens and generates its answer one token at a time. The "user" part of TPS/user refers to single-user-focused benchmarking, rather than batched throughput. This kind of benchmarking is important for AI chatbot developers who want to create a better experience for people: the more tokens per second a GPU cluster can generate for each user, the faster an AI chatbot will respond to you.
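To make the per-user metric concrete, the short Python sketch below converts TPS/user into the time a single user waits for a complete answer. The 500-token response length is a hypothetical value. The Amazon/Groq and "everyone else" entries use roughly 300 and 200 TPS/user as upper bounds, because the report only places them "just shy of" or "below" those numbers.

```python
# Convert a per-user decode rate (TPS/user) into the time one user waits
# for a full answer. RESPONSE_TOKENS is a hypothetical answer length.
RESPONSE_TOKENS = 500

# Reported TPS/user figures; the last two are approximate upper bounds.
rates = {
    "Nvidia DGX B200": 1038,
    "SambaNova": 792,
    "Amazon / Groq (~300)": 300,
    "Others (<200)": 200,
}


def seconds_for_response(tokens: int, tps_per_user: float) -> float:
    """Time to stream `tokens` output tokens at a steady per-user rate."""
    return tokens / tps_per_user


for name, tps in rates.items():
    print(f"{name:22s} {seconds_for_response(RESPONSE_TOKENS, tps):.2f} s")
```

The sketch ignores time-to-first-token, which covers processing the prompt before generation starts, so real wait times are somewhat longer. It captures only the steady-state generation speed that TPS/user measures.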

[2]

NVIDIA Achieves Record Token Speeds With Blackwell GPUs, Breaking The 1,000 TPS Barrier With Meta's Llama 4 Maverick

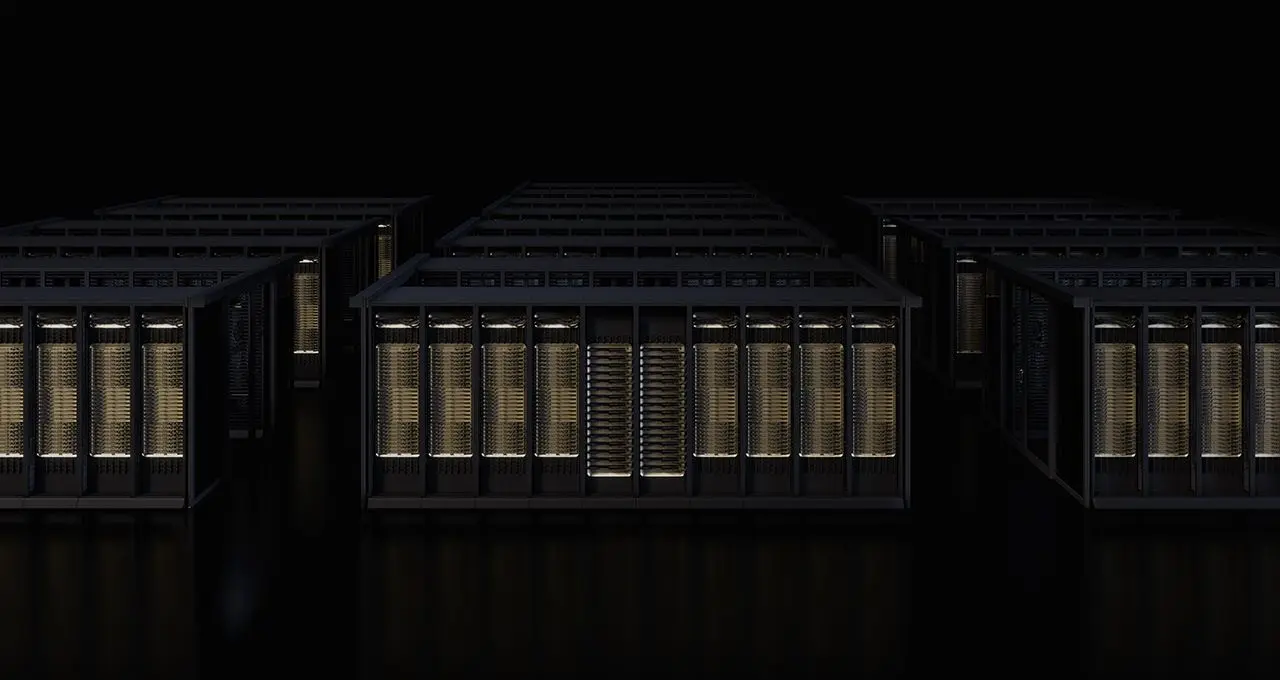

NVIDIA has revealed that it has broken AI performance barriers with its Blackwell architecture, crediting a round of software optimizations as well as raw hardware power.

NVIDIA Manages To Further Optimize Blackwell For Large-Scale LLMs, Fuels Up The Race For "Token Generation" Speeds

Team Green has been making strides in the AI segment for quite some time, but the firm has recently stepped up its efforts with its Blackwell-powered solutions. In a new blog post, NVIDIA revealed that it achieved over 1,000 TPS per user with a single DGX B200 node equipped with eight NVIDIA Blackwell GPUs. This was done on Meta's 400-billion-parameter Llama 4 Maverick model, one of Meta's largest offerings, which indicates how much of an impact NVIDIA's AI ecosystem has made on the segment. NVIDIA also says a Blackwell server can deliver up to 72,000 TPS of total throughput. As Jensen Huang said in his Computex keynote, companies will now flaunt their AI progress by showing how much token output their hardware can achieve, and NVIDIA seems entirely focused on this aspect.

As for how the firm broke the TPS barrier, NVIDIA revealed that it employed extensive software optimizations using TensorRT-LLM along with a speculative decoding draft model, yielding a 4x speed-up. In its post, Team Green dives into several aspects of how it optimized Blackwell for large-scale LLMs. One of the most significant roles was played by speculative decoding, a technique in which a smaller, faster "draft" model predicts several tokens ahead and the main (larger) model verifies them in parallel. Here's how NVIDIA describes it:

"Speculative decoding is a popular technique used to accelerate the inference speed of LLMs without compromising the quality of the generated text. It achieves this goal by having a smaller, faster 'draft' model predict a sequence of speculative tokens, which are then verified in parallel by the larger 'target' LLM. The speed-up comes from generating potentially multiple tokens in one target model iteration at the cost of extra draft model overhead."

The firm used an EAGLE-3-based architecture, which is a software-level architecture for accelerating large language model inference rather than a GPU hardware architecture. NVIDIA says this achievement demonstrates its leadership in the AI segment and shows that Blackwell is now optimized for LLMs as large as Llama 4 Maverick. It is a significant achievement and one of the first steps toward making AI interactions faster and more seamless.
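The draft-then-verify loop described above can be sketched in a few lines of Python. This is a minimal greedy (argmax) variant that uses toy stand-ins for the two models: the hypothetical `target_next` and `draft_next` functions operating on integer tokens. It is not NVIDIA's EAGLE-3 or TensorRT-LLM implementation. In a real system, the target model scores all drafted positions in one batched forward pass, and that batching is where the speed-up comes from.

```python
from typing import Callable, List

Model = Callable[[List[int]], int]  # maps a token prefix to the next token (greedy)


def target_next(prefix: List[int]) -> int:
    # Hypothetical "large" model: a deterministic toy rule.
    return (sum(prefix) * 7 + len(prefix)) % 10


def draft_next(prefix: List[int]) -> int:
    # Hypothetical "small" model: agrees with the target except when the
    # prefix length is a multiple of 4, so some guesses get rejected.
    guess = target_next(prefix)
    return (guess + 1) % 10 if len(prefix) % 4 == 0 else guess


def greedy_decode(model: Model, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one target call per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(model(out))
    return out


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: draft k tokens, keep the longest prefix
    the target agrees with, then add one token chosen by the target."""
    out = list(prompt)
    goal = len(prompt) + n_new
    while len(out) < goal:
        # 1) The draft model proposes k tokens autoregressively (cheap).
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The target checks each position; a real system does this for
        #    all k + 1 positions in a single parallel forward pass.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first mismatch
                break
            accepted.append(tok)
        else:
            accepted.append(target(out + accepted))  # bonus token: all accepted
        out.extend(accepted)
    return out[:goal]
```

Every kept token is one the target would have picked on its own, so the output matches plain greedy decoding exactly. The draft model only changes how many target iterations are needed, which is the "without compromising the quality" property NVIDIA refers to. For sampling-based decoding, a probabilistic accept/reject rule plays the same role.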

NVIDIA sets a new world record in AI performance with its DGX B200 Blackwell node, surpassing 1,000 tokens per second per user using Meta's Llama 4 Maverick model, showcasing significant advancements in AI processing capabilities.

NVIDIA Shatters AI Performance Records with Blackwell GPUs

NVIDIA has once again pushed the boundaries of AI performance, breaking the 1,000 tokens per second (TPS) per user barrier with Meta's Llama 4 Maverick large language model. This groundbreaking achievement was accomplished using NVIDIA's latest DGX B200 node, which features eight Blackwell GPUs.

1

Source: Tom's Hardware

Record-Breaking Performance

The new benchmark set by NVIDIA's Blackwell architecture is a significant leap forward in AI processing capabilities:

- Achieved 1,038 TPS/user, surpassing SambaNova's previous record of 792 TPS/user by 31%

- Outperformed competitors such as Amazon and Groq, which scored just under 300 TPS/user

- Other companies, including Google Vertex and Azure, achieved scores below 200 TPS/user
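The 31% figure follows directly from the two record results, using SambaNova's figure as the baseline. A quick arithmetic check in Python:

```python
# Relative improvement of Nvidia's result over SambaNova's prior record.
nvidia_tps_user = 1038
sambanova_tps_user = 792

improvement = (nvidia_tps_user - sambanova_tps_user) / sambanova_tps_user
print(f"{improvement:.1%}")  # 31.1%
```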

1

Optimizations Driving Performance Gains

NVIDIA's record-breaking result was achieved through a combination of hardware power and software optimizations:

- Extensive software optimizations using TensorRT-LLM

- Training of a speculative decoding draft model using EAGLE-3 techniques

- Use of FP8 data types (rather than BF16) for Attention and Mixture of Experts operations

- CUDA kernel optimizations, including spatial partitioning and GEMM weight shuffling

1

2

The TensorRT-LLM optimizations and speculative decoding alone delivered a 4x performance uplift compared to Blackwell's previous best results.

Source: Wccftech
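The Python sketch below illustrates what the FP8 item in the list above involves. It simulates rounding a tensor to the FP8 E4M3 format, which has 4 exponent bits, 3 mantissa bits, and a largest finite value of 448, using a single per-tensor scale factor. The per-tensor scaling scheme and the sample weights are assumptions for illustration, because the sources do not say which FP8 variant or scaling recipe NVIDIA used. Each FP8 value occupies one byte versus two for BF16, which halves memory traffic for the same number of weights.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0              # largest finite E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6   # smallest normal E4M3 magnitude
E4M3_SUBNORMAL_STEP = 2.0 ** -9


def round_to_e4m3(y: float) -> float:
    """Round a float to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if y == 0.0:
        return 0.0
    mag = abs(y)
    if mag < E4M3_MIN_NORMAL:
        step = E4M3_SUBNORMAL_STEP           # subnormal range: fixed spacing
    else:
        _, exp = math.frexp(mag)             # mag = m * 2**exp, 0.5 <= m < 1
        step = math.ldexp(1.0, exp - 1 - 3)  # 3 mantissa bits -> 8 steps per binade
    q = round(mag / step) * step             # Python's round() ties to even
    return math.copysign(min(q, E4M3_MAX), y)


def quantize_fp8(values: List[float]) -> Tuple[List[float], float]:
    """Per-tensor scaling (assumed): map the largest |value| onto E4M3_MAX, then round."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in values], scale


def dequantize_fp8(codes: List[float], scale: float) -> List[float]:
    """Undo the scaling to get approximate original values back."""
    return [c * scale for c in codes]


weights = [0.0123, -0.5, 0.731, 1.9, -0.0004]   # hypothetical weights
codes, scale = quantize_fp8(weights)
restored = dequantize_fp8(codes, scale)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}")
```

Production FP8 inference stacks apply scaling of this kind, often per tensor or at a finer granularity, and run the matrix multiplications on FP8 Tensor Cores. This sketch only simulates the rounding with ordinary Python floats.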

Significance of TPS/User Metric

The tokens per second per user (TPS/user) metric is crucial for AI chatbot developers:

- Measures the speed at which a GPU cluster can process tokens for individual users

- Directly impacts the responsiveness of AI chatbots like ChatGPT and Copilot

- Focuses on single-user performance rather than batched processing

1

Speculative Decoding: A Key Innovation

NVIDIA's implementation of speculative decoding played a significant role in achieving this performance milestone:

- Utilizes a smaller, faster "draft" model to predict several tokens ahead

- The main (larger) model verifies these predictions in parallel

- Accelerates inference speed without compromising text quality

- Based on the EAGLE-3 software architecture for LLM inference acceleration

2
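How much speculative decoding helps depends on how often the target model accepts the draft model's guesses. A standard analysis of speculative decoding (Leviathan et al., 2023) assumes that each drafted token is accepted independently with probability α. Under that simplification, the expected number of tokens produced per target-model iteration with k drafted tokens is (1 - α^(k+1)) / (1 - α). The Python sketch below evaluates that formula. It also computes a rough wall-clock speed-up that charges for the draft model, assuming each draft token costs a fraction c of one target iteration. The α, k, and c values are hypothetical, not figures NVIDIA published.

```python
def expected_tokens_per_step(alpha: float, k: int) -> float:
    """Expected tokens emitted per target iteration when k tokens are drafted
    and each is accepted independently with probability alpha (0 <= alpha < 1)."""
    return (1 - alpha ** (k + 1)) / (1 - alpha)


def estimated_speedup(alpha: float, k: int, c: float) -> float:
    """Wall-clock speed-up over plain decoding, where one draft-model token
    costs c times one target-model iteration."""
    return expected_tokens_per_step(alpha, k) / (k * c + 1)


# Hypothetical operating points: acceptance rate alpha, draft length k,
# and relative draft cost c.
for alpha, k, c in [(0.6, 3, 0.05), (0.8, 3, 0.05), (0.8, 5, 0.05)]:
    print(f"alpha={alpha}, k={k}: {expected_tokens_per_step(alpha, k):.2f} "
          f"tokens/step, ~{estimated_speedup(alpha, k, c):.2f}x")
```

The formula shows why a well-matched draft model matters. At k = 3, raising α from 0.6 to 0.8 lifts the expected output from about 2.18 to about 2.95 tokens per target step. NVIDIA's reported 4x uplift combines speculative decoding with other TensorRT-LLM optimizations, so it is not directly comparable to these toy numbers.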

Implications for AI Industry

NVIDIA's achievement has far-reaching implications for the AI industry:

- Demonstrates NVIDIA's leadership in AI hardware and software optimization

- Sets a new standard for AI performance, particularly for large language models

- Paves the way for more responsive and efficient AI-powered applications

- Highlights the growing importance of token generation speeds as a benchmark for AI progress

2

As AI continues to evolve, NVIDIA's Blackwell architecture and its optimizations for large-scale LLMs position the company at the forefront of AI technology, promising faster and more seamless AI interactions in the future.

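References

[1] Tom's Hardware: "DGX B200 Blackwell node sets world record, breaking over 1,000 TPS/user"

[2] Wccftech: "NVIDIA Achieves Record Token Speeds With Blackwell GPUs, Breaking The 1,000 TPS Barrier With Meta's Llama 4 Maverick"

Summarized by Navi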
