NVIDIA's AI server evolution: GB300 production ramps up as Vera Rubin looms on the horizon

4 Sources

[1]

Large-scale shipments of Nvidia GB300 servers tipped to start in September -- GB200 demand remains 'robust' despite widespread coolant leak reports

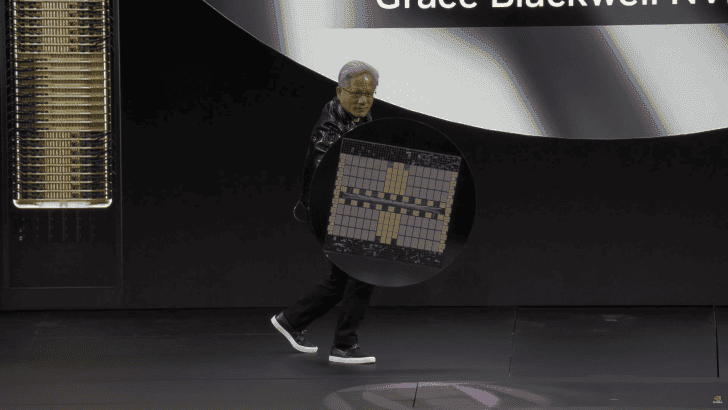

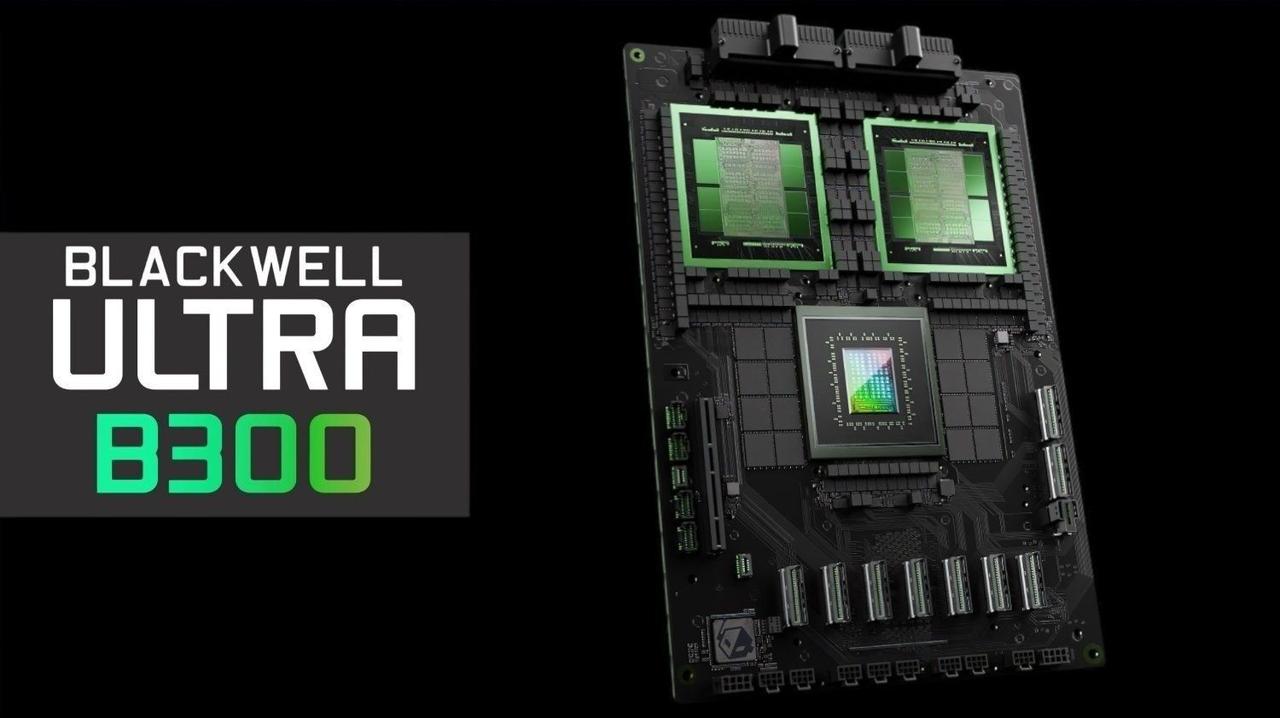

While Dell and probably some other Nvidia partners have initiated early production of their GB300-based servers, large-scale shipments of such machines are expected to begin only in September 2025, reports DigiTimes. The rollout is expected to proceed more smoothly than prior generations due to strategic design reuse and improved coordination across the supply chain. Yet liquid cooling still represents a challenge for original design manufacturers (ODMs).

One of the major factors enabling the faster transition is Nvidia's decision to retain the motherboard design used in the current GB200 platform, according to DigiTimes. But that's not all, as Nvidia gives its partners a lot more freedom than before. For the GB300, Nvidia is shifting to a more modular approach, according to SemiAnalysis. Instead of delivering a fully assembled motherboard, Nvidia is said to provide the B300 GPU on an SXM Puck module, the Grace CPU in a separate BGA package, and the hardware management controller (HMC) from Axiado. Customers now source the remaining motherboard components themselves, and the CPU memory uses standard SOCAMM memory modules that are obtainable from various vendors. Nvidia continues to provide the switch tray and copper backplane as before. The reuse eliminates the need for a complete redesign, streamlining production processes and reducing risk.

With GB200, Nvidia provides the complete Bianca motherboard, which includes the B200 GPU, Grace CPU, 512GB of LPDDR5X memory, and power delivery components -- all integrated on a single printed circuit board (PCB). Nvidia also supplies the switch tray and copper backplane for this system.

With the GB300 now in validation and early production phases, ODMs report no significant hurdles, DigiTimes claims. Feedback from partners indicates that component qualification is progressing as planned, and Nvidia is on track to increase output steadily throughout the third quarter. By the fourth quarter of 2025, shipment volumes are expected to ramp up significantly, according to DigiTimes. Wistron, a key supplier of compute boards, has indicated that revenue this quarter will remain flat due to the generational overlap between GB200 and GB300, the report says.

The good news is that the transition appears to be proceeding smoothly compared to the transition to the current platform, which faced multiple delays due to Nvidia's silicon problems, dense server layouts, and cooling requirements. By now, server ODMs seem to have learnt how to manage all of the challenges applicable to them.

Although the GB200 is shipping in high volumes to data centers, it has faced persistent problems with its liquid cooling systems, according to DigiTimes. The primary failures occur with quick-connect fittings, which have shown a tendency to leak despite undergoing factory stress tests. Data center operators have responded by adopting measures like localized shutdowns and extensive leak testing, which means that they essentially prioritize deployment speed and performance over hardware reliability.

Beyond GB300, Nvidia is prepping its next-generation platform for AI servers, codenamed Vera Rubin. This platform will roll out in two phases. The first phase will replace Grace CPUs with Vera CPUs and Blackwell GPUs with Rubin GPUs, but will retain the current Oberon rack, which will carry the NVL144 name (despite using 72 GPU packages, each with two compute chiplets). The second phase will involve an all-new Kyber rack with Vera CPUs and Rubin Ultra GPUs with four compute chiplets. As Rubin GPUs are expected to be more power hungry than Blackwell GPUs, the next-generation platform will further increase reliance on liquid cooling. While necessary for performance, this cooling method remains challenging to implement reliably, as the DigiTimes report shows.

In the GB200 systems, variability in plumbing setups and water pressure across deployments has made it difficult to fully eliminate leaks, leading to significant post-deployment servicing requirements and labor costs.

[2]

NVIDIA's next-gen GB300 AI servers now in production, will begin shipping in September

TL;DR: NVIDIA's next-gen GB300 "Blackwell Ultra" AI servers have entered production, leveraging the existing GB200 motherboard design to streamline manufacturing. Shipments are set to begin in the second half of 2025, promising smooth supply chain operations and steady volume production through Q4 2025.

NVIDIA's next-gen GB300 AI servers have entered production, with the new GB300 "Blackwell Ultra" AI servers set to begin shipping in September, on time and ready to rock and roll. In a new report from DigiTimes picked up by insider @Jukanrosleve on X, we're hearing that NVIDIA's new GB300 "Blackwell Ultra" AI servers have entered production according to supply chain sources. Industry sources add that they expect a smooth production trajectory into the second half of 2025, which is said to stem from a strategic shift that is making things easier for AI server manufacturers.

NVIDIA decided to reuse the motherboard design from its current GB200 platform -- known as the Bianca board -- for its new GB300 platform. This move has significantly shortened the learning curve for suppliers, many of which were struggling to keep up with NVIDIA's incredibly fast product update cycle in the past. One ODM representative noted: "there are no major issues with the GB300 at this stage. Shipments should proceed smoothly in the second half". One industry executive said: "NVIDIA's upgrade pace in AI servers is like a military blitz. Competitors can't even see their taillights, but the supply chain is feeling the strain".

At breakneck speed, NVIDIA has shifted from Hopper to the current Blackwell architecture, with the Blackwell Ultra architecture coming soon, and in 2026 we'll see the introduction of the next-generation Rubin architecture, which will also adopt next-gen HBM4 memory. Each generation has its own tweaks and changes, which has left AI server manufacturers struggling to keep up.

GB200 dramatically compressed the server layout and saw multiple delays getting into mass production, but with GB300 reusing the existing infrastructure, suppliers won't need to experience the same headaches. ODMs are now actively testing NVIDIA's new GB300 "Blackwell Ultra" and early production results are "promising". The transition is expected to be seamless, with steady shipments projected through Q3 2025, and volume production in Q4 2025. Compute board supplier Wistron has confirmed that revenue this quarter won't grow sequentially due to the generational overlap, which DigiTimes reports is an indirect confirmation that GB300 production is underway.

[3]

NVIDIA's High-End "Blackwell Ultra" GB300 AI Servers to Begin Shipping by September as Design Changes Ease Pressure on the Supply Chain

NVIDIA's GB300 AI servers are finally expected to see volume shipments commence by September, as the supply chain has adjusted to Team Green's design changes.

The "Blackwell Ultra" lineup of AI servers by NVIDIA did see a hiccup when it was released in H1 2025, as Team Green introduced several design changes that the supply chain found difficult to adjust to. This resulted in low-volume shipments, and only NVIDIA's exclusive partners, such as Dell and Microsoft, managed to get the more high-end NVL72 AI clusters. Now, based on a report by DigiTimes, it is revealed that NVIDIA plans to initiate volume production of its GB300 AI clusters by September, allowing a larger market segment to access high-end clusters from the company.

For those unaware of what caused the production issues, it was mainly because, with the GB300, NVIDIA employed a Cordelia board design, which integrated modular design features and the newer SOCAMM memory design, which hadn't been adopted before. However, due to NVIDIA's short product update cycle, followed by the issues with SOCAMM memory, the firm decided to switch to the Bianca architecture, which was also adopted with the GB200. While the markets saw this as a sign of vulnerability, it has turned out to be a significant step.

It is claimed that the supply chain no longer feels pressure to ramp up GB300 supply to customers, since the required adjustments are relatively minor compared to the GB200, which shares the same fundamentals. NVIDIA's suppliers are currently testing out low-volume GB300 shipments with the Bianca board, and it is expected that volume will catch up in the upcoming quarters, which means that Q4 will serve as the timeline for "Blackwell Ultra" to enter and potentially disrupt the AI industry. GB300 AI servers have already started to witness massive orders, particularly from NVIDIA's "Sovereign AI" initiative, so it is safe to say that the demand is there.

Moreover, when you look at how quickly NVIDIA is upgrading its architectures, no one can compete with them right now. With Rubin being introduced to the market by either year-end or the start of 2026, Team Green is currently operating on a six-to-eight-month product cycle, which is one of the fastest ever.

[4]

NVIDIA Gears Up for Next-Gen Vera Rubin AI Servers While Rolling Out Blackwell Ultra GB300 Units; A Relentless Standard Only Jensen Dares to Set

NVIDIA is now preparing for the next generation of AI servers, at a time when the company has just started low-volume production of Blackwell Ultra, which suggests that there won't be another company quite like NVIDIA. Team Green has been accelerating through the AI hype at a rate that no other competitor has managed to replicate. NVIDIA is operating on a six-to-eight-month product cycle for now, and these aren't ordinary GPUs; they are massive AI clusters that are worth billions of dollars, and the firm is producing them at a relentless pace, which is simply astonishing.

In a report by the Taiwan Economic Daily, it is revealed that NVIDIA is preparing the design for Vera Rubin server racks, which will be introduced to mainstream suppliers by the end of this month, marking the first step towards production. NVIDIA's Rubin architecture is seen as the next leap in computing capabilities, since Team Green is expected to make changes from the ground up, starting with HBM, process node, design, and much more. It can be called a landmark similar to the Hopper generation, given how significant a leap that was over the Ampere AI accelerators. It is claimed that Vera Rubin AI server racks will be available in the market from 2026 to 2027, and they will keep the AI bandwagon up and running, to say the least.

For a quick rundown on what to expect with NVIDIA's Rubin, the firm will utilize the next-generation HBM4 chips to power its R100 GPUs, which are said to be a significant upgrade from the modern-day HBM3E standard. Team Green will also adopt TSMC's 3nm (N3P) process and CoWoS-L packaging, which means that Rubin will adopt newer industry standards that will likely take performance to greater levels. More importantly, Rubin will adopt a chiplet design, a first-of-a-kind NVIDIA implementation, and a 4x reticle design (versus 3.3x of Blackwell).

While the Rubin release certainly shows optimism, there's always a question about how NVIDIA can release independent architectures at such short intervals, given that the supply chain gets so little time to adopt newer frameworks. We saw a similar situation with the GB300 AI platform as well, and Team Green ultimately had to resort to using the older Bianca board, which came with the GB200 platform. So, it will be interesting to see how the situation pans out for NVIDIA, but one thing is certain: no company can match the "Jensen speed".

NVIDIA's GB300 "Blackwell Ultra" AI servers enter production with shipments starting in September, while the company already prepares for the next-generation Vera Rubin platform, showcasing NVIDIA's relentless pace in AI innovation.

NVIDIA's GB300 "Blackwell Ultra" Enters Production

NVIDIA's next-generation GB300 "Blackwell Ultra" AI servers have entered production, with large-scale shipments expected to begin in September 2025 [1][2]. This rollout is anticipated to proceed more smoothly than previous generations, thanks to strategic design reuse and improved coordination across the supply chain [1].

Design Reuse and Modular Approach

A key factor enabling the faster transition is NVIDIA's decision to retain the motherboard design used in the current GB200 platform, known as the Bianca board [1][2]. This move has significantly shortened the learning curve for suppliers, many of which were struggling to keep up with NVIDIA's rapid product update cycle [2].

For the GB300, NVIDIA is shifting to a more modular approach [1]:

- The B300 GPU is provided on an SXM Puck module

- The Grace CPU comes in a separate BGA package

- The hardware management controller (HMC) is supplied by Axiado

- Customers source remaining motherboard components themselves

- CPU memory uses standard SOCAMM memory modules from various vendors

This reuse and modular design eliminate the need for a complete redesign, streamlining production processes and reducing risk [1].

Production and Shipment Timeline

ODMs report no significant hurdles in the validation and early production phases of GB300 [1]. Feedback from partners indicates that component qualification is progressing as planned, and NVIDIA is on track to increase output steadily throughout the third quarter of 2025 [1][2]. By the fourth quarter of 2025, shipment volumes are expected to ramp up significantly [1][3].

Challenges with GB200 Liquid Cooling

Despite high-volume shipments of GB200 to data centers, persistent problems with its liquid cooling systems have been reported [1]. The primary failures occur with quick-connect fittings, which have shown a tendency to leak despite undergoing factory stress tests [1]. Data center operators have responded by adopting measures like localized shutdowns and extensive leak testing, prioritizing deployment speed and performance over hardware reliability [1].

Looking Ahead: Vera Rubin Platform

Even as GB300 production ramps up, NVIDIA is already preparing for its next-generation AI servers, codenamed Vera Rubin [1][4]. This platform will roll out in two phases [1]:

- Replace Grace CPUs with Vera CPUs and Blackwell GPUs with Rubin GPUs, retaining the current Oberon rack (NVL144)

- Introduce an all-new Kyber rack with Vera CPUs and Rubin Ultra GPUs featuring four compute chiplets

The Vera Rubin platform is expected to be available in the market from 2026 to 2027 [4]. It will utilize next-generation HBM4 chips, adopt TSMC's 3nm (N3P) process, and implement CoWoS-L packaging [4]. Notably, Rubin will be NVIDIA's first implementation of a chiplet design, featuring a 4x reticle design compared to Blackwell's 3.3x [4].

NVIDIA's Relentless Innovation Pace

NVIDIA's rapid product cycle, releasing new AI server architectures every six to eight months, has set a blistering pace that competitors struggle to match [2][4]. This relentless standard, driven by CEO Jensen Huang, has positioned NVIDIA at the forefront of AI innovation [4]. However, it also raises questions about how the supply chain can adapt to such frequent architectural changes [4].

As NVIDIA continues to push the boundaries of AI server technology, the industry watches closely to see how this rapid innovation cycle will shape the future of artificial intelligence and high-performance computing.

Summarized by Navi
