Nvidia's Neural Texture Compression: A Game-Changer for VRAM Usage and Game Sizes

2 Sources


[1]

Nvidia's new texture compression tech slashes VRAM usage by up to 95%

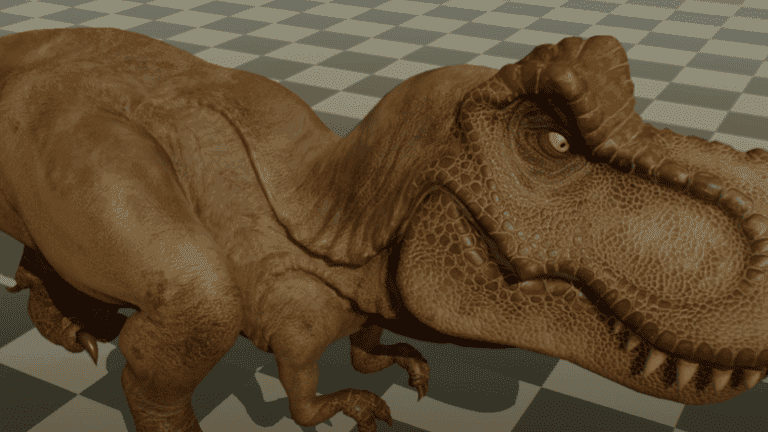

Forward-looking: Nvidia has been working on a new method to compress textures and save GPU memory for a few years now. Although the technology remains in beta, a newly released demo shows how AI-based solutions could help address the increasingly controversial VRAM limitations of modern GPUs. Nvidia's Neural Texture Compression can provide gigantic savings in the amount of VRAM required to render complex 3D graphics, even though no game uses it (yet).

The beta was tested by YouTube channel Compusemble, which ran the official demo on a modern gaming system to provide an early benchmark of its potential impact and of what developers could achieve with it in the not-so-distant future. As Compusemble explains in its video, RTX Neural Texture Compression uses a specialized neural network to compress and decompress material textures dynamically. Nvidia's demo includes three rendering modes: Reference Material, NTC Transcoded to BCn, and Inference on Sample. Compusemble tested the demo at 1440p and 4K, alternating between DLSS and TAA. The results suggest that while NTC can dramatically reduce VRAM and disk space usage, it may also impact frame rates.

At 1440p with DLSS, the NTC Transcoded to BCn mode reduced texture memory usage by 64% (from 272MB to 98MB), while NTC Inference on Sample cut it to 11.37MB, a 95.8% reduction compared to non-neural compression.

The demo ran on a GeForce RTX 4090, where DLSS and higher resolutions placed additional load on the Tensor Cores, affecting performance to varying degrees depending on the setting and resolution. However, newer GPUs may deliver higher frame rates and, with proper optimization, make the difference negligible. After all, Nvidia is heavily invested in AI-powered rendering techniques like NTC and other RTX applications. The demo also shows the importance of cooperative vectors in modern rendering pipelines.
As Microsoft recently explained, cooperative vectors accelerate AI workloads for real-time rendering by optimizing vector operations. These computations play a crucial role in AI model training and fine-tuning and can also be leveraged to enhance game rendering efficiency.
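The reason this kind of operation can be accelerated is easy to illustrate without any GPU API. In a neural shader, every pixel (shader thread) typically runs the same small network, so each thread multiplies its own input vector by the same weight matrix. The minimal sketch below, in plain Python with hypothetical function names (`per_thread`, `batched`) and a hypothetical layer size, shows that those per-thread matrix-vector products are equivalent to one matrix-matrix product over all the threads' vectors stacked as columns, which is the shape of work that matrix hardware such as Tensor Cores handles efficiently. It is a conceptual illustration under that assumption, not the DirectX or Vulkan cooperative vector API.

```python
import random

def matvec(weights, vec):
    """One thread's work: multiply the shared weight matrix by its own vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]

def per_thread(weights, thread_inputs):
    """Naive view: every thread performs an independent matrix-vector product."""
    return [matvec(weights, vec) for vec in thread_inputs]

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Plain matrix-matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def batched(weights, thread_inputs):
    """Cooperative view: stack the thread vectors as columns of one matrix,
    do a single matrix-matrix product, then give each thread its column back."""
    stacked = transpose(thread_inputs)          # shape: inputs x threads
    return transpose(matmul(weights, stacked))  # shape: threads x outputs

# Hypothetical layer: 8 inputs -> 4 outputs, evaluated by a group of 32 threads.
rng = random.Random(0)
W = [[rng.randint(-3, 3) for _ in range(8)] for _ in range(4)]
threads = [[rng.randint(-3, 3) for _ in range(8)] for _ in range(32)]
print(per_thread(W, threads) == batched(W, threads))  # True
```

Both paths compute exactly the same numbers; the batched form simply exposes all 32 products as one larger multiplication that specialized hardware can schedule at once.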

[2]

I've been testing Nvidia's new Neural Texture Compression toolkit and the impressive results could be good news for game install sizes

At CES 2025, Nvidia announced so many new things that it was somewhat hard to figure out just what was really worth paying attention to. While the likes of the RTX 5090 and its enormous price tag were grabbing all the headlines, one new piece of tech sat to one side with lots of promise but no game to showcase it. However, Nvidia has now released a beta software toolkit for its RTX Neural Texture Compression (RTXNTC) system, and after playing around with it for an hour or two, I'm far more impressed with this than with any hulking GPU.

At the moment, all textures in games are compressed into a common format to save on storage space and download requirements, and then decompressed when used in rendering. It can't have escaped your notice, though, that today's massive 3D games are... well... massive, and 100 GB or more isn't unusual.

RTXNTC works like this: the original textures are pre-converted into an array of weights for a small neural network. When the game's engine issues instructions to the GPU to apply these textures to an object, the graphics processor samples them. Then the neural network reconstructs (decodes) what the texture looks like at the sample point. The system can only produce a single unfiltered texel, so for the sample demonstration, RTX Texture Filtering (also called stochastic texture filtering) is used to interpolate other texels. Nvidia describes the whole thing using the term 'Inference on Sample,' and the results are impressive, to say the least.

Without any form of compression, the texture memory footprint in the demo is 272 MB. With RTXNTC in full swing, that drops to a mere 11.37 MB. The whole process of sampling and decoding is pretty fast, though not quite as fast as normal texture sampling and filtering. At 1080p, the non-NTC setup runs at 2,466 fps, but this drops to 2,088 fps with Inference on Sample. Stepping the resolution up to 4K, the figures are 930 and 760 fps, respectively.
In other words, RTXNTC incurs a frame rate penalty of 15% at 1080p and 18% at 4K in exchange for a 96% reduction in texture memory. Those frame rates were achieved using an RTX 4080 Super, and lower-tier or older RTX graphics cards are likely to see a larger performance drop. For that kind of hardware, Nvidia suggests using 'Inference on Load' (NTC Transcoded to BCn in the demo), where the pre-compressed NTC textures are decompressed as the game (or demo, in this case) is loaded. They are then transcoded into a standard BCn block compression format, to be sampled and filtered as normal. The texture memory reduction isn't as impressive, but the performance hit isn't anywhere near as big as with Inference on Sample. At 1080p, it runs at 2,444 fps, almost as fast as standard texture sampling and filtering, and the texture footprint is just 98 MB. That's a 64% reduction over the uncompressed format.

All of this would be for nothing if the texture reconstruction was rubbish, but as you can see in the gallery below, RTXNTC generates texels that look almost identical to the originals. Even Inference on Load looks the same.

Of course, this is a demonstration, and a simple beta one at that, and it's not even remotely like Alan Wake 2 in terms of texture resolution and environment complexity. RTXNTC isn't suitable for every texture, either, being designed to be applied to 'physically-based rendering (PBR) materials' rather than a single, basic texture. It also requires cooperative vector support to run as quickly as this, and that's essentially limited to RTX 40 or 50 series graphics cards. A cynical PC enthusiast might be tempted to claim that Nvidia only developed this system to justify equipping its desktop GPUs with less VRAM than the competition, too. But the tech itself clearly has lots of potential, and it's possible that AMD and Intel are working on their own systems that achieve the same result.
While three proprietary algorithms for reducing texture memory footprints aren't what anyone wants to see, if developers show enough interest in using them, then one of them (or an amalgamation of all three) might end up being a standard aspect of DirectX and Vulkan. That would be the best outcome for everyone, so it's worth keeping an eye on AI-based texture compression, because just like Nvidia's other first-to-market technologies (e.g. ray tracing acceleration, AI upscaling), the industry eventually adopts them as the norm. I don't think this means we'll see a 20 GB version of Baldur's Gate 3 any time soon, but the future certainly looks a lot smaller.
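Source [2] describes Inference on Load as decompressing the NTC textures while the game loads and then transcoding them into a standard BCn block compression format. The sketch below illustrates only that second step, using BC1 (the simplest BCn format) and a deliberately naive encoder for a single 4x4 block: it takes the darkest and brightest texels as endpoints instead of searching for good ones. `decode_texel` is a hypothetical stand-in for the neural decoder, and the sources do not say which BCn variants the NTC toolkit actually produces.

```python
import struct

def to_565(rgb):
    """Quantize an 8-bit (r, g, b) colour to a packed 16-bit RGB565 value."""
    r, g, b = rgb
    r5 = (r * 31 + 127) // 255  # 8-bit -> 5-bit with rounding
    g6 = (g * 63 + 127) // 255  # 8-bit -> 6-bit with rounding
    b5 = (b * 31 + 127) // 255
    return (r5 << 11) | (g6 << 5) | b5

def from_565(c):
    """Expand RGB565 back to 8-bit channels by bit replication."""
    r, g, b = (c >> 11) & 31, (c >> 5) & 63, c & 31
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))

def palette(c0, c1):
    """BC1 palette: two endpoints plus two derived colours."""
    p0, p1 = from_565(c0), from_565(c1)
    if c0 > c1:  # four-colour mode: two interpolated colours
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:  # three-colour mode: midpoint plus (transparent) black
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    return [p0, p1, p2, p3]

def luminance(rgb):
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

def encode_bc1_block(texels):
    """Encode 16 RGB texels (row-major 4x4 block) into 8 bytes of BC1 data.
    Simplification: endpoints are the darkest and brightest texels."""
    assert len(texels) == 16
    c0 = to_565(max(texels, key=luminance))
    c1 = to_565(min(texels, key=luminance))
    if c0 < c1:
        c0, c1 = c1, c0  # four-colour mode requires c0 > c1
    pal = palette(c0, c1)
    indices = 0
    if c0 != c1:  # flat blocks keep index 0 (endpoint c0) for every texel
        for i, t in enumerate(texels):
            best = min(range(4), key=lambda k: sum((a - b) ** 2 for a, b in zip(t, pal[k])))
            indices |= best << (2 * i)  # texel i occupies bits 2i..2i+1
    return struct.pack("<HHI", c0, c1, indices)

def decode_bc1_block(data):
    """Decode 8 bytes of BC1 back into 16 RGB texels."""
    c0, c1, indices = struct.unpack("<HHI", data)
    pal = palette(c0, c1)
    return [pal[(indices >> (2 * i)) & 3] for i in range(16)]

def decode_texel(x, y):
    """Hypothetical stand-in for the neural decoder run at load time."""
    return (x * 60, y * 60, 128)

block = [decode_texel(x, y) for y in range(4) for x in range(4)]
encoded = encode_bc1_block(block)
print(len(block) * 3, "bytes of RGB8 ->", len(encoded), "bytes of BC1")  # 48 -> 8
```

A production encoder searches for much better endpoints, but the output layout (two RGB565 endpoints plus sixteen 2-bit indices) is the same, which is why a transcoded texture can be sampled and filtered by the GPU as normal.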


Nvidia's new Neural Texture Compression technology promises to dramatically reduce VRAM usage and game install sizes, potentially revolutionizing game development and graphics rendering.

Nvidia Introduces Revolutionary Neural Texture Compression

Nvidia has unveiled a groundbreaking technology called Neural Texture Compression (NTC), which promises to significantly reduce VRAM usage and game install sizes. This AI-powered solution could address the growing concerns over VRAM limitations in modern GPUs and the ballooning sizes of video game installations [1][2].

How Neural Texture Compression Works

NTC utilizes a specialized neural network to compress and decompress material textures dynamically. The process involves pre-converting original textures into an array of weights for a small neural network. When the game engine instructs the GPU to apply these textures to an object, the graphics processor samples them, and the neural network reconstructs the texture at the sample point [2].

Impressive Performance in Beta Testing

Early tests of the NTC beta software toolkit have shown promising results:

- VRAM Usage Reduction: At 1440p with DLSS, the NTC Transcoded to BCn mode reduced texture memory usage by 64% (from 272MB to 98MB) [1].

- Extreme Compression: The NTC Inference on Sample mode achieved a 95.8% reduction, bringing texture memory usage down to just 11.37MB [1].

- Visual Quality: Despite the heavy compression, the reconstructed textures appear almost identical to the originals [2].
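The percentages above follow directly from the reported footprints, taking the 272MB Reference Material figure as the baseline. A quick check in Python, using only numbers from the sources (the function name is illustrative):

```python
REFERENCE_MB = 272.0  # texture memory in the demo's Reference Material mode

def reduction_pct(before_mb, after_mb):
    """Percentage of memory saved when going from before_mb to after_mb."""
    return 100.0 * (1.0 - after_mb / before_mb)

for mode, size_mb in [("NTC Transcoded to BCn", 98.0), ("Inference on Sample", 11.37)]:
    print(f"{mode}: {size_mb} MB, {reduction_pct(REFERENCE_MB, size_mb):.1f}% smaller, "
          f"{REFERENCE_MB / size_mb:.1f}x less texture memory")
```

The Inference on Sample mode stores roughly 24 times less texture data than the reference, which is where the 95.8% figure comes from.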

Performance Impact and Optimization

While NTC offers substantial memory savings, it does come with a measurable performance cost:

- Frame Rate Impact: On an RTX 4080 Super, tests showed a 15% frame rate reduction at 1080p and 18% at 4K when using full NTC (Inference on Sample) [2].

- Hardware Considerations: Running the technology this quickly requires cooperative vector support, which is essentially limited to RTX 40 and 50 series graphics cards [2].

- Optimization Potential: Newer GPUs and further optimization may make the performance difference negligible in the future [1].
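These penalties can be reproduced from the raw frame rates reported in source [2]. Converting them to frame times is a useful sanity check, because at thousands of frames per second a double-digit percentage corresponds to a small absolute cost per frame; how that cost would scale in a full game is not something these demo numbers establish. The helper names below are illustrative.

```python
def fps_penalty_pct(base_fps, ntc_fps):
    """Percentage drop in frame rate relative to the non-NTC baseline."""
    return 100.0 * (1.0 - ntc_fps / base_fps)

def frame_time_ms(fps):
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps

RESULTS = [  # (setting, baseline fps, NTC fps), as reported in source [2]
    ("1080p, Inference on Sample", 2466, 2088),
    ("4K, Inference on Sample", 930, 760),
    ("1080p, Inference on Load", 2466, 2444),
]

for setting, base, ntc in RESULTS:
    extra_ms = frame_time_ms(ntc) - frame_time_ms(base)
    print(f"{setting}: {fps_penalty_pct(base, ntc):.1f}% lower fps, "
          f"+{extra_ms:.3f} ms per frame")
```

In this demo, Inference on Sample adds roughly 0.07 ms per frame at 1080p and 0.24 ms at 4K, while Inference on Load costs under 1% of the frame rate at 1080p.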


Implications for Game Development and Storage

The introduction of NTC could have far-reaching effects on the gaming industry:

- Reduced Game Sizes: With many modern games exceeding 100GB in size, NTC could significantly reduce storage requirements and download times [2].

- VRAM Management: The technology could help address the controversial VRAM limitations of some modern GPUs [1].

- Industry Adoption: NTC is currently proprietary to Nvidia, but AMD and Intel may be developing their own systems; if developers show enough interest, one approach (or a combination of them) could become a standard part of DirectX and Vulkan [2].

Future Prospects and Industry Impact

As with previous Nvidia innovations like ray tracing acceleration and AI upscaling, Neural Texture Compression has the potential to become an industry standard. While it may not immediately result in dramatically smaller game sizes, it represents a significant step towards more efficient graphics rendering and storage utilization in the gaming industry [2].

The development of NTC aligns with the growing trend of AI-powered solutions in graphics technology, showcasing the potential for machine learning to address longstanding challenges in game development and hardware limitations.


Summarized by Navi
