NVIDIA's Sustainability Push: Simulation and AI in Digital Content Creation

2 Sources


[1]

NVIDIA's Rev Lebaredian - planting seeds for sustainability simulation interop

The recent boom in generative AI enthusiasm was driven by seminal innovations in Large Language Models (LLMs) trained on large collections of text for answering questions. The next wave will be Vision Language Models (VLMs) that can answer questions and translate 3D data across applications and tools. NVIDIA recently made significant progress to connect these silos with better USD integration, a new 3D processing framework called fVDB, and AI integrations.

Early adopters are taking advantage of these innovations to connect the dots between modeling and simulation silos to improve product designs (Siemens), optimize factory layouts (Foxconn and Pegatron), and improve traffic management (City of Palermo).

I recently sat down with Rev Lebaredian, NVIDIA VP of Omniverse & Simulation Technology, to talk about their recent progress, roadmap, and plans for the future.

NVIDIA was an early advocate for building open 3D data formats and standards. However, it has not been an easy road owing to engineering differences, not to mention vendor bias towards proprietary lock-in. Lebaredian says:

"A large part of what we're doing, our investment isn't for Nvidia. It's just furthering the industry. We're leading it here by our investment in USD and trying to establish these standards. Somebody's got to do it. And we've just taken on this burden because it seems like if we don't do it, it's not going to happen anytime soon."

But what's at stake is the potential for connecting these silos to give people simulation superpowers like teleportation, prediction, and optimization to learn faster and make better decisions. These come in handy when trying to meet sustainability goals, train a better robot, or improve a factory layout. Lebaredian explains:

"We believe that this is the only way we are going to build a sustainable, efficient future for all of the humans that are going to be on this earth. We have to build housing for them. Climate change is changing everything about where we live and how we produce food, shelter, and products. Without simulation, without being able to predict the future based on these what-if questions, there's no way we're going to do this efficiently."

Digital twins pioneers Michael Grieves and Paul Vickers previously told me that despite advances in areas like model-based systems engineering (MBSE) and multiphysics, siloed data was still a big problem. Despite some progress in USD interoperability, Lebaredian acknowledges that this also continues to be a challenge:

"We've been using computer-aided design (CAD) and computer-aided engineering (CAE) for decades now. There are a lot of great tools to help you design products and buildings, as well as the layers of things you have in our buildings and space, like electrical, plumbing, and HVAC. But all of these tools are independent of each other. They rarely work together. And it starts with the data model. Each one has its own way of representing these worlds. It's been okay and helped us get to where we are today, but we've reached a limit. But if you can't put them all into one model, there's no way to make a full digital twin of what you're talking about. You can't have multiple separate simulations that simulate the different layers of the building. You want to do it all at once. And if you can't do that, then we're back to square one, with just making educated guesses about how things should be designed. It needs to start with harmonizing all of this data."

However, the world is really complex, and describing various facets of the world in a unified way is even harder. There is also pushback from vendors biased toward proprietary lock-in. Lebaredian notes:

"In some cases, there's short-term interest. They don't necessarily want to interoperate and allow the data to flow from in and out of their systems. Doing that takes a certain kind of long-term, collectivist, collective good thinking."
He thinks it is important to consider the impact of HTML open standards on the massive growth of the Internet. It would be impossible to imagine the web happening in the way it did without the open data standards to begin with. So, it's also important for vendors to consider the opportunity costs of not collaborating on 3D data to create a network effect.

Lebaredian breaks down their recent progress into two tracks. On the one hand, they are looking for better ways to build on and integrate across the large body of work on representing things inside the 3D world. They are also exploring the best ways to incorporate these existing formats with innovations in AI, physics simulations, and modern development pipelines.

The first priority is to take the 3D modeling approaches already out there and make an interoperable 3D standard, USD. This is hard because there are a lot of opinions, and companies might not like the idea of a standard when they are already dominant in one area. When NVIDIA first started down this path, it felt like a chicken-and-egg problem. Toolmakers pushed back, saying that customers were not asking for USD support. So, NVIDIA decided to do the initial work of adding what was needed for USD, along with plugins and connectors to the major 3D formats. Vendors like Autodesk, Epic Games, and Siemens are adding native USD support.

Another huge breakthrough was forming the Alliance for OpenUSD (AOUSD). Lebaredian says the five founding members surprised them a bit. Apple, for example, is not known for pushing open standards, but they needed something like web standards to build their spatial computing stack. Now, there are about thirty members of AOUSD.

The other track combines this with recent innovations in 3D representations like Neural Radiance Fields (NeRFs) and Gaussian Splats for capturing reality and new physics-based AI models. This area continues to be a work in progress, and it is too early for standardization.
Lebaredian explains:

"We want to allow for all those things to happen, but we also need them to stabilize before you make them into hard standards. So, you want to make the standards compatible with the new things happening. Gaussian splats just showed up right after NeRFs was the thing, and we're like, 'Which one's going to be the better representation going forward?' It's not quite clear. We should add support for these in our software systems and make USD as compatible as possible. But it should not be a standard before we dictate any of these. Let's see it used for a while without changing because a lot of stuff is progressing quickly right now."

NVIDIA has also made considerable progress on various physics capabilities on top of its PhysX open-source middleware. The first iteration focused on how things looked to improve game rendering. But increasingly, the engine is evolving to support how things act across different physical domains. For example, if you drop a ball, will it bounce, splat, or thud? How will your new car or product hold up under different conditions, or how might soft bodies like cloth or malleable plastic behave? Lebaredian explains:

"We are adding new layers of other information for different types of physics, phenomena like soft bodies, to describe things like cloth or malleable plastics. We're adding layers to describe fluids and so on. The goal is to eventually get to the point where everything we see around us, everything you might have inside the world, can be described in a standard way, in USD, so that any simulator can take that USD data and set that up as the initial conditions from which to predict a future."

The next level of this is improving workflows for connecting these models to AI surrogate models that glean essential physics principles but can run thousands of times faster. These are still a work in progress and can help quickly identify candidate product designs or factory layouts.
However, they must be double-checked against traditional physics simulation approaches to confirm results.

I am pretty sure Max, my 5-year-old grandson, understands physics better than the latest LLMs. This is a pretty sad state of affairs, considering gen AI proponents were promising that artificial general intelligence (AGI) is just around the corner. But don't ask Max to sort out your warehouse optimization or design problems any time soon. He is not the best artist, yet he is pretty clear about what physical forces might break his toys or the level of torture he can safely endure from his older brother.

The main challenge with the current crop of AI tools is that they don't really understand physics, how things break, or how they might be designed more effectively. I brought up vision language models as the next iteration of all of this earlier. That's a bit of a misnomer; we should probably be thinking of 3DLMs or 4DLMs (including time) to describe these things. Camera data is just a placeholder for the things we really care about, which is why NeRFs and Gaussian Splats are so interesting since they make camera data 4D.

It's surprising how little attention is being paid to these sorts of things that will actually solve real-world problems. Looking at the AOUSD member roster, NVIDIA and Intel are the only chip companies listed as members. There is no AMD, Samsung, Qualcomm, or the dozens of other companies developing innovative AI chips. This is a serious oversight on their part. Also missing are PTC, Dassault Systèmes, and Nemetschek. They all need to be involved to move this kind of collaboration along. Sure, they might lose out on some revenues in the short run, but as a result, they are also missing out on bigger long-term opportunities from more connected and integrated data.

For what it's worth, I opined that NVIDIA was undervalued at $2 trillion in February.
They have breached $3 trillion, and maybe that's a bit much, considering the reconsideration of AI hype. But if efforts like Lebaredian's to open up 3D/4D data succeed in unlocking further opportunities, there might yet be more room for growth. It boggles my mind that most of the other chip vendors are not thinking this way. I would certainly apply a bit more trust to NVIDIA CEO Jensen Huang than most of the other AI vendors these days. He seems to have a long-term vision and has not fallen to the affliction of firing good employees working on big, long-term things in order to raise stock prices in the short run.

[2]

Nvidia's ecosystem ambition

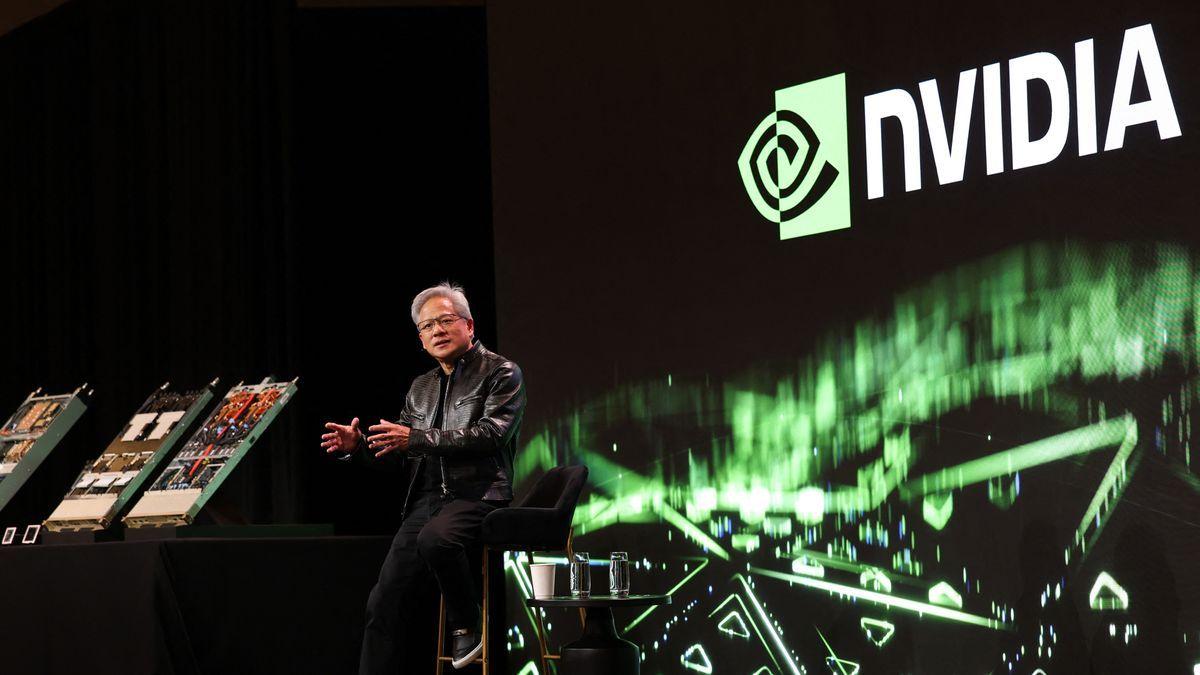

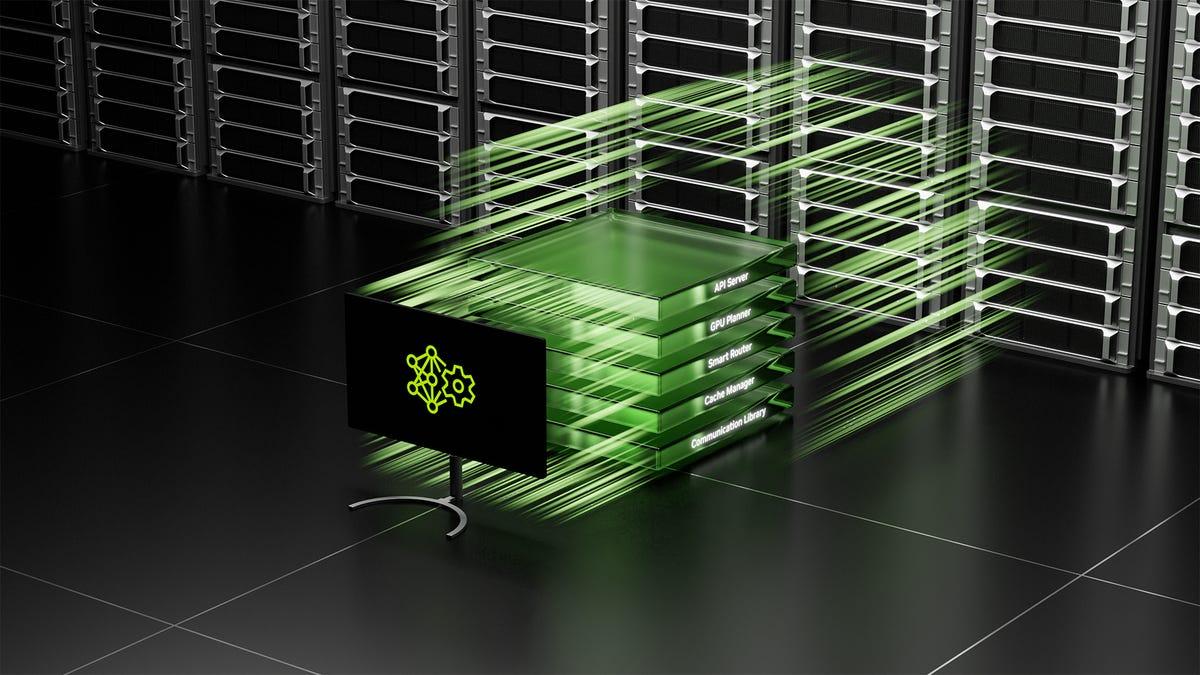

SIGGRAPH 2024 felt very much like a celebration hosted by Nvidia, which has been sponsoring SIGGRAPH every year since 2020. Nvidia's presence was everywhere at this annual conference centered around computer graphics: 20 papers at the intersection of generative AI and simulation, Gen AI Day, developer education sessions, OpenUSD announcements... the list goes on.

Nvidia's CEO Jensen Huang first had a fireside chat with Lauren Goode, senior writer at WIRED. During the thirty-minute break, Huang grabbed a microphone and asked the crowd to ask him anything. While many PhDs tried to find a good angle to take selfies with Huang speaking in the background, Huang discussed how the latest chip design increases inference speed, and how synthetic data, domain randomization, and reinforcement learning help push the frontier even when the industry runs out of human-generated data. All of this culminated in Huang showing up as host, welcoming Mark Zuckerberg, Meta's CEO, to the stage for a conversation on the next computing platform.

Meta, Google, Microsoft, and Amazon have been increasing capital spending that benefits Nvidia. Dan Gallagher, a columnist for The Wall Street Journal, asserted that "It's Nvidia's market. Everyone else just lives in it -- though not nearly as well." (See Big Tech's AI Race Has One Main Winner: Nvidia, published the same week SIGGRAPH took place.)

The three waves

This is just the beginning for Nvidia. At SIGGRAPH, Huang summarized the first and the second wave of AI and described the third wave. "The first wave is accelerated computing that reduces energy consumed, allows us to deliver continued computational demand without all of the power continuing to grow with it," Huang said. The second wave is for enterprises, ideally every organization, to create their own AIs. "Everybody would be augmented and to have a collaborative AI. They could empower them, helping them do better work."
The next wave of AI is "physical AI", according to Huang. He used the Chinese science fiction novel "The Three-Body Problem" to introduce the concept. He called it "the three computer problem": one computer creates the AI; another simulates the AI using synthetic data generation, or has humanoid robots refine it; and, last but not least, a third computer runs the AI. "Each one of these computers, depending on whether you want to use the software stack, the algorithms on top, or just the computing infrastructure, or just the processor for the robot, or the functional safety operating system that runs on top of it, or the AI and computer vision models that run on top of that, or just the computer itself. Any layer of that stack is open for robotics developers," Huang said.

The infrastructure layers of AI have been covered by big tech companies, with Nvidia leading from the hardware side, and now more than ever on the software side as well. "We've always been a software company, and even first," said Huang. Nvidia set the industry standard back in 2006 with the introduction of CUDA, which speeds up computing applications with GPUs. Now Nvidia's roots are not just expanding but also deepening.

OpenUSD

"One of the formats that we deeply believe in is OpenUSD," Huang said. To improve interoperability across 3D tools, data, and workflows, Nvidia co-founded the Alliance for OpenUSD (AOUSD) along with Pixar, Adobe, Apple, and Autodesk in 2023 to promote USD (Universal Scene Description). USD enables the assembly and organization of any number of assets into virtual sets, scenes, and shots, transmits them from application to application, and non-destructively edits them.

An analogy can demonstrate the significance of USD: if the traditional file types .FBX and .OBJ are like .PNG and .JPEG, USD is similar to Adobe Photoshop's project file .PSD. USD's open-sourced layering system means each operation can be turned on and off.
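To make the layering idea concrete, here is a minimal toy sketch in plain Python. It is not the OpenUSD API: the `Layer` class, the `compose` function, and the attribute names such as `chair.color` are hypothetical, invented only for illustration, and real USD composition is considerably richer. The sketch assumes only two things described above: edits live in separate layers that override weaker ones, and a layer can be switched off without touching the underlying data.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Hypothetical layer: a named set of attribute overrides that can be muted."""
    name: str
    opinions: dict = field(default_factory=dict)
    muted: bool = False


def compose(layers):
    """Resolve attributes across layers; earlier layers in the list are stronger."""
    resolved = {}
    # Walk from weakest to strongest so stronger opinions overwrite weaker ones.
    for layer in reversed(layers):
        if layer.muted:
            continue  # a muted layer contributes nothing, but its data is kept
        resolved.update(layer.opinions)
    return resolved


# The base asset and a separate, non-destructive edit on top of it.
base = Layer("modeling", {"chair.color": "gray", "chair.height_cm": 90})
lookdev = Layer("lookdev", {"chair.color": "red"})
stack = [lookdev, base]  # strongest first

with_override = compose(stack)     # the lookdev edit wins for chair.color
lookdev.muted = True               # "turn the operation off"
without_override = compose(stack)  # falls back to the untouched base values
```

Because the edit is stored beside the base data rather than written into it, muting the `lookdev` layer restores the original values, which is the sense in which such edits are non-destructive.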
Production doesn't need to be done linearly with USD, which is ideal for small teams and even more tempting for a matrix organization. It can load complex data without having teams worried about data management. "OpenUSD is the first format that brings together multimodality from almost every single tool and allows it to interact, to be composed together, to go in and out of these virtual worlds." Huang claimed that over time, developers can "bring in just about any format" into USD. Nvidia announced that the Unified Robot Description Format (URDF) is now compatible with USD.

Pixar was the original inventor of USD and proved that it can be used to optimize 3D workflows; Apple and other companies have been active contributors to its development. The product releases Nvidia had at SIGGRAPH this year provide the developer community with even more reasons to be part of the ecosystem. "We taught an AI how to speak USD, OpenUSD." Huang introduced the USD Code NIM microservice, USD Search NIM microservice, and USD Validate NIM microservice. "Omniverse generates the USD and uses USD search to then find the catalog of 3D objects that it has. It composes the scene using words and then generative AI uses that augmentation to condition the generation process and so therefore, the work that you do could be much, much better controlled."

During the developer meetup organized by Nvidia, engineers from IKEA, Electronic Arts, and a European surveillance company all showed interest in potentially switching to USD. Depending on the project and the supporting tools, USD could speed up creation from months to days. Even in some unique situations, when enterprises do not want the files to be opened by some applications, they can still write a converter to do so while getting the benefits of interoperability before files get to the converter.
Early adopters and use cases of NIM microservices are growing: Foxconn created a digital twin of a factory under development; WPP brought the solutions to their content creation pipeline, which is built on Nvidia Omniverse, serving their client, The Coca-Cola Company.

Partnership

Other than interoperability, the lack of assets can be another showstopper for enterprise 3D adoption. There are several text-to-3D solutions on the market, but most were trained with data from the open internet, which has legal implications. Nvidia partnered with Shutterstock and Getty Images to help each of them train their own text-to-3D model with their respective proprietary data. These models are accessible through Nvidia Picasso, an AI foundry for software developers and service providers to build and deploy generative AI models. With the advancement of model output and industrial awareness, Shutterstock predicted a "near-universal adoption" of generative AI in creative industries spanning graphic design, 3D modeling, animation, video editing, and concept art.

Author's bio

Kari Wu is a Senior Technical Product Manager at Unity Technologies, the leading platform for creating real-time interactive 3D content. Previously, she was an entrepreneur focused on augmented and virtual reality. Kari founded FilmIt, a startup enabling users without formal training to film professional-looking videos using augmented reality and automated editing solutions. Born in Taiwan and raised across cultures, Kari brings a global vision to her work. Her experience consulting businesses in South Korea and Taiwan, and living in Boston, Los Angeles, and San Francisco, gives her a unique ability to see from multiple perspectives. From immigrant to entrepreneur to tech leader, Kari's lifelong curiosity has driven her journey of evolving identities and careers. Kari earned her MBA and MS in Media Ventures from Boston University.


NVIDIA showcases its commitment to sustainability and interoperability in digital content creation at SIGGRAPH 2024, highlighting advancements in simulation technology and AI-powered tools.

NVIDIA's Sustainability Vision

At SIGGRAPH 2024, NVIDIA's Rev Lebaredian, Vice President of Omniverse and Simulation Technology, unveiled the company's ambitious plans to revolutionize digital content creation with a focus on sustainability [1]. Lebaredian emphasized the importance of simulation in reducing the environmental impact of content production, stating that virtual environments can significantly decrease the need for physical resources and travel.

Advancements in Simulation Technology

NVIDIA showcased its latest developments in simulation technology, demonstrating how it can be used to create highly realistic digital environments. These simulations are not only visually impressive but also serve as a sustainable alternative to traditional content creation methods. By leveraging AI and advanced graphics processing, NVIDIA aims to make digital content creation more efficient and environmentally friendly [1].

AI-Powered Tools for Content Creation

The company introduced a range of AI-powered tools designed to streamline the content creation process. These tools, which include advanced rendering capabilities and automated asset generation, are set to reduce the time and resources required for producing high-quality digital content. NVIDIA's commitment to AI integration in creative workflows was evident throughout their SIGGRAPH presentations [2].

Interoperability and OpenUSD

A key focus of NVIDIA's presentation was the promotion of interoperability within the digital content creation ecosystem. The company highlighted its support for OpenUSD (Universal Scene Description), an open-source framework for 3D computer graphics data interchange. By championing OpenUSD, NVIDIA aims to foster collaboration and efficiency across different software platforms and industries [2].

Impact on Industries

NVIDIA's innovations are poised to have a significant impact across various industries, including film production, game development, and architectural visualization. The company demonstrated how its technologies can be applied to create virtual production sets, design complex 3D environments, and simulate real-world scenarios with unprecedented accuracy [1].

Future of Digital Content Creation

Looking ahead, NVIDIA envisions a future where digital content creation is not only more sustainable but also more accessible and efficient. The company's investments in AI, simulation, and interoperability are laying the groundwork for a new era in creative industries, where the lines between physical and digital realities continue to blur [2].
