NVIDIA Unveils NVLink Fusion: Enabling Custom AI Infrastructure with Industry Partners

5 Sources

[1]

Nvidia announces NVLink Fusion to allow custom CPUs and AI Accelerators to work with its products

Nvidia made a slew of announcements at Computex 2025 here in Taipei, Taiwan, focused on its data center and enterprise AI initiatives, including its new NVLink Fusion program that allows customers and partners to use the company's key NVLink technology for their own custom rack-scale designs. The program enables system architects to use non-Nvidia CPUs or accelerators in tandem with Nvidia's products in its rack-scale architectures, thus opening up new possibilities. Nvidia has amassed a number of partners for the initiative, including Qualcomm and Fujitsu, who will integrate the technology into their CPUs. NVLink Fusion will also extend to custom AI accelerators, so Nvidia has roped several silicon partners into the NVLink Fusion ecosystem, including Marvell and MediaTek, along with chip design software companies Synopsys and Cadence.

NVLink has served as one of the key technologies that assured Nvidia's dominance in AI workloads, as communication speeds between GPUs and CPUs in an AI server are one of the largest barriers to scalability, and thus to peak performance and power efficiency. Nvidia's NVLink is a proprietary interconnect for direct GPU-to-GPU and CPU-to-GPU communication that delivers far more bandwidth and lower latency than the standard PCIe interface (up to a 14X bandwidth advantage), even though it leverages the tried-and-true PCIe electrical interface. Nvidia has increased NVLink's performance over the course of several product generations, but the addition of custom NVLink Switch silicon allowed the company to extend NVLink from within a single server node to rack-scale architectures that enable massive clusters of GPUs to chew through AI workloads in tandem. NVLink has thus served as a core advantage that competitors such as AMD and Broadcom have failed to match.

However, NVLink is a proprietary interface, and aside from the company's early work with IBM, Nvidia has largely kept this technology captive to products using its own silicon. In 2022, Nvidia made its C2C (Chip-to-Chip) technology, an inter-die/inter-chip interconnect, available for other companies to use in their own silicon to communicate with Nvidia GPUs by leveraging industry-standard Arm AMBA CHI and CXL protocols. The NVLink Fusion program is much broader, addressing larger scale-out and scale-up applications in rack-scale architectures using NVLink connections. NVLink Fusion breaks from that captive approach, allowing Fujitsu and Qualcomm to use the interface with their own CPUs, thus unlocking new options.

Nvidia has also roped in custom silicon accelerators, like ASICs, from designers MediaTek, Marvell, and Alchip, enabling other types of custom AI accelerators to work in tandem with Nvidia's Grace CPUs. Astera Labs has also joined the ecosystem, presumably to provide specialized NVLink Fusion interconnectivity silicon. Chip design software providers Cadence and Synopsys have also joined the initiative to provide a robust set of design tools and IP.

Qualcomm recently confirmed that it is bringing its own custom server CPU to market, and while details remain vague, the company's participation in the NVLink ecosystem will allow its new CPUs to ride the wave of Nvidia's rapidly expanding AI ecosystem. Fujitsu has also been working on bringing its mammoth 144-core Monaka CPUs, which feature 3D-stacked CPU cores over memory, to market. "Fujitsu's next-generation processor, FUJITSU-MONAKA, is a 2-nanometer, Arm-based CPU aiming to achieve extreme power efficiency. Directly connecting our technologies to NVIDIA's architecture marks a monumental step forward in our vision to drive the evolution of AI through world-leading computing technology -- paving the way for a new class of scalable, sovereign and sustainable AI systems," said Vivek Mahajan, CTO at Fujitsu.

Nvidia is also releasing new Nvidia Mission Control software to unify operations and orchestration, optimizing system-level validation and workload management, a key capability that speeds time to market.

Nvidia rivals Broadcom, AMD, and Intel are notably absent from the NVLink Fusion ecosystem. These companies, and many more, are members of the Ultra Accelerator Link (UALink) consortium, which aims to provide an open industry-standard interconnect to rival NVLink, thus democratizing rack-scale interconnect technologies. Meanwhile, Nvidia's partners are forging ahead with chip design services and products the company says are available now.

[2]

NVIDIA Unveils NVLink Fusion for Industry to Build Semi-Custom AI Infrastructure With NVIDIA Partner Ecosystem

COMPUTEX -- NVIDIA today unveiled NVIDIA NVLink Fusion™ -- new silicon that lets industries build semi-custom AI infrastructure with the vast ecosystem of partners building with NVIDIA NVLink™, the world's most advanced and widely adopted computing fabric.

MediaTek, Marvell, Alchip Technologies, Astera Labs, Synopsys and Cadence are among the first to adopt NVLink Fusion, enabling custom silicon scale-up to meet the requirements of demanding workloads for model training and agentic AI inference. Using NVLink Fusion, Fujitsu and Qualcomm Technologies CPUs can also be integrated with NVIDIA GPUs to build high-performance NVIDIA AI factories.

"A tectonic shift is underway: for the first time in decades, data centers must be fundamentally rearchitected -- AI is being fused into every computing platform," said Jensen Huang, founder and CEO of NVIDIA. "NVLink Fusion opens NVIDIA's AI platform and rich ecosystem for partners to build specialized AI infrastructures."

NVLink Fusion also equips cloud providers with an easy path to scale out AI factories to millions of GPUs, using any ASIC, NVIDIA's rack-scale systems and the NVIDIA end-to-end networking platform -- which delivers up to 800Gb/s of throughput and features NVIDIA ConnectX®-8 SuperNICs, NVIDIA Spectrum-X™ Ethernet and NVIDIA Quantum-X800 InfiniBand switches, with co-packaged optics available soon.

A Technology Ecosystem Interconnected

Using NVLink Fusion, hyperscalers can work with the NVIDIA partner ecosystem to integrate NVIDIA rack-scale solutions for seamless deployment in data center infrastructure. AI chipmaking partners creating custom AI compute deployable with NVIDIA NVLink Fusion include MediaTek, Marvell, Alchip, Astera Labs, Synopsys and Cadence.

"By leveraging our world-class ASIC design services and deep expertise in high-speed interconnects, MediaTek is collaborating with NVIDIA to build the next generation of AI infrastructure," said Rick Tsai, vice chairman and CEO of MediaTek. "Our collaboration, which began in the automotive segment, now extends even further, enabling us to deliver scalable, efficient and flexible technologies that address the rapidly evolving needs of cloud-scale AI."

"Marvell is collaborating with NVIDIA to redefine what's possible for AI factory integration," said Matt Murphy, chairman and CEO of Marvell. "Marvell custom silicon with NVLink Fusion gives customers a flexible, high-performance foundation to build advanced AI infrastructure -- delivering the bandwidth, reliability and agility required for the next generation of trillion-parameter AI models."

"Alchip is supporting adoption of NVLink Fusion by broadening its availability through a design and manufacturing ecosystem, encompassing advanced processes and proven packaging and supported by the ASIC industry's most flexible engagement," said Johnny Shen, CEO of Alchip. "It's our contribution to ensuring that the next generation of AI models can be trained and deployed efficiently to meet the demands of tomorrow's intelligent applications."

"Building on our rich history of close collaboration with NVIDIA, we are thrilled to add purpose-built connectivity solutions to address the NVLink Fusion ecosystem," said Jitendra Mohan, CEO of Astera Labs. "Low-latency and high-bandwidth scale-up interconnects with native support for memory semantics is critical for maximizing AI server utilization and performance. By expanding our scale-up connectivity portfolio with NVLink solutions, we are providing more optionality with faster time to market for our hyperscaler and enterprise AI customers."

"Data centers are transforming into AI factories, and Synopsys' industry-leading AI chip design solutions and standards-based interface IP are mission-critical enablers," said Sassine Ghazi, president and CEO of Synopsys. "Our support for NVIDIA NVLink Fusion reflects our commitment to fostering an open and scalable ecosystem for next-generation AI and high-performance computing."

"HPC and AI workload demands are unique and evolving rapidly, and hyperscalers architecting the most advanced custom AI systems rely on Cadence to deliver enabling technology from data centers to the edge," said Boyd Phelps, senior vice president and general manager of the Silicon Solutions Group at Cadence. "Our comprehensive IP portfolio, including design IP, chiplet infrastructure, subsystems and other critical IP, complements the NVIDIA NVLink ecosystem, accelerating the delivery of AI factories that are powerful, energy-efficient and production-ready at scale."

NVLink Fusion also enables AI innovators like Fujitsu and Qualcomm Technologies to each couple their custom CPUs with NVIDIA GPUs in a rack-scale architecture to boost AI performance.

"Combining Fujitsu's advanced CPU technology with NVIDIA's full-stack AI infrastructure delivers new levels of performance," said Vivek Mahajan, CTO at Fujitsu. "Fujitsu's next-generation processor, FUJITSU-MONAKA, is a 2-nanometer, Arm-based CPU aiming to achieve extreme power efficiency. Directly connecting our technologies to NVIDIA's architecture marks a monumental step forward in our vision to drive the evolution of AI through world-leading computing technology -- paving the way for a new class of scalable, sovereign and sustainable AI systems."

"Qualcomm Technologies' advanced custom CPU technology with NVIDIA's full-stack AI platform brings powerful, efficient intelligence to data center infrastructure," said Cristiano Amon, president and CEO of Qualcomm Technologies. "With the ability to connect our custom processors to NVIDIA's rack-scale architecture, we're advancing our vision of high-performance, energy-efficient computing to the data center."

NVIDIA NVLink Demonstrates Industry-Proven Scale

To maximize AI factory throughput and performance in the most power-efficient way, the fifth-generation NVIDIA NVLink platform includes NVIDIA GB200 NVL72 and GB300 NVL72, compute-dense racks that provide a total bandwidth of 1.8 TB/s per GPU -- 14x faster than PCIe Gen5. Leading hyperscalers are already deploying NVIDIA NVLink full-rack solutions and can speed time to availability by standardizing their heterogeneous silicon data centers on the NVIDIA rack architecture with NVLink Fusion.

Software Crafted for AI Factories

AI factories connected with NVIDIA NVLink Fusion are powered by NVIDIA Mission Control™, a unified operations and orchestration software platform that automates the complex management of AI data centers and workloads. NVIDIA Mission Control enhances every aspect of AI factory operations -- from configuring deployments to validating infrastructure to orchestrating mission-critical workloads -- to help enterprises get frontier models up and running faster.

Availability

NVIDIA NVLink Fusion silicon design services and solutions are available now from MediaTek, Marvell, Alchip, Astera Labs, Synopsys and Cadence.

[3]

At Computex, Nvidia debuts AI GPU compute marketplace, NVLink Fusion and the future of humanoid AI - SiliconANGLE

Nvidia Corp. today announced a raft of advancements at Computex 2025 in Taipei across the spectrum of artificial intelligence, including an AI marketplace that connects the world's developers to GPU compute, as well as new tools for humanoid robots. The company also unveiled Nvidia NVLink Fusion, a new silicon solution that lets partners build semicustom AI infrastructure using NVLink, the company's existing high-speed interconnect technology.

DGX Cloud Lepton is an AI platform that provides a compute marketplace connecting the world's developers building today's agentic and physical AI applications with tens of thousands of GPUs available from a global network of Nvidia's cloud partners. Nvidia's Cloud Partners include big names such as CoreWeave Inc., Crusoe Energy Systems LLC, Firmus Technologies Inc., Foxconn GMI Cloud Solutions Pvt. Ltd., Lambda Labs Inc., Nscale GmbH, Softbank Corp. and Yotta Data Services Private Ltd. Using Lepton, developers can tap into GPU compute capacity in specific regions for on-demand and long-term compute depending on their operational requirements.

"Nvidia DGX Cloud Lepton connects our network of global GPU cloud providers with AI developers," said Jensen Huang, founder and chief executive of Nvidia. "Together with our NCPs, we're building a planetary-scale AI factory."

Under the hood, Lepton abstracts away the purchasing, pooling and management of compute for developers and allows partners to offer GPU capacity as a unified experience. Nvidia said this allows developers and businesses to focus on experimenting and building AI features, while flexibly obtaining the GPU capacity they need to test, train and deploy AI models whenever they need it.

Nvidia today announced the first update to its foundation model for humanoid robots, Isaac GR00T N1.5. Physical AI is the combination of AI with real-world physical interaction, allowing machines to perceive, understand and act upon the environment. Foundation models such as GR00T act as AI brains for robots that can perceive the world and reason about it, allowing them to behave similarly to humans so that they can complete tasks such as identifying objects, moving through the world and picking up objects.

GR00T N1.5 represents an upgrade over the last generation and can better adapt to new environments and workspace configurations. Nvidia said it significantly improves the model's success rates for common material handling and manufacturing tasks like sorting and putting away objects. It can be deployed on the company's Jetson Thor robot computer, launching later this year. Early adopters of GR00T N models include companies such as AeiRobot, Foxlink, Lightwheel and NEURA Robotics.

Teaching robots requires a great deal of data, and most of it has to be provided by human actors who can only deliver so much. That data is called "trajectories": how the robot must move to grasp, handle and move objects to perform tasks in varied environments. There are only so many hours in a day for human actors to perform tasks to generate data, so Nvidia has created GR00T-Dreams, a way to generate videos of a robot performing new tasks in new environments. GR00T-Dreams can use a single image as the input and create videos from which the robot learns how to perform new tasks. In AI parlance, this is a way to produce synthetic data for robot AI training, supplying large amounts of additional data to learn from. GR00T-Dreams complements the Isaac GR00T-Mimic blueprint, a tool that generates additional synthetic motion data from a small number of human demonstrations. Mimic uses Omniverse and Cosmos to augment existing data, whereas GR00T-Dreams generates entirely new data.

Nvidia today announced NVLink Fusion, which it said brings custom central processing units and accelerators into its NVLink platform at industry-proven scale. "A tectonic shift is underway: for the first time in decades, data centers must be fundamentally rearchitected -- AI is being fused into every computing platform," said Huang.

Fusion is designed to give cloud providers a way to scale out to millions of GPUs, using any application-specific integrated circuit, along with Nvidia's rack-scale systems and the Nvidia end-to-end networking platform, which delivers up to 800 gigabits per second of throughput. The company said that using NVLink Fusion, Fujitsu Ltd. and Qualcomm Technologies Inc. CPUs can also be integrated with Nvidia GPUs to build high-performance Nvidia AI factories.

AI chipmaker partners working with Nvidia to create custom AI compute deployable on Nvidia NVLink include MediaTek Inc., Marvell Technology Inc., Alchip Technologies Ltd., Astera Labs Inc., Synopsys Inc. and Cadence Design Systems Inc. "HPC and AI workload demands are unique and evolving rapidly, and hyperscalers architecting the most advanced custom AI systems rely on Cadence to deliver enabling technology from data centers to the edge," said Boyd Phelps, senior vice president and general manager of the Silicon Solutions Group at Cadence.

[4]

Nvidia Reveals Offering To Build Semi-Custom AI Systems With Hyperscalers

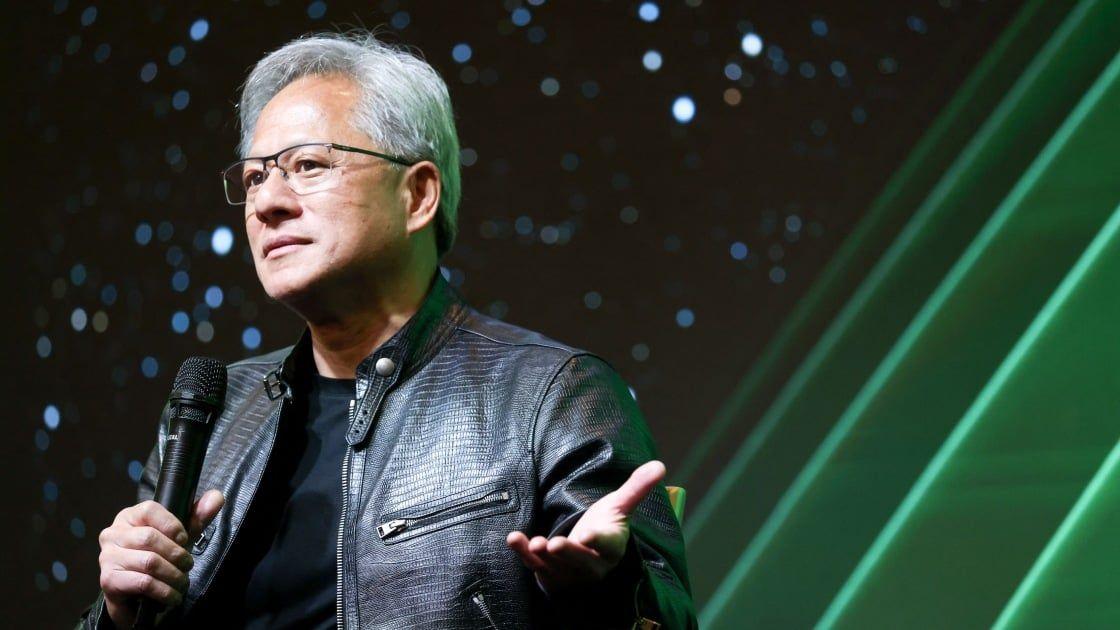

Nvidia reveals the NVLink Fusion offering that it says will allow the company to build semi-custom AI infrastructure with hyperscalers that are designing custom chips. 'This gives customers more choice and flexibility, while expanding the ecosystem and creating new opportunities for innovation with Nvidia at the center,' an Nvidia executive says. As hyperscalers like Amazon and Microsoft continue to diversify their supply chains by building custom AI chips, Nvidia is revealing a new silicon offering that it hopes will keep the company "at the center" of AI infrastructure for such customers. At Computex 2025 in Taiwan Monday, Nvidia unveiled NVLink Fusion, a silicon offering that it said will allow the company to use its NVLink interconnect technology to build semi-custom, rack-scale AI infrastructure with hyperscalers. [Related: How Dell, Lenovo And Supermicro Are Adapting To Nvidia's Fast AI Chip Transitions] The Santa Clara, Calif.-based company said several semiconductor firms, including MediaTek and Marvell, will adopt NVLink Fusion to create custom AI silicon that will be paired with the AI infrastructure giant's Arm-based Grace CPUs. Other companies planning to do this include Astera Labs, AIchip Technologies, Synopsys and Cadence. Design services from these companies are available now, according to Nvidia. Nvidia said it is also working with Fujitsu and Qualcomm, whose CPUs can be integrated with its GPUs using NVLink Fusion to "build high-performance Nvidia AI factories." "A tectonic shift is underway: For the first time in decades, data centers must be fundamentally rearchitected -- AI is being fused into every computing platform," said Nvidia founder and CEO Jensen Huang (pictured above) in a statement. "NVLink Fusion opens Nvidia's AI platform and rich ecosystem for partners to build specialized AI infrastructure." 
In a briefing, Dion Harris, senior director of high-performance computing and AI factory solutions go-to-market at Nvidia, said NVLink Fusion is the company's standard NVLink chip-to-chip interconnect technology made available for non-NVLink processors. "The reason why that's really important is when you think about the large hyperscale data centers that are being built today based on a lot of our scale-up architecture, this now allows them to go and standardize across their entire compute fleet on the Nvidia platform," he said.

Nvidia uses NVLink in platforms like the GB200 NVL72 to provide high-speed connections between GPUs and CPUs. The company said the latest iteration provides a total bandwidth of 1.8 TBps per GPU, which is 14 times faster than PCIe Gen 5.

In response to a question from CRN, Harris said Nvidia isn't announcing any customer engagements right now but teased that it will have "more updates in the near future." "This gives customers more choice and flexibility, while expanding the ecosystem and creating new opportunities for innovation with Nvidia at the center," he said.

In the briefing, Nvidia showed diagrams of how NVLink Fusion can be used in two different rack-scale system configurations that resemble the company's GB300 NVL72 platform and future iterations. One configuration pairs Nvidia GPUs with a custom CPU while the other pairs Nvidia CPUs with a custom accelerator chip. Harris said these are the only two configurations the company is talking about for now and called them "a starting point."

The implementation of NVLink Fusion is different depending on whether a customer wants to use a custom CPU or a custom accelerator chip, according to Harris. For the custom CPU configuration, the CPU is connected to Nvidia's GPUs via the NVLink chip-to-chip interconnect in a rack-scale platform. But for custom accelerator chips, Nvidia has developed an I/O chiplet that customers can integrate into their chip designs.
Harris said the I/O chiplet has been "taped out," referring to the final stage of the design process before it is manufactured. This I/O chiplet will let the accelerator chip interface with the NVLink switch, which enables "access to the custom, scale-up NVLink architecture," and the NVLink chip-to-chip interconnect, which enables communication with Nvidia's Grace CPU, according to Harris. These NVLink Fusion-based systems take advantage of Nvidia's end-to-end networking platform, which includes the company's ConnectX-8 SuperNICs as well as the Spectrum-X Ethernet and Quantum-X InfiniBand switches. "This gives them a proven scale-up and scale-out solution that they can deploy and basically accelerate their time-to-market for having that rack-scale solution for their custom compute," Harris said.
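The two configurations Harris described differ mainly in which NVLink path each component uses. As a reading aid, the Python sketch below encodes them as plain data. It is not an NVIDIA API: every class, field and string in it is hypothetical, and the GPU-to-GPU switch link in the custom-CPU case is an assumption based on the NVL72-style racks the diagrams resemble.

```python
"""Illustrative model of the two NVLink Fusion rack configurations described above.

Not an NVIDIA API: all names here are hypothetical and only record which
interconnect links which component, as described in the briefing.
"""
from dataclasses import dataclass
from enum import Enum


class Link(Enum):
    C2C = "NVLink chip-to-chip"   # CPU <-> GPU/accelerator link
    SWITCH = "NVLink switch"      # scale-up fabric between accelerators


@dataclass(frozen=True)
class Connection:
    a: str
    b: str
    via: Link


@dataclass(frozen=True)
class FusionConfig:
    name: str
    uses_io_chiplet: bool         # custom accelerators integrate Nvidia's I/O chiplet
    connections: tuple[Connection, ...]


CUSTOM_CPU = FusionConfig(
    name="custom CPU + Nvidia GPUs",
    uses_io_chiplet=False,
    connections=(
        Connection("custom CPU", "Nvidia GPU", Link.C2C),
        # Assumption: GPU-to-GPU scale-up over NVLink switches, as in NVL72 racks.
        Connection("Nvidia GPU", "Nvidia GPU", Link.SWITCH),
    ),
)

CUSTOM_ACCELERATOR = FusionConfig(
    name="Nvidia Grace CPU + custom accelerator",
    uses_io_chiplet=True,
    connections=(
        # The I/O chiplet gives the accelerator both paths described by Harris.
        Connection("custom accelerator", "NVLink switch", Link.SWITCH),
        Connection("custom accelerator", "Nvidia Grace CPU", Link.C2C),
    ),
)

CONFIGS = (CUSTOM_CPU, CUSTOM_ACCELERATOR)


def links_for(config: FusionConfig, component: str) -> set[Link]:
    """Return the interconnect types that touch the named component."""
    return {c.via for c in config.connections if component in (c.a, c.b)}


if __name__ == "__main__":
    for cfg in CONFIGS:
        chiplet = "needs I/O chiplet" if cfg.uses_io_chiplet else "no I/O chiplet"
        print(f"{cfg.name} ({chiplet})")
        for conn in cfg.connections:
            print(f"  {conn.a} <-> {conn.b} via {conn.via.value}")
```

Querying `links_for(CUSTOM_ACCELERATOR, "custom accelerator")` returns both link types, which mirrors the point that only the accelerator configuration needs the I/O chiplet to reach the switch and the Grace CPU.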

[5]

DGX Spark, NVLink Fusion, RTX PRO Servers - NVIDIA's Full AI Stack Revealed

At COMPUTEX 2025, NVIDIA CEO Jensen Huang returned to Taipei with his trademark leather jacket and an arsenal of announcements that reinforce the company's vision of a world driven by AI factories. From partnerships with Foxconn and TSMC to rack-scale systems, semi-custom silicon, and desktop AI workstations, this year's keynote was less about launching a single chip and more about building the infrastructure for AI at every level -- from cloud to desktop. Here's a breakdown of everything NVIDIA revealed.

Not all AI factories need 10,000 GPUs. For enterprises looking to deploy AI on-premises, NVIDIA introduced its new RTX PRO Servers -- built around the Blackwell-based RTX PRO 6000 Server Edition GPUs. These servers support up to 8 GPUs and come bundled with BlueField DPUs, Spectrum-X networking, and NVIDIA AI Enterprise software. The emphasis here is on universal acceleration -- allowing enterprises to run everything from multimodal AI inference and graphics to simulation and design workloads.

NVIDIA also introduced a validated reference design called the "Enterprise AI Factory." Major OEMs such as Dell, HPE, Cisco, Lenovo, and Supermicro will be shipping servers based on this spec. Consulting giants including TCS, Accenture, Infosys and Wipro are already gearing up to help enterprises transition to Blackwell-based AI infrastructures.

One of the most interesting announcements for the AI developer community was the introduction of DGX Spark and DGX Station -- high-end personal computing systems built for local AI workloads. The DGX Spark is powered by the GB10 Grace Blackwell Superchip and delivers 1 petaflop of AI performance. With 128GB of unified memory, it's designed for developers and researchers working on generative AI models who need data locality and privacy.

The DGX Station takes it up several notches. Featuring the GB300 Grace Blackwell Ultra chip, it can reach 20 petaflops and 784GB of unified system memory. It supports up to seven GPU instances thanks to NVIDIA's Multi-Instance GPU tech and offers 800Gb/s connectivity via ConnectX-8 SuperNICs. Both systems are software-aligned with NVIDIA's DGX Cloud infrastructure and include support for industry-standard tools like PyTorch, Ollama, and Jupyter -- making it seamless to move workloads from local to cloud or hybrid environments. ASUS, Dell, GIGABYTE, HP, MSI, and others are set to ship these systems starting in July.

The biggest announcement centred around a new AI supercomputing factory being built in Taiwan. In partnership with Foxconn's Big Innovation Company, NVIDIA will provide Blackwell-powered infrastructure -- specifically 10,000 Blackwell GPUs -- to form the heart of a national AI cloud system. The AI factory will serve as the backbone for Taiwanese R&D efforts, with stakeholders including the Taiwan National Science and Technology Council and TSMC. The latter plans to leverage this infrastructure to dramatically accelerate semiconductor development. This system isn't just about compute -- it's a symbol of sovereignty. The goal: make Taiwan a "smart AI island" filled with smart cities, smart factories, and AI-native industries.

NVIDIA also announced NVLink Fusion -- a new silicon interconnect platform that lets partners like MediaTek, Marvell, Alchip, and Qualcomm pair their own CPUs or custom accelerators with NVIDIA's GPUs and CPUs. In essence, it enables industries to build semi-custom AI infrastructure by combining their compute IP with NVIDIA's scale-out capabilities. Partners including Fujitsu and Qualcomm plan to integrate their CPUs into NVIDIA's architecture, enabling a new class of sovereign, power-efficient AI data centres. NVLink Fusion systems rely on NVIDIA's end-to-end networking platform, with throughput of up to 800Gb/s, to connect GPUs across racks. NVIDIA sees this as a necessary evolution for AI -- one where hyperscalers and sovereign cloud providers can build their own systems tailored for specific national or enterprise needs.

For developers and enterprises that don't want to build in-house infrastructure, NVIDIA launched DGX Cloud Lepton -- a global compute marketplace that connects developers to GPU capacity offered by cloud partners. Participating vendors include CoreWeave, Foxconn, Lambda, and SoftBank, among others. Lepton allows developers to reserve GPU capacity -- from Blackwell to Hopper -- in specific regions, offering flexibility for sovereignty, low latency, or long-term workloads. Lepton is tightly integrated with NVIDIA's AI stack, including NIM inference microservices, NeMo, and Blueprints. For cloud providers, it also provides real-time GPU health monitoring and root-cause diagnostics -- reducing operational complexity. In parallel, NVIDIA also introduced "Exemplar Clouds" -- a reference framework for cloud partners to ensure security, resiliency, and performance, using NVIDIA's best practices.

Though not a front-and-centre announcement, NVIDIA also revealed it is powering the world's largest quantum research supercomputer -- ABCI-Q -- located at the G-QuAT centre in Japan. The system uses over 2,000 H100 GPUs and supports hybrid workloads that combine quantum processors from Fujitsu, QuEra, and OptQC with GPU-accelerated AI. NVIDIA's CUDA-Q platform provides the orchestration layer here, allowing researchers to simulate and run quantum-classical hybrid applications. The hope is that this system will help fast-track quantum error correction, algorithm testing, and broader commercial use cases.

This year's COMPUTEX keynote was less about flashy product launches and more about NVIDIA's continued evolution into an AI infrastructure company. While the Blackwell architecture continues to power most of the announcements, the bigger story is how NVIDIA is positioning itself as the backbone of AI -- whether that's sovereign clouds in Taiwan, semi-custom silicon via NVLink Fusion, or personal AI machines for developers. From hyperscale to desktop, NVIDIA wants to be the platform on which the next decade of AI innovation is built -- and at COMPUTEX 2025, Jensen Huang made it clear that the AI factory era is no longer aspirational. It's already here.


NVIDIA announces NVLink Fusion, allowing partners to build semi-custom AI infrastructure using NVIDIA's interconnect technology. This move opens up new possibilities for AI system design and collaboration.

NVIDIA Introduces NVLink Fusion for Custom AI Infrastructure

NVIDIA has unveiled NVLink Fusion, a silicon offering that allows industry partners to build semi-custom AI infrastructure using NVIDIA's NVLink interconnect technology. Announced at Computex 2025 in Taipei, the initiative marks a significant shift in NVIDIA's approach to AI system design and collaboration, opening up an interconnect it had largely kept captive to its own silicon [1][2].

Expanding the AI Ecosystem

NVLink Fusion opens up NVIDIA's AI platform and ecosystem to partners, enabling them to create specialized AI infrastructures. This move is in response to the growing need for customized AI solutions in data centers and cloud environments. Jensen Huang, NVIDIA's founder and CEO, emphasized the importance of this development, stating, "A tectonic shift is underway: for the first time in decades, data centers must be fundamentally rearchitected -- AI is being fused into every computing platform" [2].

Key Partners and Adopters

Several major tech companies have already joined the NVLink Fusion ecosystem [2][3]:

- Custom silicon and ASIC design partners: MediaTek, Marvell, and Alchip Technologies

- Interconnect specialists: Astera Labs

- Design software and IP providers: Synopsys and Cadence

- CPU designers: Fujitsu and Qualcomm Technologies

These partnerships enable two kinds of custom compute within NVIDIA's rack-scale architecture: custom CPUs connected to NVIDIA GPUs, and custom accelerators connected to NVIDIA's Grace CPUs and NVLink switches [4].

Technical Capabilities and Benefits

NVLink Fusion offers significant advantages for AI infrastructure:

- High-speed interconnect: fifth-generation NVLink in GB200 and GB300 NVL72 racks provides 1.8 TB/s of bandwidth per GPU, 14 times faster than PCIe Gen 5 [2][4]

- Scalability: Enables cloud providers to scale out AI factories to millions of GPUs

- Flexibility: Supports custom CPUs and AI accelerators in rack-scale architectures

- Networking performance: Up to 800Gb/s throughput with NVIDIA's end-to-end networking platform [2][4]
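The 14x and 800Gb/s figures mix units and directions, so a short calculation helps put them side by side. The sketch below is a back-of-the-envelope check, not NVIDIA's own methodology. It assumes a PCIe Gen5 x16 baseline at 32 GT/s per lane counted in both directions (like the 1.8 TB/s NVLink figure), treats 800Gb/s as a per-port line rate, and uses the 72-GPU count of the NVL72 racks; all constant and function names are hypothetical.

```python
"""Back-of-the-envelope check of the bandwidth figures quoted above.

Assumptions (not stated in the sources): the PCIe baseline is a Gen5 x16 slot
at 32 GT/s per lane, counted in both directions like the 1.8 TB/s NVLink
figure, and the 800 Gb/s networking figure is a per-port line rate.
"""

PCIE5_GT_PER_S_PER_LANE = 32.0   # PCIe 5.0 signaling rate per lane
PCIE5_LANES = 16                 # a full x16 slot
PCIE5_ENCODING = 128 / 130       # 128b/130b line-code efficiency

NVLINK5_PER_GPU_GB_S = 1800.0    # 1.8 TB/s per GPU, as quoted
GPUS_PER_NVL72_RACK = 72         # GB200/GB300 NVL72


def pcie5_x16_gb_s(include_encoding: bool) -> float:
    """PCIe Gen5 x16 bandwidth in GB/s, both directions combined."""
    efficiency = PCIE5_ENCODING if include_encoding else 1.0
    gbit_per_lane = PCIE5_GT_PER_S_PER_LANE * efficiency
    one_way = gbit_per_lane * PCIE5_LANES / 8    # bits -> bytes
    return one_way * 2                           # count both directions


def gbits_to_gbytes(gbits: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbits / 8


def rack_aggregate_tb_s(gpus: int) -> float:
    """Total NVLink bandwidth across a rack in TB/s (per-GPU figure x GPU count)."""
    return gpus * NVLINK5_PER_GPU_GB_S / 1000


if __name__ == "__main__":
    for enc in (False, True):
        pcie = pcie5_x16_gb_s(enc)
        label = "with" if enc else "without"
        print(f"PCIe Gen5 x16 ({label} encoding overhead): {pcie:.1f} GB/s, "
              f"NVLink ratio {NVLINK5_PER_GPU_GB_S / pcie:.1f}x")
    print(f"800 Gb/s port: {gbits_to_gbytes(800.0):.0f} GB/s")
    print(f"NVL72 aggregate: {rack_aggregate_tb_s(GPUS_PER_NVL72_RACK):.1f} TB/s")
```

Under these assumptions the raw PCIe Gen5 x16 figure is 128 GB/s, giving a ratio of about 14.1x, in line with the quoted "14x"; counting 128b/130b encoding overhead nudges it to roughly 14.3x. The 800Gb/s figure works out to 100 GB/s per port, well below the per-GPU NVLink number, which is consistent with the sources treating it as scale-out networking rather than scale-up bandwidth.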


Impact on AI Development and Deployment

The introduction of NVLink Fusion is expected to have far-reaching effects on the AI industry [3][5]:

- Accelerated innovation: Partners can leverage NVIDIA's technology to create specialized AI solutions more rapidly

- Improved performance: Custom designs can optimize AI workloads for specific use cases

- Expanded ecosystem: More companies can participate in building advanced AI infrastructure

- Flexibility for cloud providers: Hyperscalers can integrate custom components while maintaining compatibility with NVIDIA's ecosystem

Future Outlook and Industry Implications

NVLink Fusion represents a strategic move by NVIDIA to maintain its central position in the AI infrastructure market. As hyperscalers and other companies develop their own custom AI chips, NVIDIA aims to keep its interconnect technology a crucial component of those systems [5].

The initiative also aligns with broader industry trends toward more specialized and efficient AI computing. By enabling partners to create semi-custom designs, NVIDIA is fostering innovation while ensuring its technology remains at the core of many AI systems [4][5].

As the AI industry continues to evolve rapidly, NVLink Fusion positions NVIDIA to adapt to changing market demands while leveraging its strengths in GPU technology and interconnects. This flexible approach may prove crucial in maintaining NVIDIA's leadership in the competitive and fast-paced world of AI infrastructure.

References

Summarized by Navi

[1]

[3]
