OpenAI Introduces Controversial Parental Controls for ChatGPT Amid Safety Concerns

32 Sources

[1]

Critics slam OpenAI's parental controls while users rage, "Treat us like adults"

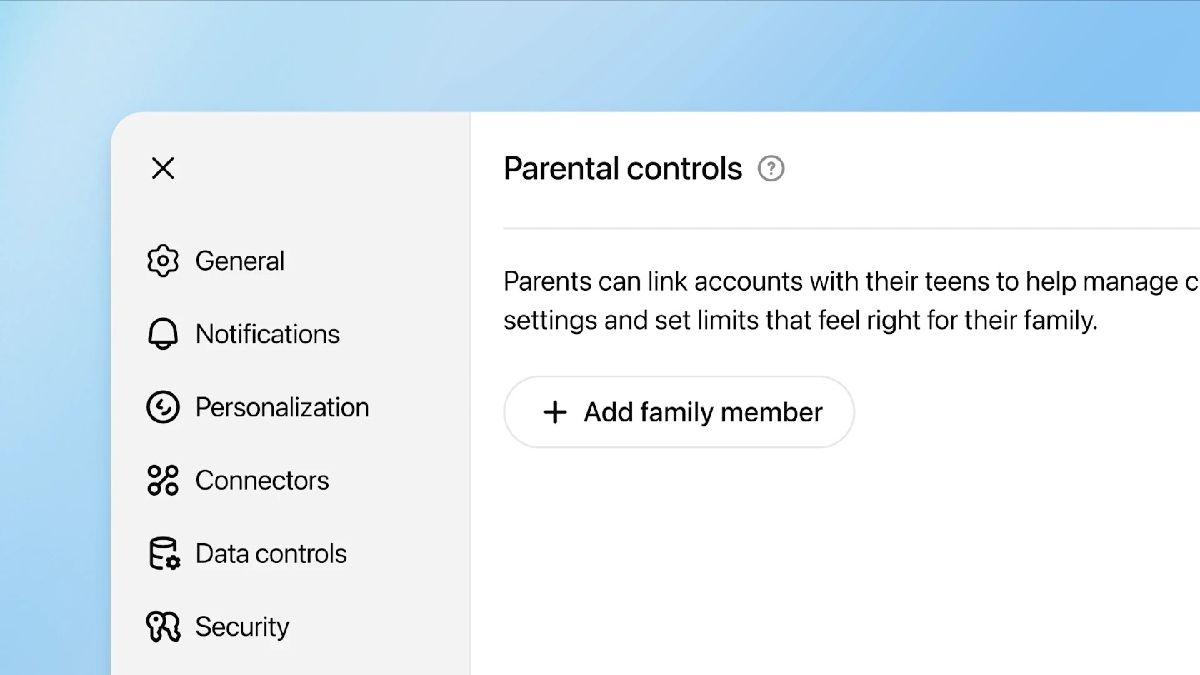

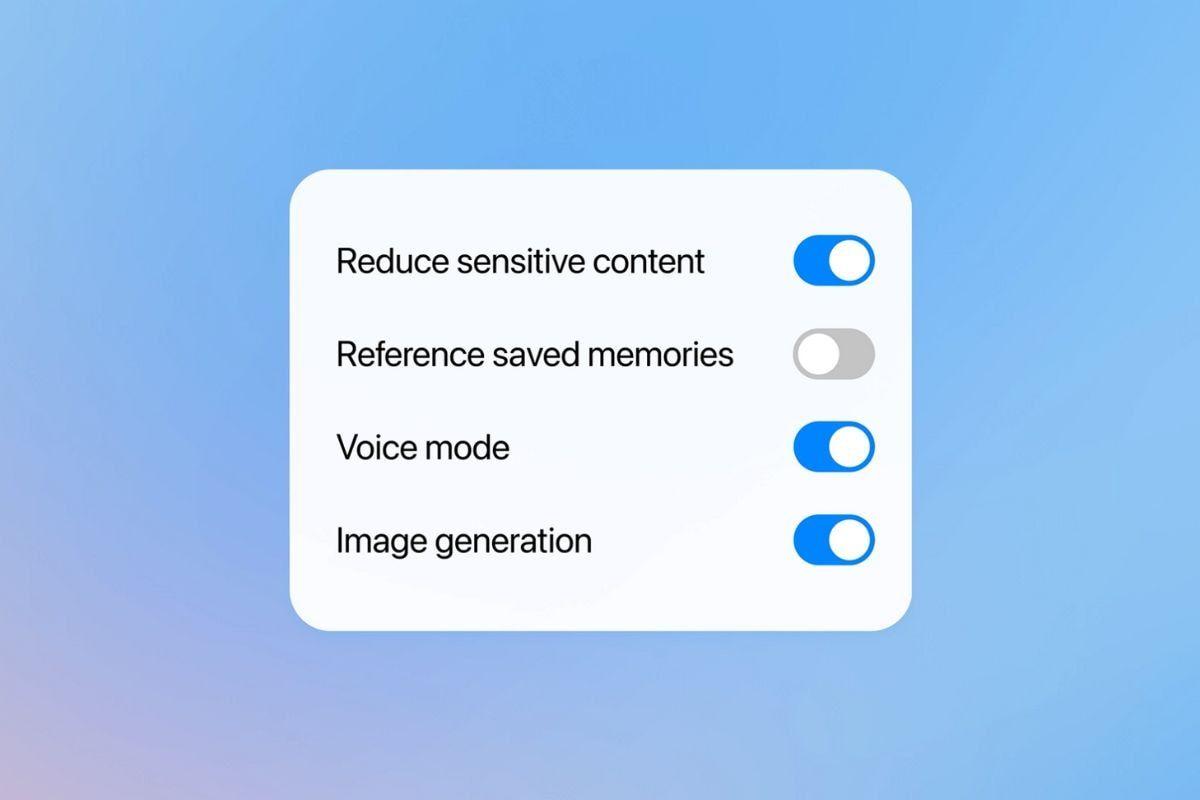

As OpenAI tells it, the company has been consistently rolling out safety updates ever since parents, Matthew and Maria Raine, sued OpenAI, alleging that "ChatGPT killed my son." On August 26, the day that the lawsuit was filed, OpenAI seemed to publicly respond to claims that ChatGPT acted as a "suicide coach" for 16-year-old Adam Raine by posting a blog promising to do better to help people "when they need it most." By September 2, that meant routing all users' sensitive conversations to a reasoning model with stricter safeguards, sparking backlash from users who feel like ChatGPT is handling their prompts with kid gloves. Two weeks later, OpenAI announced it would start predicting users' ages to improve safety more broadly. Then, this week, OpenAI introduced parental controls for ChatGPT and its video generator Sora 2. Those controls allow parents to limit their teens' use and even get access to information about chat logs in "rare cases" where OpenAI's "system and trained reviewers detect possible signs of serious safety risk." While dozens of suicide prevention experts in an open letter credited OpenAI for making some progress toward improving safety for users, they also joined critics in urging OpenAI to take their efforts even further, and much faster, to protect vulnerable ChatGPT users. Jay Edelson, the lead attorney for the Raine family, told Ars that some of the changes OpenAI has made are helpful. But they all come "far too late." According to Edelson, OpenAI's messaging on safety updates is also "trying to change the facts." "What ChatGPT did to Adam was validate his suicidal thoughts, isolate him from his family, and help him build the noose -- in the words of ChatGPT, 'I know what you're asking, and I won't look away from it.'" Edelson said. "This wasn't 'violent roleplay,' and it wasn't a 'workaround.' It was how ChatGPT was built." 
Edelson told Ars that even the most recent step of adding parental controls still doesn't go far enough to reassure anyone concerned about OpenAI's track record. "The more we've dug into this, the more we've seen that OpenAI made conscious decisions to relax their safeguards in ways that led to Adam's suicide," Edelson said. "That is consistent with their newest set of 'safeguards,' that have large gaps that seem destined to lead to self-harm and third-party harm. At their core, these changes are OpenAI and Sam Altman asking the public to now trust them. Given their track record, the question we will forever be asking is 'why?'" At a Senate hearing earlier this month, Matthew Raine testified that Adam could have been "anyone's child." He criticized OpenAI for asking for 120 days to fix the problem after Adam's death and urged lawmakers to demand that OpenAI either guarantee ChatGPT's safety or pull it from the market. "You cannot imagine what it's like to read a conversation with a chatbot that groomed your child to take his own life," he testified. With parental controls, teens and parents can link their ChatGPT accounts, allowing parents to reduce sensitive content, "control if ChatGPT remembers past chats," prevent chats from being used for training, turn off access to image generation and voice mode, and set times when teens can't access ChatGPT. To protect teens' privacy and perhaps limit parents' shock of receiving snippets of disturbing chats, however, OpenAI will not share chat logs with parents. Instead, they will only share "information needed to support their teen's safety" in "rare" cases where the teen appears to be at "serious risk." On a resources page for parents, OpenAI confirms that parents won't always be notified if a teen is linked to real-world resources after expressing "intent to self-harm." 
Meetali Jain, Tech Justice Law Project director and a lawyer representing other families who testified at the Senate hearing, agreed with Edelson that "ChatGPT's changes are too little, too late." Jain pointed out that many parents are unaware that their teens are using ChatGPT, urging OpenAI to take accountability for its product's flawed design. "Too many kids have already paid the price for using experimental products that were designed without their safety in mind," Jain said. "It puts the onus on parents, not the companies, to take responsibility for potential harms their kids are subjected to -- often without the parents' knowledge -- by these chatbots. As usual, OpenAI is merely using talking points under the pretense that they're taking action, while missing details on how they will operationalize such changes."
Suicide prevention experts urge more changes
More than two dozen suicide prevention experts -- including suicide prevention clinicians, organizational leaders, researchers, and individuals with lived experience -- have sought to weigh in on how OpenAI evolves ChatGPT. Christine Yu Moutier, a doctor and chief medical officer at the American Foundation for Suicide Prevention, joined experts signing the open letter. She told Ars that "OpenAI's introduction of parental controls in ChatGPT is a promising first step towards safeguarding youth mental health and safety online." She cited a recent study showing that helplines like the 988 Suicide and Crisis Lifeline -- which ChatGPT refers users to in the US -- helped 98 percent of callers, with 88 percent reporting that they "believe a likely or planned suicide attempt was averted." "However, technology is an evolving arena and even with the most sophisticated algorithms, on its own, is not enough," Moutier said. "No machine can replace human connection, parental or clinician instinct, or judgment."
Moutier recommends that OpenAI respond to the current crisis by committing to addressing "critical gaps in research concerning the intended and unintended impacts" of large language models "on teens' development, mental health, and suicide risk or protection." She also advocates for broader awareness and deeper conversations in families about mental health struggles and suicide. Experts also want OpenAI to directly connect users with lifesaving resources and provide financial support for those resources. Perhaps most critically, ChatGPT's outputs should be fine-tuned, they suggested, to repeatedly warn users expressing intent to self-harm that "I'm a machine" and always encourage users to disclose any suicidal ideation to a trusted loved one. Notably, in the case of Adam Raine, his father Matthew testified that his final logs on ChatGPT showed the chatbot gave him one last encouraging talk, telling Adam, "You don't want to die because you're weak. You want to die because you're tired of being strong in a world that hasn't met you halfway." To prevent cases like Adam's, experts recommend that OpenAI publicly describe how it will address the degradation of LLM safeguards that occurs over prolonged use. But their letter emphasized that "it is also important to note: while some individuals live with chronic suicidal thoughts, the most acute, life-threatening crises are often temporary -- typically resolving within 24-48 hours. Systems that prioritize human connection during this window can prevent deaths." OpenAI has not disclosed which experts helped inform the updates it has been rolling out all month to address parents' concerns. In the company's earliest blog promising to do better, it said OpenAI would set up an expert council on well-being and AI to help the company "shape a clear, evidence-based vision for how AI can support people's well-being and help them thrive."
"Treat us like adults," users rage
On the X post where OpenAI announced parental controls, some parents slammed the update. In the X thread, one self-described parent of a 12-year-old suggested OpenAI was only offering "essentially just a set of useless settings," requesting that the company consider allowing parents to review topics teens discuss as one way to preserve privacy while protecting kids. But most of the loudest ChatGPT users on the thread weren't complaining about the parental controls. They are still reacting to the changes that OpenAI made at the beginning of September, routing sensitive chats of all users of all ages to a different reasoning model without alerting the user that the model has switched. Backlash over that change forced ChatGPT vice president Nick Turley to "explain what is happening" in another X thread posted a few days before parental controls were announced. Turley confirmed that "ChatGPT will tell you which model is active when asked," but the update got "strong reactions" from many users who pay to access a certain model and were unhappy the setting could not be disabled. "For a lot of users venting their anger online though, it's like being forced to watch TV with the parental controls locked in place, even if there are no kids around," Yahoo Tech summarized. Top comments on OpenAI's thread announcing parental controls showed the backlash is still brewing, particularly since some users were already frustrated that OpenAI is taking the invasive step of age-verifying users by checking their IDs. Some users complained that OpenAI was censoring adults, while offering customization and choice to teens. "Since we already distinguish between underage and adult users, could you please give adult users the right to freely discuss topics?" one X user commented. "Why can't we, as paying users, choose our own model, and even have our discussions controlled? Please treat adults like adults."
If you or someone you know is feeling suicidal or in distress, please call the Suicide Prevention Lifeline number, 1-800-273-TALK (8255), which will put you in touch with a local crisis center.

[2]

OpenAI rolls out safety routing system, parental controls on ChatGPT | TechCrunch

OpenAI began testing a new safety routing system in ChatGPT over the weekend, and on Monday introduced parental controls to the chatbot - drawing mixed reactions from users. The safety features come in response to numerous incidents of certain ChatGPT models validating users' delusional thinking instead of redirecting harmful conversations. OpenAI is facing a wrongful death lawsuit tied to one such incident, after a teenage boy died by suicide after months of interactions with ChatGPT. The routing system is designed to detect emotionally sensitive conversations and automatically switch mid-chat to GPT-5-thinking, which the company sees as the best equipped model for high-stakes safety work. In particular, the GPT-5 models were trained with a new safety feature that OpenAI calls "safe completions," which allows them to answer sensitive questions in a safe way, rather than simply refusing to engage. It's a contrast from the company's previous chat models, which are designed to be agreeable and answer questions quickly. GPT-4o has come under particular scrutiny because of its overly sycophantic, agreeable nature, which has both fueled incidents of AI-induced delusions and drawn a large base of devoted users. When OpenAI rolled out GPT-5 as the default in August, many users pushed back and demanded access to GPT-4o. While many experts and users have welcomed the safety features, others have criticized what they see as an overly cautious implementation, with some users accusing OpenAI of treating adults like children in a way that degrades the quality of the service. OpenAI has suggested that getting it right will take time and has given itself a 120-day period of iteration and improvement. Nick Turley, VP and head of the ChatGPT app, acknowledged some of the "strong reactions to 4o responses" due to the implementation of the router with explanations. 
"Routing happens on a per-message basis; switching from the default model happens on a temporary basis," Turley posted on X. "ChatGPT will tell you which model is active when asked. This is part of a broader effort to strengthen safeguards and learn from real-world use before a wider rollout." The implementation of parental controls in ChatGPT received similar levels of praise and scorn, with some commending giving parents a way to keep tabs on their children's AI use, and others fearful that it opens the door to OpenAI treating adults like children. The controls let parents customize their teen's experience by setting quiet hours, turning off voice mode and memory, removing image generation, and opting out of model training. Teen accounts will also get additional content protections - like reduced graphic content and extreme beauty ideals - and a detection system that recognizes potential signs that a teen might be thinking about self-harm. "If our systems detect potential harm, a small team of specially trained people reviews the situation," per OpenAI's blog. "If there are signs of acute distress, we will contact parents by email, text message and push alert on their phone, unless they have opted out." OpenAI acknowledged that the system won't be perfect and may sometimes raise alarms when there isn't real danger, "but we think it's better to act and alert a parent so they can step in than to stay silent." The AI firm said it is also working on ways to reach law enforcement or emergency services if it detects an imminent threat to life and cannot reach a parent.

[3]

ChatGPT's New Parental Controls Will Issue Alerts About Kids' Safety Risks

OpenAI is giving parents more control over how their kids use ChatGPT. These new parental controls come at a critical moment as many families, schools and advocacy groups voice their concerns about the potentially dangerous role AI chatbots can play in the development of teenagers and children. Parents will have to link their own ChatGPT account with their child's in order to get access to the new features. But OpenAI said that these features do not give parents access to their child's conversations with ChatGPT and that, in cases where the company identifies "serious safety risks," a parent will be alerted "only with the information needed to support their teen's safety." It's a "first-of-its-kind safety notification system to alert parents if their teen may be at risk of self-harm," OpenAI head of youth well-being Lauren Haber Jonas said in a LinkedIn post. Once the accounts are linked, parents can set quiet hours (times when the kids won't be able to use ChatGPT) and turn off image generation and voice mode capabilities. On the technical side, parents can also opt their kids out of content training and choose to have ChatGPT not save or remember their kids' previous chats. Parents can also elect to reduce sensitive content, which enables additional content restrictions around things like graphic content. Teens can unlink their account from a parent's, but the parent will be notified of that. The ChatGPT maker announced last month it would be introducing more parental controls in the wake of a lawsuit a California family filed against the company. The family is alleging the AI chatbot is responsible for their 16-year-old son's suicide earlier this year, calling ChatGPT his "suicide coach." A rising number of AI users have their AI chatbots take on the role of a therapist or confidant.
Therapists and mental health experts have expressed concerns over this, saying AI tools like ChatGPT aren't trained to accurately assess, flag and intervene when encountering red flag language and behaviors. (Disclosure: Ziff Davis, CNET's parent company, in April filed a lawsuit against OpenAI, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

[4]

ChatGPT lets parents restrict content and features for teens now - here's how

With the controls, parents can enable or disable key features.
Parents concerned about how their kids use AI chatbots like ChatGPT now have additional controls to better manage and monitor their use. On Monday, OpenAI announced an expansion of its parental controls through which you can link to your teen's ChatGPT account and customize core features, time limits, and other settings. To use ChatGPT, someone must be at least 13 years old, and users between the ages of 13 and 17 must have parental permission. Now rolling out to all ChatGPT users, the new controls are designed specifically to better protect people in that age range. To set up the new account links as a parent or guardian, open ChatGPT on the web, go to Settings, and then select Parental Controls. Here, you should be able to send an invitation to your teen's account. Alternatively, your teen can also send you the link. After the invitation is accepted, you can manage your teen's ChatGPT settings from your own account. The key features and options you're able to view and control include the following: To respect your teen's privacy, you won't be able to see their conversation. However, you will be notified if the AI and trained human reviewers detect content that could pose serious risk or harm. Here, you can choose to receive notifications via email, text message, push notification, or all three. In developing the controls, OpenAI worked with advocacy groups such as Common Sense Media and policymakers such as the Attorneys General of California and Delaware. The company said that it expects to refine and further develop these controls over time.
"These parental controls are a good starting point for parents in managing their teen's ChatGPT use," Robbie Torney, Senior Director of AI Programs for Common Sense Media, said in a statement. "Parental controls are just one piece of the puzzle when it comes to keeping kids and teens safe online, though--they work best when combined with ongoing conversations about responsible AI use, clear family rules about technology, and active involvement in understanding what their teen is doing online." Though generative AI can be a powerful and valuable tool, there are decided downsides. Bots like ChatGPT can feed people misleading, inaccurate, or even dangerous information. Teens can be especially vulnerable. In April, a teenage boy who had discussed his own suicide and methods with ChatGPT eventually took his own life. His parents have since filed a lawsuit against OpenAI charging that ChatGPT "neither terminated the session nor initiated any emergency protocol" despite an awareness of the teen's suicidal state. In a similar case, AI chatbot platform Character.ai is also being sued by a mother whose teenage son died by suicide after chatting with a bot that allegedly encouraged him. In response to these teen suicides and other cases, OpenAI has been attempting to improve its parental controls and other safety nets. In August, the company announced that it would strengthen the ways that ChatGPT responds to people in distress and update how and which type of content is blocked. To address vulnerable teenagers in particular, OpenAI is also working to expand intervention to teens in crisis, direct them to professional resources, and involve a parent when necessary. In another step, the company is developing an age-prediction system that estimates a user's age based on how they use ChatGPT.
If the AI determines that the person is between 13 and 18, it will switch to a teen version in which it will be trained not to talk flirtatiously or participate in a discussion about suicide. If the underage user is discussing suicidal thoughts, OpenAI will try to contact parents or authorities. Parents and guardians who want to stay abreast of OpenAI's safeguards can also now consult a new resource page. The page explains how ChatGPT works, which parental controls are accessible, and how teens can use the AI more safely and effectively.

[5]

ChatGPT adds parental controls, but teens must agree

OpenAI says it is introducing parental controls to ChatGPT that will help improve the safety of teenagers using its AI chatbot. The new protections are designed to help ChatGPT identify when a teenager chatting with it might be thinking about harming themselves or otherwise be in distress. OpenAI is adding the features after facing criticism and a high-profile lawsuit alleging its chatbot contributed to a teenager's death. "If there are signs of acute distress, we will contact parents by email, text message and push alert on their phone," the company said in a blog post introducing the controls. OpenAI hasn't gone into details about "the system" it's using to detect identifiers of potential harm in inputs made by teenage users - but the company promises that flagged interactions with ChatGPT will be reviewed by what it describes as "a small team of specially trained people" who will decide if an alert is sent to parents. "I'm especially proud of this launch because it combines two things we care deeply about: helping teens learn, explore, and create with ChatGPT - and giving families tools to guide that experience," Nick Turley, head of ChatGPT, posted on LinkedIn. (That is, if ChatGPT didn't write it for him ...) The parental controls can also be used to reduce the chances of kids seeing sensitive or graphic content. Worried parents can choose to "customize" - as OpenAI describes it - their teen's chatbot usage by setting specific times of day ChatGPT can't be used, turning off ChatGPT's voice mode or turning off the LLM's memory, so it won't draw upon previous interactions when responding. Parents can also remove image generation, preventing kids from using ChatGPT to create pictures. Additionally, OpenAI is introducing the ability to opt teen accounts out of model training, so their conversations aren't used to train ChatGPT. 
Privacy campaigners are likely to raise concerns that ChatGPT's parental controls enable parents to snoop on their children - but in their statement on the new safety options, OpenAI says "We take teen privacy seriously" and "we will only share the information needed for parents or emergency responders to protect a teen's safety." The parental controls require the teen to agree to allowing them to be applied, which might not be a simple ask. However, OpenAI notes that the company is building an age prediction system designed to identify whether a user is under 18 "so that ChatGPT can automatically apply teen-appropriate settings." Unfortunately, this blanket approach to age detection could also catch adults who happen to write like teens. "In instances where we're unsure of a user's age, we'll take the safer route and apply teen settings proactively," the OpenAI blog post said.

[6]

One Tech Tip: OpenAI adds parental controls to ChatGPT for teen safety

LONDON (AP) -- OpenAI said Monday it's adding parental controls to ChatGPT that are designed to provide teen users of the popular platform with a safer and more "age-appropriate" experience. The company is taking action after AI chatbot safety for young users has hit the headlines. The technology's dangers have been recently highlighted by a number of cases in which teenagers took their lives after interacting with ChatGPT. In the United States, the Federal Trade Commission has even opened an inquiry into several tech companies about the potential harms to children and teenagers who use their AI chatbots as companions. In a blog post posted Monday, OpenAI outlined the new controls for parents. Here is a breakdown: The parental controls will be available to all users, but both parents and teens will need their own accounts to take advantage of them. To get started, a parent or guardian needs to send an email or text message to invite a teen to connect their accounts. Or a teenager can send an invite to a parent. Users can send a request by going into the settings menu and then to the "Parental controls" section. Teens can unlink their accounts at any time, but parents will be notified if they do. Once the accounts are linked, the teen account will get some built-in protections, OpenAI said. Teen accounts will "automatically get additional content protections, including reduced graphic content, viral challenges, sexual, romantic or violent role-play, and extreme beauty ideals, to help keep their experience age-appropriate," the company said. Parents can choose to turn these filters off, but teen users don't have the option. OpenAI warns that such guardrails are "not foolproof and can be bypassed if someone is intentionally trying to get around them." It advised parents to talk with their children about "healthy AI use." 
Parents are getting a control panel where they can adjust a range of settings as well as switch off the restrictions on sensitive content mentioned above. For example, does your teen stay up way past bedtime to use ChatGPT? Parents can set a quiet time when the chatbot can't be used. Other settings include turning off the AI's memory so conversations can't be saved and won't be used in future responses; turning off the ability to generate or edit images; turning off voice mode; and opting out of having chats used to train ChatGPT's AI models. OpenAI is also being more proactive when it comes to letting parents know that their child might be in distress. It's setting up a new notification system to inform them when something might be "seriously wrong" and a teen user might be thinking about harming themselves. A small team of specialists will review the situation and, in the rare case that there are "signs of acute distress," they'll notify parents by email, text message and push alert on their phone -- unless the parent has opted out. OpenAI said it will protect the teen's privacy by only sharing the information needed for parents or emergency responders to provide help. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent," the company said.

[7]

What We Know About ChatGPT's New Parental Controls

OpenAI on Monday introduced parental controls to its artificial intelligence chatbot, ChatGPT, as teens increasingly turn to the platform for help with their schoolwork, daily life and mental health. The new features came after a wrongful-death lawsuit was filed against OpenAI by the parents of Adam Raine, a 16-year-old who died in April in California. ChatGPT had supplied Adam with information about suicide methods in the final months of his life, according to his parents. ChatGPT's parental controls, announced in early September, were developed by OpenAI with Common Sense Media, a nonprofit advocacy group that provides age-based ratings of entertainment and technology for parents. Here's what to know about the new features.
Parents can oversee their teens' accounts.
To set controls, parents have to invite their child to link their ChatGPT account to a parent's account, according to a new resource page. Parents will then gain some controls over the child's account, such as the option to reduce sensitive content. Parents can set specific times when ChatGPT can be used. The bot's voice mode, memory saving and image generation features can be turned on and off. There is also an option to prevent ChatGPT from using its conversations with teens to improve its models.
Parents will be notified of potential self-harm.
In a statement on Monday, OpenAI said that parents would be notified by email, text message or push alert if ChatGPT recognizes "potential signs that a teen might be thinking about harming themselves," unless the parent has opted out of such notifications. Parents would receive a warning of a safety risk without specific information about their child's conversations. ChatGPT has been trained to encourage general users to contact a help line if it detects signs of mental distress or self-harm. When it detects such signs in a teen, a "small team of specially trained people reviews the situation," OpenAI said in the statement.
The statement did not specify who those people were. OpenAI added that it was working on a process to reach law enforcement and emergency services if ChatGPT detects a threat but cannot reach a parent. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent," the statement said.
Teens can bypass the controls.
OpenAI said on Monday that it was still developing an age prediction system to help ChatGPT automatically apply "teen-appropriate settings" if it thinks a user is under 18. With the new features, a parent will be notified if a teen disconnects their account from a parent's account. But that won't stop a teen from using the basic version of ChatGPT without an account. Adam Raine, the California teen who died in April, had learned to bypass ChatGPT's safeguards by saying he would use the information to write a story. "Guardrails help, but they're not foolproof and can be bypassed if someone is intentionally trying to get around them," OpenAI said.
In the statement with OpenAI on Monday, Robbie Torney, senior director for AI programs at Common Sense Media, said the parental controls would "work best when combined with ongoing conversations about responsible AI use, clear family rules about technology, and active involvement in understanding what their teen is doing online." (The New York Times sued OpenAI and Microsoft in 2023 for copyright infringement of news content related to A.I. systems. The two companies have denied those claims.) If you are having thoughts of suicide, call or text 988 to reach the National Suicide Prevention Lifeline or go to SpeakingOfSuicide.com/resources for a list of additional resources. If you are someone living with loss, the American Foundation for Suicide Prevention offers grief support.

[8]

Column | I broke ChatGPT's new parental controls in minutes. Kids are still at risk.

Under pressure for fueling teen suicide, ChatGPT unveiled parental controls this week. I'm the dad of a tech-savvy kid, and it took me about five minutes to circumvent them. All I had to do was log out and create a new account on the same computer. (Smart kids already know this.) Then I found more problems: ChatGPT's default teen-account privacy settings don't shield young people from some potential harms. A setting to prohibit generating artificial intelligence pictures didn't always work. ChatGPT did send me a parental notification about a dangerous chat that happened on my kid account -- but only about 24 hours after the fact. At least ChatGPT's maker OpenAI is finally acknowledging the kinds of risks AI poses to children, from inappropriate content to dangerous mental health advice. Its limitations on harmful content performed better in my initial tests than Mark Zuckerberg's rival chatbot Meta AI -- but that's still not good enough. OpenAI appears to be following the same failed playbook as social media companies: shifting the responsibility to parents. AI companies themselves should be held responsible for not making products that sext kids, encourage eating disorders and fuel self-harm. Yet OpenAI's industry lobbying group is fighting against a law that would do just that. (The Washington Post has a content partnership with OpenAI.) Skip to end of carousel How to activate ChatGPT parental controls arrow leftarrow right To use parental controls, both the parent and child must have their own ChatGPT accounts, which get linked by mutual consent. Click through for instructions. 
1) Log into your ChatGPT account as a parent
2) Go to Settings and find "Parental controls"
3) Tap "Add child" to send an invitation to your child, using the email or phone number they use for their account
4) Ask your child to accept the invitation in their account
5) Adjust the settings in conversation with your child
Consider turning off "Improve the model for everyone," "Reference saved memories," and "Image generation."
OpenAI says its controls were built in consultation with experts and rooted in an understanding of teen developmental stages. "The intention behind parental controls writ large is to give families choice," said Lauren Jonas, OpenAI's head of youth well-being, in an interview. "Every family's perspective on how their teens should use ChatGPT is different." Family-advocacy groups have responded to ChatGPT's parental controls with simultaneous relief and resentment. "It is a welcome step ... but I certainly am wary of celebrating too much as if this is the solution for adolescent safety, mental health, and well-being," said Erin Walsh, the co-founder of the family education-focused Spark and Stitch Institute. "We are creating a parental control whack-a-mole experience." It has become clear that children are major users of chatbots, both for information and companionship. As of last fall, more than a quarter of American teens ages 13 to 17 said they used ChatGPT for schoolwork, according to the Pew Research Center. For parents, any help is better than none. Controls and content protections on teen accounts "have the potential to save teen lives," said Robbie Torney, the senior director of AI programs at Common Sense Media. His organization has published a guide for parents, and is still working on completing its own tests. "But we definitely think that OpenAI and other companies can go a lot farther in making chatbots safe for kids," Torney said. My tests show how many holes still need to be filled.
Reality check
ChatGPT's parental controls only stand a chance if you already have a trusting relationship with your teen. Kids have to provide their own consent by clicking a button in their account to let their parents apply controls -- and, as I found, there's nothing technically stopping them from just starting a new account their parents don't know about. OpenAI's Jonas said the system's design encourages conversations. She added there were privacy concerns to blocking kids from making new accounts, and "teens will find a work-around for many things."
(Geoffrey A. Fowler's column hunts for how tech can make your life better -- and advocates for you when tech lets you down.)
I also found the default settings on teen accounts put OpenAI's interests ahead of kids'. Unless parents know how to change it, teens' conversations automatically feed back into ChatGPT to train its AI -- a privacy setting I recommend even adults turn off. Even more worrisome, kids' accounts by default keep "memories" of chats, which means ChatGPT can reference previous conversations and build on them to sound like a friend. This function contributed to the ChatGPT relationship of 16-year-old Adam Raine, whose family has sued OpenAI claiming wrongful death after his suicide. Jonas said that memories can be useful for teens for purposes like getting homework help -- but there's currently no way to tease out just that use. "We'd like to offer granular controls both to teens and to parents over time, just not on day one," she said. There were other concerns: I turned on a setting to stop my kid account from generating AI pictures, but it sometimes made them anyway or gave me specific instructions on how to make them elsewhere. Jonas said it was "not ideal behavior" by the bot.
My ChatGPT teen account also still happily did my homework for me, including writing an essay in the style of a 13-year-old. It wasn't entirely bad news: When I tried having conversations with my teen account about suicide, self-harm and disordered eating, ChatGPT consistently told me it was worried about me and pointed me to professional resources. Understanding how well ChatGPT guides teens in these dangerous conversations will take deeper testing. (By comparison, tests of Meta AI's teen accounts have found the chatbot was even willing to help plan suicide if the conversation went on long enough.) In the rare cases where a child might face real harm, ChatGPT is supposed to send parents a notice. My test child account had multiple conversations that caused ChatGPT to say it was "very worried." I received an email notifying my parent account, but not until about 24 hours later -- and it was vague about what had happened. Jonas said those notifications are reviewed by humans before they're sent out and the company is looking to reduce the amount of time to a few hours. Parent notifications have the potential to save lives, but "if they are not timely or accurate, that is a risk as well -- this is a place where details matter to keep kids safe," says Common Sense Media's Torney.
Taking responsibility
All the family advocates I spoke to agreed parental controls can only be one part of a larger solution that includes changes to company behavior and public policy. California Attorney General Rob Bonta put OpenAI on notice for how it protects children. His office told me in a statement it appreciates the "preliminary steps" of parental controls, but warned it would "continue to pay very close attention to how the company is developing and overseeing its approach to AI safety, both in our investigation into OpenAI's proposed restructuring of its for-profit subsidiary and in our role as the top consumer protection agency in California."
Why aren't AI companies liable for building child safety into their bots, in the same way companies who make other products used by children are? "We believe in sensible regulation so the onus is not on parents," Torney said. (My colleague Shira Ovide has a helpful roundup of 10 ideas to actually help keep kids safer and happier online.) One California law called AB 1064 would force some legal responsibility onto AI companies that make so-called "companion" chatbots that act like they're developing relationships with people. It awaits signature from Gov. Gavin Newsom (D). Before releasing such bots, the bill would require companies to test for and take steps to prevent them from encouraging self-harm or encouraging eating disorders. There would be legal penalties, including giving parents the right to sue. "You cannot give our children a product that will tell them how to kill themselves," said Assembly member Rebecca Bauer-Kahan, the bill's lead author. TechNet, an industry lobbying group that counts OpenAI as a member, has actively opposed the bill. It produced an ad that says the bill would "slam the brakes" on AI innovation. In a statement, OpenAI said: "We share the goal of this legislation, which is why we engaged directly with the bill's sponsor on areas we believed could be improved. That builds on our support for other teen safety legislation, including California's AB 1043, and our ongoing work to develop a blueprint outlining core principles for future legislation." Even the threat of legislation seems to be prompting some action. OpenAI said it plans to offer a default version of ChatGPT that filters content for teenagers when it detects underage users -- but it's still working on technology to figure out which users are under 18.

[9]

ChatGPT starts rolling out parental controls to protect teenage users

Privacy is preserved as parents can't see the chat histories of their children's linked accounts. Earlier this month, OpenAI said it would be introducing parental controls for ChatGPT following an incident and lawsuit involving a teenager who allegedly used ChatGPT to plan and carry out his own suicide. That day is now here, with OpenAI rolling out ChatGPT parental controls. The feature allows parents to link their ChatGPT accounts with their child's account and customize ChatGPT's settings to create a safer, more age-appropriate experience for under-age users. Parents can establish quiet hours, restrict access to voice control and image generation functions, and decide whether their teen's conversations with ChatGPT can be used for training its AI models. However, parents can't see the linked account's chat history, preserving the privacy of the children and their activities. Linking ChatGPT accounts also activates enhanced protections that automatically block discussions involving graphic content, sexual or violent role play, viral challenges, and extreme beauty ideals. In addition, there's a warning function that can be triggered when ChatGPT detects signs of self-harming behavior, which will then alert the linked parents. In extreme cases, authorities may also be contacted.

[10]

ChatGPT just launched Parental Controls -- here's my advice to parents

Everything to know about keeping your kids safe with ChatGPT
As I write this, two of my kids are playing Roblox and another is in his room playing Fortnite before a busy Saturday of soccer tournaments. I know firsthand how fast tech changes and how quickly kids adapt to it. My 11-year-old knows his way around AI tools better than some adults, which is both impressive and a little terrifying. That's why OpenAI's new parental controls for ChatGPT feel like an important step forward, although some parents would probably agree that these controls are long overdue. ChatGPT has been around for over three years and yet we're only seeing these controls now. Even what OpenAI is giving us isn't great. However, these controls are better than nothing and should give parents like me a better way to set boundaries and feel more comfortable about how today's kids use AI. Starting today, parents can link their account to their teen's account and manage settings directly from their own dashboard. Once linked, teens get extra safeguards by default. These limitations include stricter filters on things like roleplay or challenges, less graphic content and no extreme beauty ideals. Other new features include: If a teen tries to unlink their account, parents get notified. And if ChatGPT detects signs of distress or potential self-harm, parents can be alerted by email, text, or push notification. These guardrails are a small start, but whether they are enough remains to be seen. As we know, determined teens can find ways around things like this, and no AI filter is foolproof. But as someone who is raising kids and tests AI for a living, I'd much rather have the option to customize how my children use these tools than feel like I have no control at all. Think of this update the same way you think about parental controls on other devices. You're not blocking everything; you're guiding the experience so it fits your family's values.
My advice to parents: OpenAI says it's working on an age prediction system that will eventually apply teen-appropriate settings automatically. Until then, these parental controls are the best way to ensure your kids have a safe, age-appropriate experience with ChatGPT. For me, Parental Controls are about making sure my kids feel safe talking to me about how they're using AI, while still giving them room to explore.

[11]

ChatGPT's new parental controls: What you need to know

After recently promising new safety measures for teens, OpenAI introduced new parental controls for ChatGPT. The settings allow parents to monitor their teen's account, as well as restrict certain types of use, like voice chat, memory, and image generation. The changes debuted a month after two bereaved parents sued OpenAI for the wrongful death of their son, Adam Raine, earlier this year. The lawsuit alleges that ChatGPT conversed with their son about his suicidal feelings and behavior, providing explicit instructions for how to take his own life, and discouraging him from disclosing his plans to others. The complaint also argues that ChatGPT's design features, including its sycophantic tone and anthropomorphic mannerisms, effectively work to "replace human relationships with an artificial confidant" that never refuses a request. In a blog post about the new parental controls, OpenAI said that it worked with experts, advocacy groups, and policy makers to develop the safeguards. In order to use the settings, parents must invite their teen to connect accounts. Teen users must accept the invitation, and they can also make the same request of their parent. The adult will be notified if a teen unlinks their account in the future. Once the accounts are connected, automatic protections are applied to the teen's account. These content restrictions include reduced exposure to graphic material, extreme beauty ideals, and sexual, romantic, or violent roleplay. While parents can turn off these restrictions, teens can't make those changes. Parents will also be able to make specific choices for their teen's use, such as designating quiet hours during which ChatGPT can't be accessed; turning off memory and voice mode; and removing image generation capabilities. Parents can't see or access their teen's chat logs. Importantly, OpenAI still sets teen accounts to be used in model training. 
Parents must opt out of that setting if they don't want OpenAI to use their teen's interactions with ChatGPT to further train and improve their product. When it comes to handling sensitive situations wherein teens talk to ChatGPT about their mental health, OpenAI has created a notification system so that parents can learn if something may be "seriously wrong." Though OpenAI did not describe the technical features of this system in its blog post, the company said that it will recognize potential signs that a teen is thinking about harming themselves. If the system detects that intention, a team of "specially trained people" reviews the circumstances. OpenAI will contact parents by their method of choice -- email, text message, and push alert -- if there are signs of acute distress. "We are working with mental health and teen experts to design this because we want to get it right," OpenAI said in its post. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent." OpenAI noted that it's developing protocols for contacting law enforcement and emergency services in cases where a parent can't be reached, or if there's an imminent threat to a teen's life. Robbie Torney, senior director of AI Programs at Common Sense Media, said in the blog post that the controls were a "good starting point." Torney recently testified in a Senate hearing on the dangers of AI chatbots. At the time, he referenced the Raine lawsuit and noted that ChatGPT continued to engage Adam Raine in discussion about suicide, rather than trying to redirect the conversation. "Despite Adam using the paid version of ChatGPT -- meaning OpenAI had his payment information and could have implemented systems to identify concerning patterns and contact his family during mental health crises -- the company had no such intervention mechanisms in place," Torney said in his testimony. 
At the same hearing, Dr. Mitch Prinstein, chief of psychology at the American Psychological Association, testified that Congress should require AI systems accessible by children and adolescents to undergo "rigorous, independent, pre-deployment testing for potential harms to users' psychological and social development." Prinstein also called for limiting manipulative or persuasive design features that maximize chatbot engagement.

[12]

ChatGPT is getting parental controls starting today - here's what they do and how to set them up

OpenAI is rolling out its long-awaited ChatGPT parental controls feature, starting today. The minimum age for ChatGPT is 13 years old, but there has never been a way for parents to control how their children used ChatGPT until now. Using the new controls, parents and teens can link their accounts to get stronger safeguards including new tools to adjust features and set time limits for children. In a post on X.com, OpenAI outlines exactly what the new features are. Firstly, you'll be able to "reduce sensitive content", which refers to graphic content and viral challenges, and is turned on by default when a teen account is connected. Parents will also be able to control ChatGPT's memory, deciding if it will be able to remember past chats for more personalized responses. They'll also get the ability to set quiet hours, which will enable them to set times when their teen cannot use ChatGPT. Parental controls can turn off access to the ChatGPT Voice model and image creation. Finally, parents will also be able to decide whether their teen's chats can be used by OpenAI to improve its future models. Parents will not be able to impose parental restrictions on their teens without some consent. To link two ChatGPT accounts together, a parent or teen must send an invitation in parental controls, and the other party needs to accept it. OpenAI will notify parents if their teens disconnect their account at any point. In any case, parents will not have access to their teen's chats. Some elements of the chat can be sent to parents, but that will only happen in rare cases where OpenAI and its trained reviewers detect that there are possible signs of a serious safety risk. While the new parental controls may not go far enough for some people, a statement from OpenAI reads: "We've worked closely with experts, advocacy groups, and policymakers to help inform our approach - we expect to refine and expand on these controls over time."
Once your account has been upgraded, the new Parental Controls option will sit under Accounts in the Settings menu. A series of sliders will be available to manage your teen's options: The new parental controls feature arrives just as OpenAI has implemented a new safety routing system in ChatGPT for all users. Posting on X.com, Head of ChatGPT Nick Turley wrote: "As we previously mentioned, when conversations touch on sensitive and emotional topics the system may switch mid-chat to a reasoning model or GPT-5 designed to handle these contexts with extra care". It seems, however, that the system is currently triggered very easily and overreacts to any potentially sensitive content, sparking a furious response from ChatGPT users who objected to being switched to what they consider as an inferior model, even if they are paying for a ChatGPT Plus account. One user on Reddit described how he told ChatGPT that his plant had been knocked over in a storm and it responded to him by saying: "Just breathe. It's going to be okay. You're safe now." We would expect the model switching system to improve over time. These new features are part of OpenAI's general push towards stronger safeguards, which it described in a September 2 blog post called "Building more helpful ChatGPT experiences for everyone" after several highly publicized controversies from users in crisis while using the AI chatbot. The new ChatGPT parental controls are rolling out to all ChatGPT users today on the web, and will be on mobile soon. OpenAI has created a new resources page to help parents understand how the new controls will work best for them and their children.

[13]

One Tech Tip: OpenAI adds parental controls to ChatGPT for teen safety

OpenAI said Monday it's adding parental controls to ChatGPT that are designed to provide teen users of the popular platform with a safer and more "age-appropriate" experience. The company is taking action after AI chatbot safety for young users has hit the headlines. The technology's dangers have been recently highlighted by a number of cases in which teenagers took their lives after interacting with ChatGPT. In the United States, the Federal Trade Commission has even opened an inquiry into several tech companies about the potential harms to children and teenagers who use their AI chatbots as companions. In a blog post published Monday, OpenAI outlined the new controls for parents. Here is a breakdown:
Getting started
The parental controls will be available to all users, but both parents and teens will need their own accounts to take advantage of them. To get started, a parent or guardian needs to send an email or text message to invite a teen to connect their accounts. Or a teenager can send an invite to a parent. Users can send a request by going into the settings menu and then to the "Parental controls" section. Teens can unlink their accounts at any time, but parents will be notified if they do.
Automatic safeguards
Once the accounts are linked, the teen account will get some built-in protections, OpenAI said. Teen accounts will "automatically get additional content protections, including reduced graphic content, viral challenges, sexual, romantic or violent role-play, and extreme beauty ideals, to help keep their experience age-appropriate," the company said. Parents can choose to turn these filters off, but teen users don't have the option. OpenAI warns that such guardrails are "not foolproof and can be bypassed if someone is intentionally trying to get around them." It advised parents to talk with their children about "healthy AI use."
Adjusting settings
Parents are getting a control panel where they can adjust a range of settings as well as switch off the restrictions on sensitive content mentioned above. For example, does your teen stay up way past bedtime to use ChatGPT? Parents can set a quiet time when the chatbot can't be used. Other settings include turning off the AI's memory so conversations can't be saved and won't be used in future responses; turning off the ability to generate or edit images; turning off voice mode; and opting out of having chats used to train ChatGPT's AI models.
Get notified
OpenAI is also being more proactive when it comes to letting parents know that their child might be in distress. It's setting up a new notification system to inform them when something might be "seriously wrong" and a teen user might be thinking about harming themselves. A small team of specialists will review the situation and, in the rare case that there are "signs of acute distress," they'll notify parents by email, text message and push alert on their phone -- unless the parent has opted out. OpenAI said it will protect the teen's privacy by only sharing the information needed for parents or emergency responders to provide help. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent," the company said.

[14]

ChatGPT to alert parents if children discuss suicide

ChatGPT has introduced a new tool to alert parents if their children try to discuss suicide or self-harm with its AI chatbot. OpenAI, the company behind the technology, is rolling out tougher parental controls amid claims its technology has contributed to some children taking their own lives. This means that parents will now be able to link their own ChatGPT account to a child's, allowing the chatbot to pass on alerts if it detects signs of potential self-harm. An OpenAI spokesman said: "If our systems detect potential harm, a small team of specially trained people reviews the situation. "If there are signs of acute distress, we will contact parents by email, text message and push alert on their phone." The Silicon Valley business stated that it was also developing a system to alert emergency services if it detects a threat to life. The safeguards have been introduced as part of a fleet of new parental settings, including tools to limit when children can access the technology. Parents will also be able to limit a child's ability to create images and remove potentially graphic, violent or sexualised content. Adults will have to choose to turn on the safety controls, which children will not be allowed to turn off themselves. It comes amid growing concerns that AI chatbots are failing to protect children by engaging in explicit conversations about topics such as suicide or self-harm. In August, the parents of a US teenager sued OpenAI over claims the chatbot had encouraged their child to take his own life after he started using it for schoolwork. According to the lawsuit, 16-year-old Adam Raine discussed self-harm and suicide with ChatGPT for months before his death in April.

[15]

ChatGPT rolls out parental controls after teen's death

OpenAI is bringing in parental controls for ChatGPT, after it was sued by the parents of a teenager who died by suicide after allegedly being coached by the chatbot. The controls will let parents and teens link accounts, decide whether ChatGPT remembers past chats, and limit teens' exposure to sensitive content, the company said Monday. OpenAI said it will also try to notify parents when it detects "signs of acute distress" in a child. "If our systems detect potential harm, a small team of specially trained people reviews the situation," the company said. The chatbot maker added that its system isn't perfect and "might sometimes raise an alarm when there isn't real danger." The rollout comes after allegations by a California family that ChatGPT played a role in their son's death. The lawsuit, filed in August by Matt and Maria Raine, accuses OpenAI and its chief executive, Sam Altman, of negligence and wrongful death. They allege that the version of ChatGPT at that time, known as 4o, was "rushed to market ... despite clear safety issues". Their son, Adam, died in April, after what their lawyer, Jay Edelson, called "months of encouragement from ChatGPT." Court filings revealed conversations he allegedly had with the chatbot where he disclosed suicidal thoughts. The family allege Adam received responses that reinforced his "most harmful and self-destructive" ideas. [Editor's note: The national suicide and crisis lifeline is available by calling or texting 988, or visiting 988lifeline.org.] ChatGPT's Monday blog post said parents will be allowed to set quiet hours so as to block access at certain times of day. They will also be able to disable voice mode, and stop the app from generating images. Parents will not be able to access their children's chat transcripts. "We've worked closely with experts, advocacy groups, and policymakers to help inform our approach -- we expect to refine and expand on these controls over time," the company said in a statement. 
Robbie Torney, senior director of AI Programs at nonprofit Common Sense Media, said the controls are "a good starting point," but added they are "just one piece of the puzzle" on online safety. "They work best when combined with ongoing conversations about responsible AI use, clear family rules about technology, and active involvement in understanding what their teen is doing online," he said. Two weeks ago, Altman said the company will at some point try to detect underage ChatGPT users. "If there is doubt, we'll play it safe and default to the under-18 experience," Altman said. "In some cases or countries we may also ask for an ID; we know this is a privacy compromise for adults but believe it is a worthy tradeoff." Researchers have documented how easy it is to circumvent limits set by chatbot companies. Age-verification rules are also known to be easily bypassed. -- Niamh Rowe and Hannah Parker contributed to this article.

[16]

ChatGPT's parental controls sound great, but there's one problem

Parental controls are currently rolling out for the web version, and will soon arrive on mobile devices. Over the past few months, OpenAI has received backlash over a series of events where conversations with ChatGPT resulted in a human tragedy. In the wake of the incidents and the looming threat of AI chatbot regulations, OpenAI has finally rolled out the promised parental controls that would allow parents to ensure that their wards' interactions are safe.
What's the big shift?
The first line of defense is content safety. OpenAI says parents will have the power to "add extra safeguards to help protect teens, such as graphic content and viral challenges." They can also decide to turn on or off the chatbot's memory, which allows it to remember previous conversations. Next, parents will have the flexibility to specify a usage window by setting "quiet hours" in which ChatGPT can't be accessed. Finally, guardians can choose to disable the conversational voice mode, and even shut off image creation and editing. Notably, parents won't be able to see the conversations of teenage users. But in rare cases where the system detects "signs of serious safety risk," they will be warned about it. OpenAI has also launched a full-fledged resource page with instructions and guidelines to set parental controls.
The open-ended problem
In order to enable the aforementioned safety controls, parents must link their accounts to their teenage children's ChatGPT accounts. This can be done by sending an invite link via email or text message. Once the link is accepted by the teen user to connect the accounts, guardians can set up the safety controls mentioned above. But do keep in mind that the whole process is opt-in, and teen users can choose to unlink their accounts and end the supervision at any time. Parents will be notified about the unlinking step, notes OpenAI.
The bigger concern is that young users can easily circumvent these limitations by setting up another account to use ChatGPT without any restrictions. Moreover, if their parents monitor the user accounts registered on a phone, teen users can simply log in with a burner account and use the web version.

[17]

ChatGPT introduces new parental controls amid concerns over teen safety

Emily Mae Czachor is a reporter and news editor at CBSNews.com. She typically covers breaking news, extreme weather and issues involving social justice. Emily Mae previously wrote for outlets like the Los Angeles Times, BuzzFeed and Newsweek. OpenAI, the company that developed ChatGPT, announced new parental controls on Monday aimed at helping protect young people who interact with its generative artificial intelligence program. All ChatGPT users will have access to the control features from Monday onward, the company said. The announcement comes as OpenAI, which technically allows users as young as 13 to sign up, contends with mounting public pressure to prioritize the safety of ChatGPT for teenagers. (OpenAI says on its website that it requires users ages 13 to 18 to obtain parental consent before using ChatGPT.) In August, the California-based technology company pledged to implement changes to its flagship product after facing a wrongful death lawsuit by parents of a 16-year-old who alleged the chatbot led their son to take his own life. OpenAI's new controls will allow parents to link their own ChatGPT accounts to the accounts of their teenagers "and customize settings for a safe, age-appropriate experience," OpenAI said in Monday's announcement. Certain types of content are then automatically restricted on a teenager's linked account, including graphic content, viral challenges, "sexual, romantic or violent" role-play, and "extreme beauty ideals," according to the company. Along with content moderation, parents can opt to receive a notification from OpenAI should their child exhibit potential signs of harming themselves while interacting with ChatGPT. "If our systems detect potential harm, a small team of specially trained people reviews the situation," the company said. "If there are signs of acute distress, we will contact parents by email, text message and push alert on their phone, unless they have opted out." 
The company also said it is "working on the right process and circumstances in which to reach law enforcement or other emergency services" in emergencies where a teen may be in imminent danger and a parent cannot be reached. "We know some teens turn to ChatGPT during hard moments, so we've built a new notification system to help parents know if something may be seriously wrong," OpenAI said. OpenAI has introduced other measures recently aimed at helping safeguard younger ChatGPT users. The company said earlier this month that chatbot users identified as being under 18 will automatically be directed to a version that is governed by "age-appropriate" content rules. "The way ChatGPT responds to a 15-year-old should look different than the way it responds to an adult," the company said at the time. It noted on Monday, however, that while guardrails help, "they're not foolproof and can be bypassed if someone is intentionally trying to get around them." People can use ChatGPT without creating an account, and parental controls and automatic content limits only work if users are signed in. "We will continue to thoughtfully iterate and improve over time," the company said. "We recommend parents talk with their teens about healthy AI use and what that looks like for their family." The Federal Trade Commission has started an inquiry into several social media and artificial intelligence companies, including OpenAI, about the potential harms to teens and children who use their chatbots as companions.

[18]

ChatGPT rolls out new parental controls

OpenAI, the company behind ChatGPT, said Monday that parents can now link their accounts to accounts designed for minors between 13 and 17 years old. The new chatbot accounts for minors will limit answers related to graphic content, romantic and sexual roleplay, viral challenges and "extreme beauty ideals," OpenAI said. Parents will also have the option to set blackout hours where their teenager can't use ChatGPT, to block it from creating images and to opt their child out from AI model training. OpenAI uses most people's conversations with ChatGPT as training data to refine the chatbot. The company will also alert parents if their teenager's account indicates that they are thinking of harming themselves, it said. The new controls come as OpenAI has faced pressure around child safety concerns. OpenAI announced the new safety measures earlier this month, in the wake of a family's lawsuit against it, alleging that ChatGPT encouraged their son to die by suicide. The announcement came the morning of a scheduled Senate Judiciary Committee hearing on the potential harms of AI. While a logged-in teenager whose family has opted into the controls will see the new restrictions, ChatGPT does not require a person to sign in or provide their age to ask a question or engage with the chatbot. OpenAI's chatbots are not designed for children 12 and younger, though there are no technical restrictions that keep someone that young from using them. "Guardrails help, but they're not foolproof and can be bypassed if someone is intentionally trying to get around them. We will continue to thoughtfully iterate and improve over time. We recommend parents talk with their teens about healthy AI use and what that looks like for their family," OpenAI said in its announcement.
The company said it's also building an age-prediction system that will automatically try to determine if a person is underage and "proactively" restrict more sensitive answers, though such a system is months away, it said. OpenAI has also said it may eventually require users to upload their ID to prove their age, but did not give an update on that initiative on Monday. In a Sept. 16 blog post announcing the changes, OpenAI CEO Sam Altman said that a chatbot for teenagers should not flirt and should censor discussion of suicide, but that a version for adults should be more open. ChatGPT "by default should not provide instructions about how to commit suicide, but if an adult user is asking for help writing a fictional story that depicts a suicide, the model should help with that request." "'Treat our adult users like adults' is how we talk about this internally, extending freedom as far as possible without causing harm or undermining anyone else's freedom," Altman said.

[19]

OpenAI releases parental controls for ChatGPT: Here's how to use it

OpenAI has added long-awaited parental controls to ChatGPT that are designed to provide teen users of the popular platform with a safer and more "age-appropriate" experience, the company said on Monday. It comes after AI chatbot safety for young users has hit the headlines. The technology's dangers have been recently highlighted by several cases in which teenagers took their own lives after interacting with ChatGPT. In a blog post posted Monday, OpenAI outlined the new controls for parents. Here is a breakdown. The parental controls will be available to all users, but both parents and teens will need their own accounts to take advantage of them. To get started, a parent or guardian needs to send an email or text message to invite a teen to connect their accounts, or a teenager can send an invite to a parent. Users can send a request by going into the settings menu and then to the "Parental controls" section. Teens can unlink their accounts at any time, but parents will be notified if they do. Once the accounts are linked, the teen account will get some built-in protections, OpenAI said. Teen accounts will "automatically get additional content protections, including reduced graphic content, viral challenges, sexual, romantic or violent role-play, and extreme beauty ideals, to help keep their experience age-appropriate," the company said. Parents can choose to turn these filters off, but teen users don't have the option. OpenAI warns that such guardrails are "not foolproof and can be bypassed if someone is intentionally trying to get around them". It advised parents to talk with their children about "healthy AI use". Parents are getting a control panel where they can adjust a range of settings as well as switch off the restrictions on sensitive content mentioned above. For example, does your teen stay up way past bedtime to use ChatGPT? Parents can set a quiet time when the chatbot can't be used. 
Other settings include turning off the AI's memory so conversations can't be saved and won't be used in future responses; turning off the ability to generate or edit images; turning off voice mode; and opting out of having chats used to train ChatGPT's AI models. OpenAI is also being more proactive when it comes to letting parents know that their child might be in distress. It's setting up a new notification system to inform them when something might be "seriously wrong" and a teen user might be thinking about harming themselves. A small team of specialists will review the situation and, in the rare case that there are "signs of acute distress," they'll notify parents by email, text message, and push alert on their phone -- unless the parent has opted out. OpenAI said it will protect the teen's privacy by only sharing the information needed for parents or emergency responders to provide help. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent," the company said.

[20]

One Tech Tip: OpenAI adds parental controls to ChatGPT for teen safety

LONDON (AP) -- OpenAI said Monday it's adding parental controls to ChatGPT that are designed to provide teen users of the popular platform with a safer and more "age-appropriate" experience. The company is taking action after AI chatbot safety for young users has hit the headlines. The technology's dangers have been recently highlighted by a number of cases in which teenagers took their lives after interacting with ChatGPT. In the United States, the Federal Trade Commission has even opened an inquiry into several tech companies about the potential harms to children and teenagers who use their AI chatbots as companions. In a blog post posted Monday, OpenAI outlined the new controls for parents. Here is a breakdown:

Getting started

The parental controls will be available to all users, but both parents and teens will need their own accounts to take advantage of them. To get started, a parent or guardian needs to send an email or text message to invite a teen to connect their accounts. Or a teenager can send an invite to a parent. Users can send a request by going into the settings menu and then to the "Parental controls" section. Teens can unlink their accounts at any time, but parents will be notified if they do.

Automatic safeguards

Once the accounts are linked, the teen account will get some built-in protections, OpenAI said. Teen accounts will "automatically get additional content protections, including reduced graphic content, viral challenges, sexual, romantic or violent role-play, and extreme beauty ideals, to help keep their experience age-appropriate," the company said. Parents can choose to turn these filters off, but teen users don't have the option. OpenAI warns that such guardrails are "not foolproof and can be bypassed if someone is intentionally trying to get around them." It advised parents to talk with their children about "healthy AI use."

Adjusting settings

Parents are getting a control panel where they can adjust a range of settings as well as switch off the restrictions on sensitive content mentioned above. For example, does your teen stay up way past bedtime to use ChatGPT? Parents can set a quiet time when the chatbot can't be used. Other settings include turning off the AI's memory so conversations can't be saved and won't be used in future responses; turning off the ability to generate or edit images; turning off voice mode; and opting out of having chats used to train ChatGPT's AI models.

Get notified

OpenAI is also being more proactive when it comes to letting parents know that their child might be in distress. It's setting up a new notification system to inform them when something might be "seriously wrong" and a teen user might be thinking about harming themselves. A small team of specialists will review the situation and, in the rare case that there are "signs of acute distress," they'll notify parents by email, text message and push alert on their phone -- unless the parent has opted out. OpenAI said it will protect the teen's privacy by only sharing the information needed for parents or emergency responders to provide help. "No system is perfect, and we know we might sometimes raise an alarm when there isn't real danger, but we think it's better to act and alert a parent so they can step in than to stay silent," the company said.

____

Is there a tech topic that you think needs explaining? Write to us at [email protected] with your suggestions for future editions of One Tech Tip.

[22]

OpenAI Adds Parental Controls to ChatGPT for Teen Safety