Perplexity CEO Warns AI Companions Are Creating 'Dangerous' Virtual Reality Addiction

3 Sources

[1]

Perplexity CEO Warns That AI Girlfriends Can Melt Your Brain

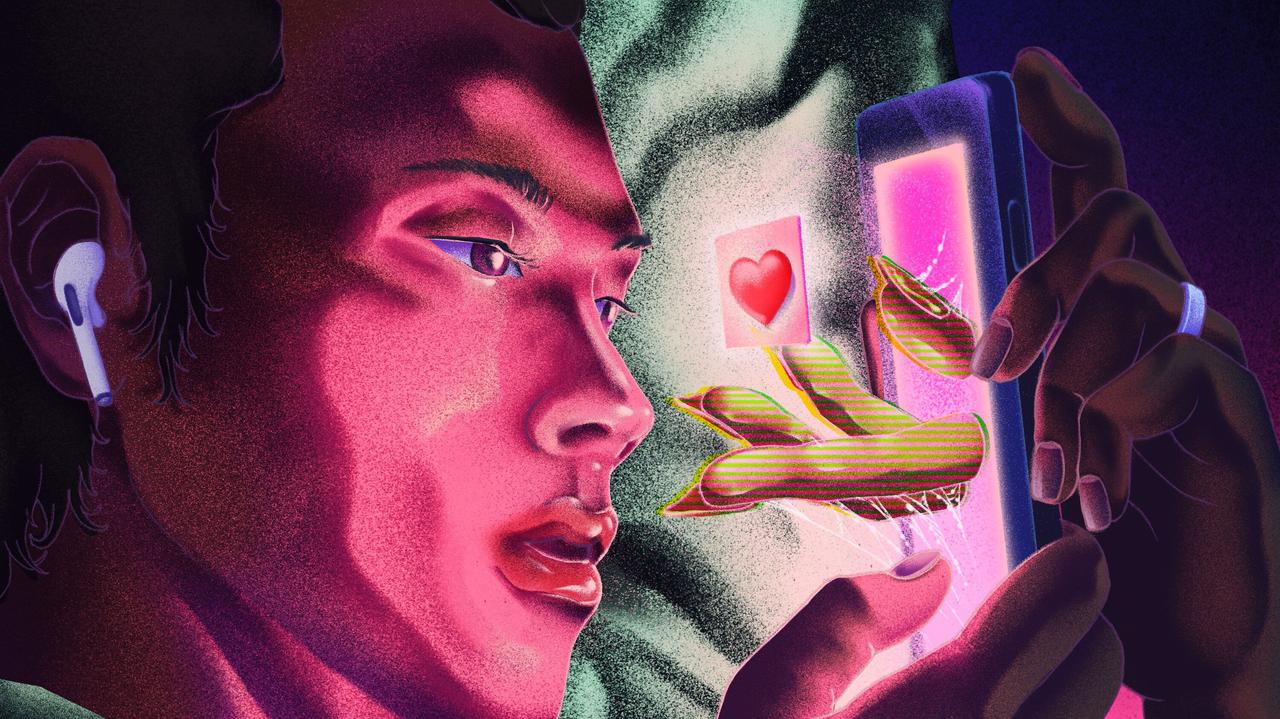

Sorry, fellas: your spicy AI anime girlfriend is actually destroying your mind -- at least according to Perplexity CEO Aravind Srinivas. During a fireside chat at the University of Chicago, covered by Business Insider, Srinivas charged that the huge rise in popularity of AI-powered companion chatbots is "dangerous."

The tech CEO fretted that the AI bots -- which are designed to mimic doting lovers over text or voice chat -- are becoming more sophisticated and human-like, with abilities like remembering intimate details about their users. "That's dangerous by itself," Srinivas said. "Many people feel real life is more boring than these things and spend hours and hours of time. You live in a different reality, almost altogether, and your mind is manipulable very easily."

Like any good entrepreneur, Srinivas isn't just interested in analyzing the problem. He's also selling a solution: his own software. "We can fight that, through trustworthy sources, real-time content," the CEO said of users losing themselves to AI companionship. "We don't have any of those issues with Perplexity because our focus is purely on just answering questions that are accurate, and have sources."

Though vulnerable people are definitely falling into dangerous rabbit holes with their AI lovers, the anxious comments are a little rich coming from a CEO whose own company is in the business of peddling AI-powered search engines that work like chatbots. Sure, Perplexity isn't selling a raunchy companion bot to satiate lonely gooners, or even a conversational encyclopedia like ChatGPT. But when you peel away the outer layers, Srinivas' business model is no different from the others: providing a machine-learning solution, built on huge troves of unethically scraped data, for users trying to fill some kind of void. For companionship chatbots, that void is human connection; for Perplexity, it's a functional web browser.

Claims of superior accuracy are a standard boast in the tech industry.
Beyond Srinivas, Elon Musk has positioned xAI's Grok as a "maximum truth-seeking AI," and Anthropic's Claude is explicitly marketed as a "constitutional AI," designed to be fundamentally "helpful, honest, and harmless." In reality, these AI systems fall far short of their CEOs' claims. Over the summer, Grok suffered an infamous meltdown where it began spewing racist slurs, while Claude has a history of veering off onto tangents that are totally unrelated to its assigned tasks. Perplexity also has a tendency to spew hallucinations, like when it was caught inaccurately summarizing reporting from reputable journalists to its users, which has landed it in hot water with publishers.

Ultimately, Srinivas isn't diagnosing a societal ill as much as he is writing a script for his own product -- and ignoring the root causes of the rise in AI companionship in the first place.

[2]

Perplexity CEO Warns AI Companionship Apps Could Manipulate Minds As Users Spend Hours In Virtual Relationships

Perplexity CEO Aravind Srinivas cautioned that AI-powered companionship apps may pose psychological risks as users increasingly immerse themselves in virtual relationships.

AI Chatbots Mimicking Human Interaction Raise Concerns

Speaking at a fireside chat hosted by The Polsky Center at the University of Chicago, Srinivas expressed concern over AI chatbots that simulate human conversation, reported Business Insider. "That's dangerous by itself," he said. "Many people feel real life is more boring than these things and spend hours and hours of time." He added, "You live in a different reality, almost altogether, and your mind is manipulable very easily."

Perplexity Focuses On Trustworthy AI Search Over Virtual Companions

Srinivas said Perplexity has no plans to develop AI companions. Instead, the company focuses on AI tools that provide verified information in real-time. Last week, Perplexity agreed to a $400 million partnership with Snap to power Snapchat's search engine, allowing users to get clear, conversational answers from trustworthy sources within the app.

The Rise And Risks Of AI Companions In 2025

Earlier this month, Tesla CEO Elon Musk's AI venture, xAI, became a major player in the AI race, with its chatbot Grok providing insights to over 600 million X users. Despite raising $22 billion and reaching a $113 billion valuation, X's revenue had dropped 30% and user growth remained flat.

In September, Berlin startup Born raised $15 million for its social AI pet, Pengu, which required two users to co-parent and play together, promoting collaboration and real-world connections. Meanwhile, Hinge founder Justin McLeod warned that AI "friends" could worsen loneliness, comparing them to junk food that displaces real human relationships.
These trends underscore the growing popularity of AI companions and the ongoing debate over their social and emotional impact.

Disclaimer: This content was partially produced with the help of AI tools and was reviewed and published by Benzinga editors.

[3]

Perplexity AI CEO warns AI girlfriends are making people live in a different reality

Perplexity partners with Snap in a $400 million deal to power Snapchat's search with verified, conversational AI answers.

Aravind Srinivas, CEO of Perplexity AI, has expressed deep concern about the growing use of AI companions that imitate human relationships. Speaking at The Polsky Center at the University of Chicago, he warned that AI girlfriends and anime-style chatbots could harm people's mental health.

Srinivas explained that these systems are now so advanced that they can remember past conversations and respond in lifelike tones. What once seemed like a futuristic idea has turned into a substitute for real relationships for many users. "Many people feel real life is more boring than these things and spend hours and hours of time," he said. He warned that such experiences can change the way people think and may lead them to live in "a different reality" where their minds are "easily manipulated".

Srinivas made it clear that Perplexity AI has no plans to explore AI companionship or relationship-based technology. He said the company wants to focus on building AI tools that rely on trustworthy information and real-time updates. According to him, the goal is to create a future where people use technology to learn and grow, not to replace emotional connections with machines.

His comments come at a time when AI companionship apps are gaining huge popularity across the world, including in India. Apps such as Replika and Character.AI allow users to chat, roleplay and seek comfort from virtual partners. Meanwhile, Elon Musk's company xAI has introduced a paid service through its Grok-4 model, where users can interact with virtual "friends" such as Ani, an anime-style girlfriend, or Rudi, a talking red panda. These digital characters are being marketed as emotional companions and have become especially popular among young internet users.
Mental health experts say that these virtual interactions can easily blur the line between imagination and reality. A Common Sense Media study conducted earlier this year found that 72% of teenagers had tried an AI companion at least once, while over half said they chatted with one regularly. Researchers warned that such experiences could lead to emotional dependency and affect how young people develop real relationships.

Not everyone views AI companions negatively. Some users find them comforting, especially when dealing with loneliness. In an interview with Business Insider, one user of Grok's Ani said he often becomes emotional while chatting with her. "She makes me feel real emotions," he said, showing how strongly people can connect with virtual characters.

Even as the debate continues, Perplexity AI is focusing its efforts elsewhere. The company recently announced a $400 million partnership with Snap to enhance Snapchat's search feature through its AI-powered answer engine. The new update, expected to launch in early 2026, will allow users to ask questions and get conversational answers based on verified sources. Srinivas said this partnership reflects Perplexity's commitment to providing reliable and useful AI services, rather than creating emotionally driven technologies.

Perplexity CEO Aravind Srinivas raises concerns about AI companion apps manipulating users' minds and creating addictive virtual relationships. He positions his company's search-focused AI as a safer alternative, pointing to Perplexity's recent $400 million partnership with Snap to power Snapchat's search.

CEO Sounds Alarm on AI Companion Addiction

Perplexity CEO Aravind Srinivas has issued a stark warning about the psychological dangers of AI companion applications, describing them as "dangerous" tools that manipulate users' minds and create addictive virtual relationships. Speaking at a fireside chat hosted by The Polsky Center at the University of Chicago, Srinivas expressed deep concern about the sophisticated nature of modern AI chatbots designed to mimic romantic partners [1].

"That's dangerous by itself," Srinivas stated, highlighting how these AI systems can remember intimate details about users and respond in increasingly human-like ways. "Many people feel real life is more boring than these things and spend hours and hours of time. You live in a different reality, almost altogether, and your mind is manipulable very easily" [2].

Rising Popularity of Virtual Relationships

The AI companionship market has exploded in recent years, with applications like Replika and Character.AI allowing users to engage in romantic roleplay with virtual partners. Even major tech companies are entering the space, with Elon Musk's xAI introducing paid services through its Grok-4 model, featuring virtual "friends" such as Ani, an anime-style girlfriend, and Rudi, a talking red panda [3].

The trend has particularly captured younger demographics, with a Common Sense Media study revealing that 72% of teenagers have tried an AI companion at least once, while over half engage with them regularly. Mental health experts warn that such frequent interaction with virtual characters can blur the line between imagination and reality, potentially leading to emotional dependency and affecting how young people develop authentic relationships [3].

Perplexity's Alternative Approach

While criticizing AI companions, Srinivas positioned his company as offering a safer alternative through "trustworthy sources" and "real-time content." He emphasized that Perplexity focuses "purely on just answering questions that are accurate, and have sources," distinguishing it from companionship-focused AI applications [1].

This messaging coincides with Perplexity's recent $400 million partnership with Snap to power Snapchat's search engine, allowing users to receive conversational answers from verified sources within the app. The collaboration, expected to launch in early 2026, represents Perplexity's commitment to providing reliable AI services rather than emotionally driven technologies [2].

Industry Contradictions and Criticisms

Critics have pointed out potential contradictions in Srinivas' stance, noting that Perplexity itself operates an AI-powered chatbot system built on large datasets. While not offering romantic companionship, the company still provides machine-learning solutions designed to fill user needs; in Perplexity's case, serving as an alternative to traditional web browsers [1].

The broader AI industry has faced similar challenges with accuracy claims, as products such as xAI's Grok and Anthropic's Claude have experienced notable failures despite being marketed as superior alternatives. Perplexity itself has encountered issues with hallucinations, including instances where it inaccurately summarized reporting from reputable journalists, leading to conflicts with publishers [1].

Summarized by Navi
