Positron AI Challenges Nvidia with Energy-Efficient AI Inference Chips

4 Sources

4 Sources

[1]

Positron AI says its Atlas accelerator beats Nvidia H200 on inference in just 33% of the power -- delivers 280 tokens per second per user with Llama 3.1 8B in 2000W envelope

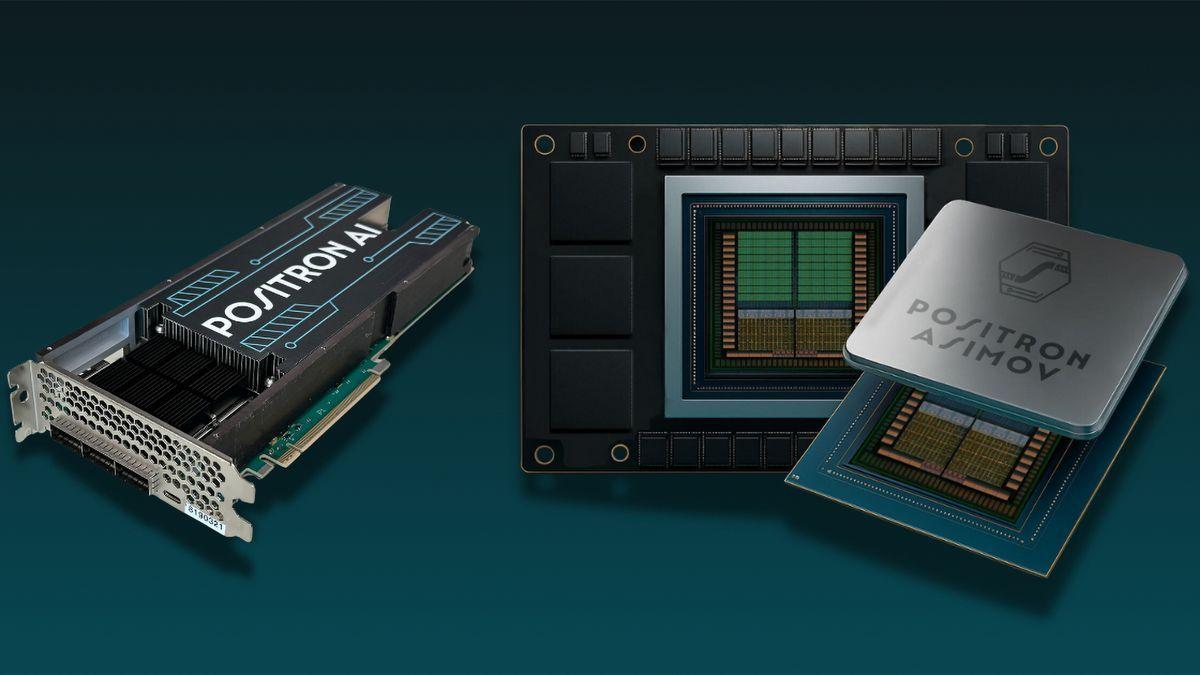

To address concerns about power consumption of systems used for AI inference, hyperscale cloud service provider (CSP) Cloudflare is testing various AI accelerators that are not AI GPUs from AMD or Nvidia, reports the Wall Street Journal. Recently, the company began to test drive Positron AI's Atlas solution that promises to beat Nvidia's H200 at just 33% of its power consumption. Positron is a U.S.-based company founded in 2023 that develops AI accelerators focused exclusively on inference. Unlike general-purpose GPUs that are designed for AI training, AI inference, technical computing, and a wide range of other workloads, Positron's hardware is built from scratch to perform inference tasks efficiently and with minimal power consumption. Position AI's first-generation solution for large-scale transformer models is called Atlas. It packs eight Archer accelerators and is designed to beat Nvidia's Hopper-based systems while consuming a fraction of power. Positron AI's Atlas can reportedly deliver around 280 tokens per second per user in Llama 3.1 8B with BF16 compute at 2000W, whereas an 8-way Nvidia DGX H200 server can only achieve around 180 tokens per second per user in the same scenario, while using a whopping 5900W of power, according to a comparison conducted by Positron AI itself. This would make the Atlas three times more efficient in terms of performance-per-watt and in terms of performance-per-dollar compared to Nvidia's DGX H200 system. This claim, of course, requires verification by a third party. It is noteworthy that Positron AI makes its ASIC hardware at TSMC's Fab 21 in Arizona (i.e., using an N4 or N5 process technology), and the cards are also assembled in the U.S., which makes them an almost entirely American product. Still, since the ASIC is mated with 32GB of HBM memory, it uses an advanced packaging technology and, therefore, is likely assembled in Taiwan. Positron AI's Atlas systems and Archer AI accelerators are compatible with widely used AI tools like Hugging Face and serve inference requests through an OpenAI API-compatible endpoint, enabling users to adopt them without major changes to their workflows. Positron has raised over $75 million in total funding, including a recent $51.6 million round led by investors such as Valor Equity Partners, Atreides Management, and DFJ Growth. The company is also working on its 2nd Generation AI inference accelerator dubbed Asimov, an 8-way Titan machine that is expected in 2026 to compete against inference systems based on Nvidia's Vera Rubin platforms. Positron AI's Asimov AI accelerator will come with 2 TB of memory per ASIC, and, based on an image published by the company, will cease to use HBM, but will use another type of memory. The ASIC will also feature a 16 Tb/s external network bandwidth for more efficient operations in rack-scale systems. The Titan -- based on eight Asimov AI accelerators with 16 GB of memory in total -- is expected to be able to run models with up to 16 trillion parameters on a single machine, significantly expanding the context limits for large-scale generative AI applications. The system also supports simultaneous execution of multiple models, eliminating the one-model-per-GPU constraint, according to Positron AI. The AI industry's accelerating power demands are raising alarms as some massive clusters used for AI model training consume the same amount of power as cities. The situation is only getting worse as AI models are getting bigger and usage of AI is increasing, which means that the power consumption of AI data centers used for inference is also increasing at a rapid pace. Cloudflare is among the early adopters currently testing hardware from Positron AI. By contrast, companies like Google, Meta, and Microsoft are developing their own inference accelerators to keep their power consumption in check.

[2]

Positron bets on energy-efficient AI chips to challenge Nvidia's dominance

Highly anticipated: A new front is emerging in the race to power the next generation of artificial intelligence, and at the center of it is a startup called Positron whose bold ambitions are gaining traction in the semiconductor industry. As companies scramble to rein in the soaring energy demands of AI systems, Positron and a handful of challengers are betting that radically different chip architectures could loosen the grip of industry giants like Nvidia and reshape the AI hardware landscape. Founded in 2023, Positron has rapidly attracted investment and attention from major cloud providers. The startup recently raised $51.6 million in new funding, bringing its total to $75 million. Its value proposition is straightforward: delivering AI inference - the process of generating responses from trained models - far more efficiently and at a significantly lower cost than current hardware. Positron's chips are already being tested by customers such as Cloudflare. Andrew Wee, Cloudflare's head of hardware, has expressed concern over the unsustainable energy demands of AI data centers. "We need to find technical solutions, policy solutions, and other solutions that solve this collectively," he told The Wall Street Journal. According to CEO Mitesh Agrawal, Positron's next-generation hardware will directly compete with Nvidia's upcoming Vera Rubin platform. Agrawal claims that Positron's chips can deliver two to three times better performance per dollar - and up to six times greater energy efficiency - compared to Nvidia's projected offerings. Rather than trying to match Nvidia across every workload, Positron is focusing on a narrower but critical slice of AI inference, optimizing for speed and power savings. Its latest chip design embraces this focus by simplifying functions to handle the most demanding inference tasks with maximum efficiency. Positron isn't alone in the race for energy-efficient AI chips. Competitors like Groq and Mythic are also pursuing alternative architectures. Groq, for example, integrates memory directly into its processors, an approach it says enables faster inference while consuming a fraction of the power required by Nvidia's GPUs. The company claims its chips operate at one-third to one-sixth of Nvidia's energy costs. Meanwhile, tech giants such as Google, Amazon, and Microsoft are investing billions in developing their own proprietary inference hardware for internal deployment and cloud customers. Nvidia, for its part, has acknowledged both the market's appetite for alternatives and the growing concerns around energy use. According to senior director Dion Harris, the company's new Blackwell chips deliver up to 30 times the inference efficiency of previous models. Those claims are now being tested, as cloud providers seek practical solutions that can scale. Cloudflare has launched long-term trials of Positron's chips, with Wee noting that only one other startup has ever warranted such in-depth evaluation. Pressed on the stakes, he said that if the chips "deliver the advertised metrics, we will open the spigot and allow them to deploy in much larger numbers globally." As competition heats up, analysts caution that improved chip efficiency alone won't be enough to counter the explosive growth in AI workloads. Historically, gains in hardware performance are quickly consumed by new use cases and increasingly powerful models. Still, with fresh funding, interest from major customers, and a tightly focused design, Positron has positioned itself at the center of a critical debate about the future of AI infrastructure. Whether it - or any of its rivals - can deliver on its promises could shape how the world builds, powers, and pays for AI in the years ahead.

[3]

Positron believes it has found the secret to take on Nvidia in AI inference chips -- here's how it could benefit enterprises

Want smarter insights in your inbox? Sign up for our weekly newsletters to get only what matters to enterprise AI, data, and security leaders. Subscribe Now As demand for large-scale AI deployment skyrockets, the lesser-known, private chip startup Positron is positioning itself as a direct challenger to market leader Nvidia by offering dedicated, energy-efficient, memory-optimized inference chips aimed at relieving the industry's mounting cost, power, and availability bottlenecks. "A key differentiator is our ability to run frontier AI models with better efficiency -- achieving 2x to 5x performance per watt and dollar compared to Nvidia," said Thomas Sohmers, Positron co-founder and CTO, in a recent video call interview with VentureBeat. Obviously, that's good news for big AI model providers, but Positron's leadership contends it is helpful for many more enterprises beyond, including those using AI models in their workflows, not as service offerings to customers. "We build chips that can be deployed in hundreds of existing data centers because they don't require liquid cooling or extreme power densities," pointed out Mitesh Agrawal, Positron's CEO and the former chief operating officer of AI cloud inference provider Lambda, also in the same video call interview with VentureBeat. Venture capitalists and early users seem to agree. Positron yesterday announced an oversubscribed $51.6 million Series A funding round led by Valor Equity Partners, Atreides Management and DFJ Growth, with support from Flume Ventures, Resilience Reserve, 1517 Fund and Unless. As for Positron's early customer base, that includes both name-brand enterprises and companies operating in inference-heavy sectors. Confirmed deployments include the major security and cloud content networking provider Cloudflare, which uses Positron's Atlas hardware in its globally distributed, power-constrained data centers, and Parasail, via its AI-native data infrastructure platform SnapServe. Beyond these, Positron reports adoption across several key verticals where efficient inference is critical, such as networking, gaming, content moderation, content delivery networks (CDNs), and Token-as-a-Service providers. These early users are reportedly drawn in by Atlas's ability to deliver high throughput and lower power consumption without requiring specialized cooling or reworked infrastructure, making it an attractive drop-in option for AI workloads across enterprise environments. Entering a challenging market that is decreasing AI model size and increasing efficiency But Positron is also entering a challenging market. The Information just reported that rival buzzy AI inference chip startup Groq -- where Sohmers previously worked as Director of Technology Strategy -- has reduced its 2025 revenue projection from $2 billion+ to $500 million, highlighting just how volatile the AI hardware space can be. Even well-funded firms face headwinds as they compete for data center capacity and enterprise mindshare against entrenched GPU providers like Nvidia, not to mention the elephant in the room: the rise of more efficient, smaller large language models (LLMs) and specialized small language models (SLMs) that can even run on devices as small and low-powered as smartphones. Yet Positron's leadership is for now embracing the trend and shrugging off the possible impacts on its growth trajectory. "There's always been this duality -- lightweight applications on local devices and heavyweight processing in centralized infrastructure," said Agrawal. "We believe both will keep growing." Sohmers agreed, stating: "We see a future where every person might have a capable model on their phone, but those will still rely on large models in data centers to generate deeper insights." Atlas is an inference-first AI chip While Nvidia GPUs helped catalyze the deep learning boom by accelerating model training, Positron argues that inference -- the stage where models generate output in production -- is now the true bottleneck. Its founders call it the most under-optimized part of the "AI stack," especially for generative AI workloads that depend on fast, efficient model serving. Positron's solution is Atlas, its first-generation inference accelerator built specifically to handle large transformer models. Unlike general-purpose GPUs, Atlas is optimized for the unique memory and throughput needs of modern inference tasks. The company claims Atlas delivers 3.5x better performance per dollar and up to 66% lower power usage than Nvidia's H100, while also achieving 93% memory bandwidth utilization -- far above the typical 10-30% range seen in GPUs. From Atlas to Titan, supporting multi-trillion parameter models Launched just 15 months after founding -- and with only $12.5 million in seed capital -- Atlas is already shipping and in production. The system supports up to 0.5 trillion-parameter models in a single 2kW server and is compatible with Hugging Face transformer models via an OpenAI API-compatible endpoint. Positron is now preparing to launch its next-generation platform, Titan, in 2026. Built on custom-designed "Asimov" silicon, Titan will feature up to two terabytes of high-speed memory per accelerator and support models up to 16 trillion parameters. Today's frontier models are in the hundred billions and single digit trillions of parameters, but newer models like OpenAI's GPT-5 are presumed to be in the multi-trillions, and larger models are currently thought to be required to reach artificial general intelligence (AGI), AI that outperforms humans on most economically valuable work, and superintelligence, AI that exceeds the ability for humans to understand and control. Crucially, Titan is designed to operate with standard air cooling in conventional data center environments, avoiding the high-density, liquid-cooled configurations that next-gen GPUs increasingly require. Engineering for efficiency and compatibility From the start, Positron designed its system to be a drop-in replacement, allowing customers to use existing model binaries without code rewrites. "If a customer had to change their behavior or their actions in any way, shape or form, that was a barrier," said Sohmers. Sohmers explained that instead of building a complex compiler stack or rearchitecting software ecosystems, Positron focused narrowly on inference, designing hardware that ingests Nvidia-trained models directly. "CUDA mode isn't something to fight," said Agrawal. "It's an ecosystem to participate in." This pragmatic approach helped the company ship its first product quickly, validate performance with real enterprise users, and secure significant follow-on investment. In addition, its focus on air cooling versus liquid cooling makes its Atlas chips the only option for some deployments. "We're focused entirely on purely air-cooled deployments... all these Nvidia Hopper- and Blackwell-based solutions going forward are required liquid cooling... The only place you can put those racks are in data centers that are being newly built now in the middle of nowhere," said Sohmers. All told, Positron's ability to execute quickly and capital-efficiently has helped distinguish it in a crowded AI hardware market. Memory is what you need Sohmers and Agrawal point to a fundamental shift in AI workloads: from compute-bound convolutional neural networks to memory-bound transformer architectures. Whereas older models demanded high FLOPs (floating-point operations), modern transformers require massive memory capacity and bandwidth to run efficiently. While Nvidia and others continue to focus on compute scaling, Positron is betting on memory-first design. Sohmers noted that with transformer inference, the ratio of compute to memory operations flips to near 1:1, meaning that boosting memory utilization has a direct and dramatic impact on performance and power efficiency. With Atlas already outperforming contemporary GPUs on key efficiency metrics, Titan aims to take this further by offering the highest memory capacity per chip in the industry. At launch, Titan is expected to offer an order-of-magnitude increase over typical GPU memory configurations -- without demanding specialized cooling or boutique networking setups. U.S.-built chips Positron's production pipeline is proudly domestic. The company's first-generation chips were fabricated in the U.S. using Intel facilities, with final server assembly and integration also based domestically. For the Asimov chip, fabrication will shift to TSMC, though the team is aiming to keep as much of the rest of the production chain in the U.S. as possible, depending on foundry capacity. Geopolitical resilience and supply chain stability are becoming key purchasing criteria for many customers -- another reason Positron believes its U.S.-made hardware offers a compelling alternative. What's next? Agrawal noted that Positron's silicon targets not just broad compatibility but maximum utility for enterprise, cloud, and research labs alike. While the company has not named any frontier model providers as customers yet, he confirmed that outreach and conversations are underway. Agrawal emphasized that selling physical infrastructure based on economics and performance -- not bundling it with proprietary APIs or business models -- is part of what gives Positron credibility in a skeptical market. "If you can't convince a customer to deploy your hardware based on its economics, you're not going to be profitable," he said.

[4]

Positron AI Secures $51.6 Mn in Series A to Build its AI Inference Engine | AIM

Positron claims that Atlas offers 3.5 times better performance per dollar compared to NVIDIA's H100. Positron AI, known for developing American-made hardware and software for AI inference, has bagged a $51.6 million oversubscribed Series A funding round, raising its total capital for the year to over $75 million. The round was led by Valor Equity Partners, Atreides Management, and DFJ Growth, with additional investments from Flume Ventures, Resilience Reserve, 1517 Fund, and Unless. This funding will go towards the deployment of Positron's first product, Atlas, and accelerate the launch of its second-generation products in 2026. With global tech firms projected to spend over $320 billion on AI infrastructure by 2025, businesses are facing rising cost pressures, power limits, and shortages of NVIDIA GPUs. Positron's specialised solution provides cost and efficiency advantages. Positron claims that Atlas offers 3.5 times better performance per dollar and up to 66% lower power consumption compared to NVIDIA's H100. Atlas is specifically designed to enhance generative AI applications. "By generating 3x more tokens per watt than existing GPUs, Positron multiplies the revenue potential of data centres. Positron's innovative approach to AI inference chip and memory architecture removes existing bottlenecks on performance and democratizes access to the world's information and knowledge," said Randy Glein, co-founder and managing partner at DFJ Growth. Positron Atlas boasts a memory-optimised FPGA architecture that achieves 93% bandwidth utilisation, far exceeding the typical 10-30% of GPU systems and supports models with up to half a trillion parameters in a single 2-kilowatt server. It is also compatible with Hugging Face transformer models and processes inference requests through an OpenAI API-compatible endpoint. Powered by US-made chips, Atlas is already in use for LLM hosting, generative agents, and enterprise copilots, offering lower latency and reduced hardware demands. "Our highly optimised silicon and memory architecture allows for superintelligence to be run in a single system with our target of running up to 16-trillion-parameter models per system on models with tens of millions of tokens of context length or memory-intensive video generation models," said Mitesh Agrawal, CEO of Positron AI. With the series A funding secured, Positron is advancing its next-gen system for large-scale frontier model inference. Titan, the successor to Atlas and powered by Positron's 'Asimov' silicon, will support up to two terabytes of high-speed memory per accelerator, enabling the handling of 16-trillion-parameter models and significantly expanding context limits for the largest models.

Share

Share

Copy Link

Positron AI, a startup founded in 2023, is making waves in the AI hardware industry with its Atlas accelerator, claiming superior performance and energy efficiency compared to Nvidia's offerings for AI inference tasks.

Positron AI Challenges Nvidia with Atlas Accelerator

Positron AI, a startup founded in 2023, is making waves in the AI hardware industry with its Atlas accelerator, claiming superior performance and energy efficiency compared to Nvidia's offerings for AI inference tasks

1

. The company has recently secured $51.6 million in Series A funding, bringing its total capital raised to over $75 million4

.Atlas Accelerator: Performance and Efficiency Claims

Source: Tom's Hardware

According to Positron AI, the Atlas accelerator can deliver around 280 tokens per second per user in Llama 3.1 8B with BF16 compute at 2000W, compared to approximately 180 tokens per second per user for an 8-way Nvidia DGX H200 server consuming 5900W

1

. The company claims that Atlas offers:- 3.5 times better performance per dollar compared to Nvidia's H100

- Up to 66% lower power consumption

- 93% memory bandwidth utilization, far exceeding the typical 10-30% range seen in GPUs

3

Technical Specifications and Compatibility

Atlas is designed specifically for large-scale transformer models and packs eight Archer accelerators

1

. Key features include:- Support for models with up to 0.5 trillion parameters in a single 2-kilowatt server

- Compatibility with Hugging Face transformer models

- OpenAI API-compatible endpoint for inference requests

4

Market Position and Early Adoption

Source: AIM

Positron AI is positioning itself as a direct challenger to Nvidia in the AI inference chip market. The company's focus on energy efficiency and cost-effectiveness has attracted attention from major cloud providers and enterprises

2

. Early adopters include:- Cloudflare, using Atlas hardware in globally distributed, power-constrained data centers

- Parasail, via its AI-native data infrastructure platform SnapServe

3

Related Stories

Future Developments: Titan and Asimov

Positron AI is already working on its next-generation system, Titan, powered by the Asimov AI accelerator. Expected to launch in 2026, Titan aims to compete against inference systems based on Nvidia's Vera Rubin platforms

1

. Key features of the upcoming system include:- Support for models with up to 16 trillion parameters on a single machine

- 2 TB of memory per ASIC

- 16 Tb/s external network bandwidth

- Ability to run multiple models simultaneously

1

Industry Impact and Challenges

Source: VentureBeat

The emergence of Positron AI and other startups in the AI chip space highlights the growing concern over the power consumption and cost of AI infrastructure. As AI models continue to grow in size and complexity, efficient inference solutions become increasingly critical

2

.However, Positron AI faces significant challenges in a market dominated by established players like Nvidia. The company will need to deliver on its performance and efficiency claims to gain widespread adoption and compete effectively in this rapidly evolving industry

3

.References

Summarized by

Navi

[3]

Related Stories

Positron Secures $23.5 Million to Challenge NVIDIA with Made-in-America AI Chips

13 Feb 2025•Technology

Positron raises $230M Series B to challenge Nvidia with energy-efficient AI chips at $1B valuation

04 Feb 2026•Startups

TensorWave Secures $43M Funding to Challenge Nvidia's AI GPU Dominance with AMD-Powered Cloud

08 Oct 2024•Technology

Recent Highlights

1

Pentagon threatens Anthropic with Defense Production Act over AI military use restrictions

Policy and Regulation

2

Google Gemini 3.1 Pro doubles reasoning score, beats rivals in key AI benchmarks

Technology

3

Anthropic accuses Chinese AI labs of stealing Claude through 24,000 fake accounts

Policy and Regulation