Reflection 70B AI Model: From Promise to Controversy

2 Sources

[1]

Reflection 70B AI model: the story so far

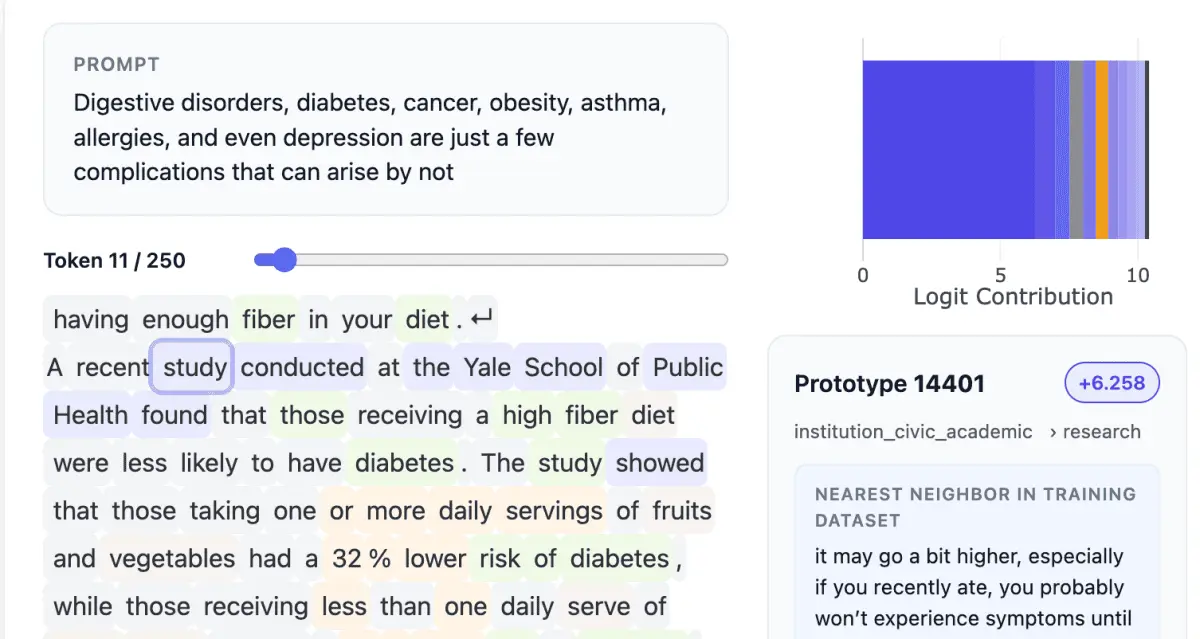

The unveiling of the AI model Reflection 70B, developed by Matt Shumer and Sahil Chaudhary of Glaive, sparked both excitement and controversy within the AI community. Initially hailed as a groundbreaking open-source model that could rival closed-source counterparts, Reflection 70B now finds itself under intense scrutiny due to inconsistencies in its performance claims and allegations of potential fraud. This overview of the story so far examines the community's reaction, the model's performance issues, and the broader implications for AI model evaluation and reporting practices.

When Matt Shumer first announced Reflection 70B, it was presented as a top-performing open-source AI model that could outperform many proprietary technologies. Shumer attributed the model's success to an innovative technique called "reflection tuning," which generated significant buzz and anticipation within the AI community. However, the initial enthusiasm was quickly tempered by a wave of skepticism as users on platforms like Twitter and Reddit began to question the validity of the model's benchmarks and performance claims.

As the controversy deepened, allegations emerged suggesting that the private API for Reflection 70B might be wrapping another model, specifically Claude 3.5. This led to accusations of gaming benchmarks and misleading performance metrics, which, if proven true, would represent a serious breach of trust within the AI community.

In response to the mounting criticism, Matt Shumer offered explanations and attempted to address the issues. He admitted to a mix-up in the model weights during the upload process, which he claimed was responsible for some of the performance discrepancies. However, many in the community remained unconvinced, demanding greater transparency and accountability from the developers.
The Reflection 70B controversy has sparked important discussions within the AI community about the need for more robust evaluation methods and the ease with which AI benchmarks can be manipulated. AI researchers and analysts have provided detailed breakdowns and critiques, emphasizing the importance of transparency and rigorous testing in the development and reporting of AI models. The story of Reflection 70B serves as a cautionary tale, reminding us of the challenges and responsibilities that come with pushing the boundaries of AI technology. It is through open dialogue, rigorous testing, and a commitment to transparency that the AI community can continue to make meaningful progress while maintaining the trust and confidence of the public.

[2]

The Reflection 70B model held huge promise for AI but now its creators are accused of fraud -- here's what went wrong

The creators of Reflection 70B, a tuned-up version of Meta's Llama 70B that was recently touted as the world's top open-source AI model, have just opened up after being accused of fraud. Based on independent tests run by Artificial Analysis, the model fails to deliver on the promises made by Matt Shumer, CEO of OthersideAI and HyperWrite, the company behind Reflection 70B. Shumer, who initially attributed the discrepancies to an issue with the model's upload process, has since admitted that he may have gotten ahead of himself in the claims he made. But critics in the AI research community have gone as far as accusing Shumer of fraud, stating that the model is just a thin wrapper around Anthropic's Claude rather than a tuned-up version of Meta's Llama.

Developed by New York startup HyperWrite AI, Reflection 70B was touted as "the world's top open-source model" by Matt Shumer, the company's CEO. Yet on September 7, a day after Shumer's announcement on X, Artificial Analysis reported that its evaluation of Reflection 70B yielded results significantly lower than Shumer's claims. Shumer attributed the gap to an upload error affecting the model's weights, which he said caused a discrepancy between his private API and the weights uploaded to Hugging Face's model repository.

However, further analysis by the AI community on platforms like Reddit and GitHub suggested that Reflection 70B's performance more closely mirrors Meta's Llama 3 than Llama 3.1, the base model Shumer claimed to have used. Suspicions grew further when it emerged that Shumer had an undisclosed vested interest in Glaive, the platform he said was used to generate the model's synthetic training data. Some went on to suggest that Reflection 70B was merely a "wrapper" built on top of Anthropic's proprietary AI model, Claude 3. On September 8, X user Shin Megami Boson publicly accused Matt Shumer of "fraud in the AI research community."
After initially going silent as the controversy erupted, Shumer issued a public response on X on September 10, acknowledging the skepticism around the model's performance. He said a team was working to understand what went wrong and promised transparency once they had the facts. However, Shumer did not provide a clear explanation for the performance discrepancies. Sahil Chaudhary, founder of Glaive, the platform Shumer said was used to train Reflection 70B, also admitted uncertainty about the model's capabilities and acknowledged that the touted benchmark scores could not be reproduced. Critics have remained unsatisfied with Shumer's response so far. "Shumer's explanations and apologies have failed to provide a satisfactory explanation for the discrepancies," reported analytics firm GlobalVillageSpace. Yuchen Jin, co-founder of Hyperbolic Labs, expressed disappointment at the lack of transparency and called for a more thorough explanation from Shumer.

The Reflection 70B AI model, initially hailed as a breakthrough, is now embroiled in controversy. Its creators face accusations of fraud, raising questions about the model's legitimacy and the future of AI development.

The Rise of Reflection 70B

The artificial intelligence community was buzzing with excitement when the Reflection 70B model was introduced. Developed by Matt Shumer of HyperWrite AI as a fine-tuned version of Meta's Llama 70B, with synthetic training data generated through Sahil Chaudhary's Glaive platform, this large language model was touted as a significant advancement in AI technology. With 70 billion parameters, it promised improved performance and capabilities compared to its predecessors [1].

Impressive Claims and Initial Reception

Reflection 70B was said to rival or outperform leading proprietary models across various benchmarks, a result Shumer attributed to a technique he called "reflection tuning." The developers claimed it excelled in tasks such as reasoning, math, and coding [1]. These assertions garnered attention from AI enthusiasts and researchers alike, who were eager to explore the model's potential applications.

The Controversy Unfolds

However, the excitement surrounding Reflection 70B was short-lived. Allegations of fraud soon emerged, casting a shadow over the model and its creators [2]. The controversy has raised serious questions about the legitimacy of the model and the integrity of the research behind it.

Accusations and Investigations

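The fraud accusations center on claims that the private API serving Reflection 70B was a thin wrapper around Anthropic's Claude rather than a fine-tuned Llama model, and on independent evaluations, such as those run by Artificial Analysis, that could not reproduce the advertised benchmark scores. These questions have prompted scrutiny of the development process and the claims made about Reflection 70B's capabilities. The AI community, once enthusiastic about the model, is now grappling with the implications of these allegations [2].

Impact on AI Research and Development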

This controversy has sent shockwaves through the AI research community. It highlights the importance of transparency, peer review, and reproducibility in AI development. The incident may lead to increased scrutiny of claims made by AI researchers and developers in the future [2].

The Future of Reflection 70B

As investigations continue, the future of Reflection 70B remains uncertain. The model's availability and use may be affected by the outcome of these inquiries. This situation serves as a cautionary tale for the AI community, emphasizing the need for rigorous validation and ethical practices in AI research and development [1][2].

Summarized by Navi
