Runway Unveils Aleph: A Revolutionary AI Model for Advanced Video Editing

4 Sources

[1]

Runway Launches New Aleph Model That Promises Next-Level AI Video Editing

Macy has been working for CNET for nearly two years. Prior to CNET, Macy received a North Carolina College Media Association award in sports writing.

Runway, a pioneer in generative video, just unveiled its latest AI model, called Runway Aleph, which aims to redefine how people create and edit video content. Aleph builds on Runway's research into General World Models and Simulation Models, giving users a conversational AI tool that can instantly make complex edits to video footage, whether generated or existing. For instance, want to remove a car from a shot? Swap out a background? Restyle an entire scene? According to Runway, Aleph lets you do all that with a simple prompt.

Unlike previous models that focused mostly on video generation from text, Aleph emphasizes "fluid editing." It can add or erase objects, tweak actions, change lighting and maintain continuity across frames, all challenges that have historically tripped up AI video tools. The company says Aleph's local and global editing capabilities keep scenes, characters and environments consistent, so creators don't have to fix frame-by-frame glitches.

"Runway Aleph is more than a new model -- it's a new way of thinking about video altogether," Runway wrote in its announcement.

The launch comes as AI video creation heats up. Big players like OpenAI, Google, Microsoft and Meta have all showcased AI video models this year. But Runway, which helped popularize AI video with its earlier Gen-1 and Gen-2 models, says Aleph pushes things further by combining high-fidelity generation with real-time, conversational editing, which could be significant for filmmakers, studios and advertisers who want faster workflows. Runway says Aleph is already being used by major studios, ad agencies, architecture firms, gaming companies and eCommerce teams.
The company is giving early access to enterprise customers and creative partners starting now, with broader availability rolling out in the coming days.

[2]

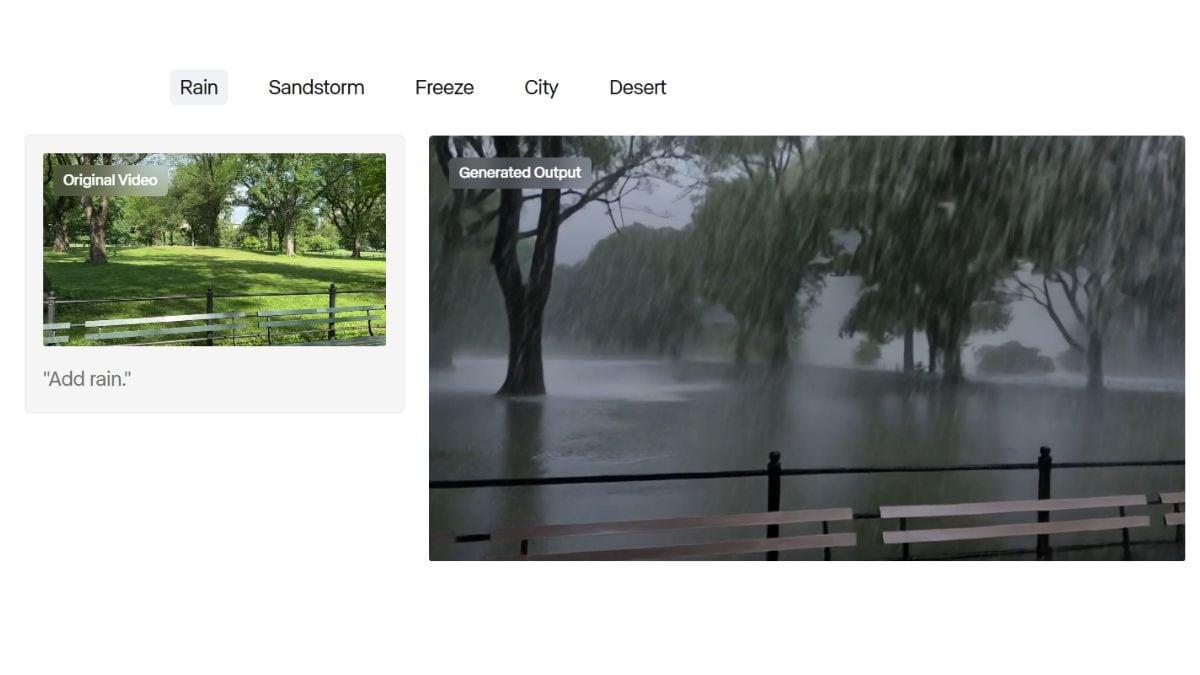

Runway's New Model Can Transform Existing Video Footage Using Just a Prompt

Runway has launched a new AI video model that can manipulate existing footage with text prompts, rather than generating synthetic videos from nothing. The impressive hype video that Runway put out for its new model, which is called Aleph, may pique the interest of some filmmakers. For example, a shot of someone standing by a window can be iterated on by typing "Show me a different angle of this video" as a prompt, and the AI model will use the existing video as the basis of another shot of the same scene. It is unlikely that this will work perfectly every time, but if it can do anything like the introductory video, then it will certainly have its uses.

As well as generating new camera angles, Runway Aleph can also generate the next shot in a sequence from a source video. There is also a 'Style Transfer' feature that can transform any video with a desired aesthetic, according to Runway. The weather, location, season, and time of day can also be altered. For example, a video clip of a park on a beautiful sunny day can be transformed so that it is raining instead.

Elements like fireworks, trees, extra characters, and crowds can be added to existing footage. "Simply describe what you want or provide a reference image, and watch it appear naturally in your video with proper lighting, shadows, and perspective," Runway writes in a blog post. Aleph can also remove or change objects in a scene, including people or people's reflections. Objects can be transformed, too: Runway gives the example of a video clip of a car driving down the road, changed so it is a horse and carriage instead. If you want a person's appearance changed so they look older or younger, that can also be done in Aleph. And if footage was shot at the wrong time of day for a project, that can be altered too, turning night into day or vice versa.

Runway has made no secret of its hopes to disrupt Hollywood with its products, and it is easy to see how filmmakers might be tempted to use Aleph rather than reshoot a scene that contains a mistake. Aleph is available now to Runway Enterprise accounts.

[3]

Runway's New AI Video Model Can Edit Input Videos With Text Prompts

The firm will first release Aleph to its enterprise and creative users.

Runway released a new artificial intelligence (AI) video generation model on Friday that can edit elements in input videos. Dubbed Aleph (the first letter of the Hebrew alphabet), the video-to-video AI model can perform a wide range of edits on the input. These include adding, removing, and transforming objects; generating new angles and next frames; changing the environment, seasons, and time of day; and transforming the style. The New York City-based AI firm said that the video model will soon be rolled out to its enterprise and creative customers and then to the platform's users.

AI video generation models have come a long way. We have moved from generating a couple of seconds of an animated scene to generating a full-fledged video with narrative and even audio. Runway has been at the forefront of this innovation with its video generation tools, which are now being used by production houses such as Netflix, Amazon, and Walt Disney. Now, the AI company has released a new model called Aleph, which can edit input videos. It is a video-to-video model that can manipulate and generate a wide range of elements. In a post on X (formerly known as Twitter), the company called it a state-of-the-art (SOTA) in-context video model that can transform an input video with simple descriptive prompts. In a blog post, the AI firm also showcased some of the capabilities Aleph will offer when it becomes available.

Runway has said that the model will first be provided to its enterprise and creative customers, and then, in the coming weeks, it will be released broadly to all its users. However, the phrasing does not clarify whether users on the free tier will also get access to the model, or whether it will be reserved for paid subscribers.

As for its capabilities, Aleph can take an input video and generate new angles and views of the same scene. Users can request a reverse low shot, an extreme close-up from the right side, or a wide shot of the entire stage. It can also use the input video as a reference and generate the next frames of the video based on prompts.

One of the most impressive abilities of the AI video model is transforming environments, locations, seasons, and the time of day. This means users can upload a video of a park on a sunny morning, and the AI model can add rain, sandstorm, or snowfall effects, or even make it look like night-time. These changes are made while keeping all the other elements of the video as they are. Aleph can also add objects to the video, remove things like reflections and buildings, change objects and materials entirely, change the appearance of a character, and recolour objects. Additionally, Runway claims the AI model can take a particular motion from a video (think of a flying drone sequence) and apply it to a different setting.

Currently, Runway has not shared any technical details about the AI model, such as the supported length of the input video, supported aspect ratios, or application programming interface (API) charges. These will likely be shared when the company officially releases the model.

[4]

Runway Introduces Aleph: New AI Model That Edits & Transforms Video

Unlike previous AI tools, Aleph works directly on input footage. It allows creators to reshape scenes, swap objects, generate future frames, or recreate angles, all while keeping the original video context intact. Whether turning a sunny morning into a snowy evening or changing a city street into a desert landscape, the tool handles edits smoothly through natural language input.

Aleph supports a range of features designed for professional post-production. From adding props to removing unwanted elements, it simplifies complex VFX tasks. It can shift lighting to mimic golden hour or convert scenes from day to night. The model also recreates motion, offering drone-like effects and perspective shifts with no manual animation.

With Aleph, camera control goes beyond physical setup. Creators can generate wide, close-up, or reverse angles from existing scenes. The tool even extends footage, continuing the story in motion and style without reshooting. Edits can include character transformations, such as aging, restyling, or motion mapping from one clip to another.

Runway is promoting Aleph as the "state-of-the-art in-context video model." It understands the visual context of uploaded clips and makes changes based on instructions given as short prompts. This eliminates the need for many tedious frame-by-frame edits, offering speed and creative freedom in a single process.

Access is currently limited to alpha testers, enterprise users, and creative professionals. Runway plans an expanded rollout to a wider set of users but has not announced an official launch date. Information about pricing, supported clip length, and aspect-ratio support has not yet been released.

Prominent industry names have already begun exploring this technology. Netflix and Disney use older Runway models in their content pipelines, and with IMAX screening AI-generated films built on Runway tools, Aleph strengthens the company's position in the rapidly growing creative tech space.


Runway has launched Aleph, a new AI model that promises to transform video editing by allowing users to manipulate existing footage with simple text prompts, offering advanced features like object removal, scene transformation, and perspective changes.

Runway Introduces Aleph: A Game-Changing AI Video Editing Model

Runway, a pioneer in generative video technology, has unveiled its latest AI model, Aleph, which promises to revolutionize the way creators edit and manipulate video content[1]. This state-of-the-art in-context video model builds upon Runway's research into General World Models and Simulation Models, offering users a powerful tool for instant, complex video editing through simple text prompts[3].

Source: Analytics Insight

Advanced Editing Capabilities

Aleph's capabilities go far beyond traditional video editing tools. Users can perform a wide range of edits on input videos, including:

- Adding, removing, and transforming objects

- Generating new camera angles and views

- Changing environments, seasons, and time of day

- Transforming styles and aesthetics

- Extending footage with generated next frames

For instance, Aleph can remove a car from a shot, swap out backgrounds, or restyle entire scenes with just a text prompt[1]. The model's ability to maintain continuity across frames addresses challenges that have historically plagued AI video tools[2].

Fluid Editing and Consistency

Unlike previous models that focused primarily on video generation from text, Aleph emphasizes "fluid editing"[1]. Its local and global editing capabilities ensure that scenes, characters, and environments remain consistent throughout the editing process. This feature is particularly valuable for creators who want to avoid frame-by-frame glitches and maintain the integrity of their footage[4].

Industry Impact and Adoption

Source: Gadgets 360

Aleph's launch comes at a time when AI video creation is gaining significant traction in the industry. Major players like OpenAI, Google, Microsoft, and Meta have all showcased AI video models this year[1]. However, Runway says Aleph stands out by combining high-fidelity generation with real-time, conversational editing capabilities. According to the company, the model is already being used by major studios, ad agencies, architecture firms, gaming companies, and eCommerce teams[1]. Companies like Netflix, Amazon, and Walt Disney have been using Runway's previous video generation tools, indicating the potential for widespread adoption of Aleph in the entertainment industry[3].

Availability and Future Prospects

Source: PetaPixel

Runway is currently providing early access to Aleph for enterprise customers and creative partners, with broader availability planned in the coming days to weeks[1][3]. While specific details about pricing, supported video lengths, and aspect ratios have not been disclosed, the company has said it plans to roll the model out to a wider set of users[4].

As AI continues to reshape the creative landscape, tools like Aleph have the potential to significantly streamline post-production workflows and open up new possibilities for filmmakers, advertisers, and content creators across various industries[4]. The introduction of Aleph further strengthens Runway's position in the rapidly evolving field of AI-powered video editing and generation.

Summarized by

Navi
